Best AI tools for: Reduce Memory Usage

20 - AI tool Sites

Chatty

Chatty is an AI-powered chat application that utilizes cutting-edge models to provide efficient and personalized responses to user queries. The application is designed to optimize VRAM usage by employing models with specific suffixes, resulting in reduced memory requirements. Users can expect a slight delay in the initial response due to model downloading. Chatty aims to enhance user experience through its advanced AI capabilities.

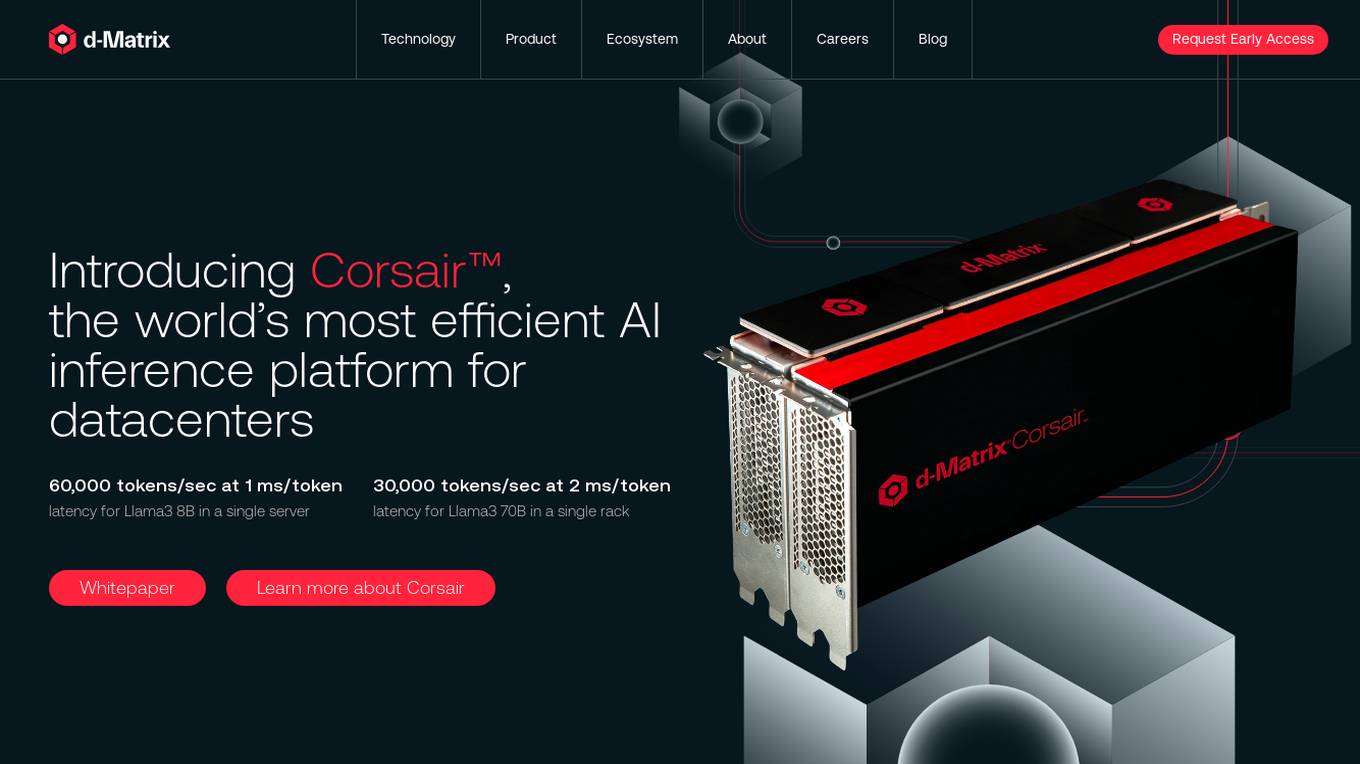

d-Matrix

d-Matrix is an AI tool that offers ultra-low latency batched inference for generative AI technology. It introduces Corsair™, the world's most efficient AI inference platform for datacenters, providing high performance, efficiency, and scalability for large-scale inference tasks. The tool aims to transform the economics of AI inference by delivering fast, sustainable, and scalable AI solutions without compromising on speed or usability.

GoCharlie

GoCharlie is a leading Generative AI company specializing in developing cognitive agents and models optimized for businesses. Its AI technology enables professionals and businesses to amplify their productivity and create high-performing content tailored to their needs. GoCharlie's AI assistant, Charlie, automates repetitive tasks, allowing teams to focus on more strategic and creative work. It offers a suite of proprietary LLM and multimodal models, a Memory Vault to build an AI Brain for businesses, and Agent AI to deliver the full power of AI to operations. GoCharlie can automate mundane tasks, drive complex workflows, and facilitate instant, precise data retrieval.

Lamini

Lamini is an enterprise-level LLM platform that offers precise recall with Memory Tuning, enabling teams to achieve over 95% accuracy even with large amounts of specific data. It guarantees JSON output and delivers massive throughput for inference. Lamini is designed to be deployed anywhere, including air-gapped environments, and supports training and inference on Nvidia or AMD GPUs. The platform is known for its factual LLMs and reengineered decoder that ensures 100% schema accuracy in the JSON output.

Olympia

Olympia is an AI-powered consultancy platform that offers smart and affordable AI consultants to help businesses with various tasks such as business strategy, online marketing, content generation, legal advice, software development, and sales. The platform features continuous learning capabilities, real-time research, email integration, vision capabilities, and more. Olympia aims to streamline operations, reduce expenses, and boost productivity for startups, small businesses, and solopreneurs by providing expert AI teams powered by advanced language models like GPT-4 and Claude 3. The platform ensures secure communication, no rate limits, long-term memory, and outbound email capabilities.

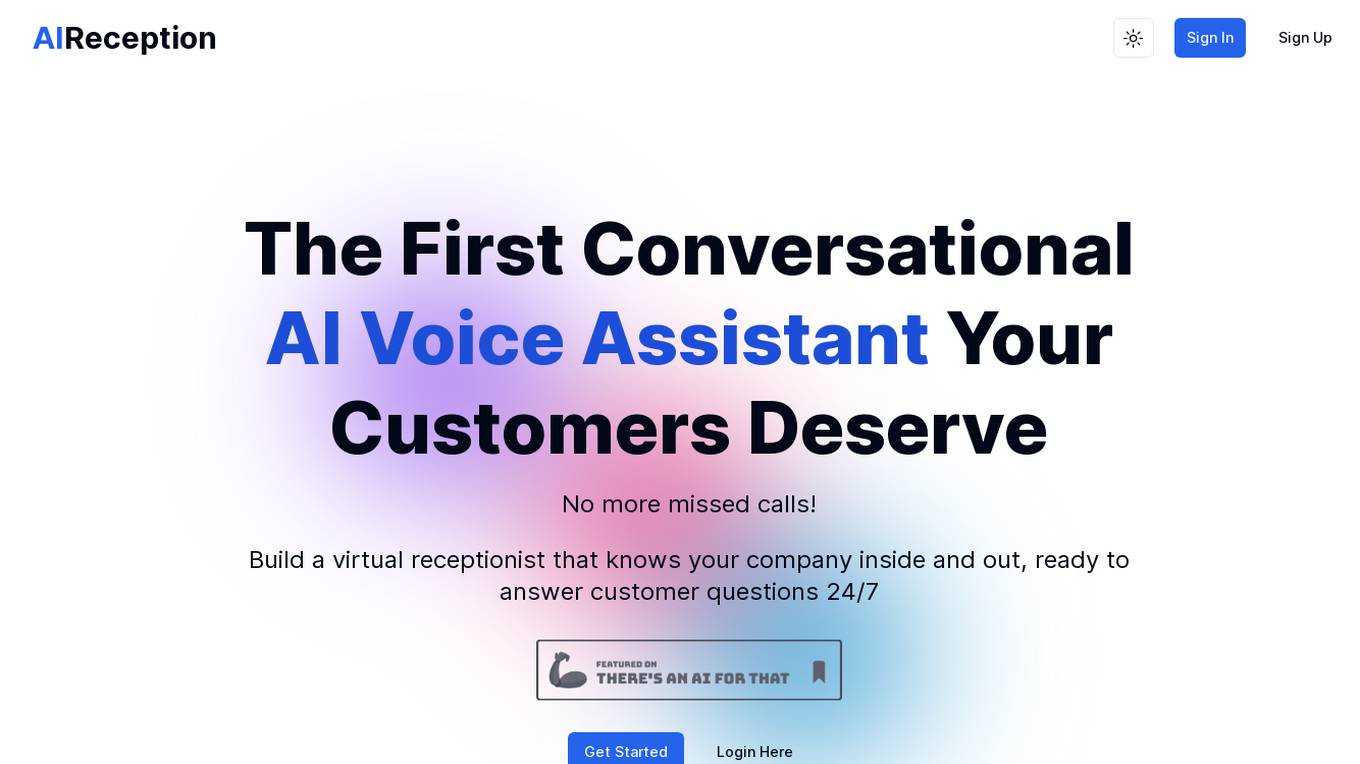

AIReception

AIReception is a conversational AI voice assistant platform that allows businesses to build virtual receptionists capable of answering customer questions 24/7. The AI voice assistants are designed to replicate human speech patterns and interactions, providing a natural and immersive experience. The platform offers features such as hyper-realistic voices, human-like interaction, perfect memory, customizable responses, and call transferring. AIReception aims to enhance customer service, reduce overhead costs, and provide detailed analytics for customer interactions.

Memorly.AI

Memorly.AI is an AI tool designed to help businesses grow by leveraging AI agents to manage sales and support operations seamlessly. The tool offers personalized engagement and optimized processes through autonomous workflows, efficient customer interactions across multiple channels, and 24/7 human-like interactions. With Memorly.AI, businesses can handle high volumes without increasing costs, leading to higher conversion rates, better customer engagement, and lower costs. The tool is pay-per-use and allows businesses to deploy AI agents on various channels like WhatsApp, websites, VoIP, and more.

Pongo

Pongo is an AI-powered tool that helps reduce hallucinations in Large Language Models (LLMs) by up to 80%. It utilizes multiple state-of-the-art semantic similarity models and a proprietary ranking algorithm to ensure accurate and relevant search results. Pongo integrates seamlessly with existing pipelines, whether using a vector database or Elasticsearch, and processes top search results to deliver refined and reliable information. Its distributed architecture ensures consistent latency, handling a wide range of requests without compromising speed. Pongo prioritizes data security, operating at runtime with zero data retention and no data leaving its secure AWS VPC.

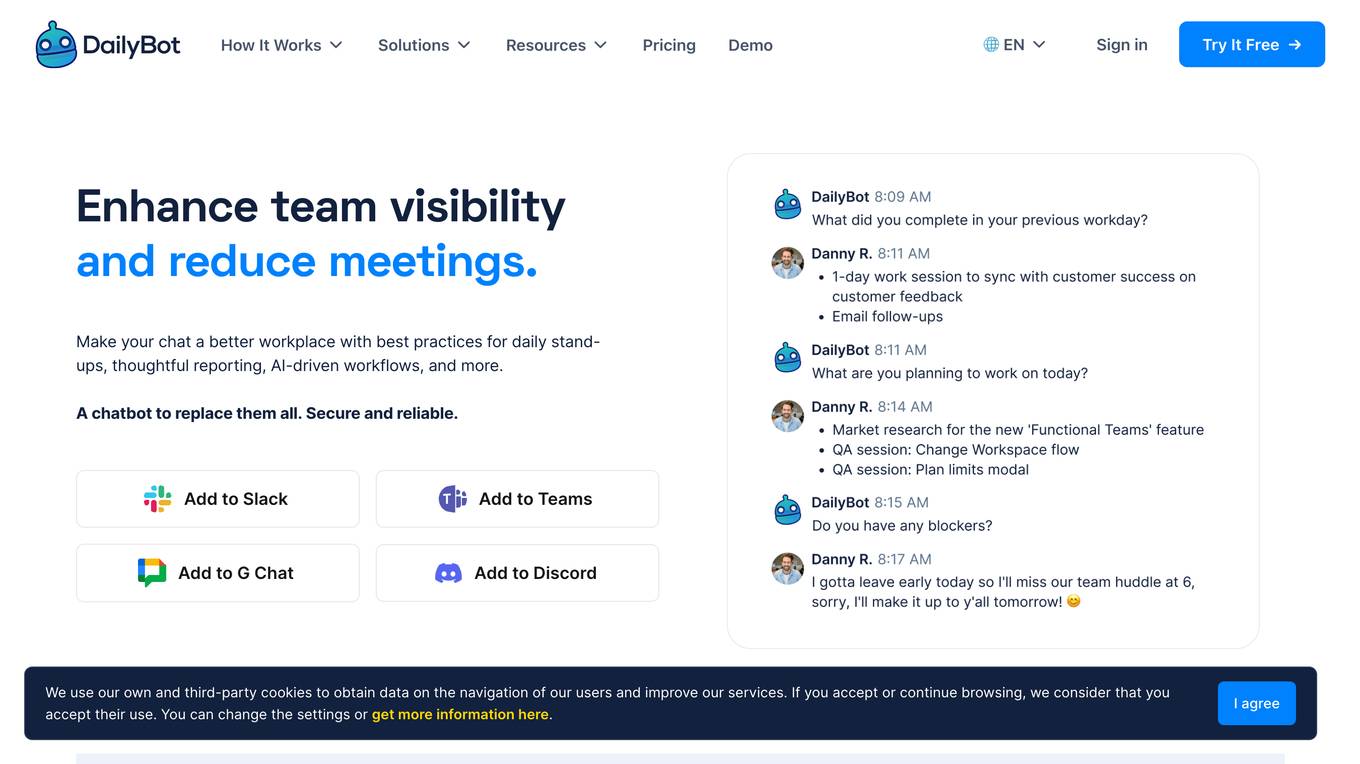

DailyBot

DailyBot is an AI-powered toolkit for teams that want automation, better reporting, and customization. It offers a range of features to enhance team visibility, reduce meetings, and improve collaboration. With DailyBot, teams can run asynchronous standups, retros, and other meetings, send kudos and recognition, create surveys and collect data, and access a variety of add-ons like watercoolers and random coffees. DailyBot also integrates with popular tools like Zapier, Jira, and Trello, making it easy to connect with the tools teams already use. Trusted by leading companies and backed by Y Combinator, DailyBot is a valuable tool for teams looking to improve their collaboration and productivity.

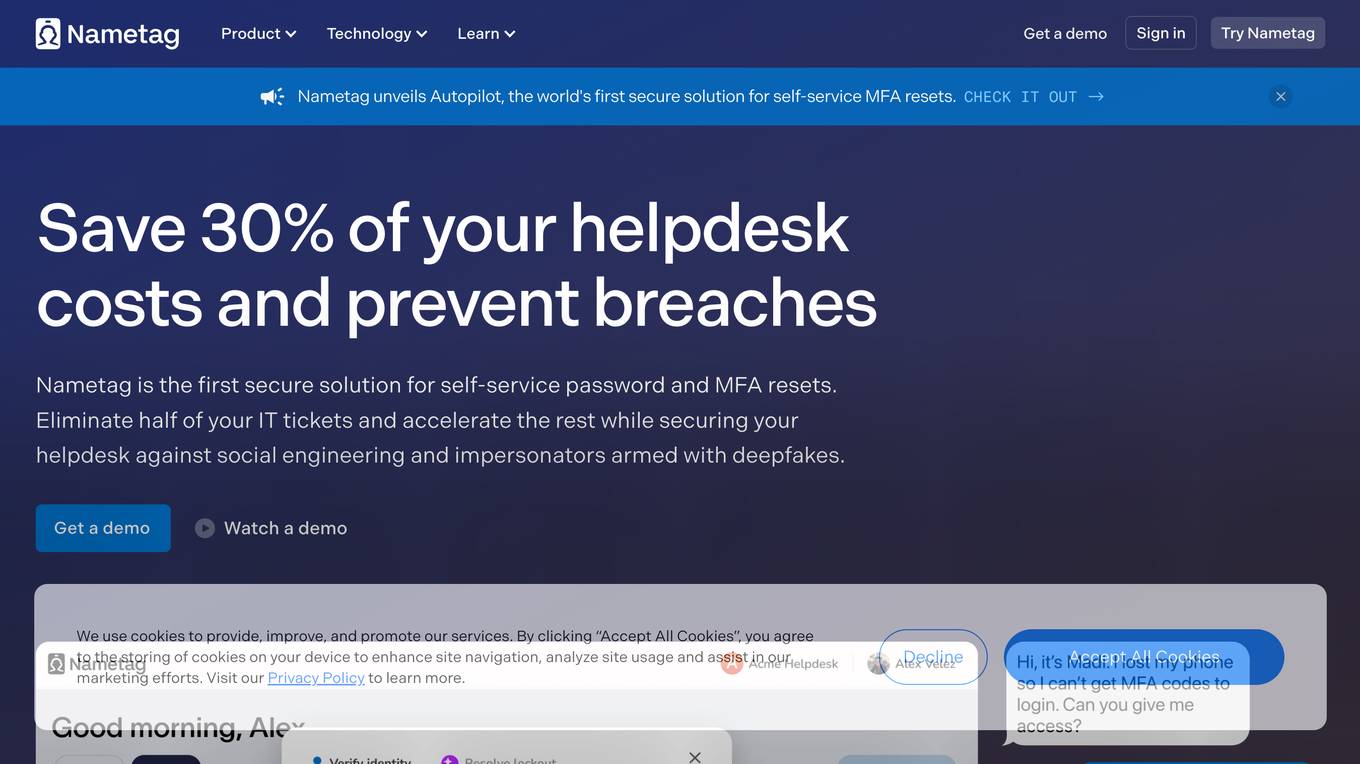

Nametag

Nametag is an identity verification solution designed specifically for IT helpdesks. It helps businesses prevent social engineering attacks, account takeovers, and data breaches by verifying the identity of users at critical moments, such as password resets, MFA resets, and high-risk transactions. Nametag's unique approach to identity verification combines mobile cryptography, device telemetry, and proprietary AI models to provide unmatched security and better user experiences.

SentinelOne

SentinelOne is an advanced enterprise cybersecurity AI platform that offers a comprehensive suite of AI-powered security solutions for endpoint, cloud, and identity protection. The platform leverages AI technology to anticipate threats, manage vulnerabilities, and protect resources across the enterprise ecosystem. SentinelOne provides real-time threat hunting, managed services, and actionable insights through its unified data lake, empowering security teams to respond effectively to cyber threats. With a focus on automation, efficiency, and value maximization, SentinelOne is a trusted cybersecurity solution for leading enterprises worldwide.

Codimite

Codimite is an AI-assisted offshore development company that provides a range of services to help businesses accelerate their software development, reduce costs, and drive innovation. Codimite's team of experienced engineers and project managers use AI-powered tools and technologies to deliver exceptional results for their clients. The company's services include AI-assisted software development, cloud modernization, and data and artificial intelligence solutions.

SentinelOne

SentinelOne is an advanced enterprise cybersecurity AI platform that offers a comprehensive suite of AI-powered security solutions for endpoint, cloud, and identity protection. The platform leverages artificial intelligence to anticipate threats, manage vulnerabilities, and protect resources across the entire enterprise ecosystem. With features such as Singularity XDR, Purple AI, and AI-SIEM, SentinelOne empowers security teams to detect and respond to cyber threats in real-time. The platform is trusted by leading enterprises worldwide and has received industry recognition for its innovative approach to cybersecurity.

CogniSpark AI

CogniSpark AI is an advanced AI-powered eLearning Authoring Tool that revolutionizes course creation by providing a set of AI tools for content generation, translation, voiceover, video creation, and more. It offers a user-friendly interface, quick course creation, and cost-effective solutions for educators, instructional designers, and training professionals. With features like AI content generator, AI translator, AI voiceover, and AI video generator, CogniSpark AI enhances productivity and engagement in learning and development.

Webo.AI

Webo.AI is a test automation platform powered by AI that offers a smarter and faster way to conduct testing. It provides generative AI for tailored test cases, AI-powered automation, predictive analysis, and patented AiHealing for test maintenance. Webo.AI aims to reduce test time, production defects, and QA costs while increasing release velocity and software quality. The platform is designed to cater to startups and offers comprehensive test coverage with human-readable AI-generated test cases.

Video Highlight

Video Highlight is an AI-powered tool that helps you summarize and take notes from videos. It uses the latest AI technology to generate timestamped summaries and transcripts, highlight key moments, and engage in interactive chats. With Video Highlight, you can save hours of research time and focus on exploring, analyzing, and absorbing content.

SnapMeasureAI

SnapMeasureAI is an AI application that specializes in automated AI image labeling, precise 3D body measurements, and video-based motion capture. It uses advanced AI technology to accurately understand and model the human body, working with any body type, skin tone, pose, or background. The application caters to various industries such as retail, fitness & health, AI training data, and security, offering a free demo for interested users.

AutoScreen

AutoScreen is an AI-powered recruitment tool that revolutionizes the hiring process. It utilizes advanced algorithms and machine learning to streamline the recruitment process, saving time and resources for businesses. With AutoScreen, employers can efficiently screen and shortlist candidates based on predefined criteria, leading to faster and more accurate hiring decisions. The tool offers a user-friendly interface, customizable features, and seamless integration with existing HR systems, making it a valuable asset for modern recruitment practices.

Hypergro

Hypergro is an AI-powered platform that specializes in UGC video ads for smart customer acquisition. Leveraging the 4th Generation of AI-powered growth marketing on Meta and YouTube, Hypergro helps businesses discover their audience, drive sales, and increase revenue through real-time AI insights. The platform offers end-to-end solutions for creating impactful short video ads that combine creator authenticity with AI-driven research for compelling storytelling. With a focus on precision targeting, competitor analysis, and in-depth research, Hypergro ensures maximum ROI for brands looking to elevate their growth strategies.

hirex.ai

hirex.ai is an AI-powered platform that revolutionizes the job interview process by making it open, fair, and accessible to all. The platform uses a GenAI assistant to help candidates get interviewed instantly, jump the line to secure their dream job, and undergo a faster screening process. With no credit card required, users can register for free early access, upload their resumes, and post job offers. The platform aims to transform HR and talent acquisition by providing innovative solutions for both job seekers and employers.

5 - Open Source AI Tools

litdata

LitData is a tool designed for blazingly fast, distributed streaming of training data from any cloud storage. It allows users to transform and optimize data in cloud storage environments efficiently and intuitively, supporting various data types like images, text, video, audio, geo-spatial, and multimodal data. LitData integrates smoothly with frameworks such as LitGPT and PyTorch, enabling seamless streaming of data to multiple machines. Key features include multi-GPU/multi-node support, easy data mixing, pause & resume functionality, support for profiling, memory footprint reduction, cache size configuration, and on-prem optimizations. The tool also provides benchmarks for measuring streaming speed and conversion efficiency, along with runnable templates for different data types. LitData enables infinite cloud data processing by utilizing the Lightning.ai platform to scale data processing with optimized machines.

llm-awq

AWQ (Activation-aware Weight Quantization) is a tool designed for efficient and accurate low-bit weight quantization (INT3/4) for Large Language Models (LLMs). It supports instruction-tuned models and multi-modal LMs, providing features such as AWQ search for accurate quantization, pre-computed AWQ model zoo for various LLMs, memory-efficient 4-bit linear in PyTorch, and efficient CUDA kernel implementation for fast inference. The tool enables users to run large models on resource-constrained edge platforms, delivering more efficient responses with LLM/VLM chatbots through 4-bit inference.
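To make the memory effect of low-bit weights concrete, here is a minimal sketch in plain Python. It is not AWQ's algorithm: it shows only ordinary round-to-nearest quantization of one weight group and leaves out the activation-aware search entirely. The function names, the 7-billion-parameter model size, and the sample weights are all hypothetical and chosen only for illustration.

```python
def weight_memory_gib(num_params, bits_per_weight):
    """Approximate memory for the weights alone, in GiB.

    Ignores quantization scales, activations, and the KV cache.
    """
    return num_params * bits_per_weight / 8 / 1024**3


def quantize_group(weights, bits=4):
    """Round-to-nearest quantization of one weight group to unsigned codes."""
    levels = 2**bits - 1
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / levels or 1.0  # fall back to 1.0 if all weights are equal
    codes = [round((w - lo) / scale) for w in weights]
    return codes, scale, lo


def dequantize_group(codes, scale, lo):
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale + lo for c in codes]


# Hypothetical 7-billion-parameter model, used only as an example size.
params = 7_000_000_000
fp16_gib = weight_memory_gib(params, 16)
int4_gib = weight_memory_gib(params, 4)
print(f"FP16 weights: {fp16_gib:.1f} GiB, INT4 weights: {int4_gib:.1f} GiB")

group = [-0.8, -0.1, 0.0, 0.35, 0.7]  # made-up weights
codes, scale, lo = quantize_group(group)
restored = dequantize_group(codes, scale, lo)
max_error = max(abs(a - b) for a, b in zip(group, restored))
print(codes, f"max error {max_error:.3f}")
```

Going from 16 to 4 bits per weight cuts weight storage by a factor of four before counting the small overhead of per-group scales and offsets. Each restored weight differs from the original by at most half a quantization step, which is the error that methods such as AWQ aim to make less harmful.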

Liger-Kernel

Liger Kernel is a collection of Triton kernels designed for LLM training, increasing training throughput by 20% and reducing memory usage by 60%. It includes Hugging Face-compatible modules such as RMSNorm, RoPE, SwiGLU, CrossEntropy, and FusedLinearCrossEntropy. The tool works with Flash Attention, PyTorch FSDP, and Microsoft DeepSpeed, aiming to enhance model efficiency and performance for researchers, ML practitioners, and curious novices.

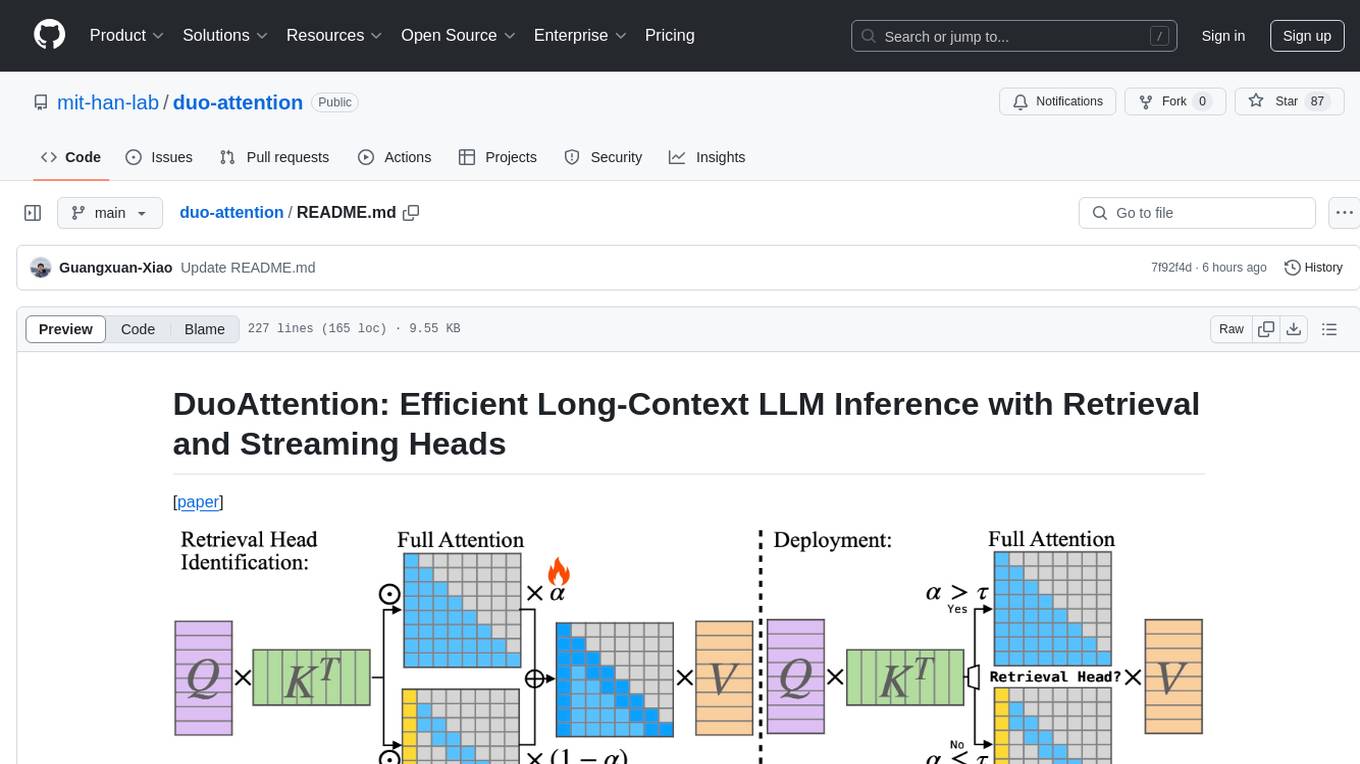

duo-attention

DuoAttention is a framework designed to optimize long-context large language models (LLMs) by reducing memory and latency during inference without compromising their long-context abilities. It introduces a concept of Retrieval Heads and Streaming Heads to efficiently manage attention across tokens. By applying a full Key and Value (KV) cache to retrieval heads and a lightweight, constant-length KV cache to streaming heads, DuoAttention achieves significant reductions in memory usage and decoding time for LLMs. The framework uses an optimization-based algorithm with synthetic data to accurately identify retrieval heads, enabling efficient inference with minimal accuracy loss compared to full attention. DuoAttention also supports quantization techniques for further memory optimization, allowing for decoding of up to 3.3 million tokens on a single GPU.
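The split between a full KV cache and a constant-length one can be illustrated with a rough size estimate. The sketch below is not taken from the DuoAttention code. The function name, the model shape (32 layers, 8 KV heads, head dimension 128), the 50% retrieval-head share, and the 256-token streaming window are all hypothetical values picked for the example.

```python
def kv_cache_bytes(seq_len, num_layers, num_kv_heads, head_dim,
                   retrieval_fraction=1.0, streaming_window=0,
                   bytes_per_value=2):
    """Estimate KV cache size when only some heads keep the full history.

    Retrieval heads store keys and values for every token. Streaming heads
    store at most `streaming_window` tokens, so their cost stops growing
    once the sequence is longer than the window.
    """
    total_heads = num_layers * num_kv_heads
    retrieval_heads = round(total_heads * retrieval_fraction)
    streaming_heads = total_heads - retrieval_heads
    per_token = 2 * head_dim * bytes_per_value  # one key plus one value vector
    full_part = retrieval_heads * seq_len * per_token
    constant_part = streaming_heads * min(seq_len, streaming_window) * per_token
    return full_part + constant_part


# Hypothetical model shape and context length, FP16 values (2 bytes each).
shape = dict(seq_len=100_000, num_layers=32, num_kv_heads=8, head_dim=128)

full_cache = kv_cache_bytes(**shape)
duo_cache = kv_cache_bytes(**shape, retrieval_fraction=0.5, streaming_window=256)

print(f"full attention: {full_cache / 1024**3:.2f} GiB")
print(f"half streaming: {duo_cache / 1024**3:.2f} GiB")
```

Under these assumptions the streaming heads contribute a fixed amount regardless of context length, so the total approaches the retrieval-head share of the full cache as sequences grow. The actual savings depend on how many heads the framework identifies as retrieval heads for a given model.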

Awesome-Resource-Efficient-LLM-Papers

A curated list of high-quality papers on resource-efficient Large Language Models (LLMs) with a focus on various aspects such as architecture design, pre-training, fine-tuning, inference, system design, and evaluation metrics. The repository covers topics like efficient transformer architectures, non-transformer architectures, memory efficiency, data efficiency, model compression, dynamic acceleration, deployment optimization, support infrastructure, and other related systems. It also provides detailed information on computation metrics, memory metrics, energy metrics, financial cost metrics, network communication metrics, and other metrics relevant to resource-efficient LLMs. The repository includes benchmarks for evaluating the efficiency of NLP models and references for further reading.

20 - OpenAI GPTs

Carbon Footprint Calculator

A breakdown of carbon footprint calculations and advice on how to reduce your footprint.

Eco Advisor

I'm an Environmental Impact Analyzer, here to calculate and reduce your carbon footprint.

Your Business Taxes: Guide

Insightful articles and guides on business tax strategies at AfterTaxCash. Discover expert advice and tips to optimize tax efficiency, reduce liabilities, and maximize after-tax profits for your business. Stay up to date to make informed financial decisions.

EcoTracker Pro 🌱📊

Track & analyze your carbon footprint with ease! EcoTracker Pro helps you make eco-friendly choices & reduce your impact. 🌎♻️

Tax Optimization Techniques for Investors

💼📉 Maximize your investments with AI-driven tax optimization! 💡 Learn strategies to reduce taxes 📊 and boost after-tax returns 💰. Get tailored advice 📘 for smart investing 📈. Not a financial advisor. 🚀💡

🥦✨ Low-FODMAP Meal Guide 🍇📘

Your go-to GPT for navigating the low-FODMAP diet! Find recipes, substitutes, and meal plans tailored to reduce IBS symptoms. 🍽️🌿

Process Optimization Advisor

Improves operational efficiency by optimizing processes and reducing waste.

Sustainable Energy K-12 School Expert

The world's trusted source for cost-effective energy management in schools.