Awesome-Efficient-Agents

Survey and paper list on efficiency-guided LLM agents (memory, tool learning, planning).

Stars: 159

This repository, Awesome Efficient Agents, is a curated collection of papers focusing on memory, tool learning, and planning in agentic systems. It provides a comprehensive survey of efficient agent design, emphasizing memory construction, tool learning, and planning strategies. The repository categorizes papers based on memory processes, tool selection, tool calling, tool-integrated reasoning, and planning efficiency. It aims to help readers quickly access representative work in the field of efficient agent design.

README:

🤝 Contributions welcome! Open an issue or submit a pull request to add papers, fix links, or improve categorization.

Recent years have seen growing interest in extending large language models into agentic systems. While agent capabilities have advanced rapidly, efficiency has received comparatively little attention, despite being crucial for real-world deployment. This repository studies efficiency-guided agent design through three core components: memory, tool learning, and planning.

We provide a curated paper list to help readers quickly locate representative work, along with lightweight notes on how each topic connects to efficiency.

- Efficient Memory. We organize memory-related papers into three processes: construction, management, and access.

- Efficient Tool Learning. We group papers into tool selection, tool calling, and tool-integrated reasoning.

- Efficient Planning. We collect work on planning that improves overall agent efficiency by reducing unnecessary actions and shortening trajectories.
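The README does not prescribe a single efficiency metric. The sketch below makes "reducing unnecessary actions and shortening trajectories" concrete. It assumes a hypothetical per-step log (`Step`, `trajectory_cost`, and `more_efficient` are illustrative names, not an API from any listed paper) and compares two trajectories that are assumed to solve the same task equally well. It uses three measures: step count, tool-call count, and total tokens.

```python
# Minimal sketch: the Step record and both helper functions are hypothetical
# illustrations, not an API from any paper in this list.
from dataclasses import dataclass


@dataclass
class Step:
    """One step of a hypothetical agent trajectory log."""
    prompt_tokens: int
    completion_tokens: int
    is_tool_call: bool


def trajectory_cost(steps: list[Step]) -> dict[str, int]:
    """Summarize a trajectory by step count, tool-call count, and total tokens."""
    return {
        "steps": len(steps),
        "tool_calls": sum(1 for s in steps if s.is_tool_call),
        "tokens": sum(s.prompt_tokens + s.completion_tokens for s in steps),
    }


def more_efficient(a: list[Step], b: list[Step]) -> bool:
    """True if `a` uses no more tokens and no more tool calls than `b`,
    and strictly fewer of at least one. Assumes both trajectories solve
    the same task equally well; this check says nothing about correctness."""
    ca, cb = trajectory_cost(a), trajectory_cost(b)
    no_worse = ca["tokens"] <= cb["tokens"] and ca["tool_calls"] <= cb["tool_calls"]
    strictly_better = ca["tokens"] < cb["tokens"] or ca["tool_calls"] < cb["tool_calls"]
    return no_worse and strictly_better


# Two made-up trajectories for the same task.
long_run = [Step(500, 50, True), Step(800, 60, True), Step(1200, 80, True), Step(1400, 40, False)]
short_run = [Step(500, 60, True), Step(900, 40, False)]

print(trajectory_cost(short_run))  # {'steps': 2, 'tool_calls': 1, 'tokens': 1500}
print(more_efficient(short_run, long_run))  # True
```

The comparison requires the cheaper trajectory to be no worse on both tokens and tool calls. This prevents one cost from being traded for another. If per-token and per-call prices are known, a weighted sum of the two would be a reasonable alternative.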


Efficient Memory

In the paper, we organize memory into construction, management, and access. Because many papers overlap across these stages, this README is organized primarily around memory construction to avoid redundancy.

- (2025-10) AgentFold: Long-Horizon Web Agents with Proactive Context Management

- (2025-07) MemAgent: Reshaping Long-Context LLM with Multi-Conv RL-based Memory Agent

- (2025-06) MEM1: Learning to Synergize Memory and Reasoning for Efficient Long-Horizon Agents

- (2025-04) Dynamic Cheatsheet: Test-Time Learning with Adaptive Memory

- (2024-02) Compress to Impress: Unleashing the Potential of Compressive Memory in Real-World Long-Term Conversations

- (2025-09) MemGen: Weaving Generative Latent Memory for Self-Evolving Agents

- (2025-02) M+: Extending MemoryLLM with Scalable Long-Term Memory

- (2025-01) Titans: Learning to Memorize at Test Time

- (2024-09) MemoRAG: Boosting Long Context Processing with Global Memory-Enhanced Retrieval Augmentation

- (2024-07) $\text{Memory}^3$: Language Modeling with Explicit Memory

- (2024-02) MEMORYLLM: Towards Self-Updatable Large Language Models

- (2024-01) Long Context Compression with Activation Beacon

- (2025-10) Agentic Context Engineering: Evolving Contexts for Self-Improving Language Models

- (2025-09) ReasoningBank: Scaling Agent Self-Evolving with Reasoning Memory

- (2025-08) Memory-R1: Enhancing Large Language Model Agents to Manage and Utilize Memories via Reinforcement Learning

- (2025-08) Memento: Fine-tuning LLM Agents without Fine-tuning LLMs

- (2025-07) Agent KB: Leveraging Cross-Domain Experience for Agentic Problem Solving

- (2025-06) Cost-Efficient Serving of LLM Agents via Test-Time Plan Caching (Agentic Plan Caching: Test-Time Memory for Fast and Cost-Efficient LLM Agents)

- (2025-04) Mem0: Building Production-Ready AI Agents with Scalable Long-Term Memory

- (2025-03) MemInsight: Autonomous Memory Augmentation for LLM Agents

- (2025-03) In Prospect and Retrospect: Reflective Memory Management for Long-term Personalized Dialogue Agents

- (2025-02) A-MEM: Agentic Memory for LLM Agents

- (2025-02) On Memory Construction and Retrieval for Personalized Conversational Agents

- (2024-06) Hello Again! LLM-powered Personalized Agent for Long-term Dialogue

- (2024-04) "My agent understands me better": Integrating Dynamic Human-like Memory Recall and Consolidation in LLM-Based Agents

- (2023-10) RECOMP: Improving Retrieval-Augmented LMs with Compression and Selective Augmentation

- (2023-08) ExpeL: LLM Agents Are Experiential Learners

- (2023-08) MemoChat: Tuning LLMs to Use Memos for Consistent Long-Range Open-Domain Conversation

- (2023-05) MemoryBank: Enhancing Large Language Models with Long-Term Memory

- (2026-01) MAGMA: A Multi-Graph based Agentic Memory Architecture for AI Agents

- (2025-10) D-SMART: Enhancing LLM Dialogue Consistency via Dynamic Structured Memory And Reasoning Tree

- (2025-04) Mem0: Building Production-Ready AI Agents with Scalable Long-Term Memory

- (2025-01) Zep: A Temporal Knowledge Graph Architecture for Agent Memory

- (2024-07) AriGraph: Learning Knowledge Graph World Models with Episodic Memory for LLM Agents

- (2024-06) GraphReader: Building Graph-based Agent to Enhance Long-Context Abilities of Large Language Models

- (2024-02) KG-Agent: An Efficient Autonomous Agent Framework for Complex Reasoning over Knowledge Graph

- (2025-10) Beyond a Million Tokens: Benchmarking and Enhancing Long-Term Memory in LLMs

- (2025-10) LightMem: Lightweight and Efficient Memory-Augmented Generation

- (2025-07) Hierarchical Memory for High-Efficiency Long-Term Reasoning in LLM Agents

- (2025-07) MemOS: A Memory OS for AI System

- (2025-06) Memory OS of AI Agent

- (2024-08) HiAgent: Hierarchical Working Memory Management for Solving Long-Horizon Agent Tasks with Large Language Model

- (2024-02) A Human-Inspired Reading Agent with Gist Memory of Very Long Contexts

- (2023-10) MemGPT: Towards LLMs as Operating Systems

- (2025-11) Latent Collaboration in Multi-Agent Systems

- (2025-11) MemIndex: Agentic Event-based Distributed Memory Management for Multi-agent Systems

- (2025-10) Cache-to-Cache: Direct Semantic Communication Between Large Language Models

- (2025-10) KVCOMM: Online Cross-context KV-cache Communication for Efficient LLM-based Multi-agent Systems

- (2025-08) RCR-Router: Efficient Role-Aware Context Routing for Multi-Agent LLM Systems with Structured Memory

- (2025-07) MIRIX: Multi-Agent Memory System for LLM-Based Agents

- (2025-06) G-Memory: Tracing Hierarchical Memory for Multi-Agent Systems

- (2024-04) Memory Sharing for Large Language Model based Agents

- (2025-08) Intrinsic Memory Agents: Heterogeneous Multi-Agent LLM Systems through Structured Contextual Memory

- (2025-04) AgentNet: Decentralized Evolutionary Coordination for LLM-based Multi-Agent Systems

- (2025-02) LLM-Powered Decentralized Generative Agents with Adaptive Hierarchical Knowledge Graph for Cooperative Planning

- (2025-10) LEGOMem: Modular Procedural Memory for Multi-agent LLM Systems for Workflow Automation

- (2025-05) Collaborative Memory: Multi-User Memory Sharing in LLM Agents with Dynamic Access Control

- (2025-01) SRMT: Shared Memory for Multi-agent Lifelong Pathfinding

Efficient Tool Learning

- (2025-10) ToolScope: Enhancing LLM Agent Tool Use through Tool Merging and Context-Aware Filtering

- (2024-10) Toolshed: Scale Tool-Equipped Agents with Advanced RAG-Tool Fusion and Tool Knowledge Bases

- (2024-10) From Exploration to Mastery: Enabling LLMs to Master Tools via Self-Driven Interactions

- (2024-02) AnyTool: Self-Reflective, Hierarchical Agents for Large-Scale API Calls

- (2023-12) ProTIP: Progressive Tool Retrieval Improves Planning

- (2024-09) Efficient and Scalable Estimation of Tool Representations in Vector Space

- (2024-09) TinyAgent: Function Calling at the Edge

- (2025-03) Chain-of-Tools: Utilizing Massive Unseen Tools in the CoT Reasoning of Frozen Language Models

- (2024-10) Toolken+: Improving LLM Tool Usage with Reranking and a Reject Option

- (2024-10) ToolGen: Unified Tool Retrieval and Calling via Generation

- (2024-07) Concise and Precise Context Compression for Tool-Using Language Models

- (2023-05) ToolkenGPT: Augmenting Frozen Language Models with Massive Tools via Tool Embeddings

- (2024-01) Efficient Tool Use with Chain-of-Abstraction Reasoning

- (2023-02) Toolformer: Language Models Can Teach Themselves to Use Tools

- (2024-11) CATP-LLM: Empowering Large Language Models for Cost-Aware Tool Planning

- (2024-05) An LLM-Tool Compiler for Fused Parallel Function Calling

- (2023-12) An LLM Compiler for Parallel Function Calling

- (2025-07) A Joint Optimization Framework for Enhancing Efficiency of Tool Utilization in LLM Agents

- (2025-05) Distilling LLM Agent into Small Models with Retrieval and Code Tools

- (2025-03) Alignment for Efficient Tool Calling of Large Language Models

- (2025-02) ToolCoder: A Systematic Code-Empowered Tool Learning Framework for Large Language Models

- (2024-02) Budget-Constrained Tool Learning with Planning

- (2024-01) TroVE: Inducing Verifiable and Efficient Toolboxes for Solving Programmatic Tasks

- (2024-09) ToolPlanner: A Tool Augmented LLM for Multi Granularity Instructions with Path Planning and Feedback

- (2023-10) ToolChain*: Efficient Action Space Navigation in Large Language Models with A* Search

- (2025-11) ToolOrchestra: Elevating Intelligence via Efficient Model and Tool Orchestration

- (2025-09) ToolRM: Outcome Reward Models for Tool-Calling Large Language Models

- (2025-04) Acting Less is Reasoning More! Teaching Model to Act Efficiently

- (2025-09) TableMind: An Autonomous Programmatic Agent for Tool-Augmented Table Reasoning

- (2025-05) Tool-Star: Empowering LLM-Brained Multi-Tool Reasoner via Reinforcement Learning

- (2025-02) SMART: Self-Aware Agent for Tool Overuse Mitigation

- (2024-03) Agent-FLAN: Designing Data and Methods of Effective Agent Tuning for Large Language Models

- (2026-01) ET-Agent: Incentivizing Effective Tool-Integrated Reasoning Agent via Behavior Calibration

- (2025-10) PORTool: Tool-Use LLM Training with Rewarded Tree

- (2025-10) A$^2$FM: An Adaptive Agent Foundation Model for Tool-Aware Hybrid Reasoning

- (2025-09) Toward Effective Tool-Integrated Reasoning via Self-Evolved Preference Learning

- (2025-09) TableMind: An Autonomous Programmatic Agent for Tool-Augmented Table Reasoning

- (2025-07) Agentic Reinforced Policy Optimization

- (2025-07) AutoTIR: Autonomous Tools Integrated Reasoning via Reinforcement Learning

- (2025-05) Reinforced Internal-External Knowledge Synergistic Reasoning for Efficient Adaptive Search Agent

- (2025-05) Agentic Reasoning and Tool Integration for LLMs via Reinforcement Learning

- (2025-04) ToolRL: Reward is All Tool Learning Needs

- (2025-04) ReTool: Reinforcement Learning for Strategic Tool Use in LLMs

- (2025-04) Synthetic Data Generation & Multi-Step RL for Reasoning & Tool Use

- (2025-04) Acting Less is Reasoning More! Teaching Model to Act Efficiently

Efficient Planning

- (2025-11) Budget-Aware Tool-Use Enables Effective Agent Scaling

- (2025-09) Learning When to Plan: Efficiently Allocating Test-Time Compute for LLM Agents

- (2025-06) Query-Level Uncertainty in Large Language Models

- (2023-12) ReST meets ReAct: Self-Improvement for Multi-Step Reasoning LLM Agent

- (2023-05) SwiftSage: A Generative Agent with Fast and Slow Thinking for Complex Interactive Tasks

- (2023-03) Reflexion: Language Agents with Verbal Reinforcement Learning

- (2025-05) Cost-Augmented Monte Carlo Tree Search for LLM-Assisted Planning

- (2023-12) ProTIP: Progressive Tool Retrieval Improves Planning

- (2023-10) ToolChain*: Efficient Action Space Navigation in Large Language Models with A* Search

- (2023-10) Language Agent Tree Search Unifies Reasoning Acting and Planning in Language Models

- (2025-12) Video-Browser: Towards Agentic Open-web Video Browsing

- (2025-05) Alita: Generalist Agent Enabling Scalable Agentic Reasoning with Minimal Predefinition and Maximal Self-Evolution

- (2025-03) ReSo: A Reward-driven Self-organizing LLM-based Multi-Agent System for Reasoning Tasks

- (2024-11) BudgetMLAgent: A Cost-Effective LLM Multi-Agent system for Automating Machine Learning Tasks

- (2024-02) AutoGPT+P: Affordance-based Task Planning with Large Language Models

- (2023-05) ReWOO: Decoupling Reasoning from Observations for Efficient Augmented Language Models

- (2023-03) HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face

- (2025-09) Planner-R1: Reward Shaping Enables Efficient Agentic RL with Smaller LLMs

- (2025-08) Encouraging Good Processes Without the Need for Good Answers: Reinforcement Learning for LLM Agent Planning

- (2025-05) Planning without Search: Refining Frontier LLMs with Offline Goal-Conditioned RL

- (2025-02) QLASS: Boosting Language Agent Inference via Q-Guided Stepwise Search

- (2024-03) Trial and Error: Exploration-Based Trajectory Optimization for LLM Agents

- (2025-10) GAP: Graph-Based Agent Planning with Parallel Tool Use and Reinforcement Learning

- (2024-07) Sibyl: Simple yet Effective Agent Framework for Complex Real-world Reasoning

- (2024-06) GraphReader: Building Graph-based Agent to Enhance Long-Context Abilities of Large Language Models

- (2024-02) Graph-enhanced Large Language Models in Asynchronous Plan Reasoning

- (2023-05) Voyager: An Open-Ended Embodied Agent with Large Language Models

- (2025-09) MARS: toward more efficient multi-agent collaboration for LLM reasoning

- (2025-08) SafeSieve: From Heuristics to Experience in Progressive Pruning for LLM-based Multi-Agent Communication

- (2025-03) AgentDropout: Dynamic Agent Elimination for Token-Efficient and High-Performance LLM-Based Multi-Agent Collaboration

- (2025-02) S$^2$-MAD: Breaking the Token Barrier to Enhance Multi-Agent Debate Efficiency

- (2024-10) Cut the Crap: An Economical Communication Pipeline for LLM-based Multi-Agent Systems

- (2024-09) GroupDebate: Enhancing the Efficiency of Multi-Agent Debate Using Group Discussion

- (2024-06) Scaling Large Language Model-based Multi-Agent Collaboration

- (2024-06) Chain of Agents: Large Language Models Collaborating on Long-Context Tasks

- (2025-10) Stop Wasting Your Tokens: Towards Efficient Runtime Multi-Agent Systems

- (2025-09) Free-MAD: Consensus-Free Multi-Agent Debate

- (2025-07) CONSENSAGENT: Towards Efficient and Effective Consensus in Multi-Agent LLM Interactions Through Sycophancy Mitigation

- (2025-07) CodeAgents: A Token-Efficient Framework for Codified Multi-Agent Reasoning in LLMs

- (2024-05) Smurfs: Multi-Agent System using Context-Efficient DFSDT for Tool Planning

- (2025-11) SMAGDi: Socratic Multi Agent Interaction Graph Distillation for Efficient High Accuracy Reasoning

- (2025-06) Debate, Reflect, and Distill: Multi-Agent Feedback with Tree-Structured Preference Optimization for Efficient Language Model Enhancement

- (2024-02) MAGDi: Structured Distillation of Multi-Agent Interaction Graphs Improves Reasoning in Smaller Language Models

Related Surveys

Because our work focuses mainly on efficiency, which builds on effectiveness, we have also gathered a list of related surveys that offer a complementary perspective. We hope this helps bring visibility to valuable surveys that deserve more attention. 💡

- (2026-01) Survey on AI Memory: Theories, Taxonomies, Evaluations, and Emerging Trends

- (2025-12) Memory in the Age of AI Agents

- (2025-05) Rethinking Memory in AI: Taxonomy, Operations, Topics, and Future Directions

- (2025-04) From Human Memory to AI Memory: A Survey on Memory Mechanisms in the Era of LLMs

- (2024-04) A Survey on the Memory Mechanism of Large Language Model based Agents

- (2025-08) LLM-based Agentic Reasoning Frameworks: A Survey from Methods to Scenarios

- (2024-02) Understanding the planning of LLM agents: A survey

If you find this survey useful, please cite:

@misc{yang2026efficientagentsmemorytool,
  title={Toward Efficient Agents: Memory, Tool learning, and Planning},
  author={Xiaofang Yang and Lijun Li and Heng Zhou and Tong Zhu and Xiaoye Qu and Yuchen Fan and Qianshan Wei and Rui Ye and Li Kang and Yiran Qin and Zhiqiang Kou and Daizong Liu and Qi Li and Ning Ding and Siheng Chen and Jing Shao},
  year={2026},
  eprint={2601.14192},
  archivePrefix={arXiv},
  primaryClass={cs.AI},
  url={https://arxiv.org/abs/2601.14192},
}

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Awesome-Efficient-Agents

Similar Open Source Tools

Awesome-Efficient-Agents

This repository, Awesome Efficient Agents, is a curated collection of papers focusing on memory, tool learning, and planning in agentic systems. It provides a comprehensive survey of efficient agent design, emphasizing memory construction, tool learning, and planning strategies. The repository categorizes papers based on memory processes, tool selection, tool calling, tool-integrated reasoning, and planning efficiency. It aims to help readers quickly access representative work in the field of efficient agent design.

chatgpt-auto-continue

ChatGPT Auto-Continue is a userscript that automatically continues generating ChatGPT responses when chats cut off. It relies on the powerful chatgpt.js library and is easy to install and use. Simply install Tampermonkey and ChatGPT Auto-Continue, and visit chat.openai.com as normal. Multi-reply conversations will automatically continue generating when cut-off!

llama.cpp

The main goal of llama.cpp is to enable LLM inference with minimal setup and state-of-the-art performance on a wide range of hardware - locally and in the cloud. It provides a Plain C/C++ implementation without any dependencies, optimized for Apple silicon via ARM NEON, Accelerate and Metal frameworks, and supports various architectures like AVX, AVX2, AVX512, and AMX. It offers integer quantization for faster inference, custom CUDA kernels for NVIDIA GPUs, Vulkan and SYCL backend support, and CPU+GPU hybrid inference. llama.cpp is the main playground for developing new features for the ggml library, supporting various models and providing tools and infrastructure for LLM deployment.

VideoRefer

VideoRefer Suite is a tool designed to enhance the fine-grained spatial-temporal understanding capabilities of Video Large Language Models (Video LLMs). It consists of three primary components: Model (VideoRefer) for perceiving, reasoning, and retrieval for user-defined regions at any specified timestamps, Dataset (VideoRefer-700K) for high-quality object-level video instruction data, and Benchmark (VideoRefer-Bench) to evaluate object-level video understanding capabilities. The tool can understand any object within a video.

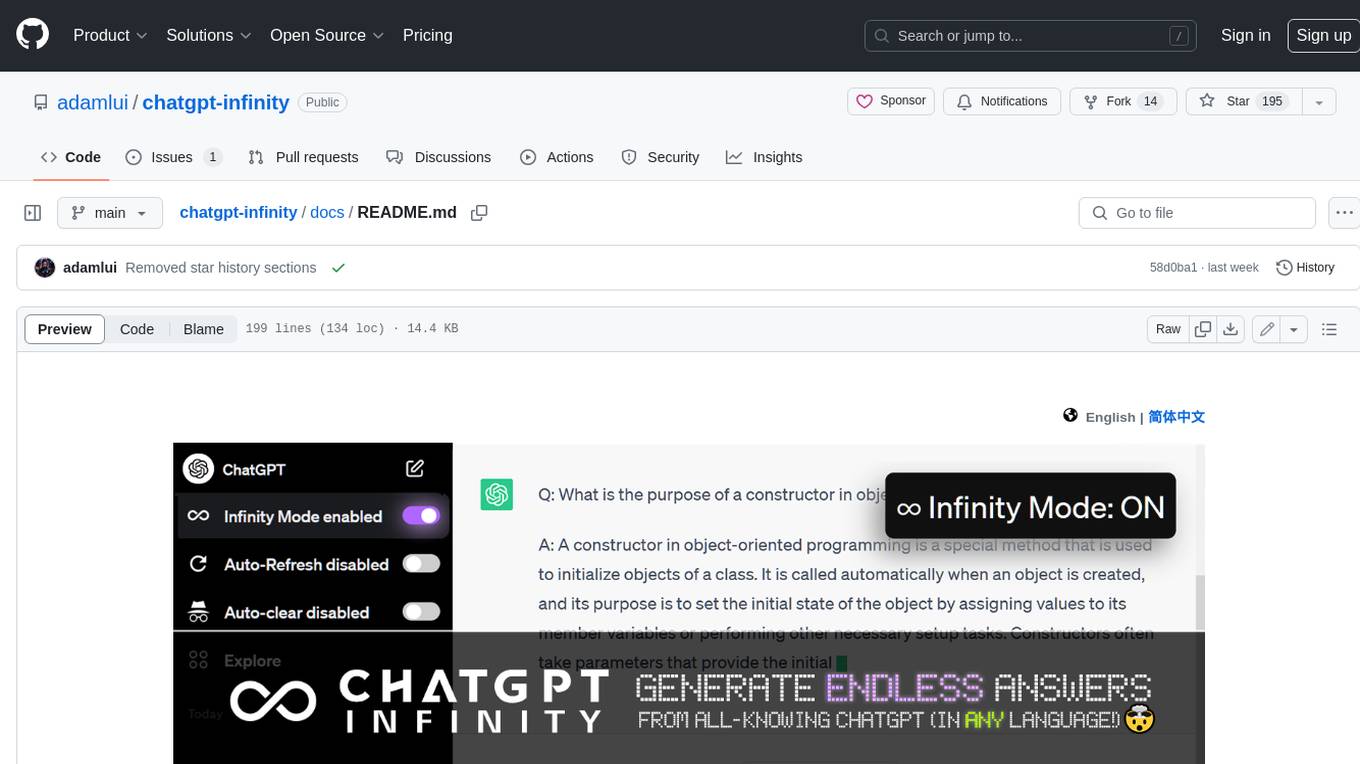

chatgpt-infinity

ChatGPT Infinity is a free and powerful add-on that makes ChatGPT generate infinite answers on any topic. It offers customizable topic selection, multilingual support, adjustable response interval, and auto-scroll feature for a seamless chat experience.

awesome-saas

The Alchemyst Platform Cookbook is a comprehensive guide for developers and builders to bring their AI ideas to life. It provides cutting-edge AI tools and templates to empower users in creating innovative projects. The platform offers API documentation, quick start guides, official and community templates for various projects. Users can contribute to the platform by forking the repository, adding the topic 'alchemyst-awesome-saas', making their repository public, and submitting a pull request. Troubleshooting guidelines are provided for contributors. The platform is actively maintained by the Alchemyst AI Team.

Plug-play-modules

Plug-play-modules is a comprehensive collection of plug-and-play modules for AI, deep learning, and computer vision applications. It includes various convolution variants, latest attention mechanisms, feature fusion modules, up-sampling/down-sampling modules, suitable for tasks like image classification, object detection, instance segmentation, semantic segmentation, single object tracking (SOT), multi-object tracking (MOT), infrared object tracking (RGBT), image de-raining, de-fogging, de-blurring, super-resolution, and more. The modules are designed to enhance model performance and feature extraction capabilities across various tasks.

LLaMA-Factory

LLaMA Factory is a unified framework for fine-tuning 100+ large language models (LLMs) with various methods, including pre-training, supervised fine-tuning, reward modeling, PPO, DPO and ORPO. It features integrated algorithms like GaLore, BAdam, DoRA, LongLoRA, LLaMA Pro, LoRA+, LoftQ and Agent tuning, as well as practical tricks like FlashAttention-2, Unsloth, RoPE scaling, NEFTune and rsLoRA. LLaMA Factory provides experiment monitors like LlamaBoard, TensorBoard, Wandb, MLflow, etc., and supports faster inference with OpenAI-style API, Gradio UI and CLI with vLLM worker. Compared to ChatGLM's P-Tuning, LLaMA Factory's LoRA tuning offers up to 3.7 times faster training speed with a better Rouge score on the advertising text generation task. By leveraging 4-bit quantization technique, LLaMA Factory's QLoRA further improves the efficiency regarding the GPU memory.

llama.cpp

llama.cpp is a C++ implementation of LLaMA, a large language model from Meta. It provides a command-line interface for inference and can be used for a variety of tasks, including text generation, translation, and question answering. llama.cpp is highly optimized for performance and can be run on a variety of hardware, including CPUs, GPUs, and TPUs.

ST-LLM

ST-LLM is a temporal-sensitive video large language model that incorporates joint spatial-temporal modeling, dynamic masking strategy, and global-local input module for effective video understanding. It has achieved state-of-the-art results on various video benchmarks. The repository provides code and weights for the model, along with demo scripts for easy usage. Users can train, validate, and use the model for tasks like video description, action identification, and reasoning.

InternLM

InternLM is a powerful language model series with features such as 200K context window for long-context tasks, outstanding comprehensive performance in reasoning, math, code, chat experience, instruction following, and creative writing, code interpreter & data analysis capabilities, and stronger tool utilization capabilities. It offers models in sizes of 7B and 20B, suitable for research and complex scenarios. The models are recommended for various applications and exhibit better performance than previous generations. InternLM models may match or surpass other open-source models like ChatGPT. The tool has been evaluated on various datasets and has shown superior performance in multiple tasks. It requires Python >= 3.8, PyTorch >= 1.12.0, and Transformers >= 4.34 for usage. InternLM can be used for tasks like chat, agent applications, fine-tuning, deployment, and long-context inference.

awesome-cuda-tensorrt-fpga

Okay, here is a JSON object with the requested information about the awesome-cuda-tensorrt-fpga repository:

For similar tasks

Awesome-Efficient-Agents

This repository, Awesome Efficient Agents, is a curated collection of papers focusing on memory, tool learning, and planning in agentic systems. It provides a comprehensive survey of efficient agent design, emphasizing memory construction, tool learning, and planning strategies. The repository categorizes papers based on memory processes, tool selection, tool calling, tool-integrated reasoning, and planning efficiency. It aims to help readers quickly access representative work in the field of efficient agent design.

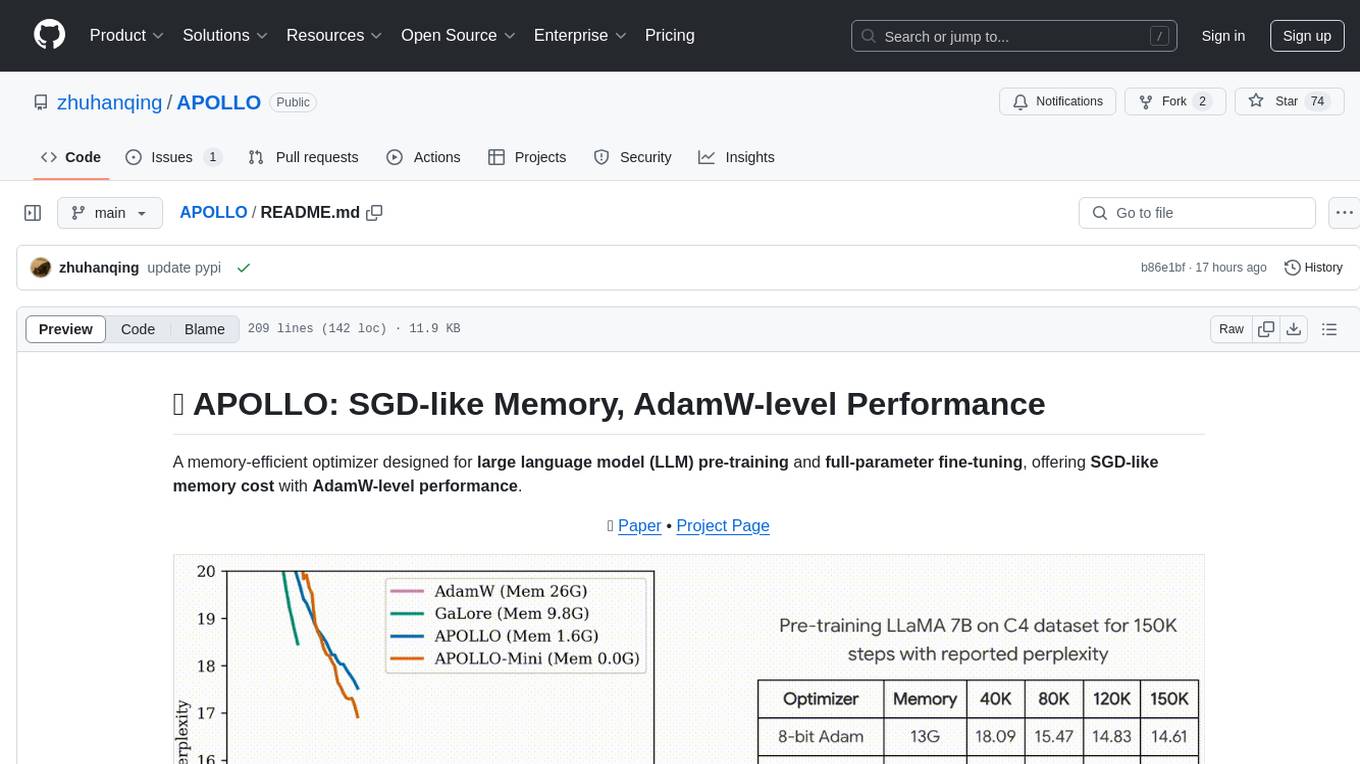

APOLLO

APOLLO is a memory-efficient optimizer designed for large language model (LLM) pre-training and full-parameter fine-tuning. It offers SGD-like memory cost with AdamW-level performance. The optimizer integrates low-rank approximation and optimizer state redundancy reduction to achieve significant memory savings while maintaining or surpassing the performance of Adam(W). Key contributions include structured learning rate updates for LLM training, approximated channel-wise gradient scaling in a low-rank auxiliary space, and minimal-rank tensor-wise gradient scaling. APOLLO aims to optimize memory efficiency during training large language models.

mountain-goap

Mountain GOAP is a generic C# GOAP (Goal Oriented Action Planning) library for creating AI agents in games. It favors composition over inheritance, supports multiple weighted goals, and uses A* pathfinding to plan paths through sequential actions. The library includes concepts like agents, goals, actions, sensors, permutation selectors, cost callbacks, state mutators, state checkers, and a logger. It also features event handling for agent planning and execution. The project structure includes examples, API documentation, and internal classes for planning and execution.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.