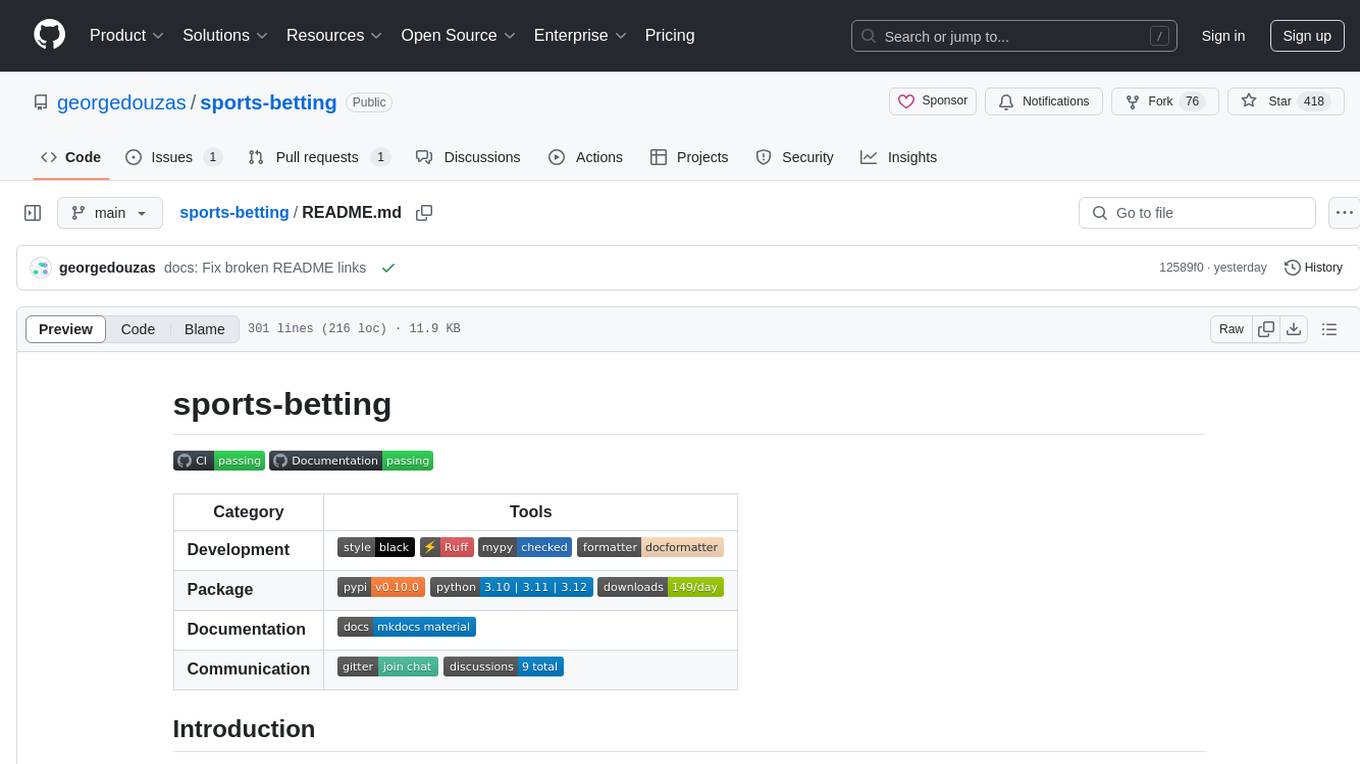

sports-betting

Collection of sports betting AI tools.

Stars: 493

Sports-betting is a Python library for implementing betting strategies and analyzing sports data. It provides tools for collecting, processing, and visualizing sports data to make informed betting decisions. The library includes modules for scraping data from sports websites, calculating odds, simulating betting strategies, and evaluating performance. With sports-betting, users can automate betting processes, test different strategies, and improve their betting outcomes.

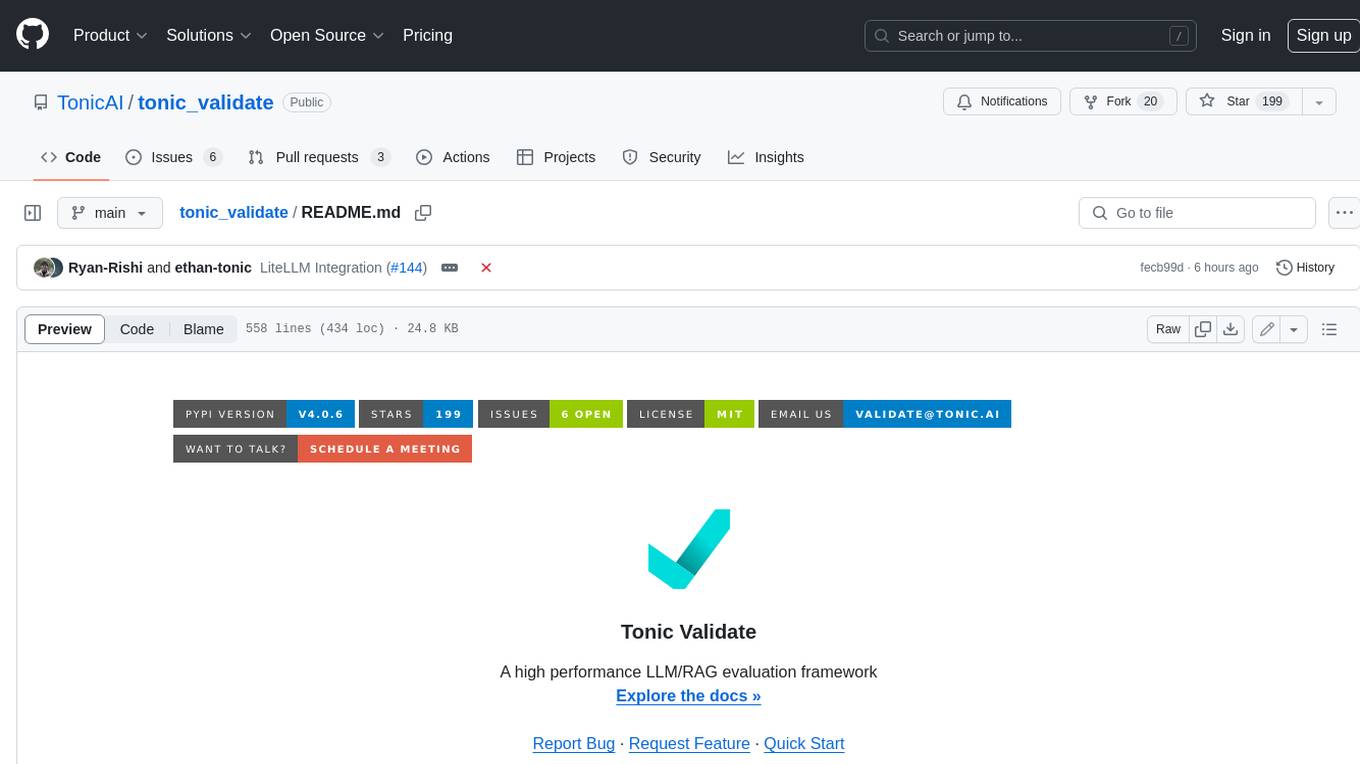

README:

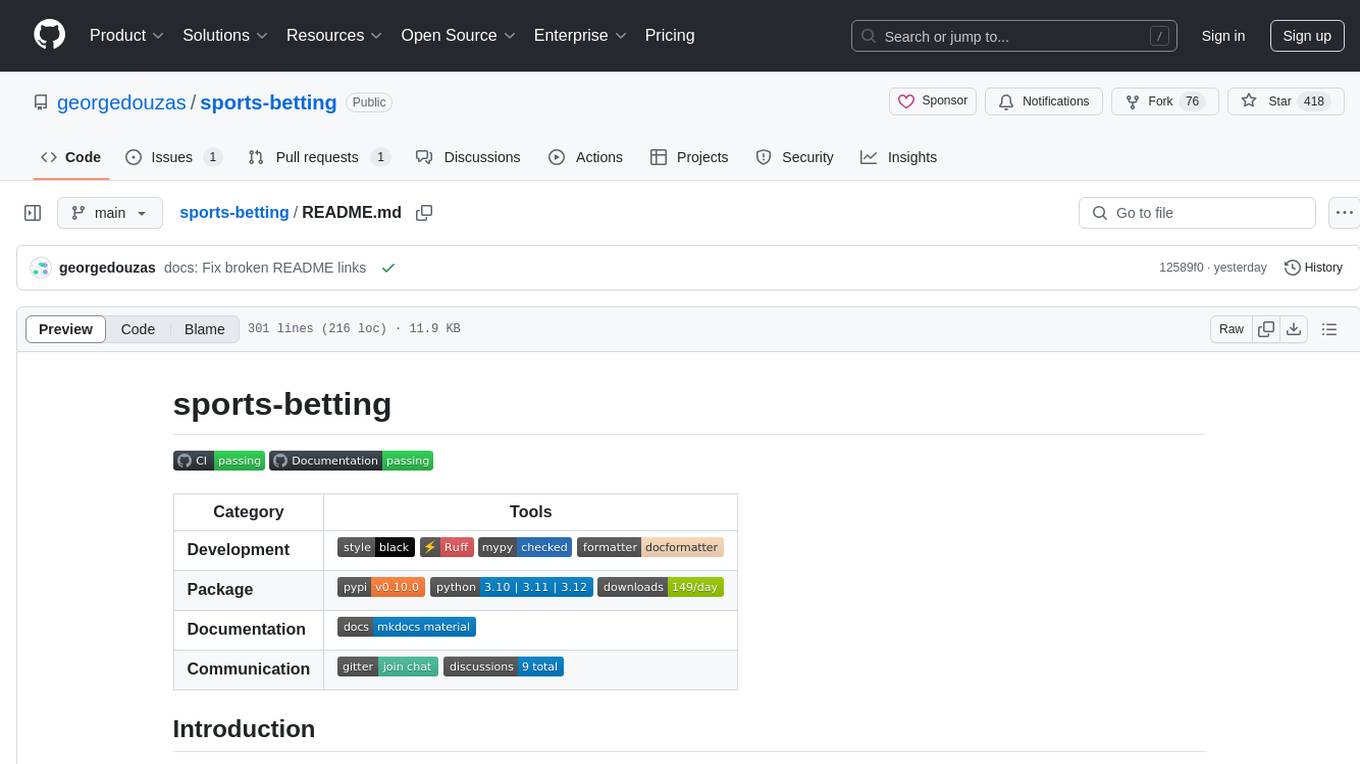


The sports-betting package is a handy set of tools for creating, testing, and using sports betting models. It comes with a Python API, a CLI, and even a GUI built with Reflex to keep things simple.

The main components of sports-betting are dataloaders and bettors objects:

- Dataloaders download and prepare data suitable for predictive modelling.

- Bettors provide an easy way to backtest betting strategies and predict the value bets of future events.

sports-betting comes with a GUI that provides an intuitive way to interact with the library. It supports the following functionalities:

- Easily upload, create, or update dataloaders to handle historical and fixtures data.

- Develop and test betting models with tools for backtesting and identifying value bets.

To launch the GUI, simply run the command sportsbet-gui. Once started, you’ll see the initial screen:

Explore the functionality with guidance from the built-in bot, which streams helpful messages along the way.

The sports-betting package makes it easy to download sports betting data:

from sportsbet.datasets import SoccerDataLoader

dataloader = SoccerDataLoader(param_grid={'league': ['Italy'], 'year': [2020]})

X_train, Y_train, O_train = dataloader.extract_train_data(odds_type='market_maximum')

X_fix, Y_fix, O_fix = dataloader.extract_fixtures_data()

X_train are the historical/training data and X_fix are the test/fixtures data. The historical data can be used to backtest the performance of a bettor model:

from sportsbet.evaluation import ClassifierBettor, backtest

from sklearn.dummy import DummyClassifier

bettor = ClassifierBettor(DummyClassifier())

backtest(bettor, X_train, Y_train, O_train)

We can use the trained bettor model to predict the value bets using the fixtures data:

bettor.fit(X_train, Y_train)

bettor.bet(X_fix, O_fix)

You can think of any sports betting event as a random experiment with unknown probabilities for the various outcomes. Even the most unlikely outcome, for example scoring more than 10 goals in a soccer match, is still assigned a small probability. The bookmaker estimates this probability P and offers the corresponding odds O. In theory, if the bookmaker offers the so-called fair odds O = 1 / P, then in the long run neither the bettor nor the bookmaker would make any money.
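To see why fair odds leave neither side with a profit, consider a unit stake on an outcome with probability $P$ at odds $O$. The short derivation below assumes decimal odds, where a winning bet returns $O$ times the stake; that convention is an assumption of this sketch, although it is the one consistent with $O = 1 / P$:

```latex
\mathbb{E}[\text{profit}] = P\,(O - 1) - (1 - P) = P\,O - 1,
\qquad
O = \frac{1}{P} \;\Longrightarrow\; \mathbb{E}[\text{profit}] = 0 .
```

Any odds below $1 / P$ make the expected profit negative for the bettor, and any odds above it make it positive.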

The bookmaker's strategy is to adjust the odds in their favor using the over-round of probabilities. In practice, they offer odds lower than the estimated fair odds. The important point here is that the bookmaker still has to estimate the probabilities of outcomes and provide odds that guarantee a long-term profit.
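As a rough illustration of the over-round, the sketch below converts a set of decimal odds into implied probabilities and measures how far their sum exceeds 1. The odds values and the helper names (`implied_probabilities`, `over_round`) are made up for this example and are not part of the sports-betting API.

```python
def implied_probabilities(odds):
    """Convert decimal odds to implied probabilities (1 / odds)."""
    return [1.0 / o for o in odds]


def over_round(odds):
    """Return the bookmaker margin: sum of implied probabilities minus 1."""
    return sum(implied_probabilities(odds)) - 1.0


# Hypothetical decimal odds for home win, draw and away win.
offered_odds = [2.0, 3.2, 4.0]

probs = implied_probabilities(offered_odds)
margin = over_round(offered_odds)

# With fair odds the implied probabilities would sum to exactly 1,
# so any positive margin is the adjustment in the bookmaker's favor.
print(f"Implied probabilities sum to {sum(probs):.4f}")
print(f"Over-round: {margin:.4f}")
```

With these made-up odds the implied probabilities sum to more than 1, which is exactly the adjustment described above.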

On the other hand, the bettor can also estimate the probabilities and compare them to the odds the bookmaker offers. If the estimated probability of an outcome is higher than the implied probability from the provided odds, then the bet is called a value bet.
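The value-bet condition can be written down in a few lines. This is a minimal sketch assuming decimal odds, so that the implied probability is `1 / odds`; the functions `is_value_bet` and `expected_profit` and all numbers are hypothetical and only illustrate the definition, not how the library's bettors are implemented.

```python
def is_value_bet(estimated_prob, offered_odds):
    """A bet has value when the estimated probability exceeds the implied one."""
    implied_prob = 1.0 / offered_odds
    return estimated_prob > implied_prob


def expected_profit(estimated_prob, offered_odds, stake=1.0):
    """Expected profit of a bet under the bettor's own probability estimate."""
    expected_win = estimated_prob * (offered_odds - 1.0) * stake
    expected_loss = (1.0 - estimated_prob) * stake
    return expected_win - expected_loss


# Hypothetical case: the bettor estimates 55% while odds of 2.0 imply 50%.
print(is_value_bet(0.55, 2.0))  # True
print(f"{expected_profit(0.55, 2.0, stake=50.0):.2f}")  # 5.00

# Hypothetical case: the bettor estimates 45%, so the same odds offer no value.
print(is_value_bet(0.45, 2.0))  # False
```

The expected profit is only as good as the probability estimate that goes into it, which is why backtesting the estimates matters.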

The only long-term betting strategy that makes sense is to select value bets. However, you have to remember that neither the bettor nor the bookmaker can access the actual probabilities of outcomes. Therefore, identifying a value bet from the side of the bettor is still an estimation. The bettor or the bookmaker might be wrong, or both of them.

Another essential point is that bookmakers can access resources that the typical bettor rarely has. For instance, they have more data, computational power, and teams of experts working on predictive models. You may assume that trying to beat them is pointless, but this is not necessarily correct. The bookmakers have multiple factors to consider when they offer their adjusted odds, which is why the offered odds vary considerably. The bettor should aim to systematically estimate the value bets and backtest their performance, rather than to create arbitrarily accurate predictive models. This is a realistic goal, and sports-betting can help by providing appropriate tools.

For user installation, sports-betting is currently available on PyPI, and you can install it via pip:

pip install sports-betting

If you have Node.js version v22.0.0 or higher, you can optionally install the GUI:

pip install sports-betting[gui]

Development installation requires cloning the repository and then using PDM to install the project as well as the main and development dependencies:

git clone https://github.com/georgedouzas/sports-betting.git

cd sports-betting

pdm install

You can access sports-betting through the GUI application, the Python API, or the CLI. However, it’s a good idea to get familiar with the Python API since you’ll need it to create configuration files for the CLI or load custom betting models into the GUI. sports-betting supports all common sports betting needs, i.e. fetching historical and fixtures data as well as backtesting of betting strategies and prediction of value bets.

Launch the GUI app with the command sportsbet-gui.

Here are a few things you can do with the GUI:

- Configure the dataloader:

- Create a new betting model:

- Run the model to get either backtesting results or value bets:

Assume we would like to backtest the following scenario and use the bettor object to predict value bets:

- Selection of data
  - First and second division of German, Italian and French leagues for the years 2021-2024
  - Maximum odds of the market in order to backtest our betting strategy
- Configuration of betting strategy
  - 5-fold time ordered cross-validation
  - Initial cash of 10000 euros
  - Stake of 50 euros for each bet
  - Use match odds (home win, away win and draw) as betting markets
  - Logistic regression classifier to predict probabilities and value bets

# Selection of data

from sportsbet.datasets import SoccerDataLoader

leagues = ['Germany', 'Italy', 'France']

divisions = [1, 2]

years = [2021, 2022, 2023, 2024]

odds_type = 'market_maximum'

dataloader = SoccerDataLoader({'league': leagues, 'year': years, 'division': divisions})

X_train, Y_train, O_train = dataloader.extract_train_data(odds_type=odds_type)

X_fix, _, O_fix = dataloader.extract_fixtures_data()

# Configuration of betting strategy

from sklearn.model_selection import TimeSeriesSplit

from sklearn.compose import make_column_transformer

from sklearn.linear_model import LogisticRegression

from sklearn.impute import SimpleImputer

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import OneHotEncoder

from sklearn.multioutput import MultiOutputClassifier

from sportsbet.evaluation import ClassifierBettor, backtest

tscv = TimeSeriesSplit(5)

init_cash = 10000.0

stake = 50.0

betting_markets = ['home_win__full_time_goals', 'draw__full_time_goals', 'away_win__full_time_goals']

classifier = make_pipeline(
    make_column_transformer(
        (OneHotEncoder(handle_unknown='ignore'), ['league', 'home_team', 'away_team']), remainder='passthrough'
    ),
    SimpleImputer(),
    MultiOutputClassifier(LogisticRegression(solver='liblinear', random_state=7, class_weight='balanced', C=50)),
)

bettor = ClassifierBettor(classifier, betting_markets=betting_markets, stake=stake, init_cash=init_cash)

# Apply backtesting and get results

backtesting_results = backtest(bettor, X_train, Y_train, O_train, cv=tscv)

# Get value bets for upcoming betting events

bettor.fit(X_train, Y_train)

bettor.bet(X_fix, O_fix)

The command sportsbet provides various sub-commands to download data and predict the value bets. For any sub-command you may add the --help flag to get more information about its usage.

In order to use the commands, a configuration file is required. You can find examples of such configuration files in sports-betting/configs/. The configuration file should have a Python file extension and contain a few variables. The variables DATALOADER_CLASS and PARAM_GRID are mandatory while the rest are optional.

The following variables configure the data extraction:

- `DATALOADER_CLASS`: The dataloader class to use.
- `PARAM_GRID`: The parameters grid to select the type of information that the data includes.
- `DROP_NA_THRES`: The parameter `drop_na_thres` of the dataloader's `extract_train_data`.
- `ODDS_TYPE`: The parameter `odds_type` of the dataloader's `extract_train_data`.

The following variables configure the betting process:

- `BETTOR`: A bettor object.
- `CV`: The parameter `cv` of the function `backtest`.
- `N_JOBS`: The parameter `n_jobs` of the function `backtest`.
- `VERBOSE`: The parameter `verbose` of the function `backtest`.
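Putting the variables together, a configuration file might look like the sketch below. The imports and the dataloader, bettor and odds-type values are taken from the examples earlier in this README; the specific values chosen for `DROP_NA_THRES`, `N_JOBS` and `VERBOSE` are assumptions for illustration only, so check the files in sports-betting/configs/ for the authoritative format.

```python
# config.py: a hypothetical configuration file for the sportsbet CLI.
from sklearn.dummy import DummyClassifier
from sklearn.model_selection import TimeSeriesSplit

from sportsbet.datasets import SoccerDataLoader
from sportsbet.evaluation import ClassifierBettor

# Mandatory: which dataloader to use and which data to select.
DATALOADER_CLASS = SoccerDataLoader
PARAM_GRID = {'league': ['Italy'], 'year': [2020]}

# Optional: passed to the dataloader's extract_train_data.
DROP_NA_THRES = 1.0  # assumed value, not taken from this README
ODDS_TYPE = 'market_maximum'

# Optional: the bettor and the arguments of the backtest function.
BETTOR = ClassifierBettor(DummyClassifier())
CV = TimeSeriesSplit(5)
N_JOBS = -1  # assumed value
VERBOSE = 1  # assumed value
```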

Once these variables are provided, we can use the appropriate commands to access any of sports-betting's functionalities.

Show available parameters for dataloaders:

sportsbet dataloader params -c config.py

Show available odds types:

sportsbet dataloader odds-types -c config.py

Extract training data and save them as CSV files:

sportsbet dataloader training -c config.py -d /path/to/directory

Extract fixtures data and save them as CSV files:

sportsbet dataloader fixtures -c config.py -d /path/to/directory

Backtest the bettor and save the results as a CSV file:

sportsbet bettor backtest -c config.py -d /path/to/directory

Get the value bets and save them as a CSV file:

sportsbet bettor bet -c config.py -d /path/to/directory

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for sports-betting

Similar Open Source Tools

sports-betting

Sports-betting is a Python library for implementing betting strategies and analyzing sports data. It provides tools for collecting, processing, and visualizing sports data to make informed betting decisions. The library includes modules for scraping data from sports websites, calculating odds, simulating betting strategies, and evaluating performance. With sports-betting, users can automate betting processes, test different strategies, and improve their betting outcomes.

LayerSkip

LayerSkip is an implementation enabling early exit inference and self-speculative decoding. It provides a code base for running models trained using the LayerSkip recipe, offering speedup through self-speculative decoding. The tool integrates with Hugging Face transformers and provides checkpoints for various LLMs. Users can generate tokens, benchmark on datasets, evaluate tasks, and sweep over hyperparameters to optimize inference speed. The tool also includes correctness verification scripts and Docker setup instructions. Additionally, other implementations like gpt-fast and Native HuggingFace are available. Training implementation is a work-in-progress, and contributions are welcome under the CC BY-NC license.

garak

Garak is a free tool that checks if a Large Language Model (LLM) can be made to fail in a way that is undesirable. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. Garak's a free tool. We love developing it and are always interested in adding functionality to support applications.

py-vectara-agentic

The `vectara-agentic` Python library is designed for developing powerful AI assistants using Vectara and Agentic-RAG. It supports various agent types, includes pre-built tools for domains like finance and legal, and enables easy creation of custom AI assistants and agents. The library provides tools for summarizing text, rephrasing text, legal tasks like summarizing legal text and critiquing as a judge, financial tasks like analyzing balance sheets and income statements, and database tools for inspecting and querying databases. It also supports observability via LlamaIndex and Arize Phoenix integration.

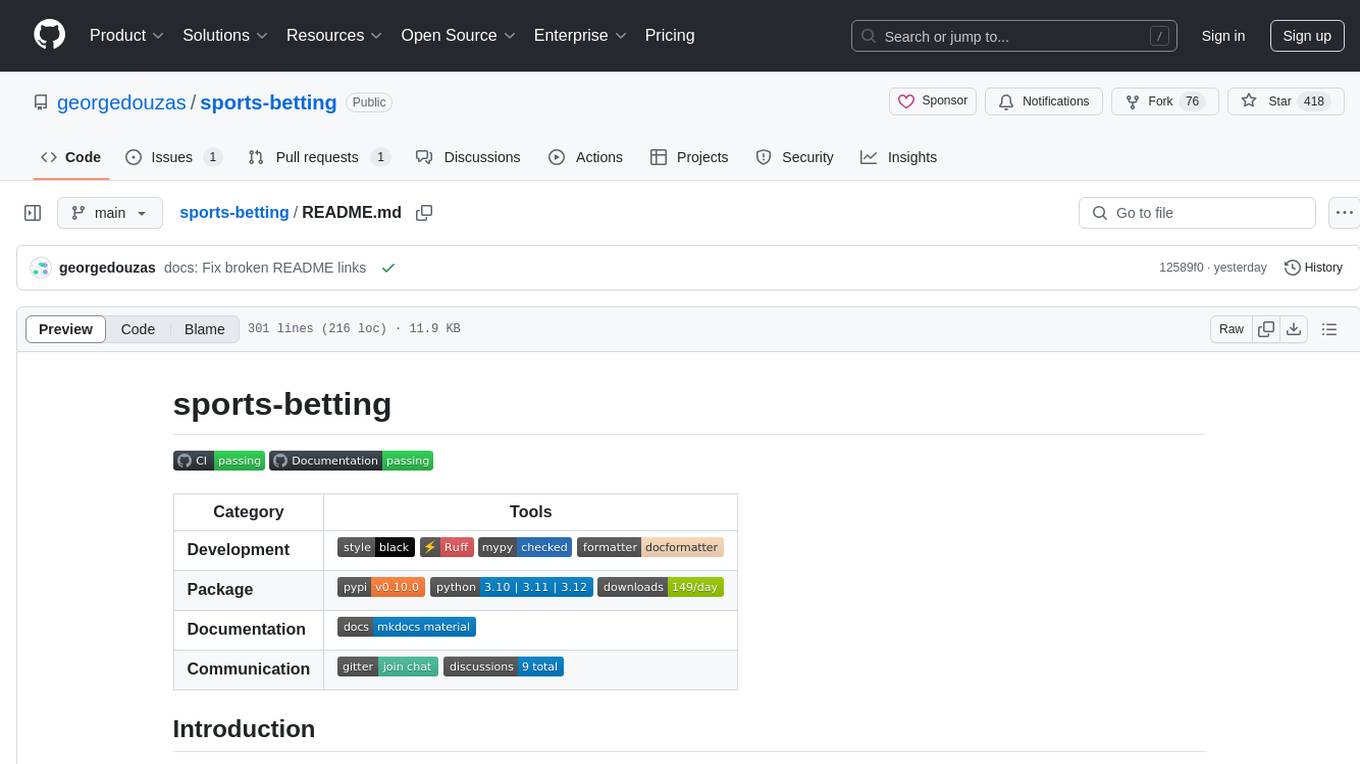

ice-score

ICE-Score is a tool designed to instruct large language models to evaluate code. It provides a minimum viable product (MVP) for evaluating generated code snippets using inputs such as problem, output, task, aspect, and model. Users can also evaluate with reference code and enable zero-shot chain-of-thought evaluation. The tool is built on codegen-metrics and code-bert-score repositories and includes datasets like CoNaLa and HumanEval. ICE-Score has been accepted to EACL 2024.

ScandEval

ScandEval is a framework for evaluating pretrained language models on mono- or multilingual language tasks. It provides a unified interface for benchmarking models on a variety of tasks, including sentiment analysis, question answering, and machine translation. ScandEval is designed to be easy to use and extensible, making it a valuable tool for researchers and practitioners alike.

LeanCopilot

Lean Copilot is a tool that enables the use of large language models (LLMs) in Lean for proof automation. It provides features such as suggesting tactics/premises, searching for proofs, and running inference of LLMs. Users can utilize built-in models from LeanDojo or bring their own models to run locally or on the cloud. The tool supports platforms like Linux, macOS, and Windows WSL, with optional CUDA and cuDNN for GPU acceleration. Advanced users can customize behavior using Tactic APIs and Model APIs. Lean Copilot also allows users to bring their own models through ExternalGenerator or ExternalEncoder. The tool comes with caveats such as occasional crashes and issues with premise selection and proof search. Users can get in touch through GitHub Discussions for questions, bug reports, feature requests, and suggestions. The tool is designed to enhance theorem proving in Lean using LLMs.

oasis

OASIS is a scalable, open-source social media simulator that integrates large language models with rule-based agents to realistically mimic the behavior of up to one million users on platforms like Twitter and Reddit. It facilitates the study of complex social phenomena such as information spread, group polarization, and herd behavior, offering a versatile tool for exploring diverse social dynamics and user interactions in digital environments. With features like scalability, dynamic environments, diverse action spaces, and integrated recommendation systems, OASIS provides a comprehensive platform for simulating social media interactions at a large scale.

probsem

ProbSem is a repository that provides a framework to leverage large language models (LLMs) for assigning context-conditional probability distributions over queried strings. It supports OpenAI engines and HuggingFace CausalLM models, and is flexible for research applications in linguistics, cognitive science, program synthesis, and NLP. Users can define prompts, contexts, and queries to derive probability distributions over possible completions, enabling tasks like cloze completion, multiple-choice QA, semantic parsing, and code completion. The repository offers CLI and API interfaces for evaluation, with options to customize models, normalize scores, and adjust temperature for probability distributions.

sage

Sage is a tool that allows users to chat with any codebase, providing a chat interface for code understanding and integration. It simplifies the process of learning how a codebase works by offering heavily documented answers sourced directly from the code. Users can set up Sage locally or on the cloud with minimal effort. The tool is designed to be easily customizable, allowing users to swap components of the pipeline and improve the algorithms powering code understanding and generation.

Hurley-AI

Hurley AI is a next-gen framework for developing intelligent agents through Retrieval-Augmented Generation. It enables easy creation of custom AI assistants and agents, supports various agent types, and includes pre-built tools for domains like finance and legal. Hurley AI integrates with LLM inference services and provides observability with Arize Phoenix. Users can create Hurley RAG tools with a single line of code and customize agents with specific instructions. The tool also offers various helper functions to connect with Hurley RAG and search tools, along with pre-built tools for tasks like summarizing text, rephrasing text, understanding memecoins, and querying databases.

paper-qa

PaperQA is a minimal package for question and answering from PDFs or text files, providing very good answers with in-text citations. It uses OpenAI Embeddings to embed and search documents, and includes a process of embedding docs, queries, searching for top passages, creating summaries, using an LLM to re-score and select relevant summaries, putting summaries into prompt, and generating answers. The tool can be used to answer specific questions related to scientific research by leveraging citations and relevant passages from documents.

HuggingFaceGuidedTourForMac

HuggingFaceGuidedTourForMac is a guided tour on how to install optimized pytorch and optionally Apple's new MLX, JAX, and TensorFlow on Apple Silicon Macs. The repository provides steps to install homebrew, pytorch with MPS support, MLX, JAX, TensorFlow, and Jupyter lab. It also includes instructions on running large language models using HuggingFace transformers. The repository aims to help users set up their Macs for deep learning experiments with optimized performance.

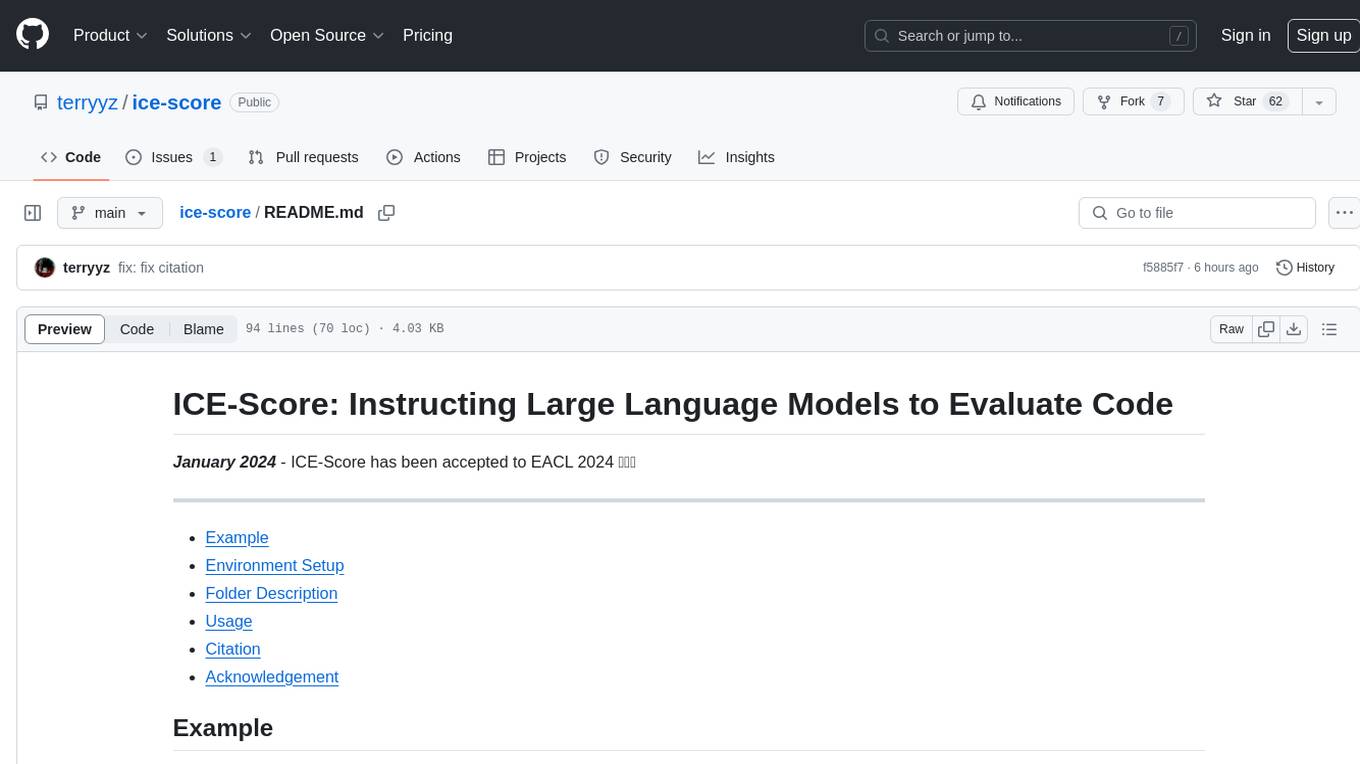

turnkeyml

TurnkeyML is a tools framework that integrates models, toolchains, and hardware backends to simplify the evaluation and actuation of deep learning models. It supports use cases like exporting ONNX files, performance validation, functional coverage measurement, stress testing, and model insights analysis. The framework consists of analysis, build, runtime, reporting tools, and a models corpus, seamlessly integrated to provide comprehensive functionality with simple commands. Extensible through plugins, it offers support for various export and optimization tools and AI runtimes. The project is actively seeking collaborators and is licensed under Apache 2.0.

storm

STORM is a LLM system that writes Wikipedia-like articles from scratch based on Internet search. While the system cannot produce publication-ready articles that often require a significant number of edits, experienced Wikipedia editors have found it helpful in their pre-writing stage. **Try out our [live research preview](https://storm.genie.stanford.edu/) to see how STORM can help your knowledge exploration journey and please provide feedback to help us improve the system 🙏!**

tonic_validate

Tonic Validate is a framework for the evaluation of LLM outputs, such as Retrieval Augmented Generation (RAG) pipelines. Validate makes it easy to evaluate, track, and monitor your LLM and RAG applications. Validate allows you to evaluate your LLM outputs through the use of our provided metrics which measure everything from answer correctness to LLM hallucination. Additionally, Validate has an optional UI to visualize your evaluation results for easy tracking and monitoring.

For similar tasks

sports-betting

Sports-betting is a Python library for implementing betting strategies and analyzing sports data. It provides tools for collecting, processing, and visualizing sports data to make informed betting decisions. The library includes modules for scraping data from sports websites, calculating odds, simulating betting strategies, and evaluating performance. With sports-betting, users can automate betting processes, test different strategies, and improve their betting outcomes.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

DeepBI

DeepBI is an AI-native data analysis platform that leverages the power of large language models to explore, query, visualize, and share data from any data source. Users can use DeepBI to gain data insight and make data-driven decisions.

client

DagsHub is a platform for machine learning and data science teams to build, manage, and collaborate on their projects. With DagsHub you can: 1. Version code, data, and models in one place. Use the free provided DagsHub storage or connect it to your cloud storage 2. Track Experiments using Git, DVC or MLflow, to provide a fully reproducible environment 3. Visualize pipelines, data, and notebooks in and interactive, diff-able, and dynamic way 4. Label your data directly on the platform using Label Studio 5. Share your work with your team members 6. Stream and upload your data in an intuitive and easy way, while preserving versioning and structure. DagsHub is built firmly around open, standard formats for your project. In particular: * Git * DVC * MLflow * Label Studio * Standard data formats like YAML, JSON, CSV Therefore, you can work with DagsHub regardless of your chosen programming language or frameworks.

SQLAgent

DataAgent is a multi-agent system for data analysis, capable of understanding data development and data analysis requirements, understanding data, and generating SQL and Python code for tasks such as data query, data visualization, and machine learning.

google-research

This repository contains code released by Google Research. All datasets in this repository are released under the CC BY 4.0 International license, which can be found here: https://creativecommons.org/licenses/by/4.0/legalcode. All source files in this repository are released under the Apache 2.0 license, the text of which can be found in the LICENSE file.

airda

airda(Air Data Agent) is a multi-agent system for data analysis, which can understand data development and data analysis requirements, understand data, and generate SQL and Python code for data query, data visualization, machine learning and other tasks.

Wandb.jl

Unofficial Julia Bindings for wandb.ai. Wandb is a platform for tracking and visualizing machine learning experiments. It provides a simple and consistent way to log metrics, parameters, and other data from your experiments, and to visualize them in a variety of ways. Wandb.jl provides a convenient way to use Wandb from Julia.

For similar jobs

sports-betting

Sports-betting is a Python library for implementing betting strategies and analyzing sports data. It provides tools for collecting, processing, and visualizing sports data to make informed betting decisions. The library includes modules for scraping data from sports websites, calculating odds, simulating betting strategies, and evaluating performance. With sports-betting, users can automate betting processes, test different strategies, and improve their betting outcomes.

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.