Best AI tools for Scale Resources

20 - AI tool Sites

Heroku

Heroku is a cloud platform that enables developers to build, deliver, monitor, and scale applications quickly and easily. It supports multiple programming languages and provides a seamless deployment process. With Heroku, developers can focus on coding without worrying about infrastructure management.

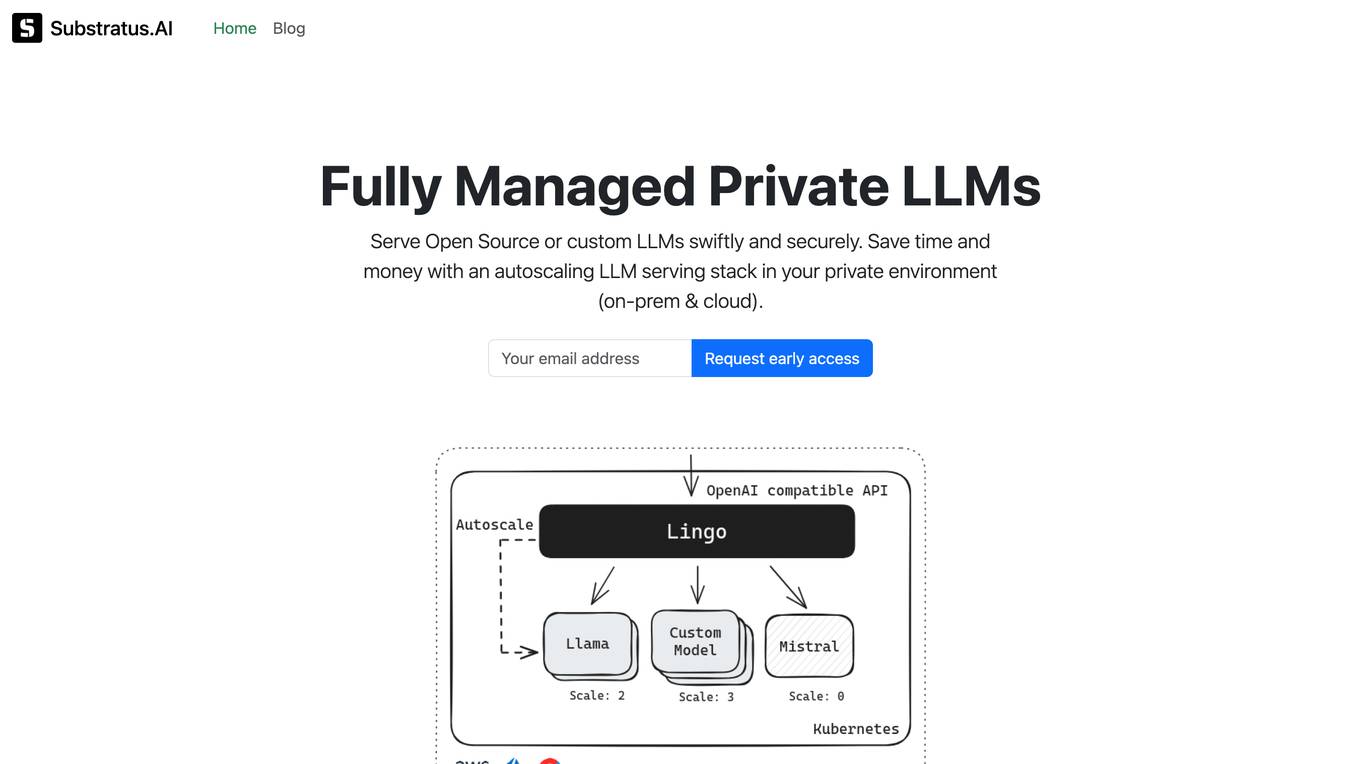

Substratus.AI

Substratus.AI is a fully managed private LLM platform that allows users to serve LLMs (Llama and Mistral) in their own cloud account. It enables users to keep control of their data while reducing OpenAI costs by up to 10x. With Substratus.AI, users can run LLMs in production in hours instead of weeks, making it a convenient and efficient solution for AI model deployment.

KubeHelper

KubeHelper is an AI-powered tool designed to reduce Kubernetes downtime by providing troubleshooting solutions and command searches. It seamlessly integrates with Slack, allowing users to interact with their Kubernetes cluster in plain English without the need to remember complex commands. With features like troubleshooting steps, command search, infrastructure management, scaling capabilities, and service disruption detection, KubeHelper aims to simplify Kubernetes operations and enhance system reliability.

WebServerPro

WebServerPro is a platform that provides web server hosting services. It helps users set up and manage their web servers efficiently. Users can easily deploy their websites and applications on the server, ensuring a seamless online presence. The platform offers a user-friendly interface and reliable hosting solutions to meet various needs.

FluidStack

FluidStack is a leading GPU cloud platform designed for AI and LLM (Large Language Model) training. It offers unlimited scale for AI training and inference, allowing users to access thousands of fully-interconnected GPUs on demand. Trusted by top AI startups, FluidStack aggregates GPU capacity from data centers worldwide, providing access to over 50,000 GPUs for accelerating training and inference. With 1000+ data centers across 50+ countries, FluidStack ensures reliable and efficient GPU cloud services at competitive prices.

SaaStr AI

SaaStr AI is a platform that serves as the world's largest community for B2B + AI software. It focuses on sharing, scaling, and learning about the latest trends in AI and go-to-market strategies. The platform offers a wide range of resources, including podcasts, articles, and events, to help businesses navigate the AI era and drive growth.

LAION

LAION is a non-profit organization that provides datasets, tools, and models to advance machine learning research. The organization's goal is to promote open public education and encourage the reuse of existing datasets and models to reduce the environmental impact of machine learning research.

SaasPedia

SaasPedia is a website that provides resources and services to help SaaS businesses grow and scale their traffic, sales, and revenue with organic marketing campaigns and strategies. The website offers a variety of resources, including a blog, ebooks, webinars, and tools, as well as services such as cold outreach, SEO, and content marketing. SaasPedia also has a directory of SaaS companies and a list of SaaS-related podcasts and YouTube channels.

Cohere

Cohere is a customer support platform that uses AI to help businesses resolve tickets faster and reduce costs. It offers a range of features, including automated ticket resolution, personalized answers, step-by-step guidance, and advanced analytics. Cohere integrates with existing support resources and can be implemented quickly and easily. It has been used by leading companies to achieve industry-leading outcomes, including Ramp, Loom, Rippling, OpenPhone, Flock Safety, and Podium.

SunDevs

SunDevs is an AI application that focuses on solving business problems to provide exceptional customer experiences. The application offers various AI solutions, features, and resources to help businesses in different industries enhance their operations and customer interactions. SunDevs utilizes AI technology, such as chatbots and virtual assistants, to automate and scale business processes, leading to improved efficiency and customer satisfaction.

SolidGrids

SolidGrids is an AI-powered image enhancement tool designed specifically for e-commerce businesses. It automates the image post-production process, saving time and resources. With SolidGrids, you can easily remove backgrounds, enhance product images, and create consistent branding across your e-commerce site. The platform offers seamless cloud integrations and is cost-effective compared to traditional methods.

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit organization based in San Francisco. It conducts research and advocacy projects and provides resources to reduce societal-scale risks associated with artificial intelligence (AI). CAIS focuses on technical AI safety research and field-building projects, and offers a compute cluster for AI/ML safety projects. It aims to develop and use AI safely to benefit society, addressing inherent risks and advocating for safety standards.

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit based in San Francisco. Their mission is to reduce societal-scale risks associated with artificial intelligence (AI) by conducting impactful research, building the field of AI safety researchers, and advocating for safety standards. They offer resources such as a compute cluster for AI/ML safety projects, a blog with in-depth examinations of AI safety topics, and a newsletter providing updates on AI safety developments. CAIS focuses on technical and conceptual research to address the risks posed by advanced AI systems.

Motific.ai

Motific.ai is a responsible GenAI tool powered by data at scale. It offers a fully managed service with natural language compliance and security guardrails, an intelligence service, and an enterprise data-powered, end-to-end retrieval augmented generation (RAG) service. Users can rapidly deliver trustworthy GenAI assistants and API endpoints, configure assistants with their organization's data, optimize performance, and connect with top GenAI model providers. Motific.ai enables users to create custom knowledge bases, connect to various data sources, and ensure responsible AI practices. It supports English only and offers insights on usage, time savings, and model optimization.

JobSynergy

JobSynergy is an AI-powered platform that automates the hiring process by conducting interviews at scale. It offers a real-world interview simulator that adapts dynamically to candidates' responses, custom questions and metrics evaluation, cheating detection using eye, voice, and screen monitoring, and detailed reports for better hiring decisions. The platform improves efficiency and candidate experience, and ensures security and integrity in the hiring process.

Scalesleek

Scalesleek.app is a website that appears to have expired. It seems to have been a resource hub providing information on various topics. The domain is currently inactive, and users are advised to contact their domain registration service provider for assistance.

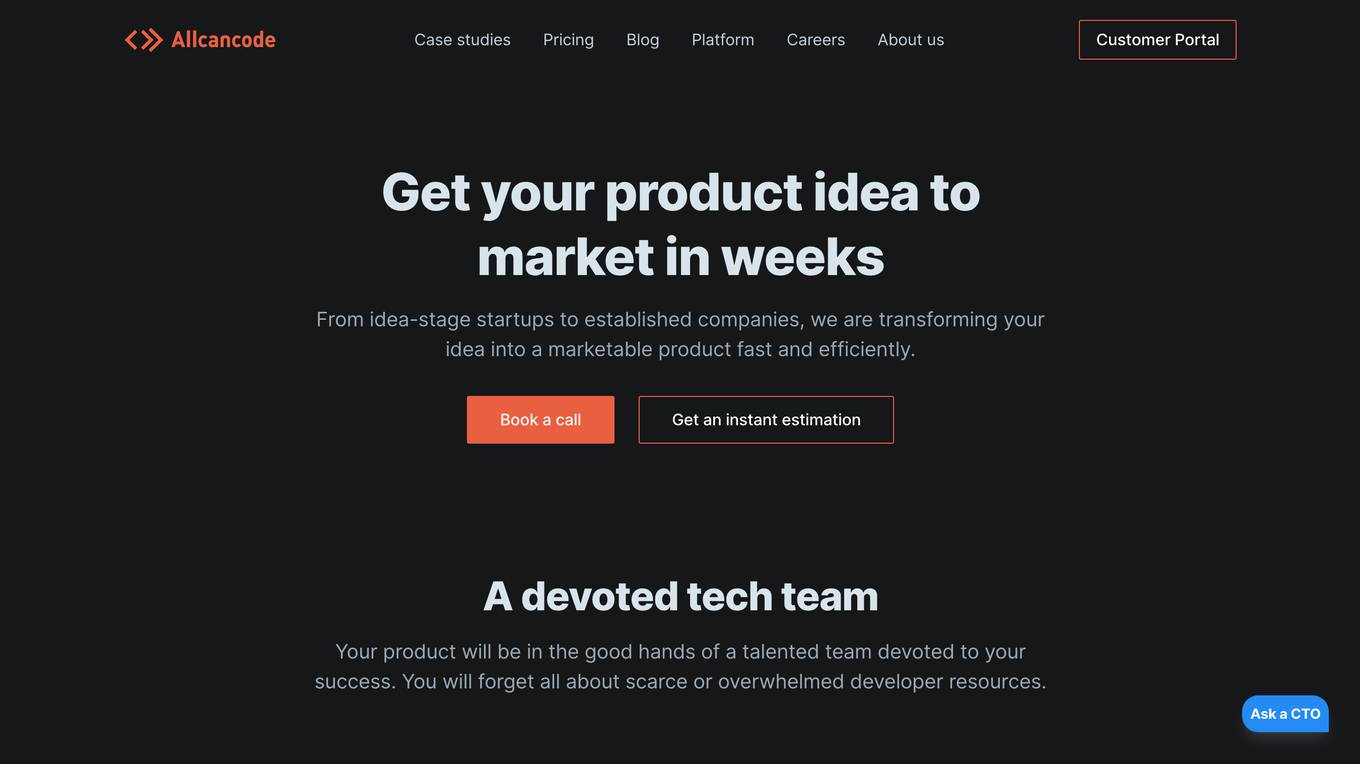

Allcancode

Allcancode is a platform that helps individuals and companies bring their product ideas to market quickly and efficiently. From idea-stage startups to established companies, Allcancode transforms ideas into marketable products by providing a devoted tech team, keeping time to market short, staying on budget, and giving users full ownership of the source code. The platform follows a lean four-step process (Discovery & Design Phase, Development Phase, Delivery Phase, and Evolution Phase), ensuring transparency and flexibility throughout the product development journey.

NVIDIA Run:ai

NVIDIA Run:ai is an enterprise platform for AI workloads and GPU orchestration. It accelerates AI and machine learning operations by addressing key infrastructure challenges through dynamic resource allocation, comprehensive AI life-cycle support, and strategic resource management. The platform significantly enhances GPU efficiency and workload capacity by pooling resources across environments and utilizing advanced orchestration. NVIDIA Run:ai provides unparalleled flexibility and adaptability, supporting public clouds, private clouds, hybrid environments, or on-premises data centers.

Vendasta

Vendasta is a full-stack growth platform that provides agencies with the tools and resources they need to scale their businesses and help their clients succeed. With Vendasta, agencies can offer a wide range of services to their clients, including marketing automation, project management, sales and CRM, workflow automations, and more. Vendasta also offers a marketplace where agencies can resell products and services from other vendors, as well as a white-label client portal that allows clients to manage their own accounts and access all of the services that their agency offers.

Simsy

Simsy is an AI-driven platform that empowers graduate students, working professionals, and entrepreneurs to innovate, grow, and scale their ventures. It provides personalized recommendations, startup resources, and access to a global network of mentors, investors, and service providers. Simsy accelerates the startup journey by offering tailored tools, a hyper-personalized AI copilot, and a comprehensive support system. The platform fosters collaboration, drives growth, and shapes the future of entrepreneurship by providing accessible pricing, managed services, and venture studio launchpad programs.

2 - Open Source AI Tools

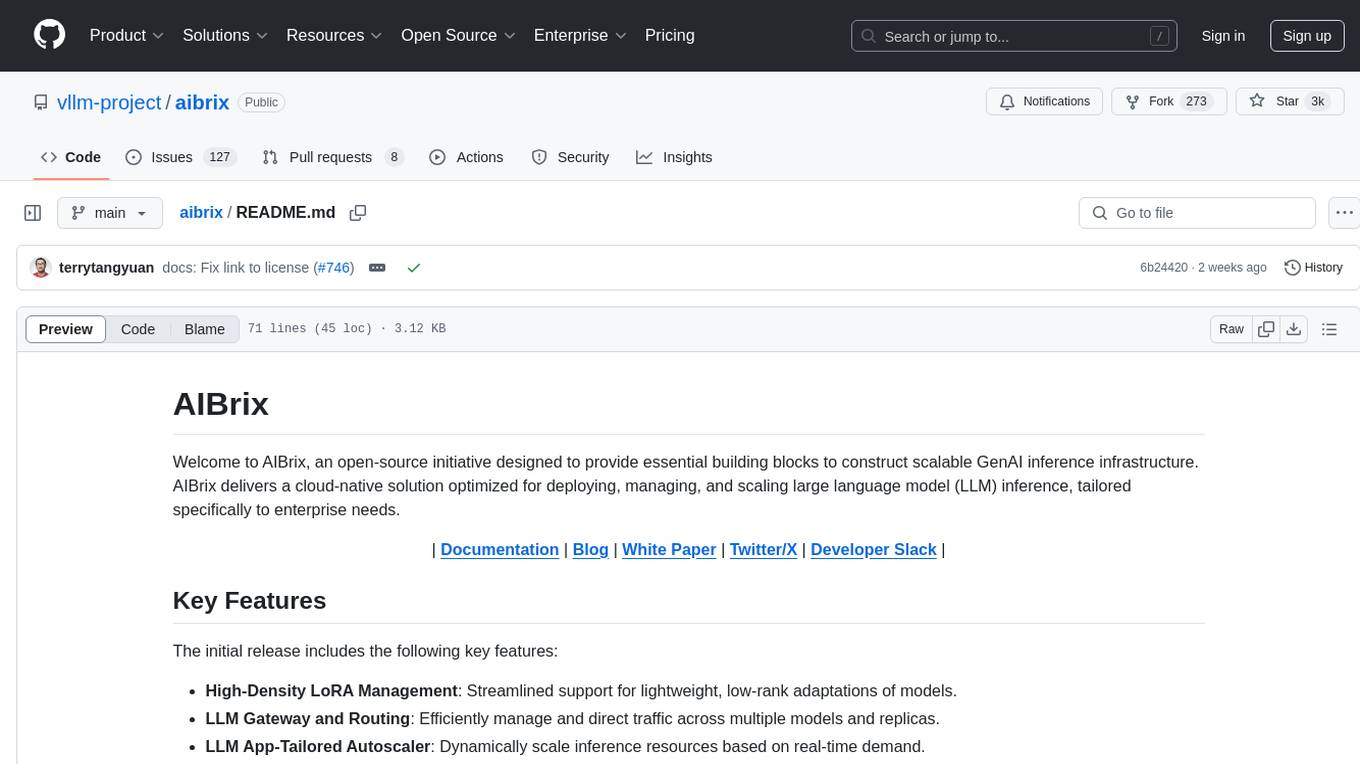

aibrix

AIBrix is an open-source initiative providing essential building blocks for scalable GenAI inference infrastructure. It delivers a cloud-native solution optimized for deploying, managing, and scaling large language model (LLM) inference, tailored to enterprise needs. Key features include High-Density LoRA Management, LLM Gateway and Routing, LLM App-Tailored Autoscaler, Unified AI Runtime, Distributed Inference, Distributed KV Cache, Cost-efficient Heterogeneous Serving, and GPU Hardware Failure Detection.

mcp-fundamentals

The mcp-fundamentals repository is a collection of fundamental concepts and examples related to microservices, cloud computing, and DevOps. It covers topics such as containerization, orchestration, CI/CD pipelines, and infrastructure as code. The repository provides hands-on exercises and code samples to help users understand and apply these concepts in real-world scenarios. Whether you are a beginner looking to learn the basics or an experienced professional seeking to refresh your knowledge, mcp-fundamentals has something for everyone.

20 - OpenAI GPTs

R&D Process Scale-up Advisor

Optimizes production processes for efficient large-scale operations.

CIM Analyst

In-depth CIM analysis with a structured rating scale, offering detailed business evaluations.

ML Engineer GPT

I'm a Python and PyTorch expert with knowledge of ML infrastructure requirements, ready to help you build and scale your ML projects.

Business Angel - Startup and Insights PRO

Business Angel provides expert startup guidance: funding, growth hacks, and pitch advice. Navigate the startup ecosystem, from seed to scale. Essential for entrepreneurs aiming for success. Master your strategy and launch with confidence. Your startup journey begins here!

Sysadmin

I help you with all your sysadmin tasks, from setting up your server to scaling your existing one. I can help you understand the long list of log files and give you solutions to the problems.

Seabiscuit Launch Lander

Startup Strong Within 180 Days: Tailored advice for launching, promoting, and scaling businesses of all types. It covers all stages from pre-launch to post-launch and develops strategies including market research, branding, promotional tactics, and operational planning unique to your business. (v1.8)

Startup Advisor

Startup advisor guiding founders through detailed idea evaluation, product-market-fit, business model, GTM, and scaling.