Best AI tools for< Run Machine Learning Models On Microcontrollers >

20 - AI tool Sites

Replicate

Replicate is an AI tool that allows users to run and fine-tune open-source models, deploy custom models at scale, and generate images, text, videos, music, and speech with just one line of code. It provides a platform for the community to contribute and explore thousands of production-ready AI models, enabling users to push the boundaries of AI beyond academic papers and demos. With features like fine-tuning models, deploying custom models, and scaling on Replicate, users can easily create and deploy AI solutions for various tasks.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale Machine Learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a beautiful dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

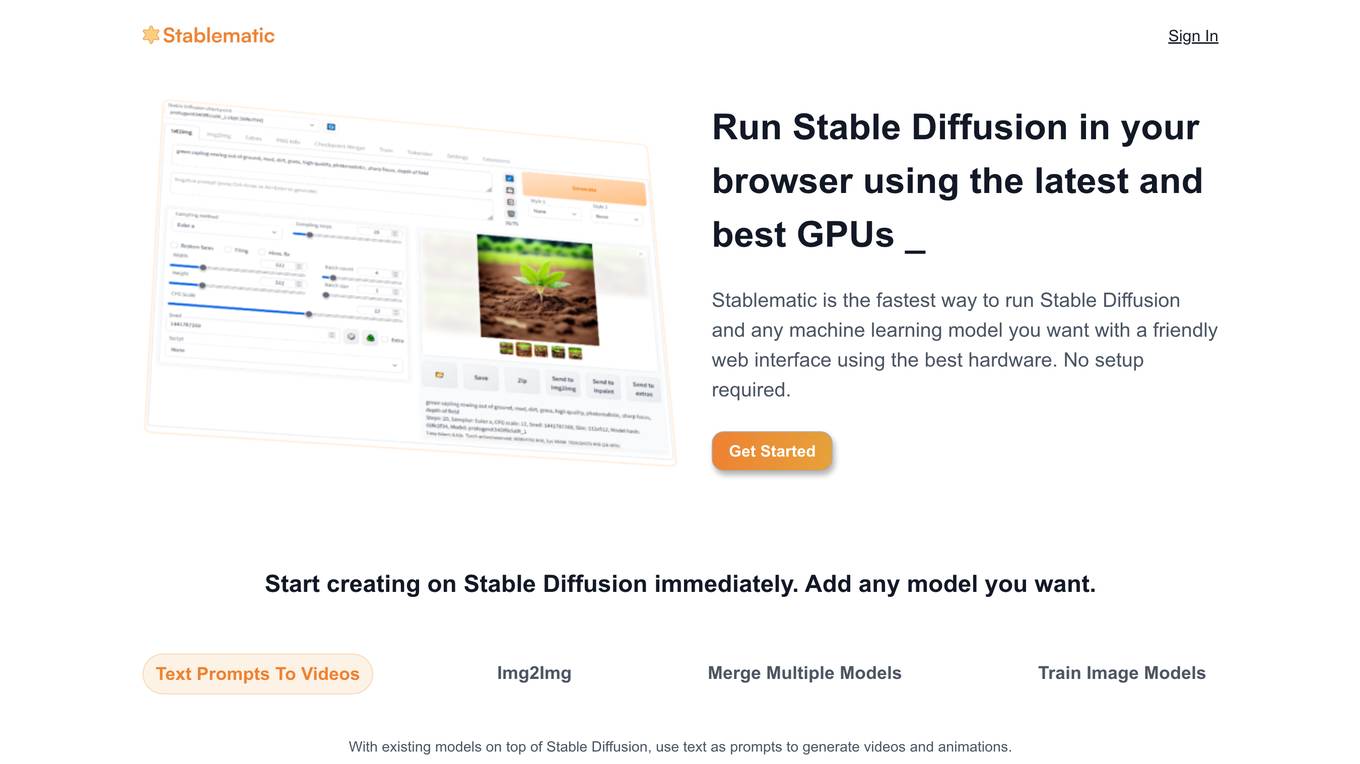

Stablematic

Stablematic is a web-based platform that allows users to run Stable Diffusion and other machine learning models without the need for local setup or hardware limitations. It provides a user-friendly interface, pre-installed plugins, and dedicated GPU resources for a seamless and efficient workflow. Users can generate images and videos from text prompts, merge multiple models, train custom models, and access a range of pre-trained models, including Dreambooth and CivitAi models. Stablematic also offers API access for developers and dedicated support for users to explore and utilize the capabilities of Stable Diffusion and other machine learning models.

Qualcomm AI Hub

Qualcomm AI Hub is a platform that allows users to run AI models on Snapdragon® 8 Elite devices. It provides a collaborative ecosystem for model makers, cloud providers, runtime, and SDK partners to deploy on-device AI solutions quickly and efficiently. Users can bring their own models, optimize for deployment, and access a variety of AI services and resources. The platform caters to various industries such as mobile, automotive, and IoT, offering a range of models and services for edge computing.

Cerebium

Cerebium is a serverless AI infrastructure platform that allows teams to build, test, and deploy AI applications quickly and efficiently. With a focus on speed, performance, and cost optimization, Cerebium offers a range of features and tools to simplify the development and deployment of AI projects. The platform ensures high reliability, security, and compliance while providing real-time logging, cost tracking, and observability tools. Cerebium also offers GPU variety and effortless autoscaling to meet the diverse needs of developers and businesses.

Qubinets

Qubinets is a cloud data environment solutions platform that provides building blocks for building big data, AI, web, and mobile environments. It is an open-source, no lock-in, secured, and private platform that can be used on any cloud, including AWS, Digital Ocean, Google Cloud, and Microsoft Azure. Qubinets makes it easy to plan, build, and run data environments, and it streamlines and saves time and money by reducing the grunt work in setup and provisioning.

Nebius

Nebius is the ultimate cloud for AI explorers, designed to democratize AI infrastructure and empower builders everywhere. It offers flexible architecture to seamlessly scale AI from a single GPU to pre-optimized clusters with thousands of NVIDIA GPUs. Nebius is engineered for demanding AI workloads, integrating NVIDIA GPU accelerators, high-performance InfiniBand, and Kubernetes or Slurm orchestration for peak efficiency. The platform provides long-term value by optimizing every layer of the stack, delivering substantial customer value over competitors.

Sanctum

Sanctum is a private AI tool that brings the power of generative AI to your desktop. It enables you to download and run full-featured open-source LLMs directly on your device. With on-device encryption and processing, your data never leaves your Mac. You maintain complete privacy and control.

Cortex Labs

Cortex Labs is a decentralized world computer that enables AI and AI-powered decentralized applications (dApps) to run on the blockchain. It offers a Layer2 solution called ZkMatrix, which utilizes zkRollup technology to enhance transaction speed and reduce fees. Cortex Virtual Machine (CVM) supports on-chain AI inference using GPU, ensuring deterministic results across computing environments. Cortex also enables machine learning in smart contracts and dApps, fostering an open-source ecosystem for AI researchers and developers to share models. The platform aims to solve the challenge of on-chain machine learning execution efficiently and deterministically, providing tools and resources for developers to integrate AI into blockchain applications.

Together AI

Together AI is an AI Acceleration Cloud platform that offers fast inference, fine-tuning, and training services. It provides self-service NVIDIA GPUs, model deployment on custom hardware, AI chat app, code execution sandbox, and tools to find the right model for specific use cases. The platform also includes a model library with open-source models, documentation for developers, and resources for advancing open-source AI. Together AI enables users to leverage pre-trained models, fine-tune them, or build custom models from scratch, catering to various generative AI needs.

Groq

Groq is a fast AI inference tool that offers GroqCloud™ Platform and GroqRack™ Cluster for developers to build and deploy AI models with ultra-low-latency inference. It provides instant intelligence for openly-available models like Llama 3.1 and is known for its speed and compatibility with other AI providers. Groq powers leading openly-available AI models and has gained recognition in the AI chip industry. The tool has received significant funding and valuation, positioning itself as a strong challenger to established players like Nvidia.

ONNX Runtime

ONNX Runtime is a production-grade AI engine designed to accelerate machine learning training and inferencing in various technology stacks. It supports multiple languages and platforms, optimizing performance for CPU, GPU, and NPU hardware. ONNX Runtime powers AI in Microsoft products and is widely used in cloud, edge, web, and mobile applications. It also enables large model training and on-device training, offering state-of-the-art models for tasks like image synthesis and text generation.

Practice Run AI

Practice Run AI is an online platform that offers AI-powered tools for various tasks. Users can utilize the application to practice and run AI algorithms without the need for complex setups or installations. The platform provides a user-friendly interface that allows individuals to experiment with AI models and enhance their understanding of artificial intelligence concepts. Practice Run AI aims to democratize AI education and make it accessible to a wider audience by simplifying the learning process and providing hands-on experience.

Jan

Jan is an open-source ChatGPT-alternative that runs 100% offline. It allows users to chat with AI, download and run powerful models, connect to cloud AIs, set up a local API server, and chat with files. Highly customizable, Jan also offers features like creating personalized AI assistants, memory, and extensions. The application prioritizes local-first AI, user-owned data, and full customization, making it a versatile tool for AI enthusiasts and developers.

ThirdAI

ThirdAI is an AI platform that offers a production-ready solution for building and deploying AI applications quickly and efficiently. It provides advanced AI/GenAI technology that can run on any infrastructure, reducing barriers to delivering production-grade AI solutions. With features like enterprise SSO, built-in models, no-code interface, and more, ThirdAI empowers users to create AI applications without the need for specialized GPU servers or AI skills. The platform covers the entire workflow of building AI applications end-to-end, allowing for easy customization and deployment in various environments.

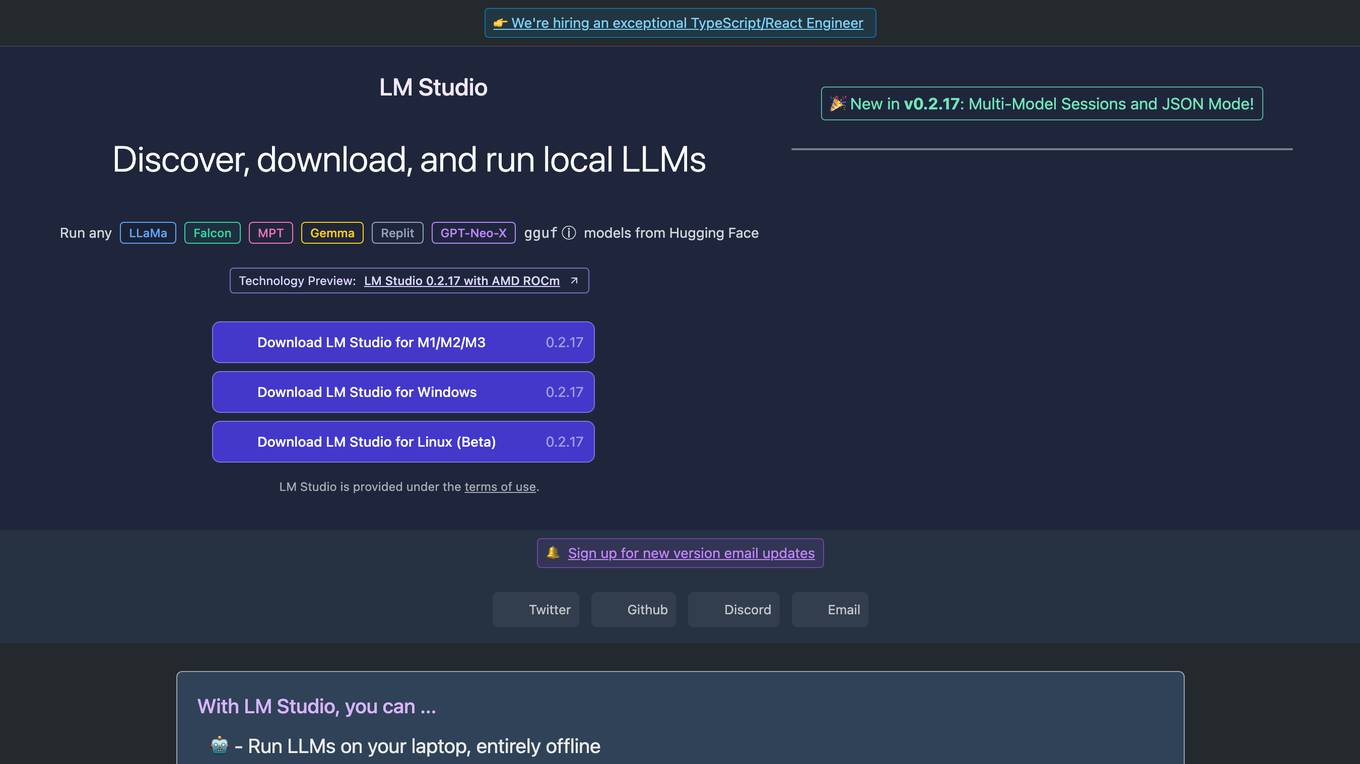

LM Studio

LM Studio is an AI tool designed for discovering, downloading, and running local LLMs (Large Language Models). Users can run LLMs on their laptops offline, use models through an in-app Chat UI or a local server, download compatible model files from HuggingFace repositories, and discover new LLMs. The tool ensures privacy by not collecting data or monitoring user actions, making it suitable for personal and business use. LM Studio supports various models like ggml Llama, MPT, and StarCoder on Hugging Face, with minimum hardware/software requirements specified for different platforms.

Edge Impulse

Edge Impulse is a leading edge AI platform that enables users to build datasets, train models, and optimize libraries to run directly on any edge device. It offers sensor datasets, feature engineering, model optimization, algorithms, and NVIDIA integrations. The platform is designed for product leaders, AI practitioners, embedded engineers, and OEMs across various industries and applications. Edge Impulse helps users unlock sensor data value, build high-quality sensor datasets, advance algorithm development, optimize edge AI models, and achieve measurable results. It allows for future-proofing workflows by generating models and algorithms that perform efficiently on any edge hardware.

TitanML

TitanML is a platform that provides tools and services for deploying and scaling Generative AI applications. Their flagship product, the Titan Takeoff Inference Server, helps machine learning engineers build, deploy, and run Generative AI models in secure environments. TitanML's platform is designed to make it easy for businesses to adopt and use Generative AI, without having to worry about the underlying infrastructure. With TitanML, businesses can focus on building great products and solving real business problems.

VESSL AI

VESSL AI is a platform offering Liquid AI Infra & Persistent GPU Cloud services, allowing users to easily access and utilize GPUs for running AI workloads. It provides a seamless experience from zero to running AI workloads, catering to AI startups, enterprise AI teams, and research & academia. VESSL AI offers GPU products for every stage, with options like spot, on-demand, and reserved capacity, along with features like multi-cloud failover, pay-as-you-go pricing, and production-ready reliability. The platform is designed to help users scale their AI projects efficiently and effectively.

Tensordyne

Tensordyne is a generative AI inference compute tool designed and developed in the US and Germany. It focuses on re-engineering AI math and defining AI inference to run the biggest AI models for thousands of users at a fraction of the rack count, power, and cost. Tensordyne offers custom silicon and systems built on the Zeroth Scaling Law, enabling breakthroughs in AI technology.

1 - Open Source AI Tools

executorch

ExecuTorch is an end-to-end solution for enabling on-device inference capabilities across mobile and edge devices including wearables, embedded devices and microcontrollers. It is part of the PyTorch Edge ecosystem and enables efficient deployment of PyTorch models to edge devices. Key value propositions of ExecuTorch are: * **Portability:** Compatibility with a wide variety of computing platforms, from high-end mobile phones to highly constrained embedded systems and microcontrollers. * **Productivity:** Enabling developers to use the same toolchains and SDK from PyTorch model authoring and conversion, to debugging and deployment to a wide variety of platforms. * **Performance:** Providing end users with a seamless and high-performance experience due to a lightweight runtime and utilizing full hardware capabilities such as CPUs, NPUs, and DSPs.

20 - OpenAI Gpts

Creative Director GPT

I'm your brainstorm muse in marketing and advertising; the creativity machine you need to sharpen the skills, land the job, generate the ideas, win the pitches, build the brands, ace the awards, or even run your own agency. Psst... don't let your clients find out about me! 😉

Consulting & Investment Banking Interview Prep GPT

Run mock interviews, review content and get tips to ace strategy consulting and investment banking interviews

Dungeon Master's Assistant

Your new DM's screen: helping Dungeon Masters to craft & run amazing D&D adventures.

Database Builder

Hosts a real SQLite database and helps you create tables, make schema changes, and run SQL queries, ideal for all levels of database administration.

Restaurant Startup Guide

Meet the Restaurant Startup Guide GPT: your friendly guide in the restaurant biz. It offers casual, approachable advice to help you start and run your own restaurant with ease.

Community Design™

A community-building GPT based on the wildly popular Community Design™ framework from Mighty Networks. Start creating communities that run themselves.

Code Helper for Web Application Development

Friendly web assistant for efficient code. Ask the wizard to create an application and you will get the HTML, CSS and Javascript code ready to run your web application.

Pace Assistant

Provides running splits for Strava Routes, accounting for distance and elevation changes