vnc-lm

Add Large Language Models to Discord. Add DeepSeek R-1, Llama 3.3, Gemini, and other models.

Stars: 65

vnc-lm is a Discord bot designed for messaging with language models. Users can configure model parameters, branch conversations, and edit prompts to enhance responses. The bot supports various providers like OpenAI, Huggingface, and Cloudflare Workers AI. It integrates with ollama and LiteLLM, allowing users to access a wide range of language model APIs through a single interface. Users can manage models, switch between models, split long messages, and create conversation branches. LiteLLM integration enables support for OpenAI-compatible APIs and local LLM services. The bot requires Docker for installation and can be configured through environment variables. Troubleshooting tips are provided for common issues like context window problems, Discord API errors, and LiteLLM issues.

README:

Configure model parameters to change behavior. Branch conversations to see alternative paths. Edit prompts to improve responses.

Supported API Providers

Load models using the /model command. Configure model behavior by adjusting the system_prompt (base instructions), temperature (response randomness), and num_ctx (context length) parameters.

# model management examples

# loading a model without configuring it

/model model:gemini-exp-1206

# loading a model with system prompt and temperature

/model model:gemini-exp-1206 system_prompt: You are a helpful assistant. temperature: 0.4

# loading an ollama model with num_ctx

/model model:deepseek-r1:8b-llama-distill-fp16 num_ctx:32000

# downloading an ollama model by sending a model tag link

https://ollama.com/library/deepseek-r1:8b-llama-distill-fp16

# removing an ollama model

/model model:deepseek-r1:8b-llama-distill-fp16 remove:True

A thread will be created once the model loads. To switch models within a thread, use + followed by any distinctive part of the model name.

# model switching examples

# switch to deepseek-r1

+ deepseek, + r1

# switch to gemini-exp-1206

+ gemini, + exp, + 1206

# switch to claude-sonnet-3.5

+ claude, + sonnet, + 3.5

The bot integrates with LiteLLM, which provides a unified interface to access leading large language model APIs. This integration also supports OpenAI-compatible APIs, enabling support for open-source LLM projects. Add new models by editing litellm_config.yaml in the vnc-lm/ directory. While LiteLLM starts automatically with the Docker container, the bot can also run with ollama alone if preferred.
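
The + switching described earlier can be pictured as a case-insensitive substring match against the available model names. The sketch below is only an assumption about how such matching might behave, not the bot's actual implementation; resolveModel and the model list are hypothetical names chosen for illustration.

```typescript
// Hypothetical helper: pick the first model whose name contains the fragment typed after "+".
// Returns undefined when the message is not a switch request or nothing matches.
function resolveModel(message: string, models: string[]): string | undefined {
  const trimmed = message.trim();
  if (!trimmed.startsWith("+")) return undefined;
  const fragment = trimmed.slice(1).trim().toLowerCase();
  if (fragment.length === 0) return undefined;
  return models.find((name) => name.toLowerCase().includes(fragment));
}

// Model names taken from the examples in this README.
const available = [
  "deepseek-r1:8b-llama-distill-fp16",
  "gemini-exp-1206",
  "claude-sonnet-3.5",
];

console.log(resolveModel("+ sonnet", available)); // claude-sonnet-3.5
console.log(resolveModel("+r1", available)); // deepseek-r1:8b-llama-distill-fp16
```

Returning the first match keeps the sketch short; a real implementation would need a rule for fragments that match more than one model.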

Messages are automatically paginated and support text files, links, and images (via multi-modal models or OCR based on .env settings). Edit prompts to refine responses, with conversations persisting across container restarts in bot_cache.json. Create conversation branches by replying branch to any message. Hop between different conversation paths while maintaining separate histories.
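
To illustrate the automatic pagination mentioned above, here is a minimal sketch that splits a long response into pages and prefers to break at whitespace. The paginate function is hypothetical, and the default limit of 2000 characters (Discord's limit for plain message content) is an assumption; the bot's own page size may differ.

```typescript
// Hypothetical sketch: split text into pages of at most `limit` characters,
// breaking at the last whitespace before the limit when one exists.
function paginate(text: string, limit = 2000): string[] {
  if (limit <= 0) throw new RangeError("limit must be positive");
  const pages: string[] = [];
  let rest = text;
  while (rest.length > limit) {
    // Look one character past the limit so a break exactly at the limit is found.
    const head = rest.slice(0, limit + 1);
    let cut = Math.max(head.lastIndexOf(" "), head.lastIndexOf("\n"));
    if (cut <= 0) cut = limit; // no usable whitespace: hard split
    const page = rest.slice(0, cut).trimEnd();
    if (page.length > 0) pages.push(page);
    rest = rest.slice(cut).trimStart();
  }
  if (rest.length > 0) pages.push(rest);
  return pages;
}

console.log(paginate("aaa bbb ccc", 7)); // [ 'aaa bbb', 'ccc' ]
```

Breaking at whitespace avoids cutting words in half; text with no whitespace falls back to a hard split at the limit.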

Docker: Docker is a platform designed to help developers build, share, and run container applications. We handle the tedious setup, so you can focus on the code.

# clone the repository or download a recent release

git clone https://github.com/jake83741/vnc-lm.git

# enter the directory

cd vnc-lm

# rename the env file

mv .env.example .env

# configure the below .env fields

# Discord bot token

TOKEN=

# administrator Discord user id (necessary for model downloading / removal privileges)

ADMIN=

# require bot mention (default: false)

REQUIRE_MENTION=

# turn vision on or off. turning vision off will turn ocr on. (default: false)

USE_VISION=

# leave blank to not use ollama

OLLAMAURL=http://host.docker.internal:11434

# example provider api keys

OPENAI_API_KEY=sk-...8YIH

ANTHROPIC_API_KEY=sk-...2HZF

Generating a bot token

Inviting the bot to a server
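
As a rough picture of how the .env fields above could be read with their stated defaults, the sketch below parses them into a typed settings object. It is not the bot's settings.ts; BotSettings, parseFlag, and loadSettings are hypothetical names, and treating only the string "true" as enabled and requiring TOKEN are assumptions.

```typescript
// Hypothetical settings shape mirroring the .env fields listed above.
interface BotSettings {
  token: string;
  admin: string;
  requireMention: boolean; // default: false
  useVision: boolean; // default: false (OCR is used when vision is off)
  ollamaUrl: string | null; // null means ollama is not used
}

// Assumption: only the literal "true" (any case) enables a flag.
function parseFlag(value: string | undefined): boolean {
  return (value ?? "").trim().toLowerCase() === "true";
}

function loadSettings(env: Record<string, string | undefined>): BotSettings {
  const token = (env["TOKEN"] ?? "").trim();
  if (token.length === 0) throw new Error("TOKEN is required");
  const ollamaUrl = (env["OLLAMAURL"] ?? "").trim();
  return {
    token,
    admin: (env["ADMIN"] ?? "").trim(),
    requireMention: parseFlag(env["REQUIRE_MENTION"]),
    useVision: parseFlag(env["USE_VISION"]),
    ollamaUrl: ollamaUrl.length > 0 ? ollamaUrl : null,
  };
}

console.log(loadSettings({ TOKEN: "example-token", USE_VISION: "true", OLLAMAURL: "" }));
```

In a Node.js process, the same function could be called with process.env after the .env file has been loaded.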

# add models to the litellm_config.yaml

# it is not necessary to include ollama models here

model_list:
  - model_name: gpt-3.5-turbo-instruct
    litellm_params:
      model: openai/gpt-3.5-turbo-instruct
      api_key: os.environ/OPENAI_API_KEY
  - model_name:
    litellm_params:
      model:
      api_key:

Additional parameters may be required

# build the container with Docker

docker compose up --build --no-color

Note: Send /help for instructions on how to use the bot.

.
├── api-connections/
│   ├── base-client.ts       # Abstract base class defining common client interface and methods
│   ├── factory.ts           # Factory class for instantiating appropriate model clients
│   └── provider/
│       ├── litellm/
│       │   └── client.ts    # Client implementation for LiteLLM API integration
│       └── ollama/
│           └── client.ts    # Client implementation for Ollama API integration
├── bot.ts                   # Main bot initialization and event handling setup
├── commands/
│   ├── base.ts              # Base command class with shared command functionality
│   ├── handlers.ts          # Implementation of individual bot commands
│   └── registry.ts          # Command registration and slash command setup
├── managers/
│   ├── cache/
│   │   ├── entrypoint.sh    # Cache initialization script
│   │   ├── manager.ts       # Cache management implementation
│   │   └── store.ts         # Cache storage and persistence
│   └── generation/
│       ├── core.ts          # Core message generation logic
│       ├── formatter.ts     # Message formatting and pagination
│       └── generator.ts     # Stream-based response generation
└── utilities/
    ├── error-handler.ts     # Global error handling
    ├── index.ts             # Central export point for utilities
    └── settings.ts          # Global settings and configuration

{
  "dependencies": {
    "@mozilla/readability": "^0.5.0",  # Library for extracting readable content from web pages
    "axios": "^1.7.2",                 # HTTP client for making API requests
    "discord.js": "^14.15.3",          # Discord API wrapper for building Discord bots
    "dotenv": "^16.4.5",               # Loads environment variables from .env files
    "jsdom": "^24.1.3",                # DOM implementation for parsing HTML in Node.js
    "keyword-extractor": "^0.0.27",    # Extracts keywords from text for generating thread names
    "sharp": "^0.33.5",                # Image processing library for resizing/optimizing images
    "tesseract.js": "^5.1.0"           # Optical Character Recognition (OCR) for extracting text from images
  },
  "devDependencies": {
    "@types/axios": "^0.14.0",
    "@types/dotenv": "^8.2.0",
    "@types/jsdom": "^21.1.7",
    "@types/node": "^18.15.25",
    "typescript": "^5.1.3"
  }
}

When sending text files to a local model, be sure to set a proportional num_ctx value with /model.
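
One way to pick a proportional num_ctx is to estimate the token count from the file size. The sketch below uses the common rule of thumb of roughly four characters per token for English text, which is only a heuristic and varies by tokenizer and language; suggestNumCtx, the reply budget of 1024 tokens, and the ratio are all assumptions for illustration.

```typescript
// Hypothetical estimate: tokens needed for the file plus room for the reply.
// charsPerToken = 4 is a rough heuristic, not a property of any specific model.
function suggestNumCtx(fileChars: number, replyBudget = 1024, charsPerToken = 4): number {
  if (fileChars < 0 || replyBudget < 0 || charsPerToken <= 0) {
    throw new RangeError("arguments must be non-negative and charsPerToken positive");
  }
  return Math.ceil(fileChars / charsPerToken) + replyBudget;
}

console.log(suggestNumCtx(100_000)); // 26024
```

The result is a lower bound to round up from; the model's own maximum context length still applies.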

Occasionally the Discord API will throw errors in the console.

# Discord API error examples

DiscordAPIError[10062]: Unknown interaction

DiscordAPIError[40060]: Interaction has already been acknowledged

These errors usually seem to be related to clicking through pages of an embedded response. They are not critical and should not cause the bot to crash.
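
For readers who want to keep these two errors from cluttering the logs, the sketch below shows one possible way to recognise them by error code and let everything else propagate. It is not taken from the bot's error-handler.ts; isBenignInteractionError and safely are hypothetical helpers, and the check relies only on the error object carrying a numeric code property.

```typescript
// Error codes from the examples above: Unknown interaction, Interaction has already been acknowledged.
const BENIGN_INTERACTION_CODES = new Set<number>([10062, 40060]);

// Hypothetical check: true when the error carries one of the non-critical codes.
function isBenignInteractionError(error: unknown): boolean {
  if (typeof error !== "object" || error === null) return false;
  const code = (error as { code?: unknown }).code;
  return typeof code === "number" && BENIGN_INTERACTION_CODES.has(code);
}

// Hypothetical wrapper: run an interaction handler, log benign errors, rethrow the rest.
async function safely(action: () => Promise<void>): Promise<void> {
  try {
    await action();
  } catch (error) {
    if (isBenignInteractionError(error)) {
      console.warn("Ignoring non-critical Discord interaction error:", error);
      return;
    }
    throw error;
  }
}
```

A page-button handler could be passed to safely so that a stale or doubly acknowledged interaction is logged as a warning instead of surfacing as an unhandled error.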

When adding a model to the litellm_config.yaml from a service that uses a local API (text-generation-webui for example), use this example:

# add the openai/ prefix to route as the OpenAI provider

# set api_base to host.docker.internal:{port}/v1

# set api_key to the key your service expects. use a placeholder when the service doesn't use api keys

model_list:
  - model_name: my-model
    litellm_params:
      model: openai/<your-model-name>
      api_base: <model-api-base>
      api_key: api-key

If LiteLLM exits in the console log when running docker compose up --build --no-color, open docker-compose.yaml, revise the following line, and run docker compose up --build --no-color again to see more descriptive logs.

# original

command: -c "exec litellm --config /app/config.yaml >/dev/null 2>&1"

# revised

command: -c "exec litellm --config /app/config.yaml"

Most issues will be related to the litellm_config.yaml file. Double-check your model_list against the examples shown in the LiteLLM docs. Some providers require additional litellm_params.

Cache issues are rare and difficult to reproduce but if one does occur, deleting bot_cache.json and re-building the bot should correct it.

This project is licensed under the MPL-2.0 license.

Alternative AI tools for vnc-lm

Similar Open Source Tools

Ling

Ling is a MoE LLM provided and open-sourced by InclusionAI. It includes two different sizes, Ling-Lite with 16.8 billion parameters and Ling-Plus with 290 billion parameters. These models show impressive performance and scalability for various tasks, from natural language processing to complex problem-solving. The open-source nature of Ling encourages collaboration and innovation within the AI community, leading to rapid advancements and improvements. Users can download the models from Hugging Face and ModelScope for different use cases. Ling also supports offline batched inference and online API services for deployment. Additionally, users can fine-tune Ling models using Llama-Factory for tasks like SFT and DPO.

MaskLLM

MaskLLM is a learnable pruning method that establishes Semi-structured Sparsity in Large Language Models (LLMs) to reduce computational overhead during inference. It is scalable and benefits from larger training datasets. The tool provides examples for running MaskLLM with Megatron-LM, preparing LLaMA checkpoints, pre-tokenizing C4 data for Megatron, generating prior masks, training MaskLLM, and evaluating the model. It also includes instructions for exporting sparse models to Huggingface.

agentops

AgentOps is a toolkit for evaluating and developing robust and reliable AI agents. It provides benchmarks, observability, and replay analytics to help developers build better agents. AgentOps is in open beta. Key features of AgentOps include:

- Session replays in 3 lines of code: Initialize the AgentOps client and automatically get analytics on every LLM call.

- Time travel debugging: (coming soon!)

- Agent Arena: (coming soon!)

- Callback handlers: AgentOps works seamlessly with applications built using Langchain and LlamaIndex.

Noi

Noi is an AI-enhanced customizable browser designed to streamline digital experiences. It includes curated AI websites, allows adding any URL, offers prompts management, Noi Ask for batch messaging, various themes, Noi Cache Mode for quick link access, cookie data isolation, and more. Users can explore, extend, and empower their browsing experience with Noi.

polaris

Polaris establishes a novel, industry‑certified standard to foster the development of impactful methods in AI-based drug discovery. This library is a Python client to interact with the Polaris Hub. It allows you to download Polaris datasets and benchmarks, evaluate a custom method against a Polaris benchmark, and create and upload new datasets and benchmarks.

client-ts

Mistral Typescript Client is an SDK for Mistral AI API, providing Chat Completion and Embeddings APIs. It allows users to create chat completions, upload files, create agent completions, create embedding requests, and more. The SDK supports various JavaScript runtimes and provides detailed documentation on installation, requirements, API key setup, example usage, error handling, server selection, custom HTTP client, authentication, providers support, standalone functions, debugging, and contributions.

parseable

Parseable is a full stack observability platform designed to ingest, analyze, and extract insights from various types of telemetry data. It can be run locally, in the cloud, or as a managed service. The platform offers features like high availability, smart cache, alerts, role-based access control, OAuth2 support, and OpenTelemetry integration. Users can easily ingest data, query logs, and access the dashboard to monitor and analyze data. Parseable provides a seamless experience for observability and monitoring tasks.

yolo-flutter-app

Ultralytics YOLO for Flutter is a Flutter plugin that allows you to integrate Ultralytics YOLO computer vision models into your mobile apps. It supports both Android and iOS platforms, providing APIs for object detection and image classification. The plugin leverages Flutter Platform Channels for seamless communication between the client and host, handling all processing natively. Before using the plugin, you need to export the required models in `.tflite` and `.mlmodel` formats. The plugin provides support for tasks like detection and classification, with specific instructions for Android and iOS platforms. It also includes features like camera preview and methods for object detection and image classification on images. Ultralytics YOLO thrives on community collaboration and offers different licensing paths for open-source and commercial use cases.

star-vector

StarVector is a multimodal vision-language model for Scalable Vector Graphics (SVG) generation. It can be used to perform image2SVG and text2SVG generation. StarVector works directly in the SVG code space, leveraging visual understanding to apply accurate SVG primitives. It achieves state-of-the-art performance in producing compact and semantically rich SVGs. The tool provides Hugging Face model checkpoints for image2SVG vectorization, with models like StarVector-8B and StarVector-1B. It also offers datasets like SVG-Stack, SVG-Fonts, SVG-Icons, SVG-Emoji, and SVG-Diagrams for evaluation. StarVector can be trained using Deepspeed or FSDP for tasks like Image2SVG and Text2SVG generation. The tool provides a demo with options for HuggingFace generation or VLLM backend for faster generation speed.

onnxruntime-server

ONNX Runtime Server is a server that provides TCP and HTTP/HTTPS REST APIs for ONNX inference. It aims to offer simple, high-performance ML inference and a good developer experience. Users can provide inference APIs for ONNX models without writing additional code by placing the models in the directory structure. Each session can choose between CPU or CUDA, analyze input/output, and provide Swagger API documentation for easy testing. Ready-to-run Docker images are available, making it convenient to deploy the server.

evalscope

Eval-Scope is a framework designed to support the evaluation of large language models (LLMs) by providing pre-configured benchmark datasets, common evaluation metrics, model integration, automatic evaluation for objective questions, complex task evaluation using expert models, reports generation, visualization tools, and model inference performance evaluation. It is lightweight, easy to customize, supports new dataset integration, model hosting on ModelScope, deployment of locally hosted models, and rich evaluation metrics. Eval-Scope also supports various evaluation modes like single mode, pairwise-baseline mode, and pairwise (all) mode, making it suitable for assessing and improving LLMs.

amica

Amica is an application that allows you to easily converse with 3D characters in your browser. You can import VRM files, adjust the voice to fit the character, and generate response text that includes emotional expressions.

GPULlama3.java

GPULlama3.java powered by TornadoVM is a Java-native implementation of Llama3 that automatically compiles and executes Java code on GPUs via TornadoVM. It supports Llama3, Mistral, Qwen2.5, Qwen3, and Phi3 models in the GGUF format. The repository aims to provide GPU acceleration for Java code, enabling faster execution and high-performance access to off-heap memory. It offers features like interactive and instruction modes, flexible backend switching between OpenCL and PTX, and cross-platform compatibility with NVIDIA, Intel, and Apple GPUs.

Scrapegraph-ai

ScrapeGraphAI is a web scraping Python library that utilizes LLM and direct graph logic to create scraping pipelines for websites and local documents. It offers various standard scraping pipelines like SmartScraperGraph, SearchGraph, SpeechGraph, and ScriptCreatorGraph. Users can extract information by specifying prompts and input sources. The library supports different LLM APIs such as OpenAI, Groq, Azure, and Gemini, as well as local models using Ollama. ScrapeGraphAI is designed for data exploration and research purposes, providing a versatile tool for extracting information from web pages and generating outputs like Python scripts, audio summaries, and search results.

PromptClip

PromptClip is a tool that allows developers to create video clips using LLM prompts. Users can upload videos from various sources, prompt the video in natural language, use different LLM models, instantly watch the generated clips, finetune the clips, and add music or image overlays. The tool provides a seamless way to extract specific moments from videos based on user queries, making video editing and content creation more efficient and intuitive.

For similar tasks

Dictate

Dictate is an easy-to-use keyboard tool that utilizes OpenAI Whisper for transcription and dictation. It offers accurate results in multiple languages with punctuation and custom AI rewording using GPT-4 Omni. The tool is designed to simplify the process of transcribing spoken words into text, making it convenient for users to dictate their thoughts and notes effortlessly.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

model_server

OpenVINO™ Model Server (OVMS) is a high-performance system for serving models. Implemented in C++ for scalability and optimized for deployment on Intel architectures, the model server uses the same architecture and API as TensorFlow Serving and KServe while applying OpenVINO for inference execution. Inference service is provided via gRPC or REST API, making deploying new algorithms and AI experiments easy.

kitops

KitOps is a packaging and versioning system for AI/ML projects that uses open standards so it works with the AI/ML, development, and DevOps tools you are already using. KitOps simplifies the handoffs between data scientists, application developers, and SREs working with LLMs and other AI/ML models. KitOps' ModelKits are a standards-based package for models, their dependencies, configurations, and codebases. ModelKits are portable, reproducible, and work with the tools you already use.

CSGHub

CSGHub is an open source, trustworthy large model asset management platform that can assist users in governing the assets involved in the lifecycle of LLMs and LLM applications (datasets, model files, code, etc.). With CSGHub, users can perform operations on LLM assets, including uploading, downloading, storing, verifying, and distributing, through a web interface, the Git command line, or a natural language chatbot. The platform also provides microservice submodules and standardized OpenAPIs that can be easily integrated with users' own systems. CSGHub is committed to bringing users an asset management platform that is natively designed for large models and can be deployed on-premise for fully offline operation. CSGHub offers functionality similar to an on-premise Hugging Face, managing LLM assets in a manner akin to how OpenStack Glance manages virtual machine images, Harbor manages container images, and Sonatype Nexus manages artifacts.

AI-Horde

The AI Horde is an enterprise-level ML-Ops crowdsourced distributed inference cluster for AI Models. This middleware can support both Image and Text generation. It is infinitely scalable and supports seamless drop-in/drop-out of compute resources. The Public version allows people without a powerful GPU to use Stable Diffusion or Large Language Models like Pygmalion/Llama by relying on spare/idle resources provided by the community and also allows non-python clients, such as games and apps, to use AI-provided generations.

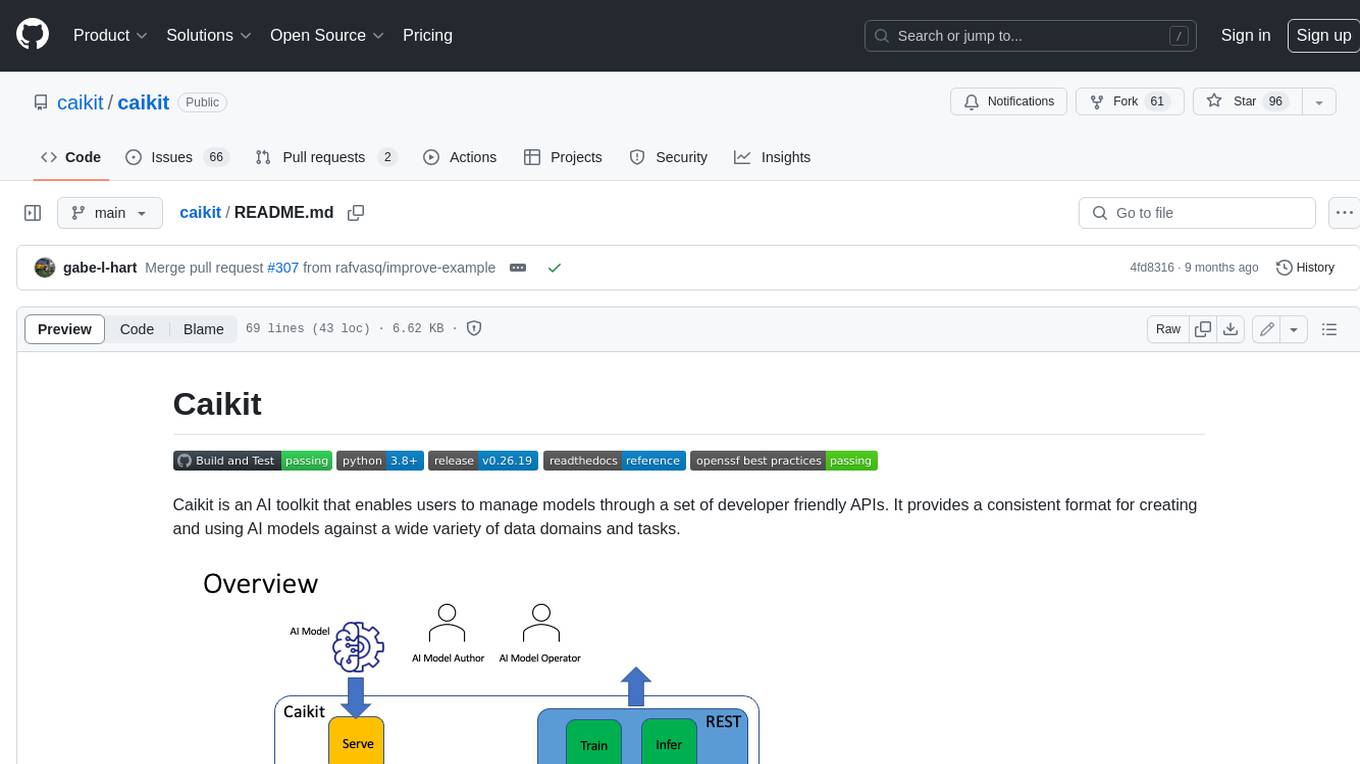

caikit

Caikit is an AI toolkit that enables users to manage models through a set of developer friendly APIs. It provides a consistent format for creating and using AI models against a wide variety of data domains and tasks.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers

* Track LLM usage on a per user and per organization basis

* Block or redact requests containing PII

* Improve LLM reliability with failovers, retries and caching

* Distribute API keys with rate limits and cost limits for internal development/production use cases

* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.