MemoryBear

MemoryBear: Equip AI with human-like memory capabilities

Stars: 244

MemoryBear is a next-generation AI memory system developed by RedBear AI, focusing on overcoming limitations in knowledge storage and multi-agent collaboration. It gives AI human-like memory capabilities, enabling deep knowledge understanding and cognitive collaboration. The system addresses challenges such as knowledge forgetting, memory gaps in multi-agent collaboration, and semantic ambiguity during reasoning. MemoryBear's core features include a memory extraction engine, graph storage, hybrid search, a memory forgetting engine, a self-reflection engine, and FastAPI services. It offers a standardized service architecture for efficient integration and invocation across applications.

README:


MemoryBear is a next-generation AI memory system independently developed by RedBear AI. Its core breakthrough lies in moving beyond the limitations of traditional "static knowledge storage". Inspired by the cognitive mechanisms of biological brains, MemoryBear builds an intelligent knowledge-processing framework that spans the full lifecycle of perception, refinement, association, and forgetting. The system is designed to free machines from the trap of mere "information accumulation", enabling deep knowledge understanding and autonomous evolution, and ultimately making it a key partner in human-AI cognitive collaboration.

Context window limitations: Mainstream large language models typically have context windows of 8k-32k tokens. In long conversations, earlier messages are pushed out of the window, causing later responses to lose their historical context. For example, a user says in turn 1, "I'm allergic to seafood", but by turn 5, when they ask, "What should I have for dinner tonight?", the model may have already forgotten the allergy information.

Gap between static knowledge bases and dynamic data: The model's training corpus is a static snapshot (e.g., data up to 2023) and cannot continuously absorb personalized information from user interactions, such as preferences or order history. External memory modules are required to supplement and maintain this dynamic, user-specific knowledge.

Limitations of the attention mechanism: In Transformer architectures, self-attention becomes less effective at capturing long-range dependencies as the sequence grows. This leads to a recency bias, where the model overweights the latest input and ignores crucial information that appeared earlier in the conversation.

Data silos between agents: Different agents (such as a consulting agent, an after-sales agent, and a recommendation agent) often maintain their own isolated memories without a shared layer. As a result, users have to repeat information. For instance, after providing their address to the consulting agent, the user may be asked for it again by the after-sales agent.

Inconsistent dialogue state: When switching between agents in multi-turn interactions, key dialogue state (such as the user's current intent or past issue labels) may not be passed along completely. This causes service discontinuities. For example, a user transitions from "product inquiry" to "complaint", but the new agent does not inherit the complaint details discussed earlier.

Conflicting decisions: Agents that only see partial memory can generate contradictory responses. For example, a recommendation agent might suggest products that the user is allergic to, simply because it does not have access to the user's recorded health constraints.

Personalized signals in user conversations (such as domain-specific jargon, colloquial expressions, or context-dependent references) are often not encoded accurately, leading to semantic drift in how the model interprets memory. For instance, when the user refers to "that plan we discussed last time", the model may be unable to reliably locate the specific plan in previous conversations.

Broken cross-lingual and dialect memory links: In multilingual or dialect-rich scenarios, cross-language associations in memory may fail. For example, when a user mixes Chinese and English in their requests, the model may struggle to integrate information expressed across languages.

Typical example: A user says: "Last time customer support told me it could be processed 'as an urgent case'. What's the status now?" If the system never encoded what "urgent" corresponds to in terms of a concrete service level, the model can only respond with vague, unhelpful answers.

Unlike traditional memory management tools that treat knowledge as static data to be retrieved, MemoryBear is designed around the goal of simulating the knowledge-processing logic of the human brain. It builds a closed-loop system that spans the entire lifecycle, from knowledge intake to intelligent output. By emulating the hippocampus's memory encoding, the neocortex's knowledge consolidation, and synaptic pruning-based forgetting mechanisms, MemoryBear enables knowledge to dynamically evolve with "life-like" properties. This fundamentally redefines the relationship between knowledge and its users, shifting from passive lookup to proactive cognitive assistance.

MemoryBear's design philosophy is rooted in deep insight into the essence of human cognition: the value of knowledge does not lie in its accumulation, but in the continuous transformation and refinement that occurs as it flows.

In traditional systems, knowledge becomes static once stored: it is hard to associate across domains and incapable of adapting to users' cognitive needs. MemoryBear, by contrast, is built on the belief that true intelligence emerges only when knowledge undergoes a full evolutionary process: raw information is distilled into structured rules, isolated rules are connected into a semantic network, and redundant information is intelligently forgotten. Through this progression, knowledge shifts from mere informational memory to genuine cognitive understanding, enabling the emergence of real intelligence.

As an intelligent memory management system inspired by biological cognitive processes, MemoryBear centers its capabilities on two dimensions: full-lifecycle knowledge memory management and intelligent cognitive evolution. It covers the complete chain, from memory ingestion and refinement to storage, retrieval, and dynamic optimization, while providing a standardized service architecture that ensures efficient integration and invocation across applications.

Memory extraction is the starting point of MemoryBear's cognitive-oriented knowledge management. Unlike traditional data extraction, which performs "mechanical transformation", MemoryBear focuses on semantic-level parsing of unstructured information and standardized multi-format outputs, ensuring precise compatibility with downstream graph construction and intelligent retrieval. Core capabilities include:

Accurate parsing of diverse information types: The engine automatically identifies and extracts core information from declarative sentences, removing redundant modifiers while preserving the essential subject-action-object logic. It also extracts structured triples (e.g., "MemoryBear-core functionality-knowledge extraction"), providing atomic data units for graph storage and ensuring high-accuracy knowledge association.
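To make the triple format concrete, here is a minimal Python sketch of how a "subject-relation-object" string like the example above might be represented as an atomic unit. The `Triple` class and `parse_triple` helper are hypothetical names for illustration only; they are not taken from the MemoryBear codebase, and the real engine extracts triples from free text with semantic parsing rather than string splitting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    """One subject-predicate-object fact (hypothetical illustration type)."""
    subject: str
    predicate: str
    obj: str


def parse_triple(text: str, sep: str = "-") -> Triple:
    """Parse a 'subject-predicate-object' string into a Triple."""
    parts = [p.strip() for p in text.split(sep)]
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected exactly three non-empty fields, got {parts!r}")
    return Triple(*parts)


# The example triple quoted in the paragraph above.
triple = parse_triple("MemoryBear-core functionality-knowledge extraction")
print(triple)
```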

Temporal information anchoring: For time-sensitive knowledge (such as event logs, policy documents, or experimental data), the engine automatically extracts timestamps and associates them with the content. This enables time-based reasoning and resolves the "temporal confusion" found in traditional knowledge systems.

Intelligent pruning summarization: Based on contextual semantic understanding, the engine generates summaries that cover all key information with strong logical coherence. Users may customize summary length (50-500 words) and emphasis (technical, business, etc.), enabling fast knowledge acquisition across scenarios. Example: For a 10-page technical document, MemoryBear can produce a concise summary including core parameters, implementation logic, and application scenarios in under 3 seconds.
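As a rough illustration of temporal anchoring, the sketch below pulls ISO-style dates out of a statement and stores them next to the content. This is an assumption-laden toy: the `anchor_timestamps` function and the record layout are hypothetical, and it only recognizes `YYYY-MM-DD` dates, whereas the engine described above presumably handles far more varied time expressions.

```python
import re
from datetime import date

# Matches dates written as YYYY-MM-DD only (a deliberate simplification).
ISO_DATE = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")


def anchor_timestamps(text):
    """Return the text together with every valid ISO date found in it."""
    timestamps = []
    for year, month, day in ISO_DATE.findall(text):
        try:
            timestamps.append(date(int(year), int(month), int(day)))
        except ValueError:
            continue  # looks like a date but is not one, e.g. 2024-13-40
    return {"content": text, "timestamps": timestamps}


record = anchor_timestamps(
    "Policy v2 took effect on 2024-03-01 and replaced the 2023-11-15 draft."
)
print(record["timestamps"])
```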

The storage layer adopts a graph-first architecture, integrating with the mature Neo4j graph database to manage knowledge entities and relationships efficiently. This overcomes limitations of traditional relational databases, such as weak relational modeling and slow complex queries, and mirrors the biological "neuron-synapse" cognition model.

Key advantages include:

- Scalable, flexible storage: Supports millions of entities and tens of millions of relational edges, covering 12 core relationship types (hierarchical, causal, temporal, logical, etc.) to fit multi-domain knowledge applications.
- Seamless integration with the extraction module: Extracted triples synchronize directly into Neo4j, automatically constructing the initial knowledge graph with zero manual mapping.
- Interactive graph visualization: Users can intuitively explore entity connection paths, adjust relationship weights, and perform hybrid "machine-generated + human-optimized" graph management.
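One way a triple could be written into Neo4j is with a parameterized Cypher `MERGE`, sketched below. The `Entity` label, the `name` property, and the `triple_to_cypher` helper are assumptions made for this example and are not MemoryBear's actual schema. Cypher does not allow a relationship type to be passed as a query parameter, so the sketch normalizes the predicate into an identifier and interpolates it, while entity names stay as parameters.

```python
import re


def triple_to_cypher(subject, predicate, obj):
    """Build a Cypher MERGE statement and its parameters for one triple."""
    # Turn e.g. "core functionality" into CORE_FUNCTIONALITY.
    rel_type = re.sub(r"\W+", "_", predicate.strip()).strip("_").upper()
    if not rel_type or rel_type[0].isdigit():
        raise ValueError(f"cannot derive a relationship type from {predicate!r}")
    query = (
        "MERGE (s:Entity {name: $subject}) "
        "MERGE (o:Entity {name: $object}) "
        f"MERGE (s)-[:{rel_type}]->(o)"
    )
    return query, {"subject": subject, "object": obj}


query, params = triple_to_cypher(
    "MemoryBear", "core functionality", "knowledge extraction"
)
print(query)
print(params)
```

With the official Neo4j Python driver, the pair could then be passed to `session.run(query, params)`. Using `MERGE` rather than `CREATE` keeps repeated extractions of the same fact from duplicating nodes or edges.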

To overcome the classic tradeoff between keyword search (precise but rigid) and semantic search (flexible but imprecise), MemoryBear implements a hybrid retrieval framework combining keyword search and semantic vector search.

- Keyword search: Optimized with Lucene, enabling millisecond-level exact matching of structured data.
- Semantic vector search: Powered by BERT embeddings, transforming queries into high-dimensional vectors for deep semantic comparison. This allows recognition of synonyms, near-synonyms, and implicit intent. For example, the query "How to optimize memory decay efficiency?" may surface related knowledge such as "forgetting-mechanism parameter tuning" or "memory strength evaluation methods".
- Intelligent fusion strategy: Semantic retrieval expands the candidate space; keyword retrieval then performs precise filtering. This dual-stage process increases retrieval accuracy to 92%, a 35% improvement over single-mode retrieval.
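The two-stage fusion described above (semantic expansion, then keyword filtering) can be sketched in a few lines of Python. This is only a toy under stated assumptions: the three-dimensional vectors are hand-made stand-ins for BERT embeddings, the keyword stage is a plain word-overlap check rather than Lucene, and `hybrid_search` is a hypothetical name.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_search(query_vec, query_terms, docs, top_k=3):
    """Stage 1: keep the top_k documents by vector similarity.
    Stage 2: drop candidates with no query term, then rank the rest by
    number of matched terms and, as a tie-breaker, by similarity."""
    candidates = sorted(
        docs, key=lambda d: cosine(query_vec, d["vec"]), reverse=True
    )[:top_k]
    results = []
    for d in candidates:
        hits = len(set(d["text"].lower().split()) & query_terms)
        if hits:
            results.append((hits, cosine(query_vec, d["vec"]), d["text"]))
    results.sort(key=lambda r: (r[0], r[1]), reverse=True)
    return [text for _, _, text in results]


docs = [
    {"text": "forgetting mechanism parameter tuning", "vec": [0.9, 0.1, 0.0]},
    {"text": "memory strength evaluation methods", "vec": [0.8, 0.3, 0.1]},
    {"text": "frontend proxy configuration", "vec": [0.0, 0.1, 0.9]},
]
hits = hybrid_search([1.0, 0.2, 0.0], {"memory", "forgetting"}, docs, top_k=2)
print(hits)
```

Running the semantic stage first means a document can become a candidate even when it shares no words with the query; the keyword stage then trims candidates that are only loosely related.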

Forgetting is one of MemoryBear's defining features, setting it apart from static knowledge systems. Inspired by the brain's synaptic pruning mechanism, MemoryBear models forgetting using a dual-dimension approach based on memory strength and time decay, ensuring redundant knowledge is removed while key knowledge retains cognitive priority.

Implementation details:

- Each knowledge item is assigned an initial memory strength, determined by extraction quality and manual importance labels.
- Strength is updated dynamically according to usage frequency and association activity.
- A configurable time-decay cycle defines how different knowledge types (core rules vs. temporary data) lose strength over time.

When a knowledge item falls below the strength threshold and exceeds its validity period, it enters a three-stage lifecycle:

- Dormancy: retained, but with lower retrieval priority.
- Decay: gradually compressed to reduce storage cost.
- Clearance: permanently removed and archived into cold storage.

This mechanism keeps redundant knowledge under 8%, reducing waste by over 60% compared with systems lacking forgetting capabilities.
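The strength-and-time logic above can be sketched as follows. The README does not state the decay curve or the stage boundaries, so everything numeric here is an assumption: the sketch uses exponential half-life decay, and the 30-day and 90-day cutoffs separating dormancy, decay, and clearance are invented for illustration. Function names are hypothetical.

```python
import math


def decayed_strength(initial, days_since_use, half_life_days):
    """Assumed exponential decay: strength halves every half_life_days."""
    return initial * math.pow(0.5, days_since_use / half_life_days)


def lifecycle_stage(strength, age_days, threshold, validity_days):
    """An item leaves the active set only when it is BOTH below the strength
    threshold AND past its validity period, matching the rule described above.
    The 30/90-day stage boundaries are illustrative assumptions."""
    if strength >= threshold or age_days <= validity_days:
        return "active"
    overdue = age_days - validity_days
    if overdue <= 30:
        return "dormancy"
    if overdue <= 90:
        return "decay"
    return "clearance"


strength = decayed_strength(initial=1.0, days_since_use=30, half_life_days=30)
print(strength)
print(lifecycle_stage(0.2, age_days=100, threshold=0.3, validity_days=60))
```

A shorter half-life could be configured for temporary data and a longer one for core rules, which is one way to realize the per-type decay cycle mentioned above.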

The self-reflection mechanism is key to MemoryBear's "intelligent self-improvement". It periodically revisits, validates, and optimizes existing knowledge, mimicking the human behavior of review and retrospection.

A scheduled reflection process runs automatically at midnight each day, performing:

- Consistency checks: Detects logical conflicts across related knowledge (e.g., contradictory attributes for the same entity), flags suspicious records, and routes them for human verification.

- Value assessment: Evaluates invocation frequency and contribution to associations. High-value knowledge is reinforced; low-value knowledge experiences accelerated decay.

- Association optimization: Adjusts relationship weights based on recent usage and retrieval behavior, strengthening high-frequency association paths.
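The consistency check in the list above can be illustrated with a small sketch that flags entities carrying contradictory values for the same attribute. The fact layout `(entity, attribute, value)`, the sample records, and the `find_conflicts` name are all hypothetical; the actual reflection engine presumably reasons over the graph rather than a flat list.

```python
from collections import defaultdict


def find_conflicts(facts):
    """Return every (entity, attribute) pair that has more than one value."""
    values = defaultdict(set)
    for entity, attribute, value in facts:
        values[(entity, attribute)].add(value)
    return {key: sorted(vals) for key, vals in values.items() if len(vals) > 1}


# Made-up records for illustration only.
facts = [
    ("user_42", "allergy", "seafood"),
    ("user_42", "allergy", "none"),
    ("user_42", "city", "Shanghai"),
]
conflicts = find_conflicts(facts)
print(conflicts)
```

A check this simple would also flag attributes that are legitimately multi-valued, which is one reason to route flagged records to human verification rather than resolving them automatically.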

To support seamless integration with external business systems, MemoryBear uses FastAPI to build a unified service architecture that exposes both management and service APIs with high performance, easy integration, and strong consistency.

- Service-side APIs cover knowledge extraction, graph operations, search queries, forgetting management, and more. They support JSON and XML formats, with average latency below 50 ms and a single instance sustaining 1000 QPS.
- Management-side APIs provide configuration, permissions, log queries, batch knowledge import/export, reflection cycle adjustments, and other operational capabilities.
- Swagger API documentation is auto-generated, including parameter descriptions, request samples, and response schemas, enabling rapid integration and testing.

The architecture is compatible with enterprise microservice ecosystems, supports Docker-based deployment, and integrates easily with CRM, OA, R&D management, and various business applications.

- Memory Extraction Engine: Preprocessing, deduplication, and structured knowledge extraction
- Memory Forgetting Engine: Memory strength modeling and decay strategies
- Memory Reflection Engine: Evaluation and rewriting of stored memories
- Retrieval Services: Keyword search, semantic search, and hybrid retrieval
- Agent & MCP Integration: Multi-tool collaborative agent capabilities

We evaluate MemoryBear across multiple datasets covering different types of tasks, comparing its performance with other memory-enabled systems. The evaluation metrics include F1 score (F1), BLEU-1 (B1), and LLM-as-a-Judge score (J); higher values indicate better performance. MemoryBear achieves state-of-the-art results across all task categories:

- In single-hop scenarios, MemoryBear leads in precision, answer matching quality, and task specificity.
- In multi-hop reasoning, it demonstrates stronger information coherence and higher reasoning accuracy.
- In open generalization tasks, it exhibits superior capability in handling diverse, unbounded information and maintaining high-quality generalization.
- In temporal reasoning tasks, it excels at aligning and processing time-sensitive information.

Across the core metrics of all four task types, MemoryBear consistently outperforms competing systems in the industry, including Mem0, Zep, and LangMem.
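For readers unfamiliar with the F1 metric named above, here is a sketch of token-level F1 as it is commonly computed in question-answering evaluation. The README does not say exactly how its F1 scores were calculated, so treat this as an assumption about the general technique, not a description of MemoryBear's evaluation script; `token_f1` is a hypothetical name.

```python
from collections import Counter


def token_f1(prediction, reference):
    """Harmonic mean of token precision and recall (whitespace tokens)."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    # Multiset intersection counts each shared token at most as often
    # as it appears in both strings.
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


score = token_f1("allergic to seafood", "the user is allergic to seafood")
print(round(score, 4))
```

In this example all three predicted tokens appear in the reference (precision 1.0), but only three of the six reference tokens are covered (recall 0.5), giving an F1 of 2/3.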

MemoryBear's vector-based knowledge memory (non-graph version) achieves substantial improvements in retrieval efficiency while maintaining high accuracy. Its overall accuracy surpasses the best existing full-text retrieval methods (72.90 ± 0.19%). More importantly, it maintains low latency across critical metrics, including Search Latency and Total Latency at both p50 and p95, combining higher accuracy with lower latency. This resolves the common bottleneck in full-text retrieval systems, where high accuracy typically comes at the cost of significantly increased latency.

MemoryBear further unlocks its potential in tasks requiring complex reasoning and relationship awareness through the integration of a knowledge-graph architecture. Although graph traversal and reasoning introduce a slight retrieval overhead, this version keeps latency within an efficient range by optimizing graph-query strategies and decision flows. More importantly, the graph-based MemoryBear pushes overall accuracy to a new benchmark (75.00 ± 0.20%). While maintaining high accuracy, it delivers performance metrics that significantly surpass all other methods, demonstrating the advantage of structured memory systems.
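The p50 and p95 latency figures mentioned above are percentiles over a set of timed requests. A minimal sketch using the nearest-rank method is shown below; the sample values are synthetic numbers chosen for the example, not MemoryBear measurements, and other percentile definitions (for instance with interpolation) give slightly different results.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


# Synthetic latencies in milliseconds, for illustration only.
latencies_ms = [120, 135, 150, 160, 180, 210, 240, 300, 450, 900]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
print(p50, p95)
```

The gap between the two values is the point of reporting both: p50 describes a typical request, while p95 exposes the slow tail that an average would hide.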

- Node.js 20.19+ or 22.12+: Required for running the frontend
- Python 3.12: Backend runtime environment
- PostgreSQL 13+: Primary relational database
- Neo4j 4.4+: Graph database (used for storing the knowledge graph)
- Redis 6.0+: Cache layer and message queue

Clone via Git (recommended):

git clone https://github.com/SuanmoSuanyangTechnology/MemoryBear.git

# 0. Install the dependency management tool: uv

pip install uv

# 1. Switch to the API directory

cd api

# 2. Install dependencies

uv sync

# 3. Activate the Virtual Environment (Windows)

.venv\Scripts\Activate.ps1 # run inside /api directory

api\.venv\Scripts\activate # run inside project root directory

.venv\Scripts\activate.bat # run inside /api directory

Use Docker Desktop to install the necessary service images.

- Docker Desktop download page: https://www.docker.com/products/docker-desktop/

- PostgreSQL

Pull the Image

Search for the image, select it, and pull it.

Create the Container

Service Started Successfully

- Neo4j

Pull the Image from Docker Desktop, the same way as with PostgreSQL.

Create the Neo4j container, making sure to map the two required ports: 7474 (Neo4j Browser) and 7687 (Bolt protocol). You must also set an initial password for the Neo4j database during container creation.

Service Started Successfully

- Redis

Pull the image and create the container the same way as above.

Copy env.example to .env and fill in the configuration:

# Neo4j Graph Database

NEO4J_URI=bolt://localhost:7687

NEO4J_USERNAME=neo4j

NEO4J_PASSWORD=your-password

# Neo4j Browser Access URL (optional documentation)

# PostgreSQL Database

DB_HOST=127.0.0.1

DB_PORT=5432

DB_USER=postgres

DB_PASSWORD=your-password

DB_NAME=redbear-mem

# Database Migration Configuration

# Set to true to automatically upgrade database schema on startup

DB_AUTO_UPGRADE=true # For the first startup, keep this as true to create the schema in an empty database.

# Redis

REDIS_HOST=127.0.0.1

REDIS_PORT=6379

REDIS_DB=1

# Celery (Using Redis as broker)

BROKER_URL=redis://127.0.0.1:6379/0

RESULT_BACKEND=redis://127.0.0.1:6379/0

# JWT Secret Key (generate with: openssl rand -hex 32)

SECRET_KEY=your-secret-key-here

MemoryBear uses the Alembic migration files included in the project to create the required table structures in a newly created, empty PostgreSQL database.

(1) Configure the Database Connection

Ensure that the sqlalchemy.url value in the project's alembic.ini file points to your empty PostgreSQL database. Example format:

sqlalchemy.url = postgresql://<username>:<password>@<host>:<port>/<database_name>

Also verify that target_metadata in migrations/env.py is correctly linked to the ORM model's metadata object.
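If the password contains characters such as `@` or `/`, the connection URL needs them percent-encoded. The sketch below assembles the URL from the `DB_*` variables used in the .env file; the `build_sqlalchemy_url` helper and the sample password are hypothetical and not part of the project.

```python
import os
from urllib.parse import quote_plus


def build_sqlalchemy_url(env=os.environ):
    """Assemble a PostgreSQL URL from DB_* settings, escaping credentials."""
    user = quote_plus(env["DB_USER"])
    password = quote_plus(env["DB_PASSWORD"])
    host = env.get("DB_HOST", "127.0.0.1")
    port = env.get("DB_PORT", "5432")
    name = env["DB_NAME"]
    return f"postgresql://{user}:{password}@{host}:{port}/{name}"


# Sample values; the password is made up to show the escaping.
example_url = build_sqlalchemy_url({
    "DB_USER": "postgres",
    "DB_PASSWORD": "p@ss/word",
    "DB_HOST": "127.0.0.1",
    "DB_PORT": "5432",
    "DB_NAME": "redbear-mem",
})
print(example_url)
```

When pasting an encoded URL into alembic.ini, note that the file is read with Python's configparser, so each literal `%` generally has to be doubled (`%%`).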

(2) Apply the Migration Files

Run the following command inside the API directory. Alembic will automatically detect the empty database and apply all outstanding migrations to create the full schema:

alembic upgrade head

Use Navicat to inspect the database tables created by the Alembic migration process.

uv run -m app.main

Access the API documentation at http://localhost:8000/docs

# Switch to the web directory

cd web

# Install dependencies

npm install

Edit web/vite.config.ts and update the proxy target to point to your backend API service:

proxy: {
  '/api': {
    target: 'http://127.0.0.1:8000', // Backend address: use 127.0.0.1 on Windows, 0.0.0.0 on macOS
    changeOrigin: true,
  },
}

# Start the web service

npm run dev

After the service starts, the console will output the URL for accessing the frontend interface.

Step 1: Retrieve the project.

Step 2: Start the backend API service.

Step 3: Start the frontend web application.

Step 4: Run curl.exe -X POST http://127.0.0.1:8000/api/setup in the terminal to call the setup endpoint, which initializes the database and creates the super administrator account.

Step 5: Note the super administrator credentials. Account: [email protected] Password: admin_password

Step 6: Log in to the frontend interface.
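The setup call in step 4 can also be made from Python on systems where curl.exe is not available. The sketch below uses only the standard library and the endpoint given above; the helper names are hypothetical, and because the response format is not documented here, the body is returned as raw text.

```python
import urllib.request

SETUP_URL = "http://127.0.0.1:8000/api/setup"


def build_setup_request(url=SETUP_URL):
    """Create the same empty-body POST request that the curl command sends."""
    return urllib.request.Request(url, method="POST")


def run_setup(url=SETUP_URL, timeout=10):
    """Send the request; requires the backend API service to be running."""
    with urllib.request.urlopen(build_setup_request(url), timeout=timeout) as resp:
        return resp.status, resp.read().decode("utf-8")


request = build_setup_request()
print(request.get_method(), request.full_url)
# Call run_setup() once the backend from step 2 is up.
```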

This project is licensed under the Apache License 2.0. For details, see the LICENSE file.

Join our community to ask questions, share your work, and connect with fellow developers.

- GitHub Issues: Report bugs, request features, or track known issues via GitHub Issues.

- GitHub Pull Requests: Contribute code improvements or fixes through Pull Requests.

- GitHub Discussions: Ask questions, share ideas, and engage with the community in GitHub Discussions.

- WeChat: Scan the QR code below to join our WeChat community group.

- Contact: If you are interested in contributing or collaborating, feel free to reach out at [email protected]

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for MemoryBear

Similar Open Source Tools

For similar tasks

graphrag

The GraphRAG project is a data pipeline and transformation suite designed to extract meaningful, structured data from unstructured text using LLMs. It enhances LLMs' ability to reason about private data. The repository provides guidance on using knowledge graph memory structures to enhance LLM outputs, with a warning about the potential costs of GraphRAG indexing. It offers contribution guidelines, development resources, and encourages prompt tuning for optimal results. The Responsible AI FAQ addresses GraphRAG's capabilities, intended uses, evaluation metrics, limitations, and operational factors for effective and responsible use.

llm-self-correction-papers

This repository contains a curated list of papers focusing on the self-correction of large language models (LLMs) during inference. It covers various frameworks for self-correction, including intrinsic self-correction, self-correction with external tools, self-correction with information retrieval, and self-correction with training designed specifically for self-correction. The list includes survey papers, negative results, and frameworks utilizing reinforcement learning and OpenAI o1-like approaches. Contributions are welcome through pull requests following a specific format.

Awesome-Latent-CoT

This repository contains a regularly updated paper list for Large Language Models (LLMs) reasoning in latent space. Reasoning in latent space allows for more flexible and efficient thought representation beyond language tokens, bringing AI closer to human-like cognition. The repository covers various aspects of LLMs, including pre-training, supervised finetuning, analysis, interpretability, multimodal reasoning, and applications. It aims to showcase the advancements in reasoning with latent thoughts and continuous concepts in AI models.

ERNIE

ERNIE 4.5 is a family of large-scale multimodal models with 10 distinct variants, including Mixture-of-Experts (MoE) models with 47B and 3B active parameters. The models feature a novel heterogeneous modality structure supporting parameter sharing across modalities while allowing dedicated parameters for each individual modality. Trained with optimal efficiency using PaddlePaddle deep learning framework, ERNIE 4.5 models achieve state-of-the-art performance across text and multimodal benchmarks, enhancing multimodal understanding without compromising performance on text-related tasks. The open-source development toolkits for ERNIE 4.5 offer industrial-grade capabilities, resource-efficient training and inference workflows, and multi-hardware compatibility.

LLM-KG4QA

LLM-KG4QA is a repository focused on the integration of Large Language Models (LLMs) and Knowledge Graphs (KGs) for Question Answering (QA). It covers various aspects such as using KGs as background knowledge, reasoning guideline, and refiner/filter. The repository provides detailed information on pre-training, fine-tuning, and Retrieval Augmented Generation (RAG) techniques for enhancing QA performance. It also explores complex QA tasks like Explainable QA, Multi-Modal QA, Multi-Document QA, Multi-Hop QA, Multi-run and Conversational QA, Temporal QA, Multi-domain and Multilingual QA, along with advanced topics like Optimization and Data Management. Additionally, it includes benchmark datasets, industrial and scientific applications, demos, and related surveys in the field.

MemoryBear

MemoryBear is a next-generation AI memory system developed by RedBear AI, focusing on overcoming limitations in knowledge storage and multi-agent collaboration. It empowers AI with human-like memory capabilities, enabling deep knowledge understanding and cognitive collaboration. The system addresses challenges such as knowledge forgetting, memory gaps in multi-agent collaboration, and semantic ambiguity during reasoning. MemoryBear's core features include memory extraction engine, graph storage, hybrid search, memory forgetting engine, self-reflection engine, and FastAPI services. It offers a standardized service architecture for efficient integration and invocation across applications.

ragflow

RAGFlow is an open-source Retrieval-Augmented Generation (RAG) engine that combines deep document understanding with Large Language Models (LLMs) to provide accurate question-answering capabilities. It offers a streamlined RAG workflow for businesses of all sizes, enabling them to extract knowledge from unstructured data in various formats, including Word documents, slides, Excel files, images, and more. RAGFlow's key features include deep document understanding, template-based chunking, grounded citations with reduced hallucinations, compatibility with heterogeneous data sources, and an automated and effortless RAG workflow. It supports multiple recall paired with fused re-ranking, configurable LLMs and embedding models, and intuitive APIs for seamless integration with business applications.

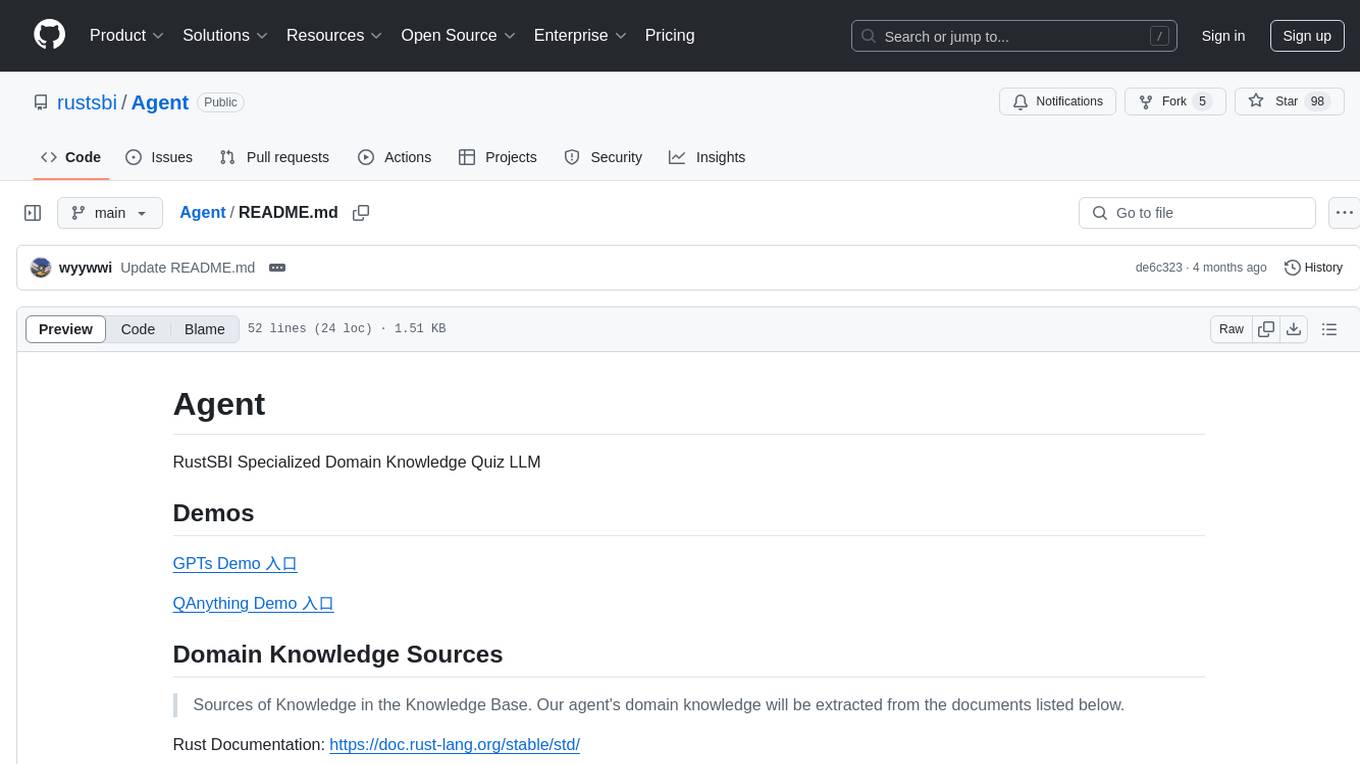

Agent

Agent is a RustSBI specialized domain knowledge quiz LLM tool that extracts domain knowledge from various sources such as Rust Documentation, RISC-V Documentation, Bouffalo Docs, Bouffalo SDK, and Xiangshan Docs. It also provides resources for LLM prompt engineering and RAG engineering, including guides and existing projects related to retrieval-augmented generation (RAG) systems.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.