Best AI tools for Support Quantization

20 - AI Tool Sites

Private LLM

Private LLM is a secure, local, and private AI chatbot designed for iOS and macOS devices. It operates offline, ensuring that user data remains on the device, providing a safe and private experience. The application offers a range of features for text generation and language assistance, utilizing state-of-the-art quantization techniques to deliver high-quality on-device AI experiences without compromising privacy. Users can access a variety of open-source LLM models, integrate AI into Siri and Shortcuts, and benefit from AI language services across macOS apps. Private LLM stands out for its superior model performance and commitment to user privacy, making it a smart and secure tool for creative and productive tasks.

Support AI

Support AI is a custom AI chatbot application powered by ChatGPT that allows website owners to create personalized chatbots to provide instant answers to customers, capture leads, and enhance customer support. With Support AI, users can easily integrate AI chatbots on their websites, train them with specific content, and customize their behavior and responses. The application offers features such as capturing leads, providing accurate answers, handling bookings, collecting feedback, and offering product recommendations. Users can choose from different pricing plans based on their message volume and training content needs.

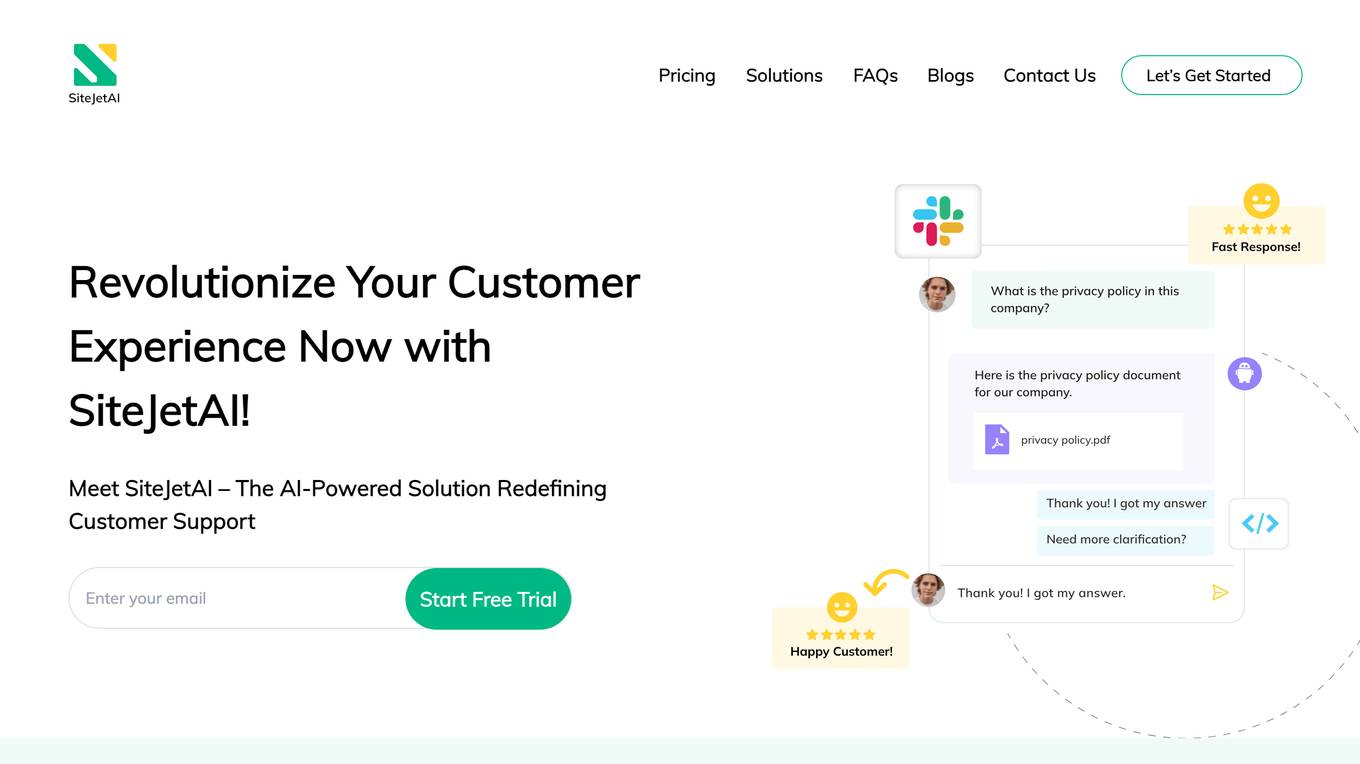

AI Chatbot Support

AI Chatbot Support is an autonomous AI and live chat customer service application that connects websites, social media, and business messaging platforms to deliver a consistent customer experience. It offers multi-platform support, automatic language translation, rich messaging features, smart-reply suggestions, and platform-agnostic AI assistance. The application is designed to enhance customer engagement, satisfaction, and retention across digital platforms through personalized experiences and swift query resolution.

AI-Powered Customer Support Chatbot

This AI-powered customer support chatbot is a cutting-edge tool that transforms customer engagement and drives revenue growth. It leverages advanced natural language processing (NLP) and machine learning algorithms to provide personalized, real-time support to customers across multiple channels. By automating routine inquiries, resolving complex issues, and offering proactive assistance, this chatbot empowers businesses to enhance customer satisfaction, increase conversion rates, and optimize their support operations.

Anthropic

Anthropic is an AI research and deployment company founded in 2021 by former OpenAI employees, including siblings Dario Amodei and Daniela Amodei. The company is developing large language models, including Claude, a multimodal AI model that can perform a variety of language-related tasks, such as answering questions, generating text, and translating languages.

Wondershare Help Center

Wondershare Help Center provides comprehensive support for Wondershare products, including video editing, video creation, diagramming, PDF solutions, and data management. It offers a wide range of resources such as tutorials, FAQs, troubleshooting guides, and access to customer support.

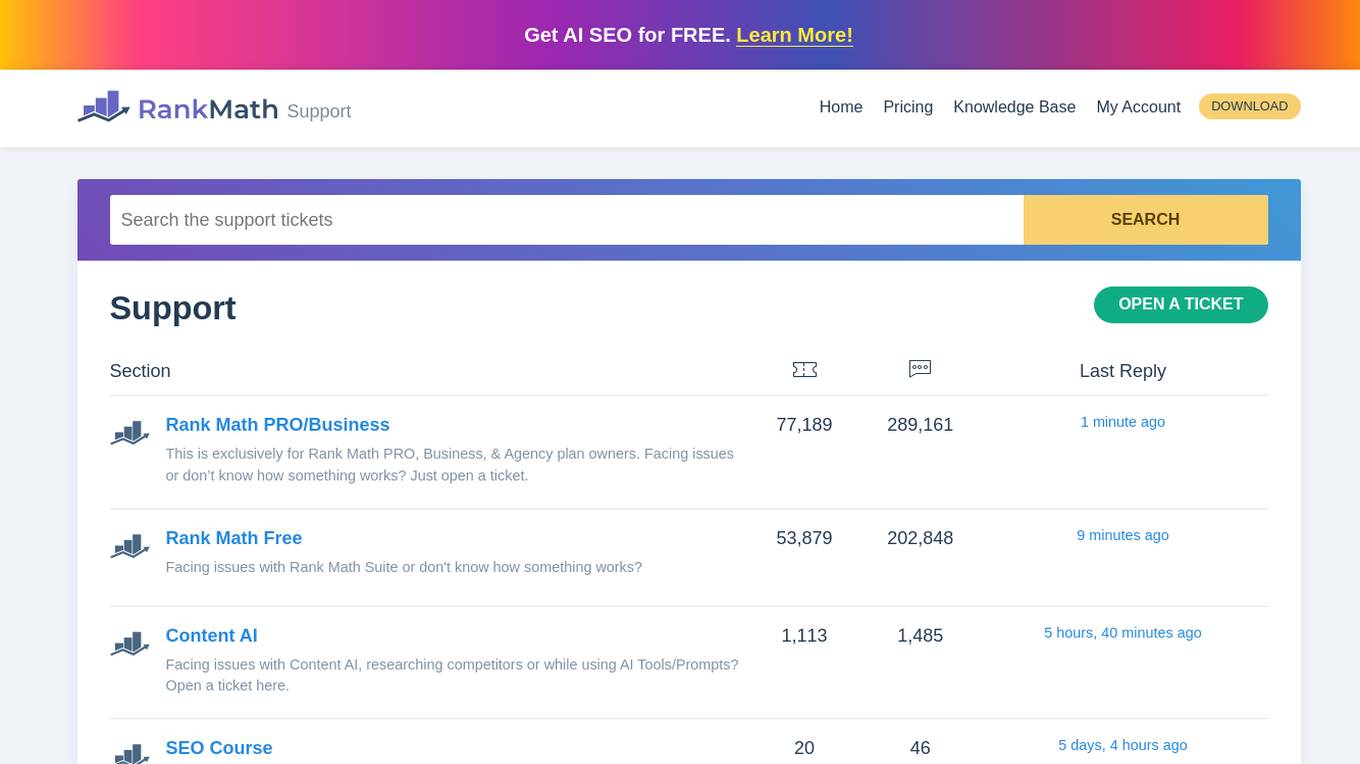

Rank Math

Rank Math is an AI-powered SEO tool that helps you optimize your website for search engines. It offers a variety of features to help you improve your website's ranking, including keyword research, on-page optimization, and link building. Rank Math also provides detailed analytics to help you track your progress and identify areas for improvement.

Meetgeek.ai

Meetgeek.ai is an AI-powered platform designed to enhance virtual meetings and conferences. It offers a range of features to streamline the meeting experience, such as integrations with popular conferencing tools, detailed guides on settings and features, and regular updates to improve functionality. With a focus on user-friendly interfaces and seamless communication, Meetgeek.ai aims to revolutionize the way teams collaborate remotely.

Pulse

Pulse is a world-class expert support tool for BigData stacks, specifically focusing on ensuring the stability and performance of Elasticsearch and OpenSearch clusters. It offers early issue detection, AI-generated insights, and expert support to optimize performance, reduce costs, and align with user needs. Pulse leverages AI for issue detection and root-cause analysis, complemented by real human expertise, making it a strategic ally in search cluster management.

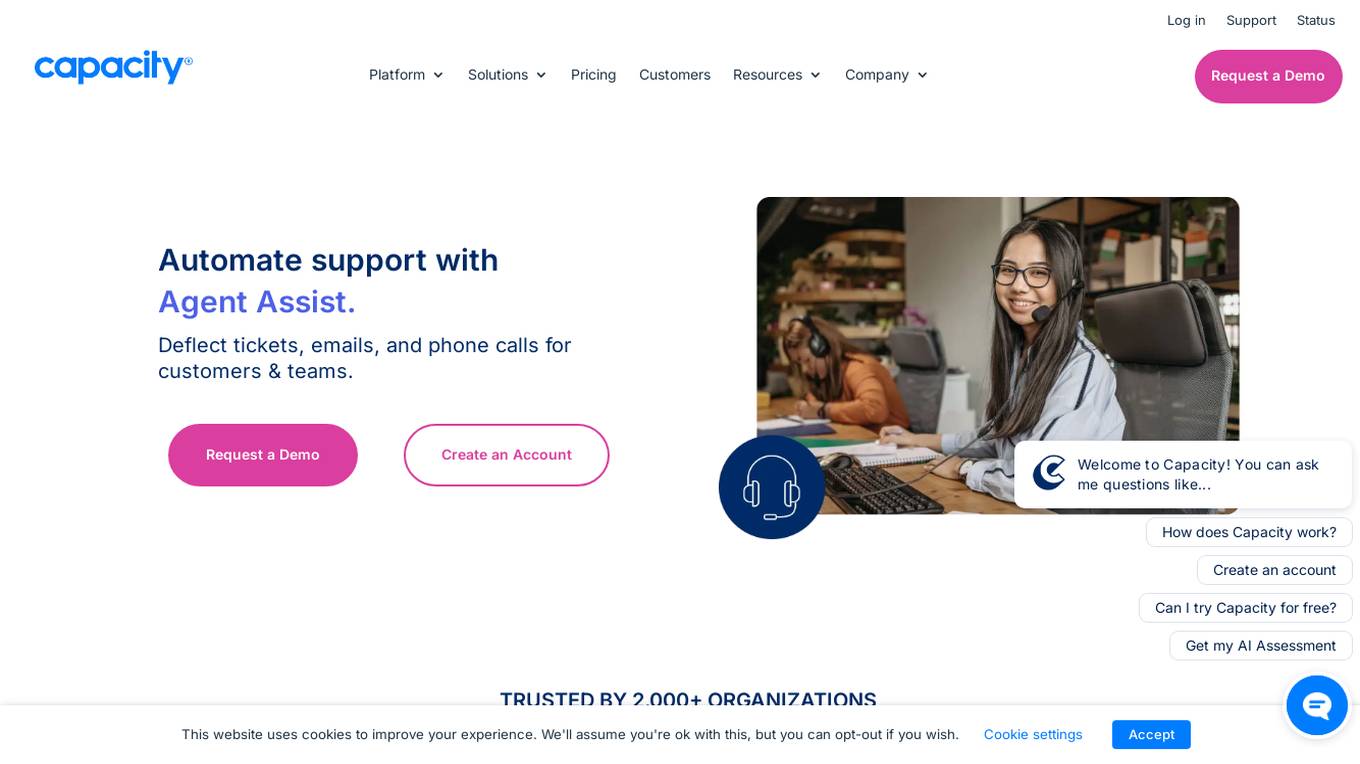

Capacity

Capacity is an AI-powered support automation platform that offers a wide range of features to streamline customer support processes. It provides self-service options, chatbots, knowledge base management, voice biometrics, CRM automation, live chat, and more. The platform is designed to enhance customer interactions, automate workflows, and improve overall efficiency in customer support operations. Capacity is trusted by over 2,000 organizations, ranging from small brands to large enterprises, and is known for its user-friendly interface and secure compliance with data protection regulations.

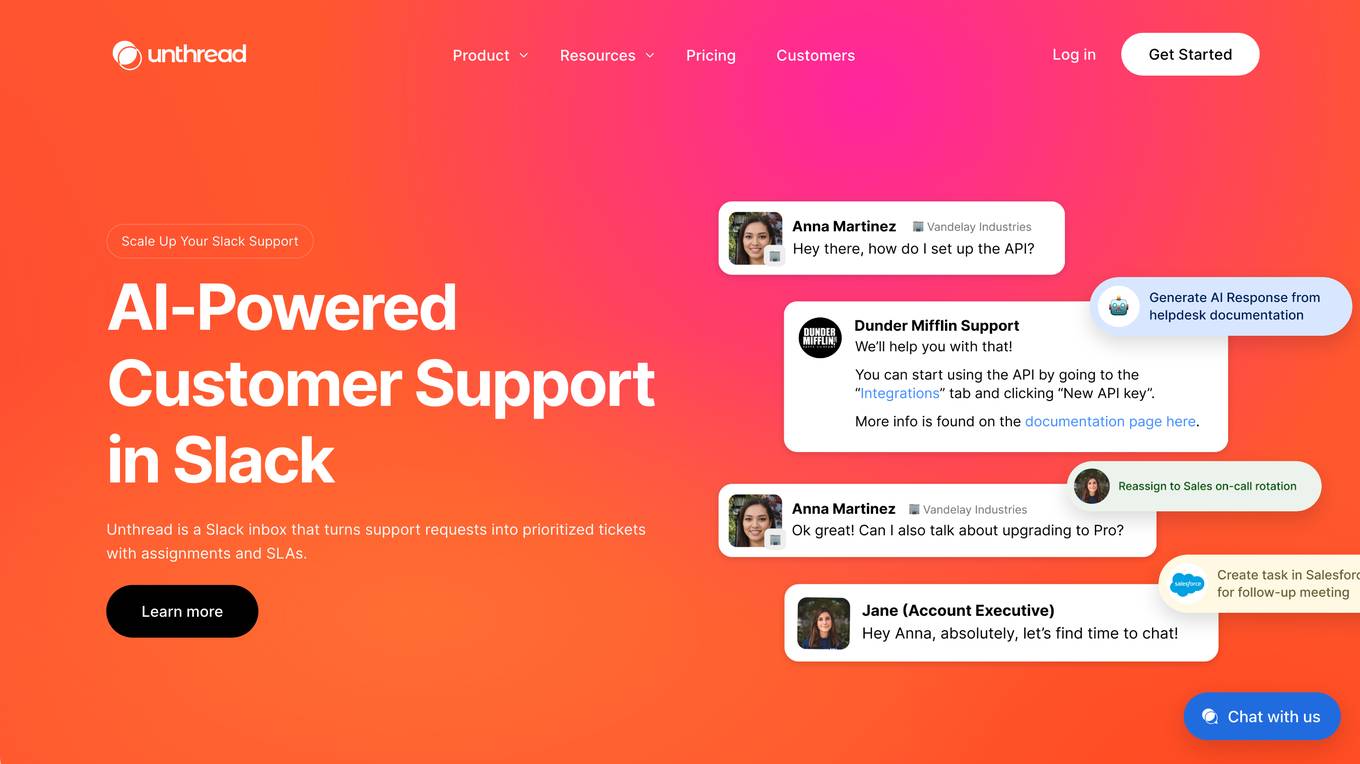

Unthread

Unthread is an AI-powered support tool integrated with Slack, designed to streamline customer interactions and automate support processes. It offers features such as AI-generated support responses, shared email inbox, in-app live chat, and IT ticketing solutions. Unthread aims to enhance customer support efficiency by leveraging AI technology to prioritize, assign, and resolve tickets instantly, while providing a seamless experience for both users and customers.

Empathy

Empathy is a platform that offers support for life's hardest moments, from planning a legacy to navigating loss. It partners with leading organizations to provide practical, emotional, and logistical support on demand. Empathy helps individuals and families move forward by offering tools and guidance for funeral planning, estate tasks, emotional support, and legacy planning. The platform aims to reduce complexity and deliver meaningful outcomes by empowering people to plan for and move through life's biggest transitions.

Coursebox AI

Coursebox AI is an AI-powered support tool designed to assist users in navigating and utilizing the Coursebox platform efficiently. It offers a knowledge base, ticket submission system, and user manuals to help users troubleshoot issues and learn best practices. The tool aims to streamline the user experience and provide comprehensive support for Coursebox users.

LiveChat

LiveChat is a customer service software application that provides businesses with tools to enhance customer support and sales across multiple communication channels. It offers features such as AI chatbots, helpdesk support, knowledge base, and widgets to automate and improve customer interactions. LiveChat aims to help businesses boost customer satisfaction, increase sales, and retain customers longer through efficient and personalized support.

LiveChat

LiveChat is an AI-driven business chat software designed for e-commerce companies to enhance customer support, sales, and marketing efforts. It offers a range of features such as AI chatbots, Copilot AI assistant, live chat communication, lead generation tools, and integration with various platforms. The application aims to improve productivity, automate customer service, and provide personalized support to help businesses grow and succeed in the competitive online market.

Crisp

Crisp is an AI Customer Support Platform designed to augment customer experience by providing AI agents to support teams and customers. It offers an all-in-one omnichannel inbox, AI workflows, flat pricing, and the ability to automate inquiries. Crisp allows users to create AI agents, centralize inbound communications, build knowledge bases, and monitor team performances. The platform is trusted by 10,000 companies and aims to automate customer support processes to allow teams to focus on more critical tasks.

Forethought

Forethought is a customer support AI platform that uses generative AI to automate tasks and improve efficiency. It offers a range of features including automatic ticket resolution, sentiment analysis, and agent assist. Forethought's platform is designed to help businesses save costs, improve customer satisfaction, and increase agent productivity.

Moveworks

Moveworks is an AI-powered employee support platform that automates tasks, provides information, and creates content across various business applications. It offers features such as task automation, ticket automation, enterprise search, data lookups, knowledge management, employee notifications, approval workflows, and internal communication. Moveworks integrates with numerous business applications and is trusted by over 5 million employees at 300+ companies.

Zaia

Zaia is an AI tool designed to automate support and sales processes using Autonomous Artificial Intelligence Agents. It enables businesses to enhance customer interactions and streamline sales operations through intelligent automation. With Zaia, companies can leverage AI technology to provide efficient and personalized customer service, leading to improved customer satisfaction and increased sales revenue.

Mava

Mava is a customer support platform that uses AI to help businesses manage their support operations. It offers a range of features, including a shared inbox, a Discord bot, and a knowledge base. Mava is designed to help businesses scale their support operations and improve their customer satisfaction.

1 - Open Source AI Tools

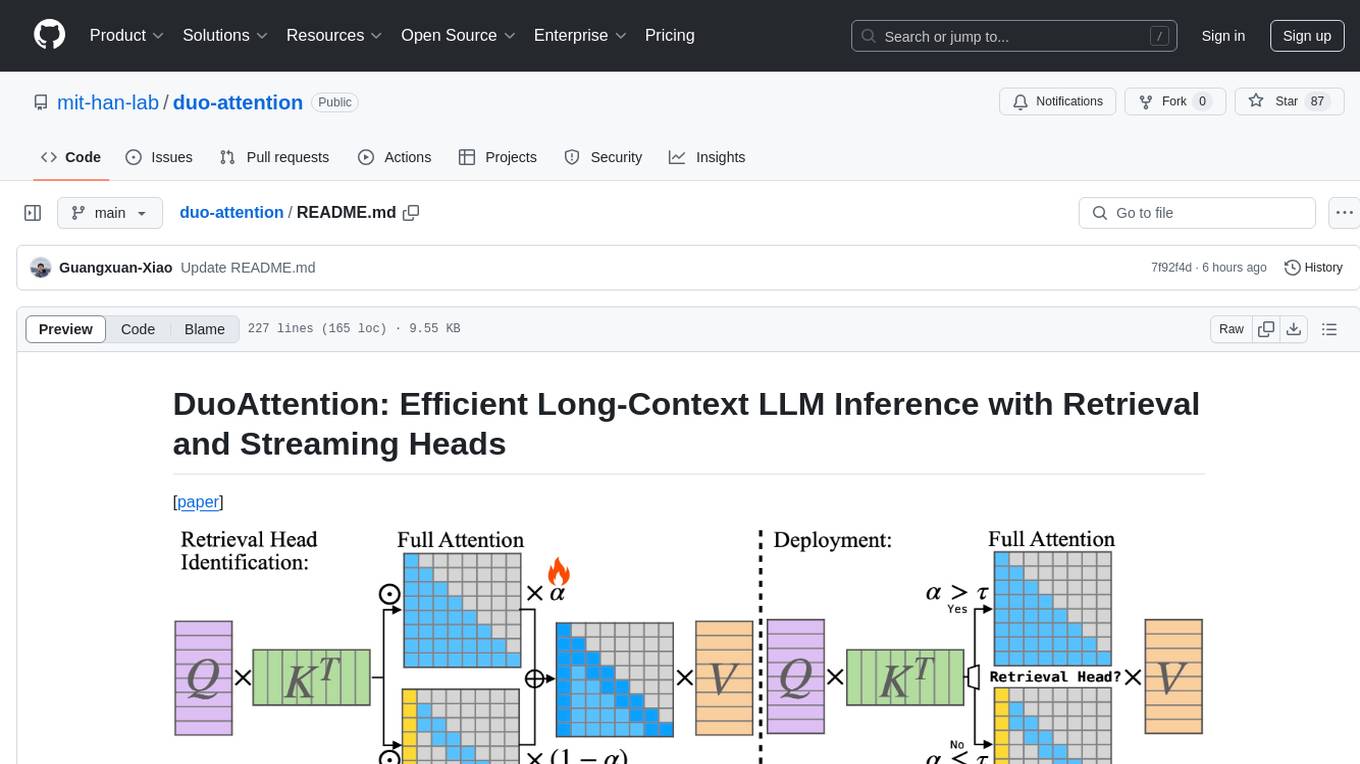

duo-attention

DuoAttention is a framework designed to optimize long-context large language models (LLMs) by reducing memory and latency during inference without compromising their long-context abilities. It introduces a concept of Retrieval Heads and Streaming Heads to efficiently manage attention across tokens. By applying a full Key and Value (KV) cache to retrieval heads and a lightweight, constant-length KV cache to streaming heads, DuoAttention achieves significant reductions in memory usage and decoding time for LLMs. The framework uses an optimization-based algorithm with synthetic data to accurately identify retrieval heads, enabling efficient inference with minimal accuracy loss compared to full attention. DuoAttention also supports quantization techniques for further memory optimization, allowing for decoding of up to 3.3 million tokens on a single GPU.
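The split between the two head types described above can be illustrated with a minimal sketch. It is not the DuoAttention implementation: the class names, the `sink` and `recent` parameters, and the choice to build the constant-length cache from a few initial tokens plus a sliding window of recent tokens are all assumptions made for illustration, and the head labels are supplied by hand rather than found by the optimization-based algorithm.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class FullKVCache:
    """Hypothetical cache for a retrieval head: keeps every (key, value) pair."""
    entries: list = field(default_factory=list)

    def append(self, key, value):
        self.entries.append((key, value))

    def __len__(self):
        return len(self.entries)


class StreamingKVCache:
    """Hypothetical cache for a streaming head: constant length.

    Assumption: keep the first `sink` tokens plus the last `recent` tokens.
    """

    def __init__(self, sink=4, recent=8):
        self.sink = sink
        self.sink_entries = []
        # A bounded deque silently evicts the oldest entry when full.
        self.recent_entries = deque(maxlen=recent)

    def append(self, key, value):
        if len(self.sink_entries) < self.sink:
            self.sink_entries.append((key, value))
        else:
            self.recent_entries.append((key, value))

    def __len__(self):
        return len(self.sink_entries) + len(self.recent_entries)


def cached_tokens(is_retrieval_head, num_tokens, sink=4, recent=8):
    """Feed `num_tokens` placeholder tokens to each head and report cache sizes."""
    caches = [
        FullKVCache() if retrieval else StreamingKVCache(sink, recent)
        for retrieval in is_retrieval_head
    ]
    for t in range(num_tokens):
        for cache in caches:
            # Placeholder key/value tuples stand in for real tensors.
            cache.append(("k", t), ("v", t))
    return [len(cache) for cache in caches]


if __name__ == "__main__":
    # Two retrieval heads and two streaming heads, labeled by hand.
    print(cached_tokens([True, False, False, True], num_tokens=1000))
```

In this sketch the retrieval heads' caches grow with the context length, while each streaming head never holds more than `sink + recent` entries, which is where the memory saving would come from.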

20 - OpenAI GPTs

Ekko Support Specialist

How to be a master of surprise plays and unconventional strategies in the bot lane as a support role.

Backloger.ai - Support Log Analyzer and Summary

Drop your support log here to automatically generate concise summaries for reporting to the tech team.

Tech Support Advisor

From setting up a printer to troubleshooting a device, I’m here to help you step-by-step.

Z Support

Expert in Nissan 370Z & 350Z modifications, offering tailored vehicle upgrade advice.

Emotional Support Copywriter

A creative copywriter you can hang out with and who won't do their timesheets either.

PCT 365 Support Bot

Microsoft 365 support agent, redirects admin-level requests to PCT Support.

Technischer Support Bot

A bot that provides basic technical support and troubleshooting for common software and hardware.

Military Support

Supportive and informative guide on military, veterans, and military assistance.

Dror Globerman's GPT Tech Support

Your go-to assistant for everyday tech support and guidance.

Customer Support Assistant

Expert in crafting empathetic, professional emails for customer support.