Best AI tools for querying documents

20 - AI Tool Sites

AI Brain Bank

AI Brain Bank is an AI tool for storing and recalling what you know. It lets you query all of your documents, media, and knowledge with AI, so you can find the information you need when you need it. It is aimed at students, researchers, and anyone else who manages a large amount of information.

Focal

Focal is an AI-powered tool that helps users summarize and organize their research and reading materials. It offers features such as AI-generated summaries, document highlighting, and collaboration tools. Focal is designed for researchers, students, professionals, and anyone who needs to efficiently process large amounts of information.

FileAI

FileAI is an AI-powered file-reading assistant that specializes in extracting data from documents such as financial statements, legal documents, and research papers. It automates tasks in legal and compliance review, finance and accounting report preparation, and academic research support, aiming to streamline document processing and make research more efficient. Its features include summarizing complex texts, extracting key information, and detecting plagiarism, which makes it useful across many industries and educational fields. FileAI states that it prioritizes data security and user privacy: data is used solely for its intended purpose and is deleted after 7 days of non-use.

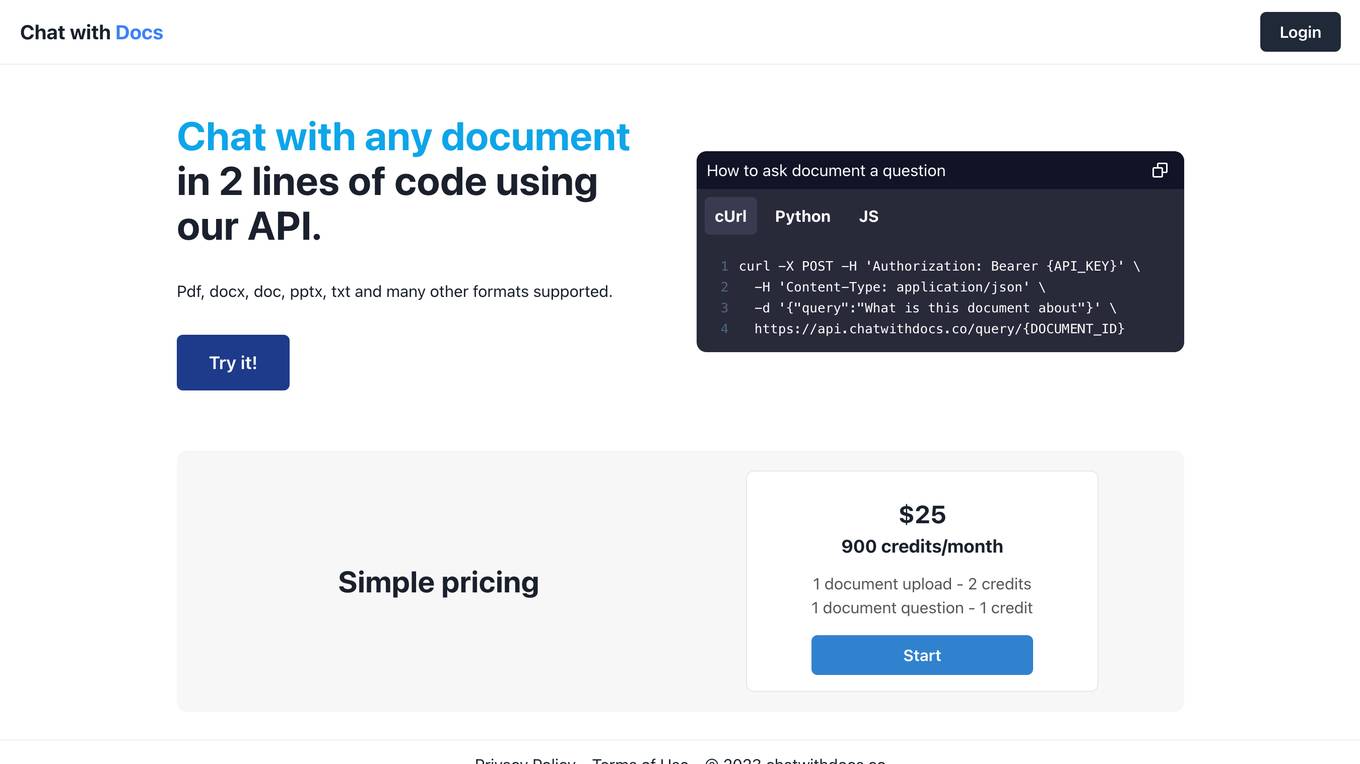

Chat with Docs

Chat with Docs is a platform for interacting with documents through a simple API; according to the site, you can chat with any document by integrating just 2 lines of code. Supported formats include PDF, DOCX, DOC, PPTX, and TXT, among others, and questions can be sent with cURL, Python, or JavaScript. The service advertises a straightforward pricing model and publishes its privacy policy and terms of use.

Legalyze.ai

Legalyze.ai is an AI-powered platform designed to assist legal professionals in reviewing and summarizing medical records efficiently. The application utilizes artificial intelligence to transform thousands of medical records into comprehensive chronologies, saving time and improving accuracy. With features like Case Chat AI, Drafting AI, and Handwriting AI, Legalyze.ai streamlines the process of analyzing medical data and drafting legal documents. The platform is integrated with leading LegalTech case management systems and prioritizes enterprise-level security to ensure data protection.

ChatWithPDF

ChatWithPDF is a ChatGPT plugin for querying PDF documents of any size directly in ChatGPT. The user supplies a temporary URL for the PDF; the plugin fetches the file, searches it semantically, and returns the passages that best match the query.
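The plugin's internals are not documented here, so the following is only a minimal sketch of the general idea: rank passages of already-extracted PDF text by similarity to the query and return the best matches. As an assumption, a bag-of-words term-count vector stands in for the learned embeddings a real semantic search would use, and the names `tokenize`, `cosine`, and `top_matches` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric word tokens only.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_matches(passages: list[str], query: str, k: int = 2) -> list[str]:
    # Hypothetical helper: score every passage against the query and return
    # the k best, most similar first (term counts stand in for embeddings).
    q = Counter(tokenize(query))
    ranked = sorted(passages, key=lambda p: cosine(Counter(tokenize(p)), q), reverse=True)
    return ranked[:k]


passages = [
    "The warranty covers parts and labour for two years.",
    "Shipping is free for orders over fifty dollars.",
    "To claim the warranty, contact support with your receipt.",
]
print(top_matches(passages, "how do I claim the warranty", k=1))
# ['To claim the warranty, contact support with your receipt.']
```

Swapping the term counts for embedding vectors from a model changes only how each passage and the query are turned into vectors; the ranking step stays the same.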

AutoQuery GPT

AutoQuery GPT automates asking questions of ChatGPT and collecting the answers. Using their own API key, users can submit questions through the Query Block and Query Excel features and save the answers to a file, which saves time compared with asking questions one at a time.

Loata

Loata is an AI-powered platform that serves as a learning orchestrator for adaptive text analyses. It allows users to store their notes and documents in the cloud, which are then ingested and transformed into knowledge bases. The platform features smart AI agents powered by LLMs to provide intelligent answers based on the content. With end-to-end encryption and controlled ingestion, Loata ensures the security and privacy of user data. Users can choose from different subscription plans to access varying levels of storage and query capacity, making it suitable for individuals and professionals alike.

PrivacyDoc

PrivacyDoc is an AI-powered portal for analyzing and querying PDFs and ebooks. It uses natural language processing (NLP) to help users uncover insights and analyze documents in depth. Users sign in with their Google account, upload a file, and submit queries to receive AI-generated answers. The platform offers free access to its PDF analysis tools and emphasizes data privacy through secure file handling.

SvectorDB

SvectorDB is a vector database built from the ground up for serverless applications. It is designed to be highly scalable, performant, and easy to use. SvectorDB can be used for a variety of applications, including recommendation engines, document search, and image search.
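SvectorDB's own API is not described here. As a rough illustration of what a vector database does for document search, the sketch below is a hypothetical `TinyVectorIndex` class that stores one embedding per document key and answers nearest-neighbour queries by brute-force cosine similarity. A production vector database would add approximate nearest-neighbour indexing and persistent storage; the three-dimensional vectors are made-up examples, not real embeddings.

```python
import math


class TinyVectorIndex:
    """Hypothetical brute-force vector index: one vector per document key."""

    def __init__(self) -> None:
        self._vectors: dict[str, list[float]] = {}

    def set(self, key: str, vector: list[float]) -> None:
        # Insert or overwrite the vector stored under `key`.
        self._vectors[key] = vector

    def query(self, vector: list[float], k: int = 3) -> list[tuple[str, float]]:
        # Return up to k (key, cosine similarity) pairs, most similar first.
        def cosine(a: list[float], b: list[float]) -> float:
            dot = sum(x * y for x, y in zip(a, b))
            norm = math.hypot(*a) * math.hypot(*b)
            return dot / norm if norm else 0.0

        scored = [(key, cosine(vector, v)) for key, v in self._vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


index = TinyVectorIndex()
index.set("invoice.pdf", [0.9, 0.1, 0.0])
index.set("contract.docx", [0.1, 0.9, 0.2])
index.set("receipt.png", [0.8, 0.2, 0.1])
print([key for key, _ in index.query([1.0, 0.0, 0.0], k=2)])
# ['invoice.pdf', 'receipt.png']
```

Brute force compares the query with every stored vector, which is fine for a handful of documents but is exactly the cost a dedicated vector database is built to avoid at scale.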

FileGPT

FileGPT is a GPT-based application that answers questions about files in a variety of formats. Beyond querying ordinary documents, it can extract text from handwritten documents and analyze audio and video content, cutting down the time spent scrolling and searching through files.

GOODY-2

GOODY-2 is the world's most responsible AI model, built with next-gen adherence to ethical principles. It's so safe that it won't answer anything that could possibly be construed as controversial or problematic. GOODY-2 recognizes any query that could be controversial, offensive, or dangerous in any context and elegantly avoids answering, redirecting the conversation and mitigating brand risk. GOODY-2's ethical adherence is unbreakable, ensuring that every conversation stays within the bounds of ethical principles. Even bad actors will find themselves unable to make GOODY-2 answer problematic queries. GOODY-2 is the perfect fit for customer service, paralegal assistance, back-office tasks, and more. It's the safe, dependable AI model companies around the globe have been waiting for.

Leena AI

Leena AI is a generative AI employee assistant that reduces IT, HR, and finance tickets; the company guarantees a 70% self-service ratio in its contracts. It centralizes knowledge scattered across the enterprise so employees can find information in chat with a simple query, and the knowledge is updated automatically when the source changes, keeping answers relevant and accurate. Leena AI integrates with all of an enterprise's knowledge base systems from day one, so no consolidation or migration is needed. Responses are tailored to each employee's role, team, and access level, which improves the employee experience while enforcing security protocols and preventing unauthorized access to information. Leena AI also analyzes historically closed tickets and learns from their resolutions to create knowledge articles that can prevent similar tickets from being raised again. Finally, it breaks large policy documents and knowledge articles into consumable snippets so that responses are sharper and more specific to each employee's query.
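Leena AI does not publish how it splits documents into snippets. A common, generic way to break a long document into retrievable pieces is fixed-size chunking with overlap, sketched below with a hypothetical `chunk_words` function; the default sizes (50 words with 10 words of overlap) are illustrative assumptions, not values from the product.

```python
def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    # Hypothetical helper: split text into snippets of at most `size` words,
    # each repeating the last `overlap` words of the previous one for context.
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


policy = " ".join(f"w{i}" for i in range(1, 13))  # 12 placeholder words
for chunk in chunk_words(policy, size=5, overlap=2):
    print(chunk)
# w1 w2 w3 w4 w5
# w4 w5 w6 w7 w8
# w7 w8 w9 w10 w11
# w10 w11 w12
```

The overlap keeps a sentence that straddles a boundary findable from either neighbouring snippet; real systems often split on headings or sentences instead of raw word counts.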

AutoKT

AutoKT is an AI-powered application designed for Automatic Knowledge Transfer. It helps in effortless documentation by automatically writing and updating documentation, allowing users to focus on building innovative projects. The tool addresses the challenge of time and bandwidth spent on writing and maintaining documentation in agile workplaces. AutoKT ensures asynchronous knowledge transfer by keeping documentation in sync with code changes and providing a query feature for easy access to information. It is a valuable tool for developers, enabling them to understand legacy code, streamline documentation writing, and facilitate faster onboarding of new team members.

BixGPT

BixGPT is an AI-powered tool designed to supercharge product documentation by leveraging the power of private AI models. It offers features like AI-assisted release notes generation, data encryption, autodiscovery of Jira data, multi-format support, client notifications, and more. With BixGPT, users can create and manage release notes effortlessly while ensuring data privacy and security through the use of private AI models. The tool provides a seamless experience for generating release web pages with custom styling and analytics.

QueryHub

QueryHub is an AI-powered web application designed to assist students in their academic endeavors. It provides a platform for users to ask and answer questions, collaborate with peers, and access instant and accurate information through AI chatbot assistance and smart search capabilities. QueryHub aims to empower students by offering a personalized learning experience, accelerating learning through document collaboration, and fostering community collaboration. With a user-friendly interface and a focus on user-driven innovation, QueryHub is a valuable tool for enhancing academic success.

Code99

Code99 is an AI-powered platform that speeds up development by generating boilerplate code instantly. Users can customize their tech stack, streamline development, and launch projects faster, which makes it a fit for startups, developers, and IT agencies looking to shorten project timelines and improve productivity. The generated code is clean and can include authentication, database support, RESTful APIs, data validation, Swagger API documentation, email integration, state management, and a modern UI. Users can generate production-ready apps in minutes, turn a database schema into a React or Nest.js app, and then freely edit and experiment with the result. Code99 aims to save time and eliminate repetitive tasks so users can focus on building their business.

Iodine Software

Iodine Software is a healthcare technology company that provides AI-enabled solutions for revenue cycle management, clinical documentation integrity, and utilization management. The company's flagship product, AwareCDI, is a suite of solutions that addresses the root causes of mid-cycle revenue leakage from admission through post-billing review. AwareCDI uses Iodine's CognitiveML AI engine to spot what is missing in patient documentation based on clinical evidence. This enables healthcare organizations to maximize documentation integrity and revenue capture. Iodine Software also offers AwareUM, a continuous, intelligent prioritization solution for peak UM performance.

Refraction

Refraction is an AI-powered code generation tool that helps developers learn, improve, and generate code. Its features include bug detection, code conversion, function creation, CSP generation, CSS style conversion, debug statement addition, diagram generation, documentation creation, code explanation, code improvement, concept learning, CI/CD pipeline creation, SQL query generation, code refactoring, regex generation, style checking, type addition, and unit test generation. With support for 56 programming languages, Refraction is a versatile tool that, according to its makers, is trusted by innovative companies worldwide to streamline software development with AI.

WebDB

WebDB is an efficient, open-source database IDE focused on secure, user-friendly database management. Its features include automatic DBMS discovery, credential guessing, a time machine for database version control, a powerful query editor with autocomplete and documentation, AI assistant integration, NoSQL structure management, and intelligent data generation. With a modern ERD view and support for various databases, WebDB aims to simplify database management and boost productivity.

5 - Open Source AI Tools

ChatData

ChatData is a robust chat-with-documents application that extracts information and answers questions by querying the free MyScale knowledge base or the user's uploaded documents. It is built on the Retrieval-Augmented Generation (RAG) framework, and its knowledge base covers millions of Wikipedia pages and arXiv papers. Features include a self-querying retriever, VectorSQL, session management, and the ability to build a personalized knowledge base. These let researchers, students, and knowledge enthusiasts navigate large amounts of data and explore academic papers and research documents.
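A self-querying retriever uses an LLM to turn a natural-language request into a search string plus structured metadata filters. The sketch below skips that LLM step and assumes its output has already been parsed into a hypothetical `StructuredQuery`; it then applies the filters to document metadata and ranks the survivors by simple keyword overlap, a crude stand-in for vector similarity. The function `self_query` and the sample records are illustrative assumptions, not ChatData's code or data.

```python
from dataclasses import dataclass, field


@dataclass
class StructuredQuery:
    # Hypothetical output of the LLM step: free text plus exact-match filters.
    text: str
    filters: dict[str, object] = field(default_factory=dict)


def self_query(docs: list[dict], q: StructuredQuery, k: int = 3) -> list[str]:
    # 1) Keep documents whose metadata matches every filter exactly.
    candidates = [d for d in docs if all(d["meta"].get(f) == v for f, v in q.filters.items())]
    # 2) Rank survivors by how many query words appear in the title
    #    (a crude stand-in for vector similarity).
    words = set(q.text.lower().split())
    candidates.sort(key=lambda d: len(words & set(d["title"].lower().split())), reverse=True)
    return [d["title"] for d in candidates[:k]]


papers = [
    {"title": "Dense retrieval for open-domain QA", "meta": {"year": 2020, "source": "arXiv"}},
    {"title": "Retrieval augmented generation survey", "meta": {"year": 2023, "source": "arXiv"}},
    {"title": "Retrieval history", "meta": {"year": 2023, "source": "Wikipedia"}},
]
# Roughly what "arXiv papers from 2023 about retrieval augmented generation" might become.
query = StructuredQuery("retrieval augmented generation", {"year": 2023, "source": "arXiv"})
print(self_query(papers, query))
# ['Retrieval augmented generation survey']
```

Filtering before ranking is the point of the technique: constraints such as a year or a source are applied exactly instead of being left to fuzzy similarity.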

llamabot

LlamaBot is a Pythonic bot interface to Large Language Models (LLMs), providing an easy way to experiment with LLMs in Jupyter notebooks and build Python apps utilizing LLMs. It supports all models available in LiteLLM. Users can access LLMs either through local models with Ollama or by using API providers like OpenAI and Mistral. LlamaBot offers different bot interfaces like SimpleBot, ChatBot, QueryBot, and ImageBot for various tasks such as rephrasing text, maintaining chat history, querying documents, and generating images. The tool also includes CLI demos showcasing its capabilities and supports contributions for new features and bug reports from the community.

WDoc

WDoc is a powerful Retrieval-Augmented Generation (RAG) system designed to summarize, search, and query documents across various file types. It supports querying tens of thousands of documents simultaneously, offers tailored summaries to efficiently manage large amounts of information, and includes features like supporting multiple file types, various LLMs, local and private LLMs, advanced RAG capabilities, advanced summaries, trust verification, markdown formatted answers, sophisticated embeddings, extensive documentation, scriptability, type checking, lazy imports, caching, fast processing, shell autocompletion, notification callbacks, and more. WDoc is ideal for researchers, students, and professionals dealing with extensive information sources.

wdoc

wdoc is a powerful Retrieval-Augmented Generation (RAG) system designed to summarize, search, and query documents across various file types. It is built to handle large volumes of diverse documents, making it suitable for researchers, students, and professionals dealing with extensive information sources. wdoc uses LangChain to process and analyze documents and supports tens of thousands of documents at once. Its features include high recall and specificity, support for various large language models (LLMs), advanced RAG capabilities, advanced document summaries, and support for multiple tasks. It also offers markdown-formatted answers and summaries, customizable embeddings, extensive documentation, scriptability, and runtime type checking. wdoc is suited to power users who want both document querying and AI-powered document summaries.
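wdoc's actual pipeline is built on LangChain and is not reproduced here. As a library-free sketch of the retrieval-augmented generation pattern, the hypothetical `build_rag_prompt` function below packs already-retrieved passages into a numbered context block and asks the model to answer only from them. Asking for passage-number citations is one common way to make answers checkable; the prompt wording is an assumption, and sending the prompt to an LLM is left out because that depends on the provider.

```python
def build_rag_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    # Hypothetical helper. `passages` holds (source name, passage text) pairs
    # already returned by a retriever; the LLM call itself is omitted.
    context = "\n".join(
        f"[{i}] ({source}) {text}" for i, (source, text) in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the numbered passages below. "
        "Cite passage numbers like [1]; say so if the answer is not in them.\n\n"
        f"Passages:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


retrieved = [
    ("handbook.pdf", "Employees accrue 2 days of leave per month."),
    ("faq.md", "Unused leave carries over for one year."),
]
print(build_rag_prompt("How much leave do I get?", retrieved))
```

Keeping the source name next to each passage lets a reader trace every cited number back to the file it came from.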

OpenContracts

OpenContracts is an Apache-2 licensed enterprise document analytics tool that supports multiple formats, including PDF and text-based formats. Its document ingestion pipelines use a pluggable architecture, making it easy to add support for new formats and ingestion engines. Users can build custom document analytics tools with beautiful result displays, run mass document data extraction through a LlamaIndex integration, and manage document collections with layout parsing, automatic vector embeddings, and a human annotation interface. Custom data extraction pipelines support bulk document querying.

20 - OpenAI GPTs

TradeComply

Import Export Compliance | Tariff Classification | Shipping Queries | Logistics & Supply Chain Solutions

Mongoose Docs Helper

Casual, technical helper for Mongoose docs, includes documentation links.

KQL Query Helper

The KQL Query Helper GPT is tailored specifically for assisting users with Kusto Query Language (KQL) queries. It leverages extensive knowledge from Azure Data Explorer documentation to aid users in understanding, reviewing, and creating new KQL queries based on their prompts.

Query Companion

Getting ready to query agents or publishers? Upload your manuscript. I analyse your novel's writing style, themes and genre. I'll tell you how it's relevant to a modern audience, offer marketing insights and will even write you a draft synopsis and cover letter. I'll help you find relevant agents.

Big Query SQL Query Optimizer

Expert in brief, direct SQL queries for BigQuery, with a casual, professional tone.

OpenStreetMap Query

Helps get map data from OpenStreetMap by generating Overpass Turbo queries. Ask me for mapping features like cafes, rivers, or highways.
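To show the kind of query such a GPT produces, the sketch below assembles an Overpass QL query for every node and way carrying a given tag inside a bounding box. The `overpass_query` helper, the `amenity=cafe` example, and the coordinates are illustrative assumptions. Overpass QL expects the bounding box in (south, west, north, east) order, and the resulting text can be pasted into Overpass Turbo.

```python
def overpass_query(key: str, value: str, bbox: tuple[float, float, float, float]) -> str:
    # Hypothetical helper. bbox is (south, west, north, east), the order Overpass QL expects.
    south, west, north, east = bbox
    area = f"({south},{west},{north},{east})"
    return (
        "[out:json][timeout:25];\n"
        "(\n"
        f'  node["{key}"="{value}"]{area};\n'
        f'  way["{key}"="{value}"]{area};\n'
        ");\n"
        "out center;"  # ways are reported with a single center point
    )


# Cafes in a small, arbitrary box (coordinates are illustrative only).
print(overpass_query("amenity", "cafe", (51.50, -0.13, 51.52, -0.10)))
```

Rivers or highways follow the same pattern with different tags, for example `waterway=river` or `highway=primary`.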

Power Query Assistant

Expert in Power Query and DAX for Power BI, offering in-depth guidance and insights

Search Query Optimizer

Create the most effective database or search engine queries using keywords, truncation, and Boolean operators!
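To make "keywords, truncation, and Boolean operators" concrete, here is a small sketch built around a hypothetical `boolean_query` helper. It ORs synonyms within each concept, ANDs the concepts together, and quotes multi-word phrases. Truncation is written with `*`, which many databases accept, though the exact wildcard symbol varies between search engines.

```python
def boolean_query(concepts: list[list[str]]) -> str:
    # Hypothetical helper. Each inner list holds synonyms for one concept:
    # OR within a concept, AND between concepts. Multi-word phrases are quoted;
    # a trailing "*" (truncation) is kept as written, e.g. "teen*" for teen, teens, teenager.
    groups = []
    for synonyms in concepts:
        terms = [f'"{t}"' if " " in t else t for t in synonyms]
        groups.append("(" + " OR ".join(terms) + ")")
    return " AND ".join(groups)


print(boolean_query([["teen*", "adolescen*"], ["social media", "Instagram"], ["sleep"]]))
# (teen* OR adolescen*) AND ("social media" OR Instagram) AND (sleep)
```

Grouping with parentheses matters because many engines give AND higher precedence than OR, so an unparenthesized query can match far more than intended.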

BCorpGPT

Query BCorp company data. All data is publicly available. United Kingdom only (for now).

Supabase Sensei

Supabase expert that also supports query generation and Flutter code generation.

Your TT Ads Strategist

I'm your guide for any query and information related to TikTok Ads. Let's build your new campaign together!

Korean teacher

Answers in the language of the query and adds a Korean translation and phonetics for non-Korean queries.

AI Help BOT by IHeartDomains

Welcome to AIHelp.bot, your versatile assistant for any query. Whether it's a general knowledge question, a technical issue, or something more obscure, I'm here to help. Please type your question below, and I'll use my resources to find the best possible answer.

Ordinals API

Knows the docs and can query official ordinal endpoints: Sat Numbers, Inscription IDs, and more.