Best AI tools for Load Models

20 - AI tool Sites

Milo

Milo is an AI-powered co-pilot for parents, designed to help them manage the chaos of family life. It uses GPT-4, a large language model, to sort and organize information, send reminders, and provide updates. Milo is designed to be accurate and to solve complex problems, and it improves based on user feedback. Parents can use it to add items to a grocery list, get updates on the week's schedule, and send in screenshots of birthday invitations.

VoiceGPT

VoiceGPT is an Android app that provides a voice-based interface to interact with AI language models like ChatGPT, Bing AI, and Bard. It offers features such as unlimited free messages, voice input and output in 67+ languages, a floating bubble for easy switching between apps, OCR text recognition, code execution, image generation with DALL-E 2, and support for ChatGPT Plus accounts. VoiceGPT is designed to be accessible for users with visual impairments, dyslexia, or other conditions, and it can be set as the default assistant to be activated hands-free with a custom hotword.

Avaturn

Avaturn is a realistic 3D avatar creator that uses generative AI to turn a 2D photo into a recognizable and realistic 3D avatar. With extensive customization options, you can create a unique look for each avatar. Export your avatar as a 3D model and load it in Blender, Unity, Unreal Engine, Maya, Cinema4D, or any other 3D environment. The avatars come with a standard humanoid body rig, ARKit blendshapes, and visemes. They are compatible with Mixamo animations and VTubing software.

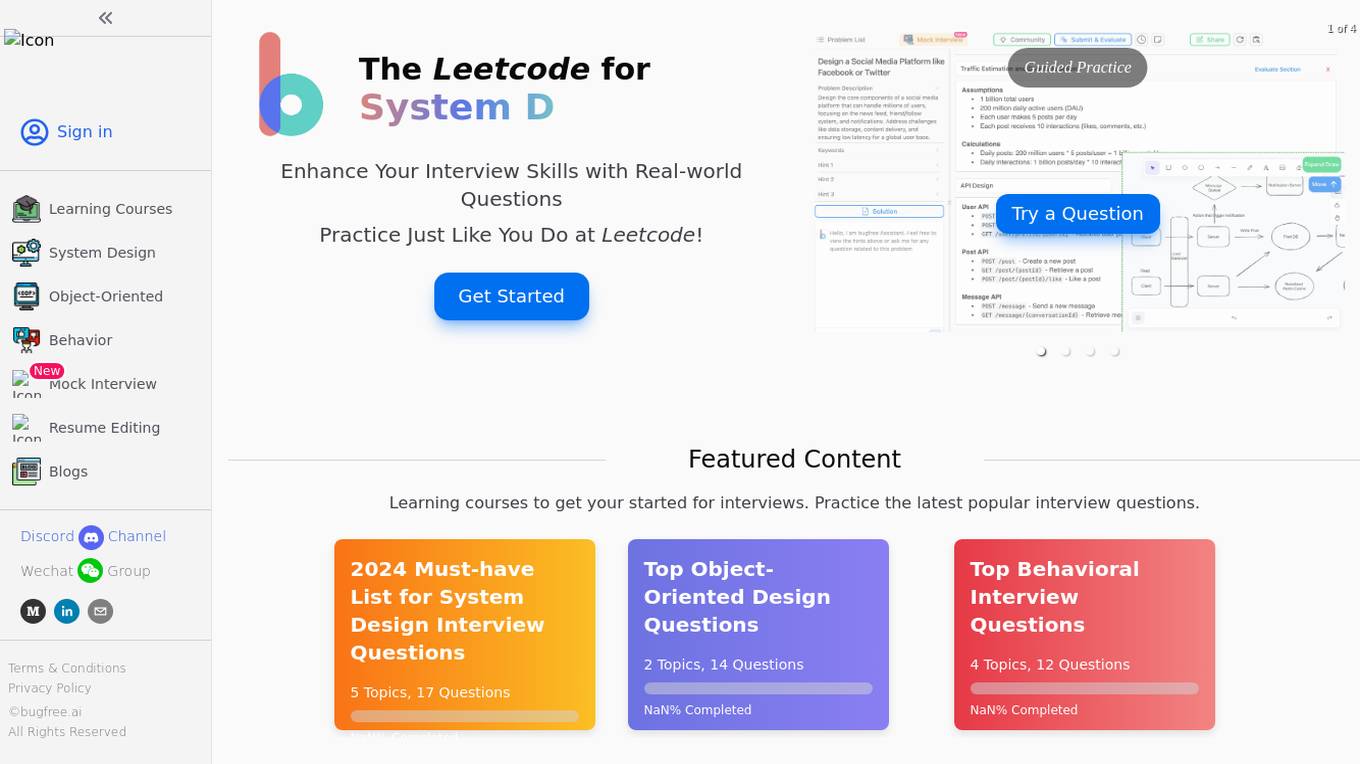

BugFree.ai

BugFree.ai is an AI-powered platform designed to help users practice system design and behavioral interviews, in the way LeetCode does for coding problems. The platform offers mock interviews, real-time feedback, and personalized study plans to help users prepare for technical interviews. With BugFree.ai, users can improve their problem-solving skills and gain confidence in tackling complex interview questions.

Tesla Wrap

Tesla Wrap is a free AI wrap designer and community gallery that allows users to design custom car wraps for Tesla vehicles. The platform offers an AI Wrap Editor that simplifies the design process by providing official templates, real-time previews, and export options. Users can create unique wrap concepts by describing their ideas and generating designs with AI. Tesla Wrap aims to streamline the car wrap design process, from concept to final product, ensuring consistent and professional results for Tesla owners.

Wild Moose

Wild Moose is an AI-powered SRE Copilot tool designed to help companies handle incidents efficiently. It offers fast and efficient root cause analysis that improves with every incident by automatically gathering and analyzing logs, metrics, and code to pinpoint root causes. The tool converts tribal knowledge into custom playbooks, constantly improves performance with a system model that learns from each incident, and integrates seamlessly with various observability tools and deployment platforms. Wild Moose reduces cognitive load on teams, automates routine tasks, and provides actionable insights in real-time, enabling teams to act fast during outages.

Fastbreak

Fastbreak is an AI assistant designed to help users win Requests for Proposals (RFPs) and Requests for Information (RFIs) by providing cognitive proposal management for high-stakes enterprise deals. The tool aims to cut completion time by 10x, improve access to relevant information, and make domain expertise easier to reuse, so that users save time and win more business. Fastbreak lets users answer business questionnaires in minutes and offers one-step onboarding, flexible answers, powerful export capabilities, and contextual synthesis of answers from previous responses and documents.

BlazeMeter

BlazeMeter by Perforce is an AI-powered continuous testing platform designed to automate testing processes and enhance software quality. It offers effortless test creation, seamless test execution, instant issue analysis, and self-sustaining maintenance. BlazeMeter provides a comprehensive solution for performance, functional, scriptless, API testing, and monitoring, along with test data and service virtualization. The platform enables teams to speed up digital transformation, shift quality left, and streamline DevOps practices. With AI analytics, scriptless test creation, and UX testing capabilities, BlazeMeter empowers users to drive innovation, accuracy, and speed in their test automation efforts.

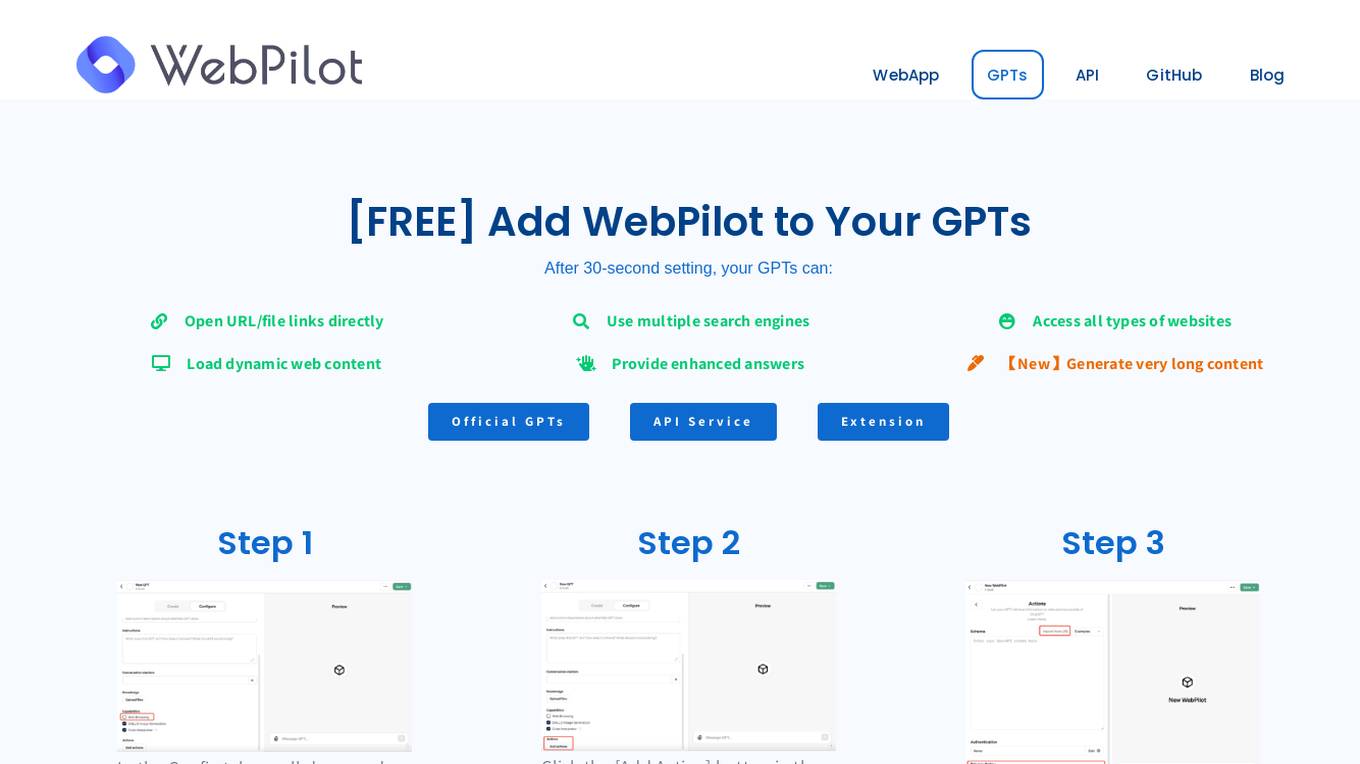

WebPilot

WebPilot is an AI tool designed to enhance your GPTs by enabling them to perform various tasks such as opening URL/file links, using multiple search engines, accessing all types of websites, loading dynamic web content, and providing enhanced answers. It offers a super easy way to interact with webpages, assisting in tasks like responding to emails, writing in forms, and solving quizzes. WebPilot is free, open-source, and has been featured by Google Extension Store as an established publisher.

Parade

Parade is a capacity management platform designed for freight brokerages and 3PLs to streamline operations, automate bookings, and improve margins. The platform leverages advanced AI to optimize pricing, bidding, and carrier management, helping users book more loads efficiently. Parade integrates seamlessly with existing tech stacks, offering precise pricing, optimized bidding, and enhanced shipper connectivity. The platform boasts a range of features and benefits aimed at increasing efficiency, reducing costs, and boosting margins for freight businesses.

Kolank AI

Kolank AI is a freight broker automation software powered by AI that handles carrier communication, load tracking, and dispatch management. It automates routine tasks like check calls, status updates, and document processing, allowing your team to focus on booking more loads and building relationships. The platform offers multi-channel communication, 24/7 coverage, and seamless integration with existing TMS systems. Designed for brokerages of all sizes, Kolank AI aims to amplify your operations by providing intelligent automation and support for critical decision-making.

SwapFans

SwapFans is an AI-powered face-swap tool. Users can load balance onto their account and receive discounts, face-swap any social media video, and swap faces across entire Instagram and TikTok accounts using its high-speed FaceSwap AI. The tool is designed to help users manage their social media presence efficiently.

Abridge

Abridge is an AI application that provides generative AI for clinical conversations. It transforms patient-clinician conversations into contextually aware, clinically useful, and billable AI-generated notes. The platform is trusted by the largest healthcare systems and aims to measurably improve outcomes for clinicians, nurses, and revenue cycle teams at scale. Abridge offers an enterprise-grade AI solution for clinical conversations, leading the way in healthcare AI infrastructure.

PixieBrix

PixieBrix is an AI engagement platform that allows users to build, deploy, and manage internal AI tools to drive team productivity. It unifies an organization's AI landscape with oversight and governance at enterprise scale. The platform is enterprise-ready, fully customizable, and can be deployed on any site, making it easy to integrate into existing systems. PixieBrix uses AI and automation to streamline workflows and raise productivity.

TLDRai

TLDRai.com is an AI tool designed to help users summarize any text into concise and easy-to-digest content, enabling them to free themselves from information overload. The tool utilizes AI technology to provide efficient text summarization services, making it a valuable resource for individuals seeking quick and accurate summaries of lengthy texts.

Merlin AI

Merlin AI is a YouTube transcript tool that allows users to create summaries of YouTube videos. It is easy to use and can be added to Chrome as an extension. Merlin AI is powered by an undocumented API and features the latest build.

Kapa.ai

Kapa.ai is an AI tool that builds accurate AI agents from technical documentation and various other sources. It helps deploy AI assistants across support, documentation, and internal teams in a matter of hours. Trusted by over 200 leading companies with technical products, Kapa.ai offers pre-built integrations, customer results, and an analytics platform to track user questions and content gaps. The tool focuses on providing grounded answers, connecting existing sources, and ensuring data security and compliance.

Daxtra

Daxtra is an AI-powered recruitment technology tool designed to help staffing and recruiting professionals find, parse, match, and engage the best candidates quickly and efficiently. The tool offers a suite of products that seamlessly integrate with existing ATS or CRM systems, automating various recruitment processes such as candidate data loading, CV/resume formatting, information extraction, and job matching. Daxtra's solutions cater to corporates, vendors, job boards, and social media partners, providing a comprehensive set of developer components to enhance recruitment workflows.

Widya Robotics

Widya Robotics is an AI, Automation, and Robotics solutions provider that offers a range of innovative products and solutions for various industries such as construction, manufacturing, retail, and traffic and transportation. The company specializes in technologies like LiDAR for load scanning, gas monitoring, and AI-driven solutions to enhance efficiency, safety, and profitability for businesses. Widya Robotics has received recognition for its cutting-edge technology and commitment to helping companies achieve their financial and branding goals.

OpenResty

The website appears to be displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is often encountered when trying to access a webpage without proper permissions or when the server is misconfigured. The message 'openresty' suggests that the server may be using the OpenResty web platform. OpenResty is a web platform based on NGINX and LuaJIT, commonly used for building dynamic web applications. It provides a powerful and flexible way to create web services and APIs.

5 - Open Source AI Tools

spandrel

Spandrel is a library for loading and running pre-trained PyTorch models. It automatically detects the model architecture and hyperparameters from model files, and provides a unified interface for running models.
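To illustrate what detecting an architecture and its hyperparameters from a model file can mean in practice, here is a minimal, dependency-free sketch. It is not Spandrel's implementation or API: the registry, the architecture names (`toy-cnn`, `toy-transformer`), the key patterns, and the helpers `detect_architecture` and `count_blocks` are all hypothetical, and plain `None` values stand in for tensors.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A checkpoint is modelled as a mapping from parameter name to tensor.
# Plain objects stand in for tensors so the sketch needs no dependencies.
StateDict = Dict[str, object]
Detector = Callable[[StateDict], bool]

# Hypothetical registry: architecture name plus a predicate over key names.
# These names and key patterns are invented for illustration only.
REGISTRY: List[Tuple[str, Detector]] = [
    ("toy-cnn", lambda sd: "conv_first.weight" in sd),
    ("toy-transformer", lambda sd: any(".attn." in key for key in sd)),
]


def detect_architecture(state_dict: StateDict) -> Optional[str]:
    """Return the first registered architecture whose predicate matches."""
    for name, matches in REGISTRY:
        if matches(state_dict):
            return name
    return None


def count_blocks(state_dict: StateDict, prefix: str = "body.") -> int:
    """Infer a hyperparameter (block count) from keys like 'body.3.weight'."""
    indices = set()
    for key in state_dict:
        if key.startswith(prefix):
            index = key[len(prefix):].split(".", 1)[0]
            if index.isdigit():
                indices.add(int(index))
    return len(indices)


checkpoint = {"conv_first.weight": None, "body.0.weight": None, "body.1.weight": None}
print(detect_architecture(checkpoint), count_blocks(checkpoint))
```

The point of the sketch is that parameter names and their indices often carry enough information to pick a model class and its constructor arguments without a separate config file.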

wllama

Wllama is a WebAssembly binding for llama.cpp, a high-performance and lightweight language model library. It enables you to run inference directly in the browser without the need for a backend or GPU. Wllama provides both high-level and low-level APIs, allowing you to perform tasks such as completions, embeddings, and tokenization. It also supports model splitting, enabling you to load large models in parallel for faster download. With its TypeScript support and pre-built npm package, Wllama is easy to integrate into React TypeScript projects.
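The idea behind downloading a split model in parallel can be sketched independently of any particular library. The example below is written in Python rather than TypeScript and does not use Wllama's API; `load_shards`, `fake_fetch`, and the shard file names are hypothetical, and an in-memory dictionary stands in for the network.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def load_shards(
    urls: List[str],
    fetch: Callable[[str], bytes],
    max_workers: int = 4,
) -> List[bytes]:
    """Fetch all shards concurrently and return them in the order of `urls`."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map yields results in input order, even when the
        # underlying fetches complete out of order.
        return list(pool.map(fetch, urls))


# In-memory stand-in for remote shard files (hypothetical names and contents).
FAKE_STORE = {
    "model-1-of-3.bin": b"aa",
    "model-2-of-3.bin": b"bb",
    "model-3-of-3.bin": b"cc",
}


def fake_fetch(url: str) -> bytes:
    """Pretend to download a shard; a real fetch would do network I/O here."""
    return FAKE_STORE[url]


SHARD_URLS = sorted(FAKE_STORE)
shards = load_shards(SHARD_URLS, fake_fetch)
print([len(shard) for shard in shards])
```

Keeping the result in shard order matters because a loader typically expects the first shard, which carries the metadata, before the rest.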

lmstudio.js

lmstudio.js is a pre-release alpha client SDK for LM Studio, allowing users to use local LLMs in JS/TS/Node. It is currently undergoing rapid development with breaking changes expected. Users can follow LM Studio's announcements on Twitter and Discord. The SDK provides API usage for loading models, predicting text, setting up the local LLM server, and more. It supports features like custom loading progress tracking, model unloading, structured output prediction, and cancellation of predictions. Users can interact with LM Studio through the CLI tool 'lms' and perform tasks like text completion, conversation, and getting prediction statistics.

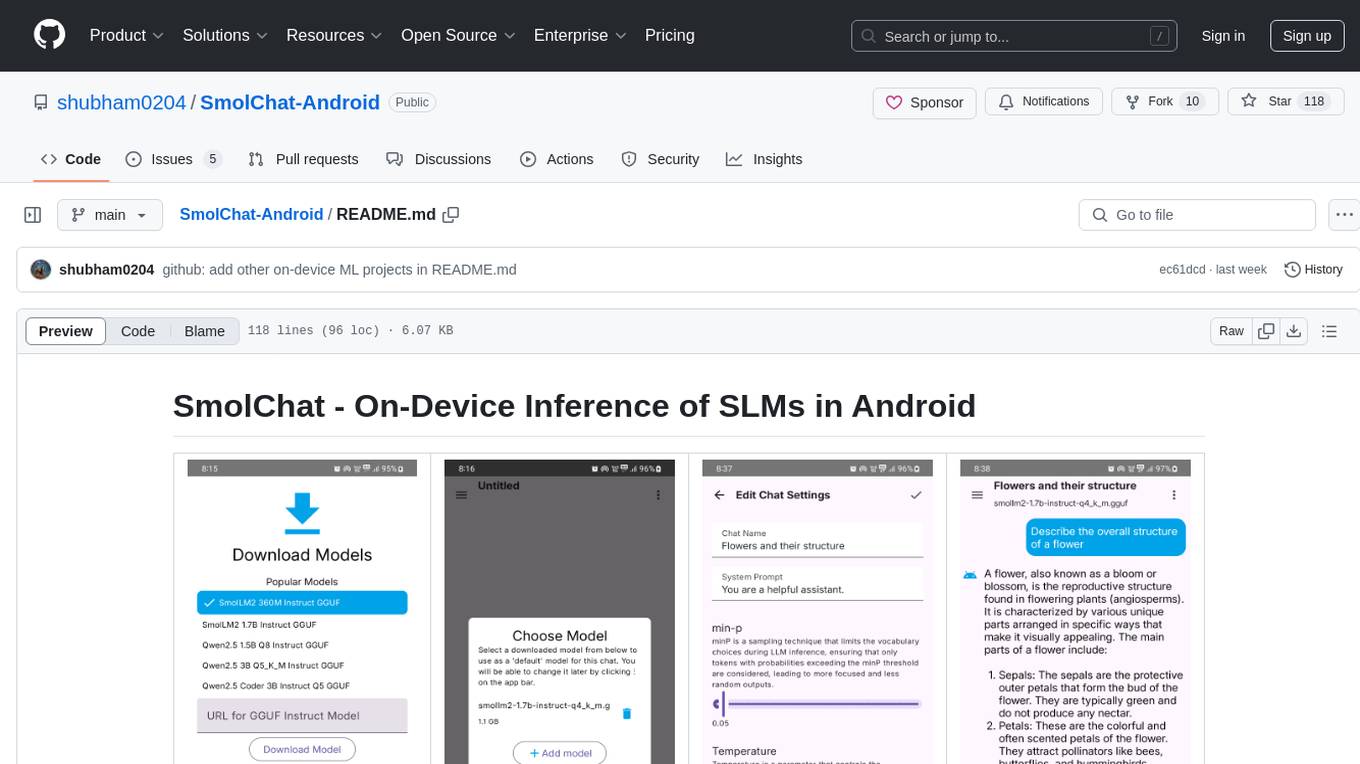

SmolChat-Android

SmolChat-Android is a mobile application that enables users to interact with local small language models (SLMs) on-device. Users can add/remove SLMs, modify system prompts and inference parameters, create downstream tasks, and generate responses. The app uses llama.cpp for model execution, ObjectBox for database storage, and Markwon for markdown rendering. It provides a simple, extensible codebase for on-device machine learning projects.

Oxide-Lab

Oxide Lab is a private AI chat desktop application with local LLM support, allowing users to run large language models locally without internet connectivity or external API services. Built with Rust and Tauri v2, it offers a fast and secure chat interface where all inference happens on the user's machine, ensuring data privacy and security. The application supports multiple architectures, model formats, and hardware accelerations, along with streaming text generation and a modern UI built with Svelte and Tailwind CSS.