Best AI tools for SwiftUI Developers

4 - AI Tool Sites

WrapFast

WrapFast is a SwiftUI boilerplate that helps developers build AI wrappers and iOS apps quickly. It provides pre-written code for common tasks such as authentication, onboarding, in-app purchases, paywalls, API key security, cloud database integration, analytics, settings, and user feedback collection. WrapFast is designed to save developers time so they can focus on building their core features. It is suitable for both experienced iOS developers and newcomers to the platform.

IXEAU

IXEAU is an AI-powered application developed by App ahead GmbH that offers features such as AI transcription (speech-to-text), text-to-image photo generation with Stable Diffusion, Codepoint with over 73,000 Unicode characters, and more. The application also includes utilities such as Superlayer Widgets, Cursor Pro (a mouse highlighter and magnifier), and Keystroke Pro for visualizing keypresses. IXEAU is designed to improve user productivity across different platforms and devices.

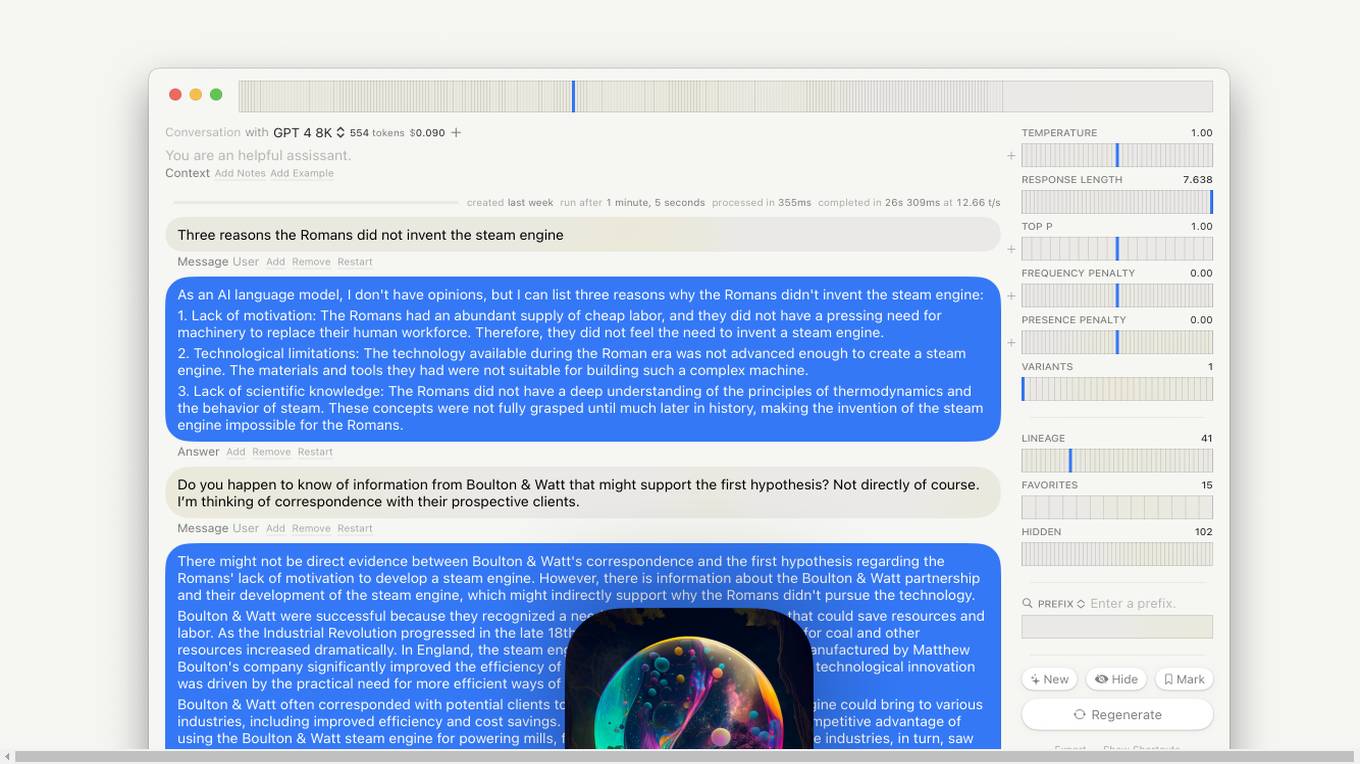

Lore macOS GPT-LLM Playground

Lore macOS GPT-LLM Playground is an AI tool designed for macOS users. Its listed features are Multi-Model, Time Travel, Versioning, Combinatorial Runs, Variants, Full-Text Search, Model-Cost Aware, API & Token Stats, Custom Endpoints, Local Models, and Tables. The interface also offers Syntax, LaTeX, Notes, Export, Shortcuts, Vim Mode, and Sandbox. The tool is built with Cocoa, SwiftUI, and SQLite, emphasizes privacy, and provides support and feedback channels.

Iterra AI

Iterra AI is an AI-powered platform that lets users turn app ideas into working apps without writing code. Users describe their app concept, and the AI instantly generates real SwiftUI code, provides a live preview, and allows for easy customization in Xcode. Iterra AI aims to address the challenges of traditional app building and make professional iOS app creation accessible to everyone.

0 - Open Source Tools

8 - OpenAI GPTs

Glowby

Helps you build anything from websites to games and apps using JavaScript, SwiftUI, Kotlin, Unity, Flutter, and Glowbom AI Extensions.