chatgpt-shell

A multi-llm Emacs shell (ChatGPT, Claude, DeepSeek, Gemini, Kagi, Ollama, Perplexity) + editing integrations

Stars: 1008

chatgpt-shell is a multi-LLM Emacs shell that allows users to interact with various language models. Users can swap LLM providers, compose queries, execute source blocks, and perform vision experiments. The tool supports customization and offers features like inline modifications, executing snippets, and navigating source blocks. Users can support the project via GitHub Sponsors and contribute to feature requests and bug reports.

README:

👉 [[https://github.com/sponsors/xenodium][Support this work via GitHub Sponsors]]

[[https://stable.melpa.org/#/chatgpt-shell][file:https://stable.melpa.org/packages/chatgpt-shell-badge.svg]] [[https://melpa.org/#/chatgpt-shell][file:https://melpa.org/packages/chatgpt-shell-badge.svg]]

- chatgpt-shell

A multi-llm Emacs [[https://www.gnu.org/software/emacs/manual/html_node/emacs/Shell-Prompts.html][comint]] shell, by [[https://lmno.lol/alvaro][me]].

- Related packages

- [[https://github.com/xenodium/ob-chatgpt-shell][ob-chatgpt-shell]]: Evaluate chatgpt-shell blocks as Emacs org babel blocks.

- [[https://github.com/xenodium/ob-dall-e-shell][ob-dall-e-shell]]: Evaluate DALL-E shell blocks as Emacs org babel blocks.

- [[https://github.com/xenodium/dall-e-shell][dall-e-shell]]: An Emacs shell for OpenAI's DALL-E.

- [[https://github.com/xenodium/shell-maker][shell-maker]]: Create Emacs shells backed by either local or cloud services.

- News

chatgpt-shell goes multi model 🎉

Please sponsor the project to make development + support sustainable.

| Provider   | Model         | Supported | Setup                                   |
|------------+---------------+-----------+-----------------------------------------|
| Anthropic  | Claude        | Yes       | Set =chatgpt-shell-anthropic-key=       |
| Deepseek   | Chat/Reasoner | New 💫    | Set =chatgpt-shell-deepseek-key=        |
| Google     | Gemini        | Yes       | Set =chatgpt-shell-google-key=          |
| Kagi       | Summarizer    | Yes       | Set =chatgpt-shell-kagi-key=            |
| Ollama     | Llama         | Yes       | Install [[https://ollama.com/][Ollama]] |
| OpenAI     | ChatGPT       | Yes       | Set =chatgpt-shell-openai-key=          |
| OpenRouter | Various       | Yes       | Set =chatgpt-shell-openrouter-key=      |
| Perplexity | Llama Sonar   | Yes       | Set =chatgpt-shell-perplexity-key=      |

Note: With the exception of [[https://ollama.com/][Ollama]], you typically have to pay the cloud services for API access. Please check with each respective LLM service.

My favourite model is missing.

| [[https://github.com/xenodium/chatgpt-shell/issues][File a feature request]] | [[https://github.com/sponsors/xenodium][sponsor the work]] |

** A familiar shell

chatgpt-shell is a [[https://www.gnu.org/software/emacs/manual/html_node/emacs/Shell-Prompts.html][comint]] shell. Bring your favourite Emacs shell flows along.

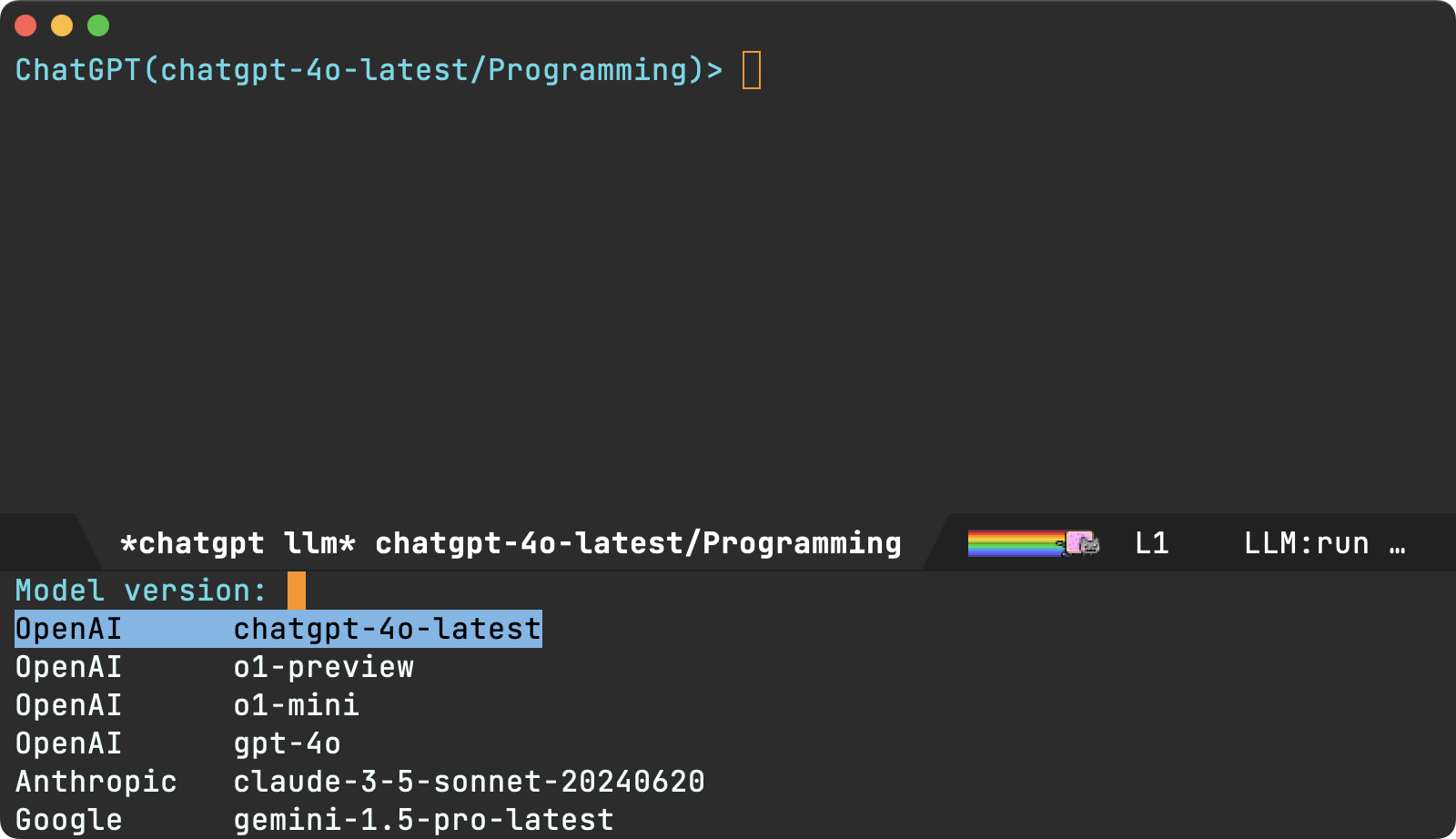

** Swap models

One shell to query all. Swap LLM provider (via =M-x chatgpt-shell-swap-model=) and continue with your familiar flow.

** A shell hybrid

=chatgpt-shell= includes a compose buffer experience. This is my favourite and most frequently used mechanism to interact with LLMs.

For example, select a region and invoke =M-x chatgpt-shell-prompt-compose= (=C-c C-e= is my preferred binding), and an editable buffer automatically copies the region and enables crafting a more thorough query. When ready, submit with the familiar =C-c C-c= binding. The buffer automatically becomes read-only and enables single-character bindings.
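A minimal sketch of the binding mentioned above, assuming you want =C-c C-e= available in every buffer and that the package's autoloads are in place (as they are after a package-manager install). Many major modes define their own =C-c C-e=, which takes precedence over the global map, so treat the key choice as an assumption and pick another if it clashes.

#+begin_src emacs-lisp
  ;; Assumption: a global binding is wanted. Adjust the key if a major
  ;; mode you use already claims C-c C-e.
  (global-set-key (kbd "C-c C-e") #'chatgpt-shell-prompt-compose)
#+end_src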

*** Navigation: n/p (or TAB/shift-TAB)

Navigate through source blocks (including previous submissions in history). Source blocks are automatically selected.

*** Reply: r

Reply with follow-up requests using the =r= binding.

*** Give me more: m

Want to ask for more of the same data? Press =m= to request more of it. This is handy to follow up on any kind of list (suggestion, candidates, results, etc).

*** Quick quick: q

I'm a big fan of quickly disposing of Emacs buffers with the =q= binding. chatgpt-shell compose buffers are no exception.

*** Request entire snippets: e

LLM being lazy and returning partial code? Press =e= to request entire snippet.

** Confirm inline mods (via diffs)

Request inline modifications, with explicit confirmation before accepting.

** Execute snippets (a la [[https://orgmode.org/worg/org-contrib/babel/intro.html][org babel]])

Both the shell and the compose buffers enable users to execute source blocks via =C-c C-c=, leveraging [[https://orgmode.org/worg/org-contrib/babel/intro.html][org babel]].

** Vision experiments

I've been experimenting with image queries (currently ChatGPT only, please [[https://github.com/sponsors/xenodium][sponsor]] to help bring support for others).

Below is a handy integration to extract Japanese vocabulary. There's also a generic image descriptor available via =M-x chatgpt-shell-describe-image= that works on any Emacs image (via dired, image buffer, point on image, or selecting a desktop region).

- Support this effort

If you're finding =chatgpt-shell= useful, help make the project sustainable and consider ✨[[https://github.com/sponsors/xenodium][sponsoring]]✨.

=chatgpt-shell= is in development. Please report issues or send [[https://github.com/xenodium/chatgpt-shell/pulls][pull requests]] for improvements.

- Like this package? Tell me about it 💙

Finding it useful? Like the package? I'd love to hear from you. Get in touch ([[https://indieweb.social/@xenodium][Mastodon]] / [[https://twitter.com/xenodium][Twitter]] / [[https://bsky.app/profile/xenodium.bsky.social][Bluesky]] / [[https://www.reddit.com/user/xenodium][Reddit]] / [[mailto:me__AT__xenodium.com][Email]]).

- Install

** MELPA

Via [[https://github.com/jwiegley/use-package][use-package]], you can install with =:ensure t=.

#+begin_src emacs-lisp :lexical no
  (use-package chatgpt-shell
    :ensure t
    :custom
    ((chatgpt-shell-openai-key
      (lambda ()
        (auth-source-pass-get 'secret "openai-key")))))
#+end_src

** Straight

#+begin_src emacs-lisp :lexical no
  (use-package shell-maker
    :straight (:type git :host github :repo "xenodium/shell-maker" :files ("shell-maker*.el")))

  (use-package chatgpt-shell
    :straight (:type git :host github :repo "xenodium/chatgpt-shell" :files ("chatgpt-shell*.el"))
    :custom
    ((chatgpt-shell-openai-key
      (lambda ()
        (auth-source-pass-get 'secret "openai-key")))))
#+end_src

- Set default model

#+begin_src emacs-lisp :lexical no
  (setq chatgpt-shell-model-version "llama3.2")
#+end_src

- Set API Keys

You will first need to get an API key for each of the various public LLM endpoints you want to interact with.

| Service    | Model(s)      | Link: get an API Key |
|------------+---------------+----------------------|
| OpenAI     | ChatGPT       | [[https://platform.openai.com/account/api-keys][Get an API Key]] |
| Anthropic  | Claude        | [[https://console.anthropic.com/dashboard][Visit the Dashboard]] |
| Deepseek   | Chat/Reasoner | [[https://platform.deepseek.com/api_keys][Get an API Key]] |
| Google     | Gemini        | [[https://aistudio.google.com/app/apikey][Get an API Key]] |
| Kagi       | Summarizer    | [[https://kagi.com/settings?p=api][Get an API Key]] |
| OpenRouter | Various       | [[https://openrouter.ai/settings/keys][Manage your API keys]] |
| Perplexity | Llama Sonar   | [[https://docs.perplexity.ai/guides/getting-started#generate-an-api-key][Get an API Key]] |

** Provide the API Key to ChatGPT via a function

You can define a function that chatgpt-shell invokes to get the API key. The following example is for OpenAI; use a similar approach for other services.

#+begin_src emacs-lisp
  ;; if you are using the "pass" password manager
  (setq chatgpt-shell-openai-key
        (lambda ()
          ;; (auth-source-pass-get 'secret "openai-key") ; alternative using pass support in auth-sources
          (nth 0 (process-lines "pass" "show" "openai-key"))))

  ;; or if using auth-sources, e.g., so the file ~/.authinfo has this line:
  ;;  machine api.openai.com password OPENAI_KEY
  (setq chatgpt-shell-openai-key
        (auth-source-pick-first-password :host "api.openai.com"))

  ;; or same as previous but lazy loaded (prevents unexpected passphrase prompt)
  (setq chatgpt-shell-openai-key
        (lambda ()
          (auth-source-pick-first-password :host "api.openai.com")))
#+end_src

** Set the appropriate variable Manually/Interactively

=M-x set-variable chatgpt-shell-anthropic-key=

=M-x set-variable chatgpt-shell-deepseek-key=

=M-x set-variable chatgpt-shell-google-key=

...

** Set the appropriate variable programmatically, in your Emacs init file

#+begin_src emacs-lisp
  ;; set anthropic key from a string
  (setq chatgpt-shell-anthropic-key "my anthropic key")
  ;; set OpenAI key from the environment
  (setq chatgpt-shell-openai-key (getenv "OPENAI_API_KEY"))
#+end_src
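
Since each key variable accepts either a string or a function that loads and returns one, the two approaches can be combined. The sketch below checks an environment variable first and falls back to auth-sources. The =ANTHROPIC_API_KEY= variable name and the =api.anthropic.com= host entry are assumptions about your own setup, not names the package requires.

#+begin_src emacs-lisp
  ;; Evaluated when chatgpt-shell invokes the function, not at init time,
  ;; which avoids an unexpected passphrase prompt during startup.
  (setq chatgpt-shell-anthropic-key
        (lambda ()
          (or (getenv "ANTHROPIC_API_KEY") ; assumed environment variable name
              ;; assumed ~/.authinfo entry:
              ;;  machine api.anthropic.com password ANTHROPIC_KEY
              (auth-source-pick-first-password :host "api.anthropic.com"))))
#+end_src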

- ChatGPT through proxy service

If you use ChatGPT through a proxy service such as "https://api.chatgpt.domain.com", set options like the following:

#+begin_src emacs-lisp :lexical no
  (use-package chatgpt-shell
    :ensure t
    :custom
    ((chatgpt-shell-api-url-base "https://api.chatgpt.domain.com")
     (chatgpt-shell-openai-key
      (lambda ()
        ;; Here the openai-key should be the proxy service key.
        (auth-source-pass-get 'secret "openai-key")))))
#+end_src

If your proxy service's API path differs from the default OpenAI ChatGPT path (=/v1/chat/completions=), customize the =chatgpt-shell-api-url-path= option.
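
For instance, a proxy that serves the chat endpoint under a different path might be configured as sketched here. The path shown is hypothetical; substitute whatever your proxy service documents.

#+begin_src emacs-lisp
  ;; Hypothetical path, for illustration only.
  (setq chatgpt-shell-api-url-base "https://api.chatgpt.domain.com"
        chatgpt-shell-api-url-path "/proxy/v1/chat/completions")
#+end_src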

- Using ChatGPT through HTTP(S) proxy

Behind the scenes, chatgpt-shell uses =curl= to send requests to the OpenAI server. If you use ChatGPT through an HTTP proxy (for example, you are on a corporate network where an HTTP proxy shields the network from the internet), you need to tell =curl= to use the proxy via the curl option =-x http://your_proxy=. For this, use =chatgpt-shell-proxy=.

For example, if you want curl =-x= and =http://your_proxy=, set =chatgpt-shell-proxy= to "=http://your_proxy=".
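
In an init file, that setting could look like the sketch below. The address is the same placeholder used in the text, not a real proxy.

#+begin_src emacs-lisp
  ;; Placeholder address; replace it with your proxy's URL.
  (setq chatgpt-shell-proxy "http://your_proxy")
#+end_src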

- Launch

Launch with =M-x chatgpt-shell=.

Note: =M-x chatgpt-shell= keeps a single shell around, refocusing if needed. To launch multiple shells, use =C-u M-x chatgpt-shell=.
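
If you open extra shells often, a small wrapper command can stand in for the prefix argument. This is a sketch only; =my/chatgpt-shell-new= is a hypothetical helper name and not part of the package.

#+begin_src emacs-lisp
  ;; Hypothetical helper: behaves like C-u M-x chatgpt-shell.
  (defun my/chatgpt-shell-new ()
    "Start an additional chatgpt-shell, as if invoked with a prefix argument."
    (interactive)
    (let ((current-prefix-arg '(4)))
      (call-interactively #'chatgpt-shell)))
#+end_src

Invoke it with =M-x my/chatgpt-shell-new=, or bind it to a key of your choice.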

- Clear buffer

Type =clear= as a prompt.

#+begin_src sh
  ChatGPT> clear
#+end_src

Alternatively, use either =M-x chatgpt-shell-clear-buffer= or =M-x comint-clear-buffer=.

- Saving and restoring

Save with =M-x chatgpt-shell-save-session-transcript= and restore with =M-x chatgpt-shell-restore-session-from-transcript=.

Some related settings live in =shell-maker=, such as =shell-maker-transcript-default-path= and =shell-maker-forget-file-after-clear=.
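
A possible way to set these two variables is sketched below. The value types are assumptions inferred from the variable names (a directory path and a boolean), and the directory itself is a made-up example, so check =C-h v= for each variable before relying on this.

#+begin_src emacs-lisp
  ;; Assumption: a directory path is accepted here. The directory name is
  ;; an arbitrary example.
  (setq shell-maker-transcript-default-path
        (expand-file-name "chatgpt-transcripts/" user-emacs-directory))

  ;; Assumption: a non-nil value makes the shell forget its transcript file
  ;; after the buffer is cleared.
  (setq shell-maker-forget-file-after-clear t)
#+end_src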

- Streaming

=chatgpt-shell= can either wait until the entire response is received before displaying, or it can progressively display as chunks arrive (streaming).

Streaming is enabled by default. =(setq chatgpt-shell-streaming nil)= to disable it.

- chatgpt-shell customizations

#+BEGIN_SRC emacs-lisp :results table :colnames '("Custom variable" "Description") :exports results
  (let ((rows))
    (mapatoms
     (lambda (symbol)
       (when (and (string-match "^chatgpt-shell"
                                (symbol-name symbol))
                  (custom-variable-p symbol))
         (push `(,symbol
                 ,(car
                   (split-string
                    (or (documentation-property symbol 'variable-documentation)
                        (get (indirect-variable symbol)
                             'variable-documentation)
                        (get symbol 'variable-documentation)
                        "")
                    "\n")))
               rows))))
    rows)
#+END_SRC

#+RESULTS:
| Custom variable | Description |
|---+---|
| chatgpt-shell-google-api-url-base | Google API’s base URL. |
| chatgpt-shell-deepseek-api-url-base | DeepSeek API’s base URL. |
| chatgpt-shell-perplexity-key | Perplexity API key as a string or a function that loads and returns it. |
| chatgpt-shell-deepseek-key | DeepSeek key as a string or a function that loads and returns it. |
| chatgpt-shell-prompt-header-write-git-commit | Prompt header of ‘git-commit‘. |
| chatgpt-shell-highlight-blocks | Whether or not to highlight source blocks. |
| chatgpt-shell-display-function | Function to display the shell. Set to ‘display-buffer’ or custom function. |
| chatgpt-shell-prompt-header-generate-unit-test | Prompt header of ‘generate-unit-test‘. |
| chatgpt-shell-prompt-header-refactor-code | Prompt header of ‘refactor-code‘. |
| chatgpt-shell-prompt-header-proofread-region | Prompt header used by ‘chatgpt-shell-proofread-region‘. |
| chatgpt-shell-welcome-function | Function returning welcome message or nil for no message. |
| chatgpt-shell-perplexity-api-url-base | Perplexity API’s base URL. |
| chatgpt-shell-prompt-query-response-style | Determines the prompt style when invoking from other buffers. |
| chatgpt-shell-model-version | The active model version as either a string. |
| chatgpt-shell-kagi-key | Kagi API key as a string or a function that loads and returns it. |
| chatgpt-shell-logging | Logging disabled by default (slows things down). |
| chatgpt-shell-render-latex | Whether or not to render LaTeX blocks (experimental). |
| chatgpt-shell-api-url-base | OpenAI API’s base URL. |
| chatgpt-shell-google-key | Google API key as a string or a function that loads and returns it. |
| chatgpt-shell-ollama-api-url-base | Ollama API’s base URL. |
| chatgpt-shell-openrouter-key | OpenRouter key as a string or a function that loads and returns it. |
| chatgpt-shell-babel-headers | Additional headers to make babel blocks work. |
| chatgpt-shell--pretty-smerge-mode-hook | Hook run after entering or leaving ‘chatgpt-shell--pretty-smerge-mode’. |
| chatgpt-shell-model-filter | A function that is applied ‘chatgpt-shell-models’ to determine |
| chatgpt-shell-source-block-actions | Block actions for known languages. |
| chatgpt-shell-default-prompts | List of default prompts to choose from. |
| chatgpt-shell-anthropic-key | Anthropic API key as a string or a function that loads and returns it. |
| chatgpt-shell-prompt-header-eshell-summarize-last-command-output | Prompt header of ‘eshell-summarize-last-command-output‘. |
| chatgpt-shell-system-prompt | The system prompt ‘chatgpt-shell-system-prompts’ index. |
| chatgpt-shell-transmitted-context-length | Controls the amount of context provided to chatGPT. |
| chatgpt-shell-root-path | Root path location to store internal shell files. |
| chatgpt-shell-prompt-header-whats-wrong-with-last-command | Prompt header of ‘whats-wrong-with-last-command‘. |
| chatgpt-shell-read-string-function | Function to read strings from user. |
| chatgpt-shell-after-command-functions | Abnormal hook (i.e. with parameters) invoked after each command. |
| chatgpt-shell-system-prompts | List of system prompts to choose from. |
| chatgpt-shell-openai-key | OpenAI key as a string or a function that loads and returns it. |
| chatgpt-shell-proxy | When non-nil, use as a proxy (for example http or socks5). |
| chatgpt-shell-prompt-header-describe-code | Prompt header of ‘describe-code‘. |
| chatgpt-shell-insert-dividers | Whether or not to display a divider between requests and responses. |
| chatgpt-shell-models | The list of supported models to swap from. |
| chatgpt-shell-openrouter-api-url-base | OpenRouter API’s base URL. |
| chatgpt-shell-language-mapping | Maps external language names to Emacs names. |
| chatgpt-shell-prompt-compose-view-mode-hook | Hook run after entering or leaving ‘chatgpt-shell-prompt-compose-view-mode’. |
| chatgpt-shell-streaming | Whether or not to stream ChatGPT responses (show chunks as they arrive). |
| chatgpt-shell-anthropic-api-url-base | Anthropic API’s base URL. |
| chatgpt-shell-model-temperature | What sampling temperature to use, between 0 and 2, or nil. |
| chatgpt-shell-request-timeout | How long to wait for a request to time out in seconds. |
| chatgpt-shell-kagi-api-url-base | Kagi API’s base URL. |

There are more. Browse via =M-x set-variable=
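
As a sketch, a few of the variables from the table could be set together in an init file. The values are illustrative picks that fit the descriptions in the table; they are not the package defaults or recommendations.

#+begin_src emacs-lisp
  ;; Illustrative values only.
  (setq chatgpt-shell-model-temperature 0.7 ; between 0 and 2, or nil
        chatgpt-shell-request-timeout 120   ; seconds before a request times out
        chatgpt-shell-insert-dividers t     ; divider between requests and responses
        chatgpt-shell-highlight-blocks t)   ; highlight source blocks
#+end_src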

** =chatgpt-shell-display-function= (with custom function)

If you'd prefer your own custom display function:

#+begin_src emacs-lisp :lexical no
  (setq chatgpt-shell-display-function #'my/chatgpt-shell-frame)

  (defun my/chatgpt-shell-frame (bname)
    (let ((cur-f (selected-frame))
          (f (my/find-or-make-frame "chatgpt")))
      (select-frame-by-name "chatgpt")
      (pop-to-buffer-same-window bname)
      (set-frame-position f (/ (display-pixel-width) 2) 0)
      (set-frame-height f (frame-height cur-f))
      (set-frame-width f (frame-width cur-f) 1)))

  (defun my/find-or-make-frame (fname)
    (condition-case
        nil
        (select-frame-by-name fname)
      (error (make-frame `((name . ,fname))))))
#+end_src

Thanks to [[https://github.com/tuhdo][tuhdo]] for the custom display function.

- chatgpt-shell commands

#+BEGIN_SRC emacs-lisp :results table :colnames '("Binding" "Command" "Description") :exports results
  (let ((rows))
    (mapatoms
     (lambda (symbol)
       (when (and (string-match "^chatgpt-shell"
                                (symbol-name symbol))
                  (commandp symbol))
         (push `(,(string-join
                   (seq-filter
                    (lambda (symbol)
                      (not (string-match "menu" symbol)))
                    (mapcar
                     (lambda (keys)
                       (key-description keys))
                     (or
                      (where-is-internal
                       (symbol-function symbol)
                       comint-mode-map
                       nil nil (command-remapping 'comint-next-input))
                      (where-is-internal
                       symbol chatgpt-shell-mode-map nil nil (command-remapping symbol))
                      (where-is-internal
                       (symbol-function symbol)
                       chatgpt-shell-mode-map nil nil (command-remapping symbol)))))
                   " or ")
                 ,(symbol-name symbol)
                 ,(car
                   (split-string
                    (or (documentation symbol t) "")
                    "\n")))
               rows))))
    rows)
#+END_SRC

#+RESULTS:
| Binding | Command | Description |
|---+---+---|
| | chatgpt-shell-japanese-lookup | Look Japanese term up. |
| | chatgpt-shell-next-source-block | Move point to the next source block's body. |
| | chatgpt-shell-prompt-compose-request-entire-snippet | If the response code is incomplete, request the entire snippet. |
| | chatgpt-shell-prompt-compose-request-more | Request more data. This is useful if you already requested examples. |
| | chatgpt-shell-execute-babel-block-action-at-point | Execute block as org babel. |
| C-c C-s | chatgpt-shell-swap-system-prompt | Swap system prompt from `chatgpt-shell-system-prompts'. |
| | chatgpt-shell-system-prompts-menu | ChatGPT |
| | chatgpt-shell-prompt-compose-swap-model-version | Swap the compose buffer's model version. |
| | chatgpt-shell-describe-code | Describe code from region using ChatGPT. |
| C-<up> or M-p | chatgpt-shell-previous-input | Cycle backwards through input history, saving input. |
| | chatgpt-shell-previous-link | Move point to the previous link. |
| | chatgpt-shell-prompt-compose-next-item | Jump to and select next item (request, response, block, link, interaction). |
| C-c C-v | chatgpt-shell-swap-model | Swap model version from `chatgpt-shell-models'. |
| C-x C-s | chatgpt-shell-save-session-transcript | Save shell transcript to file. |
| | chatgpt-shell-proofread-region | Proofread text from region using ChatGPT. |
| | chatgpt-shell-prompt-compose-quit-and-close-frame | Quit compose and close frame if it's the last window. |
| | chatgpt-shell-prompt-compose-other-buffer | Jump to the shell buffer (compose's other buffer). |
| | chatgpt-shell | Start a ChatGPT shell interactive command. |
| RET | chatgpt-shell-submit | Submit current input. |
| | chatgpt-shell-prompt-compose-swap-system-prompt | Swap the compose buffer's system prompt. |
| | chatgpt-shell-describe-image | Request OpenAI to describe image. |
| | chatgpt-shell-prompt-compose-search-history | Search prompt history, select, and insert to current compose buffer. |
| | chatgpt-shell-prompt-compose-previous-history | Insert previous prompt from history into compose buffer. |
| | chatgpt-shell-delete-interaction-at-point | Delete interaction (request and response) at point. |
| | chatgpt-shell-refresh-rendering | Refresh markdown rendering by re-applying to entire buffer. |
| | chatgpt-shell-prompt-compose-insert-block-at-point | Insert block at point at last known location. |
| | chatgpt-shell-explain-code | Describe code from region using ChatGPT. |
| | chatgpt-shell-execute-block-action-at-point | Execute block at point. |
| | chatgpt-shell-load-awesome-prompts | Load `chatgpt-shell-system-prompts' from awesome-chatgpt-prompts. |
| | chatgpt-shell-write-git-commit | Write commit from region using ChatGPT. |
| | chatgpt-shell-restore-session-from-transcript | Restore session from file transcript (or HISTORY). |
| | chatgpt-shell-prompt-compose-next-interaction | Show next interaction (request / response). |
| <backtab> or C-c C-p | chatgpt-shell-previous-item | Go to previous item. |
| | chatgpt-shell-fix-error-at-point | Fixes flymake error at point. |
| | chatgpt-shell-next-link | Move point to the next link. |
| | chatgpt-shell-prompt-appending-kill-ring | Make a ChatGPT request from the minibuffer appending kill ring. |
| | chatgpt-shell-ollama-load-models | Query ollama for the locally installed models and add them to |
| C-<down> or M-n | chatgpt-shell-next-input | Cycle forwards through input history. |
| | chatgpt-shell-prompt-compose-view-mode | Like view-mode, but extended for ChatGPT Compose. |
| | chatgpt-shell-clear-buffer | Clear the current shell buffer. |
| | chatgpt-shell-edit-block-at-point | Execute block at point. |
| <tab> or C-c C-n | chatgpt-shell-next-item | Go to next item. |
| | chatgpt-shell-prompt-compose-send-buffer | Send compose buffer content to shell for processing. |
| C-c C-e | chatgpt-shell-prompt-compose | Compose and send prompt from a dedicated buffer. |
| | chatgpt-shell-rename-buffer | Rename current shell buffer. |
| | chatgpt-shell-remove-block-overlays | Remove block overlays. Handy for renaming blocks. |
| | chatgpt-shell-send-region | Send region to ChatGPT. |
| | chatgpt-shell-send-and-review-region | Send region to ChatGPT, review before submitting. |
| C-M-h | chatgpt-shell-mark-at-point-dwim | Mark source block if at point. Mark all output otherwise. |
| | chatgpt-shell--pretty-smerge-mode | Minor mode to display overlays for conflict markers. |
| | chatgpt-shell-mark-block | Mark current block in compose buffer. |
| | chatgpt-shell-prompt-compose-reply | Reply as a follow-up and compose another query. |
| | chatgpt-shell-prompt-compose-refresh | Refresh compose buffer content with current item from shell. |
| | chatgpt-shell-set-as-primary-shell | Set as primary shell when there are multiple sessions. |
| | chatgpt-shell-rename-block-at-point | Rename block at point (perhaps a different language). |
| | chatgpt-shell-quick-insert | Request from minibuffer and insert response into current buffer. |
| | chatgpt-shell-reload-default-models | Reload all available models. |
| S-<return> | chatgpt-shell-newline | Insert a newline, and move to left margin of the new line. |
| | chatgpt-shell-generate-unit-test | Generate unit-test for the code from region using ChatGPT. |
| | chatgpt-shell-prompt-compose-previous-item | Jump to and select previous item (request, response, block, link, interaction). |
| | chatgpt-shell-prompt-compose-next-history | Insert next prompt from history into compose buffer. |
| C-c C-c | chatgpt-shell-ctrl-c-ctrl-c | If point in source block, execute it. Otherwise interrupt. |
| | chatgpt-shell-eshell-summarize-last-command-output | Ask ChatGPT to summarize the last command output. |
| M-r | chatgpt-shell-search-history | Search previous input history. |
| | chatgpt-shell-mode | Major mode for ChatGPT shell. |
| | chatgpt-shell-prompt-compose-mode | Major mode for composing ChatGPT prompts from a dedicated buffer. |
| | chatgpt-shell-previous-source-block | Move point to the previous source block's body. |
| | chatgpt-shell-prompt | Make a ChatGPT request from the minibuffer. |
| | chatgpt-shell-japanese-ocr-lookup | Select a region of the screen to OCR and look up in Japanese. |
| | chatgpt-shell-refactor-code | Refactor code from region using ChatGPT. |
| | chatgpt-shell-view-block-at-point | View code block at point (using language's major mode). |
| | chatgpt-shell-japanese-audio-lookup | Transcribe audio at current file (buffer or `dired') and look up in Japanese. |
| | chatgpt-shell-eshell-whats-wrong-with-last-command | Ask ChatGPT what's wrong with the last eshell command. |
| | chatgpt-shell-prompt-compose-cancel | Cancel and close compose buffer. |
| | chatgpt-shell-prompt-compose-retry | Retry sending request to shell. |
| | chatgpt-shell-version | Show `chatgpt-shell' mode version. |
| | chatgpt-shell-prompt-compose-previous-interaction | Show previous interaction (request / response). |
| | chatgpt-shell-interrupt | Interrupt `chatgpt-shell' from any buffer. |
| | chatgpt-shell-view-at-point | View prompt and output at point in a separate buffer. |

Browse all available via =M-x=.
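
Most commands in the table have no default binding. One way to give frequently used ones a key is sketched below. The key choices are arbitrary examples, not package defaults, and the global bindings assume the package's autoloads are in place.

#+begin_src emacs-lisp
  ;; Arbitrary example keys; pick whatever is free in your setup.
  (global-set-key (kbd "C-c g p") #'chatgpt-shell-proofread-region)
  (global-set-key (kbd "C-c g i") #'chatgpt-shell-quick-insert)

  ;; Shell-local binding, applied once the package has loaded. It replaces
  ;; whatever the key previously did inside chatgpt-shell buffers.
  (with-eval-after-load 'chatgpt-shell
    (define-key chatgpt-shell-mode-map (kbd "C-c C-l") #'chatgpt-shell-clear-buffer))
#+end_src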

- Feature requests

- Please go through this README to see if the feature is already supported.

- Need custom behaviour? Check out existing [[https://github.com/xenodium/chatgpt-shell/issues?q=is%3Aissue+][issues/feature requests]]. You may find solutions in discussions.

- Pull requests

Pull requests are super welcome. Please [[https://github.com/xenodium/chatgpt-shell/issues/new][reach out]] before getting started to make sure we're not duplicating effort. Also [[https://github.com/xenodium/chatgpt-shell/][search existing discussions]].

- Reporting bugs

** Setup isn't working?

Please share the entire snippet you've used to set =chatgpt-shell= up (but redact your key). Share any errors you encountered. Read on for sharing additional details.

** Found runtime/elisp errors?

Please enable =M-x toggle-debug-on-error=, reproduce the error, and share the stack trace.

** Found unexpected behaviour?

Please enable logging =(setq chatgpt-shell-logging t)= and share the content of the =chatgpt-log= buffer in the bug report.

** Babel issues?

Please also share the entire org snippet.

- Support my work

👉 Find my work useful? [[https://github.com/sponsors/xenodium][Support this work via GitHub Sponsors]] or [[https://apps.apple.com/us/developer/xenodium-ltd/id304568690][buy my iOS apps]].

- My other utilities, packages, apps, writing...

- [[https://xenodium.com/][Blog (xenodium.com)]]

- [[https://lmno.lol/alvaro][Blog (lmno.lol/alvaro)]]

- [[https://plainorg.com][Plain Org]] (iOS)

- [[https://flathabits.com][Flat Habits]] (iOS)

- [[https://apps.apple.com/us/app/scratch/id1671420139][Scratch]] (iOS)

- [[https://github.com/xenodium/macosrec][macosrec]] (macOS)

- [[https://apps.apple.com/us/app/fresh-eyes/id6480411697?mt=12][Fresh Eyes]] (macOS)

- [[https://github.com/xenodium/dwim-shell-command][dwim-shell-command]] (Emacs)

- [[https://github.com/xenodium/company-org-block][company-org-block]] (Emacs)

- [[https://github.com/xenodium/org-block-capf][org-block-capf]] (Emacs)

- [[https://github.com/xenodium/ob-swiftui][ob-swiftui]] (Emacs)

- [[https://github.com/xenodium/chatgpt-shell][chatgpt-shell]] (Emacs)

- [[https://github.com/xenodium/ready-player][ready-player]] (Emacs)

- [[https://github.com/xenodium/sqlite-mode-extras][sqlite-mode-extras]]

- [[https://github.com/xenodium/ob-chatgpt-shell][ob-chatgpt-shell]] (Emacs)

- [[https://github.com/xenodium/dall-e-shell][dall-e-shell]] (Emacs)

- [[https://github.com/xenodium/ob-dall-e-shell][ob-dall-e-shell]] (Emacs)

- [[https://github.com/xenodium/shell-maker][shell-maker]] (Emacs)

- Contributors


Made with [[https://contrib.rocks][contrib.rocks]].

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for chatgpt-shell

Similar Open Source Tools

chatgpt-shell

chatgpt-shell is a multi-LLM Emacs shell that allows users to interact with various language models. Users can swap LLM providers, compose queries, execute source blocks, and perform vision experiments. The tool supports customization and offers features like inline modifications, executing snippets, and navigating source blocks. Users can support the project via GitHub Sponsors and contribute to feature requests and bug reports.

TensorRT-Model-Optimizer

The NVIDIA TensorRT Model Optimizer is a library designed to quantize and compress deep learning models for optimized inference on GPUs. It offers state-of-the-art model optimization techniques including quantization and sparsity to reduce inference costs for generative AI models. Users can easily stack different optimization techniques to produce quantized checkpoints from torch or ONNX models. The quantized checkpoints are ready for deployment in inference frameworks like TensorRT-LLM or TensorRT, with planned integrations for NVIDIA NeMo and Megatron-LM. The tool also supports 8-bit quantization with Stable Diffusion for enterprise users on NVIDIA NIM. Model Optimizer is available for free on NVIDIA PyPI, and this repository serves as a platform for sharing examples, GPU-optimized recipes, and collecting community feedback.

Awesome-LLM

Awesome-LLM is a curated list of resources related to large language models, focusing on papers, projects, frameworks, tools, tutorials, courses, opinions, and other useful resources in the field. It covers trending LLM projects, milestone papers, other papers, open LLM projects, LLM training frameworks, LLM evaluation frameworks, tools for deploying LLM, prompting libraries & tools, tutorials, courses, books, and opinions. The repository provides a comprehensive overview of the latest advancements and resources in the field of large language models.

UMOE-Scaling-Unified-Multimodal-LLMs

Uni-MoE is a MoE-based unified multimodal model that can handle diverse modalities including audio, speech, image, text, and video. The project focuses on scaling Unified Multimodal LLMs with a Mixture of Experts framework. It offers enhanced functionality for training across multiple nodes and GPUs, as well as parallel processing at both the expert and modality levels. The model architecture involves three training stages: building connectors for multimodal understanding, developing modality-specific experts, and incorporating multiple trained experts into LLMs using the LoRA technique on mixed multimodal data. The tool provides instructions for installation, weights organization, inference, training, and evaluation on various datasets.

InternLM

InternLM is a powerful language model series with features such as 200K context window for long-context tasks, outstanding comprehensive performance in reasoning, math, code, chat experience, instruction following, and creative writing, code interpreter & data analysis capabilities, and stronger tool utilization capabilities. It offers models in sizes of 7B and 20B, suitable for research and complex scenarios. The models are recommended for various applications and exhibit better performance than previous generations. InternLM models may match or surpass other open-source models like ChatGPT. The tool has been evaluated on various datasets and has shown superior performance in multiple tasks. It requires Python >= 3.8, PyTorch >= 1.12.0, and Transformers >= 4.34 for usage. InternLM can be used for tasks like chat, agent applications, fine-tuning, deployment, and long-context inference.

VideoLLaMA2

VideoLLaMA 2 is a project focused on advancing spatial-temporal modeling and audio understanding in video-LLMs. It provides tools for multi-choice video QA, open-ended video QA, and video captioning. The project offers model zoo with different configurations for visual encoder and language decoder. It includes training and evaluation guides, as well as inference capabilities for video and image processing. The project also features a demo setup for running a video-based Large Language Model web demonstration.

casibase

Casibase is an open-source AI LangChain-like RAG (Retrieval-Augmented Generation) knowledge database with web UI and Enterprise SSO, supports OpenAI, Azure, LLaMA, Google Gemini, HuggingFace, Claude, Grok, etc.

LLaVA-pp

This repository, LLaVA++, extends the visual capabilities of the LLaVA 1.5 model by incorporating the latest LLMs, Phi-3 Mini Instruct 3.8B, and LLaMA-3 Instruct 8B. It provides various models for instruction-following LMMS and academic-task-oriented datasets, along with training scripts for Phi-3-V and LLaMA-3-V. The repository also includes installation instructions and acknowledgments to related open-source contributions.

supabase

Supabase is an open source Firebase alternative that provides a wide range of features including a hosted Postgres database, authentication and authorization, auto-generated APIs, REST and GraphQL support, realtime subscriptions, functions, file storage, AI and vector/embeddings toolkit, and a dashboard. It aims to offer developers a Firebase-like experience using enterprise-grade open source tools.

Pallaidium

Pallaidium is a generative AI movie studio integrated into the Blender video editor. It allows users to AI-generate video, image, and audio from text prompts or existing media files. The tool provides various features such as text to video, text to audio, text to speech, text to image, image to image, image to video, video to video, image to text, and more. It requires a Windows system with a CUDA-supported Nvidia card and at least 6 GB VRAM. Pallaidium offers batch processing capabilities, text to audio conversion using Bark, and various performance optimization tips. Users can install the tool by downloading the add-on and following the installation instructions provided. The tool comes with a set of restrictions on usage, prohibiting the generation of harmful, pornographic, violent, or false content.

pr-agent

PR-Agent is a tool designed to assist in efficiently reviewing and handling pull requests by providing AI feedback and suggestions. It offers various tools such as Review, Describe, Improve, Ask, Update CHANGELOG, and more, with the ability to run them via different interfaces like CLI, PR Comments, or automatically triggering them when a new PR is opened. The tool supports multiple git platforms and models, emphasizing real-life practical usage and modular, customizable tools.

pr-agent

PR-Agent is a tool that helps to efficiently review and handle pull requests by providing AI feedback and suggestions. It supports various commands such as generating PR descriptions, providing code suggestions, answering questions about the PR, and updating the CHANGELOG.md file. PR-Agent can be used via CLI, GitHub Action, GitHub App, or Docker, and supports multiple git providers and models. It emphasizes real-life practical usage, with each tool making a single GPT-4 call for quick and affordable responses. The PR Compression strategy enables effective handling of both short and long PRs, while the JSON prompting strategy allows for modular and customizable tools. PR-Agent Pro, the hosted version by CodiumAI, provides additional benefits such as full management, improved privacy, priority support, and extra features.

osm-ai-helper

OSM-AI-helper is a Blueprint by Mozilla.ai designed to assist users in mapping features in OpenStreetMap using object detection and image segmentation models. It provides tools for identifying and mapping various features, such as swimming pools, in OpenStreetMap. Users can also create custom datasets and fine-tune models for different use cases. The project is licensed under the AGPL-3.0 License and welcomes contributions from the community.

Folo

Folo is a content organization tool that creates a noise-free timeline for users. It allows sharing lists, exploring collections, and distraction-free browsing. Users can subscribe to feeds, curate favorites, and utilize AI-powered features like translation and summaries. Folo supports various content types such as articles, videos, images, and audio. It introduces an ownership economy with $POWER tipping for creators and fosters a community-driven experience. The tool is under active development, welcoming feedback from users and developers.

phoenix

Phoenix is a tool that provides MLOps and LLMOps insights with zero-config observability. It offers a notebook-first experience for monitoring models and LLM applications by providing LLM Traces, LLM Evals, Embedding Analysis, RAG Analysis, and Structured Data Analysis. Users can trace through the execution of LLM applications, evaluate generative models, explore embedding point-clouds, visualize a generative application's search and retrieval process, and statistically analyze structured data. Phoenix is designed to help users troubleshoot problems related to retrieval, tool execution, relevance, toxicity, drift, and performance degradation.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.