kheish

Kheish: A multi-role LLM agent for tasks like code auditing, file searching, and more—seamlessly leveraging RAG and extensible modules.

Stars: 81

Kheish is an open-source, multi-role agent designed for complex tasks that require structured, step-by-step collaboration with Large Language Models (LLMs). It acts as an intelligent agent that can request modules on demand, integrate user feedback, switch between specialized roles, and deliver refined results. By harnessing multiple 'sub-agents' within one framework, Kheish tackles tasks like security audits, file searches, RAG-based exploration, and more.

README:

Kheish is an open-source, multi-role agent designed for complex tasks that require structured, step-by-step collaboration with Large Language Models (LLMs). Rather than a simple orchestrator, Kheish itself acts as an intelligent agent that can request modules on demand, integrate user feedback, switch between specialized roles (Proposer, Reviewer, Validator, Formatter, etc.), and ultimately deliver a refined result. By harnessing multiple “sub-agents” (roles) within one framework, Kheish tackles tasks like security audits, file searches, RAG-based exploration, and more.

Adaptive Role Switching

Kheish functions as a single agent with multiple internal roles:

- Proposer: Generates or updates proposals based on user input and context.
- Reviewer: Critically evaluates proposals, identifying flaws or requesting improvements.
- Validator: Final gatekeeper ensuring correctness and completeness.
- Formatter: Takes a validated solution and converts it into a final presentation format (Markdown, etc.).

These roles can be enabled or disabled depending on the task definition in your YAML file.
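As a rough illustration of role toggling, the Python sketch below picks the active roles out of a parsed task definition. The `roles` key, the `enabled` flag, the fixed role order, and the `active_roles` helper are all assumptions made for this example; they are not taken from Kheish's actual YAML schema or its Rust code.

```python
# Role names come from the README; the ordering and the dict-based
# config shape below are hypothetical.
ROLE_ORDER = ("proposer", "reviewer", "validator", "formatter")


def active_roles(task_definition: dict) -> list:
    """Return the roles marked as enabled, in pipeline order.

    A role that is missing from the definition is treated as disabled.
    """
    roles = task_definition.get("roles", {})
    return [
        name
        for name in ROLE_ORDER
        if roles.get(name, {}).get("enabled", False)
    ]


# Example: a task that skips the Reviewer and has no Formatter entry.
example_task = {
    "roles": {
        "proposer": {"enabled": True},
        "reviewer": {"enabled": False},
        "validator": {"enabled": True},
    }
}
selected = active_roles(example_task)
```

Treating a missing role as disabled is a design choice of this sketch; a real loader might instead reject an incomplete definition.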

On-Demand Module Requests

As an agent, Kheish can spontaneously invoke modules if it needs more information or functionality. Modules include:

- Filesystem (fs): Reading files chunk by chunk, indexing them in RAG.
- Shell (sh): Running limited shell commands with sandboxed allowances.
- RAG (rag): Storing and retrieving large amounts of text via embeddings, enabling chunk-based queries.
- SSH (ssh): Secure remote commands.
- Memories (memories): Storing or recalling data outside the immediate LLM context (long-term memory).
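To make the idea of requesting a module by name more concrete, here is a minimal Python sketch of a dispatcher with two toy modules. The module names `fs` and `sh` come from the list above; everything else (`request_module`, `fs_read_chunks`, `sh_run`, and the `ALLOWED_COMMANDS` allowlist) is hypothetical and does not reflect Kheish's real interfaces.

```python
import shlex
import subprocess

# Hypothetical allowlist standing in for "sandboxed allowances".
ALLOWED_COMMANDS = {"ls", "cat", "grep"}


def fs_read_chunks(path, chunk_size=4096):
    """Read a text file in fixed-size chunks instead of loading it whole."""
    chunks = []
    with open(path, "r", encoding="utf-8") as handle:
        while chunk := handle.read(chunk_size):
            chunks.append(chunk)
    return chunks


def sh_run(command):
    """Run a command only if its executable is on the allowlist."""
    argv = shlex.split(command)
    if not argv or argv[0] not in ALLOWED_COMMANDS:
        raise PermissionError(f"command not allowed: {command!r}")
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    return result.stdout


# Registry mapping module names to handlers.
MODULES = {"fs": fs_read_chunks, "sh": sh_run}


def request_module(name, *args, **kwargs):
    """Dispatch a module request by name, as an agent might do mid-task."""
    if name not in MODULES:
        raise KeyError(f"unknown module: {name}")
    return MODULES[name](*args, **kwargs)
```

A call such as `request_module("fs", "src/main.rs", chunk_size=2048)` would return the file's contents as a list of chunks, while `request_module("sh", "whoami")` would raise `PermissionError` because `whoami` is not on the allowlist.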

Feedback & Iteration

In many tasks, Kheish re-checks and revises its own proposals. For example:

- Proposer suggests a solution.
- Reviewer critiques and possibly requests changes.
- Proposer refines based on feedback.
- Validator delivers final approval or requests more fixes.

This iterative approach raises the quality of the solution step by step.
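The propose, review, refine, validate cycle described above can be sketched as a small loop. This is only an illustration in Python under stated assumptions: the `run_feedback_loop` function, the `max_rounds` limit, and the stub role functions are invented for the example, and in Kheish the roles are backed by LLM calls rather than plain functions.

```python
def run_feedback_loop(task, propose, review, validate, max_rounds=3):
    """Iterate Proposer -> Reviewer -> Validator until a proposal is accepted.

    `propose(task, feedback)` returns a proposal, `review(proposal)` returns
    a feedback string or None when satisfied, and `validate(proposal)`
    returns True or False.
    """
    feedback = None
    for _ in range(max_rounds):
        proposal = propose(task, feedback)
        feedback = review(proposal)
        if feedback is not None:
            continue  # Reviewer asked for changes; Proposer refines next round.
        if validate(proposal):
            return proposal
        feedback = "validator rejected the proposal"
    raise RuntimeError(f"no accepted proposal after {max_rounds} rounds")


# Stub roles, purely for demonstration.
def stub_propose(task, feedback):
    return task if feedback is None else f"{task} + tests"


def stub_review(proposal):
    return None if "tests" in proposal else "add tests"


def stub_validate(proposal):
    return "tests" in proposal


final = run_feedback_loop("draft patch", stub_propose, stub_review, stub_validate)
```

With these stubs the first proposal is sent back by the Reviewer and the second one is accepted. Capping the number of rounds is a choice made for this sketch so that a disagreement between roles cannot loop forever.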

Retrieval-Augmented Generation (RAG)

For large codebases or multi-file contexts, Kheish indexes data in a vector store. It can retrieve relevant snippets later without stuffing the entire text into a single LLM prompt. This agent-based RAG integration reduces token usage and scales to bigger projects.
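As a toy illustration of chunk indexing and retrieval, the Python sketch below stores text chunks and returns those most similar to a query. It substitutes a bag-of-words vector and cosine similarity for real embeddings, so it only shows the shape of the workflow; `chunk_text`, `embed`, and `TinyVectorStore` are hypothetical names and not part of Kheish.

```python
import math
import re
from collections import Counter


def chunk_text(text, size=200):
    """Split text into consecutive chunks of at most `size` characters."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def embed(text):
    """Stand-in embedding: token counts instead of a learned vector."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyVectorStore:
    """In-memory index of (chunk, vector) pairs."""

    def __init__(self):
        self._entries = []

    def add(self, text):
        self._entries.append((text, embed(text)))

    def query(self, question, top_k=2):
        """Return the `top_k` stored chunks most similar to the question."""
        q = embed(question)
        ranked = sorted(self._entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [text for text, _ in ranked[:top_k]]


store = TinyVectorStore()
for snippet in (
    "def hash_password(pw): uses bcrypt for password hashing",
    "def read_config(path): loads yaml settings",
    "def send_email(to): smtp client",
):
    store.add(snippet)
best = store.query("password hashing", top_k=1)
```

Only the retrieved chunks would then be placed in the prompt, which is where the token savings mentioned above come from.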

Single Agent, Many Tasks

Kheish can handle parallel or serial tasks by defining separate YAML configurations or combining them into a single multi-step scenario. Each role or module request is orchestrated internally by Kheish’s logic—no external orchestrator needed.

| Task Name | Description |
|---|---|
| audit-code | A thorough security audit of a codebase, identifying potential vulnerabilities via multi-step agent roles. |
| hf-secret-finder | Requests the Hugging Face API, clones the repositories, and uses trufflehog (via the sh module) to detect secrets. |
| find-in-file | Searches for a secret across multiple files, chunk-reading them with fs. |
| weather-blog-post | Fetches live weather data (via web or a custom module) and writes a humorous blog post about it. |

Reads a YAML Configuration

The configuration defines the agent roles, the modules, the workflow of steps, and the final output instructions.

Builds an Agent

Kheish loads the roles (Proposer, Reviewer, etc.) and hooks in the modules for possible requests.

Executes Steps Internally

The agent:

- Gathers context (files, text).
- Generates or refines a solution (Proposer).
- Seeks feedback (Reviewer) if needed.
- Validates correctness (Validator).
- Formats the final result (Formatter).

Optional RAG Integration

If large data is encountered, the agent chunk-indexes it into a vector store, retrieving relevant pieces via semantic queries.

API Integration

Kheish provides a REST API that allows:

- Task submission and monitoring
- Real-time status updates
- Result retrieval
- Module execution control

Output

Once validated, Kheish saves or exports the final solution. If further feedback is provided, it can loop back into revision mode automatically.

Clone the Repository

git clone https://github.com/yourusername/kheish.git
cd kheish

Install Dependencies

- Rust toolchain (latest stable).
- OPENAI_API_KEY or other relevant environment variables for your chosen LLM provider.

Build

cargo build --release

Run a Task

./target/release/kheish --task-config examples/tasks/audit-code.yaml

Contributions to Kheish are welcome! Feel free to open issues or submit pull requests on GitHub.

Licensed under Apache 2.0.

Alternative AI tools for kheish

Similar Open Source Tools

exllamav3

ExLlamaV3 is an inference library for running local LLMs on modern consumer GPUs. It features a new EXL3 quantization format based on QTIP, flexible tensor-parallel and expert-parallel inference, OpenAI-compatible server via TabbyAPI, continuous dynamic batching, HF Transformers plugin, speculative decoding, multimodal support, and more. The library supports various architectures and aims to simplify and optimize the quantization process for large models, offering efficient conversion with reduced GPU-hours and cost. It provides a streamlined variant of QTIP, enabling fast and memory-bound latency for inference on GPUs.

Mira

Mira is an agentic AI library designed for automating company research by gathering information from various sources like company websites, LinkedIn profiles, and Google Search. It utilizes a multi-agent architecture to collect and merge data points into a structured profile with confidence scores and clear source attribution. The core library is framework-agnostic and can be integrated into applications, pipelines, or custom workflows. Mira offers features such as real-time progress events, confidence scoring, company criteria matching, and built-in services for data gathering. The tool is suitable for users looking to streamline company research processes and enhance data collection efficiency.

comfyui_LLM_Polymath

LLM Polymath Chat Node is an advanced Chat Node for ComfyUI that integrates large language models to build text-driven applications and automate data processes, enhancing prompt responses by incorporating real-time web search, linked content extraction, and custom agent instructions. It supports both OpenAI’s GPT-like models and alternative models served via a local Ollama API. The core functionalities include Comfy Node Finder and Smart Assistant, along with additional agents like Flux Prompter, Custom Instructors, Python debugger, and scripter. The tool offers features for prompt processing, web search integration, model & API integration, custom instructions, image handling, logging & debugging, output compression, and more.

easydiffusion

Easy Diffusion 3.0 is a user-friendly tool for installing and using Stable Diffusion on your computer. It offers hassle-free installation, clutter-free UI, task queue, intelligent model detection, live preview, image modifiers, multiple prompts file, saving generated images, UI themes, searchable models dropdown, and supports various image generation tasks like 'Text to Image', 'Image to Image', and 'InPainting'. The tool also provides advanced features such as custom models, merge models, custom VAE models, multi-GPU support, auto-updater, developer console, and more. It is designed for both new users and advanced users looking for powerful AI image generation capabilities.

ai_automation_suggester

An integration for Home Assistant that leverages AI models to understand your unique home environment and propose intelligent automations. By analyzing your entities, devices, areas, and existing automations, the AI Automation Suggester helps you discover new, context-aware use cases you might not have considered, ultimately streamlining your home management and improving efficiency, comfort, and convenience. The tool acts as a personal automation consultant, providing actionable YAML-based automations that can save energy, improve security, enhance comfort, and reduce manual intervention. It turns the complexity of a large Home Assistant environment into actionable insights and tangible benefits.

MARBLE

MARBLE (Multi-Agent Coordination Backbone with LLM Engine) is a modular framework for developing, testing, and evaluating multi-agent systems leveraging Large Language Models. It provides a structured environment for agents to interact in simulated environments, utilizing cognitive abilities and communication mechanisms for collaborative or competitive tasks. The framework features modular design, multi-agent support, LLM integration, shared memory, flexible environments, metrics and evaluation, industrial coding standards, and Docker support.

minefield

BitBom Minefield is a tool that uses roaring bit maps to graph Software Bill of Materials (SBOMs) with a focus on speed, air-gapped operation, scalability, and customizability. It is optimized for rapid data processing, operates securely in isolated environments, supports millions of nodes effortlessly, and allows users to extend the project without relying on upstream changes. The tool enables users to manage and explore software dependencies within isolated environments by offline processing and analyzing SBOMs.

llms

LLMs is a universal LLM API transformation server designed to standardize requests and responses between different LLM providers such as Anthropic, Gemini, and Deepseek. It uses a modular transformer system to handle provider-specific API formats, supporting real-time streaming responses and converting data into standardized formats. The server transforms requests and responses to and from unified formats, enabling seamless communication between various LLM providers.

Code-Atlas

Code Atlas is a lightweight interpreter developed in C++ that supports the execution of multi-language code snippets and partial Markdown rendering. It consumes significantly lower resources compared to similar tools, making it suitable for resource-limited devices. It leverages llama.cpp for local large-model inference and supports cloud-based large-model APIs. The tool provides features for code execution, Markdown rendering, local AI inference, and resource efficiency.

simplechat

The Simple Chat Application is a web-based platform that facilitates secure interactions with generative AI models, leveraging Azure OpenAI. It features Retrieval-Augmented Generation (RAG) for grounding conversations in user data. Users can upload personal or group documents processed using Azure AI Document Intelligence and Azure OpenAI Embeddings. The application offers optional features like Content Safety, Image Generation, Video and Audio processing, Document Classification, User Feedback, Conversation Archiving, Metadata Extraction, and Enhanced Citations. It uses Azure Cosmos DB for storage, Azure Active Directory for authentication, and runs on Azure App Service. Suitable for enterprise use, it supports knowledge discovery, content generation, and collaborative AI tasks in a secure, Azure-native framework.

FunGen-AI-Powered-Funscript-Generator

FunGen is a Python-based tool that uses AI to generate Funscript files from VR and 2D POV videos. It enables fully automated funscript creation for individual scenes or entire folders of videos. The tool includes features like automatic system scaling support, quick installation guides for Windows, Linux, and macOS, manual installation instructions, NVIDIA GPU setup, AMD GPU acceleration, YOLO model download, GUI settings, GitHub token setup, command-line usage, modular systems for funscript filtering and motion tracking, performance and parallel processing tips, and more. The project is still in early development stages and is not intended for commercial use.

eole

EOLE is an open language modeling toolkit based on PyTorch. It aims to provide a research-friendly approach with a comprehensive yet compact and modular codebase for experimenting with various types of language models. The toolkit includes features such as versatile training and inference, dynamic data transforms, comprehensive large language model support, advanced quantization, efficient finetuning, flexible inference, and tensor parallelism. EOLE is a work in progress with ongoing enhancements in configuration management, command line entry points, reproducible recipes, core API simplification, and plans for further simplification, refactoring, inference server development, additional recipes, documentation enhancement, test coverage improvement, logging enhancements, and broader model support.

FinAnGPT-Pro

FinAnGPT-Pro is a financial data downloader and AI query system that downloads quarterly and annual financial data for stocks from EOD Historical Data, storing it in MongoDB and Google BigQuery. It includes an AI-powered natural language interface for querying financial data. Users can set up the tool by following the prerequisites and setup instructions provided in the README. The tool allows users to download financial data for all stocks in a watchlist or for a single stock, query financial data using a natural language interface, and receive responses in a structured format. Important considerations include error handling, rate limiting, data validation, BigQuery costs, MongoDB connection, and security measures for API keys and credentials.

maiar-ai

MAIAR is a composable, plugin-based AI agent framework designed to abstract data ingestion, decision-making, and action execution into modular plugins. It enables developers to define triggers and actions as standalone plugins, while the core runtime handles decision-making dynamically. This framework offers extensibility, composability, and model-driven behavior, allowing seamless addition of new functionality. MAIAR's architecture is influenced by Unix pipes, ensuring highly composable plugins, dynamic execution pipelines, and transparent debugging. It remains declarative and extensible, allowing developers to build complex AI workflows without rigid architectures.

AIOStreams

AIOStreams is a versatile tool that combines streams from various addons into one platform, offering extensive customization options. Users can change result formats, filter results by various criteria, remove duplicates, prioritize services, sort results, specify size limits, and more. The tool scrapes results from selected addons, applies user configurations, and presents the results in a unified manner. It simplifies the process of finding and accessing desired content from multiple sources, enhancing user experience and efficiency.

For similar tasks

AI-Governor-Framework

The AI Governor Framework is a system designed to govern AI assistants in coding projects by providing rules and workflows to ensure consistency, respect architectural decisions, and enforce coding standards. It leverages Context Engineering to provide the AI with the right information at the right time, using an In-Repo approach to keep governance rules and architectural context directly inside the repository. The framework consists of two core components: The Governance Engine for passive rules and the Operator's Playbook for active protocols. It follows a 4-step Operator's Playbook to move features from idea to production with clarity and control.

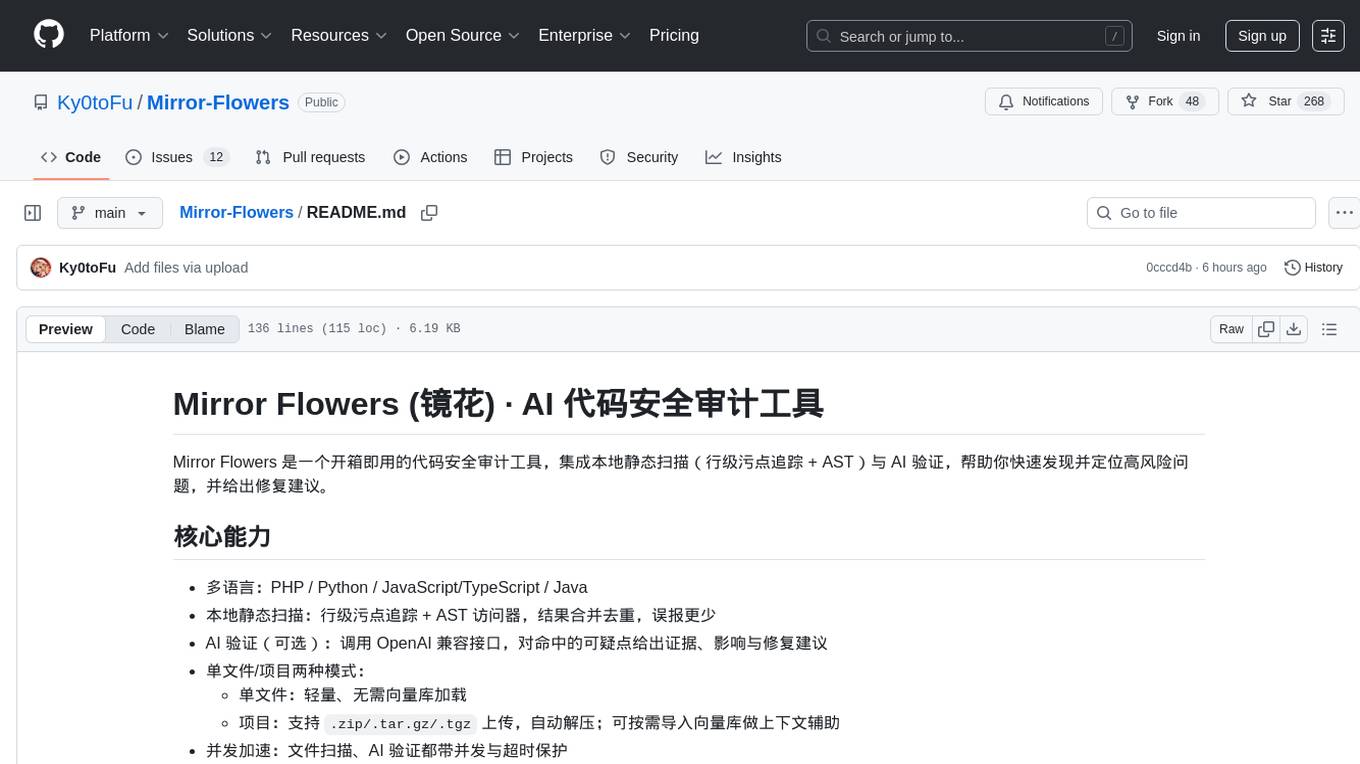

Mirror-Flowers

Mirror Flowers is an out-of-the-box code security auditing tool that integrates local static scanning (line-level taint tracking + AST) with AI verification to help quickly discover and locate high-risk issues, providing repair suggestions. It supports multiple languages such as PHP, Python, JavaScript/TypeScript, and Java. The tool offers both single-file and project modes, with features like concurrent acceleration, integrated UI for visual results, and compatibility with multiple OpenAI interface providers. Users can configure the tool through environment variables or API, and can utilize it through a web UI or HTTP API for tasks like single-file auditing or project auditing.

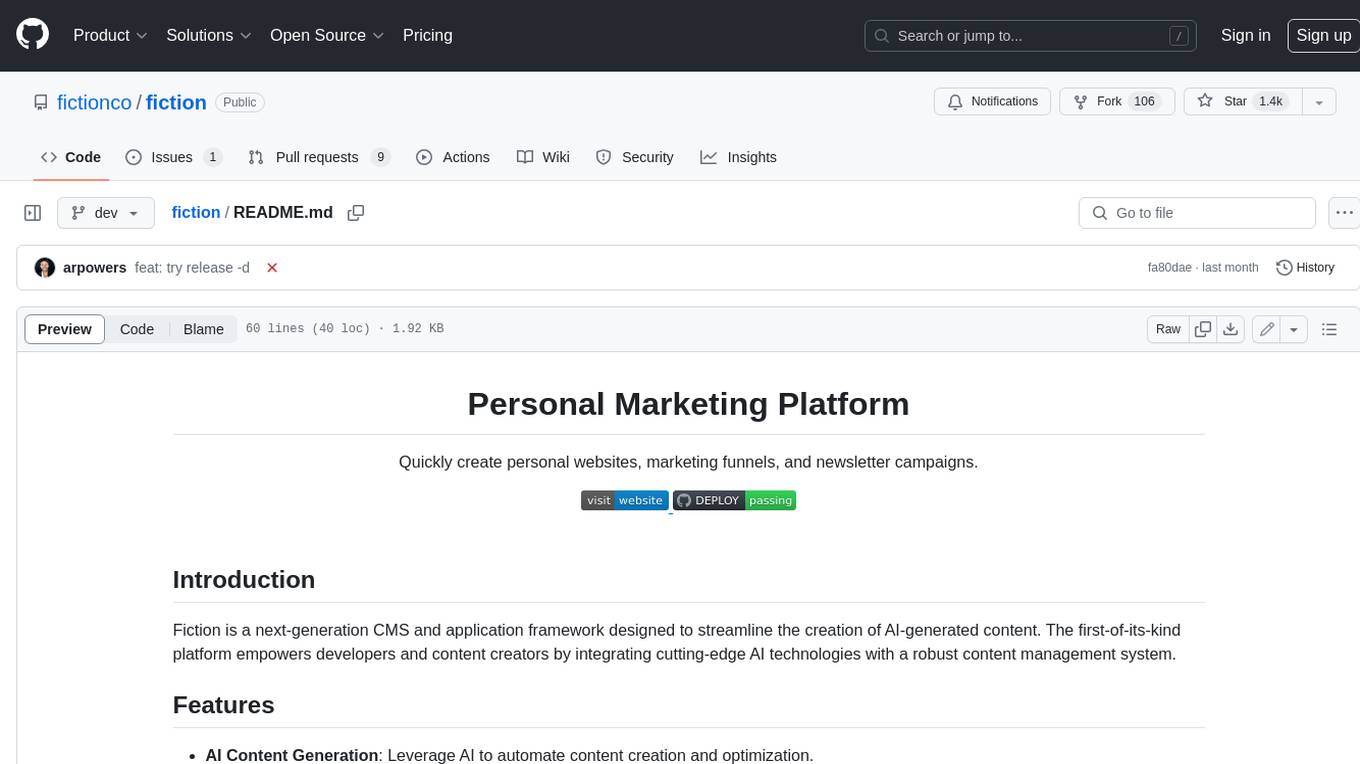

fiction

Fiction is a next-generation CMS and application framework designed to streamline the creation of AI-generated content. The first-of-its-kind platform empowers developers and content creators by integrating cutting-edge AI technologies with a robust content management system.

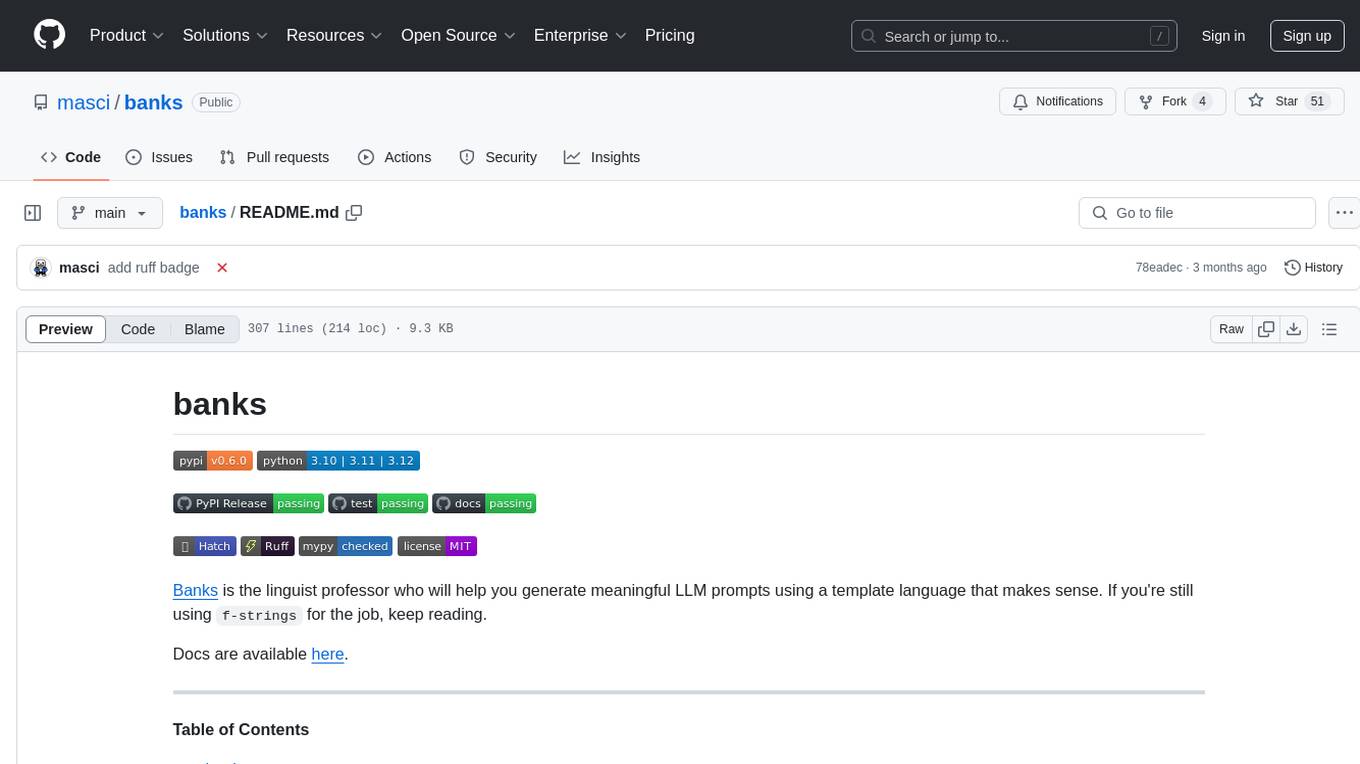

banks

Banks is a linguist professor tool that helps generate meaningful LLM prompts using a template language. It provides a user-friendly way to create prompts for various tasks such as blog writing, summarizing documents, lemmatizing text, and generating text using an LLM. The tool supports async operations and comes with predefined filters for data processing. Banks leverages Jinja's macro system to create prompts and interacts with the OpenAI API for text generation. It also offers a cache mechanism to avoid regenerating text for the same template and context.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.