ten-framework

Open-source framework for conversational voice AI agents

Stars: 8203

TEN is an open-source ecosystem for creating, customizing, and deploying real-time conversational AI agents with multimodal capabilities including voice, vision, and avatar interactions. It includes various components like TEN Framework, TEN Turn Detection, TEN VAD, TEN Agent, TMAN Designer, and TEN Portal. Users can follow the provided guidelines to set up and customize their agents using TMAN Designer, run them locally or in Codespace, and deploy them with Docker or other cloud services. The ecosystem also offers community channels for developers to connect, contribute, and get support.

README:

Table of Contents

- 👋 Welcome to TEN

- 🎨 TMAN Designer

- ✨ Features

- 👩💻 Get TEN Agent up and running

- 🛳️ TEN Agent Self Hosting

- 🌍 TEN Ecosystem

- ❓ Ask Questions

- 🥰 Contributing

TEN is a comprehensive open-source ecosystem for creating, customizing, and deploying real-time conversational AI agents with multimodal capabilities including voice, vision, and avatar interactions.

TEN includes TEN Framework, TEN Turn Detection, TEN VAD, TEN Agent, TMAN Designer, and TEN Portal. Check out 🌍 TEN Ecosystem for more details.

[!IMPORTANT]

Star TEN Repositories ⭐️

Get instant notifications for new releases and updates. Your support helps us grow and improve TEN!

https://github.com/user-attachments/assets/44c6a087-ec7a-45b0-a084-dab5dac5e36b

TMAN Designer is a low/no-code option to create voice agents with an easy-to-use workflow UI. It can load apps and graphs, and includes an online editor, log viewer, and much more.

Check out this blog for more details.

Build engaging AI avatars with TEN Agent using Trulience's diverse collection of free avatar options. To get it up and running, you only need 2 steps:

- Follow the README to finish setting up and running the Playground

- Enter the avatar ID and token you get from Trulience

TEN Agent now integrates seamlessly with MCP servers, expanding its LLM capabilities. To get started:

- Open the Module Picker in Playground

- Add the MCP server tool for LLM integration

- Paste a URL from your MCP server in the extension

- Start a realtime conversation with TEN Agent

This integration allows you to leverage MCP's diverse servers offerings while maintaining TEN Agent's powerful conversational abilities.

https://github.com/user-attachments/assets/78647eef-2d66-44e6-99a8-1918a940fb9f

TEN Agent is now running on the Espressif ESP32-S3 Korvo V3 development board, an excellent way to integrate realtime communication with LLM on hardware.

Check out the integration guide for more details.

Try Google Gemini Multimodal Live API with realtime vision and realtime screenshare detection capabilities, it is a ready-to-use extension, along with powerful tools like Weather Check and Web Search integrated perfectly into TEN Agent.

TEN offers a great support to make the realtime interactive experience even better on other LLM platform as well, check out docs for more.

Experience the real-time image generation with StoryTeller, it is a ready-to-use extension, along with powerful tools like Weather Check and Web Search integrated perfectly into TEN.

| Category | Requirements |

|---|---|

| Keys | • Agora App ID and App Certificate (free minutes every month) • OpenAI API key (any LLM that is compatible with OpenAI) • Deepgram ASR (free credits available with signup) • Elevenlabs TTS (free credits available with signup) |

| Installation | • Docker / Docker Compose • Node.js(LTS) v18 |

| Minimum System Requirements | • CPU >= 2 Core • RAM >= 4 GB |

[!NOTE]

macOS: Docker setting on Apple Silicon

Uncheck "Use Rosetta for x86/amd64 emulation" in Docker settings, it may result in slower build times on ARM, but performance will be normal when deployed to x64 servers.

cd ai_agents

cp ./.env.example ./.envAGORA_APP_ID=

AGORA_APP_CERTIFICATE=docker compose up -ddocker exec -it ten_agent_dev bashcheck the /examples folder for more examples

# use the chained voice assistant

task use AGENT=voice-assistant

# or use the speech-to-speech voice assistant realtime

task use AGENT=voice-assistant-realtime# run task build if you changed any local source code, this is necessary if you are working on languages which require compilation like TypeScript or Golang.

task build

task run- Open localhost:49483.

- Right-click on the STT, LLM, and TTS extensions.

- Open their properties and enter APIs respectively.

- Right-click the canvas and select 'Manage Apps' to open the Apps Manager.

- Right under the Actions, click the ▶ to run the App.

- Check the 'Run with TEN Agent' option and click the Run button.

GitHub offers free Codespace for each repository, you can run the playground in Codespace without using Docker.Also, the speed of Codespace is much faster than localhost.

Check out this guide for more details.

Once you have customized your agent (either by using the TMAN Manager, Playground, or editing property.json directly), you can deploy it by creating a release Docker image for your service.

Read the Deployment Guide for detailed information about deployment.

coming soon

| Project | Preview |

|---|---|

|

🏚️ TEN Framework TEN is an open-source framework for real-time, multimodal conversational AI.

|

|

|

️🔂 TEN Turn Detection TEN is for full-duplex dialogue communication.

|

|

|

🔉 TEN VAD TEN VAD is a low-latency, lightweight and high-performance streaming voice activity detector (VAD).

|

|

|

🎙️ TEN Agent TEN Agent is a showcase of TEN Framewrok. |

|

|

🎨 TMAN Designer TMAN Designer is low/no code option to make a voice agent with easy to use workflow UI. |

|

|

📒 TEN Portal The official site of TEN framework, it has documentation and blog.

|

TEN Framework is available on these AI-powered Q&A platforms. They can help you find answers quickly and accurately in multiple languages, covering everything from basic setup to advanced implementation details.

| Service | Link |

|---|---|

| DeepWiki | |

| ReadmeX |

We welcome all forms of open-source collaboration! Whether you're fixing bugs, adding features, improving documentation, or sharing ideas - your contributions help advance personalized AI tools. Check out our GitHub Issues and Projects to find ways to contribute and show your skills. Together, we can build something amazing!

[!TIP]

Welcome all kinds of contributions 🙏

Join us in building TEN better! Every contribution makes a difference, from code to documentation. Share your TEN Agent projects on social media with to inspire others!

Connect with one of the TEN maintainers @elliotchen100 on 𝕏 or @cyfyifanchen on GitHub for project updates, discussions and collaboration opportunities.

Contributions are welcome! Please read the contribution guidelines first.

-

The entire TEN framework (except for the folders explicitly listed below) is released under the Apache License, Version 2.0, with additional restrictions. For details, please refer to the LICENSE file located in the root directory of the TEN framework.

-

The components within the

packagesdirectory are released under the Apache License, Version 2.0. For details, please refer to theLICENSEfile located in each package's root directory. -

The third-party libraries used by the TEN framework are listed and described in detail. For more information, please refer to the third_party folder.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ten-framework

Similar Open Source Tools

ten-framework

TEN is an open-source ecosystem for creating, customizing, and deploying real-time conversational AI agents with multimodal capabilities including voice, vision, and avatar interactions. It includes various components like TEN Framework, TEN Turn Detection, TEN VAD, TEN Agent, TMAN Designer, and TEN Portal. Users can follow the provided guidelines to set up and customize their agents using TMAN Designer, run them locally or in Codespace, and deploy them with Docker or other cloud services. The ecosystem also offers community channels for developers to connect, contribute, and get support.

Folo

Folo is a content organization tool that creates a noise-free timeline for users. It allows sharing lists, exploring collections, and distraction-free browsing. Users can subscribe to feeds, curate favorites, and utilize AI-powered features like translation and summaries. Folo supports various content types such as articles, videos, images, and audio. It introduces an ownership economy with $POWER tipping for creators and fosters a community-driven experience. The tool is under active development, welcoming feedback from users and developers.

TensorRT-Model-Optimizer

The NVIDIA TensorRT Model Optimizer is a library designed to quantize and compress deep learning models for optimized inference on GPUs. It offers state-of-the-art model optimization techniques including quantization and sparsity to reduce inference costs for generative AI models. Users can easily stack different optimization techniques to produce quantized checkpoints from torch or ONNX models. The quantized checkpoints are ready for deployment in inference frameworks like TensorRT-LLM or TensorRT, with planned integrations for NVIDIA NeMo and Megatron-LM. The tool also supports 8-bit quantization with Stable Diffusion for enterprise users on NVIDIA NIM. Model Optimizer is available for free on NVIDIA PyPI, and this repository serves as a platform for sharing examples, GPU-optimized recipes, and collecting community feedback.

Open-Sora-Plan

Open-Sora-Plan is a project that aims to create a simple and scalable repo to reproduce Sora (OpenAI, but we prefer to call it "ClosedAI"). The project is still in its early stages, but the team is working hard to improve it and make it more accessible to the open-source community. The project is currently focused on training an unconditional model on a landscape dataset, but the team plans to expand the scope of the project in the future to include text2video experiments, training on video2text datasets, and controlling the model with more conditions.

ColossalAI

Colossal-AI is a deep learning system for large-scale parallel training. It provides a unified interface to scale sequential code of model training to distributed environments. Colossal-AI supports parallel training methods such as data, pipeline, tensor, and sequence parallelism and is integrated with heterogeneous training and zero redundancy optimizer.

Pallaidium

Pallaidium is a generative AI movie studio integrated into the Blender video editor. It allows users to AI-generate video, image, and audio from text prompts or existing media files. The tool provides various features such as text to video, text to audio, text to speech, text to image, image to image, image to video, video to video, image to text, and more. It requires a Windows system with a CUDA-supported Nvidia card and at least 6 GB VRAM. Pallaidium offers batch processing capabilities, text to audio conversion using Bark, and various performance optimization tips. Users can install the tool by downloading the add-on and following the installation instructions provided. The tool comes with a set of restrictions on usage, prohibiting the generation of harmful, pornographic, violent, or false content.

screenpipe

24/7 Screen & Audio Capture Library to build personalized AI powered by what you've seen, said, or heard. Works with Ollama. Alternative to Rewind.ai. Open. Secure. You own your data. Rust. We are shipping daily, make suggestions, post bugs, give feedback. Building a reliable stream of audio and screenshot data, simplifying life for developers by solving non-trivial problems. Multiple installation options available. Experimental tool with various integrations and features for screen and audio capture, OCR, STT, and more. Open source project focused on enabling tooling & infrastructure for a wide range of applications.

agents-towards-production

Agents Towards Production is an open-source playbook for building production-ready GenAI agents that scale from prototype to enterprise. Tutorials cover stateful workflows, vector memory, real-time web search APIs, Docker deployment, FastAPI endpoints, security guardrails, GPU scaling, browser automation, fine-tuning, multi-agent coordination, observability, evaluation, and UI development.

Omi

Omi is an open-source AI wearable that transforms the way conversations are captured and managed. By connecting Omi to your mobile device, you can effortlessly obtain high-quality transcriptions of meetings, chats, and voice memos on the go.

generative-ai-with-javascript

The 'Generative AI with JavaScript' repository is a comprehensive resource hub for JavaScript developers interested in delving into the world of Generative AI. It provides code samples, tutorials, and resources from a video series, offering best practices and tips to enhance AI skills. The repository covers the basics of generative AI, guides on building AI applications using JavaScript, from local development to deployment on Azure, and scaling AI models. It is a living repository with continuous updates, making it a valuable resource for both beginners and experienced developers looking to explore AI with JavaScript.

anx-reader

Anx Reader is a meticulously designed e-book reader tailored for book enthusiasts. It boasts powerful AI functionalities and supports various e-book formats, enhancing the reading experience. With a modern interface, the tool aims to provide a seamless and enjoyable reading journey. It offers rich format support, seamless sync across devices, smart AI assistance, personalized reading experiences, professional reading analytics, a powerful note system, practical tools, and cross-platform support. The tool is continuously evolving with features like UI adaptation for tablets, page-turning animation, TTS voice reading, reading fonts, translation, and more in the pipeline.

efficient-transformers

Efficient Transformers Library provides reimplemented blocks of Large Language Models (LLMs) to make models functional and highly performant on Qualcomm Cloud AI 100. It includes graph transformations, handling for under-flows and overflows, patcher modules, exporter module, sample applications, and unit test templates. The library supports seamless inference on pre-trained LLMs with documentation for model optimization and deployment. Contributions and suggestions are welcome, with a focus on testing changes for model support and common utilities.

omi

Omi is an open-source AI wearable that provides automatic, high-quality transcriptions of meetings, chats, and voice memos. It revolutionizes how conversations are captured and managed by connecting to mobile devices. The tool offers features for seamless documentation and integration with third-party services.

ST-LLM

ST-LLM is a temporal-sensitive video large language model that incorporates joint spatial-temporal modeling, dynamic masking strategy, and global-local input module for effective video understanding. It has achieved state-of-the-art results on various video benchmarks. The repository provides code and weights for the model, along with demo scripts for easy usage. Users can train, validate, and use the model for tasks like video description, action identification, and reasoning.

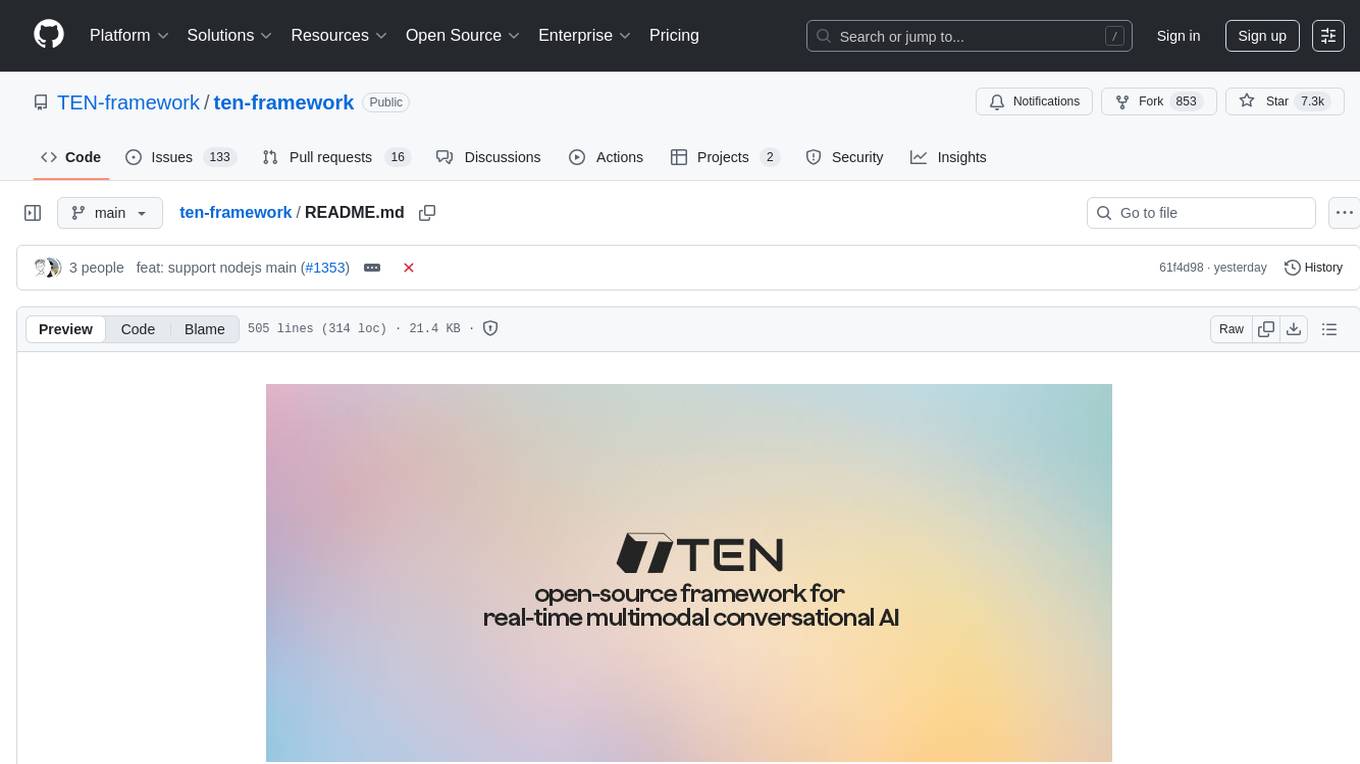

Tutorial-of-AI-Kit-with-Raspberry-Pi-From-Zero-to-Hero

This course is designed to teach you how to harness the power of AI on the Raspberry Pi, with a focus on using an AI kit for computer vision tasks. Learn to integrate AI into IoT applications, from object detection to visual recognition. Suitable for hobbyists, students, and professionals to bring AI-driven solutions to life on resource-constrained devices like the Raspberry Pi.

Imagine_AI

IMAGINE - AI is a groundbreaking image generator tool that leverages the power of OpenAI's DALL-E 2 API library to create extraordinary visuals. Developed using Node.js and Express, this tool offers a transformative way to unleash artistic creativity and imagination by generating unique and captivating images through simple prompts or keywords.

For similar tasks

ten-framework

TEN is an open-source ecosystem for creating, customizing, and deploying real-time conversational AI agents with multimodal capabilities including voice, vision, and avatar interactions. It includes various components like TEN Framework, TEN Turn Detection, TEN VAD, TEN Agent, TMAN Designer, and TEN Portal. Users can follow the provided guidelines to set up and customize their agents using TMAN Designer, run them locally or in Codespace, and deploy them with Docker or other cloud services. The ecosystem also offers community channels for developers to connect, contribute, and get support.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

StableSwarmUI

StableSwarmUI is a modular Stable Diffusion web user interface that emphasizes making power tools easily accessible, high performance, and extensible. It is designed to be a one-stop-shop for all things Stable Diffusion, providing a wide range of features and capabilities to enhance the user experience.

civitai

Civitai is a platform where people can share their stable diffusion models (textual inversions, hypernetworks, aesthetic gradients, VAEs, and any other crazy stuff people do to customize their AI generations), collaborate with others to improve them, and learn from each other's work. The platform allows users to create an account, upload their models, and browse models that have been shared by others. Users can also leave comments and feedback on each other's models to facilitate collaboration and knowledge sharing.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.