ck

Local-first semantic and hybrid BM25 grep/search tool for use by AI and humans!

Stars: 742

ck (seek) is a semantic grep tool that finds code by meaning, not just keywords. It replaces traditional grep by understanding the user's search intent. It allows users to search for code based on concepts like 'error handling' and retrieves relevant code even if the exact keywords are not present. ck offers semantic search, drop-in grep compatibility, hybrid search combining keyword precision with semantic understanding, agent-friendly output in JSONL format, smart file filtering, and various advanced features. It supports multiple search modes, relevance scoring, top-K results, and smart exclusions. Users can index projects for semantic search, choose embedding models, and search specific files or directories. The tool is designed to improve code search efficiency and accuracy for developers and AI agents.

README:

ck (seek) finds code by meaning, not just keywords. It's a drop-in replacement for grep that understands what you're looking for — search for "error handling" and find try/catch blocks, error returns, and exception handling code even when those exact words aren't present.

# Install from crates.io

cargo install ck-search

# Or build from source

git clone https://github.com/BeaconBay/ck

cd ck

cargo build --release

# Index your project for semantic search (one-time setup)

ck --index src/

# Search by meaning - automatically updates index for changed files

ck --sem "error handling" src/

ck --sem "authentication logic" src/

ck --sem "database connection pooling" src/

# Traditional grep-compatible search still works

ck -n "TODO" *.rs

ck -R "TODO|FIXME" .

# Combine both: semantic relevance + keyword filtering

ck --hybrid "connection timeout" src/

For Developers: Stop hunting through thousands of regex false positives. Find the code you actually need by describing what it does.

For AI Agents: Get structured, semantic search results in JSONL format. Stream-friendly, error-resilient output perfect for LLM workflows, code analysis, documentation generation, and automated refactoring.

Find code by concept, not keywords. Searches understand synonyms, related terms, and conceptual similarity.

# These find related code even without exact keywords:

ck --sem "retry logic" # finds backoff, circuit breakers

ck --sem "user authentication" # finds login, auth, credentials

ck --sem "data validation" # finds sanitization, type checking

# Get complete functions/classes containing matches (NEW!)

ck --sem --full-section "error handling" # returns entire functions

ck --full-section "async def" src/ # works with regex too

All your muscle memory works. Same flags, same behavior, same output format.

ck -i "warning" *.log # Case-insensitive

ck -n -A 3 -B 1 "error" src/ # Line numbers + context

ck --no-filename "TODO" src/ # Suppress filenames (grep -h equivalent)

ck -l "error" src/ # List files with matches only (NEW!)

ck -L "TODO" src/ # List files without matches (NEW!)

ck -R --exclude "*.test.js" "bug" # Recursive with exclusions

ck "pattern" file1.txt file2.txt # Multiple files

Combine keyword precision with semantic understanding using Reciprocal Rank Fusion.
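Reciprocal Rank Fusion merges ranked lists by giving each document a score of 1/(k + rank) in every list where it appears and summing those scores. The minimal sketch below assumes 1-based ranks and the commonly used constant k = 60. ck's actual constant and tie-breaking are not stated here, and the file names are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document ids into one ranked list.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with 1-based ranks. k=60 is a common default (an assumption here).
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by id for a stable order.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical results from a keyword (BM25/regex) pass and a semantic pass.
keyword_hits = ["net.rs", "retry.rs", "config.rs"]
semantic_hits = ["retry.rs", "pool.rs", "net.rs"]

for doc_id, score in reciprocal_rank_fusion([keyword_hits, semantic_hits]):
    print(f"{score:.4f} {doc_id}")
```

With k = 60, a document found by both passes scores about 0.032, and a document found by only one pass scores about 0.016. If ck uses a similar constant, that scale would explain why the hybrid threshold examples in this README use small values such as 0.02 and 0.01.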

ck --hybrid "async timeout" src/ # Best of both worlds

ck --hybrid --scores "cache" src/ # Show relevance scores with color highlighting

ck --hybrid --threshold 0.02 query # Filter by minimum relevance

ck -l --hybrid "database" src/ # List files using hybrid search

Perfect structured output for LLMs, scripts, and automation. JSONL format provides superior parsing reliability for AI agents.

# JSONL format - one JSON object per line (recommended for agents)

ck --jsonl --sem "error handling" src/

ck --jsonl --no-snippet "function" . # Metadata only

ck --jsonl --topk 5 --threshold 0.7 "auth" # High-confidence results

# Traditional JSON (single array)

ck --json --sem "error handling" src/ | jq '.file'

ck --json --topk 5 "TODO" . | jq -r '.preview'

ck --json --full-section --sem "database" . | jq -r '.preview' # Complete functions

Why JSONL for AI agents?

- ✅ Streaming friendly: Process results as they arrive, no waiting for complete response

- ✅ Memory efficient: Parse one result at a time, not entire array into memory

- ✅ Error resilient: One malformed line doesn't break entire response

- ✅ Composable: Works perfectly with Unix pipes and stream processing

- ✅ Standard format: Used by OpenAI API, Anthropic API, and modern ML pipelines
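The error-resilience point follows from the format itself. Each line is a standalone JSON value, so a consumer can skip one bad line and keep the rest. By contrast, a single truncated element breaks parsing of a whole JSON array.

The minimal Python sketch below illustrates this. The sample lines are made up and reuse only the `path` and `score` fields from ck's output format.

```python
import json


def parse_jsonl(text):
    """Parse JSONL text, returning (records, bad_line_numbers).

    Each non-blank line is decoded independently, so one malformed line
    is recorded and skipped instead of aborting the whole parse.
    """
    records, bad = [], []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # tolerate blank lines between records
        try:
            records.append(json.loads(line))
        except json.JSONDecodeError:
            bad.append(lineno)
    return records, bad


sample = "\n".join([
    '{"path": "./src/auth.rs", "score": 0.89}',
    '{"path": "./src/error.rs", "score": 0.7',  # truncated: missing brace
    '{"path": "./src/db.rs", "score": 0.64}',
])
records, bad = parse_jsonl(sample)
print([r["path"] for r in records], "bad lines:", bad)
```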

JSONL Output Format:

{"path":"./src/auth.rs","span":{"byte_start":1203,"byte_end":1456,"line_start":42,"line_end":58},"language":"rust","snippet":"fn authenticate(user: User) -> Result<Token> { ... }","score":0.89}

{"path":"./src/error.rs","span":{"byte_start":234,"byte_end":678,"line_start":15,"line_end":25},"language":"rust","snippet":"impl Error for AuthError { ... }","score":0.76}

Agent Processing Example:

# Stream-process JSONL results (memory efficient)
import json
import subprocess

proc = subprocess.Popen(
    ["ck", "--jsonl", "--sem", "error handling", "src/"],
    stdout=subprocess.PIPE,
    text=True,
)
for line in proc.stdout:
    if not line.strip():
        continue  # skip blank lines
    result = json.loads(line)
    if result.get("score", 0) > 0.8:  # High-confidence matches only
        print(f"📍 {result['path']}:{result['span']['line_start']}")
        print(f"🔍 {result['snippet'][:100]}...")
proc.wait()  # reap the ck process once its output is exhausted

Automatically excludes cache directories, build artifacts, and respects .gitignore files.

# Respects .gitignore by default (NEW!)

ck "pattern" . # Follows .gitignore rules

ck --no-ignore "pattern" . # Search all files including ignored ones

# These are also excluded by default:

# .git, node_modules, target/, __pycache__

# Override defaults:

ck --no-default-excludes "pattern" . # Search everything

ck --exclude "dist" --exclude "logs" "pattern" . # Add custom exclusions

# Works with indexing too (NEW in v0.3.6!):

ck --index --exclude "node_modules" . # Exclude from index

ck --index --exclude "*.test.js" . # Support glob patterns

# Create semantic index (one-time setup)

ck --index /path/to/project

# Now search instantly by meaning

ck --sem "database queries" .

ck --sem "error handling" .

ck --sem "authentication" .

Choose the right model for your needs when creating the index:

# Default: BGE-Small (fast, precise chunking)

ck --index .

# Enhanced: Nomic V1.5 (8K context, optimal for large functions)

ck --index --model nomic-v1.5 .

# Code-specialized: Jina Code (optimized for programming languages)

ck --index --model jina-code .

Model Comparison:

- bge-small (default): 400-token chunks, fast indexing, good for most code

- nomic-v1.5: 1024-token chunks with 8K model capacity, better for large functions and classes

- jina-code: 1024-token chunks with 8K model capacity, specialized for code understanding

New in v0.4.5: Token-aware chunking uses actual model tokenizers for precise sizing, with model-specific chunk configurations balancing precision vs context.
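To make the chunk sizes concrete, here is a minimal sketch of packing text into chunks that never exceed a token budget. It also reports the same kind of count/min/max/avg statistics that --inspect prints.

It assumes a trivial whitespace tokenizer as a stand-in. ck uses the model's real HuggingFace tokenizer and language-aware boundaries, which this sketch does not reproduce.

```python
from statistics import mean


def tokenize(text):
    # Stand-in tokenizer (assumption): real models use subword tokenizers,
    # which usually produce more tokens than whitespace splitting.
    return text.split()


def chunk_by_tokens(text, max_tokens):
    """Pack whole lines into chunks of at most max_tokens tokens.

    A line is moved to a new chunk rather than split, unless the line alone
    exceeds the budget, in which case it is cut into budget-sized pieces.
    """
    chunks, current = [], []
    for line in text.splitlines():
        tokens = tokenize(line)
        if current and len(current) + len(tokens) > max_tokens:
            chunks.append(current)  # line would overflow: start a new chunk
            current = []
        while len(tokens) > max_tokens:  # oversized line: emit full pieces
            chunks.append(tokens[:max_tokens])
            tokens = tokens[max_tokens:]
        current.extend(tokens)
    if current:
        chunks.append(current)
    return chunks


def chunk_stats(chunks):
    sizes = [len(c) for c in chunks]
    return {"chunks": len(sizes), "min": min(sizes), "max": max(sizes),
            "avg": round(mean(sizes))}


source = "fn main() {\n" + "    let x = compute(1, 2);\n" * 200 + "}\n"
for budget in (400, 1024):  # bge-small vs nomic-v1.5 / jina-code chunk sizes
    print(budget, chunk_stats(chunk_by_tokens(source, budget)))
```

A smaller budget yields more, tighter chunks (more precise matches), while a larger one keeps whole functions together (more context). That is the trade-off the model-specific configurations balance.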

Note: Model choice is set during indexing. Existing indexes will automatically use their original model.

- --regex (default): Classic grep behavior, no indexing required

- --sem: Pure semantic search using embeddings (requires index)

- --hybrid: Combines regex + semantic with intelligent ranking
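As a rough illustration of how --sem, --threshold and --topk fit together, the sketch below does three things:

- ranks chunks by cosine similarity to a query vector
- drops anything under the threshold
- keeps the top k

The 3-dimensional vectors and chunk names are invented for the example. Real embeddings come from the indexing model, and ck's scoring details may differ.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_search(query_vec, chunks, threshold=0.0, topk=None):
    """Return (score, name) pairs with score >= threshold, best first."""
    scored = [(cosine(query_vec, vec), name) for name, vec in chunks.items()]
    kept = sorted((s for s in scored if s[0] >= threshold), reverse=True)
    return kept[:topk] if topk is not None else kept


# Hypothetical embeddings for three indexed chunks and one query.
chunks = {
    "ai_guide.txt": [0.9, 0.1, 0.0],
    "statistics.txt": [0.6, 0.5, 0.2],
    "algorithms.txt": [0.1, 0.2, 0.9],
}
query = [1.0, 0.2, 0.0]
for score, name in semantic_search(query, chunks, threshold=0.5, topk=2):
    print(f"[{score:.3f}] ./{name}")
```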

ck --sem --scores "machine learning" docs/

# [0.847] ./ai_guide.txt: Machine learning introduction...

# [0.732] ./statistics.txt: Statistical learning methods...

# [0.681] ./algorithms.txt: Classification algorithms...

# Glob patterns work

ck --sem "authentication" *.py *.js *.rs

# Multiple files

ck --sem "error handling" src/auth.rs src/db.rs

# Quoted patterns prevent shell expansion

ck --sem "auth" "src/**/*.ts"

# Only high-confidence semantic matches

ck --sem --threshold 0.7 "query"

# Low-confidence hybrid matches (good for exploration)

ck --hybrid --threshold 0.01 "concept"

# Get complete code sections instead of snippets (NEW!)

ck --sem --full-section "database queries"

ck --full-section "class.*Error" src/ # Complete classes

# Limit results for focused analysis

ck --sem --topk 5 "authentication patterns"

# Great for AI agent consumption

ck --json --topk 10 "error handling" | process_results.py

# Check index status

ck --status .

# Clean up and rebuild

ck --clean .

ck --index .

# Add single file to index

ck --add new_file.rs

Analyze how files will be chunked for embedding with the enhanced --inspect command:

# Inspect file chunking and token usage

ck --inspect src/main.rs

# Output: File info, chunk count, token statistics, and chunk details

# Example output:

# File: src/main.rs (49.6 KB, 1378 lines, 12083 tokens)

# Language: rust

#

# Chunks: 17 (tokens: min=4, max=3942, avg=644)

# 1. mod: 4 tokens | L9-9 | mod progress;

# 2. func: 1185 tokens | L88-294 | struct Cli { ... }

# 3. func: 442 tokens | L296-341 | fn expand_glob_patterns(...

# Check different model configurations

ck --inspect --model bge-small src/main.rs # 400-token chunking

ck --inspect --model nomic-v1.5 src/main.rs # 1024-token chunking

| Language | Indexing | Tree-sitter Parsing | Semantic Chunking |
|---|---|---|---|
| Python | ✅ | ✅ | ✅ Functions, classes |
| JavaScript | ✅ | ✅ | ✅ Functions, classes, methods |
| TypeScript | ✅ | ✅ | ✅ Functions, classes, methods |
| Haskell | ✅ | ✅ | ✅ Functions, types, instances |
| Rust | ✅ | ✅ | ✅ Functions, structs, traits |
| Ruby | ✅ | ✅ | ✅ Classes, methods, modules |
| Go | ✅ | ✅ | ✅ Functions, types, methods, variables |
| C# | ✅ | ✅ | ✅ Classes, interfaces, methods, variables |
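Semantic chunking means splitting at syntactic boundaries such as functions and classes rather than at fixed line counts. ck does this with tree-sitter.

The sketch below shows the same idea for Python only, using the standard-library `ast` module as a stand-in. It is not ck's implementation. The chunk labels loosely mirror the --inspect output.

```python
import ast


def semantic_chunks(source):
    """Yield (kind, name, start_line, end_line) for top-level defs and classes.

    Uses node line spans (end_lineno needs Python 3.8+), so each chunk is a
    complete function or class, similar in spirit to --full-section output.
    """
    tree = ast.parse(source)
    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            yield ("func", node.name, node.lineno, node.end_lineno)
        elif isinstance(node, ast.ClassDef):
            yield ("class", node.name, node.lineno, node.end_lineno)


code = '''\
import os

class AuthError(Exception):
    pass

def authenticate(user):
    if not user:
        raise AuthError("missing user")
    return os.urandom(8)
'''

for kind, name, start, end in semantic_chunks(code):
    print(f"{kind}: {name} | L{start}-{end}")
```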

Text Formats: Markdown, JSON, YAML, TOML, XML, HTML, CSS, shell scripts, SQL, log files, config files, and any other text format.

Smart Binary Detection: Uses ripgrep-style content analysis (NUL byte detection) instead of extension-based filtering, automatically indexing any text file regardless of extension while correctly excluding binary files.
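The NUL-byte heuristic is simple enough to sketch: read a prefix of the file and treat it as binary if that prefix contains a zero byte. The 8 KiB window below is an assumption chosen for illustration; ripgrep and ck may inspect a different amount.

```python
import os
import tempfile


def looks_binary(path, window=8192):
    """Return True if the first `window` bytes of the file contain a NUL byte."""
    with open(path, "rb") as f:
        return b"\x00" in f.read(window)


# Demo: the decision depends on content, not on the file extension.
with tempfile.TemporaryDirectory() as tmp:
    text_path = os.path.join(tmp, "settings.weird")  # unknown extension, plain text
    bin_path = os.path.join(tmp, "notes.txt")        # .txt name, binary content
    with open(text_path, "w", encoding="utf-8") as f:
        f.write("timeout = 30\n")
    with open(bin_path, "wb") as f:
        f.write(b"\x89PNG\r\n\x1a\n\x00\x00\x00\rIHDR")
    results = (looks_binary(text_path), looks_binary(bin_path))

print(results)
```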

Smart Exclusions: Automatically skips .git, node_modules, target/, build/, dist/, __pycache__/, .venv, venv, and other common build/cache/virtual environment directories.
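Directory exclusion is usually done by pruning the walk, so excluded trees are never entered at all. The minimal sketch below uses `os.walk` and `fnmatch`.

It assumes a default set taken from the list above plus user-supplied --exclude style globs. It does not handle .gitignore rules, which ck also respects.

```python
import fnmatch
import os
import tempfile

# Defaults drawn from the list above (a subset; ck's full list may differ).
DEFAULT_EXCLUDES = {".git", "node_modules", "target", "build", "dist",
                    "__pycache__", ".venv", "venv"}


def walk_files(root, extra_globs=()):
    """Yield file paths under root, pruning excluded directories in place."""
    for dirpath, dirnames, filenames in os.walk(root):
        # Editing dirnames in place stops os.walk from descending into them.
        dirnames[:] = [
            d for d in dirnames
            if d not in DEFAULT_EXCLUDES
            and not any(fnmatch.fnmatch(d, g) for g in extra_globs)
        ]
        for name in filenames:
            if not any(fnmatch.fnmatch(name, g) for g in extra_globs):
                yield os.path.join(dirpath, name)


# Demo: build a small throwaway tree and list what survives the filters.
with tempfile.TemporaryDirectory() as root:
    for rel in ["src/main.rs", "src/app.test.js", "node_modules/x/index.js",
                "logs/run.txt", ".git/HEAD"]:
        path = os.path.join(root, *rel.split("/"))
        os.makedirs(os.path.dirname(path), exist_ok=True)
        with open(path, "w", encoding="utf-8") as f:
            f.write("x\n")
    kept = sorted(os.path.relpath(p, root).replace(os.sep, "/")
                  for p in walk_files(root, ["*.test.js", "logs"]))

print(kept)
```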

git clone https://github.com/BeaconBay/ck

cd ck

cargo install --path ck-cli

# Install latest release from crates.io

cargo install ck-search

# Coming soon:

brew install ck-search

apt install ck-search

ck uses a modular Rust workspace:

- ck-cli: Command-line interface and argument parsing

- ck-core: Shared types, configuration, and utilities

- ck-search: Search engine implementations (regex, BM25, semantic)

- ck-index: File indexing, hashing, and sidecar management

- ck-embed: Text embedding providers (FastEmbed, API backends)

- ck-ann: Approximate nearest neighbor search indices

- ck-chunk: Text segmentation and language-aware parsing

- ck-models: Model registry and configuration management

Indexes are stored in .ck/ directories alongside your code:

project/
├── src/
├── docs/
└── .ck/              # Semantic index (can be safely deleted)
    ├── embeddings.json
    ├── ann_index.bin
    └── tantivy_index/

The .ck/ directory is a cache — safe to delete and rebuild anytime.
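Incremental index updates, described in the roadmap as hash-based change detection, can be sketched as follows. Compute a content hash for each file and compare it with the hash recorded at the last index run. Only new or changed files are re-embedded, and deleted files are dropped.

The in-memory manifest dict and the choice of SHA-256 are assumptions for illustration. The source does not say which hash ck uses or how it stores hashes under .ck/.

```python
import hashlib


def content_hash(data: bytes) -> str:
    """SHA-256 hex digest of file contents (hash choice is an assumption)."""
    return hashlib.sha256(data).hexdigest()


def diff_manifest(previous, current_files):
    """Decide which files need work since the last index run.

    previous: {path: hash} recorded at the last run (hypothetical manifest).
    current_files: {path: bytes} as read from disk now.
    Returns (to_reindex, to_remove, new_manifest).
    """
    current = {path: content_hash(data) for path, data in current_files.items()}
    to_reindex = sorted(p for p, h in current.items() if previous.get(p) != h)
    to_remove = sorted(p for p in previous if p not in current)
    return to_reindex, to_remove, current


old = {
    "src/a.rs": content_hash(b"fn a() {}"),
    "src/b.rs": content_hash(b"fn b() {}"),
    "src/old.rs": content_hash(b"fn old() {}"),
}
now = {
    "src/a.rs": b"fn a() {}",           # unchanged: skipped
    "src/b.rs": b"fn b() { todo!() }",  # edited: re-embed
    "src/new.rs": b"fn new() {}",       # added: embed
}
reindex, remove, manifest = diff_manifest(old, now)
print("re-embed:", reindex, "drop:", remove)
```

Since unchanged files are skipped, re-running after a small edit costs roughly one embedding pass over the changed files, not the whole project.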

# Find authentication/authorization code

ck --sem "user permissions" src/

ck --sem "access control" src/

ck --sem "login validation" src/

# Find error handling strategies

ck --sem "exception handling" src/

ck --sem "error recovery" src/

ck --sem "fallback mechanisms" src/

# Find performance-related code

ck --sem "caching strategies" src/

ck --sem "database optimization" src/

ck --sem "memory management" src/

# Git hooks

git diff --name-only | xargs ck --sem "TODO"

# CI/CD pipeline

ck --json --sem "security vulnerability" . | security_scanner.py

# Code review prep

ck --hybrid --scores "performance" src/ > review_notes.txt

# Documentation generation

ck --json --sem "public API" src/ | generate_docs.py

# Find related test files

ck --sem "unit tests for authentication" tests/

ck -l --sem "test" tests/ # List test files by semantic content

# Identify refactoring candidates

ck --sem "duplicate logic" src/

ck --sem "code complexity" src/

ck -L "test" src/ # Find source files without tests

# Security audit

ck --hybrid "password|credential|secret" src/

ck --sem "input validation" src/

ck -l --hybrid --scores "security" src/ # Files with security-related code

# View current exclusion patterns

ck --help | grep -A 20 exclude

# These directories are excluded by default:

# .git, .svn, .hg # Version control

# node_modules, target, build # Build artifacts

# .cache, __pycache__ # Caches

# .vscode, .idea # IDE files

# .ck/config.toml

[search]

default_mode = "hybrid"

default_threshold = 0.05

[indexing]

exclude_patterns = ["*.log", "temp/"]

chunk_size = 512

overlap = 64

[models]

embedding_model = "BAAI/bge-small-en-v1.5"

- Indexing: ~1M LOC in under 2 minutes (with smart exclusions and token-aware chunking)

- Search: Sub-500ms queries on typical codebases

- Index size: ~2x source code size with compression

- Memory: Efficient streaming for large repositories with span-based content extraction

- File filtering: Automatic exclusion of virtual environments and build artifacts

- Output: Clean stdout/stderr separation for reliable piping and scripting

- Token precision: HuggingFace tokenizers for exact model-specific token counting (v0.4.5+)
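Span-based content extraction means keeping byte offsets rather than copies of the text, and reading the bytes back only when a result is shown. The minimal sketch below uses the `byte_start`/`byte_end` fields from the JSONL output format.

It treats `byte_end` as exclusive, which is an assumption. The sample content is made up, and this is an illustration rather than ck's code.

```python
def extract_span(data: bytes, span: dict) -> str:
    """Slice [byte_start, byte_end) out of file bytes and decode it."""
    return data[span["byte_start"]:span["byte_end"]].decode("utf-8", errors="replace")


def line_of(data: bytes, byte_offset: int) -> int:
    """1-based line number of the line containing byte_offset."""
    return data.count(b"\n", 0, byte_offset) + 1


source = b"use std::io;\n\nfn authenticate(user: &str) -> bool {\n    !user.is_empty()\n}\n"
start = source.index(b"fn authenticate")
span = {"byte_start": start, "byte_end": source.index(b"}\n", start) + 1}
print(line_of(source, span["byte_start"]), extract_span(source, span))
```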

Run the comprehensive test suite:

# Full test suite (40+ tests)

./test_ck.sh

# Quick smoke test (14 core tests)

./test_ck_simple.sh

Tests cover grep compatibility, semantic search, index management, file filtering, and more.

ck is actively developed and welcomes contributions:

- Issues: Report bugs, request features

- Code: Submit PRs for bug fixes, new features

- Documentation: Improve examples, guides, tutorials

- Testing: Help test on different codebases and languages

git clone https://github.com/BeaconBay/ck

cd ck

cargo build

cargo test

./target/debug/ck --index test_files/

./target/debug/ck --sem "test query" test_files/

- ✅ grep-compatible CLI with semantic search and file listing flags (-l, -L)

- ✅ FastEmbed integration with BGE models and enhanced model selection

- ✅ File exclusion patterns and glob support

- ✅ Threshold filtering and relevance scoring with visual highlighting

- ✅ Tree-sitter parsing and intelligent chunking (Python, TypeScript, JavaScript, Go, Haskell, Rust, Ruby)

- ✅ Complete code section extraction (--full-section)

- ✅ Enhanced indexing strategy with v3 semantic search optimization

- ✅ Clean stdout/stderr separation for reliable scripting

- ✅ Incremental index updates with hash-based change detection

- ✅ Token-aware chunking with HuggingFace tokenizers (v0.4.5)

- ✅ Model-specific chunk sizing and FastEmbed capacity utilization (v0.4.5)

- ✅ Enhanced --inspect command with token analysis (v0.4.5)

- ✅ Granular indexing progress with file-level and chunk-level progress bars (v0.4.5)

- ✅ Published to crates.io (cargo install ck-search)

- 🚧 Configuration file support

- 🚧 Package manager distributions (brew, apt)

Q: How is this different from grep/ripgrep/silver-searcher?

A: ck keeps grep-style regex search and the common grep flags, and adds semantic understanding on top. Search for "error handling" and find relevant code even when those exact words aren't used.

Q: Does it work offline?

A: Yes, completely offline. The embedding model runs locally with no network calls.

Q: How big are the indexes?

A: Typically 1-3x the size of your source code, depending on content. The .ck/ directory can be safely deleted to reclaim space.

Q: Is it fast enough for large codebases?

A: Yes. Indexing is a one-time cost, and searches are sub-second even on large projects. Regex searches require no indexing and are as fast as grep.

Q: Can I use it in scripts/automation?

A: Absolutely. The --json and --jsonl flags provide structured output suited to automated processing. Use --full-section to get complete functions for AI analysis.

Q: What about privacy/security?

A: Everything runs locally. No code or queries are sent to external services. The embedding model is downloaded once and cached locally.

Q: Where are the embedding models cached?

A: The embedding models (ONNX format) are downloaded and cached in platform-specific directories:

- Linux/macOS: ~/.cache/ck/models/ (or $XDG_CACHE_HOME/ck/models/ if set)

- Windows: %LOCALAPPDATA%\ck\cache\models\

- Fallback: .ck_models/models/ in the current directory (only if no home directory is found)

The models are downloaded automatically on first use and reused for subsequent runs.
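The lookup order above can be written as a small pure function, which is handy for scripts that want to pre-seed or clear the model cache. This is a sketch of the documented order only, and the function name and parameters are hypothetical.

ck's own resolution code may handle edge cases differently. Examples include an empty XDG_CACHE_HOME, or a Windows machine without LOCALAPPDATA, which this sketch sends to the fallback.

```python
import ntpath
import posixpath


def model_cache_dir(platform, env, home, cwd):
    """Return the model cache directory following the documented order.

    platform: "windows", "linux" or "macos"; env: environment mapping;
    home: the user's home directory, or None if none can be found.
    """
    if platform == "windows":
        local = env.get("LOCALAPPDATA")
        if local:
            return ntpath.join(local, "ck", "cache", "models")
    else:
        xdg = env.get("XDG_CACHE_HOME")
        if xdg:
            return posixpath.join(xdg, "ck", "models")
        if home:
            return posixpath.join(home, ".cache", "ck", "models")
    # Fallback: a directory relative to the current working directory.
    join = ntpath.join if platform == "windows" else posixpath.join
    return join(cwd, ".ck_models", "models")


print(model_cache_dir("linux", {}, "/home/ana", "/work"))
print(model_cache_dir("linux", {}, None, "/work"))
```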

Licensed under either of:

- Apache License, Version 2.0 (LICENSE-APACHE)

- MIT License (LICENSE-MIT)

at your option.

Built with:

- Rust - Systems programming language

- FastEmbed - Fast text embeddings

- Tantivy - Full-text search engine

- clap - Command line argument parsing

Inspired by the need for better code search tools in the age of AI-assisted development.

Start finding code by what it does, not what it says.

cargo build --release

./target/release/ck --index .

./target/release/ck --sem "the code you're looking for"


Alternative AI tools for ck

Similar Open Source Tools


quantalogic

QuantaLogic is a ReAct framework for building advanced AI agents that seamlessly integrates large language models with a robust tool system. It aims to bridge the gap between advanced AI models and practical implementation in business processes by enabling agents to understand, reason about, and execute complex tasks through natural language interaction. The framework includes features such as ReAct Framework, Universal LLM Support, Secure Tool System, Real-time Monitoring, Memory Management, and Enterprise Ready components.

mcp-documentation-server

The mcp-documentation-server is a lightweight server application designed to serve documentation files for projects. It provides a simple and efficient way to host and access project documentation, making it easy for team members and stakeholders to find and reference important information. The server supports various file formats, such as markdown and HTML, and allows for easy navigation through the documentation. With mcp-documentation-server, teams can streamline their documentation process and ensure that project information is easily accessible to all involved parties.

zotero-mcp

Zotero MCP seamlessly connects your Zotero research library with AI assistants like ChatGPT and Claude via the Model Context Protocol. It offers AI-powered semantic search, access to library content, PDF annotation extraction, and easy updates. Users can search their library, analyze citations, and get summaries, making it ideal for research tasks. The tool supports multiple embedding models, intelligent search results, and flexible access methods for both local and remote collaboration. With advanced features like semantic search and PDF annotation extraction, Zotero MCP enhances research efficiency and organization.

mcp-omnisearch

mcp-omnisearch is a Model Context Protocol (MCP) server that acts as a unified gateway to multiple search providers and AI tools. It integrates Tavily, Perplexity, Kagi, Jina AI, Brave, Exa AI, and Firecrawl to offer a wide range of search, AI response, content processing, and enhancement features through a single interface. The server provides powerful search capabilities, AI response generation, content extraction, summarization, web scraping, structured data extraction, and more. It is designed to work flexibly with the API keys available, enabling users to activate only the providers they have keys for and easily add more as needed.

wikipedia-mcp

The Wikipedia MCP Server is a Model Context Protocol (MCP) server that provides real-time access to Wikipedia information for Large Language Models (LLMs). It allows AI assistants to retrieve accurate and up-to-date information from Wikipedia to enhance their responses. The server offers features such as searching Wikipedia, retrieving article content, getting article summaries, extracting specific sections, discovering links within articles, finding related topics, supporting multiple languages and country codes, optional caching for improved performance, and compatibility with Google ADK agents and other AI frameworks. Users can install the server using pipx, Smithery, PyPI, virtual environment, or from source. The server can be run with various options for transport protocol, language, country/locale, caching, access token, and more. It also supports Docker and Kubernetes deployment. The server provides MCP tools for interacting with Wikipedia, such as searching articles, getting article content, summaries, sections, links, coordinates, related topics, and extracting key facts. It also supports country/locale codes and language variants for languages like Chinese, Serbian, Kurdish, and Norwegian. The server includes example prompts for querying Wikipedia and provides MCP resources for interacting with Wikipedia through MCP endpoints. The project structure includes main packages, API implementation, core functionality, utility functions, and a comprehensive test suite for reliability and functionality testing.

ocode

OCode is a sophisticated terminal-native AI coding assistant that provides deep codebase intelligence and autonomous task execution. It seamlessly works with local Ollama models, bringing enterprise-grade AI assistance directly to your development workflow. OCode offers core capabilities such as terminal-native workflow, deep codebase intelligence, autonomous task execution, direct Ollama integration, and an extensible plugin layer. It can perform tasks like code generation & modification, project understanding, development automation, data processing, system operations, and interactive operations. The tool includes specialized tools for file operations, text processing, data processing, system operations, development tools, and integration. OCode enhances conversation parsing, offers smart tool selection, and provides performance improvements for coding tasks.

memento-mcp

Memento MCP is a scalable, high-performance knowledge graph memory system designed for LLMs. It offers semantic retrieval, contextual recall, and temporal awareness to any LLM client supporting the model context protocol. The system is built on core concepts like entities and relations, utilizing Neo4j as its storage backend for unified graph and vector search capabilities. With advanced features such as semantic search, temporal awareness, confidence decay, and rich metadata support, Memento MCP provides a robust solution for managing knowledge graphs efficiently and effectively.

tunacode

TunaCode CLI is an AI-powered coding assistant that provides a command-line interface for developers to enhance their coding experience. It offers features like model selection, parallel execution for faster file operations, and various commands for code management. The tool aims to improve coding efficiency and provide a seamless coding environment for developers.

llm-context.py

LLM Context is a tool designed to assist developers in quickly injecting relevant content from code/text projects into Large Language Model chat interfaces. It leverages `.gitignore` patterns for smart file selection and offers a streamlined clipboard workflow using the command line. The tool also provides direct integration with Large Language Models through the Model Context Protocol (MCP). LLM Context is optimized for code repositories and collections of text/markdown/html documents, making it suitable for developers working on projects that fit within an LLM's context window. The tool is under active development and aims to enhance AI-assisted development workflows by harnessing the power of Large Language Models.

LEANN

LEANN is an innovative vector database that democratizes personal AI, transforming your laptop into a powerful RAG system that can index and search through millions of documents using 97% less storage than traditional solutions without accuracy loss. It achieves this through graph-based selective recomputation and high-degree preserving pruning, computing embeddings on-demand instead of storing them all. LEANN allows semantic search of file system, emails, browser history, chat history, codebase, or external knowledge bases on your laptop with zero cloud costs and complete privacy. It is a drop-in semantic search MCP service fully compatible with Claude Code, enabling intelligent retrieval without changing your workflow.

MCPSpy

MCPSpy is a command-line tool leveraging eBPF technology to monitor Model Context Protocol (MCP) communication at the kernel level. It provides real-time visibility into JSON-RPC 2.0 messages exchanged between MCP clients and servers, supporting Stdio and HTTP transports. MCPSpy offers security analysis, debugging, performance monitoring, compliance assurance, and learning opportunities for understanding MCP communications. The tool consists of eBPF programs, an eBPF loader, an HTTP session manager, an MCP protocol parser, and output handlers for console display and JSONL output.

supergateway

Supergateway is a tool that allows running MCP stdio-based servers over SSE (Server-Sent Events) with one command. It is useful for remote access, debugging, or connecting to SSE-based clients when your MCP server only speaks stdio. The tool supports running in SSE to Stdio mode as well, where it connects to a remote SSE server and exposes a local stdio interface for downstream clients. Supergateway can be used with ngrok to share local MCP servers with remote clients and can also be run in a Docker containerized deployment. It is designed with modularity in mind, ensuring compatibility and ease of use for AI tools exchanging data.

code_puppy

Code Puppy is an AI-powered code generation agent designed to understand programming tasks, generate high-quality code, and explain its reasoning. It supports multi-language code generation, interactive CLI, and detailed code explanations. The tool requires Python 3.9+ and API keys for various models like GPT, Google's Gemini, Cerebras, and Claude. It also integrates with MCP servers for advanced features like code search and documentation lookups. Users can create custom JSON agents for specialized tasks and access a variety of tools for file management, code execution, and reasoning sharing.

code-graph-rag

Graph-Code is an accurate Retrieval-Augmented Generation (RAG) system that analyzes multi-language codebases using Tree-sitter. It builds comprehensive knowledge graphs, enabling natural language querying of codebase structure and relationships, along with editing capabilities. The system supports various languages, uses Tree-sitter for parsing, Memgraph for storage, and AI models for natural language to Cypher translation. It offers features like code snippet retrieval, advanced file editing, shell command execution, interactive code optimization, reference-guided optimization, dependency analysis, and more. The architecture consists of a multi-language parser and an interactive CLI for querying the knowledge graph.

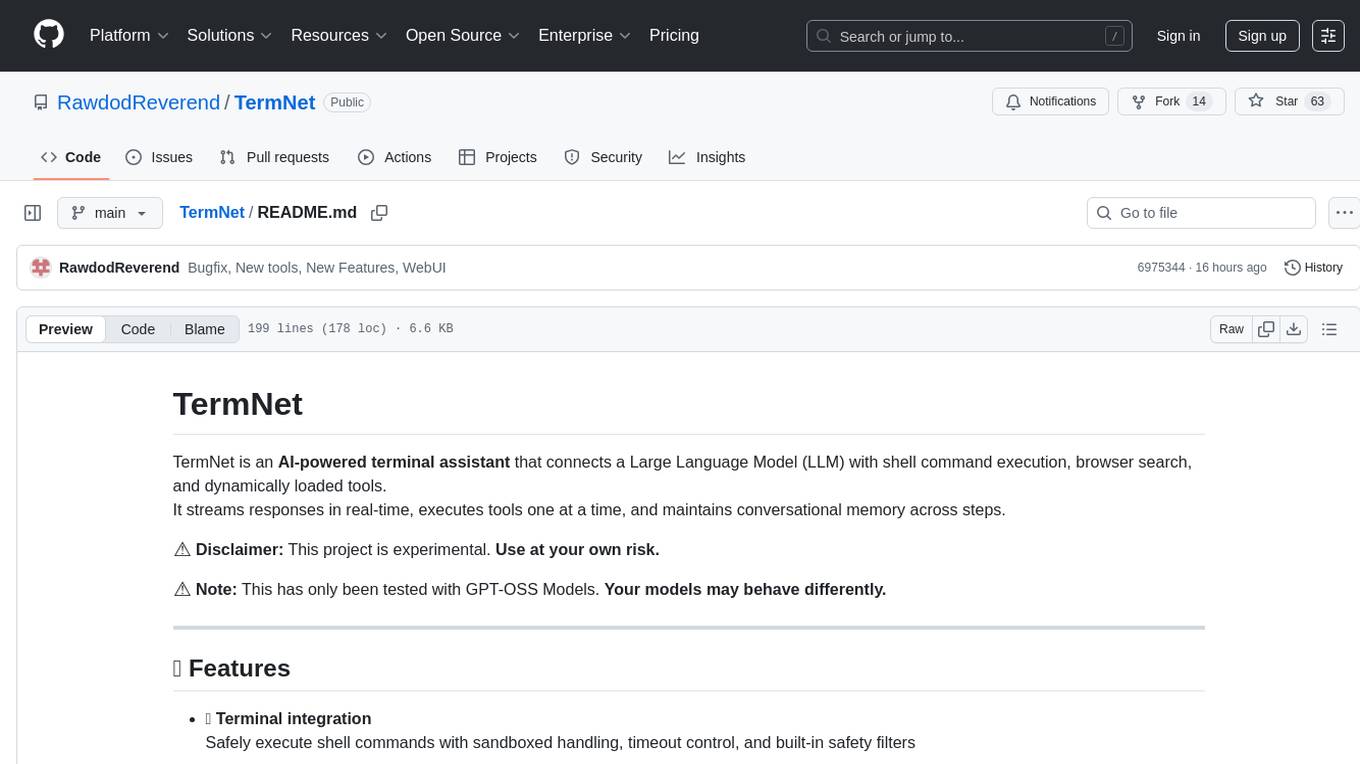

TermNet

TermNet is an AI-powered terminal assistant that connects a Large Language Model (LLM) with shell command execution, browser search, and dynamically loaded tools. It streams responses in real-time, executes tools one at a time, and maintains conversational memory across steps. The project features terminal integration for safe shell command execution, dynamic tool loading without code changes, browser automation powered by Playwright, WebSocket architecture for real-time communication, a memory system to track planning and actions, streaming LLM output integration, a safety layer to block dangerous commands, dual interface options, a notification system, and scratchpad memory for persistent note-taking. The architecture includes a multi-server setup with servers for WebSocket, browser automation, notifications, and web UI. The project structure consists of core backend files, various tools like web browsing and notification management, and servers for browser automation and notifications. Installation requires Python 3.9+, Ollama, and Chromium, with setup steps provided in the README. The tool can be used via the launcher for managing components or directly by starting individual servers. Additional tools can be added by registering them in `toolregistry.json` and implementing them in Python modules. Safety notes highlight the blocking of dangerous commands, allowed risky commands with warnings, and the importance of monitoring tool execution and setting appropriate timeouts.

For similar tasks


LLM4SE

The collection is actively updated with the help of an internal literature search engine.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.