Segment Anything

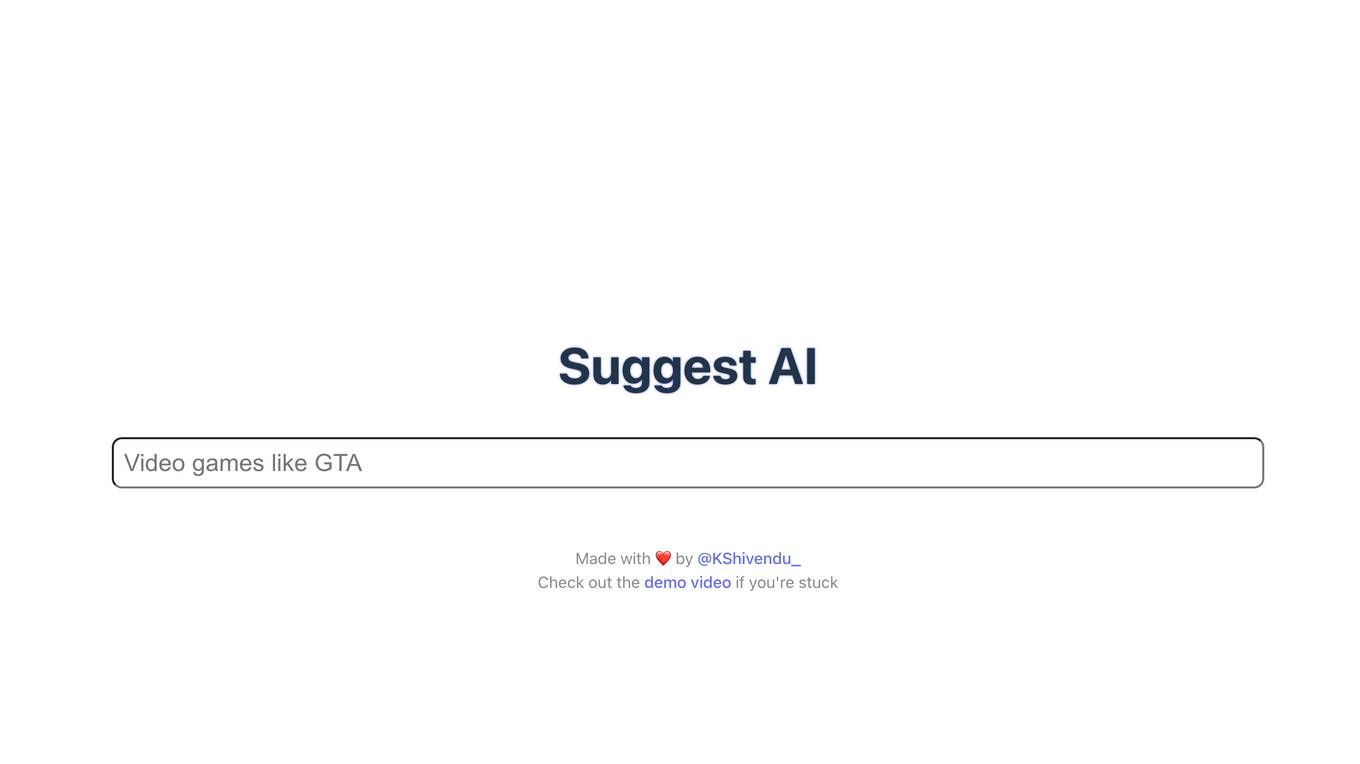

Segment Anything: The AI Model That Can Cut Out Any Object, in Any Image, With a Single Click

Description:

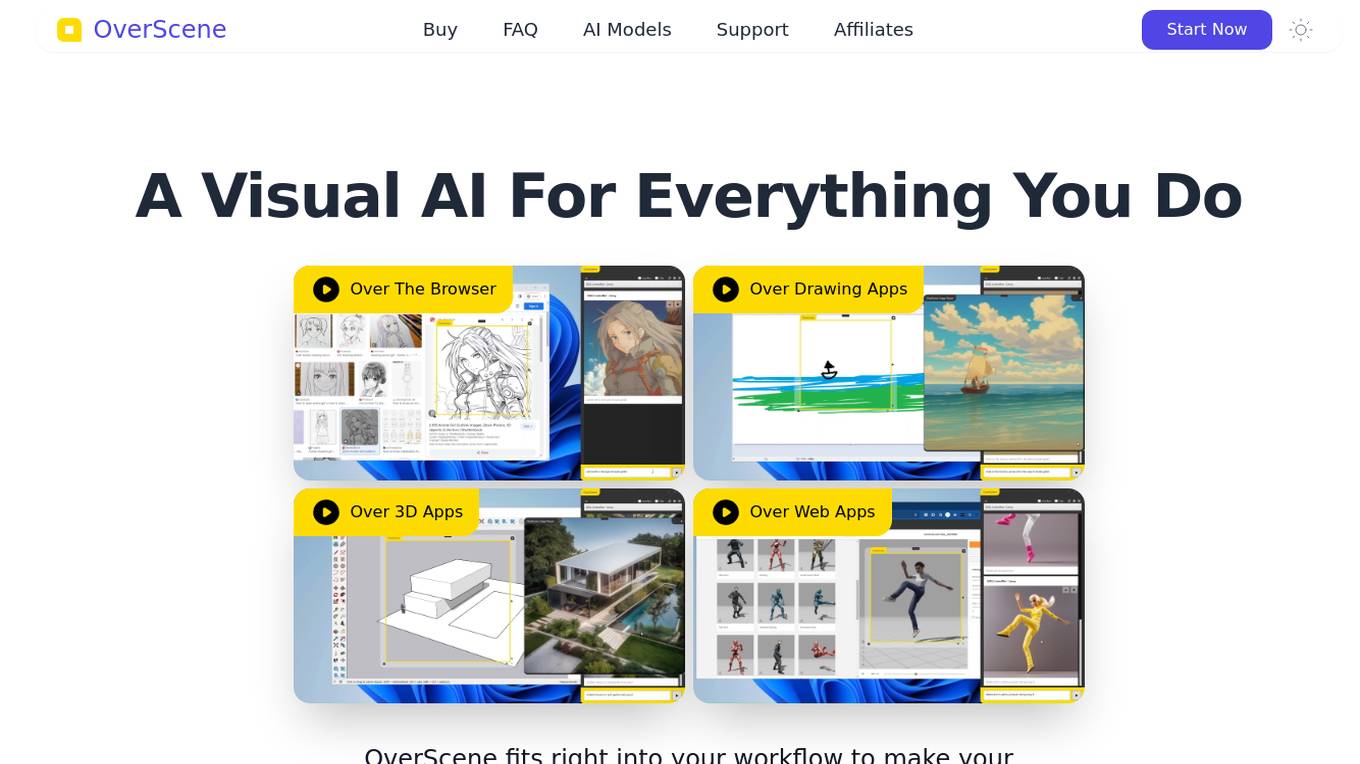

The Segment Anything Model (SAM) is an AI model from Meta AI that can "cut out" any object in any image with a single click. It is a promptable segmentation system that generalizes zero-shot to unfamiliar objects and images, with no additional training required. Its promptable design enables flexible integration with other systems, and its extensible outputs (the predicted masks) can be used as inputs to other AI systems.

Features

- Can segment any object, in any image, with a single click

- Has zero-shot generalization to unfamiliar objects and images

- Does not require additional training

- Promptable design enables flexible integration with other systems

- Extensible outputs can be used as inputs to other AI systems

Advantages

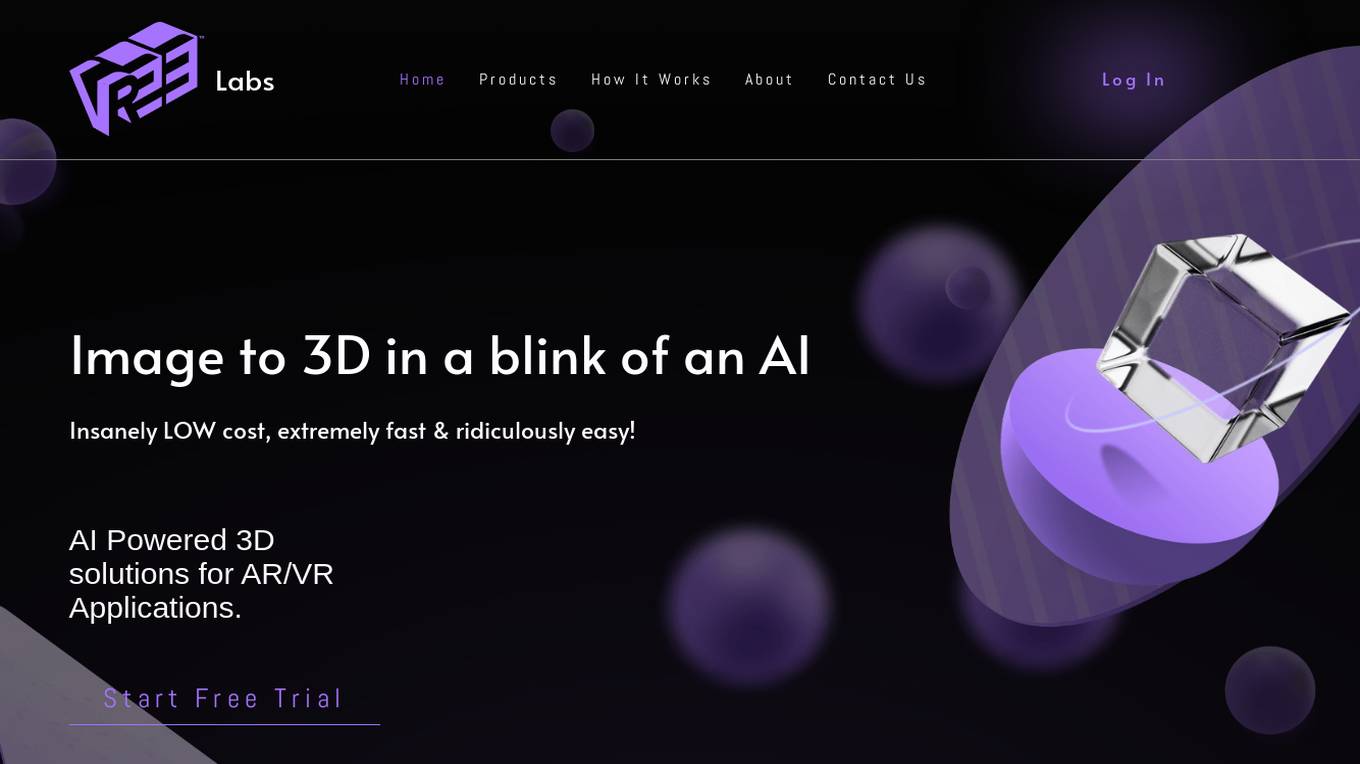

- Can be used to segment objects for a variety of tasks, such as image editing, object tracking, and 3D modeling

- Can be used to create new and innovative AI applications

- Is easy to use and integrate with other systems

- Is efficient: once an image embedding has been computed, each new prompt is decoded fast enough for interactive, near-real-time use

- Is open source and available to everyone

Disadvantages

- Can be computationally expensive to train

- May not be able to segment all objects accurately

- May not be able to handle complex scenes

Frequently Asked Questions

Q: What types of prompts are supported?

A: Foreground/background points, bounding boxes, and masks. Text prompts are explored in the paper, but that capability has not been released.
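As a rough sketch of how these prompt types combine, the Python below collects clicks and an optional box into one hypothetical `Prompt` object. The keyword names it emits (`point_coords`, `point_labels`, `box`) follow `SamPredictor.predict` in Meta's `segment_anything` package, where label 1 marks a foreground click, label 0 a background click, and the box is given as (x0, y0, x1, y1). The real method expects NumPy arrays rather than lists, and mask prompts (passed there as `mask_input`, typically low-resolution logits from an earlier prediction) are left out to keep the example short. The class and its methods are illustrative only, not part of the library.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

FOREGROUND = 1  # click on the object to include
BACKGROUND = 0  # click on a region to exclude


@dataclass
class Prompt:
    """Hypothetical container for one SAM-style prompt (not a library class)."""
    points: List[Tuple[float, float]] = field(default_factory=list)  # (x, y) pixel coords
    labels: List[int] = field(default_factory=list)                  # one label per point
    box: Optional[Tuple[float, float, float, float]] = None           # (x0, y0, x1, y1)

    def add_click(self, x: float, y: float, foreground: bool = True) -> None:
        self.points.append((x, y))
        self.labels.append(FOREGROUND if foreground else BACKGROUND)

    def as_predict_kwargs(self) -> dict:
        """Map onto SamPredictor.predict keyword names (lists here; the library uses NumPy)."""
        if len(self.points) != len(self.labels):
            raise ValueError("each point needs exactly one label")
        if self.box is not None:
            x0, y0, x1, y1 = self.box
            if x1 <= x0 or y1 <= y0:
                raise ValueError("box must be (x0, y0, x1, y1) with x1 > x0 and y1 > y0")
        return {
            "point_coords": [list(p) for p in self.points] or None,  # None when no clicks
            "point_labels": list(self.labels) or None,
            "box": list(self.box) if self.box is not None else None,
        }


# Usage: a box around the object, one click inside it, one click to exclude.
prompt = Prompt(box=(40, 30, 200, 180))
prompt.add_click(120, 100)
prompt.add_click(60, 50, foreground=False)
print(prompt.as_predict_kwargs())
```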

Q: What is the structure of the model?

A: A ViT-H image encoder that runs once per image and outputs an image embedding; a prompt encoder that embeds input prompts such as clicks or boxes; and a lightweight transformer-based mask decoder that predicts object masks from the image embedding and the prompt embeddings.
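Running the heavy encoder once per image is what makes repeated clicks cheap. A minimal sketch of that split, assuming toy stand-in functions in place of the real networks: the hypothetical `PromptableSegmenter` caches one embedding per image and runs only the decoder for each new prompt. The `set_image` name loosely echoes `SamPredictor.set_image` in the `segment_anything` package, but this class is an illustration, not that API.

```python
from typing import Callable, Dict, List, Tuple

Embedding = Tuple[float, ...]
Point = Tuple[float, float]


class PromptableSegmenter:
    """Hypothetical sketch: heavy encoder once per image, light decoder once per prompt."""

    def __init__(self,
                 encoder: Callable[[str], Embedding],
                 decoder: Callable[[Embedding, List[Point]], str]) -> None:
        self.encoder = encoder  # stand-in for the ViT-H image encoder
        self.decoder = decoder  # stand-in for the prompt encoder + mask decoder
        self._embeddings: Dict[str, Embedding] = {}
        self.encoder_calls = 0

    def set_image(self, image_id: str) -> Embedding:
        # Run the expensive encoder only the first time an image is seen.
        if image_id not in self._embeddings:
            self._embeddings[image_id] = self.encoder(image_id)
            self.encoder_calls += 1
        return self._embeddings[image_id]

    def segment(self, image_id: str, clicks: List[Point]) -> str:
        # Every new prompt reuses the cached embedding; only the decoder runs.
        return self.decoder(self.set_image(image_id), clicks)


# Toy stand-ins so the sketch runs; they do no real segmentation.
def toy_encoder(image_id: str) -> Embedding:
    return (float(len(image_id)),)


def toy_decoder(embedding: Embedding, clicks: List[Point]) -> str:
    return f"mask from {len(clicks)} click(s)"


seg = PromptableSegmenter(toy_encoder, toy_decoder)
for clicks in ([(10, 20)], [(10, 20), (30, 40)], [(50, 60)]):
    print(seg.segment("photo.jpg", clicks))
print("encoder runs:", seg.encoder_calls)
```

Three prompts on the same image trigger a single encoder run; a new image triggers another.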

Q: What platforms does the model run on?

A: The image encoder is implemented in PyTorch and requires a GPU for efficient inference. The prompt encoder and mask decoder can run directly in PyTorch, or be converted to ONNX and run efficiently on CPU or GPU on any platform that supports ONNX Runtime.

Q: How big is the model?

A: The image encoder has 632M parameters. The prompt encoder and mask decoder together have 4M parameters.
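Put in numbers, the split is very lopsided. The arithmetic below uses only the two parameter counts above; the storage figures are an added back-of-the-envelope estimate of raw weight size at 4 bytes (float32) or 2 bytes (float16) per parameter, ignoring file-format overhead and activation memory. The helper name `weight_size_gb` is hypothetical.

```python
ENCODER_PARAMS = 632_000_000       # image encoder (ViT-H), from the FAQ
PROMPT_DECODER_PARAMS = 4_000_000  # prompt encoder + mask decoder, from the FAQ
BYTES_PER_PARAM = {"float32": 4, "float16": 2}


def weight_size_gb(params: int, dtype: str) -> float:
    """Raw weight storage in gigabytes (10**9 bytes); ignores file-format overhead."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


total = ENCODER_PARAMS + PROMPT_DECODER_PARAMS
light_share = PROMPT_DECODER_PARAMS / total  # fraction held by the lightweight part

print(f"total parameters: {total / 1e6:.0f}M")
print(f"prompt encoder + mask decoder share: {light_share:.2%}")
print(f"encoder weights (float32): {weight_size_gb(ENCODER_PARAMS, 'float32'):.2f} GB")
print(f"decoder weights (float32): {weight_size_gb(PROMPT_DECODER_PARAMS, 'float32') * 1000:.0f} MB")
```

This works out to 636M parameters in total, with the prompt encoder and mask decoder holding roughly 0.63% of them: about 16 MB of float32 weights versus about 2.5 GB for the encoder, which is consistent with the lightweight part being the one that can run in a browser.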

Q: How long does inference take?

A: The image encoder takes ~0.15 seconds on an NVIDIA A100 GPU. The prompt encoder and mask decoder take ~50 ms on CPU in the browser using multithreaded SIMD execution.
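A back-of-the-envelope model of one interactive session shows why the embedding is computed once and reused. It assumes the two timings above can simply be added, which is only indicative because they come from different hardware (a GPU for the encoder, a browser CPU for the decoder), and it ignores network transfer, image loading, and mask post-processing. The function name and the `reuse_embedding` switch are hypothetical.

```python
ENCODER_S = 0.15  # image encoder on an NVIDIA A100 (from the FAQ)
DECODER_S = 0.05  # prompt encoder + mask decoder on browser CPU (from the FAQ)


def session_latency(clicks: int, reuse_embedding: bool = True) -> float:
    """Hypothetical estimate of total compute time (seconds) for one image and `clicks` prompts."""
    if clicks < 0:
        raise ValueError("clicks must be non-negative")
    if reuse_embedding:
        return ENCODER_S + clicks * DECODER_S  # encode once, decode per click
    return clicks * (ENCODER_S + DECODER_S)    # naive: re-encode on every click


for n in (1, 5, 10):
    print(n, round(session_latency(n), 2), round(session_latency(n, reuse_embedding=False), 2))
```

For 10 clicks the estimate is about 0.65 s with a reused embedding versus about 2.0 s if the encoder were rerun for every click; for a single click the two are the same.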

Alternative AI tools for Segment Anything

For similar tasks

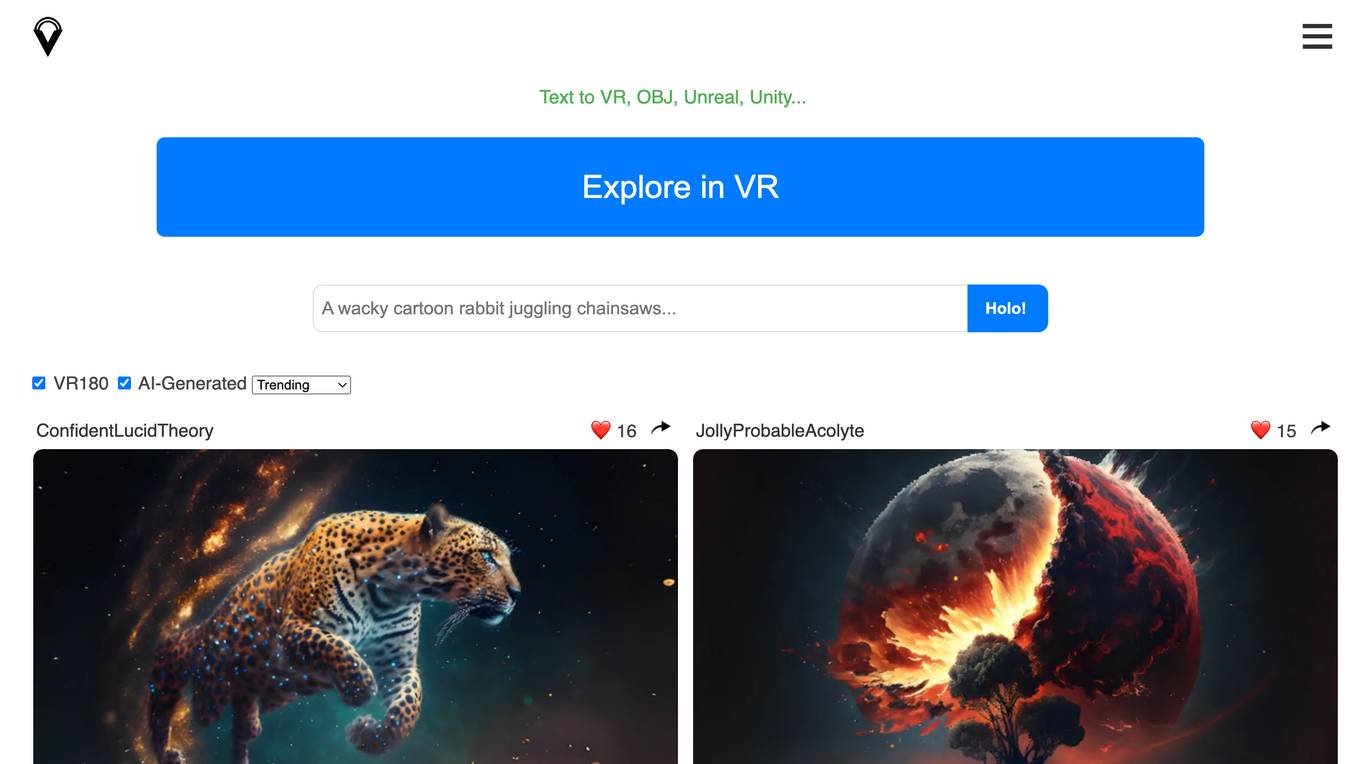

Holovolo

Immersive volumetric VR180 videos and photos, and 3D stable diffusion, for Quest and WebVR