Best AI tools for <Use LLM>

20 - AI Tool Sites

Awan LLM

Awan LLM is an LLM inference API platform for power users and developers that offers unlimited tokens, unrestricted model use, and cost-effective pricing. Users pay per month instead of per token. The platform's use cases include an AI assistant, AI agents, roleplay with AI companions, data processing, code completion, and building profitable AI-powered applications.

Shieldbase

Shieldbase is an AI-powered enterprise search tool designed to provide secure and efficient search capabilities for businesses. It utilizes advanced artificial intelligence algorithms to index and retrieve information from various data sources within an organization, ensuring quick and accurate search results. With a focus on security, Shieldbase offers encryption and access control features to protect sensitive data. The platform is user-friendly and customizable, making it easy for businesses to implement and integrate into their existing systems. Shieldbase enhances productivity by enabling employees to quickly find the information they need, ultimately improving decision-making processes and overall operational efficiency.

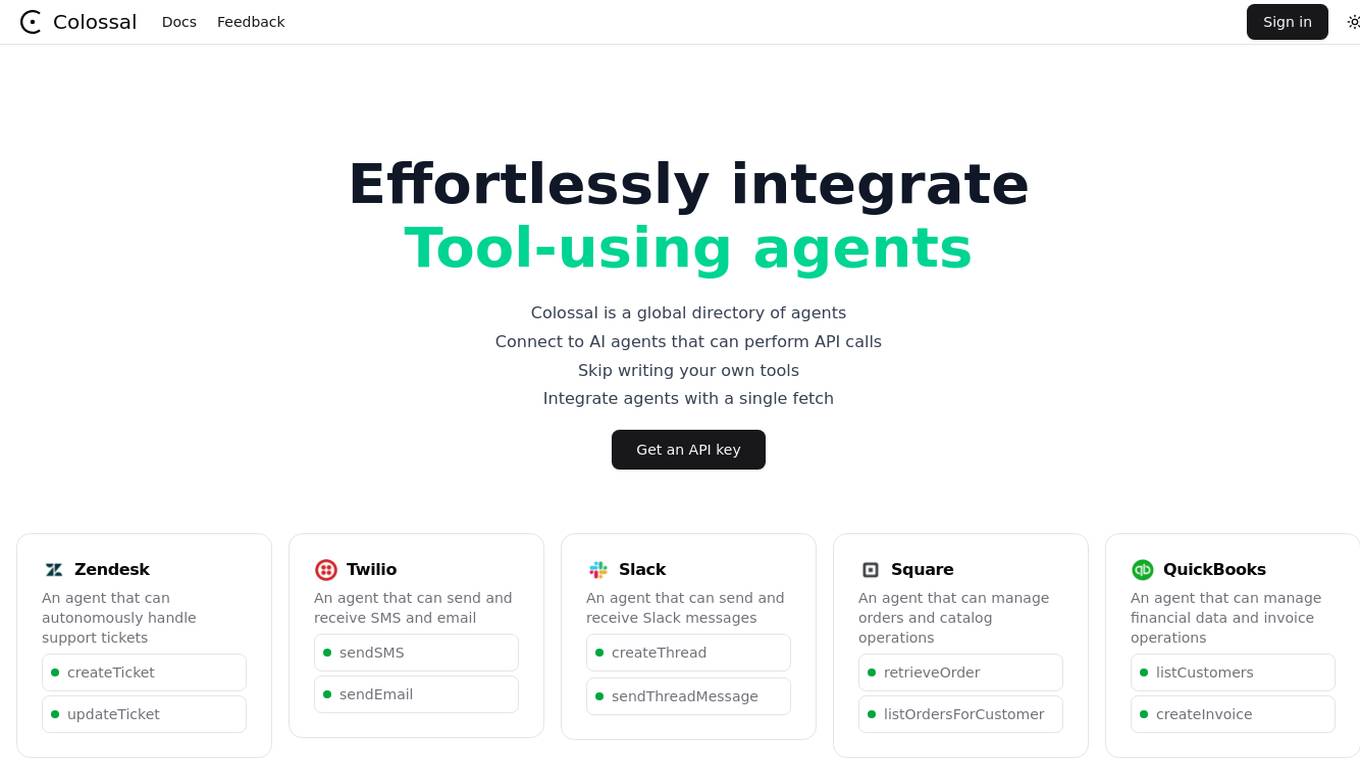

Colossal

Colossal is a global directory of AI agents that allows users to effortlessly integrate tool-using agents for various tasks. Users can connect to AI agents that can perform API calls, skip writing their own tools, and integrate agents with a single fetch. The platform offers agents for support tickets, SMS and email handling, Slack messages, order and catalog operations, financial data management, and more.

Notification Harbor

Notification Harbor is an email marketing platform that uses Large Language Models (LLMs) to help businesses create and send more effective email campaigns. With Notification Harbor, businesses can use LLMs to generate personalized email content, optimize subject lines, and even create entire email campaigns from scratch. Notification Harbor is designed to make email marketing easier and more effective for businesses of all sizes.

AnythingLLM

AnythingLLM is an all-in-one AI application designed for everyone. It offers a suite of tools for working with LLMs (Large Language Models), documents, and agents in a fully private environment. Users can install AnythingLLM on their desktop for Windows, macOS, and Linux, with one-click installation and secure, fully private operation without internet connectivity. The application supports custom models, including enterprise models like GPT-4, custom fine-tuned models, and open-source models like Llama and Mistral. AnythingLLM allows users to work with various document formats, such as PDFs and Word documents, providing tailored solutions with locally running defaults for privacy.

Ubdroid AI Answer Engine

Ubdroid AI Answer Engine is an AI-powered tool that utilizes various open-source LLMs to provide answers to user queries. It works by processing user queries and fetching relevant information from these LLMs. The accuracy of the answers depends on the quality and relevance of the data provided by the LLMs. The free version of the tool has a request limit of 10 requests per minute. If a model is not working, users can select another model.
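A client that calls a service with a limit like the 10 requests per minute mentioned above can throttle itself before sending. The following is a minimal sketch of a sliding-window limiter, not Ubdroid's own mechanism; the class name `SlidingWindowLimiter` and its methods are hypothetical, and the injectable `clock` parameter exists only to make the behavior easy to check.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_calls` requests in any `period`-second window.

    Hypothetical helper for illustration; the defaults mirror the
    10-requests-per-minute free-tier limit described in the text.
    """

    def __init__(self, max_calls=10, period=60.0, clock=time.monotonic):
        self.max_calls = max_calls
        self.period = period
        self.clock = clock
        self.calls = deque()  # timestamps of recent requests, oldest first

    def wait_time(self):
        """Return seconds to wait before the next request (0.0 if allowed now)."""
        now = self.clock()
        # Drop timestamps that have left the window.
        while self.calls and now - self.calls[0] >= self.period:
            self.calls.popleft()
        if len(self.calls) < self.max_calls:
            return 0.0
        # The window frees up when the oldest recorded call expires.
        return self.period - (now - self.calls[0])

    def record(self):
        """Record that a request was just sent."""
        self.calls.append(self.clock())
```

In use, a caller would check `wait_time()`, sleep for that long if it is non-zero, send the request, and then call `record()`.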

Candor

Candor is an AI-powered team feedback platform that helps businesses improve team culture and performance. It offers a range of features including team retrospectives, check-ins, anonymous feedback, 1:1s, and 360 surveys. Candor's AI-driven insights help businesses identify and address issues within their teams, and its user-friendly interface makes it easy to set up and use. Candor is a valuable tool for any business looking to improve team communication, collaboration, and productivity.

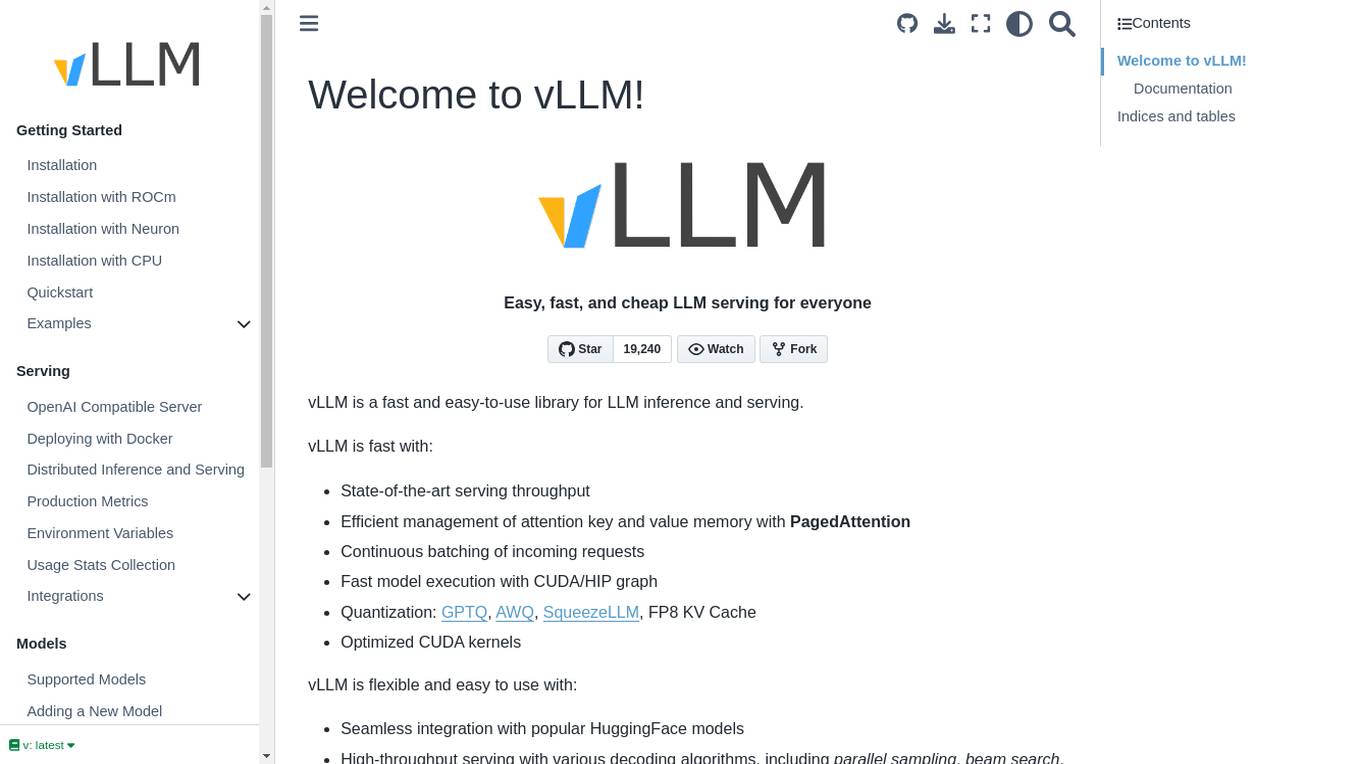

vLLM

vLLM is a fast and easy-to-use library for LLM inference and serving. It offers state-of-the-art serving throughput, efficient management of attention key and value memory, continuous batching of incoming requests, fast model execution with CUDA/HIP graphs, and various decoding algorithms. It integrates seamlessly with popular Hugging Face models and supports high-throughput serving, tensor parallelism, and streaming outputs. It runs on NVIDIA and AMD GPUs and supports prefix caching and multi-LoRA. vLLM is designed to provide fast and efficient LLM serving for everyone.

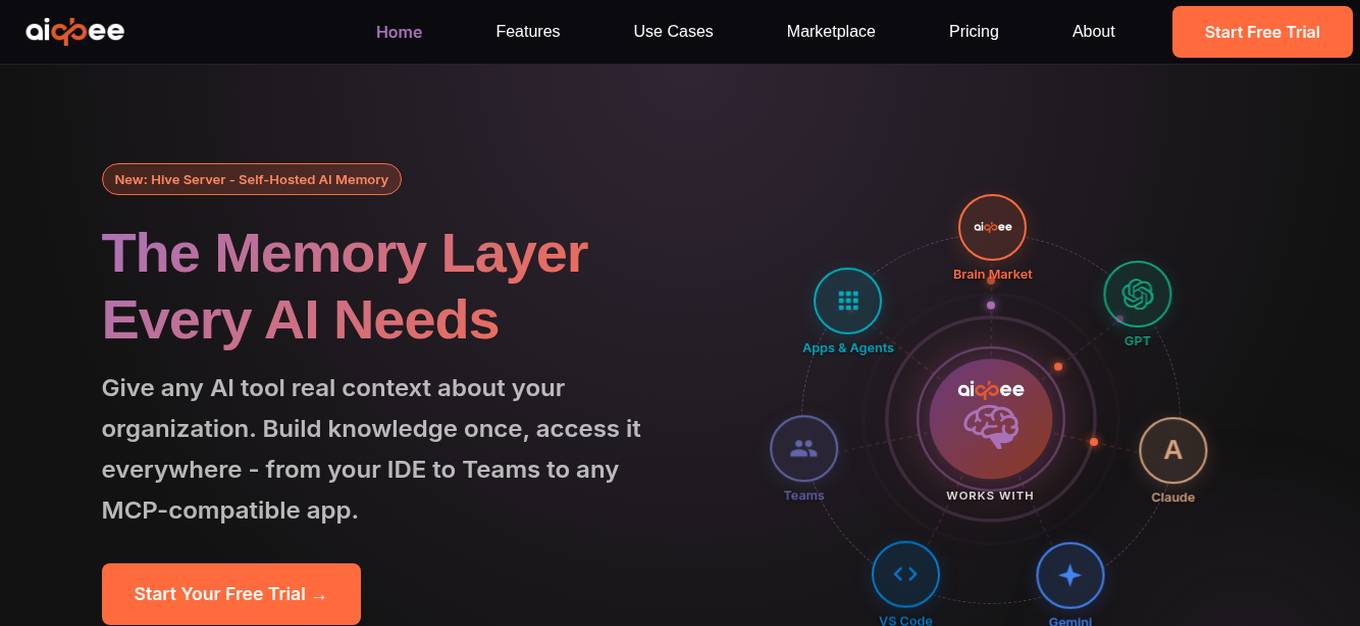

Aiqbee

Aiqbee is a Universal AI Memory Platform designed to provide enterprise knowledge for any AI tool or application. It allows users to centralize and curate organizational knowledge into AI-ready Brains, enabling seamless access across various platforms. With features like GraphRAG technology, Microsoft Teams integration, and MCP compatibility, Aiqbee aims to enhance AI understanding and usage within organizations. The platform offers control over AI usage, protection of sensitive data, and shared token pool economics. Aiqbee addresses the common challenge of insufficient context in enterprise AI projects by providing a vendor-agnostic solution for building, connecting, and utilizing AI knowledge effectively.

LM Studio

LM Studio is an AI tool designed for discovering, downloading, and running local LLMs (Large Language Models). Users can run LLMs on their laptops offline, use models through an in-app Chat UI or a local server, download compatible model files from Hugging Face repositories, and discover new LLMs. The tool ensures privacy by not collecting data or monitoring user actions, making it suitable for personal and business use. LM Studio supports various ggml models from Hugging Face, such as Llama, MPT, and StarCoder, with minimum hardware and software requirements specified for different platforms.

Prompt Hippo

Prompt Hippo is an AI tool designed as a side-by-side LLM prompt testing suite to ensure the robustness, reliability, and safety of prompts. It saves time by streamlining the process of testing LLM prompts and allows users to test custom agents and optimize them for production. With a focus on science and efficiency, Prompt Hippo helps users identify the best prompts for their needs.

Abacus.AI

Abacus.AI is the world's first AI platform where AI, not humans, builds Applied AI agents and systems at scale. Using generative AI and other novel neural net techniques, AI can build LLM apps, gen AI agents, and predictive applied AI systems at scale.

FreedomGPT

FreedomGPT is a powerful AI platform that provides access to a wide range of AI models without the need for technical knowledge. With its user-friendly interface and offline capabilities, FreedomGPT empowers users to explore and utilize AI for various tasks and applications. The platform is committed to privacy and offers an open-source approach, encouraging collaboration and innovation within the AI community.

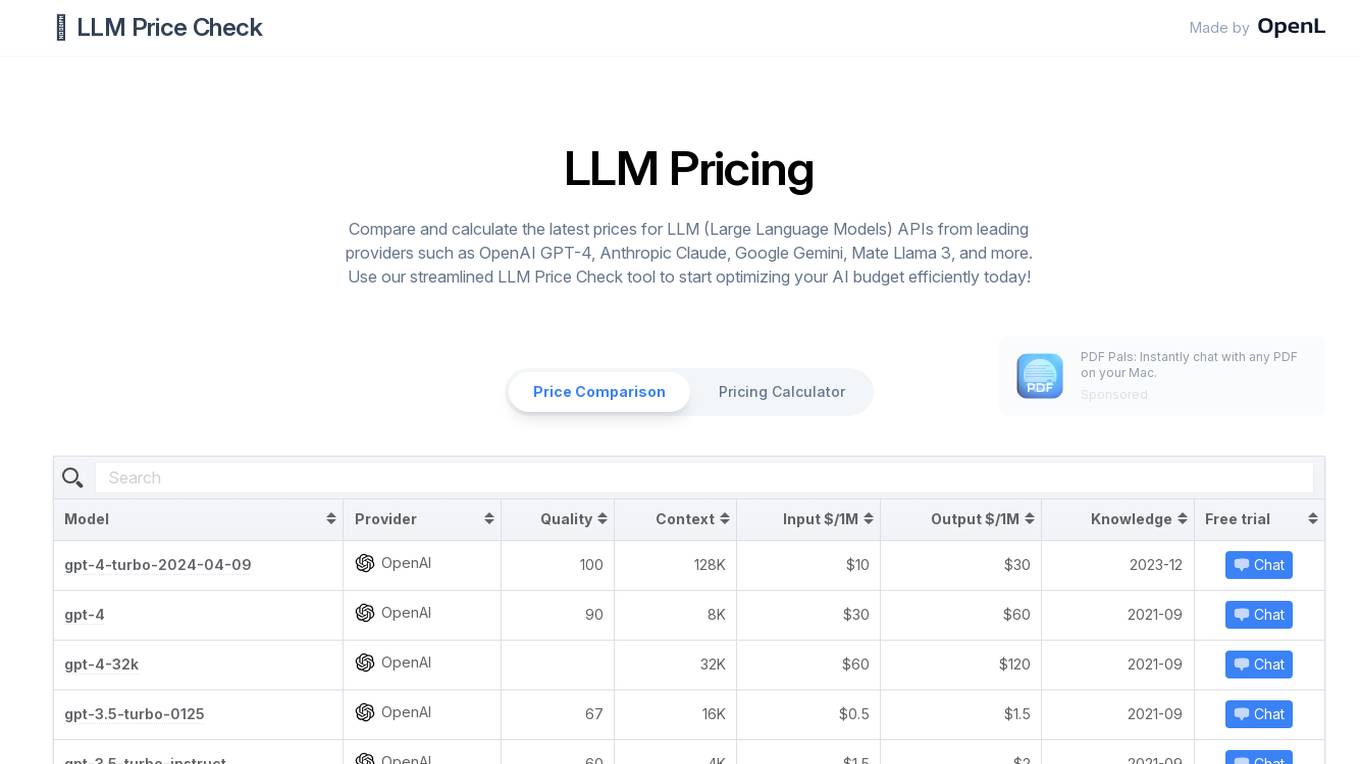

LLM Price Check

LLM Price Check is an AI tool designed to compare and calculate the latest prices for Large Language Model (LLM) APIs from leading providers such as OpenAI, Anthropic, Google, and more. Users can optimize their AI budget by comparing pricing, sorting by various parameters, and searching for specific models. The tool provides a comprehensive overview of pricing information to help users make informed decisions when selecting an LLM API provider.
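The kind of comparison described above can be sketched in a few lines. This is an illustration only, not LLM Price Check's implementation: it assumes prices are quoted separately for input and output tokens per million tokens, and the `Price` class, the function names, and any model names or prices passed in are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Price:
    """Hypothetical price entry; amounts are per one million tokens."""
    name: str
    input_per_million: float
    output_per_million: float


def request_cost(price, input_tokens, output_tokens):
    """Estimate the cost of a workload with the given token counts."""
    total = (input_tokens * price.input_per_million
             + output_tokens * price.output_per_million)
    return total / 1_000_000


def rank_by_cost(prices, input_tokens, output_tokens):
    """Return the price entries sorted from cheapest to most expensive."""
    return sorted(prices,
                  key=lambda p: request_cost(p, input_tokens, output_tokens))
```

Because input and output tokens are priced differently, the cheapest option can change with the workload's input-to-output ratio, which is why the ranking takes both counts as arguments.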

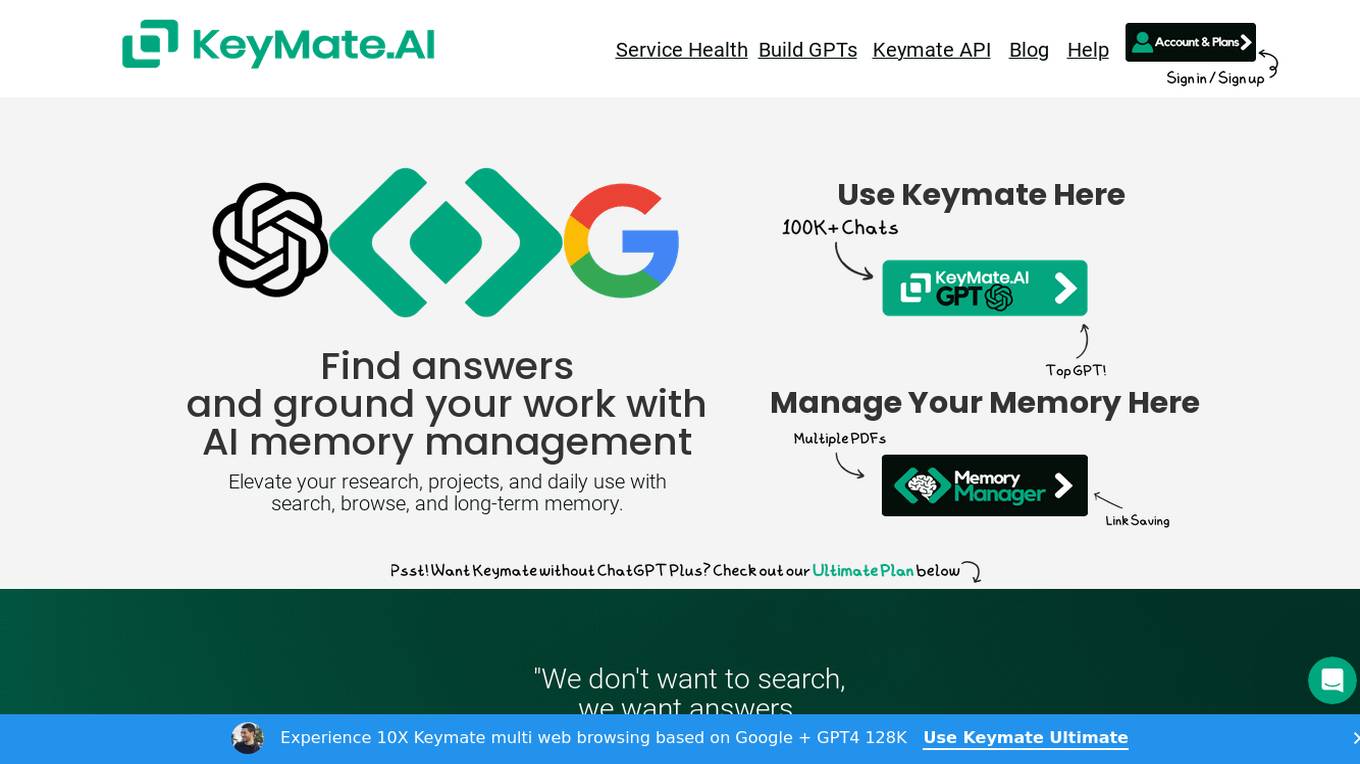

Keymate.AI

Keymate.AI is an AI application that allows users to build GPTs with advanced search, browse, and long-term memory capabilities. It offers a personalized long-term memory on ChatGPT, parallel search functionality, and privacy features using Google API. Keymate.AI aims to elevate research, projects, and daily tasks by providing efficient AI memory management and real-time data retrieval from the web.

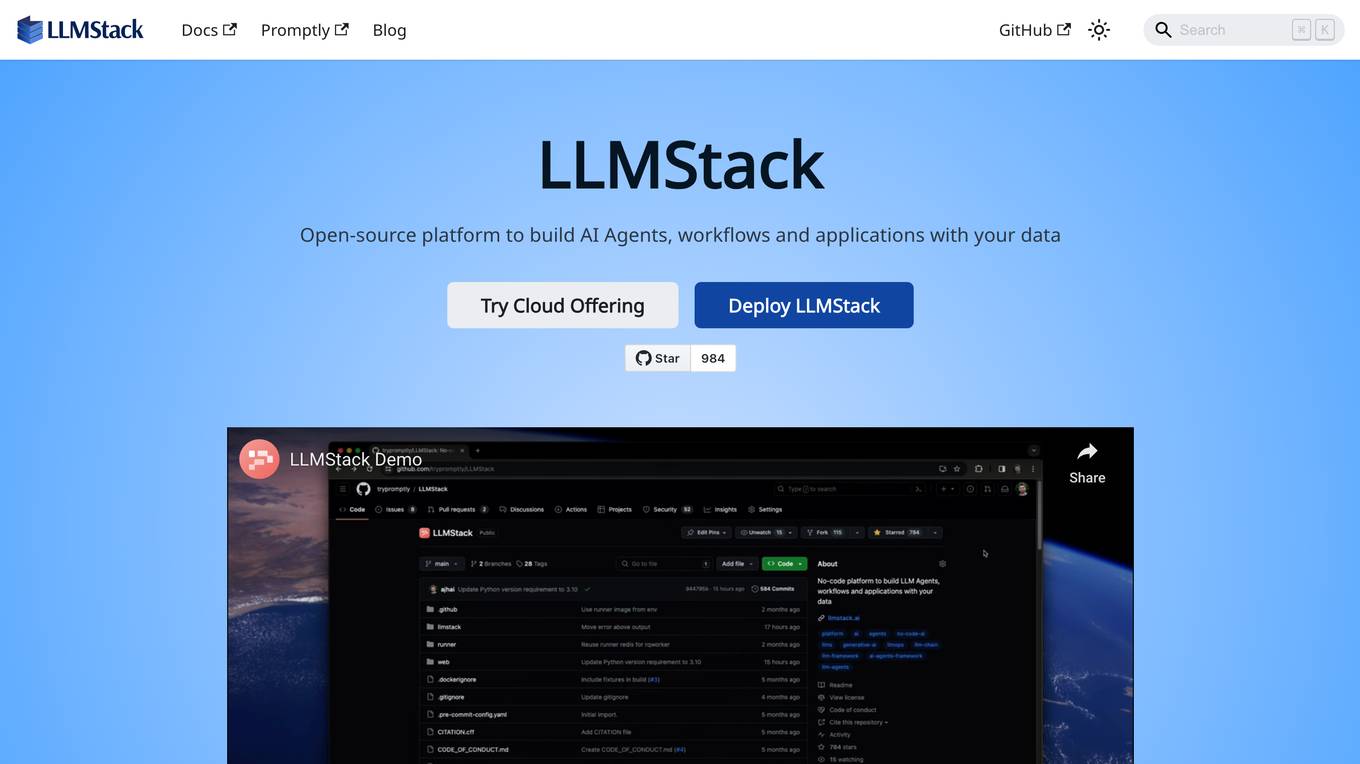

LLMStack

LLMStack is an open-source platform that allows users to build AI Agents, workflows, and applications using their own data. It is a no-code AI app builder that supports model chaining from major providers like OpenAI, Cohere, Stability AI, and Hugging Face. Users can import various data sources such as Web URLs, PDFs, audio files, and more to enhance generative AI applications and chatbots. With a focus on collaboration, LLMStack enables users to share apps publicly or restrict access, with viewer and collaborator roles for multiple users to work together. Powered by React, LLMStack provides an easy-to-use interface for building AI applications.

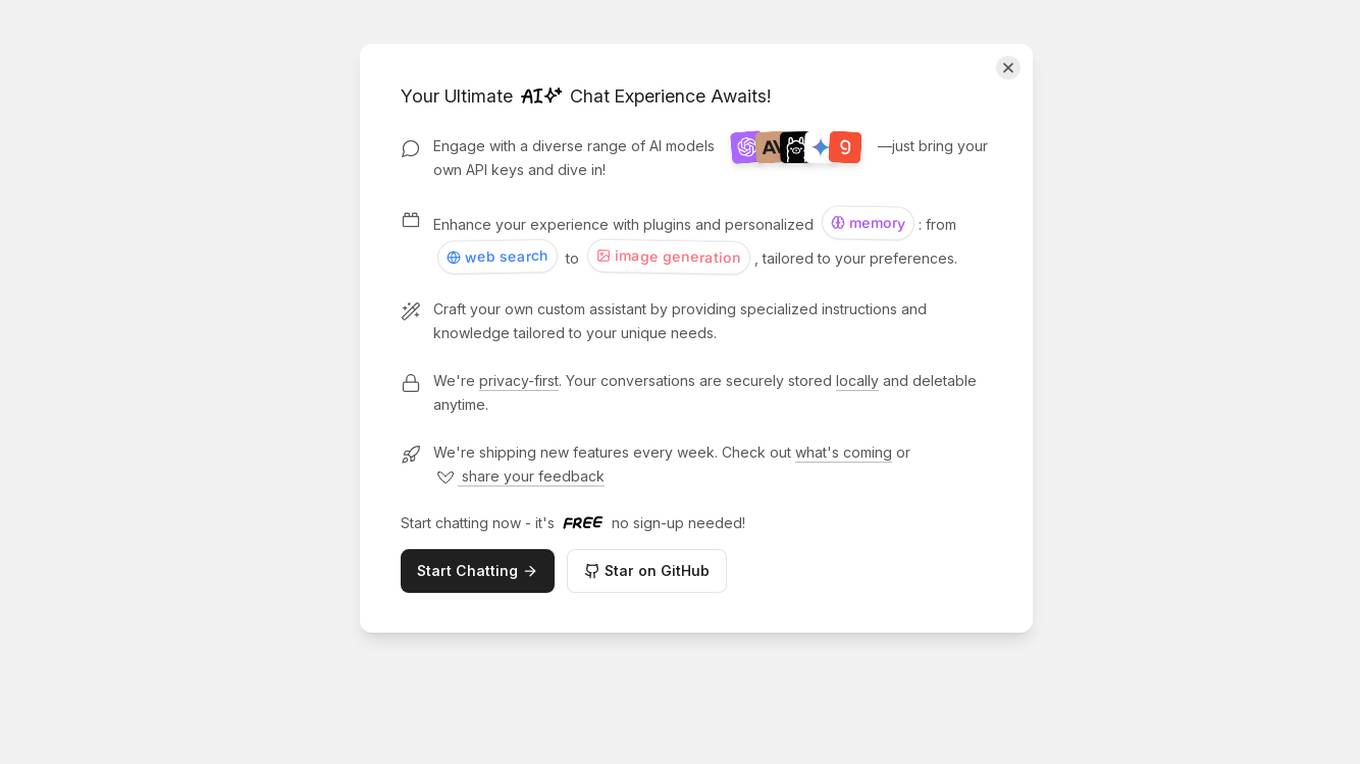

LLMChat

LLMChat is an advanced AI chat application that provides users with the ultimate chat experience. It utilizes cutting-edge artificial intelligence technology to engage in natural conversations, answer queries, and assist users in various tasks. LLMChat is designed to be user-friendly and intuitive, making it suitable for both personal and professional use. With its powerful AI capabilities, LLMChat aims to revolutionize the way people interact with chat applications, offering a seamless and efficient communication experience.

Onyxium

Onyxium is an AI platform that provides a comprehensive collection of AI tools for various tasks such as image recognition, text analysis, and speech recognition. It offers users the ability to access and utilize the latest AI technologies in one place, empowering them to enhance their projects and workflows with advanced AI capabilities. With a user-friendly interface and affordable pricing plans, Onyxium aims to make AI tools accessible to everyone, from individuals to large-scale businesses.

AllAIs

AllAIs is an AI ecosystem platform that brings together various AI tools, including large language models (LLMs), image generation capabilities, and development plugins, into a unified ecosystem. It aims to enhance productivity by providing a comprehensive suite of tools for both creative and technical tasks. Users can access popular LLMs, generate high-quality images, and streamline their projects using web and Visual Studio Code plugins. The platform offers integration with other tools and services, multiple pricing tiers, and regular updates to ensure high performance and compatibility with new technologies.

Confident AI

Confident AI is an open-source evaluation infrastructure for Large Language Models (LLMs). It provides a centralized platform to judge LLM applications, ensuring substantial benefits and addressing any weaknesses in LLM implementation. With Confident AI, companies can define ground truths to ensure their LLM is behaving as expected, evaluate performance against expected outputs to pinpoint areas for iterations, and utilize advanced diff tracking to guide towards the optimal LLM stack. The platform offers comprehensive analytics to identify areas of focus and features such as A/B testing, evaluation, output classification, reporting dashboard, dataset generation, and detailed monitoring to help productionize LLMs with confidence.

1 - Open Source AI Tools

dify-helm

Deploy langgenius/dify, an LLM-based chatbot app, on Kubernetes with a Helm chart.

20 - OpenAI GPTs

GPT Detector

ChatGPT Detector quickly finds AI writing from ChatGPT, LLMs, Bard, and GPT-4. It's easy and fast to use!

Use Case Writing Assistant

This GPT can generate software use cases, which are based on a use case templates repository and conform to a style guide.

ecosystem.Ai Use Case Designer v2

The use case designer is configured with the latest Data Science and Behavioral Social Science insights to guide you through the process of defining AI and Machine Learning use cases for the ecosystem.Ai platform.

AI Use Case Analyst for Sales & Marketing

Enables sales & marketing leadership to identify high-value AI use cases.

Terms of Use & Privacy policy Assistant

Lets you consult OpenAI's Terms of Use and Privacy policy (the versions effective December 14, 2023).

PragmaPilot - A Generative AI Use Case Generator

Show me your job description or just describe what you do professionally, and I'll help you identify high value use cases for AI in your day-to-day work. I'll also coach you on simple techniques to get the best out of ChatGPT.

Name Generator and Use Checker Toolkit

Need a new name? Character, brand, story, etc? Try the matrix! Use all the different naming modules as different strategies for new names!

Your Headline Writer

Use this to get increased engagement, more clicks and higher rankings for your content. Copy and paste your headline below and get a score out of 100 and 3 new ideas on how to improve it. For FREE.

Write a romance novel

Use this GPT to outline your romance novel: design your story, your characters, obstacles, stakes, twists, arena, etc… Then ask GPT to draft the chapters ❤️ (remember: you are the brain, GPT is just the hand. Stay creative, use this GPT as an author!)

IHeartDomains.BOT | Web3 Domain Knowledgebase

Use me for educational insights, ALPHA, and strategies for investing in Domains & Digital Identity. Your GUIDE to Unstoppable Domains, ENS, Freename, HNS, and more. *DO NOT use as Financial Advice & Always DYOR* https://iheartdomains.com

Acquisition Criteria Creator

Use me to help you decide what type of business to acquire. Let's go!

Family Constellation Guide

Use DALL-E to create a family constellation image for an issue that has been troubling you.

The 80/20 Principle master(80/20法则大师-敏睿)

Use GPTs to quickly identify key factors, improve decision-making and work efficiency, and find the key 20%.