Best AI tools to scale applications

20 - AI tool Sites

Heroku

Heroku is a cloud platform that enables developers to build, deliver, monitor, and scale applications. It supports multiple programming languages and frameworks and streamlines deployment, so developers can focus on writing code instead of managing infrastructure.

Magick

Magick is an Artificial Intelligence Development Environment (AIDE) for rapidly prototyping and deploying AI agents and applications without writing code. It provides a full-stack solution for building, deploying, maintaining, and scaling AI projects. Because Magick is open source and platform-agnostic, users keep full control over their setup, and its visual node-graph editors let users of any skill level build logic visually. Magick also offers document processing, which makes it easy to embed complex data and access it from agents. Its agents are real-time and event-driven, responding to events directly within the AIDE. For large-scale applications, its scalable deployment lets agents serve large numbers of users, and integrations with platforms such as Discord, Unreal Blueprints, and Google AI connect agents to other tools.

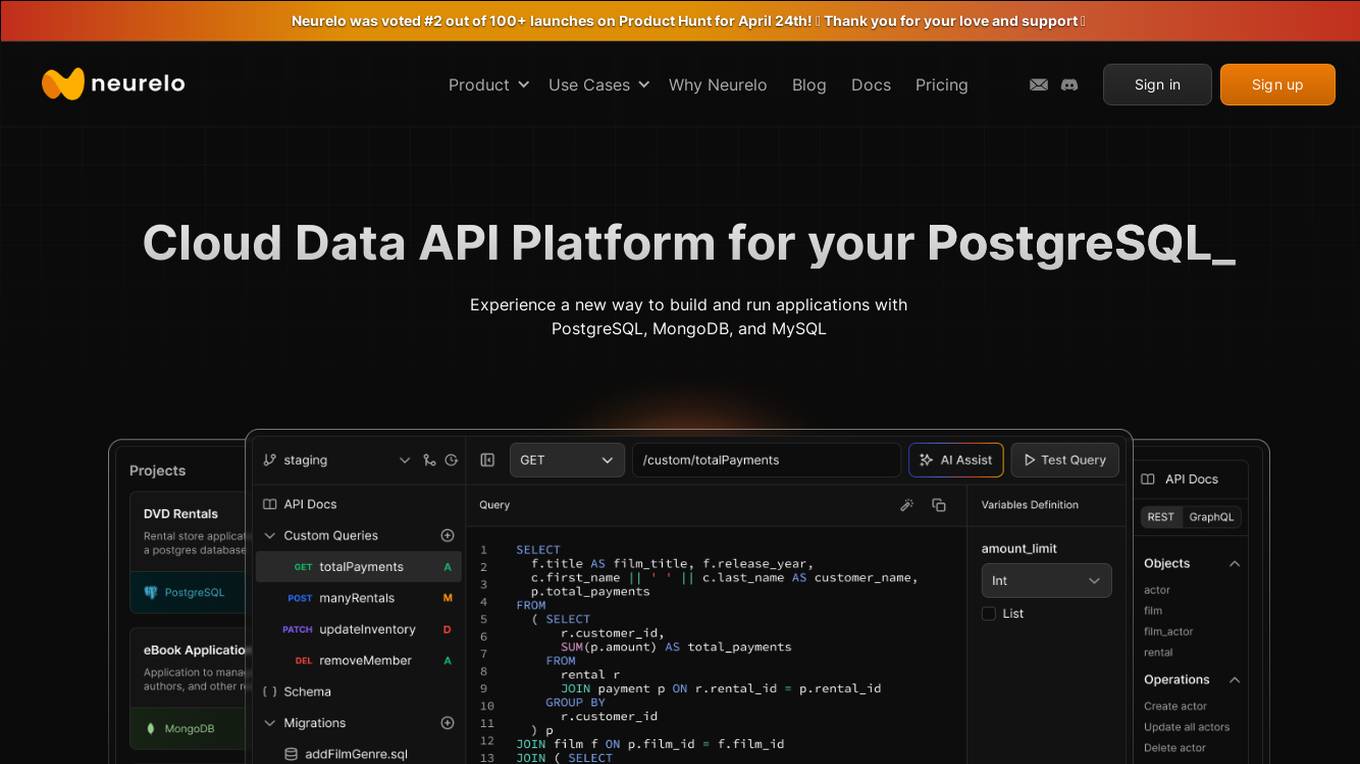

Neurelo

Neurelo is a cloud API platform for PostgreSQL, MongoDB, and MySQL. Its features include auto-generated APIs, custom query APIs written with AI assistance, query observability, schema as code, and tooling to build full-stack applications in minutes. By combining cloud infrastructure, APIs, and AI, Neurelo aims to hide the complexity of database programming and make developers more productive.

Azure Static Web Apps

Azure Static Web Apps is a Microsoft Azure service for building and deploying modern web applications. It lets developers host static web content and serverless APIs, with integration for popular frameworks such as React, Angular, and Vue. Developers can quickly set up continuous integration and deployment workflows and focus on the user experience rather than on infrastructure management.

Allapi.ai

Allapi.ai is an AI API platform designed to simplify AI integration for developers and startup founders. It offers an ecosystem of models, plugins, and APIs for building and deploying AI-powered applications. Its features include dynamic data capabilities, an advanced retrieval-augmented generation (RAG) system, a streamlined development process, and an intelligent code assistant, all aimed at speeding up development and reducing its cost. The platform provides access to models such as Claude 3, GPT-4, Gemini 1.5 Pro, and LLaMA 3, along with a range of plugins and tools for AI-driven applications.

Release.ai

Release.ai is an AI-centric platform that lets developers, operations teams, and leadership deploy and manage AI applications. It offers pre-configured templates for popular open-source technologies, private AI environments for secure development, and access to GPU resources, so users can build, test, and scale AI solutions within their own environments.

Novita AI

Novita AI is an AI cloud platform that combines Model APIs, Serverless, and GPU Instance services into one cost-effective offering for AI businesses. It provides models optimized for high-quality dialogue, a full range of AI APIs for image, video, audio, and LLM applications, serverless auto-scaling that follows demand, and customizable GPU instances for complex AI workloads. The platform also runs a Startup Program, offers 24/7 support, and has received positive feedback for its reasonable pricing and stable service.

Deployment Manager

The website currently shows a notice that its deployment is temporarily paused, apparently because of a technical issue tied to a specific deployment code. The site may be under maintenance or affected by a technical fault. Users are advised to wait for updates or to contact the site administrators for help.

UbiOps

UbiOps is an AI infrastructure platform that helps teams run AI and ML workloads as reliable, secure microservices. It provides AI model serving and orchestration designed for simplicity, speed, and scale. With UbiOps, users can deploy models and functions in minutes, manage AI workloads from a single control plane, integrate with tools such as PyTorch and TensorFlow, and meet security and compliance requirements by design. The platform supports hybrid and multi-cloud workload orchestration, rapid adaptive scaling, and modular applications built with its own workflow management system.

Cerebium

Cerebium is a serverless AI infrastructure platform for building, testing, and deploying AI applications. It focuses on speed, performance, and cost optimization, and provides tooling to simplify development and deployment. The platform emphasizes reliability, security, and compliance, and includes real-time logging, cost tracking, and observability tools. Cerebium also offers a choice of GPU types and automatic scaling to suit different developer and business needs.

Mixpeek

Mixpeek is a multimodal intelligence platform that helps users extract important data from videos, images, audio, and documents. It enables users to focus on insights rather than data preparation by identifying concepts, activities, and objects from various sources. Mixpeek offers features such as real-time synchronization, extraction and embedding, fine-tuning and scaling of models, and seamless integration with various data sources. The platform is designed to be easy to use, scalable, and secure, making it suitable for a wide range of applications.

Amazon Web Services (AWS)

Amazon Web Services (AWS) is a comprehensive, evolving cloud computing platform from Amazon that provides a broad set of global compute, storage, database, analytics, application, and deployment services that help organizations move faster, lower IT costs, and scale applications. With AWS, you can use as much or as little of its services as you need, and scale up or down as required with only a few minutes' notice. AWS has a global network of regions and availability zones, so you can deploy your applications and data in the locations that suit you best.

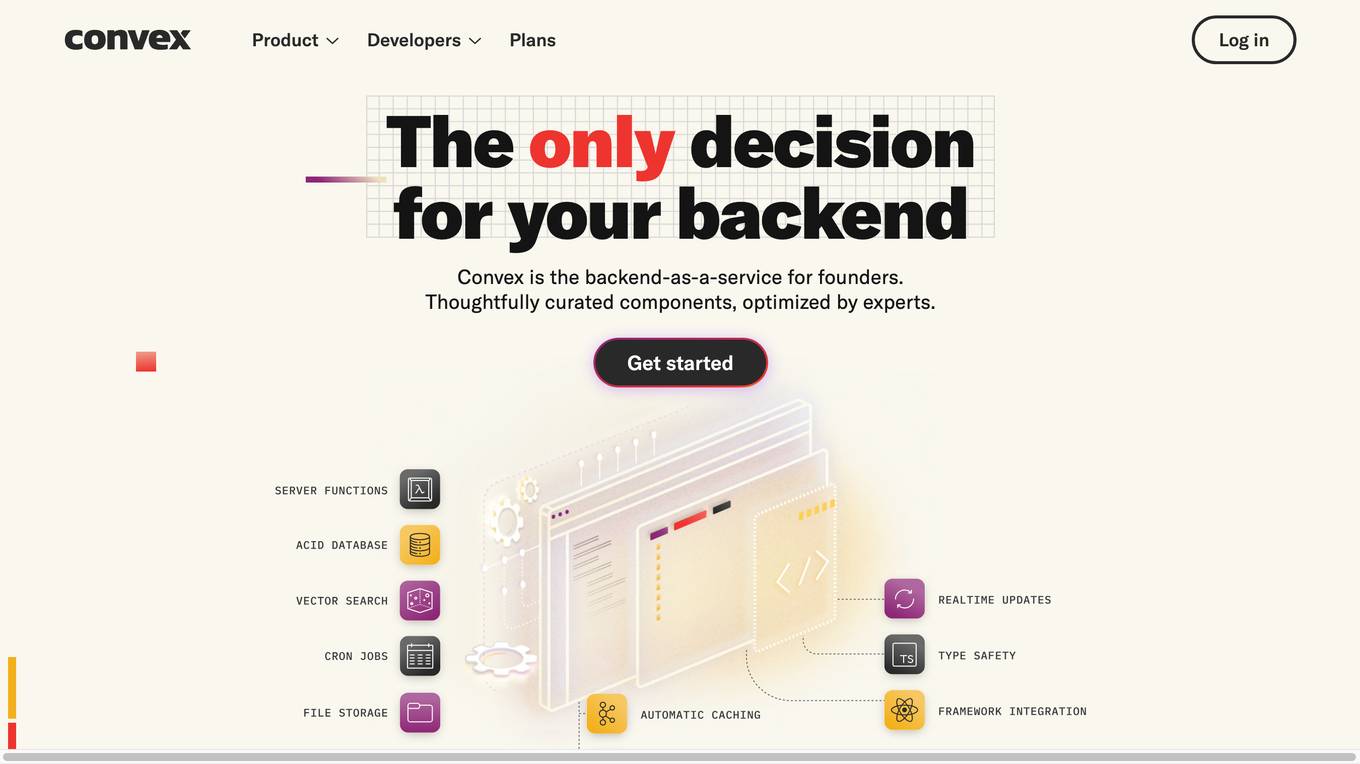

Convex

Convex is a full-stack TypeScript development platform that serves as an open-source backend for application builders. It offers APIs and tools to build, launch, and scale applications, with features such as real-time collaboration, optimized transactions, and over 80 OAuth integrations. Developers write backend logic in TypeScript, perform database operations with strong consistency, and integrate with third-party services, which lets them spend less time on backend operations and more on delivering value to customers. Convex is praised for its reliability, simplicity, and developer experience, making it a popular choice for modern software development projects.

BentoML

BentoML is a platform for software engineers to build, ship, and scale AI products. It provides a unified AI application framework that makes it easy to manage and version models, create service APIs, and build and run AI applications anywhere. BentoML is used by over 1000 organizations and has a global community of over 3000 members.

C3 AI

C3 AI provides a comprehensive Enterprise AI application development platform and a large, growing family of turnkey enterprise AI applications. The platform provides all necessary software services in one integrated suite to rapidly develop, provision, and operate Enterprise AI applications. C3 AI's applications are designed to meet the business-critical needs of global enterprises in industries including manufacturing, financial services, government, utilities, oil and gas, chemicals, agribusiness, defense, and intelligence.

OpenResty

The website currently returns a '403 Forbidden' error, meaning the server understood the request but refuses to fulfill it. This error is usually caused by insufficient permissions or a server-side misconfiguration. The 'openresty' named in the error message is a web platform built on NGINX and LuaJIT, commonly used for high-performance web applications. It is designed to handle large numbers of concurrent connections and provides advanced features for web development.

NeuReality

NeuReality aims to make AI adoption easier with purpose-built tools for deploying and scaling inference workflows. Its AI-centric architecture combines hardware and software components to optimize performance and scalability. The platform is a one-stop shop for AI inference that addresses common barriers to adoption and streamlines the underlying computation. NeuReality's tools are meant to make AI easier to deploy, afford, use, and manage across a wide range of applications.

Moreh

Moreh is an AI platform that aims to make hyperscale AI infrastructure more accessible for scaling any AI model and application. It provides full-stack infrastructure software, from PyTorch down to GPUs, built for the LLM era, so users can train large language models efficiently.

Blackthorn AI

Blackthorn AI is an AI development company offering solutions for businesses across various industries. Its services cover AI and ML development, generative AI, big data analytics, and custom software development, with the goal of delivering precise, measurable results. The company has a track record of successful projects and a team of certified experts delivering enterprise-grade accuracy. Recognized as a top AI company, Blackthorn AI specializes in solving complex challenges and helping businesses innovate and compete.

3 - Open Source AI Tools

laravel-slower

Laravel Slower is a powerful package designed for Laravel developers to optimize the performance of their applications by identifying slow database queries and providing AI-driven suggestions for optimal indexing strategies and performance improvements. It offers actionable insights for debugging and monitoring database interactions, enhancing efficiency and scalability.

VectorETL

VectorETL is a lightweight ETL framework designed to assist Data & AI engineers in processing data for AI applications quickly. It streamlines the conversion of diverse data sources into vector embeddings and storage in various vector databases. The framework supports multiple data sources, embedding models, and vector database targets, simplifying the creation and management of vector search systems for semantic search, recommendation systems, and other vector-based operations.

GenAIComps

GenAIComps is an initiative for building enterprise-grade generative AI applications on a microservice architecture. It simplifies scaling and deploying to production by abstracting away infrastructure complexity. GenAIComps provides a suite of containerized microservices that can be assembled into a mega-service tailored to real-world enterprise AI applications. Because each microservice can be developed, deployed, and scaled independently, the approach is modular, flexible, and scalable. The mega-service orchestrates multiple microservices to deliver complete solutions, encapsulating complex business logic and workflow orchestration. A gateway serves as the interface through which users access the mega-service, with access customized to their requirements.

20 - OpenAI GPTs

R&D Process Scale-up Advisor

Optimizes production processes for efficient large-scale operations.

CIM Analyst

In-depth CIM analysis with a structured rating scale, offering detailed business evaluations.

ML Engineer GPT

I'm a Python and PyTorch expert with knowledge of ML infrastructure requirements, ready to help you build and scale your ML projects.

Business Angel - Startup and Insights PRO

Business Angel provides expert startup guidance: funding, growth hacks, and pitch advice. Navigate the startup ecosystem, from seed to scale. Essential for entrepreneurs aiming for success. Master your strategy and launch with confidence. Your startup journey begins here!

Sysadmin

I help you with all your sysadmin tasks, from setting up your server to scaling an existing one. I can help you make sense of long log files and suggest solutions to the problems they reveal.

Seabiscuit Launch Lander

Startup Strong Within 180 Days: Tailored advice for launching, promoting, and scaling businesses of all types. It covers every stage from pre-launch to post-launch and develops strategies, including market research, branding, promotional tactics, and operational planning, unique to your business. (v1.8)

Startup Advisor

Startup advisor guiding founders through detailed idea evaluation, product-market-fit, business model, GTM, and scaling.