Best AI tools for Configure Server

20 - AI tool Sites

OpenResty

The website is currently displaying a '403 Forbidden' error, which indicates that the server understood the request but refuses to authorize it. This error is often encountered when trying to access a webpage without the necessary permissions. The 'openresty' mentioned in the text is likely the software running on the server. It is a web platform based on NGINX and LuaJIT, known for its high performance and scalability in handling web traffic. The website may be using OpenResty to manage its server configurations and handle incoming requests.
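The reading of a 403 page described above can be sketched in a few lines. This is only an illustrative sketch: the function name `describe_response` and the idea of matching the `Server` header text against "openresty" are assumptions for the example, not something the listed sites document.

```python
from http import HTTPStatus


def describe_response(status: int, server_header: str = "") -> str:
    """Summarize a response from its status code and Server header (hypothetical helper)."""
    try:
        phrase = HTTPStatus(status).phrase
    except ValueError:
        # Status code not known to the standard library.
        phrase = "Unknown"

    parts = [f"{status} {phrase}"]
    if status == HTTPStatus.FORBIDDEN:
        # 403: the server understood the request but refuses to authorize it.
        parts.append("request understood, authorization refused")
    if "openresty" in server_header.lower():
        # The server banner names the software that answered.
        parts.append("served by OpenResty")
    return "; ".join(parts)


if __name__ == "__main__":
    print(describe_response(403, "openresty"))
```

A 403 differs from a connection failure in that a response does arrive; the status code and the server banner are both part of that response.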

OpenResty

The website is currently displaying a '403 Forbidden' error, which means that access to the requested resource is denied. This error is typically caused by insufficient permissions or server misconfiguration. The 'openresty' message indicates that the server is using the OpenResty web platform. OpenResty is a scalable web platform that integrates the Nginx web server with various Lua-based modules, providing powerful features for web development and server-side scripting.

nginx

The website is a landing page for the nginx web server. It indicates that the server is successfully installed and working, with further configuration required. Users can access online documentation and support at nginx.org, and commercial support is available at nginx.com. The page serves as a thank you message for using nginx.

OpenResty

The website is currently displaying a '403 Forbidden' error, which means that access to the requested resource is denied. This error is typically caused by insufficient permissions or server misconfiguration. The 'openresty' message indicates that the server is using the OpenResty web platform. OpenResty is a web platform based on NGINX and LuaJIT, often used for building high-performance web applications. The website may be experiencing technical issues that prevent users from accessing its content.

OpenResty

The website is currently displaying a '403 Forbidden' error, which indicates that the server understood the request but refuses to authorize it. This error is typically caused by insufficient permissions or misconfiguration on the server side. The 'openresty' message suggests that the server is using the OpenResty web platform. OpenResty is a powerful web platform based on Nginx and LuaJIT, providing high performance and flexibility for web applications.

OpenResty

The website appears to be displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is often caused by incorrect permissions on the server or a misconfiguration in the server settings. The message 'openresty' suggests that the server may be running the OpenResty web platform. OpenResty is a web platform based on NGINX and LuaJIT that is commonly used to build high-performance web applications. It lets developers extend NGINX with Lua scripts, allowing for advanced web server functionality.

Autobackend.dev

Autobackend.dev is a web development platform that provides tools and resources for building and managing backend systems. It offers a range of features such as database management, API integration, and server configuration. With Autobackend.dev, users can streamline the process of backend development and focus on creating innovative web applications.

403 Forbidden

The website seems to be experiencing a 403 Forbidden error, which indicates that the server understood the request but refuses to authorize it. This error is often caused by incorrect permissions on the server or misconfigured security settings. The message 'openresty' suggests that the server may be running on the OpenResty web platform. OpenResty is a web platform based on NGINX and LuaJIT, known for its high performance and scalability. Users encountering a 403 Forbidden error on a website may need to contact the website administrator or webmaster for assistance in resolving the issue.

N/A

The website is currently displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is typically caused by insufficient permissions or misconfiguration on the server side. The 'openresty' mentioned in the message refers to a web platform based on NGINX and LuaJIT, often used for building high-performance web applications. It seems that the website is currently inaccessible due to server-side issues.

OpenResty

The website is currently displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is often caused by insufficient permissions or misconfiguration on the server side. The 'openresty' mentioned in the message is a web platform based on NGINX and LuaJIT, commonly used for building high-performance web applications. It is designed to handle a large number of concurrent connections and provide a scalable and efficient web server solution.

OpenResty Server Manager

The website seems to be experiencing a 403 Forbidden error, which typically indicates that the server is denying access to the requested resource. This error is often caused by incorrect permissions or misconfigurations on the server side. The message 'openresty' suggests that the server may be using the OpenResty web platform. Users encountering this error may need to contact the website administrator for assistance in resolving the issue.

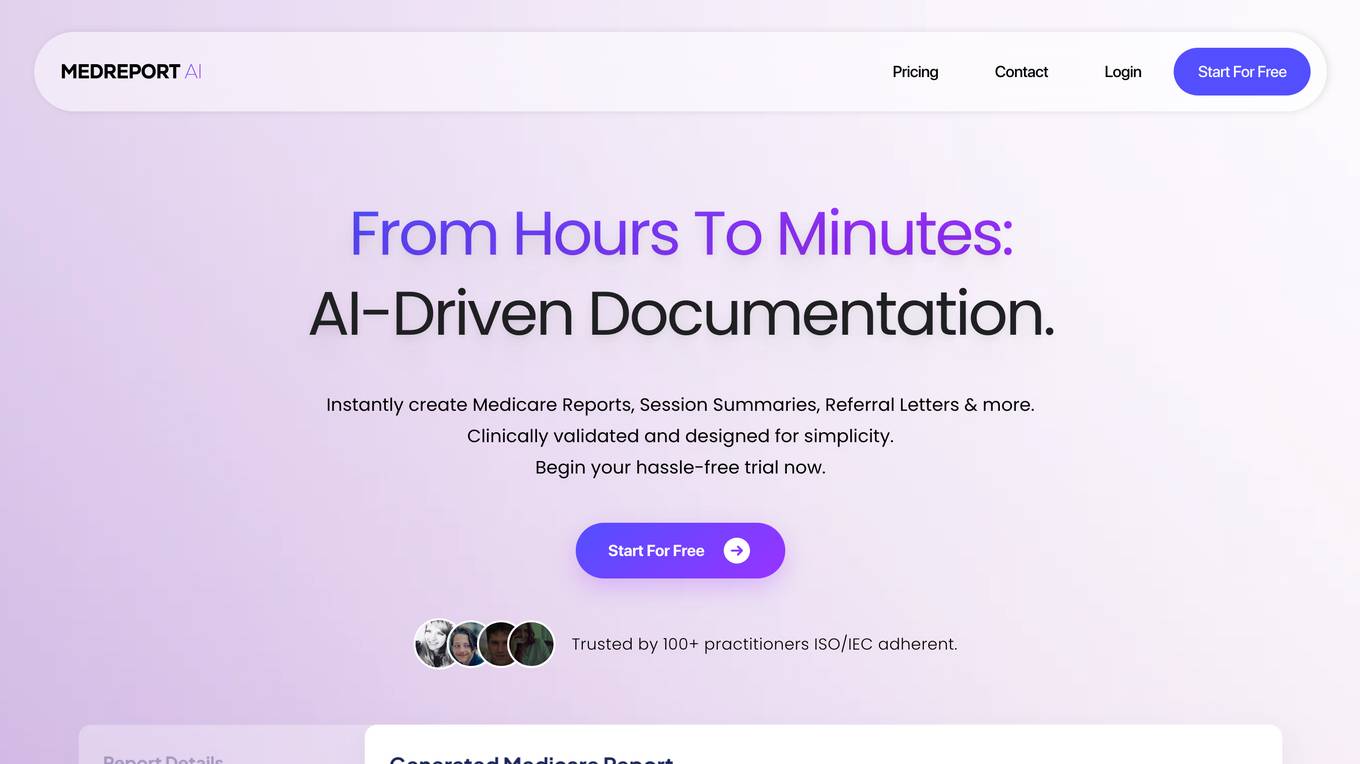

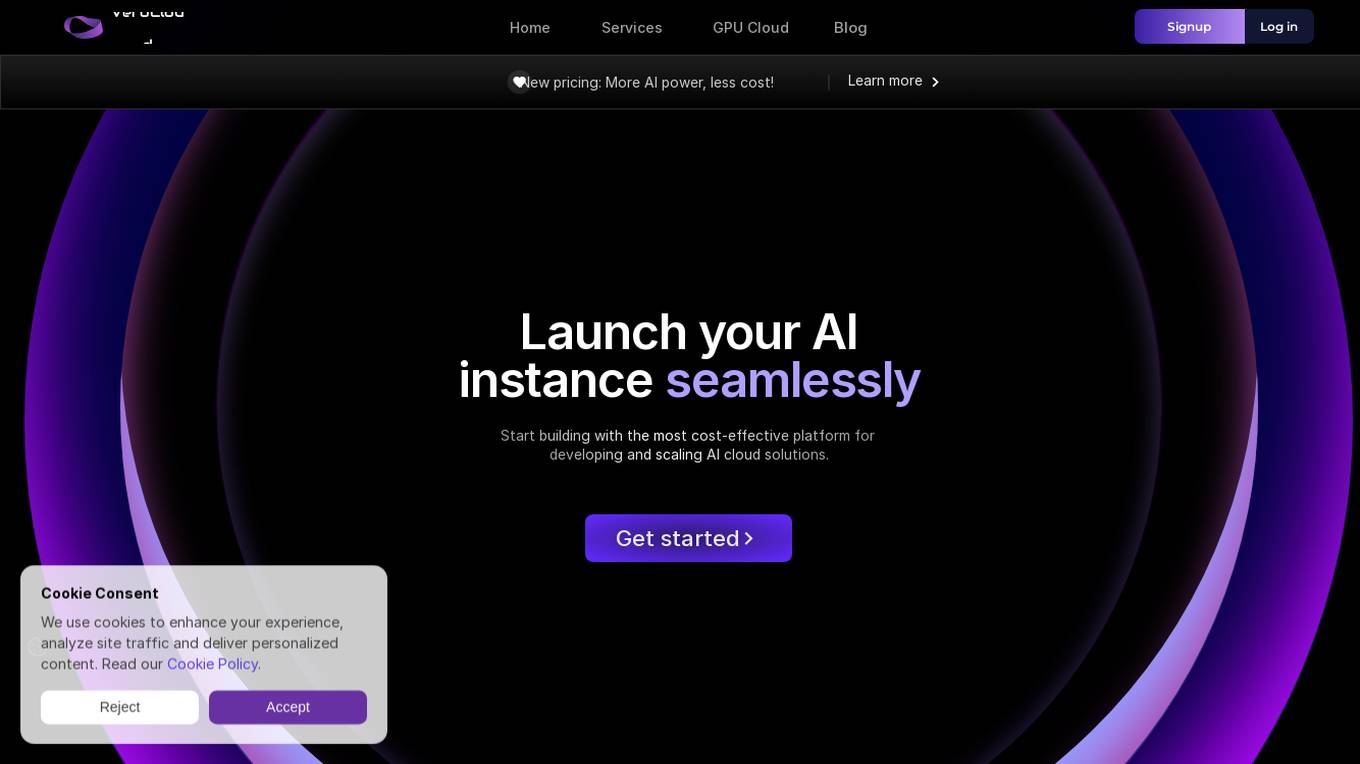

VeroCloud

VeroCloud is a platform offering tailored solutions for AI, HPC, and scalable growth. It provides cost-effective cloud solutions with guaranteed uptime, performance efficiency, and cost-saving models. Users can deploy HPC workloads seamlessly, configure environments as needed, and access optimized environments for GPU Cloud, HPC Compute, and Tally on Cloud. VeroCloud supports globally distributed endpoints, public and private image repos, and deployment of containers on secure cloud. The platform also allows users to create and customize templates for seamless deployment across computing resources.

OpenResty

The website is currently displaying a '403 Forbidden' error, which means that access to the requested resource is forbidden. This error is typically caused by insufficient permissions or misconfiguration on the server side. The message 'openresty' suggests that the server is using the OpenResty web platform. OpenResty is a dynamic web platform based on NGINX and Lua that is commonly used for building high-performance web applications. It provides a powerful and flexible environment for developing and deploying web services.

403 Forbidden

The website appears to be displaying a '403 Forbidden' error message, which typically means that the user is not authorized to access the requested page. This error is often encountered when trying to access a webpage without the necessary permissions or when the server is configured to deny access. The message 'openresty' may indicate that the server is using the OpenResty web platform. It is important to ensure that the correct permissions are in place and that the requested page exists on the server.

403 Forbidden OpenResty

The website is currently displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is often encountered when trying to access a webpage without proper permissions. The 'openresty' mentioned in the message refers to a web platform based on NGINX and LuaJIT, commonly used for building high-performance web applications. The website may be experiencing technical issues or undergoing maintenance.

OpenResty

The website is currently displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is typically caused by insufficient permissions or misconfiguration on the server side. The 'openresty' mentioned in the message refers to a web platform based on NGINX and LuaJIT, often used for building high-performance web applications. It seems that the website is currently inaccessible due to server-side issues.

Lambda Docs

Lambda Docs is an AI tool that provides cloud and hardware solutions for individuals, teams, and organizations. It offers services such as Managed Kubernetes, Preinstalled Kubernetes, Slurm, and access to GPU clusters. The platform also provides educational resources and tutorials for machine learning engineers and researchers to fine-tune models and deploy AI solutions.

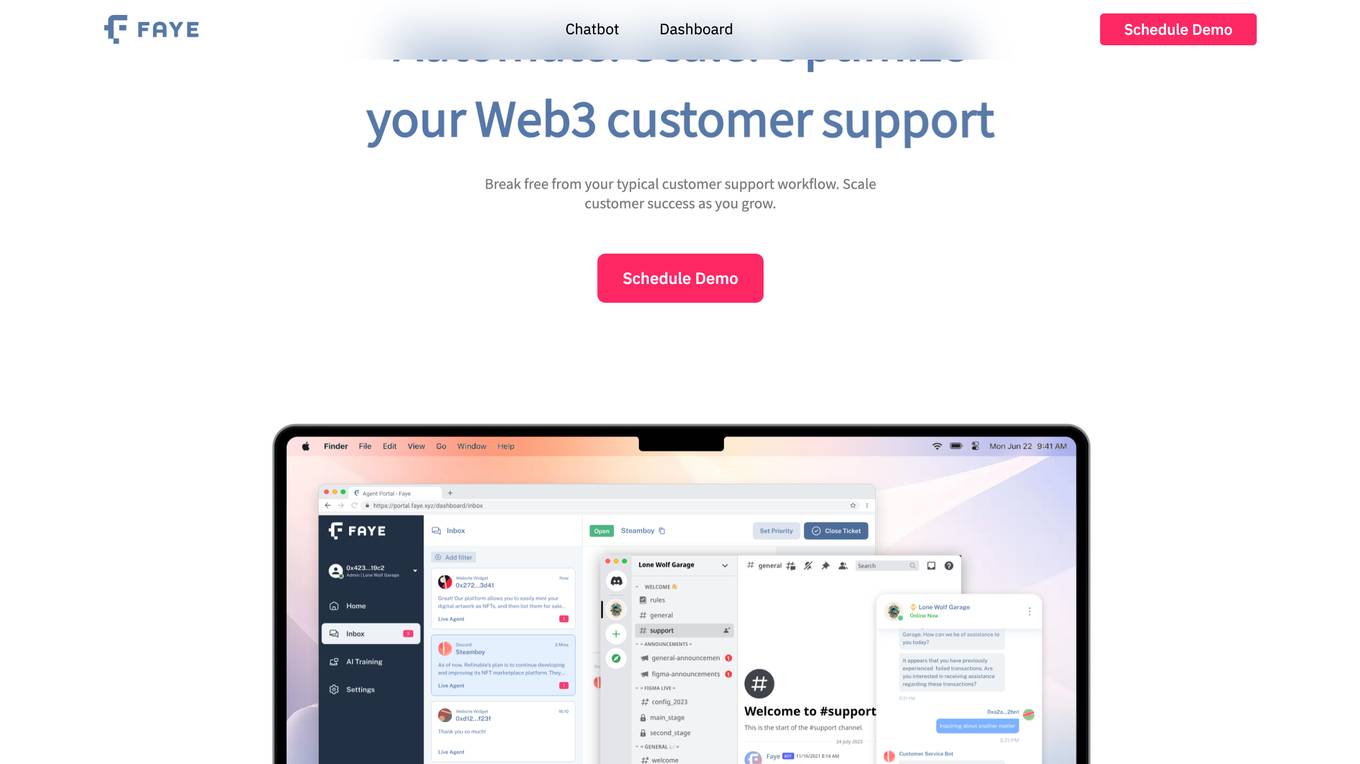

faye.xyz

faye.xyz is a website experiencing an SSL handshake failed error (Error code 525) due to Cloudflare being unable to establish an SSL connection to the origin server. The issue may be related to incompatible SSL configuration with Cloudflare, possibly due to no shared cipher suites. Visitors are advised to try again in a few minutes, while website owners are recommended to check the SSL configuration. Cloudflare provides additional troubleshooting information for resolving such errors.
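The "no shared cipher suites" condition mentioned above comes down to an empty intersection between what one side offers and what the other accepts. A minimal sketch follows, assuming hypothetical cipher lists; the helper name `shared_ciphers` and the sample values are illustrative and do not reflect the actual configuration of faye.xyz or Cloudflare.

```python
from typing import Iterable, List


def shared_ciphers(client_offers: Iterable[str], server_accepts: Iterable[str]) -> List[str]:
    """Return the cipher suites both sides support, in the client's preference order."""
    accepted = set(server_accepts)
    return [cipher for cipher in client_offers if cipher in accepted]


if __name__ == "__main__":
    # Hypothetical lists, for illustration only.
    proxy_offers = ["TLS_AES_128_GCM_SHA256", "ECDHE-RSA-AES128-GCM-SHA256"]
    origin_accepts = ["RC4-SHA"]

    common = shared_ciphers(proxy_offers, origin_accepts)
    if not common:
        print("No shared cipher suites: the handshake cannot complete")
```

When the list comes back empty, the handshake has nothing to negotiate, which is the situation the error page attributes to an incompatible origin SSL configuration.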

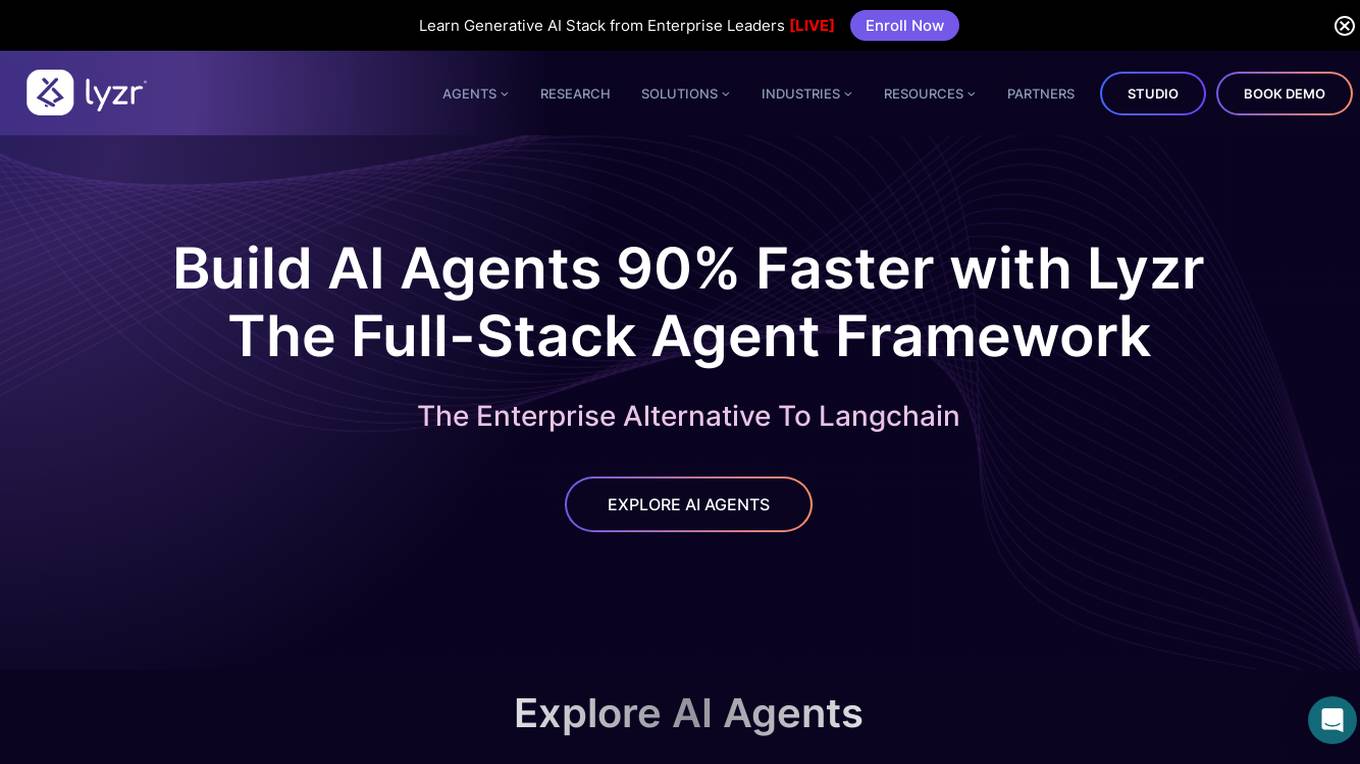

Lyzr AI

Lyzr AI is a full-stack agent framework designed to build GenAI applications faster. It offers a range of AI agents for various tasks such as chatbots, knowledge search, summarization, content generation, and data analysis. The platform provides features like memory management, human-in-the-loop interaction, toxicity control, reinforcement learning, and custom RAG prompts. Lyzr AI addresses data privacy by processing data locally on the user's cloud servers. Enterprises and developers can easily configure, deploy, and manage AI agents using Lyzr's platform.

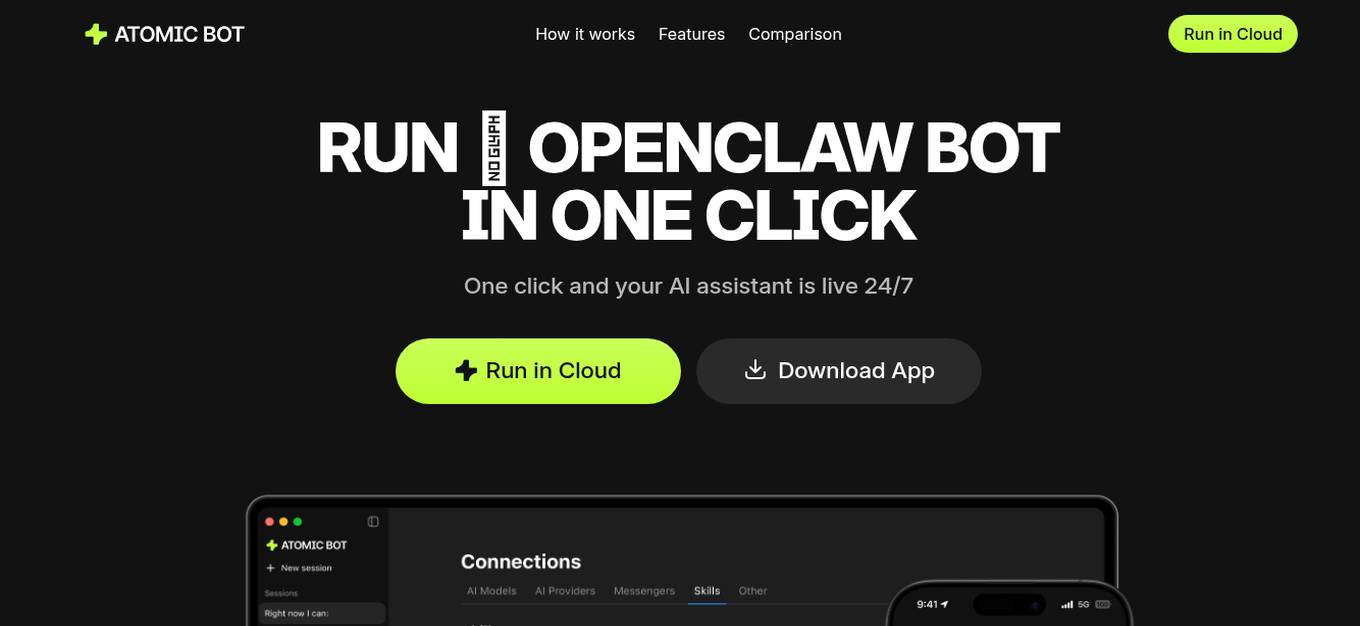

Atomic Bot

Atomic Bot is an AI application that serves as a user-friendly native app bringing OpenClaw (Clawdbot) capabilities to everyday users. It offers a simplified way to access the full OpenClaw experience through a human-friendly interface, enabling users to manage tasks such as email, calendars, documents, browser actions, and workflows efficiently. With features like Gmail management, calendar autopilot, document summarization, browser control, and task automation, Atomic Bot aims to enhance productivity and streamline daily workflows. The application prioritizes privacy by allowing users to choose between running it on their local device, in the cloud, or a hybrid setup, ensuring data control and security.

3 - Open Source AI Tools

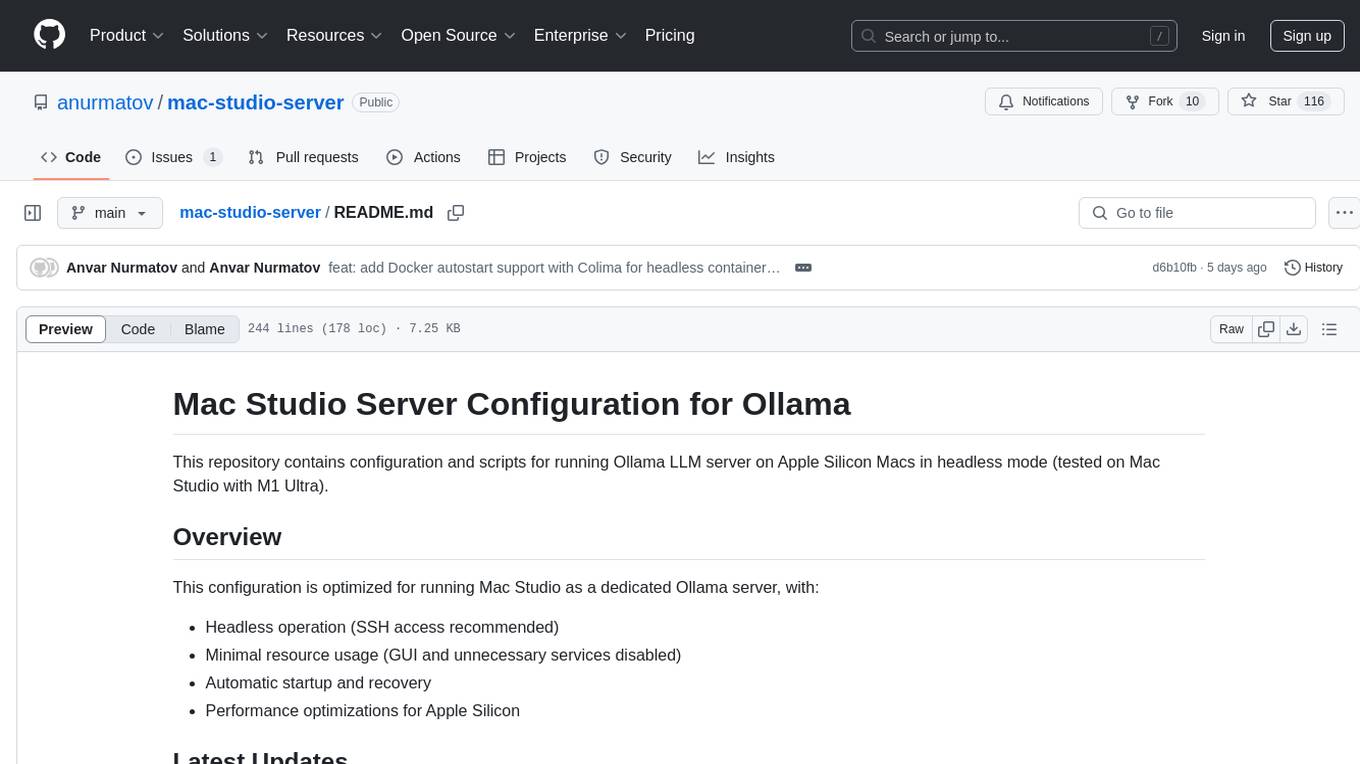

mac-studio-server

This repository provides configuration and scripts for running Ollama LLM server on Apple Silicon Macs in headless mode, optimized for performance and resource usage. It includes features like automatic startup, system resource optimization, external network access, proper logging setup, and SSH-based remote management. Users can customize the Ollama service configuration and enable optional GPU memory optimization and Docker autostart for container applications. The installation process disables unnecessary system services, configures power management, and optimizes for background operation while maintaining Screen Sharing capability for remote management. Performance considerations focus on reducing memory usage, disabling GUI-related services, minimizing background processes, preventing sleep/hibernation, and optimizing for headless operation.

mcp-server-qdrant

The mcp-server-qdrant repository is an official Model Context Protocol (MCP) server designed for keeping and retrieving memories in the Qdrant vector search engine. It acts as a semantic memory layer on top of the Qdrant database. The server provides tools like 'qdrant-store' for storing information in the database and 'qdrant-find' for retrieving relevant information. Configuration is done using environment variables, and the server supports different transport protocols. It can be installed using 'uvx' or Docker, and can also be installed via Smithery for Claude Desktop. The server can be used with Cursor/Windsurf as a code search tool by customizing tool descriptions. It can store code snippets and help developers find specific implementations or usage patterns. The repository is licensed under the Apache License 2.0.
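Configuration through environment variables, as described above, might look roughly like the sketch below. The variable names `QDRANT_URL`, `COLLECTION_NAME`, and `QDRANT_API_KEY` are assumptions recalled from the project's README and should be checked against the repository before use; the helper `build_server_env` is hypothetical.

```python
import os
from typing import Dict, Optional


def build_server_env(
    qdrant_url: str,
    collection: str,
    api_key: Optional[str] = None,
    base_env: Optional[Dict[str, str]] = None,
) -> Dict[str, str]:
    """Build the environment for launching the server (variable names are assumptions)."""
    env = dict(os.environ if base_env is None else base_env)
    env["QDRANT_URL"] = qdrant_url          # assumed name: address of the Qdrant instance
    env["COLLECTION_NAME"] = collection     # assumed name: collection used for stored memories
    if api_key:
        env["QDRANT_API_KEY"] = api_key     # assumed name: only needed for secured instances
    return env


if __name__ == "__main__":
    env = build_server_env("http://localhost:6333", "memories", base_env={})
    print(env)
```

The resulting dictionary could then be passed as the `env` argument of `subprocess.run` when starting the server through `uvx`, again assuming the launch command matches what the repository documents.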

echokit_box

EchoKit is a tool for setting up and flashing firmware on the EchoKit device. It provides instructions for flashing the device image, installing necessary dependencies, building firmware from source, and flashing the firmware onto the device. The tool also includes steps for resetting the device and configuring it to connect to an EchoKit server for full functionality.

20 - OpenAI Gpts

Calendar and email Assistant

Your expert assistant for Google Calendar and Gmail tasks, integrated with Zapier (works with the free plan). Supports listing, adding, and updating calendar events, and sending Gmail messages. You will be prompted to configure Zapier actions during initial setup. Conversation data is not used for OpenAI training.

Salesforce Sidekick

Personal assistant for Salesforce configuration, coding, troubleshooting, solutioning, proposal writing, and more. This is not an official Salesforce product or service.

Istio Advisor Plus

Rich in Istio knowledge, with a focus on configurations, troubleshooting, and bug reporting.

FlashSystem Expert

Expert on IBM FlashSystem, offering 'How-To' guidance and technical insights.

CUDA GPT

Expert in CUDA for configuration, installation, troubleshooting, and programming.

SIP Expert

A senior VoIP engineer with expertise in SIP, RTP, IMS, and WebRTC. Kinda employed at sipfront.com, your telco test automation company.