Best AI tools for Build Model

20 - AI tool Sites

Build Club

Build Club is an AI tool designed to help individuals learn and explore various aspects of artificial intelligence. The platform offers a wide range of courses, challenges, hackathons, and community projects to enhance users' AI skills. Users can build AI models for tasks like image and video generation, AI marketing, and creating AI agents. Build Club aims to create a collaborative learning environment for AI enthusiasts to grow their knowledge and skills in the field of artificial intelligence.

FranzAI LLM Playground

FranzAI LLM Playground is an AI-powered tool that helps you extract, classify, and analyze unstructured text data. It leverages transformer models to provide accurate and meaningful results, enabling you to build data applications faster and more efficiently. With FranzAI, you can accelerate product and content classification, enhance data interpretation, and advance data extraction processes, unlocking key insights from your textual data.

DagsHub

DagsHub is an open source data science collaboration platform that helps AI teams build better models and manage data projects. It provides a central location for data, code, experiments, and models, making it easy for teams to collaborate and track their progress. DagsHub also integrates with a variety of popular data science tools and frameworks, making it a powerful tool for data scientists and machine learning engineers.

Infrabase.ai

Infrabase.ai is a directory of AI infrastructure products that helps users discover and explore a wide range of tools for building world-class AI products. The platform offers a comprehensive directory of products in categories such as Vector databases, Prompt engineering, Observability & Analytics, Inference APIs, Frameworks & Stacks, Fine-tuning, Audio, and Agents. Users can find tools for tasks like data storage, model development, performance monitoring, and more, making it a valuable resource for AI projects.

Intel Gaudi AI Accelerator Developer

The Intel Gaudi AI accelerator developer website provides resources, guidance, tools, and support for building, migrating, and optimizing AI models. It offers software, model references, libraries, containers, and tools for training and deploying Generative AI and Large Language Models. The site focuses on the Intel Gaudi accelerators, including tutorials, documentation, and support for developers to enhance AI model performance.

Groq

Groq is a fast AI inference platform for openly available models such as Llama 3.1. It provides ultra-low-latency inference for cloud deployments, and its API is compatible with OpenAI's, so existing client code can often be pointed at Groq with minimal changes. Independent benchmarks have measured its inference speed, and it serves leading openly available models such as Llama, Mixtral, Gemma, and Whisper. Groq has gained industry recognition for its high-speed inference compute and has received significant funding to challenge established players like Nvidia.
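
As a rough illustration of what OpenAI compatibility means in practice, the sketch below builds (but does not send) an OpenAI-style chat completion request using only the Python standard library. The base URL, the model ID, and the helper name `build_chat_request` are assumptions made for illustration; check the provider's documentation for current values.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, prompt):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": "Bearer " + api_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Assumed values: verify the endpoint and model ID before relying on them.
request = build_chat_request(
    base_url="https://api.groq.com/openai/v1",  # assumed OpenAI-compatible endpoint
    api_key="YOUR_API_KEY",                     # placeholder, not a real key
    model="llama-3.1-8b-instant",               # assumed model ID
    prompt="Summarize what an inference API does.",
)
print(request.get_method(), request.full_url)
```

Because only the base URL and model name differ, the same request shape could in principle be reused across providers that follow this convention. Passing the request to `urllib.request.urlopen` would send it.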

Numerai

Numerai is a data science tournament platform where users compete to build models that predict the stock market. The platform provides clean, regularized, hedge-fund-quality data, and users can build models with Python or R scripts. Numerai also has a cryptocurrency, NMR, which users can stake on their models to earn rewards.

Novita AI

Novita AI is an AI cloud platform offering Model APIs, Serverless, and GPU Instance services in a cost-effective and integrated manner to accelerate AI businesses. It provides optimized models for high-quality dialogue use cases, full spectrum AI APIs for image, video, audio, and LLM applications, serverless auto-scaling based on demand, and customizable GPU solutions for complex AI tasks. The platform also includes a Startup Program, 24/7 service support, and has received positive feedback for its reasonable pricing and stable services.

Tecton

Tecton is an AI data platform that helps build smarter AI applications by simplifying feature engineering, generating training data, serving real-time data, and enhancing AI models with context-rich prompts. It automates data pipelines, improves model accuracy, and lowers production costs, enabling faster deployment of AI models. Tecton abstracts away data complexity, provides a developer-friendly experience, and allows users to create features from any source. Trusted by top engineering teams, Tecton streamlines ML delivery processes, improves customer interactions, and automates release processes through CI/CD pipelines.

Domino Data Lab

Domino Data Lab is an enterprise AI platform that enables users to build, deploy, and manage AI models across any environment. It fosters collaboration, establishes best practices, and ensures governance while reducing costs. The platform provides access to a broad ecosystem of open source and commercial tools and infrastructure, allowing users to accelerate and scale AI impact. Domino serves as a central hub for AI operations and knowledge, offering integrated workflows, automation, and hybrid multicloud capabilities. It helps users optimize compute utilization, enforce compliance, and centralize knowledge across teams.

AI Alliance

The AI Alliance is a community dedicated to building and advancing open-source AI agents, data, models, evaluation, safety, applications, and advocacy to ensure everyone can benefit. It focuses on areas such as skills and education, trust and safety, applications and tools, hardware enablement, foundation models, and advocacy. The organization supports global AI skill-building, education, and exploratory research; creates benchmarks and tools for safe generative AI; builds capable tools for AI model builders and developers; fosters an AI hardware accelerator ecosystem; enables open foundation models and datasets; and advocates for regulatory policies that support healthy AI ecosystems.

SentiSight.ai

SentiSight.ai is a machine learning platform for image recognition solutions, offering services such as object detection, image segmentation, image classification, image similarity search, image annotation, computer vision consulting, and intelligent automation consulting. Users can access pre-trained models, background removal, NSFW detection, text recognition, and an image recognition API. The platform provides tools for image labeling and project management, along with training tutorials for various image recognition models. SentiSight.ai aims to streamline the image annotation process, empower users to build and train their own models, and deploy them for online or offline use.

ZDNet

ZDNet is a technology news website that provides news, reviews, and advice on the latest innovations in the tech industry. It covers a wide range of topics, including artificial intelligence, cloud computing, digital transformation, energy, robotics, sustainability, transportation, and work life. ZDNet's mission is to help readers understand the latest trends and developments in the tech industry and to make informed decisions about how to use technology to improve their lives and businesses.

Strong Analytics

Strong Analytics is a data science consulting and machine learning engineering company that specializes in building bespoke data science, machine learning, and artificial intelligence solutions for various industries. They offer end-to-end services to design, engineer, and deploy custom AI products and solutions, leveraging a team of full-stack data scientists and engineers with cross-industry experience. Strong Analytics is known for its expertise in accelerating innovation, deploying state-of-the-art techniques, and empowering enterprises to unlock the transformative value of AI.

Plat.AI

Plat.AI is automated predictive analytics software that offers model-building solutions for industries such as finance, insurance, and marketing. It provides a real-time decision-making engine that allows users to build and maintain AI models without any coding experience. The platform offers features like automated model building, data preprocessing tools, codeless modeling, and a personalized approach to data analysis. Plat.AI aims to make predictive analytics easy and accessible for users of all experience levels, ensuring transparency, security, and compliance in decision-making processes.

Scale AI

Scale AI is an AI tool that accelerates the development of AI applications for enterprise, government, and automotive sectors. It offers Scale Data Engine for generative AI, Scale GenAI Platform, and evaluation services for model developers. The platform leverages enterprise data to build sustainable AI programs and partners with leading AI models. Scale's focus on generative AI applications, data labeling, and model evaluation sets it apart in the AI industry.

Prem AI

Prem is an AI platform that empowers developers and businesses to build and fine-tune generative AI models with ease. It offers a user-friendly development platform for developers to create AI solutions effortlessly. For businesses, Prem provides tailored model fine-tuning and training to meet unique requirements, ensuring data sovereignty and ownership. Trusted by global companies, Prem accelerates the advent of sovereign generative AI by simplifying complex AI tasks and enabling full control over intellectual capital. With a suite of foundational open-source SLMs, Prem supercharges business applications with cutting-edge research and customization options.

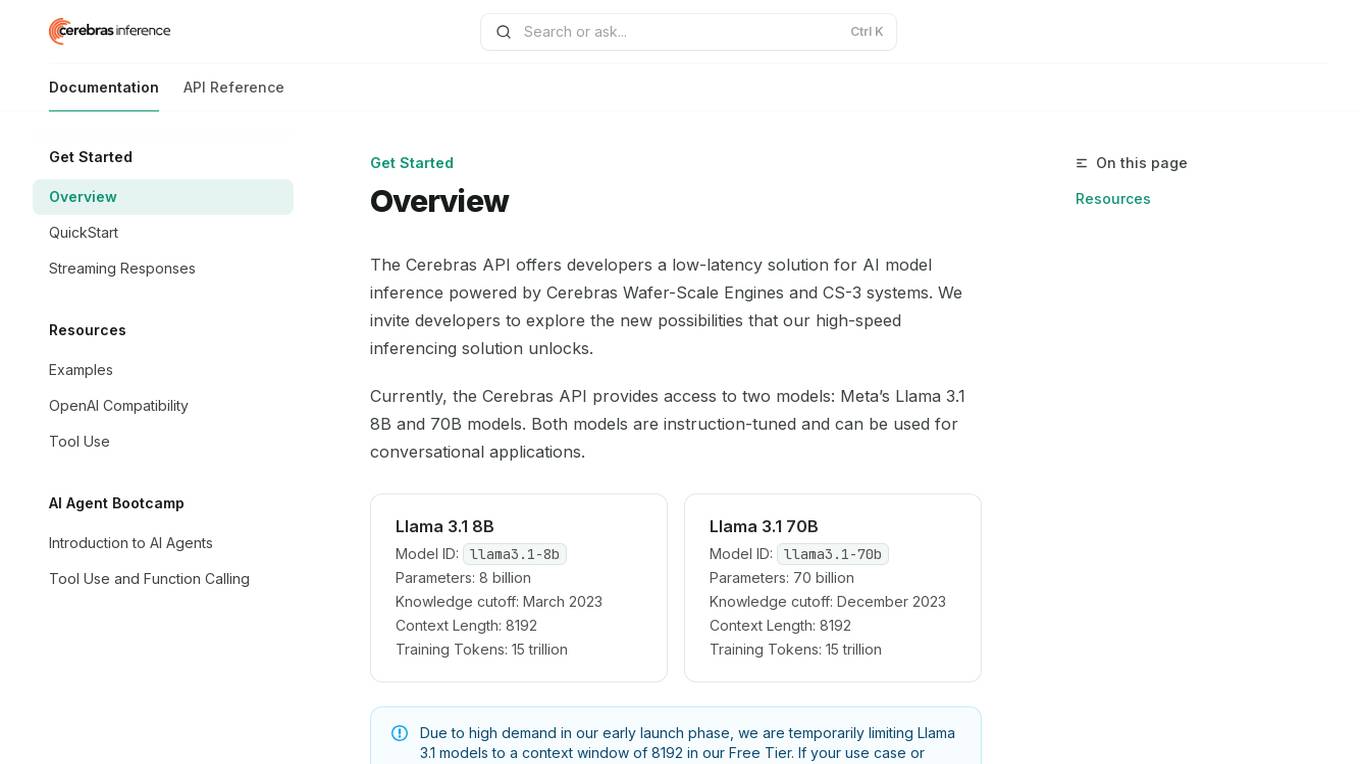

Cerebras API

The Cerebras API is a high-speed inference service powered by Cerebras Wafer-Scale Engines and CS-3 systems. It gives developers access to two of Meta’s Llama 3.1 models, 8B and 70B, which are instruction-tuned and suited to conversational applications. The API offers low-latency inference and invites developers to explore new possibilities in AI development.

madebymachines

madebymachines is an AI tool designed to assist users in various stages of the machine learning workflow, from data preparation to model development. The tool offers services such as data collection, data labeling, model training, hyperparameter tuning, and transfer learning. With a user-friendly interface and efficient algorithms, madebymachines aims to streamline the process of building machine learning models for both beginners and experienced users.

HappyML

HappyML is an AI tool designed to assist users in machine learning tasks. It provides a user-friendly interface for running machine learning algorithms without the need for complex coding. With HappyML, users can easily build, train, and deploy machine learning models for various applications. The tool offers a range of features such as data preprocessing, model evaluation, hyperparameter tuning, and model deployment. HappyML simplifies the machine learning process, making it accessible to users with varying levels of expertise.

2 - Open Source AI Tools

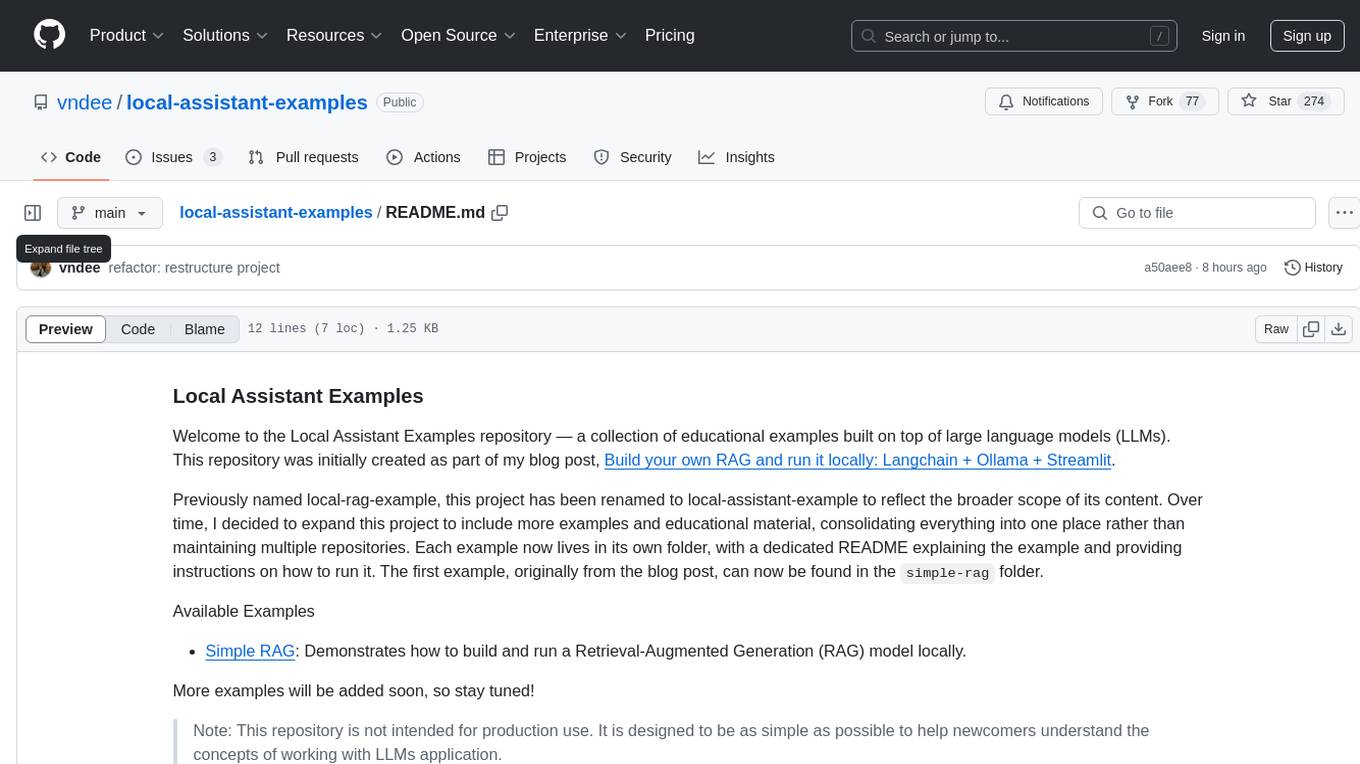

local-assistant-examples

The Local Assistant Examples repository is a collection of educational examples showcasing the use of large language models (LLMs). It was initially created for a blog post on building a RAG model locally, and has since expanded to include more examples and educational material. Each example is housed in its own folder with a dedicated README providing instructions on how to run it. The repository is designed to be simple and educational, not for production use.
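
To make the idea of a local RAG pipeline concrete, here is a minimal sketch of the retrieval step. It is not code from the repository: bag-of-words cosine similarity stands in for the embedding model a real setup would use, and the function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Combine the retrieved context and the question into one prompt."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical sample documents for illustration only.
docs = [
    "Candle is a machine learning crate for Rust.",
    "Whisper is a speech recognition model.",
    "NMR is a token that Numerai users stake on their models.",
]
prompt = build_prompt("Which token do Numerai users stake?", docs)
print(prompt)
```

In a full pipeline the resulting prompt would be passed to a locally hosted LLM, which generates the answer from the retrieved context.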

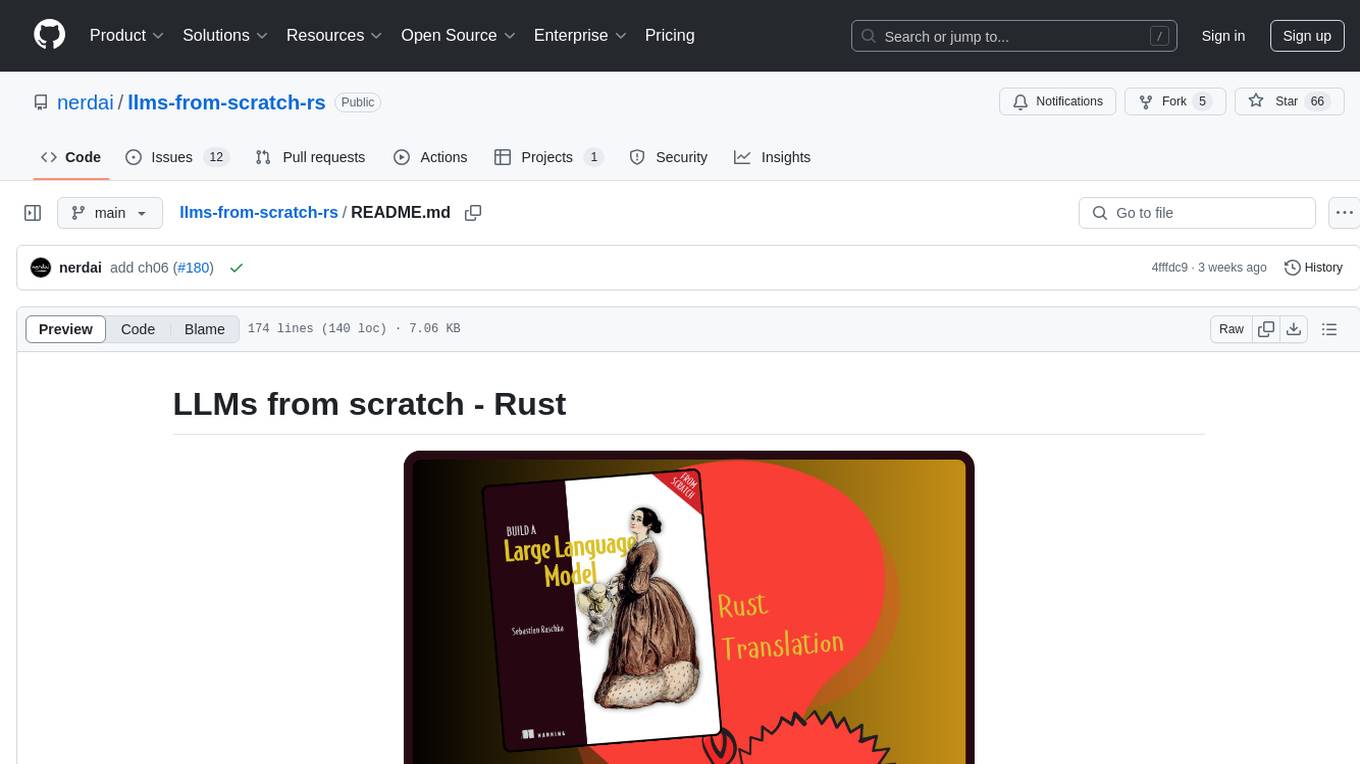

llms-from-scratch-rs

This project provides Rust code that follows the book 'Build a Large Language Model (From Scratch)' by Sebastian Raschka. It translates the book's PyTorch code into Rust using the Candle crate, aiming to build a GPT-style LLM. Users can clone the repo, run the examples and exercises, and access the same datasets as in the book. The project covers the book's chapters on understanding large language models, working with text data, coding attention mechanisms, implementing a GPT model, pretraining on unlabeled data, fine-tuning for classification, and fine-tuning to follow instructions.
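
For readers unfamiliar with the attention mechanisms mentioned above, the following is a minimal pure-Python sketch of causal scaled dot-product attention, the core operation in a GPT-style model. It is not taken from the project or the book: the learned query, key, and value projections are omitted as a simplifying assumption, so the same toy vectors serve as all three inputs.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(queries, keys, values):
    """Scaled dot-product attention where position i sees only positions 0..i."""
    d = len(keys[0])
    outputs, weights = [], []
    for i, q in enumerate(queries):
        # Causal mask: score only the current and earlier positions.
        scores = [
            sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d)
            for j in range(i + 1)
        ]
        w = softmax(scores)
        # Pad with zeros so every weight row has the full sequence length.
        weights.append(w + [0.0] * (len(keys) - i - 1))
        outputs.append(
            [sum(w[j] * values[j][c] for j in range(i + 1))
             for c in range(len(values[0]))]
        )
    return outputs, weights


# Toy token vectors (assumed); a real model would apply learned projections first.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_attention(x, x, x)
for row in weights:
    print([round(v, 3) for v in row])
```

Each row of `weights` sums to 1 and is zero to the right of the diagonal, which is what prevents a token from attending to later tokens during training.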

20 - OpenAI GPTs

Data Science Copilot

Data science co-pilot specializing in statistical modeling and machine learning.

ML Engineer GPT

I'm a Python and PyTorch expert with knowledge of ML infrastructure requirements ready to help you build and scale your ML projects.

3D Printers

Expert guide in 3D printing for all skill levels, offering comprehensive advice.

Personalized ML+AI Learning Program

Interactive ML/AI tutor providing structured daily lessons.

Code & Research ML Engineer

ML Engineer who codes and researches for you! Created by Meysam.

Data Dynamo

A friendly data science coach offering practical, useful, and accurate advice.

Alas Data Analytics Student Mentor

Hello, I am Alas Academy's AI mentor for Data Analytics. You can ask me any question :)

Therocial Scientist

I am a digital scientist skilled in Python, here to assist with scientific and data analysis tasks.