Best AI Tools for Annotating Data

20 - AI Tool Sites

CVAT

CVAT is an open-source data annotation platform that helps teams of any size annotate data for machine learning. It is used by companies big and small in a variety of industries, including healthcare, retail, and automotive. CVAT is known for its intuitive user interface, advanced features, and support for a wide range of data formats. It is also highly extensible, allowing users to add their own custom features and integrations.

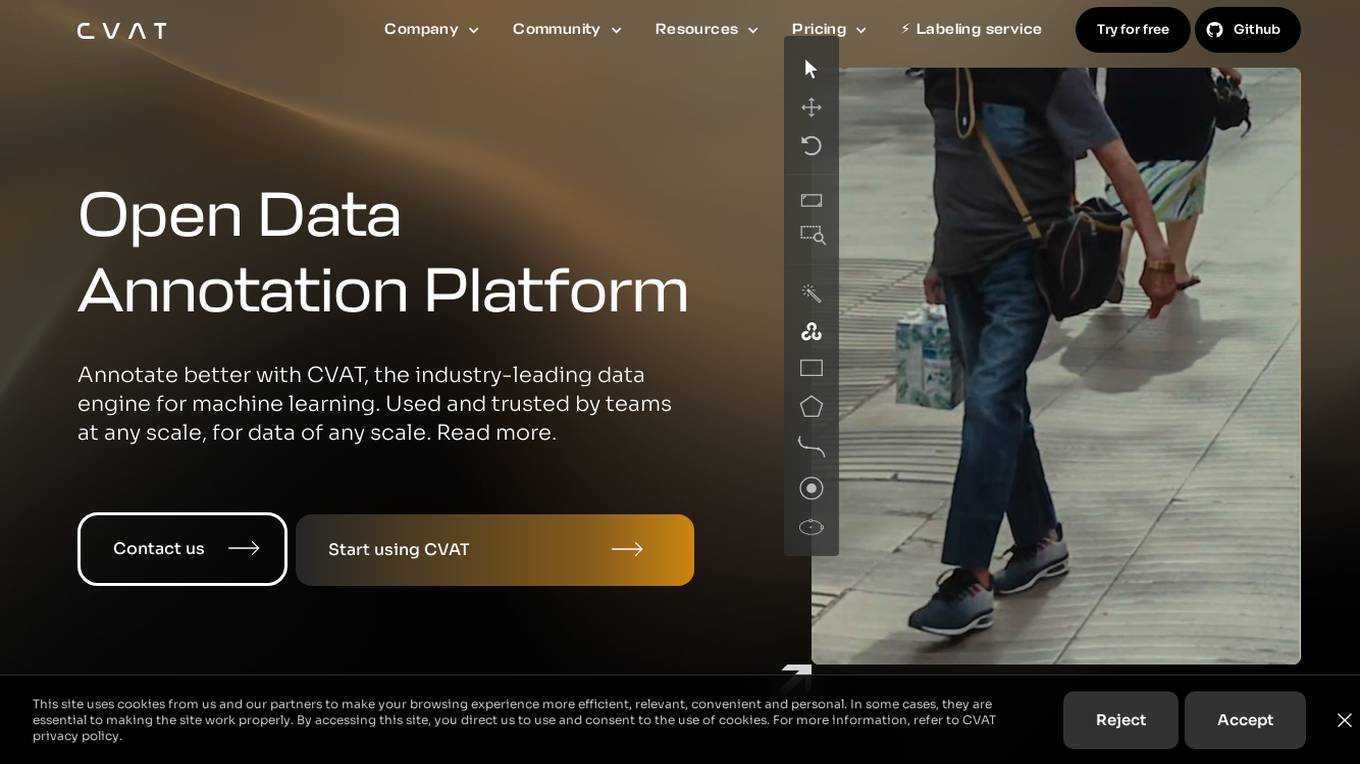

Cogitotech

Cogitotech is an AI tool that specializes in data annotation and labeling. The platform offers a comprehensive suite of services tailored to the training data needs of computer vision models and AI applications. With a decade of industry experience, Cogitotech provides high-quality training data for industries such as healthcare, financial services, and security. The platform aims to minimize bias in AI algorithms and to deliver accurate, reliable training data for deploying AI in real-world systems.

Pulan

Pulan is a comprehensive platform designed to assist in collecting, curating, annotating, and evaluating data points for various AI initiatives. It offers services in Natural Language Processing, Data Annotation, and Computer Vision across multiple industries such as Agriculture, Medical, Life Sciences, Government, Automotive, Insurance & Finance, Logistics, Software & Internet, Manufacturing, Retail, Construction, Energy, and Food & Beverage. Pulan provides a one-stop destination for reliable data collection and curation by industry experts, with a vast inventory of millions of datasets available for licensing at a fraction of the cost of creating the data oneself.

Innodata Inc.

Innodata Inc. is a global data engineering company that delivers AI-enabled software platforms and managed services for AI data collection and annotation, AI digital transformation, and industry-specific business processes. It provides a full suite of services and products to power data-centric AI initiatives, combining artificial intelligence with human expertise. With more than 30 years in business, the company positions itself on high-quality data and customer service.

Sapien.io

Sapien.io is a decentralized data foundry that offers data labeling services powered by a decentralized workforce and gamified platform. The platform provides high-quality training data for large language models through a human-in-the-loop labeling process, enabling fine-tuning of datasets to build performant AI models. Sapien combines AI and human intelligence to collect and annotate various data types for any model, offering customized data collection and labeling models across industries.

Encord

Encord is a complete data development platform for AI applications, tailored to computer vision and multimodal AI teams. It offers tools to manage, clean, and curate data, streamline labeling and workflow management, and evaluate model performance. Encord aims to simplify data-centric AI pipelines so that organizations can build better models and deploy high-quality production AI faster.

DeepVinci

DeepVinci is an AI-powered platform that helps businesses automate their workflows and make better decisions. It offers a range of features, including data annotation, model training, and predictive analytics.

OpenAI01

OpenAI01.net is an AI tool that offers free usage with some limitations. It provides a new series of AI models designed to spend more time thinking before responding, capable of reasoning through complex tasks and solving harder problems in science, coding, and math. Users can ask questions and get answers for free, with the option to select different models based on credits. The tool excels in complex reasoning tasks and has shown impressive performance in various benchmarks.

Patee.io

Patee.io is an AI-powered platform that helps businesses automate their data annotation and labeling tasks. With Patee.io, businesses can create, manage, and annotate large datasets for training machine learning models. Its features include a user-friendly interface, a range of annotation tools, and support for collaboration. Patee.io also offers pre-built models that can automate parts of the annotation process, saving businesses time and money.

4Quant

4Quant is an AI-powered medical imaging platform that uses Big Data and Deep Learning technology to accelerate the extraction of high-quality medical labels. The platform offers a range of tools for image analysis, annotation, and data analytics in the medical field. 4Quant aims to provide scalable solutions for medical imaging analysis, statistical reporting, and personalized training in image analysis. The platform is built on the Apache Spark Big Data framework and integrates with cloud computing for efficient processing of large datasets.

Datature

Datature is an all-in-one platform for building and deploying computer vision models. It provides tools for data management, annotation, training, and deployment, making it easy to develop and implement computer vision solutions. Datature is used by a variety of industries, including healthcare, retail, manufacturing, and agriculture.

Datasaur

Datasaur is an advanced text and audio data labeling platform that offers customizable solutions for various industries such as LegalTech, Healthcare, Financial, Media, e-Commerce, and Government. It provides features like configurable annotation, quality control automation, and workforce management to enhance the efficiency of NLP and LLM projects. Datasaur prioritizes data security with military-grade practices and offers seamless integrations with AWS and other technologies. The platform aims to streamline the data labeling process, allowing engineers to focus on creating high-quality models.

Globose Technology Solutions

Globose Technology Solutions Pvt Ltd (GTS) is an AI data collection company that provides image, video, text, and speech datasets, among others, for training machine learning models. It offers premium data collection services with a human touch, aiming to refine AI vision and propel AI forward. With over 25 years of experience, the company specializes in data management, annotation, and effective data collection techniques for AI/ML. It focuses on unlocking high-quality data, understanding AI's transformative impact, and ensuring data accuracy as the backbone of reliable AI.

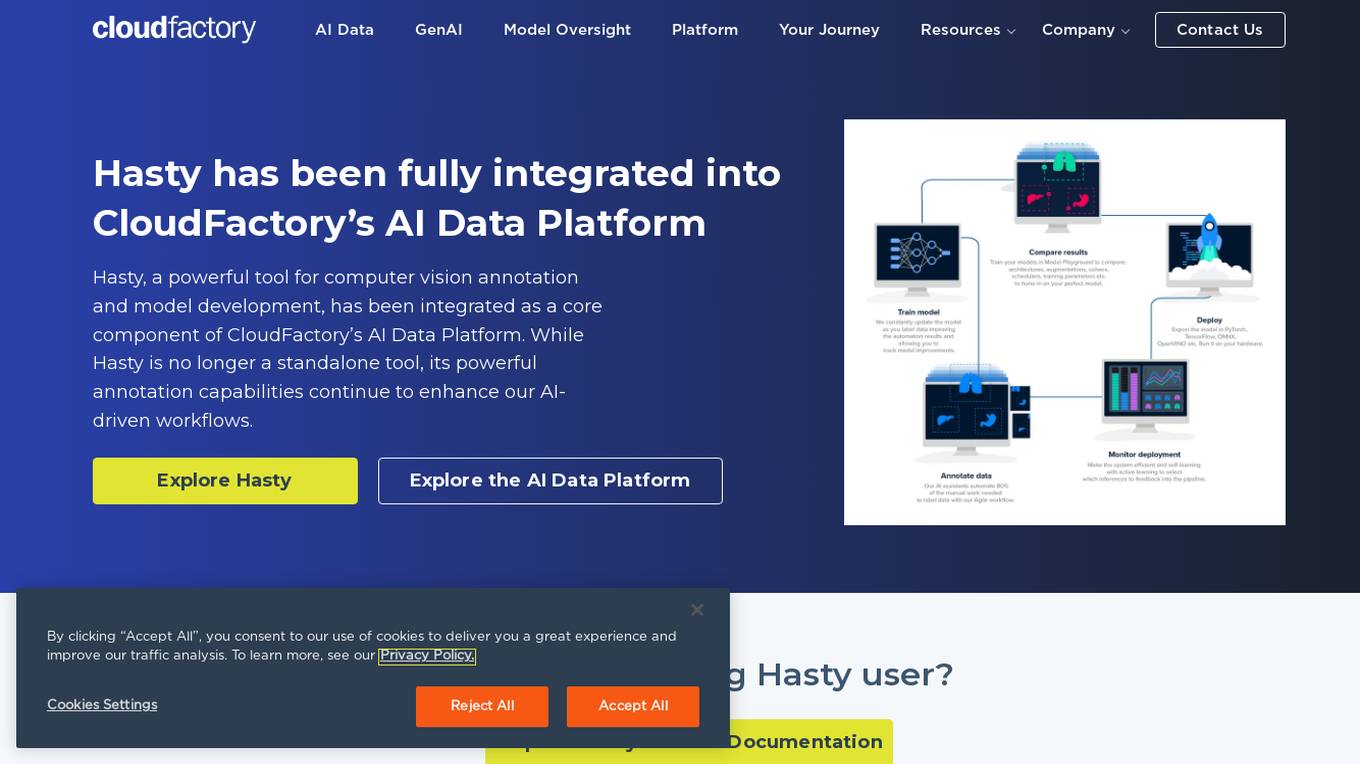

Hasty

CloudFactory's AI Data Platform, including the GenAI Model Oversight Platform, integrates Hasty as a powerful tool for computer vision annotation and model development. Hasty's annotation capabilities enhance AI-driven workflows within the platform, offering comprehensive solutions for data labeling, computer vision, NLP, and more.

UBIAI

UBIAI is a powerful text annotation tool that helps businesses accelerate their data labeling process. With UBIAI, businesses can annotate any type of document, including PDFs, images, and text. UBIAI also offers a variety of features to make the annotation process easier and more efficient, such as auto-labeling, multi-lingual annotation, and team collaboration. With UBIAI, businesses can save time and money on their data labeling projects.

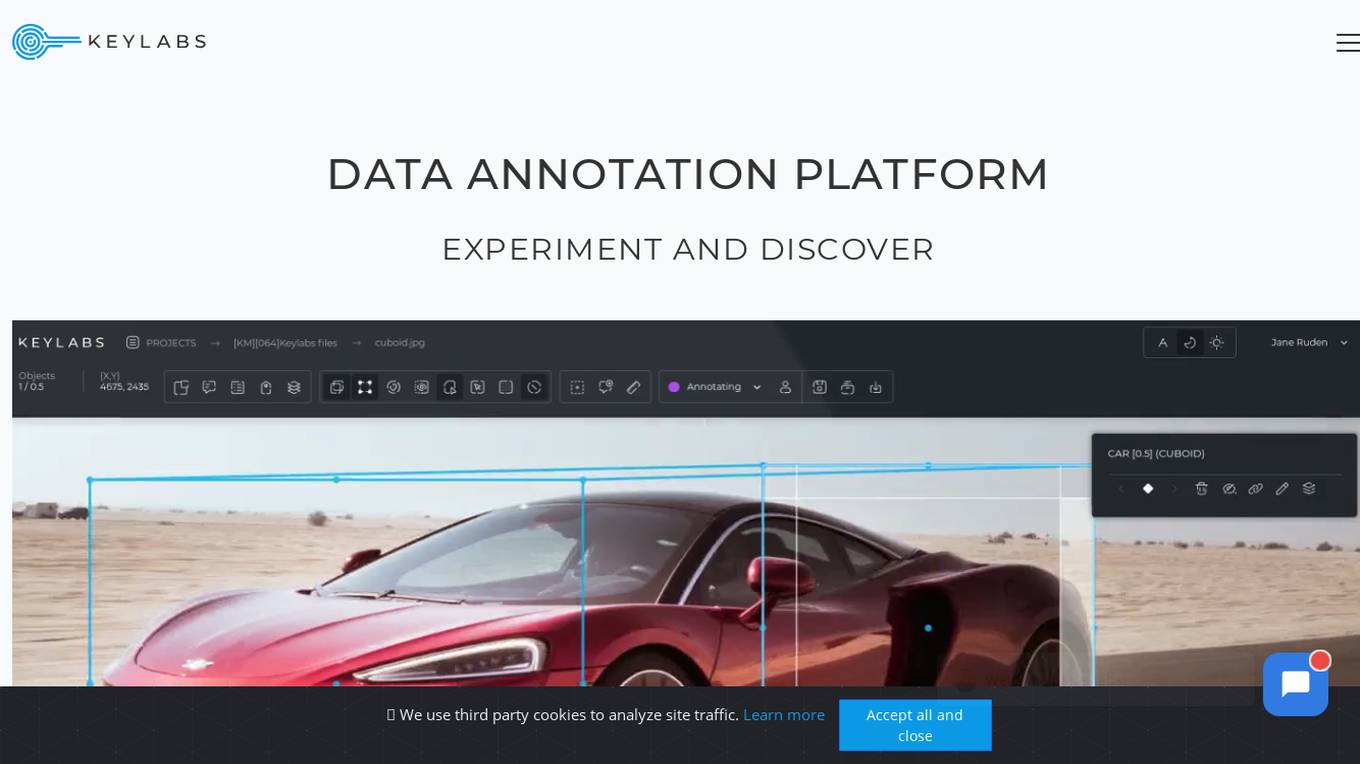

Keylabs

Keylabs is a data annotation platform that supports AI projects with precise data annotation and ML-assisted tools. It offers image and video annotation and labeling for industries such as automotive, aerial, agriculture, robotics, manufacturing, waste management, medical, healthcare, retail, fashion, sports, security, livestock, construction, and logistics. Keylabs provides advanced annotation tools, built-in machine learning, efficient operation management, and high performance to speed up the preparation of visual data for machine learning. The platform advertises transparent pricing with no hidden fees and offers a free trial.

Cogniroot

Cogniroot is an AI-powered platform that helps businesses automate their data annotation and data labeling processes. It provides a suite of tools and services that make it easy for businesses to train their machine learning models with high-quality data. Cogniroot's platform is designed to be scalable, efficient, and cost-effective, making it a valuable tool for businesses of all sizes.

OpenTrain AI

OpenTrain AI is a data labeling marketplace that leverages artificial intelligence to streamline the process of labeling data for machine learning models. It provides a platform where users can crowdsource data labeling tasks to a global community of annotators, ensuring high-quality labeled datasets for training AI algorithms. With advanced AI algorithms and human-in-the-loop validation, OpenTrain AI offers efficient and accurate data labeling services for various industries such as autonomous vehicles, healthcare, and natural language processing.

Innovatiana

Innovatiana is a data labeling outsourcing platform that offers high-quality datasets for artificial intelligence models. It specializes in image, audio/video, and text data labeling tasks, providing ethical outsourcing with a focus on impact and transparency. Innovatiana recruits and trains its own team in Madagascar, ensuring fair pay and good working conditions. It offers competitive rates, secure data handling, and high-quality labeled data to feed AI models. The platform supports various AI tasks such as Computer Vision, Data Collection, Data Moderation, Document Processing, and Natural Language Processing.

Shaip

Shaip is a human-powered data processing service specializing in AI and ML models. They offer a wide range of services including data collection, annotation, de-identification, and more. Shaip provides high-quality training data for various AI applications, such as healthcare AI, conversational AI, and computer vision. With over 15 years of expertise, Shaip helps organizations unlock critical information from unstructured data, enabling them to achieve better results in their AI initiatives.

4 - Open Source AI Tools

argilla

Argilla is a collaboration platform for AI engineers and domain experts who require high-quality outputs, full data ownership, and overall efficiency. It helps users improve AI output quality through better data, take control of their data and models, and iterate quickly on the right data and models. Argilla is an open-source, community-driven project that provides tools for achieving and maintaining high data quality standards, with a focus on NLP and LLMs. It is used by AI teams at organizations such as the Red Cross, Loris.ai, and Prolific to improve the quality and efficiency of AI projects.

Online-RLHF

This repository, Online RLHF, focuses on aligning large language models (LLMs) through online iterative Reinforcement Learning from Human Feedback (RLHF). It aims to fill a gap in existing open-source RLHF projects by providing a detailed recipe for online iterative RLHF. The workflow presented here has been shown to outperform offline counterparts in recent LLM literature, achieving comparable or better results than LLaMA3-8B-instruct using only open-source data. The repository includes model releases for the SFT, reward, and RLHF models, along with installation instructions for both inference and training environments. Users can follow step-by-step guidance for supervised fine-tuning, reward modeling, data generation, data annotation, and training, ultimately enabling iterative training to run automatically.
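The data generation and data annotation steps of such an iterative loop can be pictured roughly as follows. This is a minimal sketch under stated assumptions, not the repository's code: `generate` and `reward` are hypothetical callables standing in for the current policy model and the reward model, and labeling the best and worst of several samples as the preference pair is an assumed annotation strategy.

```python
from typing import Callable, Dict, List


def build_preference_pairs(
    prompts: List[str],
    generate: Callable[[str, int], str],   # hypothetical: (prompt, sample index) -> response
    reward: Callable[[str, str], float],   # hypothetical: (prompt, response) -> score
    n_samples: int = 4,
) -> List[Dict[str, str]]:
    """Sample several responses per prompt and keep the best/worst as a labeled pair."""
    pairs = []
    for prompt in prompts:
        # Data generation: draw n_samples candidate responses from the policy.
        candidates = [generate(prompt, i) for i in range(n_samples)]
        # Data annotation: score every candidate once with the reward model.
        scored = sorted((reward(prompt, c), c) for c in candidates)
        (low_score, rejected), (high_score, chosen) = scored[0], scored[-1]
        # Skip prompts where the reward model cannot separate the candidates.
        if high_score > low_score:
            pairs.append({"prompt": prompt, "chosen": chosen, "rejected": rejected})
    return pairs
```

In an iterative setup, the resulting pairs would feed the next training round, after which the updated policy replaces `generate` and the loop repeats.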

OlympicArena

OlympicArena is a comprehensive benchmark designed to evaluate advanced AI capabilities across various disciplines. It aims to push AI towards superintelligence by tackling complex challenges in science and beyond. The repository provides detailed data for different disciplines, allows users to run inference and evaluation locally, and offers a submission platform for testing models on the test set. It also includes an annotation interface and asks users to cite the authors' paper if they find the code or dataset helpful.

reductstore

ReductStore is a high-performance time series database designed for storing and managing large amounts of unstructured blob data. It offers features such as real-time querying, batching data, and HTTP(S) API for edge computing, computer vision, and IoT applications. The database ensures data integrity, implements retention policies, and provides efficient data access, making it a cost-effective solution for applications requiring unstructured data storage and access at specific time intervals.
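The idea of time-indexed blob storage with a retention policy can be illustrated with a small in-memory model. This is a sketch for intuition only and does not use or reproduce ReductStore's API: the class `TinyBlobStore`, its method names, and the size-based "evict oldest first" quota are all assumptions made for the example.

```python
import bisect
from typing import List, Tuple


class TinyBlobStore:
    """Hypothetical in-memory store: blobs keyed by timestamp, bounded by a byte quota."""

    def __init__(self, max_bytes: int) -> None:
        self.max_bytes = max_bytes
        self._timestamps: List[int] = []   # kept sorted ascending
        self._blobs: List[bytes] = []      # parallel to _timestamps
        self._size = 0

    def write(self, timestamp: int, blob: bytes) -> None:
        # Insert in timestamp order so range queries can use binary search.
        idx = bisect.bisect_right(self._timestamps, timestamp)
        self._timestamps.insert(idx, timestamp)
        self._blobs.insert(idx, blob)
        self._size += len(blob)
        # Assumed retention policy: drop the oldest records while over quota.
        while self._size > self.max_bytes and len(self._blobs) > 1:
            self._size -= len(self._blobs.pop(0))
            self._timestamps.pop(0)

    def query(self, start: int, stop: int) -> List[Tuple[int, bytes]]:
        """Return records with start <= timestamp < stop, oldest first."""
        lo = bisect.bisect_left(self._timestamps, start)
        hi = bisect.bisect_left(self._timestamps, stop)
        return list(zip(self._timestamps[lo:hi], self._blobs[lo:hi]))
```

Keeping timestamps sorted is the design choice that makes "give me everything in this interval" a pair of binary searches rather than a full scan.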

9 - OpenAI GPTs

Chapter Enhancer

An assistant for annotating and improving fiction writing, chapter by chapter.

Apple PencilKit Complete Code Expert

A detailed expert trained on all 1,823 pages of Apple PencilKit, offering complete coding solutions.