Best AI tools for Analyze Visual Data

20 - AI Tool Sites

Custom Vision

Custom Vision is a cognitive service provided by Microsoft that offers a user-friendly platform for creating custom computer vision models. Users can easily train the models by providing labeled images, allowing them to tailor the models to their specific needs. The service simplifies the process of implementing visual intelligence into applications, making it accessible even to those without extensive machine learning expertise.

Luxi

Luxi is an AI-powered tool that enables users to automatically discover items in images. By leveraging advanced image recognition technology, Luxi can accurately identify objects within images, making it easier for users to search, categorize, and analyze visual content. With Luxi, users can streamline their image processing workflows, saving time and effort in identifying and tagging objects within large image datasets.

Grok-1.5 Vision

Grok-1.5 Vision (Grok-1.5V) is a multimodal AI model developed by xAI, the AI company founded by Elon Musk. It combines computer vision and natural language processing, so it can analyze and interpret visual data for tasks such as object recognition, image classification, and scene understanding, and it can comprehend and generate human language for communication and information retrieval. Its multimodal design lets it handle complex tasks that require both visual and linguistic understanding, which makes it suited to applications in fields such as healthcare, manufacturing, and customer service.

GeoInfer

GeoInfer is a professional AI-powered geolocation platform that analyzes photographs to determine where they were taken. It uses visual-only inference technology to examine visual elements like architecture, terrain, vegetation, and environmental markers to identify geographic locations without requiring GPS metadata or EXIF data. The platform offers transparent accuracy levels for different use cases, including a Global Model with 1 km to 100 km accuracy, ideal for regional and city-level identification. Additionally, GeoInfer provides custom regional models for organizations requiring higher precision, such as meter-level accuracy for specific geographic areas. The platform is designed for professionals in various industries, including law enforcement, insurance fraud investigation, digital forensics, and security research.

PandasAI

PandasAI is an open-source AI tool designed for conversational data analysis. It allows users to ask questions of their enterprise data in natural language and receive real-time data insights. The tool integrates with various data sources and offers enhanced analytics, actionable insights, detailed reports, and visual data representation. PandasAI aims to democratize data analysis for better decision-making, offering enterprise solutions for stable and scalable internal data analysis. Users can also fine-tune models, ingest universal data, structure data automatically, augment datasets, extract data from websites, and forecast trends using AI.

Vansh

Vansh is an AI tool developed by a tech enthusiast. It specializes in Vision AI and Vispark technologies. The tool offers advanced features for image recognition, object detection, and visual data analysis. With a user-friendly interface, Vansh caters to both beginners and experts in the field of artificial intelligence.

LensAI

LensAI is an AI-powered contextual computer vision ad solution that monetizes any visual content and fine-tunes targeting through identifying objects, logos, actions, and context and matching them with relevant ads.

Report Video Generator

Report Video Generator is an AI-powered platform designed for business professionals to transform raw data from Excel spreadsheets, CSV files, and business documents into professional data visualization videos. The tool automates the process of creating stunning annual report videos, quarterly business presentations, and executive summary videos without the need for video editing experience. It offers features like Excel to video conversion, AI-powered data analysis, professional voice narration, and cinematic visual design to help users create impactful video reports for various business purposes.

Dreamervision.ai

Dreamervision.ai is an innovative AI tool that utilizes advanced machine learning algorithms to analyze and interpret images and videos. The tool is designed to provide users with valuable insights and information based on visual content, enabling them to make informed decisions and enhance their understanding of the world around them. With its cutting-edge technology, Dreamervision.ai offers a seamless and efficient way to extract meaningful data from visual media, making it a valuable asset for professionals in various industries.

VideoInsights.ai

VideoInsights.ai is an AI-powered platform that serves as your AI assistant for media analysis. It allows users to analyze media content in real-time and gain valuable insights through lightning-fast, conversational analysis. The platform offers powerful features such as chat with videos, visual analysis, uploading and managing audio/video files, analyzing YouTube videos, and integrating analysis features via API. VideoInsights GPT provides a conversational interface to intuitively analyze audio and visual content, enhancing the overall media experience.

Z.ai

Z.ai is a free AI chatbot and agent powered by GLM-5 and GLM-4.7. It provides users with an interactive platform to engage with AI technology for various purposes. The chatbot is designed to assist users in answering questions, providing information, and offering personalized recommendations. With advanced algorithms and machine learning capabilities, Z.ai aims to enhance user experience and streamline communication processes.

Visual Computing and Artificial Intelligence Department

The Visual Computing and Artificial Intelligence Department focuses on foundational research problems at the intersection of Computer Graphics, Computer Vision, and Artificial Intelligence. Their long-term vision is to develop new ways to capture, represent, synthesize, and simulate models of the real world with high detail, robustness, and efficiency. By uniting concepts from Computer Graphics, Computer Vision, and Artificial Intelligence, they aim to create advanced methods for perceiving, understanding, and interpreting the complex real world. The department is headed by Prof. Dr. Christian Theobalt at the Saarbruecken Research Center for Visual Computing, Interaction, and Artificial Intelligence.

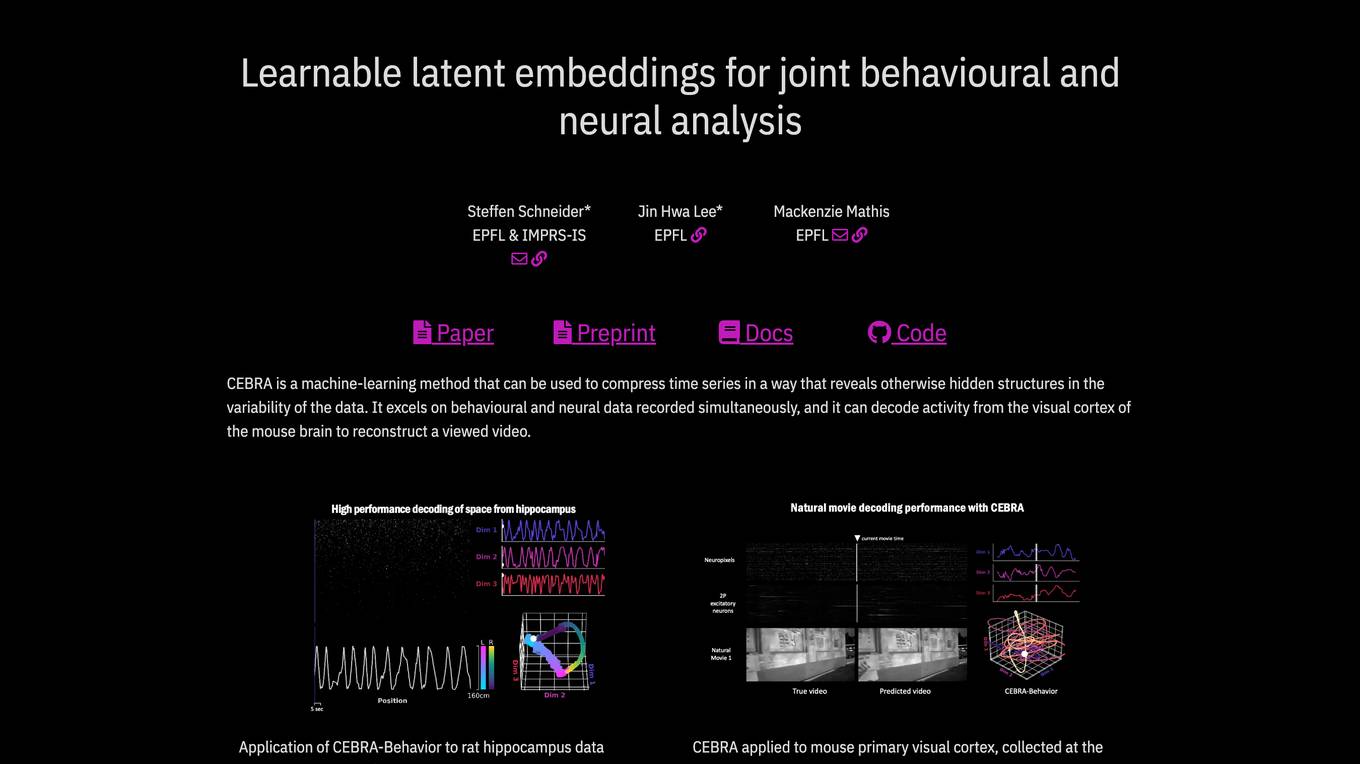

CEBRA

CEBRA is a self-supervised learning algorithm that provides interpretable embeddings of high-dimensional recordings using auxiliary variables. It excels in compressing time series data to reveal hidden structures, particularly in behavioral and neural data. The algorithm can decode activity from the visual cortex, reconstruct viewed videos, decode trajectories, and determine position during navigation. CEBRA is a valuable tool for joint behavioral and neural analysis, offering consistent and high-performance latent spaces for hypothesis testing and label-free usage across various datasets and species.

Supertype

Supertype is a full-cycle data science consultancy offering a range of services including computer vision, custom BI development, managed data analytics, programmatic report generation, and more. They specialize in providing tailored solutions for data analytics, business intelligence, and data engineering services. Supertype also offers services for developing custom web dashboards, computer vision research and development, PDF generation, managed analytics services, and LLM development. Their expertise extends to implementing data science in various industries such as e-commerce, mobile apps & games, and financial markets. Additionally, Supertype provides bespoke solutions for enterprises, advisory and consulting services, and an incubator platform for data scientists and engineers to work on real-world projects.

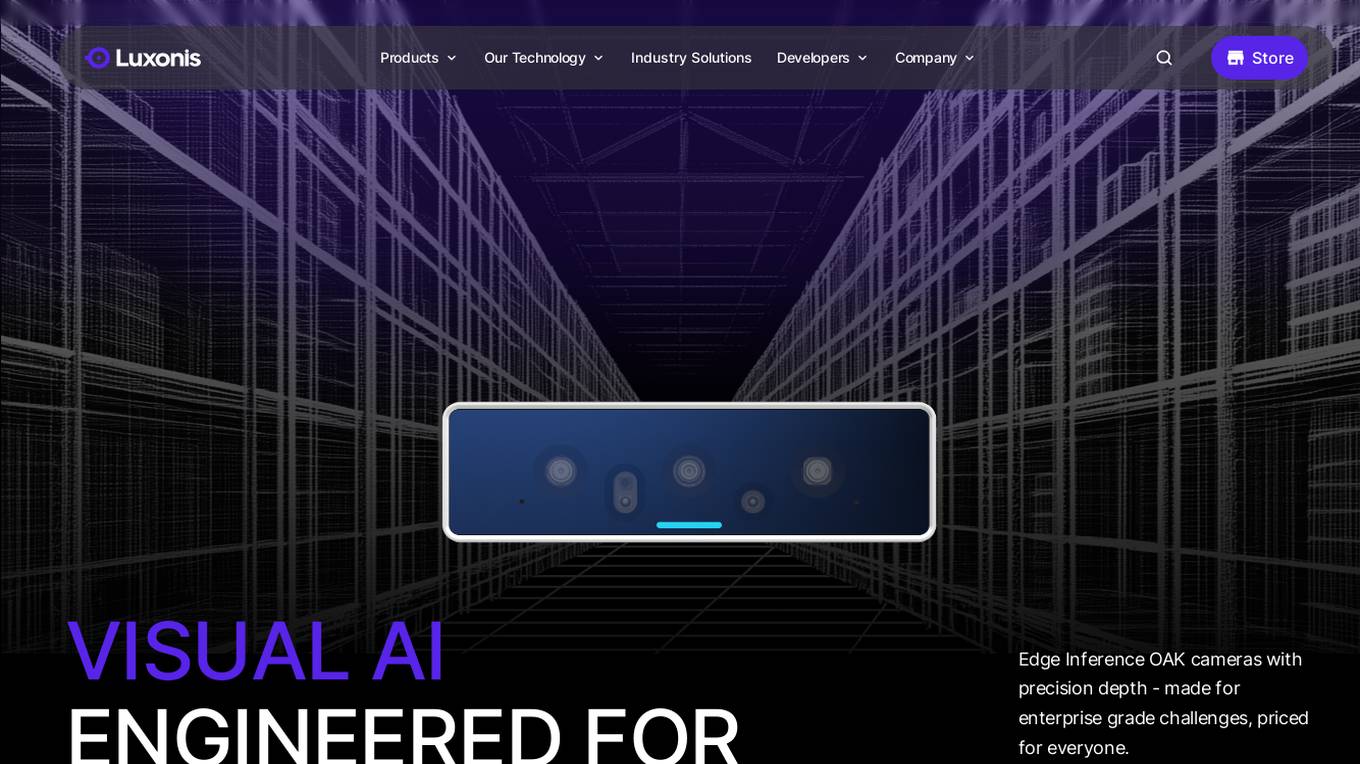

Luxonis

Luxonis offers Visual AI solutions engineered for precision edge inference. It provides stereo depth cameras that let users perform advanced vision tasks on-device, reducing latency and bandwidth demands. With the open-source DepthAI API, users can create and deploy custom vision solutions that scale with their needs. Luxonis also offers real-world training data for self-improving vision intelligence, and its hardware is built to keep operating through vibrations, temperature shifts, and extended use. The platform integrates advanced sensing capabilities, with cameras of up to 48MP, wide field of view, IMUs, microphones, ToF, thermal, IR illumination, and active stereo.

Molmo AI

Molmo AI is a powerful, open-source multimodal AI model revolutionizing visual understanding. It helps developers easily build tools that can understand images and interact with the world in useful ways. Molmo AI offers exceptional image understanding, efficient data usage, open and accessible features, on-device compatibility, and a new era in multimodal AI development. It closes the gap between open and closed AI models, empowers the AI community with open access, and efficiently utilizes data for superior performance.

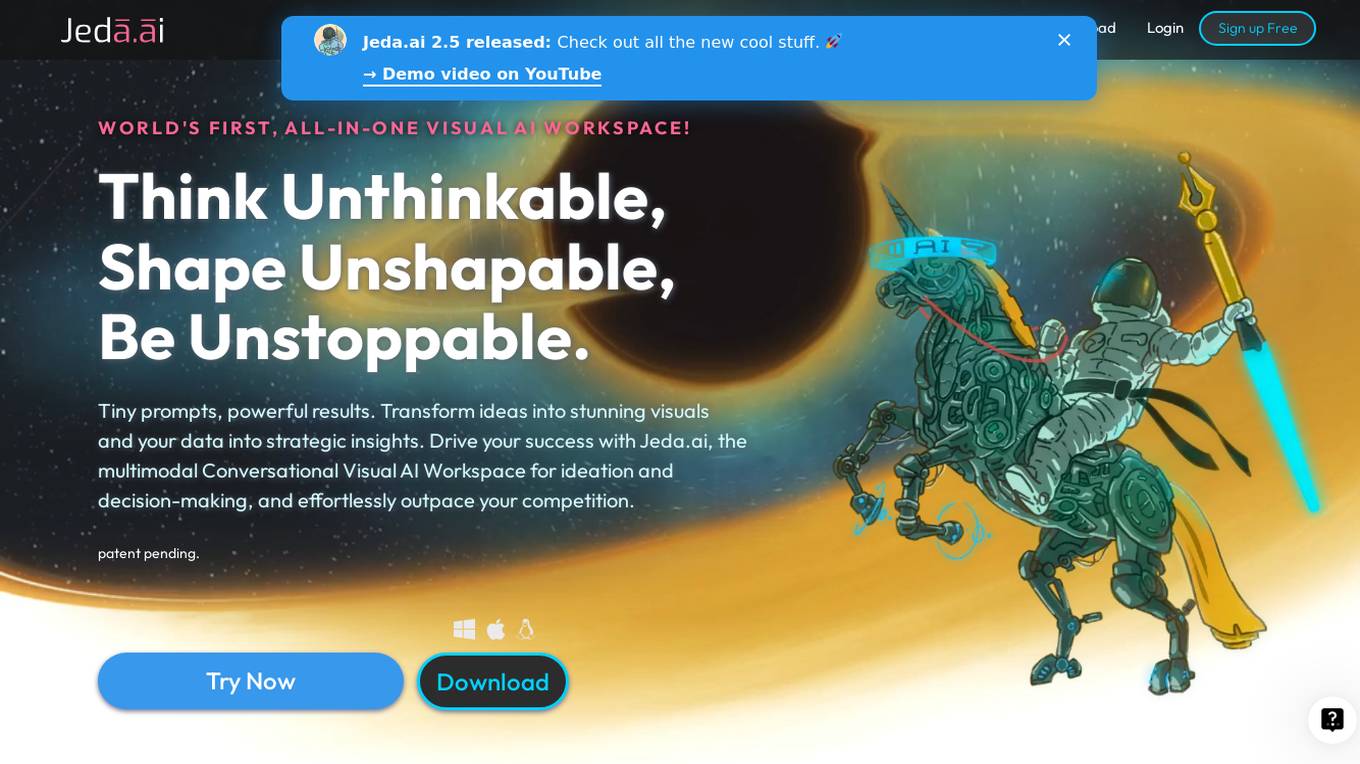

Jeda.ai

Jeda.ai is a cutting-edge AI application that offers a Visual AI Workspace for ideation and decision-making. It provides a platform for users to visualize, collaborate, and innovate using various AI tools like AI Template Analysis, AI Note Taking, AI Mind Map Diagrams, AI Flowchart Diagrams, AI Wireframe, AI Text Writer, AI Sticky Notes, AI Art, AI Vision, and Transform. The application caters to a wide range of business cases, including Leadership & Business Development, Product Management, Marketing, Sales, User Experience & Product Design, Design, Human Resources, Retrospective Analysis, Engineering, and Software Development. Jeda.ai aims to transform ideas into stunning visuals and data into strategic insights, helping users drive success and outpace their competition.

Jeda.ai

Jeda.ai is a generative AI workspace that allows users to create, visualize, and analyze data in a collaborative environment. It offers a variety of features, including AI template analysis, AI mind map diagrams, AI flowchart diagrams, AI wireframe, AI text writer, AI sticky notes, AI art, AI vision and transform, AI data analysis, AI document analysis, and AI business cases. Jeda.ai is designed to help users improve their productivity and make better decisions.

Restb.ai

Restb.ai is a leading provider of visual insights for real estate companies, utilizing computer vision and AI to analyze property images. The application offers solutions for AVMs, iBuyers, investors, appraisals, inspections, property search, marketing, insurance companies, and more. By providing actionable and unique data at scale, Restb.ai helps improve valuation accuracy, automate manual processes, and enhance property interactions. The platform enables users to leverage visual insights to optimize valuations, automate report quality checks, enhance listings, improve data collection, and more.

KNIME

KNIME is a data science platform that enables users to analyze, blend, transform, model, visualize, and deploy data science solutions without coding. It provides a range of features and advantages for business and domain experts, data experts, end users, and MLOps & IT professionals across various industries and departments.

3 - Open Source AI Tools

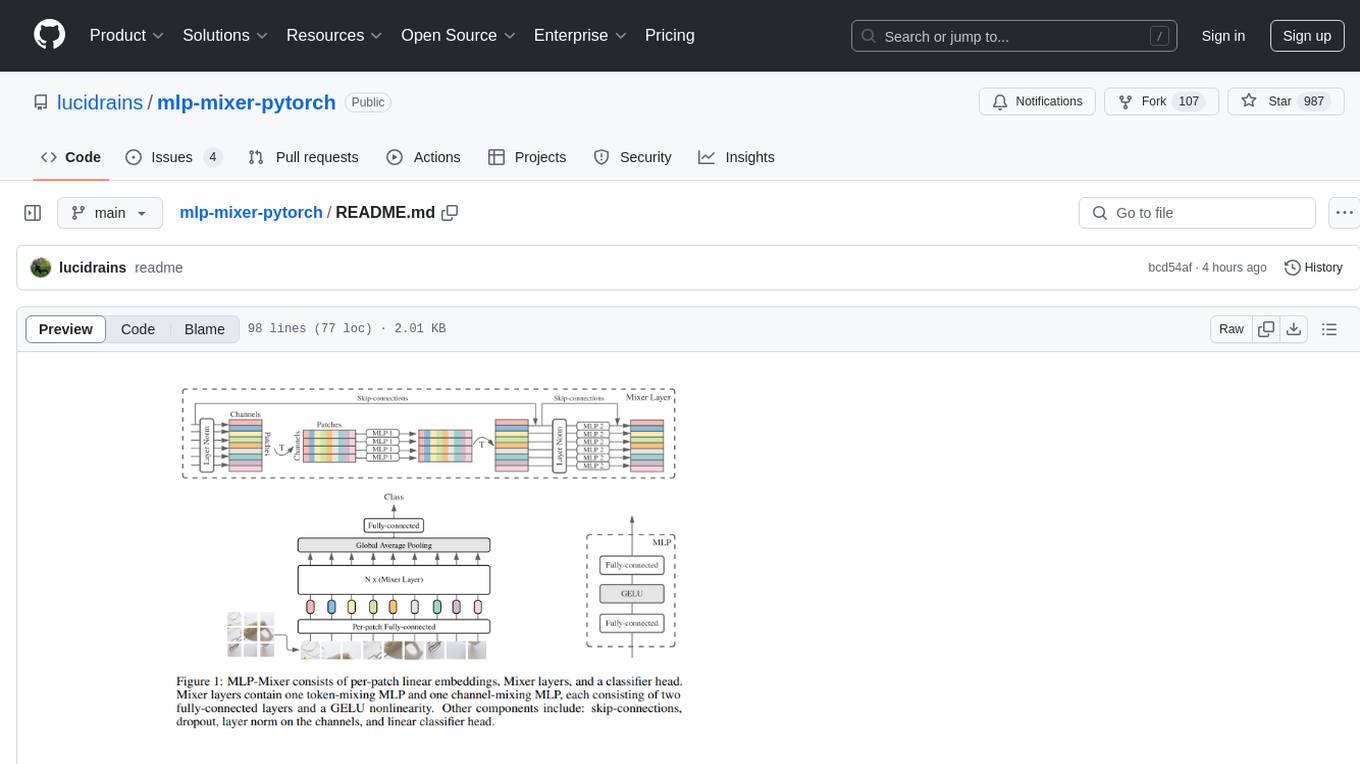

mlp-mixer-pytorch

MLP Mixer - Pytorch is a PyTorch implementation of MLP-Mixer, an all-MLP architecture for vision tasks developed by Google AI. The architecture does not require convolutions or attention mechanisms, offering an alternative approach for image and video processing. The tool is designed to handle tasks related to image classification and video recognition, utilizing multi-layer perceptrons (MLPs) for feature extraction and classification. Users can install the tool using pip and integrate it into their PyTorch projects to experiment with MLP-based vision models.

ppt2desc

ppt2desc is a command-line tool that converts PowerPoint presentations into detailed textual descriptions using vision language models. It interprets and describes visual elements, capturing the full semantic meaning of each slide in a machine-readable format. The tool supports various model providers and offers features like converting PPT/PPTX files to semantic descriptions, processing individual files or directories, visual elements interpretation, rate limiting for API calls, customizable prompts, and JSON output format for easy integration.

awesome-robotics-ai-companies

A curated list of companies in the robotics and artificially intelligent agents industry, including large companies, stable start-ups, non-profits, and government research labs. The list covers companies working on autonomous vehicles, robotics, artificial intelligence, machine learning, computer vision, and more. It aims to showcase industry innovators and important players in the field of robotics and AI.

20 - OpenAI GPTs

Stock Ratings Tracker by Trading Volatility

Specialist in analyzing ratings data with visual insights.

Product Visual GPT

An AI adept in product visuals and data, creating compelling images and documents.

Goods Guru

"Goods Guru" represents a fusion of AI technology with text and visual content creation, aimed at boosting online sales and improving the digital footprint of e-commerce businesses.

Flowchart, MindMap, Sequential Diagram

Produces sequence diagrams, mind maps, and flowcharts, and explains the appropriate contexts for each type.

Crypto Chronicle Assistant

Writes as a crypto journalist with insights, analysis, and visuals, in UK English.

Vicky Vega

Generates Vega-Lite JSON code for Power BI visuals from data and descriptions.

Quake and Volcano Watch Iceland

Seismic and volcanic monitor with in-depth data and visuals.

Time Tracker Visualizer (See Stats from Toggl)

I turn Toggl data into insightful visuals. Get your data from Settings (in Toggl Track) -> Data Export -> Export Time Entries. Ask for bonus analyses and plots :)