prompt-optimizer

A prompt optimizer that helps you write high-quality prompts.

Stars: 14718

Prompt Optimizer is a powerful AI prompt optimization tool that helps you write better AI prompts and improve the quality of AI output. It supports both web application and Chrome extension usage. Its features include intelligent prompt optimization, real-time testing that compares prompts before and after optimization, integration with multiple mainstream AI models, client-side processing for security, encrypted local storage for data privacy, a responsive design, and more.

README:

Try Online | Quick Start | FAQ | Chrome Extension | 💖 Sponsor

Development Docs | Vercel Deployment Guide | MCP Deployment and Usage | DeepWiki Docs | ZRead Docs

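Prompt Optimizer is a powerful AI prompt optimization tool that helps you write better AI prompts and improve the quality of AI output. It can be used in four ways: as a web application, a desktop application, a Chrome extension, or a Docker deployment.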

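1. Role-play dialogue: unlocking the potential of small models

In cost-sensitive production settings or privacy-focused local deployments, structured prompts let small models settle into a role reliably, delivering an immersive, highly consistent role-play experience and drawing out their full potential.

2. Knowledge graph extraction: keeping production environments stable

In production environments that require programmatic processing, high-quality prompts significantly lower the level of intelligence required of the model, so that cheaper small models can also reliably produce output in the specified format. This tool is designed to help developers reach that goal quickly, speeding up development, ensuring stability, and cutting costs while improving efficiency.

3. Poetry writing: supporting creative exploration and tailored requirements

When working with a powerful AI, our goal is not just a “good” answer but a unique answer that is “what we actually want”. This tool helps users refine a vague idea (such as “write a poem”) into concrete requirements (which theme, what imagery, what emotion), helping you explore, discover, and precisely express your own creative ideas and co-create one-of-a-kind works with AI.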

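- 🎯 Smart optimization: optimize prompts in one click, with multi-round iterative refinement to improve the accuracy of AI responses

- 📝 Dual-mode optimization: supports both system prompt optimization and user prompt optimization for different use cases

- 🔄 Comparison testing: compare the original and optimized prompts side by side in real time to see the effect of optimization directly

- 🤖 Multi-model integration: supports mainstream AI models including OpenAI, Gemini, DeepSeek, Zhipu AI, and SiliconFlow

- 🔒 Secure architecture: purely client-side processing; data goes directly to the AI provider without passing through any intermediate server

- 📱 Multi-platform support: available as a web app, a desktop app, a Chrome extension, and a Docker deployment

- 🔐 Access control: password protection to secure your deployment

- 🧩 MCP support: supports the Model Context Protocol (MCP), enabling integration with MCP-compatible applications such as Claude Desktop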

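Preview environment: https://prompt-dev.always200.com | Try out the new features and send us your feedback

- 📊 Context variable management: custom variables, multi-turn conversation testing, and variable substitution preview

- 🛠️ Tool calling support: Function Calling integration, supporting OpenAI and Gemini tool calls

- 🎯 Advanced testing mode: more flexible prompt testing and debugging

Note: The advanced features are still being developed and refined, and will be formally merged into the main release in a future version.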

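Direct access: https://prompt.always200.com

Because this is a purely front-end project, all data is stored only locally in your browser and is never uploaded to any server, so using the online version directly is also safe and reliable.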

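Option 1: One-click deployment to your own Vercel account (convenient, but it cannot be updated automatically later):

Option 2: Fork the project and import it into Vercel (recommended, but requires manual setup following the deployment docs):

- First fork the project to your own GitHub account

- Then import the project in Vercel

- This lets you track upstream updates and easily sync the latest features and fixes

- Configure environment variables:

  - ACCESS_PASSWORD: sets an access password and enables access restriction

  - VITE_OPENAI_API_KEY, etc.: API keys for the various AI providers

For more detailed deployment steps and notes, see the Vercel Deployment Guide.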

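Download the latest version from GitHub Releases. We provide both installers and archives for each platform.

- Installer (recommended): e.g. *.exe, *.dmg, *.AppImage. This is strongly recommended because it supports automatic updates.

- Archive: e.g. *.zip. Extract and run, but it cannot update itself automatically.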

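Key advantages of the desktop app:

- ✅ No CORS restrictions: as a native desktop application, it completely avoids browser cross-origin (CORS) issues. This means you can connect directly to any AI provider's API, including a locally deployed Ollama or commercial APIs with strict security policies, for the most complete and stable experience.

- ✅ Automatic updates: versions installed via an installer (e.g. .exe, .dmg) automatically check for and update to the latest release.

- ✅ Standalone operation: no browser required, with faster response and better performance.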

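- Install from the Chrome Web Store (reviews are slow, so it may not be the latest version): Chrome Web Store page

- Click the extension icon to open Prompt Optimizer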

Docker deployment commands:

```bash
# Run the container (default configuration)
docker run -d -p 8081:80 --restart unless-stopped --name prompt-optimizer linshen/prompt-optimizer

# Run the container with API keys and an access password
# ACCESS_USERNAME is optional and defaults to "admin"; ACCESS_PASSWORD sets the access password
docker run -d -p 8081:80 \
  -e VITE_OPENAI_API_KEY=your_key \
  -e ACCESS_USERNAME=your_username \
  -e ACCESS_PASSWORD=your_password \
  --restart unless-stopped \
  --name prompt-optimizer \
  linshen/prompt-optimizer
```

Mirror for users in China: if Docker Hub is slow to access, replace linshen/prompt-optimizer in the commands above with registry.cn-guangzhou.aliyuncs.com/prompt-optimizer/prompt-optimizer
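
If you enable ACCESS_USERNAME/ACCESS_PASSWORD, scripted checks against the container (for example, a health probe) also have to authenticate. The minimal Python sketch below assumes the protection is standard HTTP Basic authentication; this README does not state which mechanism is used, so verify it against your deployment. It only builds the request and leaves sending it as a comment.

```python
import base64
import urllib.request


def build_authenticated_request(url: str, username: str, password: str) -> urllib.request.Request:
    """Build a GET request with an HTTP Basic Authorization header.

    Assumption: the container's access control (ACCESS_USERNAME /
    ACCESS_PASSWORD) is enforced via standard HTTP Basic authentication.
    """
    credentials = f"{username}:{password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


if __name__ == "__main__":
    # "admin" is the documented default username; the password is a placeholder.
    req = build_authenticated_request("http://localhost:8081/", "admin", "your_password")
    print(req.get_method(), req.full_url)
    # To send it against a running container:
    #   with urllib.request.urlopen(req, timeout=10) as resp:
    #       print(resp.status)
```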

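Docker Compose deployment steps:

```bash
# 1. Clone the repository
git clone https://github.com/linshenkx/prompt-optimizer.git
cd prompt-optimizer

# 2. Optional: create a .env file to configure API keys and access authentication
cp env.local.example .env
# Edit .env and fill in your actual API keys and settings

# 3. Start the service
docker compose up -d

# 4. Follow the logs
docker compose logs -f

# 5. Access the service
# Web UI: http://localhost:8081
# MCP server: http://localhost:8081/mcp
```

You can also edit the docker-compose.yml file directly to customize the configuration: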

Example docker-compose.yml:

```yaml
services:
  prompt-optimizer:
    # Use the Docker Hub image
    image: linshen/prompt-optimizer:latest
    # Or use the Alibaba Cloud mirror (recommended for users in China)
    # image: registry.cn-guangzhou.aliyuncs.com/prompt-optimizer/prompt-optimizer:latest
    container_name: prompt-optimizer
    restart: unless-stopped
    ports:
      - "8081:80" # Web app port (also serves the MCP server under the /mcp path)
    environment:
      # API key configuration
      - VITE_OPENAI_API_KEY=your_openai_key
      - VITE_GEMINI_API_KEY=your_gemini_key
      # Access control (optional)
      - ACCESS_USERNAME=admin
      - ACCESS_PASSWORD=your_password
```

MCP Server usage:

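Prompt Optimizer now supports the Model Context Protocol (MCP) and can be integrated with MCP-capable AI applications such as Claude Desktop.

When running via Docker, the MCP Server starts automatically and is reachable at http://ip:port/mcp.

The MCP Server needs API keys configured in order to work. The main MCP-specific settings are:

```bash
# MCP server configuration
MCP_DEFAULT_MODEL_PROVIDER=openai # Options: openai, gemini, deepseek, siliconflow, zhipu, custom
MCP_LOG_LEVEL=info # Log level
```

In a Docker environment, the MCP Server runs together with the web app, so you can reach the MCP service on the same port as the web app, under the /mcp path.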

For example, if you map port 80 of the container to port 8081 on the host:

```bash
docker run -d -p 8081:80 \
  -e VITE_OPENAI_API_KEY=your-openai-key \
  -e MCP_DEFAULT_MODEL_PROVIDER=openai \
  --name prompt-optimizer \
  linshen/prompt-optimizer
```

then the MCP Server will be reachable at http://localhost:8081/mcp.
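
To check the endpoint from a script, the sketch below builds the JSON-RPC initialize request that an MCP client sends first. It assumes the /mcp path speaks MCP's Streamable HTTP transport (JSON-RPC 2.0 over HTTP POST); this README does not name the transport, and the protocolVersion string and clientInfo name are illustrative choices.

```python
import json
import urllib.request

# Assumption: /mcp implements MCP's Streamable HTTP transport (JSON-RPC 2.0 over POST).
MCP_URL = "http://localhost:8081/mcp"


def build_initialize_request(url: str = MCP_URL, request_id: int = 1) -> urllib.request.Request:
    """Build (but do not send) the JSON-RPC `initialize` request an MCP client sends first."""
    payload = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            # One published MCP revision; the server may negotiate another.
            "protocolVersion": "2025-03-26",
            "capabilities": {},
            "clientInfo": {"name": "readme-sketch", "version": "0.1.0"},  # hypothetical client name
        },
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Streamable HTTP servers may answer with plain JSON or an SSE stream.
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_initialize_request()
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # Against a running container you could send it with:
    #   with urllib.request.urlopen(req, timeout=10) as resp:
    #       print(resp.status, resp.headers.get("Content-Type"), resp.headers.get("Mcp-Session-Id"))
```

A complete client would then read the reply (plain JSON or an SSE stream), keep any Mcp-Session-Id header the server returns, and send a notifications/initialized notification before calling tools.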

To use Prompt Optimizer in Claude Desktop, add a service entry to Claude Desktop's configuration file.

- Locate Claude Desktop's configuration directory:

  - Windows: %APPDATA%\Claude\services

  - macOS: ~/Library/Application Support/Claude/services

  - Linux: ~/.config/Claude/services

- Edit or create the services.json file and add the following:

```json
{
  "services": [
    {
      "name": "Prompt Optimizer",
      "url": "http://localhost:8081/mcp"
    }
  ]
}
```

Be sure to replace localhost:8081 with the actual address and port of your Prompt Optimizer deployment.
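
If services.json already lists other services, it is easy to overwrite them when pasting the snippet above. This hypothetical helper builds the Prompt Optimizer entry for your own host and port and merges it into an existing configuration, replacing only an entry with the same name; it follows the services.json layout shown above and assumes nothing beyond it.

```python
import json
from typing import Any, Dict


def prompt_optimizer_entry(host: str = "localhost", port: int = 8081, scheme: str = "http") -> Dict[str, str]:
    """Build the service entry from the services.json example for a given address."""
    return {"name": "Prompt Optimizer", "url": f"{scheme}://{host}:{port}/mcp"}


def merge_service(config: Dict[str, Any], entry: Dict[str, str]) -> Dict[str, Any]:
    """Add `entry` to config["services"], replacing any existing entry with the same name."""
    others = [s for s in config.get("services", []) if s.get("name") != entry["name"]]
    return {**config, "services": others + [entry]}


if __name__ == "__main__":
    # Hypothetical LAN address of a Docker deployment.
    existing: Dict[str, Any] = {}  # e.g. json.load(f) if services.json already exists
    updated = merge_service(existing, prompt_optimizer_entry(host="192.168.1.10"))
    print(json.dumps(updated, indent=2, ensure_ascii=False))
```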

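The MCP server provides the following tools:

- optimize-user-prompt: optimizes a user prompt to improve LLM performance

- optimize-system-prompt: optimizes a system prompt to improve LLM performance

- iterate-prompt: performs targeted, iterative refinement of a prompt that is already mature and well developed

For more details, see the MCP Server User Guide.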
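
The tools above are invoked through standard MCP JSON-RPC methods: tools/list to discover them and tools/call to run one. The sketch below builds both requests, assuming the same Streamable HTTP transport and default address as the earlier examples. The argument name prompt is hypothetical; take the real input schema from the tools/list response.

```python
import json
import urllib.request
from typing import Any, Dict, Optional

MCP_URL = "http://localhost:8081/mcp"  # default address from the Docker examples


def build_mcp_request(method: str, params: Optional[Dict[str, Any]] = None, request_id: int = 2,
                      session_id: Optional[str] = None, url: str = MCP_URL) -> urllib.request.Request:
    """Build (but do not send) a JSON-RPC 2.0 request for an MCP server over HTTP POST."""
    payload: Dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        payload["params"] = params
    headers = {
        "Content-Type": "application/json",
        "Accept": "application/json, text/event-stream",
    }
    if session_id is not None:
        # Echo the Mcp-Session-Id the server returned from `initialize`, if it issued one.
        headers["Mcp-Session-Id"] = session_id
    return urllib.request.Request(url, data=json.dumps(payload).encode("utf-8"),
                                  headers=headers, method="POST")


if __name__ == "__main__":
    # 1. Discover the real tool names and input schemas.
    list_req = build_mcp_request("tools/list")
    # 2. Call a tool. "prompt" is a HYPOTHETICAL argument name: use the schema from tools/list.
    call_req = build_mcp_request(
        "tools/call",
        {"name": "optimize-user-prompt", "arguments": {"prompt": "Write a poem"}},
        request_id=3,
    )
    for req in (list_req, call_req):
        print(req.data.decode("utf-8"))
```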

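How to configure API keys:

- Click the "⚙️ Settings" button in the upper-right corner of the interface

- Select the "Model Management" tab

- Click the model you want to configure (e.g. OpenAI, Gemini, DeepSeek)

- Enter the corresponding API key in the configuration dialog that opens

- Click "Save"

Supported models: OpenAI, Gemini, DeepSeek, Zhipu AI, SiliconFlow, and custom APIs (OpenAI-compatible interface)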

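In addition to the API key, you can set advanced LLM parameters for each model individually in the model configuration interface. These parameters are configured through a field named llmParams, which lets you specify, as key-value pairs, any parameter supported by the LLM SDK, giving you finer control over model behavior.

Examples of advanced LLM parameter configuration:

- OpenAI/compatible APIs: {"temperature": 0.7, "max_tokens": 4096, "timeout": 60000}

- Gemini: {"temperature": 0.8, "maxOutputTokens": 2048, "topP": 0.95}

- DeepSeek: {"temperature": 0.5, "top_p": 0.9, "frequency_penalty": 0.1}

For a more detailed explanation of llmParams and configuration instructions, see the LLM Parameter Configuration Guide.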
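
This README does not spell out exactly how llmParams are applied. As a rough mental model, the sketch below treats them as key-value pairs merged into an OpenAI-compatible chat completions request body. Keeping model and messages fixed, and treating timeout (in milliseconds, as in the example) as an HTTP-client option rather than a body field, are assumptions of this sketch, not the project's documented behavior.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical split: keys treated as HTTP-client options rather than request-body fields.
CLIENT_OPTION_KEYS = {"timeout"}


def build_chat_body(model: str, messages: List[Dict[str, str]],
                    llm_params: Dict[str, Any]) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Merge llmParams into an OpenAI-compatible /chat/completions body.

    Returns (body, client_options). Core fields (model, messages) are set last,
    so llmParams cannot override them in this sketch.
    """
    body = {k: v for k, v in llm_params.items() if k not in CLIENT_OPTION_KEYS}
    client_options = {k: v for k, v in llm_params.items() if k in CLIENT_OPTION_KEYS}
    body.update({"model": model, "messages": messages})
    return body, client_options


if __name__ == "__main__":
    params = {"temperature": 0.7, "max_tokens": 4096, "timeout": 60000}  # OpenAI example above
    body, opts = build_chat_body("your-model-name", [{"role": "user", "content": "Hello"}], params)
    print(body)
    print(opts)
```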

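For Docker deployments, configure environment variables with -e flags:

```bash
# Only set the keys for the providers you actually use.
# The last three -e flags configure one custom model named "ollama";
# any number of custom models can be configured this way.
docker run -d -p 8081:80 \
  -e VITE_OPENAI_API_KEY=your_key \
  -e VITE_GEMINI_API_KEY=your_key \
  -e VITE_DEEPSEEK_API_KEY=your_key \
  -e VITE_ZHIPU_API_KEY=your_key \
  -e VITE_SILICONFLOW_API_KEY=your_key \
  -e VITE_CUSTOM_API_KEY_ollama=dummy_key \
  -e VITE_CUSTOM_API_BASE_URL_ollama=http://localhost:11434/v1 \
  -e VITE_CUSTOM_API_MODEL_ollama=qwen2.5:7b \
  --name prompt-optimizer \
  linshen/prompt-optimizer
```

📖 Detailed configuration guide: see the Multiple Custom Models Configuration doc for the full configuration method and advanced usage.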
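
The custom-model variables follow a VITE_CUSTOM_API_<FIELD>_<suffix> pattern, where the suffix (ollama above) ties the key, base URL, and model together. The sketch below groups such variables by suffix and flags incomplete groups, which can help catch typos before starting a container. Treating all three fields as required is an assumption, so check the custom-model configuration doc.

```python
import os
from typing import Dict, List, Mapping

# Naming pattern from the example above: VITE_CUSTOM_API_<FIELD>_<suffix>
FIELD_PREFIXES = {
    "VITE_CUSTOM_API_KEY_": "api_key",
    "VITE_CUSTOM_API_BASE_URL_": "base_url",
    "VITE_CUSTOM_API_MODEL_": "model",
}


def collect_custom_models(env: Mapping[str, str]) -> Dict[str, Dict[str, str]]:
    """Group custom-model variables by their suffix (e.g. "ollama")."""
    models: Dict[str, Dict[str, str]] = {}
    for name, value in env.items():
        for prefix, field in FIELD_PREFIXES.items():
            if name.startswith(prefix) and len(name) > len(prefix):
                models.setdefault(name[len(prefix):], {})[field] = value
    return models


def incomplete_models(models: Dict[str, Dict[str, str]]) -> List[str]:
    """Suffixes missing any of the three fields (assumed here to all be required)."""
    required = set(FIELD_PREFIXES.values())
    return sorted(suffix for suffix, cfg in models.items() if not required.issubset(cfg))


if __name__ == "__main__":
    found = collect_custom_models(os.environ)
    print(found)
    print("incomplete:", incomplete_models(found))
```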

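See the Development Docs for detailed documentation.

Local development commands:

```bash
# 1. Clone the project
git clone https://github.com/linshenkx/prompt-optimizer.git
cd prompt-optimizer

# 2. Install dependencies
pnpm install

# 3. Start the development server (choose one)
pnpm dev        # Main dev command: builds core/ui and runs the web app
pnpm dev:web    # Runs only the web app
pnpm dev:fresh  # Fully resets and restarts the development environment
```

Roadmap:

- [x] Core feature development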

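- [x] Web app release

- [x] Chrome extension release

- [x] Internationalization

- [x] System prompt and user prompt optimization

- [x] Desktop app release

- [x] MCP service release

- [x] Advanced mode: variable management, context testing, tool calling

- [ ] Image input and multimodal processing

- [ ] Workspace/project management

- [ ] Prompt favorites and template management

See the Project Status doc for the detailed project status.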

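- Documentation Index - index of all documents

- Technical Development Guide - tech stack and development conventions

- LLM Parameter Configuration Guide - detailed guide to advanced LLM parameters

- Project Structure - detailed description of the project structure

- Project Status - current progress and plans

- Product Requirements - product requirements document

- Vercel Deployment Guide - detailed Vercel deployment instructions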

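Frequently asked questions:

A: Most connection failures are caused by cross-origin (CORS) issues. Because this project is a purely front-end application, the browser, for security reasons, blocks direct access to API services on a different origin. If a model service does not configure its CORS policy correctly, direct requests from the browser will fail.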
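
To make the failure mode concrete: the browser only exposes a cross-origin response to the page when the response carries suitable CORS headers. The function below is a simplified model of that check, based on the Fetch standard's rules for Access-Control-Allow-Origin and Access-Control-Allow-Credentials. It is not code from this project, and it does not model preflight checks on allowed methods or headers.

```python
from typing import Mapping


def cors_allows(page_origin: str, response_headers: Mapping[str, str], with_credentials: bool = False) -> bool:
    """Simplified check of whether a browser would expose a cross-origin response to the page.

    Only looks at Access-Control-Allow-Origin / -Allow-Credentials; it ignores
    preflight details such as allowed methods and request headers.
    """
    headers = {k.lower(): v.strip() for k, v in response_headers.items()}  # names are case-insensitive
    allow_origin = headers.get("access-control-allow-origin")
    if allow_origin is None:
        return False  # no CORS header at all: the browser blocks access
    if with_credentials:
        # Credentialed requests need an exact origin (no "*") plus Allow-Credentials: true.
        return allow_origin == page_origin and headers.get("access-control-allow-credentials") == "true"
    return allow_origin in ("*", page_origin)


if __name__ == "__main__":
    origin = "https://prompt.always200.com"
    print(cors_allows(origin, {"Access-Control-Allow-Origin": "*"}))
    print(cors_allows(origin, {}))
```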

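A: Ollama fully supports the OpenAI-standard interface; you only need to configure the correct CORS policy:

- Set the environment variable OLLAMA_ORIGINS=* to allow requests from any origin

- If problems persist, set OLLAMA_HOST=0.0.0.0:11434 so that Ollama listens on all IP addresses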
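
To confirm that OLLAMA_ORIGINS is taking effect, you can imitate the browser's CORS preflight from a script. The sketch below only builds an OPTIONS request carrying the Origin and Access-Control-Request-* headers a browser would send; the page origin http://localhost:8081 (a local Docker deployment) is an example value.

```python
import urllib.request

# Ollama's OpenAI-compatible endpoint, matching the base URL used in the custom-model example.
OLLAMA_CHAT_URL = "http://localhost:11434/v1/chat/completions"


def build_preflight(url: str, page_origin: str, method: str = "POST",
                    request_headers: str = "authorization,content-type") -> urllib.request.Request:
    """Build an OPTIONS request that imitates the browser's CORS preflight."""
    return urllib.request.Request(
        url,
        method="OPTIONS",
        headers={
            "Origin": page_origin,
            "Access-Control-Request-Method": method,
            "Access-Control-Request-Headers": request_headers,
        },
    )


if __name__ == "__main__":
    # Origin of the page you load Prompt Optimizer from (here: a local Docker deployment).
    req = build_preflight(OLLAMA_CHAT_URL, "http://localhost:8081")
    print(req.get_method(), req.full_url, req.header_items())
    # With Ollama running, send it and inspect the CORS headers in the reply:
    #   with urllib.request.urlopen(req, timeout=5) as resp:
    #       print(resp.headers.get("Access-Control-Allow-Origin"))
```

If the reply has no Access-Control-Allow-Origin header, or the request is rejected, the CORS setting is probably not being applied to the running Ollama process.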

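A: These platforms usually have strict cross-origin restrictions. The following solutions are recommended:

- Use the Vercel proxy (convenient option)

  - Use the online version: prompt.always200.com

  - Or deploy it to Vercel yourself

  - Check the "Use Vercel Proxy" option in the model settings

  - Request flow: browser → Vercel → model provider

  - For detailed steps, see the Vercel Deployment Guide

- Use a self-hosted API relay service (reliable option)

  - Deploy an open-source API aggregation/proxy tool such as OneAPI

  - Configure it as a custom API endpoint in the settings

  - Request flow: browser → relay service → model provider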

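A: Using the Vercel proxy may trigger the risk-control mechanisms of some model providers. Some providers may classify requests coming from Vercel as proxy traffic and restrict or refuse service. If you run into this, use a self-hosted relay service instead.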

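A: This is caused by the browser's Mixed Content security policy. For security reasons, the browser blocks a secure HTTPS page (such as the online version) from sending requests to an insecure HTTP address (such as your local Ollama service).

Solution: To get around this restriction, the app and the API need to use the same protocol (for example, both HTTP). The following approaches are recommended:

- Use the desktop app: the desktop app has no browser restrictions and is the most stable and reliable way to connect to local models.

- Deploy with Docker: a Docker deployment is served over HTTP as well.

- Use the Chrome extension: in some cases the extension can also bypass some of these security restrictions.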
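
The rule behind this answer fits in a one-line check: an HTTPS page calling a plain-HTTP endpoint is mixed content. The function below encodes only that simplified rule and deliberately ignores browser-specific exceptions; it also shows why loading the app over HTTP, as a Docker deployment does, avoids the problem.

```python
from urllib.parse import urlsplit


def is_mixed_content(page_url: str, api_url: str) -> bool:
    """Simplified: True when an HTTPS page would call a plain http:// or ws:// endpoint.

    Deliberately ignores browser-specific exceptions and upgrade behavior.
    """
    page_scheme = urlsplit(page_url).scheme  # urlsplit lowercases the scheme
    api_scheme = urlsplit(api_url).scheme
    return page_scheme == "https" and api_scheme in ("http", "ws")


if __name__ == "__main__":
    ollama = "http://localhost:11434/v1"
    for page in ("https://prompt.always200.com", "http://localhost:8081"):
        verdict = "mixed content" if is_mixed_content(page, ollama) else "not mixed content"
        print(page, "->", ollama, verdict)
```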

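Contribution guide:

- Fork this repository

- Create a feature branch (git checkout -b feature/AmazingFeature)

- Commit your changes (git commit -m 'Add some feature')

- Push to the branch (git push origin feature/AmazingFeature)

- Open a Pull Request

Tip: When developing with Cursor, it is recommended that before committing you:

- Run a code review using the "code_review" rule

- Check the following according to the review report format:

  - Overall consistency of the changes

  - Code quality and implementation approach

  - Test coverage

  - Completeness of the documentation

- Make improvements based on the review results, then commit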

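Thanks to all the developers who have contributed to this project!

This project is open source under the MIT License.

If this project helps you, please consider giving it a Star ⭐️

- Submit an Issue

- Open a Pull Request

- Join the discussion group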


Alternative AI tools for prompt-optimizer

Similar Open Source Tools

prompt-optimizer

Prompt Optimizer is a powerful AI prompt optimization tool that helps you write better AI prompts, improving AI output quality. It supports both web application and Chrome extension usage. The tool features intelligent optimization for prompt words, real-time testing to compare before and after optimization, integration with multiple mainstream AI models, client-side processing for security, encrypted local storage for data privacy, responsive design for user experience, and more.

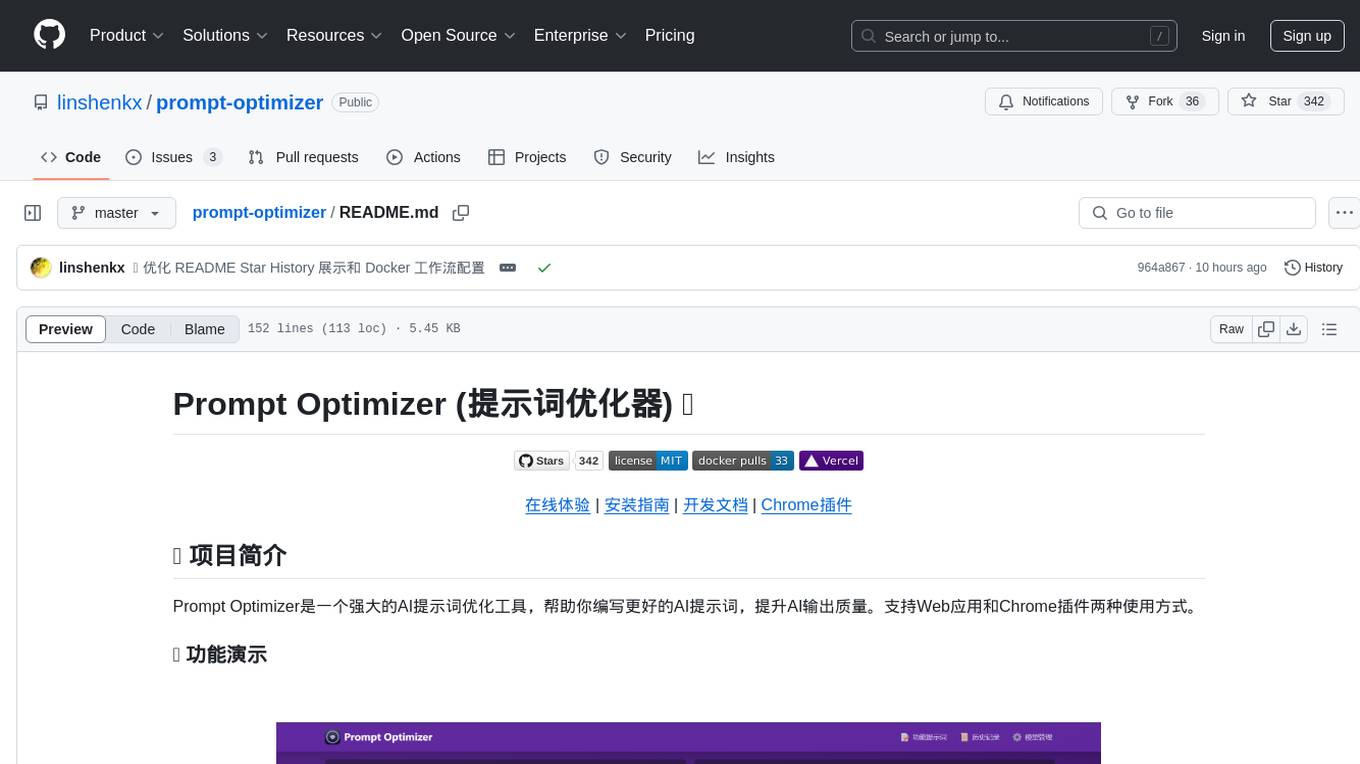

nndeploy

nndeploy is a tool that allows you to quickly build your visual AI workflow without the need for frontend technology. It provides ready-to-use algorithm nodes for non-AI programmers, including large language models, Stable Diffusion, object detection, image segmentation, etc. The workflow can be exported as a JSON configuration file, supporting Python/C++ API for direct loading and running, deployment on cloud servers, desktops, mobile devices, edge devices, and more. The framework includes mainstream high-performance inference engines and deep optimization strategies to help you transform your workflow into enterprise-level production applications.

simple-ai

Simple AI is a lightweight Python library for implementing basic artificial intelligence algorithms. It provides easy-to-use functions and classes for tasks such as machine learning, natural language processing, and computer vision. With Simple AI, users can quickly prototype and deploy AI solutions without the complexity of larger frameworks.

jadx-mcp-server

JADX-MCP-SERVER is a standalone Python server that interacts with JADX-AI-MCP Plugin to analyze Android APKs using LLMs like Claude. It enables live communication with decompiled Android app context, uncovering vulnerabilities, parsing manifests, and facilitating reverse engineering effortlessly. The tool combines JADX-AI-MCP and JADX MCP SERVER to provide real-time reverse engineering support with LLMs, offering features like quick analysis, vulnerability detection, AI code modification, static analysis, and reverse engineering helpers. It supports various MCP tools for fetching class information, text, methods, fields, smali code, AndroidManifest.xml content, strings.xml file, resource files, and more. Tested on Claude Desktop, it aims to support other LLMs in the future, enhancing Android reverse engineering and APK modification tools connectivity for easier reverse engineering purely from vibes.

omnichain

OmniChain is a tool for building efficient self-updating visual workflows using AI language models, enabling users to automate tasks, create chatbots, agents, and integrate with existing frameworks. It allows users to create custom workflows guided by logic processes, store and recall information, and make decisions based on that information. The tool enables users to create tireless robot employees that operate 24/7, access the underlying operating system, generate and run NodeJS code snippets, and create custom agents and logic chains. OmniChain is self-hosted, open-source, and available for commercial use under the MIT license, with no coding skills required.

AI_Spectrum

AI_Spectrum is a versatile machine learning library that provides a wide range of tools and algorithms for building and deploying AI models. It offers a user-friendly interface for data preprocessing, model training, and evaluation. With AI_Spectrum, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is designed to be flexible and scalable, making it suitable for both beginners and experienced data scientists.

mindsdb

MindsDB is a platform for customizing AI from enterprise data. You can create, serve, and fine-tune models in real-time from your database, vector store, and application data. MindsDB "enhances" SQL syntax with AI capabilities to make it accessible for developers worldwide. With MindsDB’s nearly 200 integrations, any developer can create AI customized for their purpose, faster and more securely. Their AI systems will constantly improve themselves — using companies’ own data, in real-time.

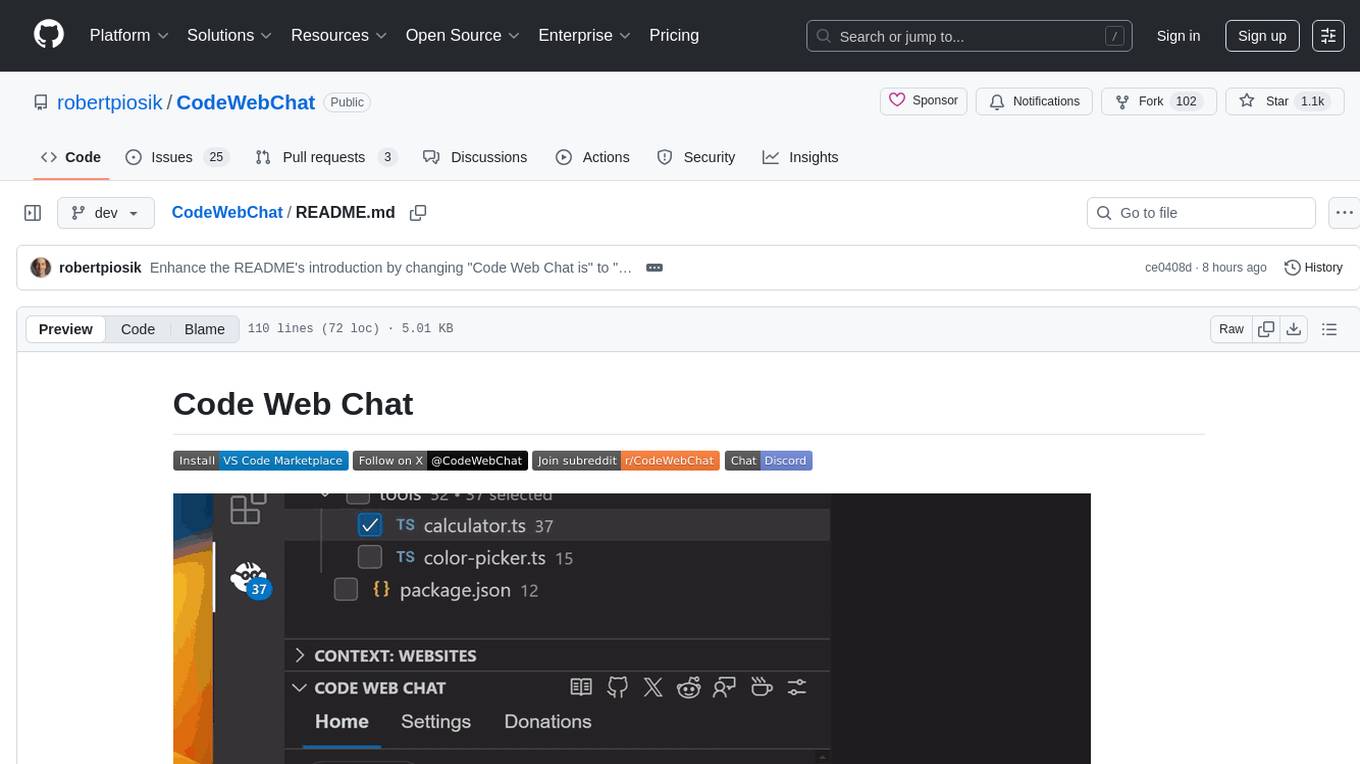

CodeWebChat

Code Web Chat is a versatile, free, and open-source AI pair programming tool with a unique web-based workflow. Users can select files, type instructions, and initialize various chatbots like ChatGPT, Gemini, Claude, and more hands-free. The tool helps users save money with free tiers and subscription-based billing and save time with multi-file edits from a single prompt. It supports chatbot initialization through the Connector browser extension and offers API tools for code completions, editing context, intelligent updates, and commit messages. Users can handle AI responses, code completions, and version control through various commands. The tool is privacy-focused, operates locally, and supports any OpenAI-API compatible provider for its utilities.

DashAI

DashAI is a powerful tool for building interactive web applications with Python. It allows users to create data visualization dashboards and deploy machine learning models with ease. The tool provides a simple and intuitive way to design and customize web apps without the need for extensive front-end development knowledge. With DashAI, users can easily showcase their data analysis results and predictive models in a user-friendly and interactive manner, making it ideal for data scientists, developers, and business professionals looking to share insights and predictions with stakeholders.

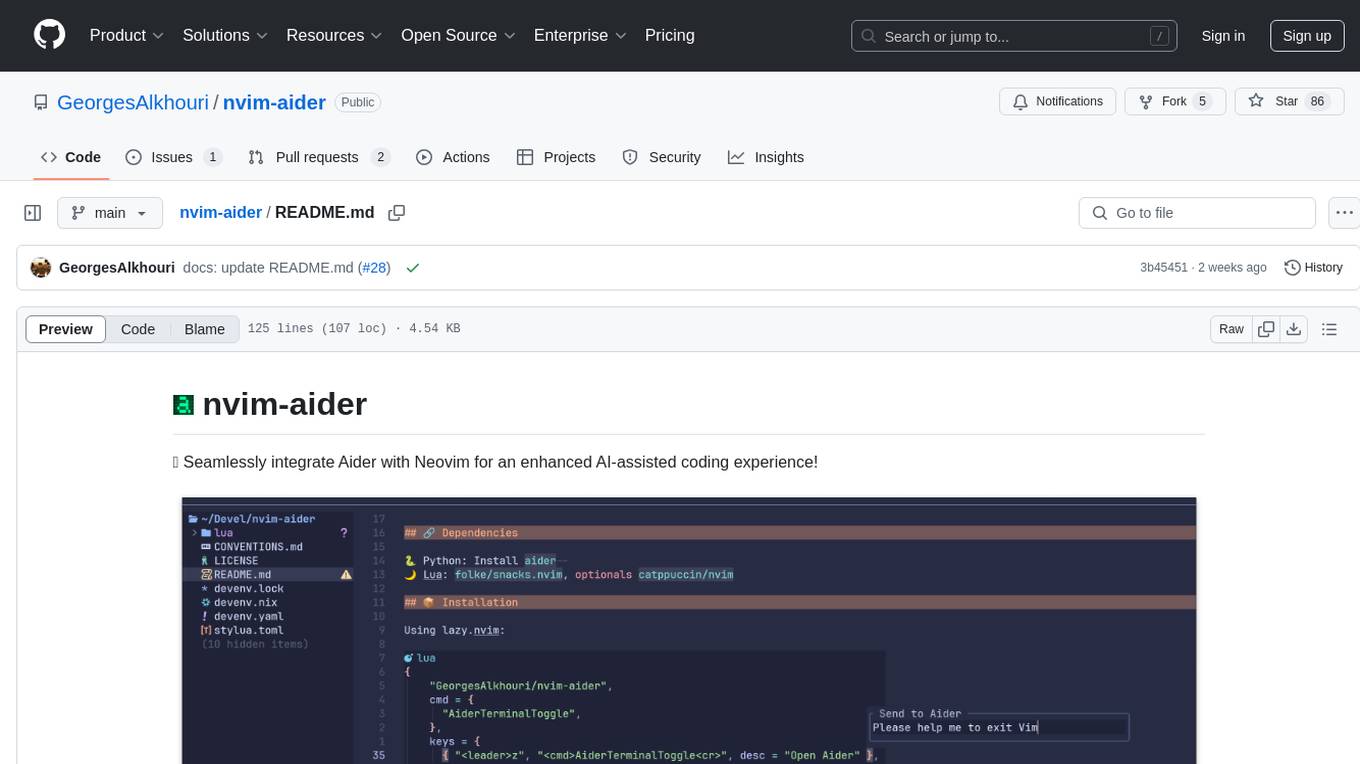

nvim-aider

Nvim-aider is a plugin for Neovim that provides additional functionality and key mappings to enhance the user's editing experience. It offers features such as code navigation, quick access to commonly used commands, and improved text manipulation tools. With Nvim-aider, users can streamline their workflow and increase productivity while working with Neovim.

open-ai

Open AI is a powerful tool for artificial intelligence research and development. It provides a wide range of machine learning models and algorithms, making it easier for developers to create innovative AI applications. With Open AI, users can explore cutting-edge technologies such as natural language processing, computer vision, and reinforcement learning. The platform offers a user-friendly interface and comprehensive documentation to support users in building and deploying AI solutions. Whether you are a beginner or an experienced AI practitioner, Open AI offers the tools and resources you need to accelerate your AI projects and stay ahead in the rapidly evolving field of artificial intelligence.

langchain

LangChain is a framework for building LLM-powered applications that simplifies AI application development by chaining together interoperable components and third-party integrations. It helps developers connect LLMs to diverse data sources, swap models easily, and future-proof decisions as technology evolves. LangChain's ecosystem includes tools like LangSmith for agent evals, LangGraph for complex task handling, and LangGraph Platform for deployment and scaling. Additional resources include tutorials, how-to guides, conceptual guides, a forum, API reference, and chat support.

GEN-AI

GEN-AI is a versatile Python library for implementing various artificial intelligence algorithms and models. It provides a wide range of tools and functionalities to support machine learning, deep learning, natural language processing, computer vision, and reinforcement learning tasks. With GEN-AI, users can easily build, train, and deploy AI models for diverse applications such as image recognition, text classification, sentiment analysis, object detection, and game playing. The library is designed to be user-friendly, efficient, and scalable, making it suitable for both beginners and experienced AI practitioners.

aigne-hub

AIGNE Hub is a unified AI gateway that manages connections to multiple LLM and AIGC providers, eliminating the complexity of handling API keys, usage tracking, and billing across different AI services. It provides self-hosting capabilities, multi-provider management, unified security, usage analytics, flexible billing, and seamless integration with the AIGNE framework. The tool supports various AI providers and deployment scenarios, catering to both enterprise self-hosting and service provider modes. Users can easily deploy and configure AI providers, enable billing, and utilize core capabilities such as chat completions, image generation, embeddings, and RESTful APIs. AIGNE Hub ensures secure access, encrypted API key management, user permissions, and audit logging. Built with modern technologies like AIGNE Framework, Node.js, TypeScript, React, SQLite, and Blocklet for cloud-native deployment.

jadx-ai-mcp

JADX-AI-MCP is a plugin for the JADX decompiler that integrates with Model Context Protocol (MCP) to provide live reverse engineering support with LLMs like Claude. It allows for quick analysis, vulnerability detection, and AI code modification, all in real time. The tool combines JADX-AI-MCP and JADX MCP SERVER to analyze Android APKs effortlessly. It offers various prompts for code understanding, vulnerability detection, reverse engineering helpers, static analysis, AI code modification, and documentation. The tool is part of the Zin MCP Suite and aims to connect all android reverse engineering and APK modification tools with a single MCP server for easy reverse engineering of APK files.

dexto

Dexto is a lightweight runtime for creating and running AI agents that turn natural language into real-world actions. It serves as the missing intelligence layer for building AI applications, standalone chatbots, or as the reasoning engine inside larger products. Dexto features a powerful CLI and Web UI for running AI agents, supports multiple interfaces, allows hot-swapping of LLMs from various providers, connects to remote tool servers via the Model Context Protocol, is config-driven with version-controlled YAML, offers production-ready core features, extensibility for custom services, and enables multi-agent collaboration via MCP and A2A.

For similar tasks

ai-cli-lib

The ai-cli-lib is a library designed to enhance interactive command-line editing programs by integrating with GPT large language model servers. It allows users to obtain AI help from servers like Anthropic's or OpenAI's, or a llama.cpp server. The library acts as a command line copilot, providing natural language prompts and responses to enhance user experience and productivity. It supports various platforms such as Debian GNU/Linux, macOS, and Cygwin, and requires specific packages for installation and operation. Users can configure the library to activate during shell startup and interact with command-line programs like bash, mysql, psql, gdb, sqlite3, and bc. Additionally, the library provides options for configuring API keys, setting up llama.cpp servers, and ensuring data privacy by managing context settings.

aicommit2

AICommit2 is a Reactive CLI tool that streamlines interactions with various AI providers such as OpenAI, Anthropic Claude, Gemini, Mistral AI, Cohere, and unofficial providers like Huggingface and Clova X. Users can query multiple AI providers simultaneously to generate git commit messages without waiting for all AI responses. The tool runs 'git diff' to grab code changes, sends them to the configured AI, and returns the AI-generated commit message. Users can set API keys or cookies for different providers and configure options such as locale, the number of messages to generate, commit type, proxy, timeout, max-length, and more. AICommit2 can be used both locally with Ollama and remotely with supported providers, offering flexibility and efficiency in generating commit messages.

TrustEval-toolkit

TrustEval-toolkit is a dynamic and comprehensive framework for evaluating the trustworthiness of Generative Foundation Models (GenFMs) across dimensions such as safety, fairness, robustness, privacy, and more. It offers features like dynamic dataset generation, multi-model compatibility, customizable metrics, metadata-driven pipelines, comprehensive evaluation dimensions, optimized inference, and detailed reports.


youtube_summarizer

YouTube AI Summarizer is a modern Next.js-based tool for AI-powered YouTube video summarization. It allows users to generate concise summaries of YouTube videos using various AI models, with support for multiple languages and summary styles. The application features flexible API key requirements, multilingual support, flexible summary modes, a smart history system, modern UI/UX design, and more. Users can easily input a YouTube URL, select language, summary type, and AI model, and generate summaries with real-time progress tracking. The tool offers a clean, well-structured summary view, history dashboard, and detailed history view for past summaries. It also provides configuration options for API keys and database setup, along with technical highlights, performance improvements, and a modern tech stack.

svelte-bench

SvelteBench is an LLM benchmark tool for evaluating Svelte components generated by large language models. It supports multiple LLM providers such as OpenAI, Anthropic, Google, and OpenRouter. Users can run predefined test suites to verify the functionality of the generated components. The tool allows configuration of API keys for different providers and offers debug mode for faster development. Users can provide a context file to improve component generation. Benchmark results are saved in JSON format for analysis and visualization.

learn-applied-generative-ai-fundamentals

This repository is part of the Certified Cloud Native Applied Generative AI Engineer program, focusing on Applied Generative AI Fundamentals. It covers prompt engineering, developing custom GPTs, and Multi AI Agent Systems. The course helps in building a strong understanding of generative AI, applying Large Language Models (LLMs) and diffusion models practically. It introduces principles of prompt engineering to work efficiently with AI, creating custom AI models and GPTs using OpenAI, Azure, and Google technologies. It also utilizes open source libraries like LangChain, CrewAI, and LangGraph to automate tasks and business processes.

log10

Log10 is a one-line Python integration to manage your LLM data. It helps you log both closed and open-source LLM calls, compare and identify the best models and prompts, store feedback for fine-tuning, collect performance metrics such as latency and usage, and perform analytics and monitor compliance for LLM powered applications. Log10 offers various integration methods, including a python LLM library wrapper, the Log10 LLM abstraction, and callbacks, to facilitate its use in both existing production environments and new projects. Pick the one that works best for you. Log10 also provides a copilot that can help you with suggestions on how to optimize your prompt, and a feedback feature that allows you to add feedback to your completions. Additionally, Log10 provides prompt provenance, session tracking and call stack functionality to help debug prompt chains. With Log10, you can use your data and feedback from users to fine-tune custom models with RLHF, and build and deploy more reliable, accurate and efficient self-hosted models. Log10 also supports collaboration, allowing you to create flexible groups to share and collaborate over all of the above features.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering everything from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.