repo-to-text

Convert a repository structure and its contents into a single text file, including the tree output and file contents in markdown code blocks. It may be useful to chat with LLM about your code.

Stars: 122

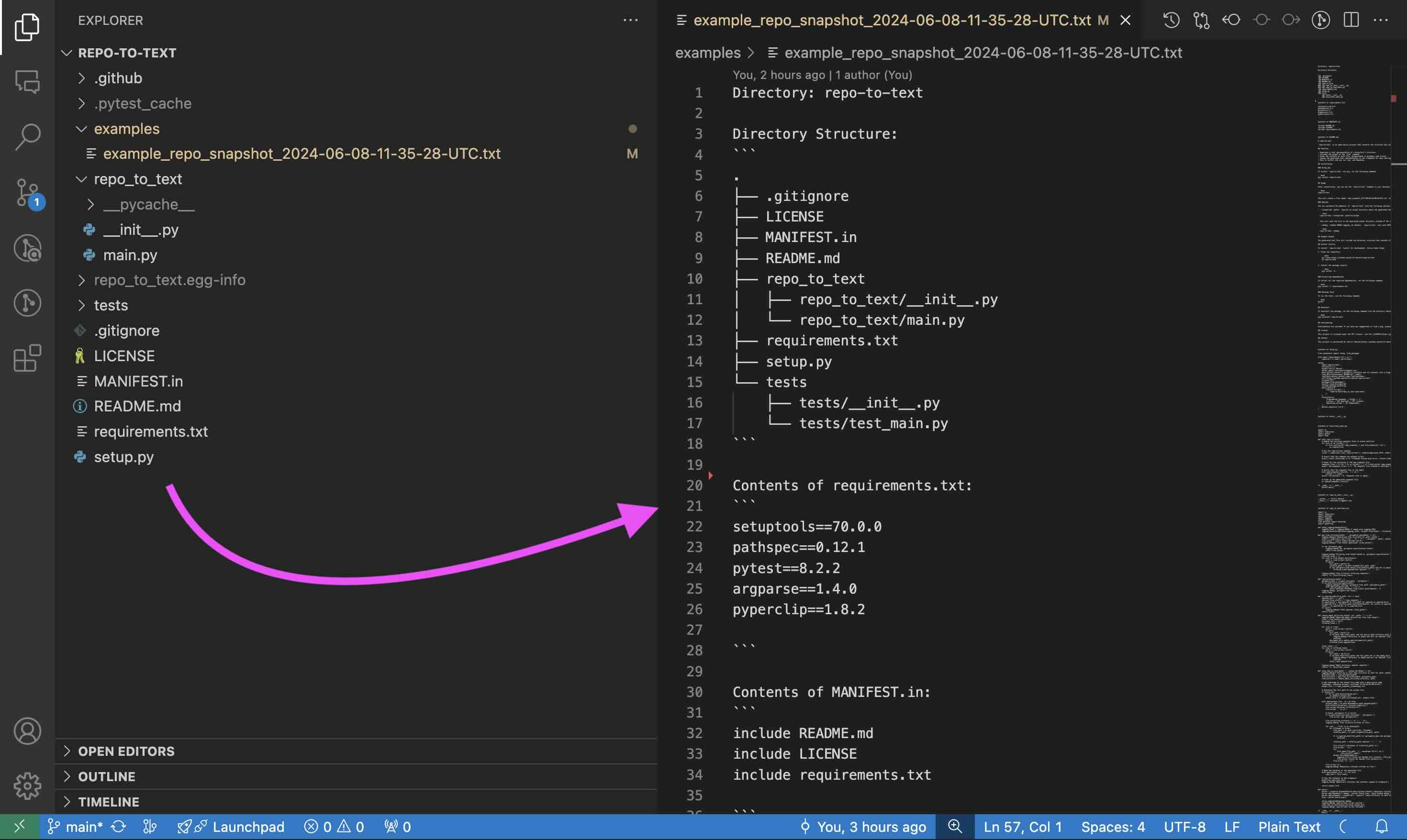

The `repo-to-text` tool converts a directory's structure and contents into a single text file. It generates a formatted text representation that includes the directory tree and file contents, making it easy to share code with LLMs for development and debugging. Users can customize the tool's behavior with various options and settings, including output directory specification, debug logging, and file inclusion/exclusion rules. The tool supports Docker usage for containerized environments and provides detailed instructions for installation, usage, settings configuration, and contribution guidelines. It is a versatile tool for converting repository contents into text format for easy sharing and documentation.

README:

repo-to-text converts a directory's structure and contents into a single text file. Run it from the terminal to generate a formatted text representation that includes the directory tree and file contents. This makes it easy to share code with LLMs for development and debugging.

-

pip install repo-to-text— install the package -

cd <your-repo-dir>— navigate to the repository directory -

repo-to-text— run the command, result will be saved in the current directory

The generated text file will include the directory structure and contents of each file. For a full example, see the example output for this repository.

To install repo-to-text via pip, run the following command:

pip install repo-to-textTo upgrade to the latest version, use the following command:

pip install --upgrade repo-to-textAfter installation, you can use the repo-to-text command in your terminal. Navigate to the directory you want to convert and run:

repo-to-textor

flattenThis will create a file named repo-to-text_YYYY-MM-DD-HH-MM-SS-UTC.txt in the current directory with the text representation of the repository. The contents of this file will also be copied to your clipboard for easy sharing.

You can customize the behavior of repo-to-text with the following options:

-

--output-dir <path>: Specify an output directory where the generated text file will be saved. For example:repo-to-text --output-dir /path/to/output

This will save the file in the specified output directory instead of the current directory.

-

--create-settingsor--init: Create a default.repo-to-text-settings.yamlfile with predefined settings. This is useful if you want to start with a template settings file and customize it according to your needs. To create the default settings file, run the following command in your terminal:repo-to-text --create-settings

or

repo-to-text --init

This will create a file named

.repo-to-text-settings.yamlin the current directory. If the file already exists, an error will be raised to prevent overwriting. -

--debug: Enable DEBUG logging. By default,repo-to-textruns with INFO logging level. To enable DEBUG logging, use the--debugflag:repo-to-text --debug

or to save the debug log to a file:

repo-to-text --debug > debug_log.txt 2>&1

-

input_dir: Specify the directory to process. If not provided, the current directory (.) will be used. For example:repo-to-text /path/to/input_dir

-

--stdout: Output the generated text to stdout instead of a file. This is useful for piping the output to another command or saving it to a file using shell redirection. For example:repo-to-text --stdout > myfile.txtThis will write the output directly to

myfile.txtinstead of creating a timestamped file.

-

Build the container:

docker compose build

-

Start a shell session:

docker compose run --rm repo-to-text

Once in the shell, you can run repo-to-text:

-

Process current directory:

repo-to-text

-

Process specific directory:

repo-to-text /home/user/myproject

-

Use with options:

repo-to-text --output-dir /home/user/output

The container mounts your home directory at /home/user, allowing access to all your projects.

repo-to-text also supports configuration via a .repo-to-text-settings.yaml file. By default, the tool works without this file, but you can use it to customize what gets included in the final text file.

To create a settings file, add a file named .repo-to-text-settings.yaml at the root of your project with the following content:

# Syntax: gitignore rules

# Ignore files and directories for all sections from gitignore file

# Default: True

gitignore-import-and-ignore: True

# Ignore files and directories for tree

# and "Contents of ..." sections

ignore-tree-and-content:

- ".repo-to-text-settings.yaml"

- "examples/"

- "MANIFEST.in"

- "setup.py"

# Ignore files and directories for "Contents of ..." section

ignore-content:

- "README.md"

- "LICENSE"

- "tests/"You can copy this file from the existing example in the project and adjust it to your needs. This file allows you to specify rules for what should be ignored when creating the text representation of the repository.

-

gitignore-import-and-ignore: Ignore files and directories specified in

.gitignorefor all sections. - ignore-tree-and-content: Ignore files and directories for the tree and "Contents of ..." sections.

- ignore-content: Ignore files and directories only for the "Contents of ..." section.

Using these settings, you can control which files and directories are included or excluded from the final text file.

Using Wildcard Patterns

-

*.ext: Matches any file ending with .ext in any directory. -

dir/*.ext: Matches files ending with .ext in the specified directory dir/. -

**/*.ext: Matches files ending with .ext in any subdirectory (recursive).

If you want to include certain files that would otherwise be ignored, use the ! pattern:

ignore-tree-and-content:

- "*.txt"

- "!README.txt"To ignore the generated text files, add the following lines to your .gitignore file:

repo-to-text_*.txtTo install repo-to-text locally for development, follow these steps:

-

Clone the repository:

git clone https://github.com/kirill-markin/repo-to-text cd repo-to-text -

Install the package with development dependencies:

pip install -e ".[dev]"

- Python >= 3.6

- Core dependencies:

- setuptools >= 70.0.0

- pathspec >= 0.12.1

- argparse >= 1.4.0

- PyYAML >= 6.0.1

For development, additional packages are required:

- pytest >= 8.2.2

- black

- mypy

- isort

- build

- twine

To run the tests, use the following command:

pytestTo uninstall the package, run the following command from the directory where the repository is located:

pip uninstall repo-to-textContributions are welcome! If you have any suggestions or find a bug, please open an issue or submit a pull request.

This project is licensed under the MIT License - see the LICENSE file for details.

This project is maintained by Kirill Markin. For any inquiries or feedback, please contact [email protected].

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for repo-to-text

Similar Open Source Tools

repo-to-text

The `repo-to-text` tool converts a directory's structure and contents into a single text file. It generates a formatted text representation that includes the directory tree and file contents, making it easy to share code with LLMs for development and debugging. Users can customize the tool's behavior with various options and settings, including output directory specification, debug logging, and file inclusion/exclusion rules. The tool supports Docker usage for containerized environments and provides detailed instructions for installation, usage, settings configuration, and contribution guidelines. It is a versatile tool for converting repository contents into text format for easy sharing and documentation.

ai-digest

ai-digest is a CLI tool designed to aggregate your codebase into a single Markdown file for use with Claude Projects or custom ChatGPTs. It aggregates all files in the specified directory and subdirectories, ignores common build artifacts and configuration files, and provides options for whitespace removal and custom ignore patterns. The tool is useful for preparing codebases for AI analysis and assistance.

reader

Reader is a tool that converts any URL to an LLM-friendly input with a simple prefix `https://r.jina.ai/`. It improves the output for your agent and RAG systems at no cost. Reader supports image reading, captioning all images at the specified URL and adding `Image [idx]: [caption]` as an alt tag. This enables downstream LLMs to interact with the images in reasoning, summarizing, etc. Reader offers a streaming mode, useful when the standard mode provides an incomplete result. In streaming mode, Reader waits a bit longer until the page is fully rendered, providing more complete information. Reader also supports a JSON mode, which contains three fields: `url`, `title`, and `content`. Reader is backed by Jina AI and licensed under Apache-2.0.

web-codegen-scorer

Web Codegen Scorer is a tool designed to evaluate the quality of web code generated by Large Language Models (LLMs). It allows users to make evidence-based decisions related to AI-generated code by iterating on system prompts, comparing code quality from different models, and monitoring code quality over time. The tool focuses specifically on web code and offers various features such as configuring evaluations, specifying system instructions, using built-in checks for code quality, automatically repairing issues, and viewing results with an intuitive report viewer UI.

desktop

ComfyUI Desktop is a packaged desktop application that allows users to easily use ComfyUI with bundled features like ComfyUI source code, ComfyUI-Manager, and uv. It automatically installs necessary Python dependencies and updates with stable releases. The app comes with Electron, Chromium binaries, and node modules. Users can store ComfyUI files in a specified location and manage model paths. The tool requires Python 3.12+ and Visual Studio with Desktop C++ workload for Windows. It uses nvm to manage node versions and yarn as the package manager. Users can install ComfyUI and dependencies using comfy-cli, download uv, and build/launch the code. Troubleshooting steps include rebuilding modules and installing missing libraries. The tool supports debugging in VSCode and provides utility scripts for cleanup. Crash reports can be sent to help debug issues, but no personal data is included.

aides-jeunes

The user interface (and the main server) of the simulator of aids and social benefits for young people. It is based on the free socio-fiscal simulator Openfisca.

mlx-lm

MLX LM is a Python package designed for generating text and fine-tuning large language models on Apple silicon using MLX. It offers integration with the Hugging Face Hub for easy access to thousands of LLMs, support for quantizing and uploading models to the Hub, low-rank and full model fine-tuning capabilities, and distributed inference and fine-tuning with `mx.distributed`. Users can interact with the package through command line options or the Python API, enabling tasks such as text generation, chatting with language models, model conversion, streaming generation, and sampling. MLX LM supports various Hugging Face models and provides tools for efficient scaling to long prompts and generations, including a rotating key-value cache and prompt caching. It requires macOS 15.0 or higher for optimal performance.

LLM-Engineers-Handbook

The LLM Engineer's Handbook is an official repository containing a comprehensive guide on creating an end-to-end LLM-based system using best practices. It covers data collection & generation, LLM training pipeline, a simple RAG system, production-ready AWS deployment, comprehensive monitoring, and testing and evaluation framework. The repository includes detailed instructions on setting up local and cloud dependencies, project structure, installation steps, infrastructure setup, pipelines for data processing, training, and inference, as well as QA, tests, and running the project end-to-end.

vectara-answer

Vectara Answer is a sample app for Vectara-powered Summarized Semantic Search (or question-answering) with advanced configuration options. For examples of what you can build with Vectara Answer, check out Ask News, LegalAid, or any of the other demo applications.

renumics-rag

Renumics RAG is a retrieval-augmented generation assistant demo that utilizes LangChain and Streamlit. It provides a tool for indexing documents and answering questions based on the indexed data. Users can explore and visualize RAG data, configure OpenAI and Hugging Face models, and interactively explore questions and document snippets. The tool supports GPU and CPU setups, offers a command-line interface for retrieving and answering questions, and includes a web application for easy access. It also allows users to customize retrieval settings, embeddings models, and database creation. Renumics RAG is designed to enhance the question-answering process by leveraging indexed documents and providing detailed answers with sources.

vector-inference

This repository provides an easy-to-use solution for running inference servers on Slurm-managed computing clusters using vLLM. All scripts in this repository run natively on the Vector Institute cluster environment. Users can deploy models as Slurm jobs, check server status and performance metrics, and shut down models. The repository also supports launching custom models with specific configurations. Additionally, users can send inference requests and set up an SSH tunnel to run inference from a local device.

minecraft-mcp-server

Minecraft MCP Server is a bot powered by large language models and Mineflayer API. It uses the Model Context Protocol (MCP) to enable models like Claude to control a Minecraft character. The bot allows users to interact with Minecraft through commands and chat messages, facilitating tasks such as movement, inventory management, block interaction, entity interaction, and more. Users can also upload images of buildings and ask the bot to build them. The tool is designed to work with Claude Desktop and requires specific configurations for Minecraft and MCP clients. Contributions to the project, including refactoring, testing, documentation, and new functionality, are welcome.

gpt-cli

gpt-cli is a command-line interface tool for interacting with various chat language models like ChatGPT, Claude, and others. It supports model customization, usage tracking, keyboard shortcuts, multi-line input, markdown support, predefined messages, and multiple assistants. Users can easily switch between different assistants, define custom assistants, and configure model parameters and API keys in a YAML file for easy customization and management.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

gemini-pro-bot

This Python Telegram bot utilizes Google's `gemini-pro` LLM API to generate creative text formats based on user input. It's designed to be an engaging and interactive way to explore the capabilities of large language models. Key features include generating various text formats like poems, code, scripts, and musical pieces. The bot supports real-time streaming of the generation process, allowing users to witness the text unfold. Additionally, it can respond to messages with Bard's creative output and handle image-based inputs for multimodal responses. User authentication is optional, and the bot can be easily integrated with Docker or installed via pipenv.

opencommit

OpenCommit is a tool that auto-generates meaningful commits using AI, allowing users to quickly create commit messages for their staged changes. It provides a CLI interface for easy usage and supports customization of commit descriptions, emojis, and AI models. Users can configure local and global settings, switch between different AI providers, and set up Git hooks for integration with IDE Source Control. Additionally, OpenCommit can be used as a GitHub Action to automatically improve commit messages on push events, ensuring all commits are meaningful and not generic. Payments for OpenAI API requests are handled by the user, with the tool storing API keys locally.

For similar tasks

hass-ollama-conversation

The Ollama Conversation integration adds a conversation agent powered by Ollama in Home Assistant. This agent can be used in automations to query information provided by Home Assistant about your house, including areas, devices, and their states. Users can install the integration via HACS and configure settings such as API timeout, model selection, context size, maximum tokens, and other parameters to fine-tune the responses generated by the AI language model. Contributions to the project are welcome, and discussions can be held on the Home Assistant Community platform.

rclip

rclip is a command-line photo search tool powered by the OpenAI's CLIP neural network. It allows users to search for images using text queries, similar image search, and combining multiple queries. The tool extracts features from photos to enable searching and indexing, with options for previewing results in supported terminals or custom viewers. Users can install rclip on Linux, macOS, and Windows using different installation methods. The repository follows the Conventional Commits standard and welcomes contributions from the community.

honcho

Honcho is a platform for creating personalized AI agents and LLM powered applications for end users. The repository is a monorepo containing the server/API for managing database interactions and storing application state, along with a Python SDK. It utilizes FastAPI for user context management and Poetry for dependency management. The API can be run using Docker or manually by setting environment variables. The client SDK can be installed using pip or Poetry. The project is open source and welcomes contributions, following a fork and PR workflow. Honcho is licensed under the AGPL-3.0 License.

core

OpenSumi is a framework designed to help users quickly build AI Native IDE products. It provides a set of tools and templates for creating Cloud IDEs, Desktop IDEs based on Electron, CodeBlitz web IDE Framework, Lite Web IDE on the Browser, and Mini-App liked IDE. The framework also offers documentation for users to refer to and a detailed guide on contributing to the project. OpenSumi encourages contributions from the community and provides a platform for users to report bugs, contribute code, or improve documentation. The project is licensed under the MIT license and contains third-party code under other open source licenses.

yolo-ios-app

The Ultralytics YOLO iOS App GitHub repository offers an advanced object detection tool leveraging YOLOv8 models for iOS devices. Users can transform their devices into intelligent detection tools to explore the world in a new and exciting way. The app provides real-time detection capabilities with multiple AI models to choose from, ranging from 'nano' to 'x-large'. Contributors are welcome to participate in this open-source project, and licensing options include AGPL-3.0 for open-source use and an Enterprise License for commercial integration. Users can easily set up the app by following the provided steps, including cloning the repository, adding YOLOv8 models, and running the app on their iOS devices.

PyAirbyte

PyAirbyte brings the power of Airbyte to every Python developer by providing a set of utilities to use Airbyte connectors in Python. It enables users to easily manage secrets, work with various connectors like GitHub, Shopify, and Postgres, and contribute to the project. PyAirbyte is not a replacement for Airbyte but complements it, supporting data orchestration frameworks like Airflow and Snowpark. Users can develop ETL pipelines and import connectors from local directories. The tool simplifies data integration tasks for Python developers.

md-agent

MD-Agent is a LLM-agent based toolset for Molecular Dynamics. It uses Langchain and a collection of tools to set up and execute molecular dynamics simulations, particularly in OpenMM. The tool assists in environment setup, installation, and usage by providing detailed steps. It also requires API keys for certain functionalities, such as OpenAI and paper-qa for literature searches. Contributions to the project are welcome, with a detailed Contributor's Guide available for interested individuals.

flowgen

FlowGen is a tool built for AutoGen, a great agent framework from Microsoft and a lot of contributors. It provides intuitive visual tools that streamline the construction and oversight of complex agent-based workflows, simplifying the process for creators and developers. Users can create Autoflows, chat with agents, and share flow templates. The tool is fully dockerized and supports deployment on Railway.app. Contributions to the project are welcome, and the platform uses semantic-release for versioning and releases.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.