aider.nvim

Stars: 241

Aider.nvim is a Neovim plugin that integrates the Aider AI coding assistant, allowing users to open a terminal window within Neovim to run Aider. It provides functions like AiderOpen to open the terminal window, AiderAddModifiedFiles to add git-modified files to the Aider chat, and customizable keybindings. Users can configure the plugin using the setup function to manage context, keybindings, debug logging, and ignore specific buffer names.

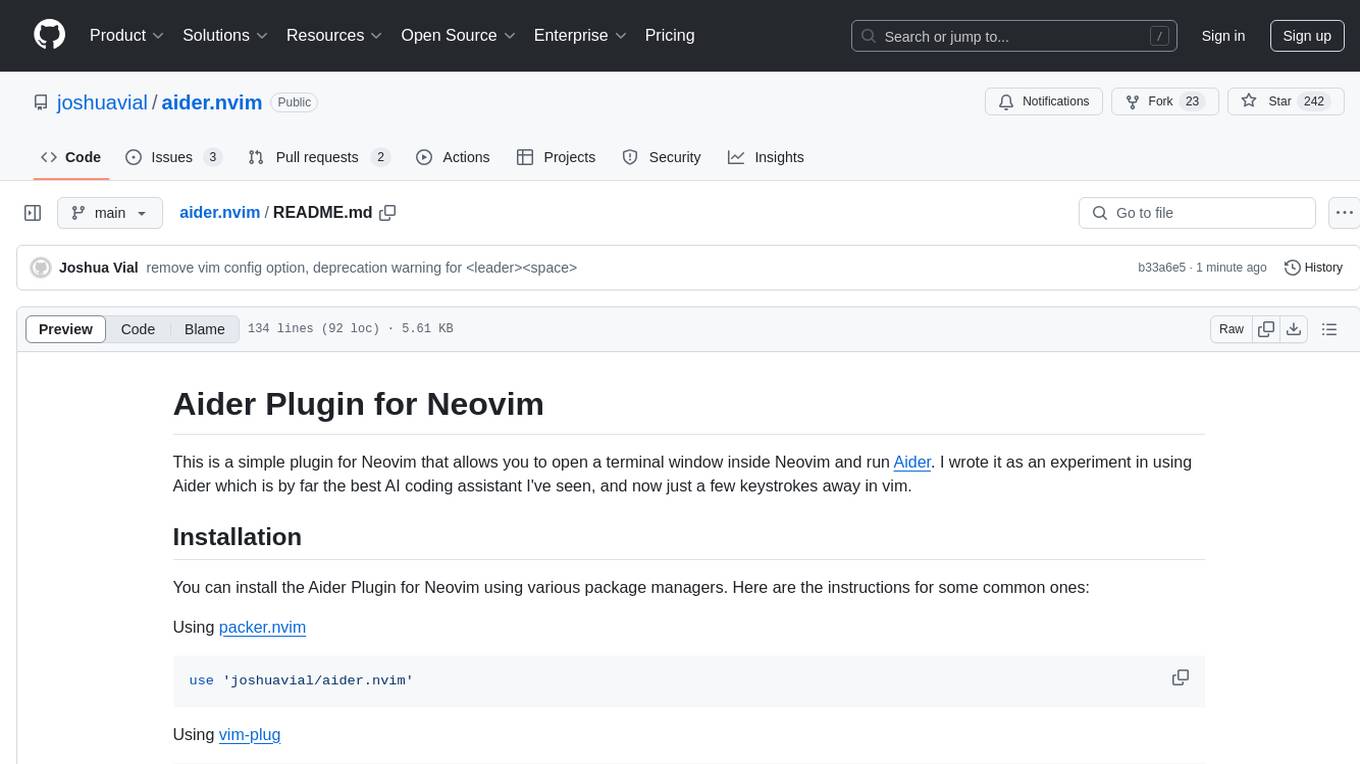

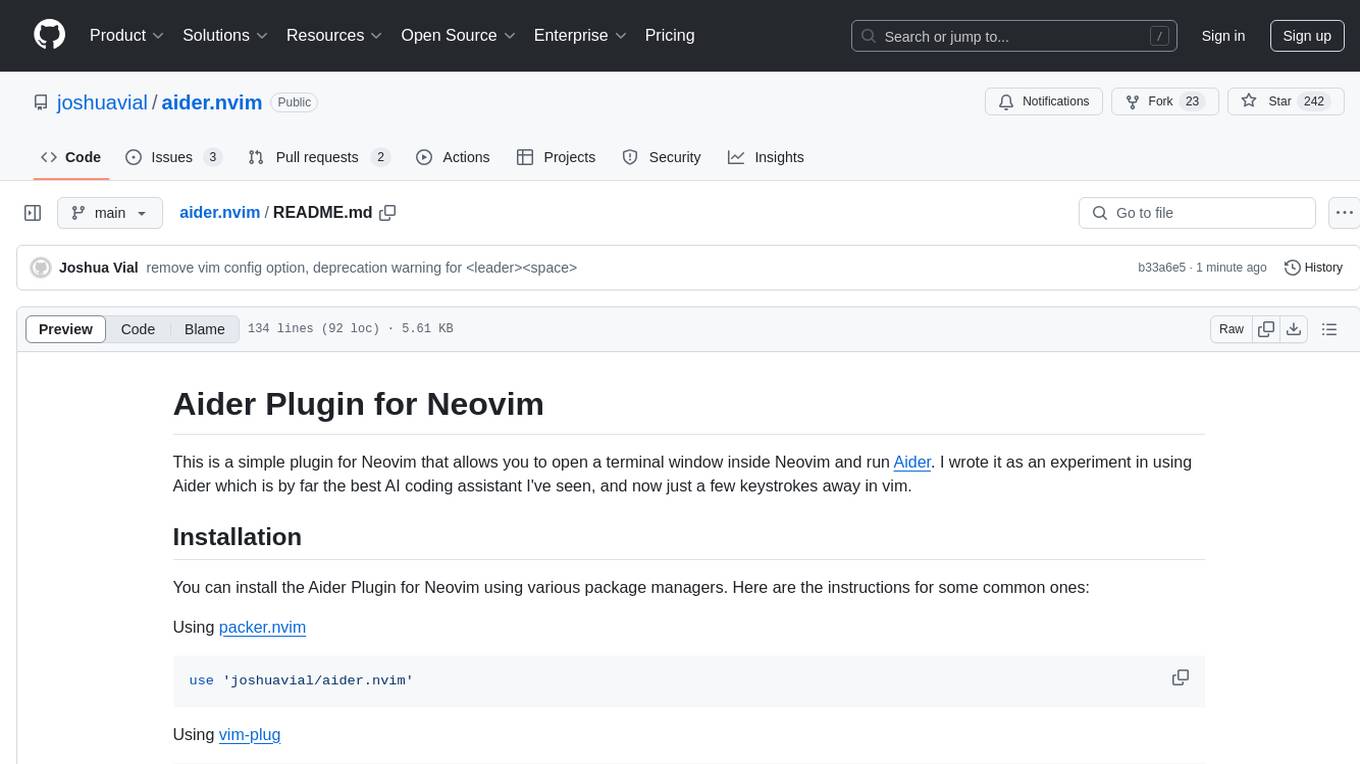

README:

This is a simple plugin for Neovim that allows you to open a terminal window inside Neovim and run Aider. I wrote it as an experiment in using Aider, which is by far the best AI coding assistant I've seen and is now just a few keystrokes away in Vim.

You can install the Aider Plugin for Neovim using various package managers. Here are the instructions for some common ones:

Using packer.nvim

    use 'joshuavial/aider.nvim'

Using vim-plug

    Plug 'joshuavial/aider.nvim'

Using dein

    call dein#add('joshuavial/aider.nvim')

Using lazy.nvim

    {
      "joshuavial/aider.nvim",
      config = function()
        require("aider").setup({
          -- your configuration comes here
          -- if you don't want to use the default settings
          auto_manage_context = true, -- automatically manage buffer context
          default_bindings = true, -- use default <leader>A keybindings
          debug = false, -- enable debug logging
          vim = false -- pass --vim flag to aider
        })
      end,
    }

The Aider Plugin for Neovim provides several functions and commands:

- AiderOpen: Opens a terminal window with the Aider command.
  - Arguments:
    - args: Command line arguments to pass to aider (default: "")
    - window_type: Window style to use ('vsplit' (default), 'hsplit', or 'editor')
  - Note: If an Aider job is already running, calling AiderOpen will reattach to it, even with different flags.
- AiderAddModifiedFiles: Adds all git-modified files to the Aider chat.
  - This function can be called directly or through the user command :AiderAddModifiedFiles

When Aider opens (through either function), it automatically adds all open buffers to the Aider chat. It's recommended to actively manage open buffers with commands like :ls and :bd.

Examples of using these commands:

    :AiderOpen
    :AiderOpen -3 hsplit
    :AiderOpen "AIDER_NO_AUTO_COMMITS=1 aider -3" editor
    :AiderAddModifiedFiles

You can set custom keybindings for these commands in your Neovim configuration. For example:

    vim.api.nvim_set_keymap('n', '<leader>Ao', ':AiderOpen<CR>', {noremap = true, silent = true})
    vim.api.nvim_set_keymap('n', '<leader>Am', ':AiderAddModifiedFiles<CR>', {noremap = true, silent = true})

Run aider --help to see all the options you can pass to the CLI.
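As a rough alternative, the same mappings can be written with vim.keymap.set, which is available in Neovim 0.7 and later and is non-recursive by default. This is only a sketch: the `<leader>Ah` mapping and the `desc` strings are hypothetical additions, not something the plugin ships, and you may want `default_bindings = false` in setup so these do not collide with the defaults.

```lua
-- Sketch only: assumes the :AiderOpen and :AiderAddModifiedFiles user
-- commands described above are already defined by the plugin.
local opts = { silent = true }

vim.keymap.set('n', '<leader>Ao', '<cmd>AiderOpen<CR>',
  vim.tbl_extend('force', opts, { desc = 'Aider: open terminal' }))

vim.keymap.set('n', '<leader>Am', '<cmd>AiderAddModifiedFiles<CR>',
  vim.tbl_extend('force', opts, { desc = 'Aider: add git-modified files' }))

-- Hypothetical extra mapping: passes the same arguments as the
-- ":AiderOpen -3 hsplit" example above.
vim.keymap.set('n', '<leader>Ah', '<cmd>AiderOpen -3 hsplit<CR>',
  vim.tbl_extend('force', opts, { desc = 'Aider: open in horizontal split' }))
```

The `desc` field is what popup helpers such as which-key typically display next to a mapping.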

The plugin provides the following default keybindings:

- <leader>Ao: Open a terminal window with the Aider defaults (gpt-4).
- <leader>Am: Add all git-modified files to the Aider chat.

These keybindings are set up using which-key, providing a descriptive popup menu when you press <leader>A.

The Aider Plugin for Neovim provides a setup function that you can use to configure the plugin. This function accepts a table with the following keys:

- auto_manage_context: A boolean value that determines whether the plugin should automatically manage the context. If set to true, the plugin will automatically add and remove buffers from the context as they are opened and closed. Defaults to true.
- default_bindings: A boolean value that determines whether the plugin should use the default keybindings. If set to true, the plugin will require the keybindings file and set the default keybindings. Defaults to true.
- debug: A boolean value that determines whether the plugin should enable debug logging. When set to true, it will print debug information to help troubleshoot issues. Defaults to false.
- vim: Pass the --vim flag to aider when opening a new chat.
- ignore_buffers: A list of matching patterns for buffer names that will be ignored. Defaults to {'^term:', 'NeogitConsole', 'NvimTree_', 'neo-tree filesystem'}.
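To get a feel for how a list like ignore_buffers could filter buffer names, here is a minimal sketch in plain Lua. It assumes each entry is treated as a Lua pattern, which the README does not confirm; `is_ignored` is a hypothetical helper written for illustration and is not the plugin's actual code, and the buffer names are made up.

```lua
-- Hypothetical helper (not the plugin's implementation): returns true when
-- the buffer name matches any entry, treating each entry as a Lua pattern.
local function is_ignored(bufname, patterns)
  for _, pattern in ipairs(patterns) do
    if string.find(bufname, pattern) then
      return true
    end
  end
  return false
end

-- The defaults listed above.
local defaults = { '^term:', 'NeogitConsole', 'NvimTree_', 'neo-tree filesystem' }

print(is_ignored('term://~/project//1234:aider', defaults)) --> true
print(is_ignored('NvimTree_1', defaults))                   --> true
print(is_ignored('lua/aider.lua', defaults))                --> false

-- Under the Lua-pattern assumption, "-" is a quantifier, so a literal
-- hyphen in a custom entry would be written as "%-".
print(is_ignored('neo-tree filesystem [1]', { 'neo%-tree filesystem' })) --> true
```

If the plugin matches names some other way (for example with plain substring search), the escaping note does not apply.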

Here is an example of how to use the setup function:

    require('aider').setup({
      auto_manage_context = false,
      default_bindings = false,
      debug = true,
      vim = true,
      ignore_buffers = {},
    })

    -- only necessary if you want to change the default keybindings. <Leader>C is not a particularly good choice. It's just shown as an example.
    vim.api.nvim_set_keymap('n', '<leader>C', ':AiderOpen --no-auto-commits<CR>', {noremap = true, silent = true})

In this example, the setup function is called with a table that sets auto_manage_context to false and default_bindings to false. This means that the plugin will not automatically manage the context and will not use the default keybindings. It also enables debug logging, passes the --vim flag to aider, and clears the list of ignored buffers.

Run the :e command to re-edit the current buffer updating its contents with any changes made since initially loading it.
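If typing :e after every Aider edit gets tedious, one common Neovim idiom is to let the editor check for on-disk changes itself. The following is a sketch of that idiom, not a feature of this plugin; the augroup name `AiderAutoReload` and the choice of events are assumptions you may want to adjust.

```lua
-- Reload buffers whose files changed on disk, as long as the buffer has
-- no unsaved modifications.
vim.o.autoread = true

-- Hypothetical group name, chosen for this example.
local group = vim.api.nvim_create_augroup('AiderAutoReload', { clear = true })

vim.api.nvim_create_autocmd({ 'FocusGained', 'BufEnter', 'TermLeave' }, {
  group = group,
  callback = function()
    -- :checktime is not allowed inside the command-line window.
    if vim.fn.getcmdwintype() == '' then
      vim.cmd('checktime')
    end
  end,
})
```

With this in place, switching from the Aider terminal back to a file buffer triggers a check, and unmodified buffers pick up the new contents.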

If you're not familiar with buffers in Vim, here are some tips:

- Use :ls or :buffers to see all open buffers.
- Use :b <number> or :buffer <number> to switch to a specific buffer. Replace <number> with the buffer number.
- Use :bd or :bdelete to close the current buffer.
- Use :bd <number> or :bdelete <number> to close a specific buffer. Replace <number> with the buffer number.
- Use :bufdo bd to close all buffers.
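Since open buffers end up in the Aider chat, it can help to trim them in one step. The tips above could be wrapped in a small Lua command like the sketch below. The command name `BufOnly` and the function `close_other_buffers` are hypothetical and not part of the plugin; the sketch deliberately skips terminal buffers and buffers with unsaved changes.

```lua
-- Close every listed buffer except the current one, leaving terminal
-- buffers (such as a running Aider session) and modified buffers alone.
local function close_other_buffers()
  local current = vim.api.nvim_get_current_buf()
  for _, buf in ipairs(vim.api.nvim_list_bufs()) do
    local listed = vim.bo[buf].buflisted
    local is_terminal = vim.bo[buf].buftype == 'terminal'
    local modified = vim.bo[buf].modified
    if buf ~= current and listed and not is_terminal and not modified then
      vim.api.nvim_buf_delete(buf, {})
    end
  end
end

-- Hypothetical command name; run it with :BufOnly
vim.api.nvim_create_user_command('BufOnly', close_other_buffers, {
  desc = 'Close all other unmodified, non-terminal buffers',
})
```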

If you resize a split, the nvim buffer can truncate the text output. ChatGPT tells me there isn't an easy workaround for this. Feel free to make a PR if you think it's easy to solve without rearchitecting and using tmux or something similar.

Alternative AI tools for aider.nvim

Similar Open Source Tools

aider.nvim

Aider.nvim is a Neovim plugin that integrates the Aider AI coding assistant, allowing users to open a terminal window within Neovim to run Aider. It provides functions like AiderOpen to open the terminal window, AiderAddModifiedFiles to add git-modified files to the Aider chat, and customizable keybindings. Users can configure the plugin using the setup function to manage context, keybindings, debug logging, and ignore specific buffer names.

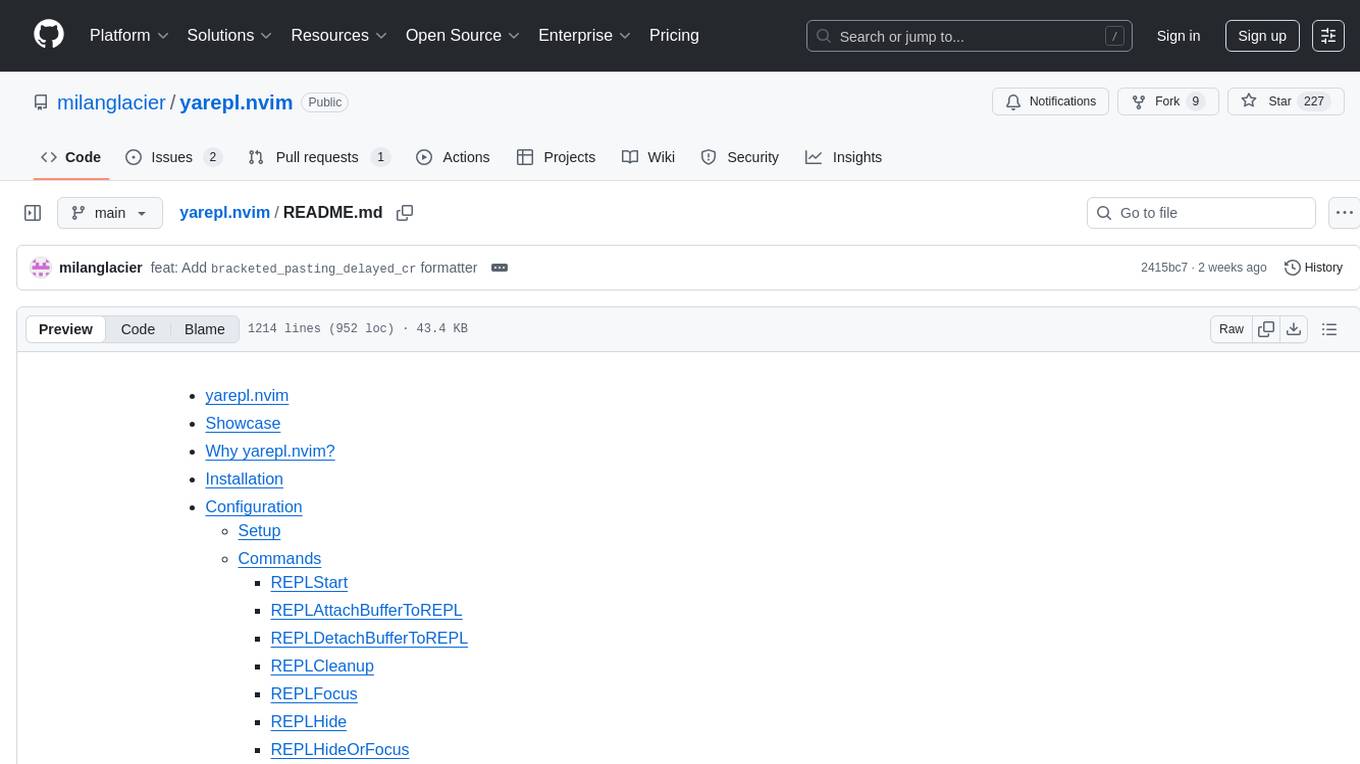

yarepl.nvim

Yet Another REPL is a flexible REPL / TUI App management tool that supports multiple paradigms for interacting with TUI Apps. This plugin allows users to effortlessly interact with multiple TUI Apps through various paradigms, such as sending text from multiple buffers to a single TUI App, sending text from a single buffer to multiple TUI Apps, and attaching a buffer to a dedicated TUI App. It integrates with aider.chat and OpenAI Codex CLI and provides code cell text object definitions. Users can choose their preferred fuzzy finder among telescope, fzf-lua, or Snacks.picker to preview active REPLs. The plugin also supports project-level REPLs and creating persistent REPLs in tmux.

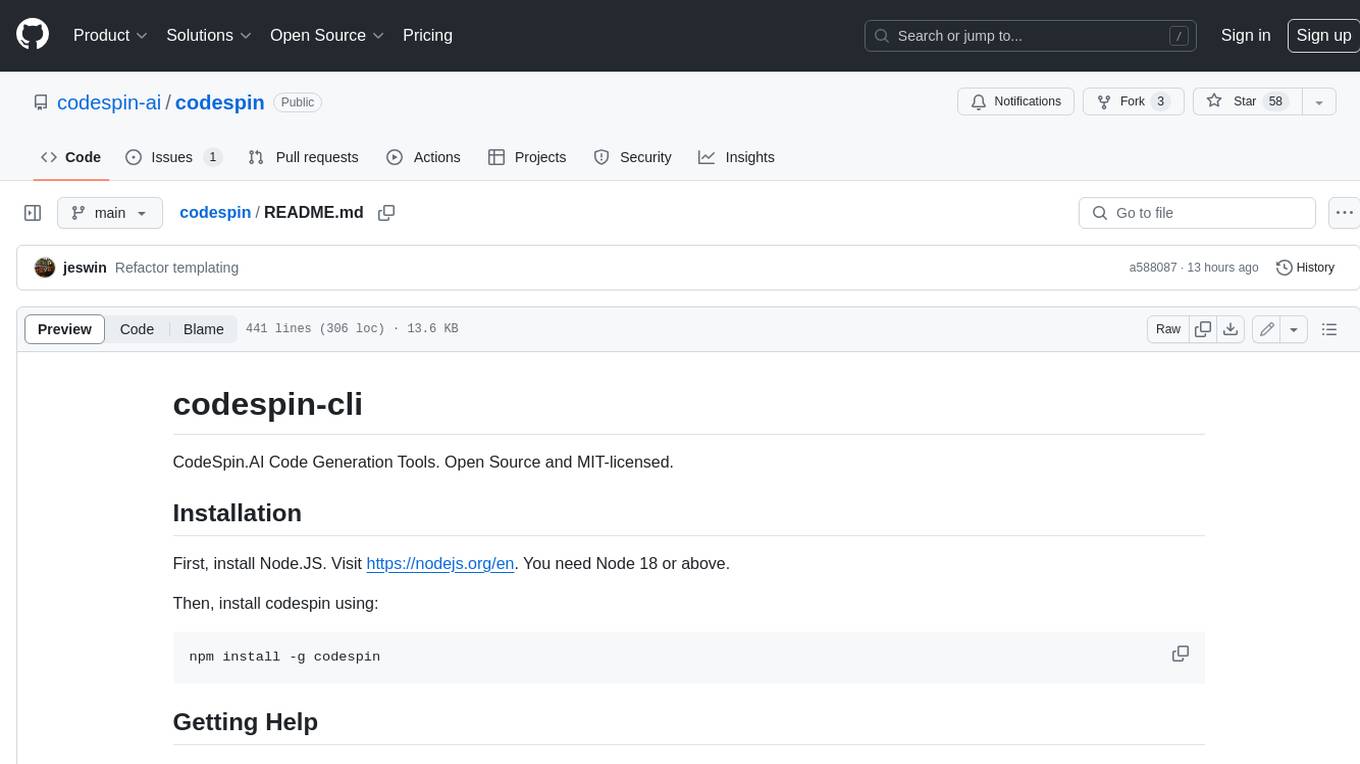

codespin

CodeSpin.AI is a set of open-source code generation tools that leverage large language models (LLMs) to automate coding tasks. With CodeSpin, you can generate code in various programming languages, including Python, JavaScript, Java, and C++, by providing natural language prompts. CodeSpin offers a range of features to enhance code generation, such as custom templates, inline prompting, and the ability to use ChatGPT as an alternative to API keys. Additionally, CodeSpin provides options for regenerating code, executing code in prompt files, and piping data into the LLM for processing. By utilizing CodeSpin, developers can save time and effort in coding tasks, improve code quality, and explore new possibilities in code generation.

vectorflow

VectorFlow is an open source, high throughput, fault tolerant vector embedding pipeline. It provides a simple API endpoint for ingesting large volumes of raw data, processing, and storing or returning the vectors quickly and reliably. The tool supports text-based files like TXT, PDF, HTML, and DOCX, and can be run locally with Kubernetes in production. VectorFlow offers functionalities like embedding documents, running chunking schemas, custom chunking, and integrating with vector databases like Pinecone, Qdrant, and Weaviate. It enforces a standardized schema for uploading data to a vector store and supports features like raw embeddings webhook, chunk validation webhook, S3 endpoint, and telemetry. The tool can be used with the Python client and provides detailed instructions for running and testing the functionalities.

opencommit

OpenCommit is a tool that auto-generates meaningful commits using AI, allowing users to quickly create commit messages for their staged changes. It provides a CLI interface for easy usage and supports customization of commit descriptions, emojis, and AI models. Users can configure local and global settings, switch between different AI providers, and set up Git hooks for integration with IDE Source Control. Additionally, OpenCommit can be used as a GitHub Action to automatically improve commit messages on push events, ensuring all commits are meaningful and not generic. Payments for OpenAI API requests are handled by the user, with the tool storing API keys locally.

appworld

AppWorld is a high-fidelity execution environment of 9 day-to-day apps, operable via 457 APIs, populated with digital activities of ~100 people living in a simulated world. It provides a benchmark of natural, diverse, and challenging autonomous agent tasks requiring rich and interactive coding. The repository includes implementations of AppWorld apps and APIs, along with tests. It also introduces safety features for code execution and provides guides for building agents and extending the benchmark.

gpt-cli

gpt-cli is a command-line interface tool for interacting with various chat language models like ChatGPT, Claude, and others. It supports model customization, usage tracking, keyboard shortcuts, multi-line input, markdown support, predefined messages, and multiple assistants. Users can easily switch between different assistants, define custom assistants, and configure model parameters and API keys in a YAML file for easy customization and management.

vector-inference

This repository provides an easy-to-use solution for running inference servers on Slurm-managed computing clusters using vLLM. All scripts in this repository run natively on the Vector Institute cluster environment. Users can deploy models as Slurm jobs, check server status and performance metrics, and shut down models. The repository also supports launching custom models with specific configurations. Additionally, users can send inference requests and set up an SSH tunnel to run inference from a local device.

autoscraper

AutoScraper is a smart, automatic, fast, and lightweight web scraping tool for Python. It simplifies the process of web scraping by learning scraping rules based on sample data provided by the user. The tool can extract text, URLs, or HTML tag values from web pages and return similar elements. Users can utilize the learned object to scrape similar content or exact elements from new pages. AutoScraper is compatible with Python 3 and offers easy installation from various sources. It provides functionalities for fetching similar and exact results from web pages, such as extracting post titles from Stack Overflow or live stock prices from Yahoo Finance. The tool allows customization with custom requests module parameters like proxies or headers. Users can save and load models for future use and explore advanced usages through tutorials and examples.

SirChatalot

A Telegram bot that proves you don't need a body to have a personality. It can use various text and image generation APIs to generate responses to user messages.

For text generation, the bot can use:

* OpenAI's ChatGPT API (or another compatible API). Vision capabilities can be used with GPT-4 models. Function calling is supported.
* Anthropic's Claude API. Vision capabilities can be used with Claude 3 models. Function calling can be used with tool use.
* YandexGPT API

The bot can also generate images with:

* OpenAI's DALL-E
* Stability AI
* Yandex ART

The bot can also respond to voice messages: it converts the voice message to text and then generates a response. Speech recognition can be done using OpenAI's Whisper model. To use this feature, you need to install the ffmpeg library. The bot also supports working with files; see the Files section for more details. If function calling is enabled, the bot can generate images and search the web (limited).

meta-prompt

Meta-Prompt is a tool designed for interacting with Jina Search Foundation APIs. It provides a way to access different versions of the API, pipe into 'llm' for specific tasks, and handle programmatic access for clean responses. Developers can upload prompts, fetch prompts using curl commands, and utilize response templates and headers for AI applications.

chat-ai

A Seamless Slurm-Native Solution for HPC-Based Services. This repository contains the stand-alone web interface of Chat AI, which can be set up independently to act as a wrapper for an OpenAI-compatible API endpoint. It consists of two Docker containers, 'front' and 'back', providing a ReactJS app served by ViteJS and a wrapper for message requests to prevent CORS errors. Configuration files allow setting port numbers, backend paths, models, user data, default conversation settings, and more. The 'back' service interacts with an OpenAI-compatible API endpoint using configurable attributes in 'back.json'. Customization options include creating a path for available models and setting the 'modelsPath' in 'front.json'. Acknowledgements to contributors and the Chat AI community are included.

slack-bot

The Slack Bot is a tool designed to enhance the workflow of development teams by integrating with Jenkins, GitHub, GitLab, and Jira. It allows for custom commands, macros, crons, and project-specific commands to be implemented easily. Users can interact with the bot through Slack messages, execute commands, and monitor job progress. The bot supports features like starting and monitoring Jenkins jobs, tracking pull requests, querying Jira information, creating buttons for interactions, generating images with DALL-E, playing quiz games, checking weather, defining custom commands, and more. Configuration is managed via YAML files, allowing users to set up credentials for external services, define custom commands, schedule cron jobs, and configure VCS systems like Bitbucket for automated branch lookup in Jenkins triggers.

ash_ai

Ash AI is a tool that provides a Model Context Protocol (MCP) server for exposing tool definitions to an MCP client. It allows for the installation of dev and production MCP servers, and supports features like OAuth2 flow with AshAuthentication, tool data access, tool execution callbacks, prompt-backed actions, and vectorization strategies. Users can also generate a chat feature for their Ash & Phoenix application using `ash_oban` and `ash_postgres`, and specify LLM API keys for OpenAI. The tool is designed to help developers experiment with tools and actions, monitor tool execution, and expose actions as tool calls.

garak

Garak is a vulnerability scanner designed for LLMs (Large Language Models) that checks for various weaknesses such as hallucination, data leakage, prompt injection, misinformation, toxicity generation, and jailbreaks. It combines static, dynamic, and adaptive probes to explore vulnerabilities in LLMs. Garak is a free tool developed for red-teaming and assessment purposes, focusing on making LLMs or dialog systems fail. It supports various LLM models and can be used to assess their security and robustness.

llm-ollama

LLM-ollama is a plugin that provides access to models running on an Ollama server. It allows users to query the Ollama server for a list of models, register them with LLM, and use them for prompting, chatting, and embedding. The plugin supports image attachments, embeddings, JSON schemas, async models, model aliases, and model options. Users can interact with Ollama models through the plugin in a seamless and efficient manner.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per user and per organization basis
* Block or redact requests containing PIIs
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.