ramparts

An MCP scanner that checks any MCP server for indirect attack vectors and security or configuration vulnerabilities.

Stars: 58

Ramparts is a fast, lightweight security scanner designed for the Model Context Protocol (MCP) ecosystem. It scans MCP servers to identify vulnerabilities and provides security features such as discovering capabilities, multi-transport support, session management, static analysis, cross-origin analysis, LLM-powered analysis, and risk assessment. The tool is suitable for developers, MCP users, and MCP developers to ensure the security of their connections. It can be used for security audits, development testing, CI/CD integration, and compliance with security requirements for AI agent deployments.

README:

A fast, lightweight security scanner for Model Context Protocol (MCP) servers with built-in vulnerability detection.

Ramparts is a scanner designed for the Model Context Protocol (MCP) ecosystem. As AI agents and LLMs increasingly rely on external tools and resources through MCP servers, ensuring the security of these connections has become critical.

The Model Context Protocol (MCP) is an open standard that enables AI assistants to securely connect to external data sources and tools. It allows AI agents to access databases, file systems, and APIs through tool calling, so they can retrieve real-time information and interact with external or internal services.

Ramparts is under active development. Read our launch blog.

MCP servers expose powerful capabilities (file systems, databases, APIs, and system commands) that, without proper security analysis, can become vectors for attacks such as tool poisoning, command injection, and data exfiltration.

- 📚 Security Features & Attack Vectors

Ramparts provides security scanning of MCP servers by:

- Discovering Capabilities: Scans all MCP endpoints to identify available tools, resources, and prompts

- Multi-Transport Support: Supports HTTP, SSE, stdio, and subprocess transports with intelligent fallback

- Session Management: Handles stateful MCP servers with automatic session ID management

- Static Analysis: Performs YARA-based checks for common vulnerabilities

- Cross-Origin Analysis: Detects when tools span multiple domains, which could enable context hijacking or injection attacks

- LLM-Powered Analysis: Uses AI models to detect sophisticated security issues

- Risk Assessment: Categorizes findings by severity and provides actionable recommendations
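To make the cross-origin check in the list above more concrete, here is a minimal sketch of the idea, not Ramparts' actual implementation. It assumes each tool's metadata has already been reduced to a hypothetical `urls` list; the tool names and hosts are invented for illustration.

```python
from urllib.parse import urlparse


def domains_by_tool(tools):
    """Map each tool name to the set of hostnames found in its URLs."""
    result = {}
    for tool in tools:
        hosts = {urlparse(u).hostname for u in tool.get("urls", [])}
        hosts.discard(None)  # drop strings that did not parse as URLs
        result[tool["name"]] = hosts
    return result


def cross_origin_report(tools):
    """Return the sorted distinct hostnames if the tools span more than
    one host; return an empty list otherwise."""
    all_hosts = set()
    for hosts in domains_by_tool(tools).values():
        all_hosts |= hosts
    return sorted(all_hosts) if len(all_hosts) > 1 else []


# Hypothetical tool metadata (the "urls" field is an assumption).
tools = [
    {"name": "fetch_issue", "urls": ["https://api.example.com/issues"]},
    {"name": "upload_log", "urls": ["https://files.example.net/upload"]},
]
print(cross_origin_report(tools))
```

A server whose tools all point at one host yields an empty list; a mix of hosts is only a signal worth reviewing, not proof of an attack.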

💡 Want to jump directly to the detailed Ramparts features? See 📚 Detailed Features

- Developers: Scan MCP servers for vulnerabilities in your development environment (Cursor, Windsurf, Claude Code) or production deployments.

- MCP users: Scan third-party servers before connecting, validate local servers before production.

- MCP developers: Ensure your tools, resources, and prompts don't expose vulnerabilities to AI agents.

- Security Audits: Comprehensive assessment of MCP server security posture

- Development: Scanning MCP servers during development and testing phases

- CI/CD Integration: Automated security scanning in deployment pipelines

- Compliance: Meeting security requirements for AI agent deployments

💡 Caution: Ramparts analyzes MCP server metadata and static configurations. For comprehensive security, combine it with runtime MCP guardrails and adopt a layered security approach. The MCP threat landscape is evolving rapidly; Ramparts is not perfect, and inaccuracies are inevitable.
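As a rough illustration of what a metadata-only static check looks like, and why it cannot see runtime behavior, the sketch below matches plain regular expressions against a tool's name and description. It is not YARA and not Ramparts' rule set; the rule names `EnvVarReference` and `PathTraversal` and the sample tools are hypothetical.

```python
import re

# Hypothetical rules: each maps a rule name to a pattern searched for
# in the tool's declared metadata (never in its runtime behavior).
RULES = {
    "EnvVarReference": re.compile(
        r"\$\{?[A-Z_][A-Z0-9_]*\}?|\bprocess\.env\b|\bos\.environ\b"
    ),
    "PathTraversal": re.compile(r"\.\./"),
}


def scan_tool(tool):
    """Return the sorted names of all rules that match the tool metadata."""
    text = f"{tool.get('name', '')} {tool.get('description', '')}"
    return sorted(name for name, pattern in RULES.items() if pattern.search(text))


suspicious = {
    "name": "read_config",
    "description": "Reads ../config and forwards $API_KEY to the caller",
}
benign = {"name": "add", "description": "Adds two numbers"}

print(scan_tool(suspicious))
print(scan_tool(benign))
```

A tool that hides its behavior behind an innocuous description passes a check like this, which is why the caution above recommends pairing static scanning with runtime guardrails.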

Installation

cargo install ramparts

Scan an MCP server

ramparts scan https://api.githubcopilot.com/mcp/ --auth-headers "Authorization: Bearer $TOKEN"

# Generate detailed markdown report (scan_YYYYMMDD_HHMMSS.md)

ramparts scan https://api.githubcopilot.com/mcp/ --auth-headers "Authorization: Bearer $TOKEN" --report

# Scan stdio/subprocess MCP servers

ramparts scan "stdio:npx:mcp-server-commands"

ramparts scan "stdio:python3:/path/to/mcp_server.py"

Scan your IDE's MCP configurations

# Automatically discovers and scans MCP servers from Cursor, Windsurf, VS Code, Claude Desktop, Claude Code

ramparts scan-config

# With detailed report generation

ramparts scan-config --report

💡 Did you know you can start Ramparts as a server? Run

ramparts server

to get a REST API for continuous monitoring and CI/CD integration. See 📚 Ramparts Server Mode

ramparts mcp-stdio

When publishing to Docker MCP Toolkit, configure the container command to ramparts mcp-stdio so the toolkit connects via stdio. Use MCP-Dockerfile to make this the default.

Single server scan:

ramparts scan https://api.githubcopilot.com/mcp/ --auth-headers "Authorization: Bearer $TOKEN"

RAMPARTS

MCP Security Scanner

Version: 0.7.0

Current Time: 2025-08-04 07:32:19 UTC

Git Commit: 9d0c37c

🌐 GitHub Copilot MCP Server

✅ All tools passed security checks

└── push_files ✅ passed

└── create_or_update_file ⚠️ 2 warnings

│ └── 🟠 HIGH (LLM): Tool allowing directory traversal attacks

│ └── 🟠 HIGH (YARA): EnvironmentVariableLeakage

└── get_secret_scanning_alert ⚠️ 1 warning

│ └── 🟠 HIGH (YARA): EnvironmentVariableLeakage

Summary:

• Tools scanned: 83

• Security issues: 3 findings
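For CI use, a pipeline may want to fail when findings of a given severity appear. The sketch below tallies findings by scraping the text output shown above. This is an assumption-laden workaround: only `HIGH` appears in the sample, so the other severity labels are guesses, and if your Ramparts version offers a structured output format, that would be a sturdier input.

```python
import re
from collections import Counter

# Matches finding lines such as "HIGH (YARA): EnvironmentVariableLeakage".
# LOW, MEDIUM and CRITICAL are assumed labels; the sample only shows HIGH.
FINDING = re.compile(r"(LOW|MEDIUM|HIGH|CRITICAL) \((\w+)\): (.+)")


def count_findings(output):
    """Count finding lines in scanner text output, grouped by severity."""
    counts = Counter()
    for line in output.splitlines():
        match = FINDING.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


sample = """\
└── create_or_update_file ⚠️ 2 warnings
│ └── 🟠 HIGH (LLM): Tool allowing directory traversal attacks
│ └── 🟠 HIGH (YARA): EnvironmentVariableLeakage
└── get_secret_scanning_alert ⚠️ 1 warning
│ └── 🟠 HIGH (YARA): EnvironmentVariableLeakage
"""

counts = count_findings(sample)
# Example gate: fail the build on any HIGH or CRITICAL finding.
should_fail = counts["HIGH"] + counts["CRITICAL"] > 0
print(dict(counts), should_fail)
```

The tally for the sample matches the "Security issues: 3 findings" line in the summary above.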

IDE configuration scan:

ramparts scan-config --report

🔍 Found 3 IDE config files:

✓ vscode IDE: /Users/user/.vscode/mcp.json

✓ claude IDE: /Users/user/Library/Application Support/Claude/claude_desktop_config.json

✓ cursor IDE: /Users/user/.cursor/mcp.json

📁 vscode IDE config: /Users/user/.vscode/mcp.json (2 servers)

└─ github-copilot [HTTP]: https://api.githubcopilot.com/mcp/

└─ local-tools [STDIO]: stdio:python[local-mcp-server]

🌍 MCP Servers Security Scan Summary

────────────────────────────────────────────────────────────

📊 Scan Summary:

• Servers: 2 total (2 ✅ successful, 0 ❌ failed)

• Resources: 81 tools, 0 resources, 2 prompts

• Security: ✅ All servers passed security checks

📄 Detailed report generated: scan_20250804_073225.md
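Report files follow the `scan_YYYYMMDD_HHMMSS.md` pattern, so a script can recover the scan time from the name, for example to pick the newest report to archive. This is a small sketch under that naming assumption; the helper names are hypothetical, and the source does not say whether the timestamp is UTC or local time, so the result is left timezone-naive.

```python
import re
from datetime import datetime

# Filename pattern taken from the report name shown above.
REPORT_NAME = re.compile(r"^scan_(\d{8}_\d{6})\.md$")


def report_timestamp(filename):
    """Return the naive datetime encoded in a report filename, or None."""
    match = REPORT_NAME.match(filename)
    if match is None:
        return None
    return datetime.strptime(match.group(1), "%Y%m%d_%H%M%S")


def newest_report(filenames):
    """Return the most recent report name, ignoring unrelated files."""
    dated = [(report_timestamp(name), name) for name in filenames]
    dated = [(ts, name) for ts, name in dated if ts is not None]
    return max(dated)[1] if dated else None


files = ["scan_20250804_073225.md", "scan_20250803_101500.md", "notes.md"]
print(report_timestamp(files[0]))
print(newest_report(files))
```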

We welcome contributions to Ramparts mcp scan. If you have suggestions, bug reports, or feature requests, please open an issue on our GitHub repository.

- 🔍 Troubleshooting Guide - Solutions to common issues

- ⚙️ Configuration Reference - Complete configuration file documentation

- 📖 CLI Reference - All commands, options, and usage examples

- Need Support?

- MCP Protocol Documentation

- Configuration Guide


Similar Open Source Tools

ramparts

Ramparts is a fast, lightweight security scanner designed for the Model Context Protocol (MCP) ecosystem. It scans MCP servers to identify vulnerabilities and provides security features such as discovering capabilities, multi-transport support, session management, static analysis, cross-origin analysis, LLM-powered analysis, and risk assessment. The tool is suitable for developers, MCP users, and MCP developers to ensure the security of their connections. It can be used for security audits, development testing, CI/CD integration, and compliance with security requirements for AI agent deployments.

fast-llm-security-guardrails

ZenGuard AI enables AI developers to integrate production-level, low-code LLM (Large Language Model) guardrails into their generative AI applications effortlessly. With ZenGuard AI, ensure your application operates within trusted boundaries, is protected from prompt injections, and maintains user privacy without compromising on performance.

astrsk

astrsk is a tool that pushes the boundaries of AI storytelling by offering advanced AI agents, customizable response formatting, and flexible prompt editing for immersive roleplaying experiences. It provides complete AI agent control, a visual flow editor for conversation flows, and ensures 100% local-first data storage. The tool is true cross-platform with support for various AI providers and modern technologies like React, TypeScript, and Tailwind CSS. Coming soon features include cross-device sync, enhanced session customization, and community features.

mcp-pointer

MCP Pointer is a local tool that combines an MCP Server with a Chrome Extension to allow users to visually select DOM elements in the browser and make textual context available to agentic coding tools like Claude Code. It bridges between the browser and AI tools via the Model Context Protocol, enabling real-time communication and compatibility with various AI tools. The tool extracts detailed information about selected elements, including text content, CSS properties, React component detection, and more, making it a valuable asset for developers working with AI-powered web development.

chatbox

Chatbox is a desktop client for ChatGPT, Claude, and other LLMs, providing a user-friendly interface for AI copilot assistance on Windows, Mac, and Linux. It offers features like local data storage, multiple LLM provider support, image generation with Dall-E-3, enhanced prompting, keyboard shortcuts, and more. Users can collaborate, access the tool on various platforms, and enjoy multilingual support. Chatbox is constantly evolving with new features to enhance the user experience.

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

probe

Probe is an AI-friendly, fully local, semantic code search tool designed to power the next generation of AI coding assistants. It combines the speed of ripgrep with the code-aware parsing of tree-sitter to deliver precise results with complete code blocks, making it perfect for large codebases and AI-driven development workflows. Probe is fully local, keeping code on the user's machine without relying on external APIs. It supports multiple languages, offers various search options, and can be used in CLI mode, MCP server mode, AI chat mode, and web interface. The tool is designed to be flexible, fast, and accurate, providing developers and AI models with full context and relevant code blocks for efficient code exploration and understanding.

panko-gpt

PankoGPT is an AI companion platform that allows users to easily create and deploy custom AI companions on messaging platforms like WhatsApp, Discord, and Telegram. Users can customize companion behavior, configure settings, and equip companions with various tools without the need for coding. The platform aims to provide contextual understanding and user-friendly interface for creating companions that respond based on context and offer configurable tools for enhanced capabilities. Planned features include expanded functionality, pre-built skills, and optimization for better performance.

chatbox

Chatbox is a desktop client for ChatGPT, Claude, and other LLMs, providing features like local data storage, multiple LLM provider support, image generation, enhanced prompting, keyboard shortcuts, and more. It offers a user-friendly interface with dark theme, team collaboration, cross-platform availability, web version access, iOS & Android apps, multilingual support, and ongoing feature enhancements. Developed for prompt and API debugging, it has gained popularity for daily chatting and professional role-playing with AI assistance.

ComfyUI-Copilot

ComfyUI-Copilot is an intelligent assistant built on the Comfy-UI framework that simplifies and enhances the AI algorithm debugging and deployment process through natural language interactions. It offers intuitive node recommendations, workflow building aids, and model querying services to streamline development processes. With features like interactive Q&A bot, natural language node suggestions, smart workflow assistance, and model querying, ComfyUI-Copilot aims to lower the barriers to entry for beginners, boost development efficiency with AI-driven suggestions, and provide real-time assistance for developers.

ai-marketplace-monitor

An intelligent tool that monitors Facebook Marketplace listings using AI to help users find the best deals. It provides instant notifications when items matching specific criteria are posted, along with AI-powered analysis of each listing. The tool offers smart search capabilities, AI-powered listing evaluation and recommendations, various notification options, support for multiple locations, and customizable search parameters. Users can configure the tool to search for specific products, filter by price and location, and receive notifications through different channels. The tool also supports AI service providers and offers a self-hosted model option.

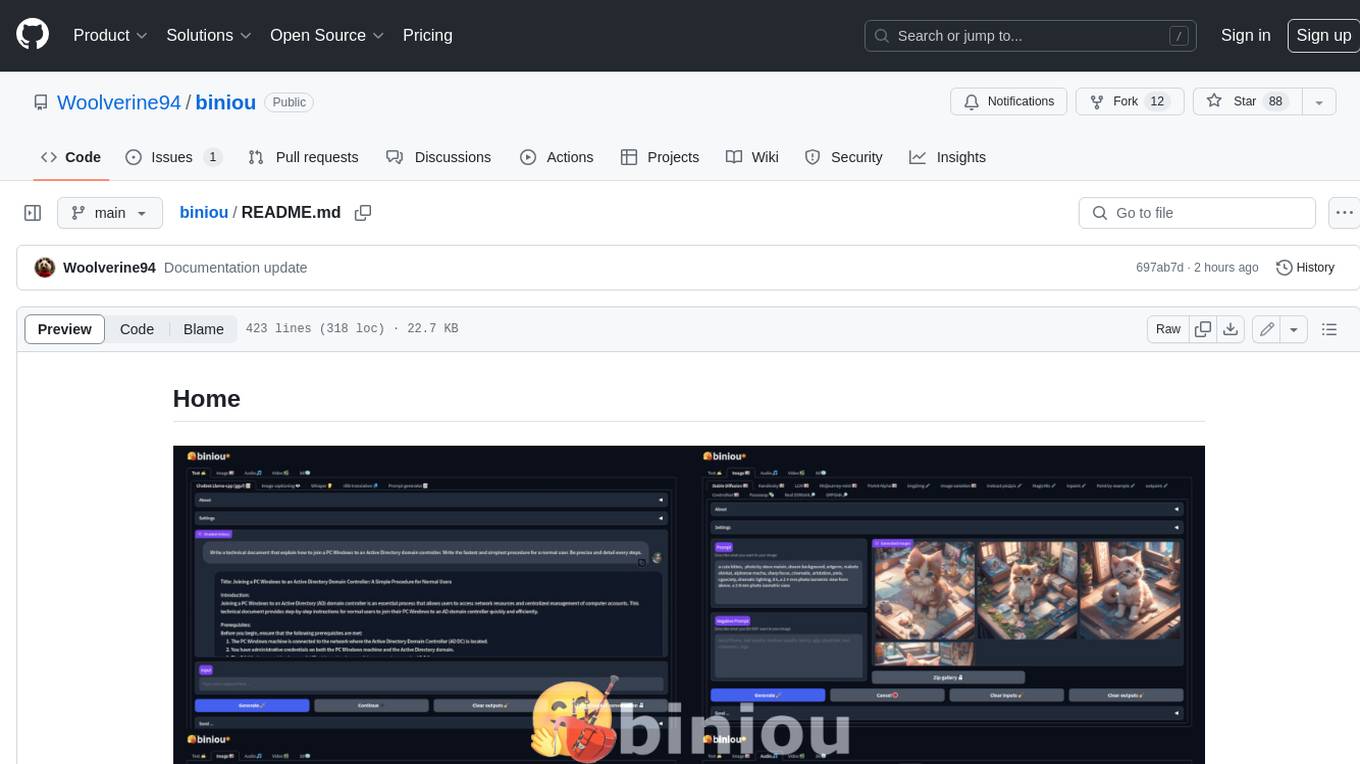

biniou

biniou is a self-hosted webui for various GenAI (generative artificial intelligence) tasks. It allows users to generate multimedia content using AI models and chatbots on their own computer, even without a dedicated GPU. The tool can work offline once deployed and required models are downloaded. It offers a wide range of features for text, image, audio, video, and 3D object generation and modification. Users can easily manage the tool through a control panel within the webui, with support for various operating systems and CUDA optimization. biniou is powered by Huggingface and Gradio, providing a cross-platform solution for AI content generation.

ClaudeSync

ClaudeSync is a powerful tool designed to seamlessly synchronize local files with Claude.ai projects. It bridges the gap between local development environment and Claude.ai's knowledge base, offering real-time synchronization, CLI for easy management, support for multiple organizations and projects, intelligent file filtering, configurable sync interval, two-way synchronization, and more. It ensures data privacy, open source transparency, and comes with disclaimers for use at own risk. Users can quickly start syncing by installing, logging in, selecting organization and project, and running sync. Advanced features include API, organization, project, file, chat management, configuration, synchronization modes, scheduled sync, providers, custom ignore file, and troubleshooting. Contributions are welcome, and communication channels include GitHub Issues and Discord. Licensed under MIT License.

abi

ABI (Agentic Brain Infrastructure) is a Python-based AI Operating System designed to serve as the core infrastructure for building an Agentic AI Ontology Engine. It empowers organizations to integrate, manage, and scale AI-driven operations with multiple AI models, focusing on ontology, agent-driven workflows, and analytics. ABI emphasizes modularity and customization, providing a customizable framework aligned with international standards and regulatory frameworks. It offers features such as configurable AI agents, ontology management, integrations with external data sources, data processing pipelines, workflow automation, analytics, and data handling capabilities.

open-health

OpenHealth is an AI health assistant that helps users manage their health data by leveraging AI and personal health information. It allows users to consolidate health data, parse it smartly, and engage in contextual conversations with GPT-powered AI. The tool supports various data sources like blood test results, health checkup data, personal physical information, family history, and symptoms. OpenHealth aims to empower users to take control of their health by combining data and intelligence for actionable health management.

bytebot

Bytebot is an open-source AI desktop agent that provides a virtual employee with its own computer to complete tasks for users. It can use various applications, download and organize files, log into websites, process documents, and perform complex multi-step workflows. By giving AI access to a complete desktop environment, Bytebot unlocks capabilities not possible with browser-only agents or API integrations, enabling complete task autonomy, document processing, and usage of real applications.

