Fabric

Fabric is an open-source framework for augmenting humans using AI. It provides a modular system for solving specific problems using a crowdsourced set of AI prompts that can be used anywhere.


Fabric is an open-source framework designed to augment humans using AI by organizing prompts by real-world tasks. It addresses the integration problem of AI by creating and organizing prompts for various tasks. Users can create, collect, and organize AI solutions in a single place for use in their favorite tools. Fabric also serves as a command-line interface for those focused on the terminal. It offers a wide range of features and capabilities, including support for multiple AI providers, internationalization, speech-to-text, AI reasoning, model management, web search, text-to-speech, desktop notifications, and more. The project aims to help humans flourish by leveraging AI technology to solve human problems and enhance creativity.

README:


Since the start of modern AI in late 2022 we've seen an extraordinary number of AI applications for accomplishing tasks. There are thousands of websites, chat-bots, mobile apps, and other interfaces for using all the different AI out there.

It's all really exciting and powerful, but it's not easy to integrate this functionality into our lives.

Fabric was created to address this by creating and organizing the fundamental units of AI—the prompts themselves!

Fabric organizes prompts by real-world task, allowing people to create, collect, and organize their most important AI solutions in a single place for use in their favorite tools. And if you're command-line focused, you can use Fabric itself as the interface!

Dear Users,

We've been doing so many exciting things here at Fabric that I wanted to give a quick summary to give you a sense of our development velocity!

Below are the new features and capabilities we've added (newest first):

- v1.4.311 (Sep 13, 2025) — More internationalization support: Adds de (German), fa (Persian / Farsi), fr (French), it (Italian), ja (Japanese), pt (Portuguese), zh (Chinese)

- v1.4.309 (Sep 9, 2025) — Comprehensive internationalization support: Includes English and Spanish locale files.

- v1.4.303 (Aug 29, 2025) — New Binary Releases: Linux ARM and Windows ARM targets. You can run Fabric on the Raspberry Pi and on your Windows Surface!

- v1.4.294 (Aug 20, 2025) — Venice AI Support: Added the Venice AI provider. Venice is a Privacy-First, Open-Source AI provider. See their "About Venice" page for details.

- v1.4.291 (Aug 18, 2025) — Speech To Text: Add OpenAI speech-to-text support with --transcribe-file, --transcribe-model, and --split-media-file flags.

- v1.4.287 (Aug 16, 2025) — AI Reasoning: Add Thinking to Gemini models and introduce readme_updates Python script

- v1.4.286 (Aug 14, 2025) — AI Reasoning: Introduce Thinking Config Across Anthropic and OpenAI Providers

- v1.4.285 (Aug 13, 2025) — Extended Context: Enable One Million Token Context Beta Feature for Sonnet-4

- v1.4.284 (Aug 12, 2025) — Easy Shell Completions Setup: Introduce One-Liner Curl Install for Completions

- v1.4.283 (Aug 12, 2025) — Model Management: Add Vendor Selection Support for Models

- v1.4.282 (Aug 11, 2025) — Enhanced Shell Completions: Enhanced Shell Completions for Fabric CLI Binaries

- v1.4.281 (Aug 11, 2025) — Gemini Search Tool: Add Web Search Tool Support for Gemini Models

- v1.4.278 (Aug 9, 2025) — Enhance YouTube Transcripts: Enhance YouTube Support with Custom yt-dlp Arguments

- v1.4.277 (Aug 8, 2025) — Desktop Notifications: Add cross-platform desktop notifications to Fabric CLI

- v1.4.274 (Aug 7, 2025) — Claude 4.1 Added: Add Support for Claude Opus 4.1 Model

- v1.4.271 (Jul 28, 2025) — AI Summarized Release Notes: Enable AI summary updates for GitHub releases

- v1.4.268 (Jul 26, 2025) — Gemini TTS Voice Selection: add Gemini TTS voice selection and listing functionality

- v1.4.267 (Jul 26, 2025) — Text-to-Speech: Update Gemini Plugin to New SDK with TTS Support

- v1.4.258 (Jul 17, 2025) — Onboarding Improved: Add startup check to initialize config and .env file automatically

- v1.4.257 (Jul 17, 2025) — OpenAI Routing Control: Introduce CLI Flag to Disable OpenAI Responses API

- v1.4.252 (Jul 16, 2025) — Hide Thinking Block: Optional Hiding of Model Thinking Process with Configurable Tags

- v1.4.246 (Jul 14, 2025) — Automatic ChangeLog Updates: Add AI-powered changelog generation with high-performance Go tool and comprehensive caching

- v1.4.245 (Jul 11, 2025) — Together AI: Together AI Support with OpenAI Fallback Mechanism Added

- v1.4.232 (Jul 6, 2025) — Add Custom: Add Custom Patterns Directory Support

- v1.4.231 (Jul 5, 2025) — OAuth Auto-Auth: OAuth Authentication Support for Anthropic (Use your Max Subscription)

- v1.4.230 (Jul 5, 2025) — Model Management: Add advanced image generation parameters for OpenAI models with four new CLI flags

- v1.4.227 (Jul 4, 2025) — Add Image: Add Image Generation Support to Fabric

- v1.4.226 (Jul 4, 2025) — Web Search: OpenAI Plugin Now Supports Web Search Functionality

- v1.4.225 (Jul 4, 2025) — Web Search: Runtime Web Search Control via Command-Line --search Flag

- v1.4.224 (Jul 1, 2025) — Add code_review: Add code_review pattern and updates in Pattern_Descriptions

- v1.4.222 (Jul 1, 2025) — OpenAI Plugin: OpenAI Plugin Migrates to New Responses API

- v1.4.218 (Jun 27, 2025) — Model Management: Add Support for OpenAI Search and Research Model Variants

- v1.4.217 (Jun 26, 2025) — New YouTube: New YouTube Transcript Endpoint Added to REST API

- v1.4.212 (Jun 23, 2025) — Add Langdock: Add Langdock AI and enhance generic OpenAI compatible support

- v1.4.211 (Jun 19, 2025) — REST API: REST API and Web UI Now Support Dynamic Pattern Variables

- v1.4.210 (Jun 18, 2025) — Add Citations: Add Citation Support to Perplexity Response

- v1.4.208 (Jun 17, 2025) — Add Perplexity: Add Perplexity AI Provider with Token Limits Support

- v1.4.203 (Jun 14, 2025) — Add Amazon Bedrock: Add support for Amazon Bedrock

These features represent our commitment to making Fabric the most powerful and flexible AI augmentation framework available!

Keep in mind that many of the intro videos were recorded when Fabric was Python-based, so use the current install instructions below.

- fabric

- What and why

- Updates

- Intro videos

- Navigation

- Changelog

- Philosophy

- Installation

- Usage

- Our approach to prompting

- Examples

- Just use the Patterns

- Custom Patterns

- Helper Apps

- pbpaste

- Web Interface

- Meta

Fabric is evolving rapidly.

Stay current with the latest features by reviewing the CHANGELOG for all recent changes.

AI isn't a thing; it's a magnifier of a thing. And that thing is human creativity.

We believe the purpose of technology is to help humans flourish, so when we talk about AI we start with the human problems we want to solve.

Our approach is to break problems into individual pieces (see below) and then apply AI to them one at a time. See below for some examples.

Prompts are good for this, but the biggest challenge I faced in 2023, which still exists today, is the sheer number of AI prompts out there. We all have prompts that are useful, but it's hard to discover new ones, know whether they are good, and manage different versions of the ones we like.

One of fabric's primary features is helping people collect and integrate prompts, which we call Patterns, into various parts of their lives.

Fabric has Patterns for all sorts of life and work activities, including:

- Extracting the most interesting parts of YouTube videos and podcasts

- Writing an essay in your own voice with just an idea as an input

- Summarizing opaque academic papers

- Creating perfectly matched AI art prompts for a piece of writing

- Rating the quality of content to see if you want to read/watch the whole thing

- Getting summaries of long, boring content

- Explaining code to you

- Turning bad documentation into usable documentation

- Creating social media posts from any content input

- And a million more…

Unix/Linux/macOS:

curl -fsSL https://raw.githubusercontent.com/danielmiessler/fabric/main/scripts/installer/install.sh | bash

Windows PowerShell:

iwr -useb https://raw.githubusercontent.com/danielmiessler/fabric/main/scripts/installer/install.ps1 | iex

See scripts/installer/README.md for custom installation options and troubleshooting.

The latest release binary archives and their expected SHA256 hashes can be found at https://github.com/danielmiessler/fabric/releases/latest

NOTE: Installing with the Homebrew or Arch Linux package managers makes fabric available as fabric-ai. To account for this, add the following alias to your shell startup files:

alias fabric='fabric-ai'

macOS (Homebrew):

brew install fabric-ai

Arch Linux (AUR):

yay -S fabric-ai

Use the official Microsoft supported Winget tool:

winget install danielmiessler.Fabric

To install Fabric, make sure Go is installed, and then run the following command.

# Install Fabric directly from the repo

go install github.com/danielmiessler/fabric/cmd/fabric@latest

Run Fabric using pre-built Docker images:

# Use latest image from Docker Hub

docker run --rm -it kayvan/fabric:latest --version

# Use specific version from GHCR

docker run --rm -it ghcr.io/ksylvan/fabric:v1.4.305 --version

# Run setup (first time)

mkdir -p $HOME/.fabric-config

docker run --rm -it -v $HOME/.fabric-config:/root/.config/fabric kayvan/fabric:latest --setup

# Use Fabric with your patterns

docker run --rm -it -v $HOME/.fabric-config:/root/.config/fabric kayvan/fabric:latest -p summarize

# Run the REST API server

docker run --rm -it -p 8080:8080 -v $HOME/.fabric-config:/root/.config/fabric kayvan/fabric:latest --serve

Images available at:

- Docker Hub: kayvan/fabric

- GHCR: ksylvan/fabric

See scripts/docker/README.md for building custom images and advanced configuration.
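If you use the Docker image regularly, a small shell function saves you from retyping the volume mount. This is a minimal sketch and is not shipped with Fabric. The function name fabric_docker is hypothetical, and the function assumes the Docker Hub image and the $HOME/.fabric-config mount shown above. It passes -i but deliberately omits -t. Docker refuses to allocate a TTY when stdin is a pipe, and that would break piping content into a pattern.

```shell
# Hypothetical convenience wrapper (not part of Fabric) around the
# Docker Hub image and config mount used in the examples above.
fabric_docker() {
  # -i keeps stdin open so piped text reaches Fabric inside the container.
  # -t is omitted: Docker errors out ("the input device is not a TTY")
  # when asked for a TTY while stdin is a pipe.
  docker run --rm -i \
    -v "$HOME/.fabric-config:/root/.config/fabric" \
    kayvan/fabric:latest "$@"
}
```

With the function defined, pbpaste | fabric_docker -p summarize behaves like the local fabric -p summarize, provided --setup has already been run against the same config directory.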

You may need to set some environment variables in your ~/.bashrc on Linux or ~/.zshrc on macOS to be able to run the fabric command. Here is an example of what you can add:

For Intel-based Macs or Linux:

# Golang environment variables

export GOROOT=/usr/local/go

export GOPATH=$HOME/go

# Update PATH to include GOPATH and GOROOT binaries

export PATH=$GOPATH/bin:$GOROOT/bin:$HOME/.local/bin:$PATH

For Apple Silicon-based Macs:

# Golang environment variables

export GOROOT=$(brew --prefix go)/libexec

export GOPATH=$HOME/go

export PATH=$GOPATH/bin:$GOROOT/bin:$HOME/.local/bin:$PATH

Now run the following command:

# Run the setup to set up your directories and keys

fabric --setup

If everything works, you are good to go.
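If the shell reports that fabric cannot be found after go install, the usual cause is that Go's binary directory is missing from PATH. The check below is a minimal sketch, and the helper name path_has_dir is hypothetical. It relies on standard Go behavior: go install writes to $GOBIN if that is set, and otherwise to $GOPATH/bin, with GOPATH defaulting to $HOME/go. It reads only environment variables, so it assumes a single GOPATH entry and does not see settings stored with go env -w.

```shell
# Return success if the directory given as $1 is an entry in $PATH.
path_has_dir() {
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# go install uses $GOBIN if set, else $GOPATH/bin (GOPATH defaults to $HOME/go).
# Assumes a single GOPATH entry; values saved via `go env -w` are not read here.
go_bin_dir="${GOBIN:-${GOPATH:-$HOME/go}/bin}"

if path_has_dir "$go_bin_dir"; then
  echo "ok: $go_bin_dir is on PATH"
else
  echo "warning: $go_bin_dir is not on PATH; add it in your shell startup file" >&2
fi
```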

You can configure specific models for individual patterns using environment variables like FABRIC_MODEL_PATTERN_NAME=vendor|model. This makes it easy to maintain these per-pattern model mappings in your shell startup files.
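Because | is the shell's pipe operator, you must quote the value when you set one of these variables. The sketch below shows the quoting and how a vendor|model value splits into its two halves. The vendor and model names are examples only; fabric --listvendors and fabric -L show the names your setup accepts. The helper fabric_model_var is hypothetical. It assumes the variable name is FABRIC_MODEL_ followed by the pattern name in upper case, with hyphens mapped to underscores because shell variable names cannot contain -. Check that convention against your Fabric version.

```shell
# Quote the value: an unquoted | would be parsed by the shell as a pipe.
# 'OpenAI|gpt-4o' is an example vendor|model pair, not a recommendation.
export FABRIC_MODEL_SUMMARIZE='OpenAI|gpt-4o'

# Hypothetical helper. ASSUMPTION: the variable name is FABRIC_MODEL_ plus the
# pattern name upper-cased, with '-' mapped to '_' (not allowed in shell names).
fabric_model_var() {
  printf 'FABRIC_MODEL_%s\n' "$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]' | sed 's/-/_/g')"
}

# Split a vendor|model value at the first '|'.
value="$FABRIC_MODEL_SUMMARIZE"
vendor="${value%%"|"*}"   # text before the first '|'
model="${value#*"|"}"     # text after the first '|'
echo "$(fabric_model_var summarize): vendor=$vendor model=$model"
```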

To add aliases for all your patterns and use them directly as commands (for example, summarize instead of fabric --pattern summarize), add the following to your .zshrc or .bashrc file. You can also optionally set the FABRIC_ALIAS_PREFIX environment variable beforehand if you'd prefer all the fabric aliases to start with the same prefix.

# Loop through all files in the ~/.config/fabric/patterns directory

for pattern_file in "$HOME"/.config/fabric/patterns/*; do

# Get the base name of the file (i.e., remove the directory path)

pattern_name="$(basename "$pattern_file")"

alias_name="${FABRIC_ALIAS_PREFIX:-}${pattern_name}"

# Create an alias in the form: alias pattern_name="fabric --pattern pattern_name"

alias_command="alias $alias_name='fabric --pattern $pattern_name'"

# Evaluate the alias command to add it to the current shell

eval "$alias_command"

done

yt() {

if [ "$#" -eq 0 ] || [ "$#" -gt 2 ]; then

echo "Usage: yt [-t | --timestamps] youtube-link"

echo "Use the '-t' flag to get the transcript with timestamps."

return 1

fi

transcript_flag="--transcript"

if [ "$1" = "-t" ] || [ "$1" = "--timestamps" ]; then

transcript_flag="--transcript-with-timestamps"

shift

fi

local video_link="$1"

fabric -y "$video_link" $transcript_flag

}

You can add the code below to define the equivalent aliases in PowerShell. Run notepad $PROFILE inside a PowerShell window to open your profile:

# Path to the patterns directory

$patternsPath = Join-Path $HOME ".config/fabric/patterns"

foreach ($patternDir in Get-ChildItem -Path $patternsPath -Directory) {

# Prepend FABRIC_ALIAS_PREFIX if set; otherwise use an empty string

# (the ?? null-coalescing operator requires PowerShell 7 or later)

$prefix = $env:FABRIC_ALIAS_PREFIX ?? ''

$patternName = "$($patternDir.Name)"

$aliasName = "$prefix$patternName"

# Dynamically define a function for each pattern

$functionDefinition = @"

function $aliasName {

[CmdletBinding()]

param(

[Parameter(ValueFromPipeline = `$true)]

[string] `$InputObject,

[Parameter(ValueFromRemainingArguments = `$true)]

[String[]] `$patternArgs

)

begin {

# Initialize an array to collect pipeline input

`$collector = @()

}

process {

# Collect pipeline input objects

if (`$InputObject) {

`$collector += `$InputObject

}

}

end {

# Join all pipeline input into a single string, separated by newlines

`$pipelineContent = `$collector -join "`n"

# If there's pipeline input, include it in the call to fabric

if (`$pipelineContent) {

`$pipelineContent | fabric --pattern $patternName `$patternArgs

} else {

# No pipeline input; just call fabric with the additional args

fabric --pattern $patternName `$patternArgs

}

}

}

"@

# Add the function to the current session

Invoke-Expression $functionDefinition

}

# Define the 'yt' function as well

function yt {

[CmdletBinding()]

param(

[Parameter()]

[Alias("timestamps")]

[switch]$t,

[Parameter(Position = 0, ValueFromPipeline = $true)]

[string]$videoLink

)

begin {

$transcriptFlag = "--transcript"

if ($t) {

$transcriptFlag = "--transcript-with-timestamps"

}

}

process {

if (-not $videoLink) {

Write-Error "Usage: yt [-t | --timestamps] youtube-link"

return

}

}

end {

if ($videoLink) {

# Execute and allow output to flow through the pipeline

fabric -y $videoLink $transcriptFlag

}

}

}

This also creates a yt alias, so you can run yt https://www.youtube.com/watch?v=4b0iet22VIk to get a video's transcript (add -t to include timestamps).
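Before you add the bash alias loop to your startup file, you can check which names FABRIC_ALIAS_PREFIX will produce. This is a sketch with a hypothetical helper name, preview_fabric_aliases. It only reads the patterns directory and defines nothing. It also skips the unexpanded glob that bash leaves behind when the directory is empty, a case the loop itself does not guard against.

```shell
# Hypothetical helper: print the alias names the bash loop would define,
# one per line, without defining anything.
preview_fabric_aliases() {
  local dir="${1:-$HOME/.config/fabric/patterns}"
  local pattern_file
  for pattern_file in "$dir"/*; do
    # With no matches, the glob stays literal; skip it.
    [ -e "$pattern_file" ] || continue
    printf '%s%s\n' "${FABRIC_ALIAS_PREFIX:-}" "$(basename "$pattern_file")"
  done
}
```

For example, FABRIC_ALIAS_PREFIX=f_ preview_fabric_aliases lists f_summarize, f_extract_wisdom, and so on for your installed patterns.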

If, in addition to the above aliases, you would like the option to save output to your favorite Markdown note vault, such as Obsidian, add the following to your .zshrc or .bashrc file instead of the above:

# Define the base directory for Obsidian notes

obsidian_base="/path/to/obsidian"

# Loop through all files in the ~/.config/fabric/patterns directory

for pattern_file in ~/.config/fabric/patterns/*; do

# Get the base name of the file (i.e., remove the directory path)

pattern_name=$(basename "$pattern_file")

# Remove any existing alias with the same name

unalias "$pattern_name" 2>/dev/null

# Define a function dynamically for each pattern

eval "

$pattern_name() {

local title=\$1

local date_stamp=\$(date +'%Y-%m-%d')

local output_path=\"\$obsidian_base/\${date_stamp}-\${title}.md\"

# Check if a title was provided

if [ -n \"\$title\" ]; then

# If a title is provided, use the output path

fabric --pattern \"$pattern_name\" -o \"\$output_path\"

else

# If no title is provided, use --stream

fabric --pattern \"$pattern_name\" --stream

fi

}

"

done

This lets you use the patterns as aliases, as above: for example, summarize instead of fabric --pattern summarize --stream. However, if you pass an extra argument, as in summarize "my_article_title", your output will be saved in the directory you set in obsidian_base="/path/to/obsidian", using the format YYYY-MM-DD-my_article_title.md, where the date is generated automatically.

You can change the date format by editing the format string in the date_stamp line.
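You can pull the path logic out of that function into a small helper if you want to check or change the naming scheme in one place. This is a hypothetical sketch; obsidian_output_path is not part of Fabric. The optional third argument overrides the date, which is useful for testing or for trying a different date_stamp format.

```shell
# Hypothetical helper mirroring output_path in the Obsidian function above.
# $1 = vault directory, $2 = title, $3 = optional date string (defaults to today).
obsidian_output_path() {
  local base="$1" title="$2"
  local date_stamp="${3:-$(date +'%Y-%m-%d')}"
  printf '%s/%s-%s.md\n' "$base" "$date_stamp" "$title"
}

# Same layout as above: /path/to/obsidian/<YYYY-MM-DD>-my_article_title.md
obsidian_output_path /path/to/obsidian my_article_title

# A compact date instead: /path/to/obsidian/<YYYYMMDD>-my_article_title.md
obsidian_output_path /path/to/obsidian my_article_title "$(date +'%Y%m%d')"
```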

If you have the Legacy (Python) version installed and want to migrate to the Go version, here's how you do it. It's basically two steps: 1) uninstall the Python version, and 2) install the Go version.

# Uninstall Legacy Fabric

pipx uninstall fabric

# Clear any old Fabric aliases (check your .bashrc, .zshrc, etc.)

# Install the Go version

go install github.com/danielmiessler/fabric/cmd/fabric@latest

# Run setup for the new version. Important because things have changed

fabric --setup

Then set your environment variables as shown above.

The great thing about Go is that it's super easy to upgrade. Just run the same command you used to install it in the first place and you'll always get the latest version.

go install github.com/danielmiessler/fabric/cmd/fabric@latest

Fabric provides shell completion scripts for Zsh, Bash, and Fish, which make the CLI easier to use by adding tab completion for commands and options.

You can install completions directly via a one-liner:

curl -fsSL https://raw.githubusercontent.com/danielmiessler/Fabric/refs/heads/main/completions/setup-completions.sh | sh

Optional variants:

# Dry-run (see actions without changing your system)

curl -fsSL https://raw.githubusercontent.com/danielmiessler/Fabric/refs/heads/main/completions/setup-completions.sh | sh -s -- --dry-run

# Override the download source (advanced)

FABRIC_COMPLETIONS_BASE_URL="https://raw.githubusercontent.com/danielmiessler/Fabric/refs/heads/main/completions" \

sh -c "$(curl -fsSL https://raw.githubusercontent.com/danielmiessler/Fabric/refs/heads/main/completions/setup-completions.sh)"

To enable Zsh completion:

# Copy the completion file to a directory in your $fpath

mkdir -p ~/.zsh/completions

cp completions/_fabric ~/.zsh/completions/

# Add the directory to fpath in your .zshrc before compinit

echo 'fpath=(~/.zsh/completions $fpath)' >> ~/.zshrc

echo 'autoload -Uz compinit && compinit' >> ~/.zshrc

To enable Bash completion:

# Source the completion script in your .bashrc

echo 'source /path/to/fabric/completions/fabric.bash' >> ~/.bashrc

# Or copy to the system-wide bash completion directory

sudo cp completions/fabric.bash /etc/bash_completion.d/

To enable Fish completion:

# Copy the completion file to the fish completions directory

mkdir -p ~/.config/fish/completions

cp completions/fabric.fish ~/.config/fish/completions/

Once you have it all set up, here's how to use it.

fabric -h

Usage:

fabric [OPTIONS]

Application Options:

-p, --pattern= Choose a pattern from the available patterns

-v, --variable= Values for pattern variables, e.g. -v=#role:expert -v=#points:30

-C, --context= Choose a context from the available contexts

--session= Choose a session from the available sessions

-a, --attachment= Attachment path or URL (e.g. for OpenAI image recognition messages)

-S, --setup Run setup for all reconfigurable parts of fabric

-t, --temperature= Set temperature (default: 0.7)

-T, --topp= Set top P (default: 0.9)

-s, --stream Stream

-P, --presencepenalty= Set presence penalty (default: 0.0)

-r, --raw Use the defaults of the model without sending chat options (like

temperature etc.) and use the user role instead of the system role for

patterns.

-F, --frequencypenalty= Set frequency penalty (default: 0.0)

-l, --listpatterns List all patterns

-L, --listmodels List all available models

-x, --listcontexts List all contexts

-X, --listsessions List all sessions

-U, --updatepatterns Update patterns

-c, --copy Copy to clipboard

-m, --model= Choose model

-V, --vendor= Specify vendor for chosen model (e.g., -V "LM Studio" -m openai/gpt-oss-20b)

--modelContextLength= Model context length (only affects ollama)

-o, --output= Output to file

--output-session Output the entire session (also a temporary one) to the output file

-n, --latest= Number of latest patterns to list (default: 0)

-d, --changeDefaultModel Change default model

-y, --youtube= YouTube video or play list "URL" to grab transcript, comments from it

and send to chat or print it put to the console and store it in the

output file

--playlist Prefer playlist over video if both ids are present in the URL

--transcript Grab transcript from YouTube video and send to chat (it is used per

default).

--transcript-with-timestamps Grab transcript from YouTube video with timestamps and send to chat

--comments Grab comments from YouTube video and send to chat

--metadata Output video metadata

-g, --language= Specify the Language Code for the chat, e.g. -g=en -g=zh

-u, --scrape_url= Scrape website URL to markdown using Jina AI

-q, --scrape_question= Search question using Jina AI

-e, --seed= Seed to be used for LMM generation

-w, --wipecontext= Wipe context

-W, --wipesession= Wipe session

--printcontext= Print context

--printsession= Print session

--readability Convert HTML input into a clean, readable view

--input-has-vars Apply variables to user input

--no-variable-replacement Disable pattern variable replacement

--dry-run Show what would be sent to the model without actually sending it

--serve Serve the Fabric Rest API

--serveOllama Serve the Fabric Rest API with ollama endpoints

--address= The address to bind the REST API (default: :8080)

--api-key= API key used to secure server routes

--config= Path to YAML config file

--version Print current version

--listextensions List all registered extensions

--addextension= Register a new extension from config file path

--rmextension= Remove a registered extension by name

--strategy= Choose a strategy from the available strategies

--liststrategies List all strategies

--listvendors List all vendors

--shell-complete-list Output raw list without headers/formatting (for shell completion)

--search Enable web search tool for supported models (Anthropic, OpenAI, Gemini)

--search-location= Set location for web search results (e.g., 'America/Los_Angeles')

--image-file= Save generated image to specified file path (e.g., 'output.png')

--image-size= Image dimensions: 1024x1024, 1536x1024, 1024x1536, auto (default: auto)

--image-quality= Image quality: low, medium, high, auto (default: auto)

--image-compression= Compression level 0-100 for JPEG/WebP formats (default: not set)

--image-background= Background type: opaque, transparent (default: opaque, only for

PNG/WebP)

--suppress-think Suppress text enclosed in thinking tags

--think-start-tag= Start tag for thinking sections (default: <think>)

--think-end-tag= End tag for thinking sections (default: </think>)

--disable-responses-api Disable OpenAI Responses API (default: false)

--voice= TTS voice name for supported models (e.g., Kore, Charon, Puck)

(default: Kore)

--list-gemini-voices List all available Gemini TTS voices

--notification Send desktop notification when command completes

--notification-command= Custom command to run for notifications (overrides built-in

notifications)

--yt-dlp-args= Additional arguments to pass to yt-dlp (e.g. '--cookies-from-browser brave')

--thinking= Set reasoning/thinking level (e.g., off, low, medium, high, or

numeric tokens for Anthropic or Google Gemini)

--debug= Set debug level (0: off, 1: basic, 2: detailed, 3: trace)

Help Options:

-h, --help Show this help message

Use the --debug flag to control runtime logging:

- 0: off (default)

- 1: basic debug info

- 2: detailed debugging

- 3: trace level

Fabric Patterns are different from most prompts you'll see.

- First, we use Markdown to help ensure maximum readability and editability. This helps not only the creator make a good Pattern but also anyone who wants to deeply understand what it does. Importantly, that includes the AI you're sending it to!

Here's an example of a Fabric Pattern.

https://github.com/danielmiessler/Fabric/blob/main/data/patterns/extract_wisdom/system.md

- Next, we are extremely clear in our instructions, and we use the Markdown structure to emphasize what we want the AI to do, and in what order.

- And finally, we tend to use the System section of the prompt almost exclusively. In over a year of being heads-down with this stuff, we've just seen more efficacy from doing that. If that changes, or we're shown data that says otherwise, we will adjust.

The following examples use the macOS pbpaste command to paste from the clipboard. See the pbpaste section below for Windows and Linux alternatives.
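If you want these examples to work unchanged on every machine, one option is to add a small shim to your shell startup file that defines pbpaste only where it is missing. This is a sketch, not part of Fabric. The name clipboard_paste_cmd is hypothetical, and the Linux branch assumes xclip is installed as described in the pbpaste section. The Windows branch assumes a Git Bash or MSYS-style shell with powershell.exe on PATH.

```shell
# Hypothetical helper: print a clipboard-read command for a `uname -s` value.
clipboard_paste_cmd() {
  case "$1" in
    Darwin) echo "pbpaste" ;;
    Linux) echo "xclip -selection clipboard -o" ;;   # needs xclip installed
    MINGW*|MSYS*|CYGWIN*) echo "powershell.exe -NoProfile -Command Get-Clipboard" ;;
    *) return 1 ;;                                    # unknown platform
  esac
}

# Define pbpaste only when it is missing (macOS keeps its built-in command),
# and never wrap pbpaste in itself.
if ! command -v pbpaste >/dev/null 2>&1; then
  if paste_cmd="$(clipboard_paste_cmd "$(uname -s)")" && [ "$paste_cmd" != "pbpaste" ]; then
    eval "pbpaste() { $paste_cmd; }"
  fi
fi
```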

Now let's look at some things you can do with Fabric.

- Run the summarize Pattern on input from stdin, in this case the body of an article.

pbpaste | fabric --pattern summarize

- Run the analyze_claims Pattern with the --stream option to get immediate, streaming results.

pbpaste | fabric --stream --pattern analyze_claims

- Run the extract_wisdom Pattern with the --stream option to get immediate, streaming results from any YouTube video (much like in the original introduction video).

fabric -y "https://youtube.com/watch?v=uXs-zPc63kM" --stream --pattern extract_wisdom

- Create patterns: save your pattern as a system.md file in ~/.config/fabric/patterns/[yourpatternname]/.

- Run the analyze_claims pattern on a website. Fabric uses Jina AI to scrape the URL into Markdown before sending it to the model.

fabric -u https://github.com/danielmiessler/fabric/ -p analyze_claims

If you're not looking to do anything fancy, and you just want a lot of great prompts, you can navigate to the /patterns directory and start exploring!

We hope that if you used nothing else from Fabric, the Patterns by themselves will make the project useful.

You can use any of the Patterns you see there in any AI application that you have, whether that's ChatGPT or some other app or website. Our plan and prediction is that people will soon be sharing many more than those we've published, and they will be way better than ours.

The wisdom of crowds for the win.

Fabric also implements prompt strategies like "Chain of Thought" or "Chain of Draft" which can be used in addition to the basic patterns.

See the Thinking Faster by Writing Less paper and the Thought Generation section of Learn Prompting for examples of prompt strategies.

Each strategy is available as a small JSON file in the /strategies directory.

The prompt modification of the strategy is applied to the system prompt and passed on to the LLM in the chat session.

Use fabric -S and select the option to install the strategies in your ~/.config/fabric directory.

You may want to use Fabric to create your own custom Patterns—but not share them with others. No problem!

Fabric now supports a dedicated custom patterns directory that keeps your personal patterns separate from the built-in ones. This means your custom patterns won't be overwritten when you update Fabric's built-in patterns.

- Run the Fabric setup:

fabric --setup

- Select the "Custom Patterns" option from the Tools menu and enter your desired directory path (e.g., ~/my-custom-patterns).

- Fabric will automatically create the directory if it does not exist.

- Create your custom pattern directory structure:

mkdir -p ~/my-custom-patterns/my-analyzer

- Create your pattern file:

echo "You are an expert analyzer of ..." > ~/my-custom-patterns/my-analyzer/system.md

- Use your custom pattern:

fabric --pattern my-analyzer "analyze this text"

- Priority System: Custom patterns take precedence over built-in patterns with the same name

- Seamless Integration: Custom patterns appear in fabric --listpatterns alongside built-in ones

- Update Safe: Your custom patterns are never affected by fabric --updatepatterns

- Private by Default: Custom patterns remain private unless you explicitly share them

Your custom patterns are completely private and won't be affected by Fabric updates!
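You can wrap the directory-and-file steps for creating a custom pattern in a small shell function. This helper is hypothetical (new_custom_pattern is not a Fabric command). It assumes the layout shown in those steps, one directory per pattern containing a system.md, and it refuses to overwrite an existing pattern.

```shell
# Hypothetical helper: create <patterns_dir>/<name>/system.md with a prompt.
# Usage: new_custom_pattern <patterns_dir> <name> <prompt text>
new_custom_pattern() {
  local base="$1" name="$2" prompt="$3"
  local file="$base/$name/system.md"
  if [ -e "$file" ]; then
    echo "refusing to overwrite $file" >&2
    return 1
  fi
  mkdir -p "$base/$name" && printf '%s\n' "$prompt" > "$file"
}
```

For example, new_custom_pattern ~/my-custom-patterns my-analyzer "You are an expert analyzer of ..." creates the same file as the manual steps, after which fabric --pattern my-analyzer works as shown.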

Fabric also makes use of some core helper apps (tools) to make it easier to integrate with your various workflows. Here are some examples:

to_pdf is a helper command that converts LaTeX files to PDF format. You can use it like this:

to_pdf input.tex

This will create a PDF file from the input LaTeX file in the same directory.

You can also use it with stdin which works perfectly with the write_latex pattern:

echo "ai security primer" | fabric --pattern write_latex | to_pdf

This will create a PDF file named output.pdf in the current directory.

To install to_pdf, use the same method you used to install Fabric, just with a different package path:

go install github.com/danielmiessler/fabric/cmd/to_pdf@latest

Make sure you have a LaTeX distribution (like TeX Live or MiKTeX) installed on your system, as to_pdf requires pdflatex to be available in your system's PATH.
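Because to_pdf depends on pdflatex being on PATH, a quick preflight check can save you a confusing failure. This is a minimal sketch, and require_cmds is a hypothetical helper name. It only checks that the commands exist, not that your LaTeX distribution is complete.

```shell
# Hypothetical helper: succeed only if every named command is on PATH,
# reporting each missing one on stderr.
require_cmds() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, require_cmds pdflatex to_pdf && to_pdf input.tex runs the conversion only when both tools are installed.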

code_helper is used in conjunction with the create_coding_feature pattern.

It generates a JSON representation of a directory of code that can be fed into an AI model with instructions to create a new feature or edit the code in a specified way.

See the Create Coding Feature Pattern README for details.

Install it first using:

go install github.com/danielmiessler/fabric/cmd/code_helper@latest

The examples use the macOS program pbpaste to paste content from the clipboard and pipe it into fabric as input. pbpaste is not available on Windows or Linux, but there are alternatives.

On Windows, you can use the PowerShell command Get-Clipboard from a PowerShell prompt. If you like, you can also alias it to pbpaste. Open your PowerShell profile by running notepad $PROFILE. For classic Windows PowerShell, this is ~\Documents\WindowsPowerShell\Microsoft.PowerShell_profile.ps1; for PowerShell Core, it is ~\Documents\PowerShell\Microsoft.PowerShell_profile.ps1. Then add the alias:

Set-Alias pbpaste Get-Clipboard

On Linux, you can use xclip -selection clipboard -o to paste from the clipboard. You will likely need to install xclip with your package manager. For Debian-based systems, including Ubuntu:

sudo apt update

sudo apt install xclip -y

You can also create an alias by editing ~/.bashrc or ~/.zshrc and adding:

alias pbpaste='xclip -selection clipboard -o'

Fabric now includes a built-in web interface that provides a GUI alternative to the command-line interface and an out-of-the-box website for those who want to get started with web development or blogging. You can use this app as a GUI for Fabric, a ready-to-go blog site, or a website template for your own projects.

The web/src/lib/content directory includes starter .obsidian/ and templates/ directories, allowing you to open up the web/src/lib/content/ directory as an Obsidian.md vault. You can place your posts in the posts directory when you're ready to publish.

The GUI can be installed by navigating to the web directory and using npm install, pnpm install, or your favorite package manager. Then simply run the development server to start the app.

You will need to run fabric in a separate terminal with the fabric --serve command.

From the fabric project web/ directory:

npm run dev

## or ##

pnpm run dev

## or your equivalent

To run the Streamlit user interface:

# Install required dependencies

pip install -r requirements.txt

# Or manually install dependencies

pip install streamlit pandas matplotlib seaborn numpy python-dotenv pyperclip

# Run the Streamlit app

streamlit run streamlit.py

The Streamlit UI provides a user-friendly interface for:

- Running and chaining patterns

- Managing pattern outputs

- Creating and editing patterns

- Analyzing pattern results

The Streamlit UI supports clipboard operations across different platforms:

- macOS: Uses pbcopy and pbpaste (built-in)

- Windows: Uses the pyperclip library (install with pip install pyperclip)

- Linux: Uses xclip (install with sudo apt-get install xclip or the equivalent for your Linux distribution)

Special thanks to the following people for their inspiration and contributions!

- Jonathan Dunn for being the absolute MVP dev on the project, including spearheading the new Go version, as well as the GUI! All this while also being a full-time medical doctor!

- Caleb Sima for pushing me over the edge of whether to make this a public project or not.

- Eugen Eisler and Frederick Ros for their invaluable contributions to the Go version.

- David Peters for his work on the web interface.

- Joel Parish for super useful input on the project's GitHub directory structure.

- Joseph Thacker for the idea of a -c context flag that adds pre-created context in the ./config/fabric/ directory to all Pattern queries.

- Jason Haddix for the idea of a stitch (chained Pattern) to filter content using a local model before sending it on to a cloud model, i.e., cleaning customer data using llama2 before sending it on to gpt-4 for analysis.

- Andre Guerra for assisting with numerous components to make things simpler and more maintainable.

fabric was created by Daniel Miessler in January of 2024.

Alternative AI tools for Fabric

Similar Open Source Tools

action_mcp

Action MCP is a powerful tool for managing and automating your cloud infrastructure. It provides a user-friendly interface to easily create, update, and delete resources on popular cloud platforms. With Action MCP, you can streamline your deployment process, reduce manual errors, and improve overall efficiency. The tool supports various cloud providers and offers a wide range of features to meet your infrastructure management needs. Whether you are a developer, system administrator, or DevOps engineer, Action MCP can help you simplify and optimize your cloud operations.

AutoAgent

AutoAgent is a fully-automated and zero-code framework that enables users to create and deploy LLM agents through natural language alone. It is a top performer on the GAIA Benchmark, equipped with a native self-managing vector database, and allows for easy creation of tools, agents, and workflows without any coding. AutoAgent seamlessly integrates with a wide range of LLMs and supports both function-calling and ReAct interaction modes. It is designed to be dynamic, extensible, customized, and lightweight, serving as a personal AI assistant.

shellChatGPT

ShellChatGPT is a shell wrapper for OpenAI's ChatGPT, DALL-E, Whisper, and TTS, featuring integration with LocalAI, Ollama, Gemini, Mistral, Groq, and GitHub Models. It provides text and chat completions, vision, reasoning, and audio models, voice-in and voice-out chatting mode, text editor interface, markdown rendering support, session management, instruction prompt manager, integration with various service providers, command line completion, file picker dialogs, color scheme personalization, stdin and text file input support, and compatibility with Linux, FreeBSD, macOS, and Termux for a responsive experience.

manifold

Manifold is a powerful platform for workflow automation using AI models. It supports text generation, image generation, and retrieval-augmented generation, integrating seamlessly with popular AI endpoints. Additionally, Manifold provides robust semantic search capabilities using PGVector combined with the SEFII engine. It is under active development and not production-ready.

hound

Hound is a security audit automation pipeline for AI-assisted code review that mirrors how expert auditors think, learn, and collaborate. It features graph-driven analysis, sessionized audits, provider-agnostic models, belief system and hypotheses, precise code grounding, and adaptive planning. The system employs a senior/junior auditor pattern where the Scout actively navigates the codebase and annotates knowledge graphs while the Strategist handles high-level planning and vulnerability analysis. Hound is optimized for small-to-medium sized projects like smart contract applications and is language-agnostic.

code2prompt

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks. It bridges the gap between codebases and LLMs by converting projects into AI-friendly prompts, enabling users to leverage AI for various software development tasks. The tool offers features like holistic codebase representation, intelligent source tree generation, customizable prompt templates, smart token management, .gitignore integration, flexible file handling, clipboard-ready output, multiple output options, and enhanced code readability.
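A short sketch can illustrate the core idea of turning a codebase into a single prompt: a source-tree listing followed by each file's contents. This is not code2prompt's implementation. `build_prompt` is a hypothetical name, and simple glob patterns stand in for real `.gitignore` semantics. Templates and token management are omitted.

```python
import fnmatch
import os
from pathlib import Path
from typing import Iterable, List


def _ignored(name: str, rel_path: str, patterns: List[str]) -> bool:
    """True if a file/dir name or its relative path matches any glob pattern."""
    return any(fnmatch.fnmatch(name, p) or fnmatch.fnmatch(rel_path, p) for p in patterns)


def build_prompt(root: str, ignore: Iterable[str] = ()) -> str:
    """Render a source-tree listing followed by each file's contents."""
    root_path = Path(root)
    patterns = list(ignore)
    files: List[Path] = []
    for dirpath, dirnames, filenames in os.walk(root_path):
        rel_dir = Path(dirpath).relative_to(root_path)
        # Prune ignored directories in place so os.walk never descends into them.
        dirnames[:] = sorted(
            d for d in dirnames if not _ignored(d, (rel_dir / d).as_posix(), patterns)
        )
        for name in sorted(filenames):
            rel = rel_dir / name
            if not _ignored(name, rel.as_posix(), patterns):
                files.append(rel)
    sections = ["Source tree:\n" + "\n".join(f.as_posix() for f in files)]
    for rel in files:
        body = (root_path / rel).read_text(encoding="utf-8", errors="replace")
        sections.append(f"--- {rel.as_posix()} ---\n{body}")
    return "\n\n".join(sections)
```

For instance, `build_prompt(".", ignore=[".git", "node_modules", "*.lock"])` would produce a single string that can be pasted into an LLM chat.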

RainbowGPT

RainbowGPT is a versatile tool that offers a range of functionalities, including Stock Analysis for financial decision-making, MySQL Management for database navigation, and integration of AI technologies like GPT-4 and ChatGLM3. It provides a user-friendly interface suitable for all skill levels, ensuring seamless information flow and continuous expansion of emerging technologies. The tool enhances adaptability, creativity, and insight, making it a valuable asset for various projects and tasks.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.

steel-browser

Steel is an open-source browser API designed for AI agents and applications, simplifying the process of building live web agents and browser automation tools. It serves as a core building block for a production-ready, containerized browser sandbox with features like stealth capabilities, text-to-markdown conversion, session management, a UI for session viewing/debugging, and full browser control through popular automation frameworks. Steel allows users to control, run, and manage a production-ready browser environment via a REST API, offering features such as full browser control, session management, proxy support, extension support, debugging tools, anti-detection mechanisms, resource management, and various browser tools. It aims to streamline complex browsing tasks programmatically, enabling users to focus on their AI applications while Steel handles the underlying complexity.

jina

Jina is a tool that allows users to build multimodal AI services and pipelines using cloud-native technologies. It provides a Pythonic experience for serving ML models and transitioning from local deployment to advanced orchestration frameworks like Docker-Compose, Kubernetes, or Jina AI Cloud. Users can build and serve models for any data type and deep learning framework, design high-performance services with easy scaling, serve LLM models while streaming their output, integrate with Docker containers via Executor Hub, and host on CPU/GPU using Jina AI Cloud. Jina also offers advanced orchestration and scaling capabilities, a smooth transition to the cloud, and easy scalability and concurrency features for applications. Users can deploy to their own cloud or system with Kubernetes and Docker Compose integration, and even deploy to JCloud for autoscaling and monitoring.

nodejs-todo-api-boilerplate

An LLM-powered code generation tool that relies on the built-in Node.js API TypeScript Template Project to easily generate clean, well-structured CRUD module code from a text description. It orchestrates 3 LLM micro-agents (`Developer`, `Troubleshooter` and `TestsFixer`) to generate code, fix compilation errors, and ensure passing E2E tests. The process includes module code generation, DB migration creation, seeding data, and running tests to validate output. By cycling through these steps, it guarantees consistent and production-ready CRUD code aligned with vertical slicing architecture.
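The generate-then-repair cycle described above can be sketched as a bounded loop. This is a hypothetical illustration, not the project's code. The three micro-agents are modeled as plain callables (`develop`, `troubleshoot`, `fix_tests`), and the compile and test steps are stubs returning a `CheckResult`. `max_rounds` is an assumed cap that makes the loop always terminate.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CheckResult:
    ok: bool
    errors: List[str]


def generate_with_repair(
    spec: str,
    develop: Callable[[str], str],
    compile_check: Callable[[str], CheckResult],
    troubleshoot: Callable[[str, List[str]], str],
    run_tests: Callable[[str], CheckResult],
    fix_tests: Callable[[str, List[str]], str],
    max_rounds: int = 3,
) -> str:
    """Generate code, then repair compile errors first and test failures second."""
    code = develop(spec)
    for _ in range(max_rounds):
        compiled = compile_check(code)
        if not compiled.ok:
            # The "Troubleshooter" role: fix compilation errors before testing.
            code = troubleshoot(code, compiled.errors)
            continue
        tested = run_tests(code)
        if tested.ok:
            return code
        # The "TestsFixer" role: repair code against failing E2E tests.
        code = fix_tests(code, tested.errors)
    raise RuntimeError(f"no passing code after {max_rounds} repair rounds")
```

Because compile errors are resolved before tests run, each round only spends a test run on code that builds.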

AutoDocs

AutoDocs by Sita is a tool designed to automate documentation for any repository. It parses the repository using tree-sitter and SCIP, constructs a code dependency graph, and generates repository-wide, dependency-aware documentation and summaries. It provides a FastAPI backend for ingestion/search and a Next.js web UI for chat and exploration. Additionally, it includes an MCP server for deep search capabilities. The tool aims to simplify the process of generating accurate and high-signal documentation for codebases.

bedrock-claude-chat

This repository is a sample chatbot using Claude, Anthropic's LLM, which is one of the foundation models provided by Amazon Bedrock for generative AI. It allows users to have basic conversations with the chatbot, personalize it with their own instructions and external knowledge, and analyze usage for each user/bot on the administrator dashboard. The chatbot supports various languages, including English, Japanese, Korean, Chinese, French, German, and Spanish. Deployment is straightforward and can be done via the command line or by using AWS CDK. The architecture is built on AWS managed services, eliminating the need for infrastructure management and ensuring scalability, reliability, and security.

RA.Aid

RA.Aid is an AI software development agent powered by `aider` and advanced reasoning models like `o1`. It combines `aider`'s code editing capabilities with LangChain's agent-based task execution framework to provide an intelligent assistant for research, planning, and implementation of multi-step development tasks. It handles complex programming tasks by breaking them down into manageable steps, running shell commands automatically, and leveraging expert reasoning models like OpenAI's o1. RA.Aid is designed for everyday software development, offering features such as multi-step task planning, automated command execution, and the ability to handle complex programming tasks beyond single-shot code edits.

xlang

XLang™ is a cutting-edge language designed for AI and IoT applications, offering exceptional dynamism and performance. It excels in distributed computing and integrates seamlessly with popular languages like C++, Python, and JavaScript. It is notably efficient, running 3 to 5 times faster than Python in AI and deep learning contexts. It features an optimized tensor computing architecture for constructing neural networks through tensor expressions. It also automates tensor data flow graph generation and compilation for specific targets, improving GPU performance by 6 to 10 times in CUDA environments.

For similar tasks

fabric

Fabric is an open-source framework for augmenting humans using AI. It provides a structured approach to breaking down problems into individual components and applying AI to them one at a time. Fabric includes a collection of pre-defined Patterns (prompts) that can be used for a variety of tasks, such as extracting the most interesting parts of YouTube videos and podcasts, writing essays, summarizing academic papers, creating AI art prompts, and more. Users can also create their own custom Patterns. Fabric is designed to be easy to use, with a command-line interface and a variety of helper apps. It is also extensible, allowing users to integrate it with their own AI applications and infrastructure.

firecrawl

Firecrawl is an API service that empowers AI applications with clean data from any website. It features advanced scraping, crawling, and data extraction capabilities. The repository is still in development, integrating custom modules into the mono repo. Users can run it locally but it's not fully ready for self-hosted deployment yet. Firecrawl offers powerful capabilities like scraping, crawling, mapping, searching, and extracting structured data from single pages, multiple pages, or entire websites with AI. It supports various formats, actions, and batch scraping. The tool is designed to handle proxies, anti-bot mechanisms, dynamic content, media parsing, change tracking, and more. Firecrawl is available as an open-source project under the AGPL-3.0 license, with additional features offered in the cloud version.

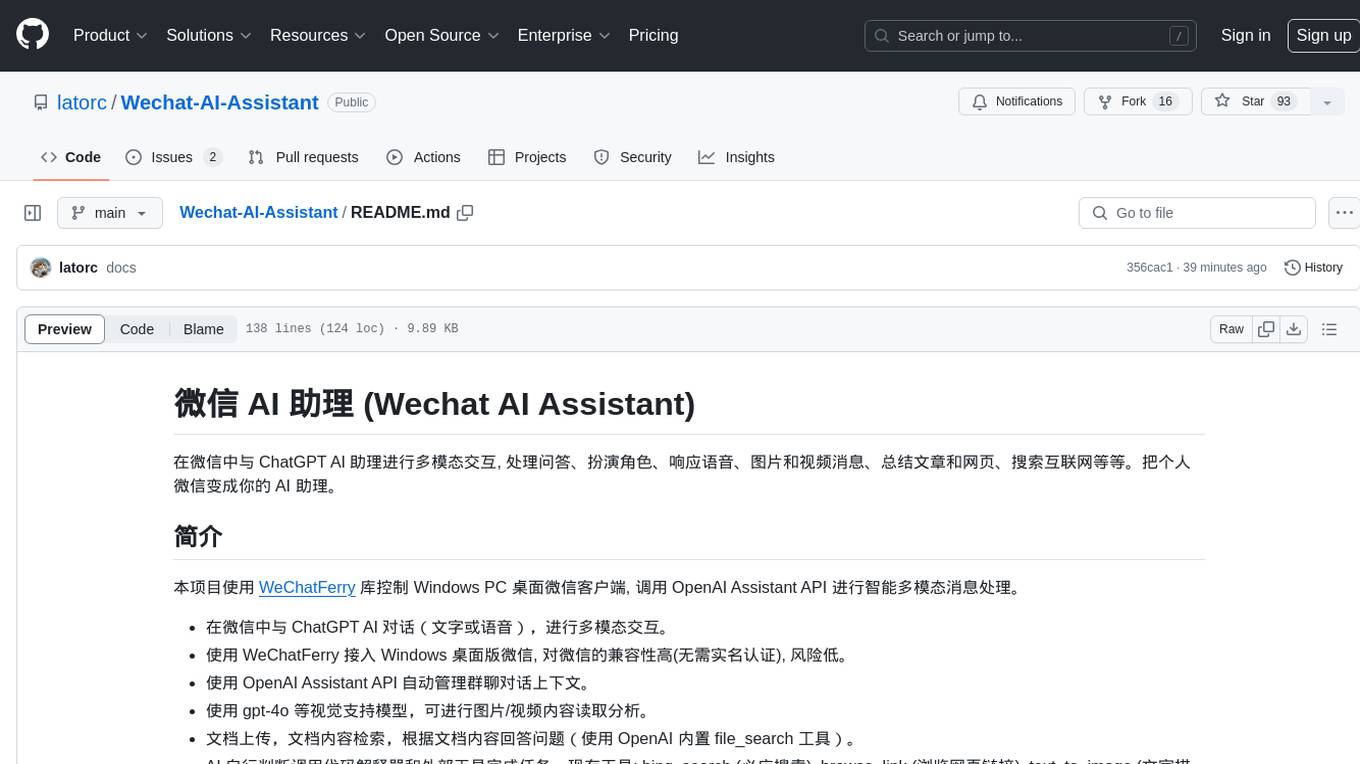

Wechat-AI-Assistant

Wechat AI Assistant is a project that enables multi-modal interaction with ChatGPT AI assistant within WeChat. It allows users to engage in conversations, role-playing, respond to voice messages, analyze images and videos, summarize articles and web links, and search the internet. The project utilizes the WeChatFerry library to control the Windows PC desktop WeChat client and leverages the OpenAI Assistant API for intelligent multi-modal message processing. Users can interact with ChatGPT AI in WeChat through text or voice, access various tools like bing_search, browse_link, image_to_text, text_to_image, text_to_speech, video_analysis, and more. The AI autonomously determines which code interpreter and external tools to use to complete tasks. Future developments include file uploads for AI to reference content, integration with other APIs, and login support for enterprise WeChat and WeChat official accounts.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering everything from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.
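Comparing a baseline against later iterations reduces to a comparison within each harm category. The following is a minimal sketch and not PyRIT's API. The name `find_regressions`, the category names, and the use of an attack-success rate as the score are all assumptions made for illustration.

```python
from typing import Dict, List


def find_regressions(
    baseline: Dict[str, float],
    current: Dict[str, float],
    tolerance: float = 0.0,
) -> List[str]:
    """Return harm categories whose attack-success rate rose above the baseline.

    Each rate is the fraction of red-team probes that produced a harmful
    response, so a higher value means the model got worse in that category.
    """
    regressions = []
    for category, base_rate in baseline.items():
        rate = current.get(category)
        # Categories missing from the current run are skipped, not flagged.
        if rate is not None and rate > base_rate + tolerance:
            regressions.append(category)
    return sorted(regressions)
```

The `tolerance` parameter absorbs run-to-run noise so that small fluctuations are not reported as degradation.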

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.