Olares

Olares: An Open-Source Sovereign Cloud OS for Local AI

Stars: 1948

Olares is an open-source sovereign cloud OS designed for local AI, enabling users to build their own AI assistants, sync data across devices, self-host their workspace, stream media, and more within a sovereign cloud environment. Users can effortlessly run leading AI models, deploy open-source AI apps, access AI apps and models anywhere, and benefit from integrated AI for personalized interactions. Olares offers features like edge AI, personal data repository, self-hosted workspace, private media server, smart home hub, and user-owned decentralized social media. The platform provides enterprise-grade security, secure application ecosystem, unified file system and database, single sign-on, AI capabilities, built-in applications, seamless access, and development tools. Olares is compatible with Linux, Raspberry Pi, Mac, and Windows, and offers a wide range of system-level applications, third-party components and services, and additional libraries and components.

README:

https://github.com/user-attachments/assets/3089a524-c135-4f96-ad2b-c66bf4ee7471

Build your local AI assistants, sync data across places, self-host your workspace, stream your own media, and more—all in your sovereign cloud made possible by Olares.

Website · Documentation · Download LarePass · Olares Apps · Olares Space

[!IMPORTANT]

We recently completed our rebranding from Terminus to Olares. For more information, refer to our rebranding blog.

Convert your hardware into an AI home server with Olares, an open-source sovereign cloud OS built for local AI.

- Run leading AI models on your terms: Effortlessly host powerful open AI models like LLaMA, Stable Diffusion, Whisper, and Flux.1 directly on your hardware, giving you full control over your AI environment.

- Deploy with ease: Discover and install a wide range of open-source AI apps from Olares Market in a few clicks. No more complicated configuration or setup.

- Access anytime, anywhere: Access your AI apps and models through a browser whenever and wherever you need them.

- Integrated AI for a smarter experience: Using a Model Context Protocol (MCP)-like mechanism, Olares seamlessly connects AI models with AI apps and your private data sets. This creates highly personalized, context-aware AI interactions that adapt to your needs.

🌟 Star us to receive instant notifications about new releases and updates.

Here is why and where you can count on Olares for a private, powerful, and secure sovereign cloud experience:

🤖 Edge AI: Run cutting-edge open AI models locally, including large language models, computer vision, and speech recognition. Create private AI services tailored to your data for enhanced functionality and privacy.

📊 Personal data repository: Securely store, sync, and manage your important files, photos, and documents across devices and locations.

🚀 Self-hosted workspace: Build a free collaborative workspace for your team using secure, open-source SaaS alternatives.

🎥 Private media server: Host your own streaming services with your personal media collections.

🏡 Smart Home Hub: Create a central control point for your IoT devices and home automation.

🤝 User-owned decentralized social media: Easily install decentralized social media apps such as Mastodon, Ghost, and WordPress on Olares, allowing you to build a personal brand without the risk of being banned or paying platform commissions.

📚 Learning platform: Explore self-hosting, container orchestration, and cloud technologies hands-on.

Olares has been tested and verified on the following platforms:

| Platform | Operating system | Notes |

|---|---|---|

| Linux | Ubuntu 20.04 LTS or later; Debian 11 or later | |

| Raspberry Pi | RaspbianOS | Verified on Raspberry Pi 4 Model B and Raspberry Pi 5 |

| Windows | Windows 11 23H2 or later; Windows 10 22H2 or later; WSL2 | |

| Mac | Monterey (12) or later | |

| Proxmox VE (PVE) | Proxmox Virtual Environment 8.0 | |

Note

If you successfully install Olares on an operating system that is not listed in the compatibility table, please let us know! You can open an issue or submit a pull request on our GitHub repository.
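The version floors in the compatibility table can be turned into a small pre-install check. The sketch below is illustrative only: `MIN_VERSIONS`, `parse_version`, and `is_listed` are hypothetical names, not part of Olares or its installer, and only the rows with a numeric "or later" minimum are covered (Windows builds such as 23H2 and the single Proxmox VE version are left out).

```python
# Minimum versions copied from the compatibility table.
# The keys and helper names are assumptions made for this example.
MIN_VERSIONS = {
    "ubuntu": (20, 4),  # Ubuntu 20.04 LTS or later
    "debian": (11,),    # Debian 11 or later
    "macos": (12,),     # Monterey (12) or later
}


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted version string such as '22.04' into (22, 4)."""
    return tuple(int(part) for part in text.split("."))


def is_listed(os_name: str, version: str) -> bool:
    """Return True if the OS and version meet a minimum from the table."""
    minimum = MIN_VERSIONS.get(os_name.lower())
    if minimum is None:
        return False  # not in the table: unverified, not necessarily broken
    return parse_version(version) >= minimum


if __name__ == "__main__":
    for name, ver in [("Ubuntu", "22.04"), ("Debian", "10"), ("macOS", "14.5")]:
        status = "listed" if is_listed(name, ver) else "not listed"
        print(f"{name} {ver}: {status}")
```

A `False` result only means the combination is not in the verified list; as the note says, reports of successful installs on other systems are welcome.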

To get started with Olares on your own device, follow the Getting Started Guide for step-by-step instructions.

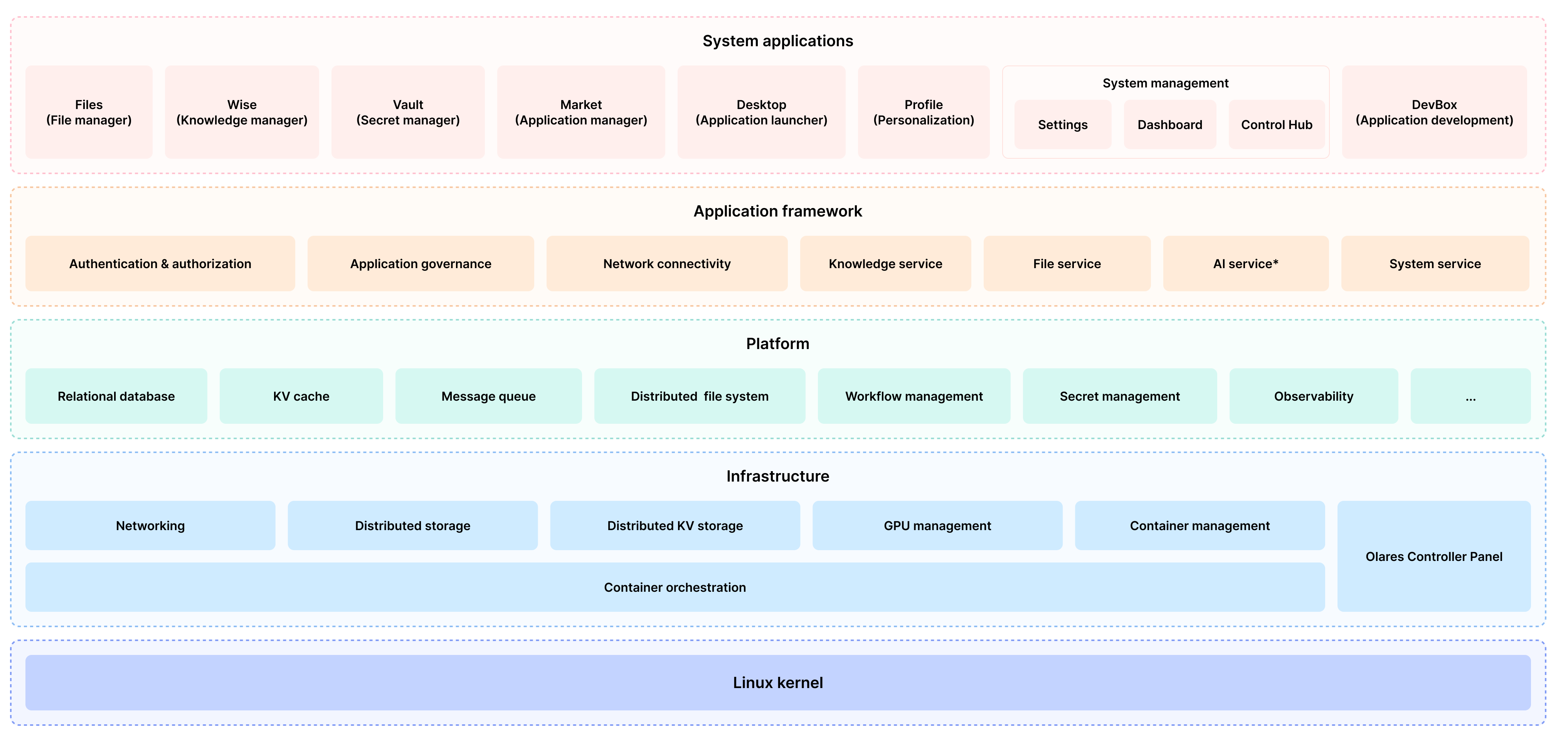

Olares' architecture is based on two core principles:

- Adopts an Android-like approach to control software permissions and interactivity, ensuring smooth and secure system operations.

- Leverages cloud-native technologies to manage hardware and middleware services efficiently.

For a detailed description of each component, refer to Olares architecture.

Olares offers a wide array of features designed to enhance security, ease of use, and development flexibility:

- Enterprise-grade security: Simplified network configuration using Tailscale, Headscale, Cloudflare Tunnel, and FRP.

- Secure and permissionless application ecosystem: Sandboxing ensures application isolation and security.

- Unified file system and database: Automated scaling, backups, and high availability.

- Single sign-on: Log in once to access all applications within Olares with a shared authentication service.

- AI capabilities: Comprehensive solution for GPU management, local AI model hosting, and private knowledge bases while maintaining data privacy.

- Built-in applications: Includes file manager, sync drive, vault, reader, app market, settings, and dashboard.

- Seamless anywhere access: Access your devices from anywhere using dedicated clients for mobile, desktop, and browsers.

- Development tools: Comprehensive development tools for effortless application development and porting.

Olares consists of numerous code repositories publicly available on GitHub. The current repository is responsible for the final compilation, packaging, installation, and upgrade of the operating system, while specific changes mostly take place in their corresponding repositories.

The following table lists the project directories under Olares and their corresponding repositories. Find the one that interests you:

Framework components

| Directory | Repository | Description |

|---|---|---|

| frameworks/app-service | https://github.com/beclab/app-service | A system framework component that provides lifecycle management and various security controls for all apps in the system. |

| frameworks/backup-server | https://github.com/beclab/backup-server | A system framework component that provides scheduled full or incremental cluster backup services. |

| frameworks/bfl | https://github.com/beclab/bfl | Backend For Launcher (BFL), a system framework component serving as the user access point and aggregating and proxying interfaces of various backend services. |

| frameworks/GPU | https://github.com/grgalex/nvshare | GPU sharing mechanism that allows multiple processes (or containers running on Kubernetes) to securely run on the same physical GPU concurrently, each having the whole GPU memory available. |

| frameworks/l4-bfl-proxy | https://github.com/beclab/l4-bfl-proxy | Layer 4 network proxy for BFL. By prereading SNI, it provides a dynamic route to pass through into the user's Ingress. |

| frameworks/osnode-init | https://github.com/beclab/osnode-init | A system framework component that initializes node data when a new node joins the cluster. |

| frameworks/system-server | https://github.com/beclab/system-server | As a part of system runtime frameworks, it provides a mechanism for security calls between apps. |

| frameworks/tapr | https://github.com/beclab/tapr | Olares Application Runtime components. |
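The `frameworks/l4-bfl-proxy` row describes routing by prereading SNI. As a rough illustration of that idea (not the project's actual code), the sketch below reads the server name from the first bytes of a TLS ClientHello without decrypting anything, then looks it up in a routing table. `read_sni`, `pick_backend`, `ROUTES`, the host names, and the addresses are all hypothetical, and the sketch assumes the whole ClientHello arrives in a single TLS record.

```python
import ssl
import struct
from typing import Optional

# Hypothetical routing table: SNI host name -> (backend address, port).
ROUTES = {
    "alice.example.com": ("10.0.0.11", 443),
    "bob.example.com": ("10.0.0.12", 443),
}


def read_sni(client_hello: bytes) -> Optional[str]:
    """Return the SNI host name from a TLS ClientHello record, or None."""
    data = client_hello
    try:
        if data[0] != 0x16:       # record type 0x16 = handshake
            return None
        pos = 5                   # skip record header: type, version, length
        if data[pos] != 0x01:     # handshake type 0x01 = ClientHello
            return None
        pos += 4                  # handshake type (1) + length (3)
        pos += 2 + 32             # client version + random
        pos += 1 + data[pos]      # session id
        (suites_len,) = struct.unpack_from("!H", data, pos)
        pos += 2 + suites_len     # cipher suites
        pos += 1 + data[pos]      # compression methods
        (ext_total,) = struct.unpack_from("!H", data, pos)
        pos += 2
        end = pos + ext_total
        while pos + 4 <= end:
            ext_type, ext_len = struct.unpack_from("!HH", data, pos)
            pos += 4
            if ext_type == 0:     # server_name extension
                # Layout: list length (2), name type (1), name length (2), name
                if data[pos + 2] != 0:  # 0 = host_name
                    return None
                (name_len,) = struct.unpack_from("!H", data, pos + 3)
                return data[pos + 5 : pos + 5 + name_len].decode("ascii")
            pos += ext_len
    except (IndexError, struct.error, UnicodeDecodeError):
        return None               # truncated or malformed input
    return None


def pick_backend(client_hello: bytes) -> Optional[tuple[str, int]]:
    """Choose a backend from the SNI alone; the TLS stream stays encrypted."""
    host = read_sni(client_hello)
    return ROUTES.get(host) if host else None


if __name__ == "__main__":
    # Produce a real ClientHello in memory with the standard ssl module.
    incoming, outgoing = ssl.MemoryBIO(), ssl.MemoryBIO()
    tls = ssl.create_default_context().wrap_bio(
        incoming, outgoing, server_hostname="alice.example.com"
    )
    try:
        tls.do_handshake()
    except ssl.SSLWantReadError:
        pass  # expected: no server has answered yet
    hello = outgoing.read()
    print(read_sni(hello), pick_backend(hello))
```

A real layer 4 proxy would peek at these bytes on the accepted socket, pick the backend, and then forward the untouched byte stream, so TLS is still terminated at the user's Ingress.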

System-Level Applications and Services

| Directory | Repository | Description |

|---|---|---|

| apps/analytic | https://github.com/beclab/analytic | Developed based on Umami, Analytic is a simple, fast, privacy-focused alternative to Google Analytics. |

| apps/market | https://github.com/beclab/market | This repository deploys the front-end part of the application market in Olares. |

| apps/market-server | https://github.com/beclab/market | This repository deploys the back-end part of the application market in Olares. |

| apps/argo | https://github.com/argoproj/argo-workflows | A workflow engine for orchestrating container execution of local recommendation algorithms. |

| apps/desktop | https://github.com/beclab/desktop | The built-in desktop application of the system. |

| apps/devbox | https://github.com/beclab/devbox | An IDE for developers to port and develop Olares applications. |

| apps/vault | https://github.com/beclab/termipass | A free alternative to 1Password and Bitwarden for teams and enterprises of any size, developed based on Padloc. It serves as the client that helps you manage DID, Olares ID, and Olares devices. |

| apps/files | https://github.com/beclab/files | A built-in file manager modified from Filebrowser, providing management of files on Drive, Sync, and various Olares physical nodes. |

| apps/notifications | https://github.com/beclab/notifications | The notifications system of Olares. |

| apps/profile | https://github.com/beclab/profile | A Linktree alternative in Olares. |

| apps/rsshub | https://github.com/beclab/rsshub | An RSS subscription manager based on RssHub. |

| apps/settings | https://github.com/beclab/settings | Built-in system settings. |

| apps/system-apps | https://github.com/beclab/system-apps | Built based on the kubesphere/console project, system-service provides a self-hosted cloud platform that helps users understand and control the system's runtime status and resource usage through a visual Dashboard and feature-rich ControlHub. |

| apps/wizard | https://github.com/beclab/wizard | A wizard application to walk users through the system activation process. |

Third-party Components and Services

| Directory | Repository | Description |

|---|---|---|

| third-party/authelia | https://github.com/beclab/authelia | An open-source authentication and authorization server providing two-factor authentication and single sign-on (SSO) for your applications via a web portal. |

| third-party/headscale | https://github.com/beclab/headscale | An open source, self-hosted implementation of the Tailscale control server in Olares to manage Tailscale in LarePass across different devices. |

| third-party/infisical | https://github.com/beclab/infisical | An open-source secret management platform that syncs secrets across your teams/infrastructure and prevents secret leaks. |

| third-party/juicefs | https://github.com/beclab/juicefs-ext | A distributed POSIX file system built on top of Redis and S3, allowing apps on different nodes to access the same data via POSIX interface. |

| third-party/ks-console | https://github.com/kubesphere/console | Kubesphere console that allows for cluster management via a Web GUI. |

| third-party/ks-installer | https://github.com/beclab/ks-installer-ext | Kubesphere installer component that automatically creates Kubesphere clusters based on cluster resource definitions. |

| third-party/kube-state-metrics | https://github.com/beclab/kube-state-metrics | kube-state-metrics (KSM) is a simple service that listens to the Kubernetes API server and generates metrics about the state of the objects. |

| third-party/notification-manager | https://github.com/beclab/notification-manager-ext | Kubesphere's notification management component for unified management of multiple notification channels and custom aggregation of notification content. |

| third-party/predixy | https://github.com/beclab/predixy | Redis cluster proxy service that automatically identifies available nodes and adds namespace isolation. |

| third-party/redis-cluster-operator | https://github.com/beclab/redis-cluster-operator | A cloud-native tool for creating and managing Redis clusters based on Kubernetes. |

| third-party/seafile-server | https://github.com/beclab/seafile-server | The backend service of Seafile (Sync Drive) for handling data storage. |

| third-party/seahub | https://github.com/beclab/seahub | The front-end and middleware service of Seafile (Sync Drive) for handling file sharing, data synchronization, etc. |

| third-party/tailscale | https://github.com/tailscale/tailscale | Tailscale has been integrated in LarePass of all platforms. |

Additional libraries and components

| Directory | Repository | Description |

|---|---|---|

| build/installer | | The template for generating the installer build. |

| build/manifest | | Installation build image list template. |

| libs/fs-lib | https://github.com/beclab/fs-lib | The SDK library for the iNotify-compatible interface implemented based on JuiceFS. |

| scripts | | Assisting scripts for generating the installer build. |

We welcome contributions in any form:

- If you want to develop your own applications on Olares, refer to: https://docs.olares.xyz/developer/develop/

- If you want to help improve Olares, refer to: https://docs.olares.xyz/developer/contribute/olares.html

- GitHub Discussion. Best for sharing feedback and asking questions.

- GitHub Issues. Best for filing bugs you encounter using Olares and submitting feature proposals.

- Discord. Best for sharing anything Olares.

The Olares project has incorporated numerous third-party open source projects, including: Kubernetes, Kubesphere, Padloc, K3S, JuiceFS, MinIO, Envoy, Authelia, Infisical, Dify, Seafile, HeadScale, tailscale, Redis Operator, Nitro, RssHub, predixy, nvshare, LangChain, Quasar, TrustWallet, Restic, ZincSearch, filebrowser, lego, Velero, s3rver, Citusdata.

Alternative AI tools for Olares

Similar Open Source Tools

txtai

Txtai is an all-in-one embeddings database for semantic search, LLM orchestration, and language model workflows. It combines vector indexes, graph networks, and relational databases to enable vector search with SQL, topic modeling, retrieval augmented generation, and more. Txtai can stand alone or serve as a knowledge source for large language models (LLMs). Key features include vector search with SQL, object storage, topic modeling, graph analysis, multimodal indexing, embedding creation for various data types, pipelines powered by language models, workflows to connect pipelines, and support for Python, JavaScript, Java, Rust, and Go. Txtai is open-source under the Apache 2.0 license.

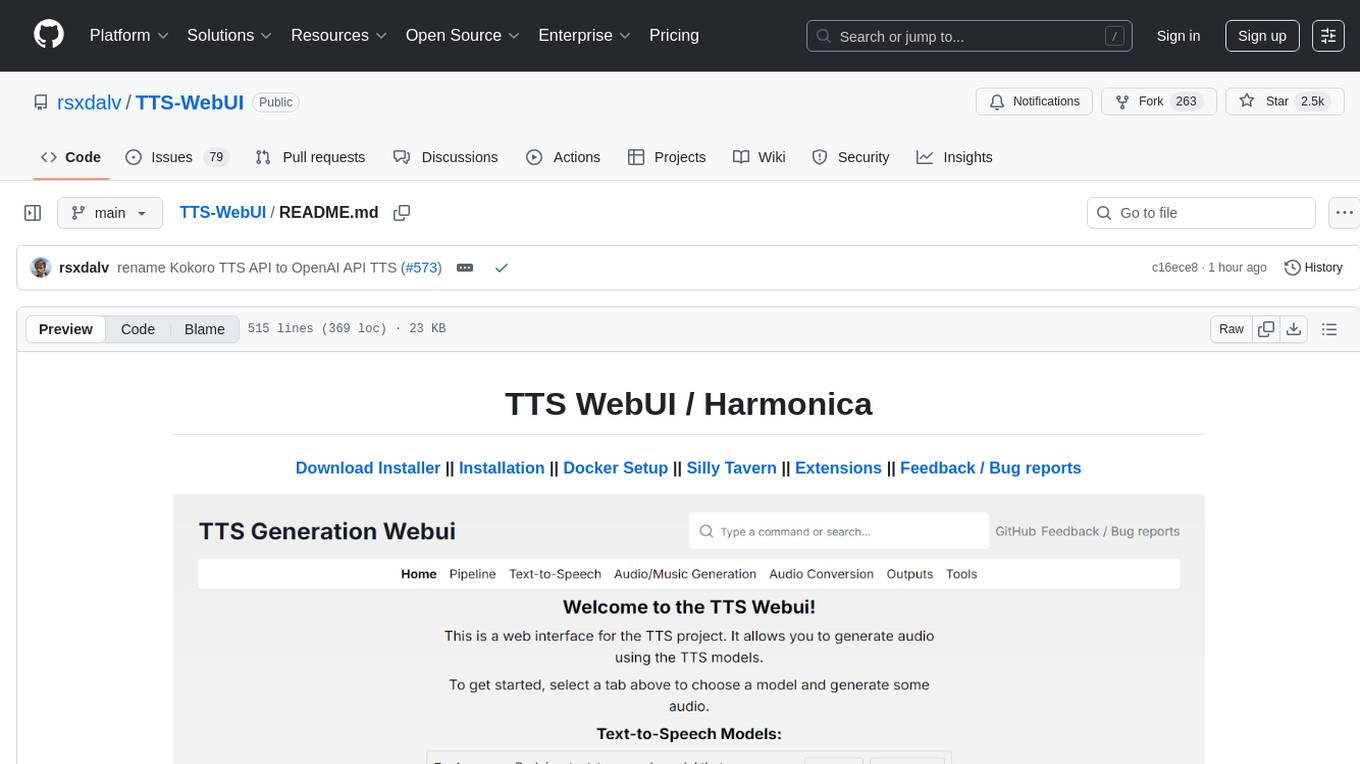

TTS-WebUI

TTS WebUI is a comprehensive tool for text-to-speech synthesis, audio/music generation, and audio conversion. It offers a user-friendly interface for various AI projects related to voice and audio processing. The tool provides a range of models and extensions for different tasks, along with integrations like Silly Tavern and OpenWebUI. With support for Docker setup and compatibility with Linux and Windows, TTS WebUI aims to facilitate creative and responsible use of AI technologies in a user-friendly manner.

lemonade

Lemonade is a tool that helps users run local Large Language Models (LLMs) with high performance by configuring state-of-the-art inference engines for their Neural Processing Units (NPUs) and Graphics Processing Units (GPUs). It is used by startups, research teams, and large companies to run LLMs efficiently. Lemonade provides a high-level Python API for direct integration of LLMs into Python applications and a CLI for mixing and matching LLMs with various features like prompting templates, accuracy testing, performance benchmarking, and memory profiling. The tool supports both GGUF and ONNX models and allows importing custom models from Hugging Face using the Model Manager. Lemonade is designed to be easy to use and switch between different configurations at runtime, making it a versatile tool for running LLMs locally.

RAG-Driven-Generative-AI

RAG-Driven Generative AI provides a roadmap for building effective LLM, computer vision, and generative AI systems that balance performance and costs. This book offers a detailed exploration of RAG and how to design, manage, and control multimodal AI pipelines. By connecting outputs to traceable source documents, RAG improves output accuracy and contextual relevance, offering a dynamic approach to managing large volumes of information. This AI book also shows you how to build a RAG framework, providing practical knowledge on vector stores, chunking, indexing, and ranking. You'll discover techniques to optimize your project's performance and better understand your data, including using adaptive RAG and human feedback to refine retrieval accuracy, balancing RAG with fine-tuning, implementing dynamic RAG to enhance real-time decision-making, and visualizing complex data with knowledge graphs. You'll be exposed to a hands-on blend of frameworks like LlamaIndex and Deep Lake, vector databases such as Pinecone and Chroma, and models from Hugging Face and OpenAI. By the end of this book, you will have acquired the skills to implement intelligent solutions, keeping you competitive in fields ranging from production to customer service across any project.

stm32ai-modelzoo

The STM32 AI model zoo is a collection of reference machine learning models optimized to run on STM32 microcontrollers. It provides a large collection of application-oriented models ready for re-training, scripts for easy retraining from user datasets, pre-trained models on reference datasets, and application code examples generated from user AI models. The project offers training scripts for transfer learning or training custom models from scratch. It includes performances on reference STM32 MCU and MPU for float and quantized models. The project is organized by application, providing step-by-step guides for training and deploying models.

palico-ai

Palico AI is a tech stack designed for rapid iteration of LLM applications. It allows users to preview changes instantly, improve performance through experiments, debug issues with logs and tracing, deploy applications behind a REST API, and manage applications with a UI control panel. Users have complete flexibility in building their applications with Palico, integrating with various tools and libraries. The tool enables users to swap models, prompts, and logic easily using AppConfig. It also facilitates performance improvement through experiments and provides options for deploying applications to cloud providers or using managed hosting. Contributions to the project are welcomed, with easy ways to get involved by picking issues labeled as 'good first issue'.

Awesome-AITools

This repo collects AI-related utilities. Categories: ChatGPT and other closed-source LLMs; AI Search engine; Open Source LLMs; GPT/LLMs Applications; LLM training platform; Applications that integrate multiple LLMs; AI Agent; Writing; Programming Development; Translation; AI Conversation or AI Voice Conversation; Image Creation; Speech Recognition; Text To Speech; Voice Processing; AI generated music or sound effects; Speech translation; Video Creation; Video Content Summary; OCR (Optical Character Recognition).

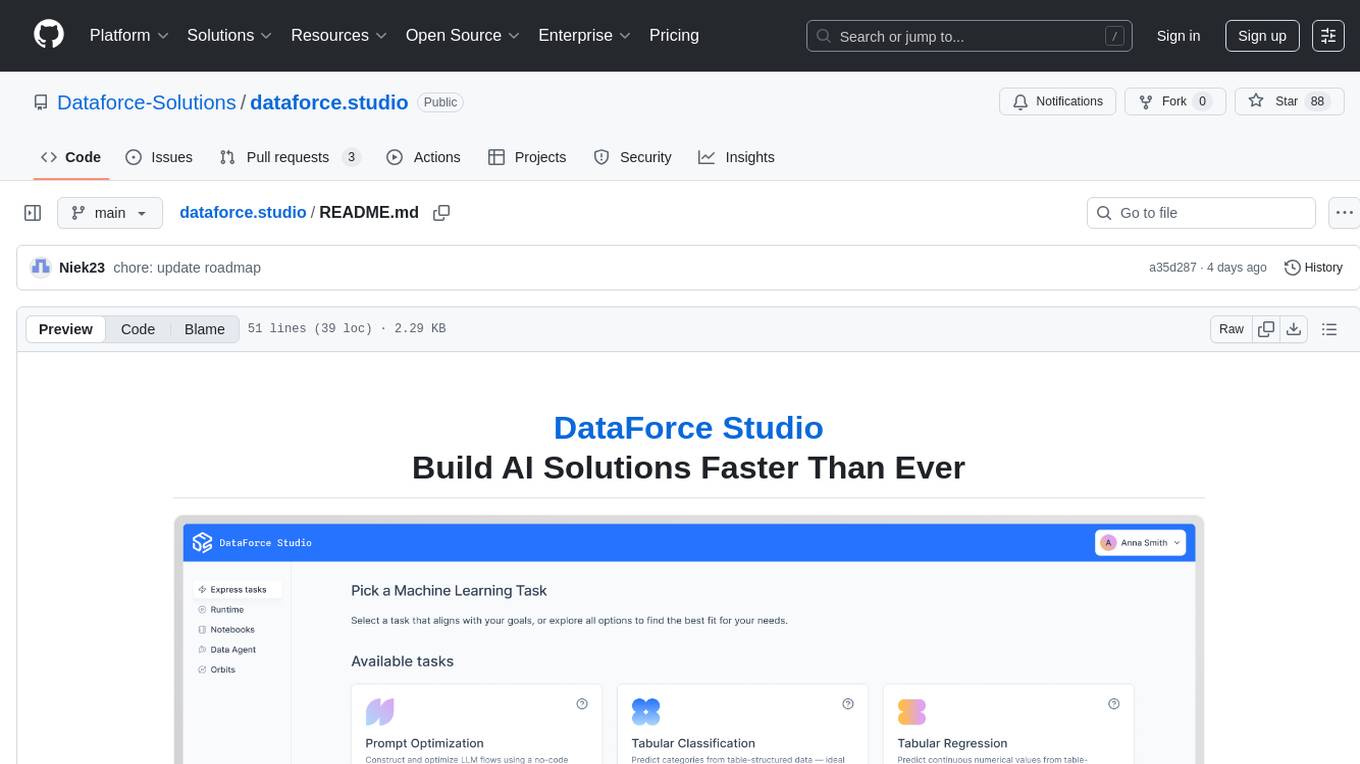

dataforce.studio

DataForce Studio is an open-source MLOps platform designed to help build, manage, and deploy AI/ML models with ease. It supports the entire model lifecycle, from creation to deployment and monitoring, within a user-friendly interface. The platform is in active early development, aiming to provide features like post-deployment monitoring, model deployment, data science agent, experiment snapshots, model cards, Python SDK, model registry, notebooks, in-browser runtime, and express tasks for prompt optimization and tabular data.

Dictate

Dictate is an easy-to-use keyboard tool that utilizes OpenAI Whisper for transcription and dictation. It offers accurate results in multiple languages with punctuation and custom AI rewording using GPT-4 Omni. The tool is designed to simplify the process of transcribing spoken words into text, making it convenient for users to dictate their thoughts and notes effortlessly.

haystack-tutorials

Haystack is an open-source framework for building production-ready LLM applications, retrieval-augmented generative pipelines, and state-of-the-art search systems that work intelligently over large document collections. It lets you quickly try out the latest models in natural language processing (NLP) while being flexible and easy to use.

langfuse-js

langfuse-js is a modular mono repo for the Langfuse JS/TS client libraries. It includes packages for Langfuse API client, tracing, OpenTelemetry export helpers, OpenAI integration, and LangChain integration. The SDK is currently in version 4 and offers universal JavaScript environments support as well as Node.js 20+. The repository provides documentation, reference materials, and development instructions for managing the monorepo with pnpm. It is licensed under MIT.

helicone

Helicone is an open-source observability platform designed for large language models (LLMs). It logs requests to OpenAI in a user-friendly UI, offers caching, rate limits, and retries, tracks costs and latencies, provides a playground for iterating on prompts and chat conversations, supports collaboration, and will soon have APIs for feedback and evaluation. The platform is deployed on Cloudflare and consists of services like Web (NextJs), Worker (Cloudflare Workers), Jawn (Express), Supabase, and ClickHouse. Users can interact with Helicone locally by setting up the required services and environment variables. The platform encourages contributions and provides resources for learning, documentation, and integrations.

supabase

Supabase is an open source Firebase alternative that provides a wide range of features including a hosted Postgres database, authentication and authorization, auto-generated APIs, REST and GraphQL support, realtime subscriptions, functions, file storage, AI and vector/embeddings toolkit, and a dashboard. It aims to offer developers a Firebase-like experience using enterprise-grade open source tools.

computer

Cua is a tool for creating and running high-performance macOS and Linux VMs on Apple Silicon, with built-in support for AI agents. It provides libraries like Lume for running VMs with near-native performance, Computer for interacting with sandboxes, and Agent for running agentic workflows. Users can refer to the documentation for onboarding and explore demos showcasing the tool's capabilities. Additionally, accessory libraries like Core, PyLume, Computer Server, and SOM offer additional functionality. Contributions to Cua are welcome, and the tool is open-sourced under the MIT License.

pr-agent

PR-Agent is a tool that helps to efficiently review and handle pull requests by providing AI feedback and suggestions. It supports various commands such as generating PR descriptions, providing code suggestions, answering questions about the PR, and updating the CHANGELOG.md file. PR-Agent can be used via CLI, GitHub Action, GitHub App, Docker, and supports multiple git providers and models. It emphasizes real-life practical usage, with each tool having a single GPT-4 call for quick and affordable responses. The PR Compression strategy enables effective handling of both short and long PRs, while the JSON prompting strategy allows for modular and customizable tools. PR-Agent Pro, the hosted version by CodiumAI, provides additional benefits such as full management, improved privacy, priority support, and extra features.

For similar tasks

dust

Dust is a platform that provides customizable and secure AI assistants to amplify your team's potential. With Dust, you can build and deploy AI assistants that are tailored to your specific needs, without the need for extensive technical expertise. Dust's platform is easy to use and provides a variety of features to help you get started quickly, including a library of pre-built blocks, a developer platform, and an API reference.

superagent-js

Superagent is an open source framework that enables any developer to integrate production ready AI Assistants into any application in a matter of minutes.

django-ai-assistant

Combine the power of LLMs with Django's productivity to build intelligent applications. Let AI Assistants call methods from Django's side and do anything your users need! Use AI Tool Calling and RAG with Django to easily build state of the art AI Assistants.

tambo

tambo ai is a React library that simplifies the process of building AI assistants and agents in React by handling thread management, state persistence, streaming responses, AI orchestration, and providing a compatible React UI library. It eliminates React boilerplate for AI features, allowing developers to focus on creating exceptional user experiences with clean React hooks that seamlessly integrate with their codebase.

aiocoap

aiocoap is a Python library that implements the Constrained Application Protocol (CoAP) using native asyncio methods in Python 3. It supports various CoAP standards such as RFC7252, RFC7641, RFC7959, RFC8323, RFC7967, RFC8132, RFC9176, RFC8613, and draft-ietf-core-oscore-groupcomm-17. The library provides features for clients and servers, including multicast support, blockwise transfer, CoAP over TCP, TLS, and WebSockets, No-Response, PATCH/FETCH, OSCORE, and Group OSCORE. It offers an easy-to-use interface for concurrent operations and is suitable for IoT applications.

media-stack

media-stack is a self-hosted media ecosystem that combines media management, streaming, AI-powered recommendations, and VPN. It includes tools like Radarr for movie management, Sonarr for TV show management, Prowlarr for torrent indexing, qBittorrent for downloading media, Jellyseerr for media requests, Jellyfin for media streaming, and Recommendarr for AI-powered recommendations. The stack can be deployed with or without a VPN and offers detailed configuration steps for each tool.

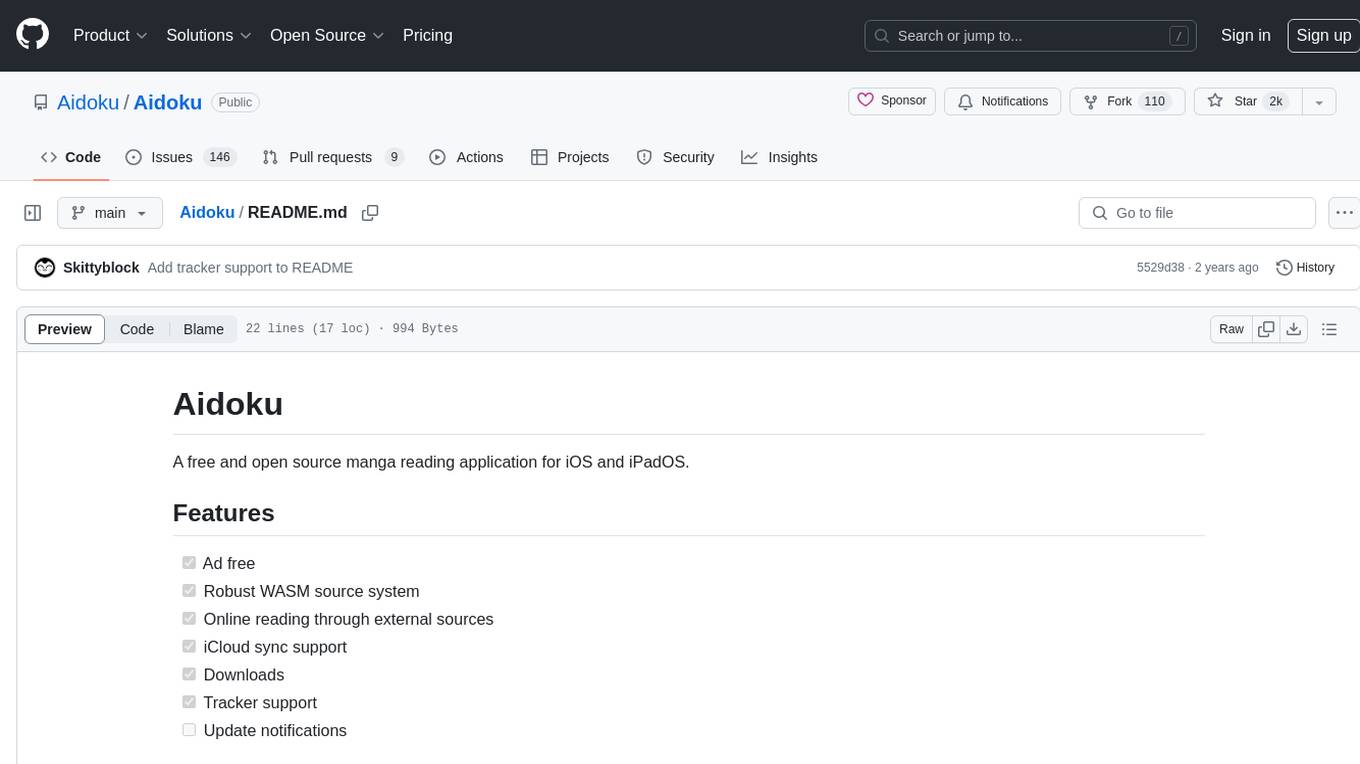

Aidoku

Aidoku is a free and open source manga reading application for iOS and iPadOS. It offers features like ad-free experience, robust WASM source system, online reading through external sources, iCloud sync support, downloads, and tracker support. Users can access the latest ipa from the releases page and join TestFlight via the Aidoku Discord for detailed installation instructions. The project is open to contributions, with planned features and fixes. Translation efforts are welcomed through Weblate for crowd-sourced translations.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual streamers (VTubers), in a version for NVIDIA GPUs. It supports knowledge-base chat through fastgpt and a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. For live streaming, it can reply to bilibili bullet comments and greet viewers who enter the stream. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS, and expressions are controlled through Vtuber Studio. Images are generated with stable-diffusion-webui and output to the OBS live room, with NSFW screening of generated pictures by public-NSFW-y-distinguish. Search and image search use duckduckgo (requires a proxy for Internet access) or Baidu image search (no proxy required). Other features include an AI reply chat box [html plug-in], AI singing with Auto-Convert-Music, a playlist [html plug-in], dancing, expression video playback, head-touching and gift-smashing actions, automatic dancing when singing starts, automatic looping sway motions during chat and singing, multi-scene switching, background music switching, automatic day and night scene switching, and open singing and painting requests where the AI judges the content automatically.