z.ai2api_python

A high-performance proxy service for multiple AI providers, including Z.AI, K2Think, and LongCat

Stars: 210

Z.AI2API Python is a lightweight OpenAI API proxy service that integrates seamlessly with existing applications. It supports the full functionality of GLM-4.5 series models and features high-performance streaming responses, enhanced tool invocation, support for thinking mode, integration with search models, Docker deployment, session isolation for privacy protection, flexible configuration via environment variables, and intelligent upstream model routing.

README:

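A high-performance, OpenAI API-compatible proxy service built on FastAPI. It uses a multi-provider architecture and supports the full feature set of the GLM-4.5 series, K2Think, LongCat, and other AI models.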

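- 🔌 Fully OpenAI API compatible - integrates seamlessly with existing applications
- 🏗️ Multi-provider architecture - supports Z.AI, K2Think, LongCat, and other AI providers
- 🤖 Claude Code support - connect Claude Code through Claude Code Router (upgrade CCR to v1.0.47 or later)
- 🍒 Cherry Studio support - call MCP tools directly from Cherry Studio
- 🚀 High-performance streaming - Server-Sent Events (SSE) support
- 🛠️ Enhanced tool calling - improved Function Call implementation that supports complex tool chains
- 🧠 Thinking mode support - handles the model's reasoning process intelligently
- 🐳 Docker deployment - one-step containerized deployment (see .env.example for the environment variables)
- 🛡️ Session isolation - anonymous mode protects privacy
- 🔧 Flexible configuration - configure everything through environment variables
- 🔄 Token pool management - automatic round-robin, fault recovery, and dynamic updates
- 🛡️ Error handling - comprehensive exception capture and retry mechanism
- 🔒 Single-instance enforcement - a process-name (pname) based check prevents duplicate launches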
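Streaming responses are delivered as Server-Sent Events. As a rough sketch, assuming the proxy follows the standard OpenAI chat-completions streaming format (one "data: <json>" line per chunk, ending with "data: [DONE]"), a client could reassemble the reply text as shown below. The function name is hypothetical and not part of this project, and any reasoning output from thinking mode may arrive in fields this sketch ignores.

```python
import json
from typing import Iterable


def collect_stream_text(lines: Iterable[str]) -> str:
    """Concatenate the text deltas from an OpenAI-style SSE chat stream.

    Assumes each event is a single 'data: <json>' line and that the
    stream ends with 'data: [DONE]' (hypothetical helper, not project code).
    """
    parts = []
    for raw in lines:
        line = raw.strip()
        # Skip blank keep-alive lines, SSE comments, and non-data fields.
        if not line.startswith("data:"):
            continue
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            delta = choice.get("delta") or {}
            # Role-only or tool-call chunks may carry no text content.
            content = delta.get("content")
            if content:
                parts.append(content)
    return "".join(parts)


if __name__ == "__main__":
    sample = [
        'data: {"choices": [{"index": 0, "delta": {"role": "assistant"}}]}',
        "",
        'data: {"choices": [{"index": 0, "delta": {"content": "Hello"}}]}',
        'data: {"choices": [{"index": 0, "delta": {"content": ", world"}}]}',
        "data: [DONE]",
    ]
    print(collect_stream_text(sample))  # Hello, world
```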

- Python 3.9-3.12

- pip or uv (recommended)

# Clone the project
git clone https://github.com/ZyphrZero/z.ai2api_python.git
cd z.ai2api_python

# Using uv (recommended)
curl -LsSf https://astral.sh/uv/install.sh | sh
uv sync
uv run python main.py

# Or using pip (the Tsinghua mirror is recommended)
pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple
python main.py

🍋🟩 Once the service is running, the API documentation is available at http://localhost:8080/docs

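💡 Tip: the default port is 8080; change it with the LISTEN_PORT environment variable.

⚠️ Note: do not share your AUTH_TOKEN with anyone. To configure multiple authentication tokens, use AUTH_TOKENS.

Once the service is running, you can call it with any standard OpenAI API client. See the OpenAI API documentation for details on API usage.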
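Because the service is OpenAI-compatible, the official OpenAI SDKs or any HTTP client can call it. The following stdlib-only sketch builds a request for the /v1/chat/completions endpoint. It assumes the proxy accepts the AUTH_TOKEN as a standard "Authorization: Bearer" header; build_chat_request and chat_once are hypothetical helpers, not part of this project.

```python
import json
import urllib.request

# Placeholders matching the defaults used above; adjust to your deployment.
BASE_URL = "http://localhost:8080/v1"
API_KEY = "sk-your-api-key"  # must equal the server's AUTH_TOKEN


def build_chat_request(base_url: str, api_key: str, model: str,
                       messages: list, stream: bool = False) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible chat completions endpoint."""
    body = json.dumps({"model": model, "messages": messages, "stream": stream}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=body,
        headers={
            "Content-Type": "application/json",
            # Standard OpenAI-style bearer authentication (assumed here).
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat_once(prompt: str, model: str = "GLM-4.5") -> str:
    """Send one non-streaming request and return the assistant's reply text."""
    req = build_chat_request(BASE_URL, API_KEY, model,
                             [{"role": "user", "content": prompt}])
    with urllib.request.urlopen(req, timeout=60) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

With the service running locally, chat_once("Hello!") sends a real request. Passing stream=True to build_chat_request asks for SSE chunks instead of a single JSON body, so the response must then be read line by line rather than with json.load.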

Pull the latest image from Docker Hub:

# Pull the latest version
docker pull zyphrzero/z-ai2api-python:latest

# Or pull a specific version
docker pull zyphrzero/z-ai2api-python:v0.1.0

Quick start:

# Basic start (default configuration)
docker run -d \
  --name z-ai2api \
  -p 8080:8080 \
  -e AUTH_TOKEN="sk-your-api-key" \
  zyphrzero/z-ai2api-python:latest

# Start with the full configuration
docker run -d \
  --name z-ai2api \
  -p 8080:8080 \
  -e AUTH_TOKEN="sk-your-api-key" \
  -e ANONYMOUS_MODE="true" \
  -e DEBUG_LOGGING="true" \
  -e TOOL_SUPPORT="true" \
  -v $(pwd)/tokens.txt:/app/tokens.txt \
  -v $(pwd)/logs:/app/logs \
  zyphrzero/z-ai2api-python:latest

Using Docker Compose:

Create a docker-compose.yml file:

version: '3.8'

services:
  z-ai2api:
    image: zyphrzero/z-ai2api-python:latest
    container_name: z-ai2api
    ports:
      - "8080:8080"
    environment:
      - AUTH_TOKEN=sk-your-api-key
      - ANONYMOUS_MODE=true
      - DEBUG_LOGGING=true
      - TOOL_SUPPORT=true
      - LISTEN_PORT=8080
    volumes:
      - ./tokens.txt:/app/tokens.txt
      - ./logs:/app/logs
    restart: unless-stopped
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:8080/health"]
      interval: 30s
      timeout: 10s
      retries: 3

Then start it:

docker-compose up -d

Or start it from the repository's deploy directory:

cd deploy
docker-compose up -d

- Image: https://hub.docker.com/r/zyphrzero/z-ai2api-python
- Supported architectures: linux/amd64, linux/arm64
- Base image: python:3.11-slim

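To keep logs and configuration files persistent, mount the following paths:

# Mount data paths at startup
docker run -d \
  --name z-ai2api \
  -p 8080:8080 \
  -e AUTH_TOKEN="sk-your-api-key" \
  -v $(pwd)/tokens.txt:/app/tokens.txt \
  -v $(pwd)/logs:/app/logs \
  -v $(pwd)/.env:/app/.env \
  zyphrzero/z-ai2api-python:latest

Z.AI models:

| Model | Upstream ID | Description | Features |
|---|---|---|---|
| GLM-4.5 | 0727-360B-API | Standard model | General-purpose chat with balanced performance |
| GLM-4.5-Thinking | 0727-360B-API | Thinking model | Shows the reasoning process for greater transparency |
| GLM-4.5-Search | 0727-360B-API | Search model | Real-time web search for up-to-date information |
| GLM-4.5-Air | 0727-106B-API | Lightweight model | Fast responses and efficient inference |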

K2Think models:

| Model | Description | Features |
|---|---|---|
| MBZUAI-IFM/K2-Think | K2Think model | Fast, high-quality reasoning |

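LongCat models:

| Model | Description | Features |
|---|---|---|
| LongCat-Flash | Fast-response model | High-speed processing, suited to real-time chat |
| LongCat | Standard model | Balanced performance for general use |
| LongCat-Search | Search-enhanced model | Integrated search for information retrieval |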
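The tables above amount to a lookup from the public model name to a provider and, for Z.AI, an upstream model ID. The sketch below transcribes them into a hypothetical routing table. The provider keys and the feature field that separates the Thinking and Search variants (which share the 0727-360B-API upstream ID) are illustrative assumptions, not the project's actual data structures.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Route:
    provider: str                  # hypothetical provider key
    upstream_id: Optional[str]     # upstream model ID, where the tables list one
    feature: Optional[str] = None  # hypothetical flag distinguishing variants


# Hypothetical routing table transcribed from the model tables above.
MODEL_ROUTES = {
    "GLM-4.5": Route("zai", "0727-360B-API"),
    "GLM-4.5-Thinking": Route("zai", "0727-360B-API", feature="thinking"),
    "GLM-4.5-Search": Route("zai", "0727-360B-API", feature="search"),
    "GLM-4.5-Air": Route("zai", "0727-106B-API"),
    "MBZUAI-IFM/K2-Think": Route("k2think", None),
    "LongCat-Flash": Route("longcat", None),
    "LongCat": Route("longcat", None),
    "LongCat-Search": Route("longcat", None, feature="search"),
}


def resolve_model(name: str) -> Route:
    """Look up the provider and upstream model for a requested model name."""
    try:
        return MODEL_ROUTES[name]
    except KeyError:
        raise ValueError(f"unsupported model: {name!r}") from None
```

Keeping the variants as separate entries that share one upstream ID mirrors the table: the model name, not a request parameter, selects thinking or search behavior.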

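Environment variables:

| Variable | Default | Description |
|---|---|---|
| AUTH_TOKEN | sk-your-api-key | Client authentication key |
| LISTEN_PORT | 8080 | Port the service listens on |
| DEBUG_LOGGING | true | Toggles debug logging |
| ANONYMOUS_MODE | true | Toggles anonymous user mode |
| TOOL_SUPPORT | true | Toggles Function Call support |
| SKIP_AUTH_TOKEN | false | Skips authentication token validation |
| SCAN_LIMIT | 200000 | Scan limit |
| AUTH_TOKENS_FILE | tokens.txt | Path to the Z.AI authentication token file |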

LongCat settings:

| Variable | Default | Description |
|---|---|---|
| LONGCAT_PASSPORT_TOKEN | - | A single LongCat authentication token |
| LONGCAT_TOKENS_FILE | - | Path to a file containing multiple LongCat tokens |

💡 See the .env.example file for the full configuration.
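The project itself manages configuration with Pydantic Settings. Purely to illustrate how the defaults in the tables apply, here is a stdlib sketch that reads the same variable names. The Settings class, load_settings, and the set of accepted truthy spellings are assumptions and may differ from how the real service parses values.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


def _flag(env: Mapping[str, str], name: str, default: bool) -> bool:
    """Interpret common truthy spellings (assumed); use the default when unset."""
    raw = env.get(name)
    if raw is None:
        return default
    return raw.strip().lower() in {"1", "true", "yes", "on"}


@dataclass(frozen=True)
class Settings:
    auth_token: str
    listen_port: int
    debug_logging: bool
    anonymous_mode: bool
    tool_support: bool
    skip_auth_token: bool
    scan_limit: int
    auth_tokens_file: str
    longcat_passport_token: Optional[str]
    longcat_tokens_file: Optional[str]


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read the documented variables, applying the defaults from the tables."""
    return Settings(
        auth_token=env.get("AUTH_TOKEN", "sk-your-api-key"),
        listen_port=int(env.get("LISTEN_PORT", "8080")),
        debug_logging=_flag(env, "DEBUG_LOGGING", True),
        anonymous_mode=_flag(env, "ANONYMOUS_MODE", True),
        tool_support=_flag(env, "TOOL_SUPPORT", True),
        skip_auth_token=_flag(env, "SKIP_AUTH_TOKEN", False),
        scan_limit=int(env.get("SCAN_LIMIT", "200000")),
        auth_tokens_file=env.get("AUTH_TOKENS_FILE", "tokens.txt"),
        # LongCat variables have no default; None means "not configured".
        longcat_passport_token=env.get("LONGCAT_PASSPORT_TOKEN"),
        longcat_tokens_file=env.get("LONGCAT_TOKENS_FILE"),
    )
```

Passing a plain dict instead of os.environ makes the function easy to exercise without touching the process environment.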

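# Z.AI authentication
AUTH_TOKENS_FILE=tokens.txt
ANONYMOUS_MODE=true

# LongCat authentication
LONGCAT_PASSPORT_TOKEN=your_passport_token
# Or use a file containing multiple tokens
LONGCAT_TOKENS_FILE=longcat_tokens.txt

# K2Think handles authentication automatically; no extra configuration is needed

Token pool features:

- Load balancing: rotates through multiple auth tokens in round-robin order to spread the request load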

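- Automatic failover: switches to the next available token when one fails
- Health monitoring: verifies the token type precisely using the role field returned by the Z.AI API
- Automatic recovery: failed tokens are retried automatically after a timeout
- Dynamic management: the token pool can be updated at runtime
- Smart deduplication: duplicate tokens are detected and removed automatically
- Type validation: only authenticated user tokens (role: "user") are accepted; anonymous tokens (role: "guest") are rejected
- Fallback: if authenticated mode fails, the service falls back to anonymous mode automatically; anonymous mode cannot fall back to authenticated mode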
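The round-robin, failover, timed-recovery, and deduplication behavior described above can be sketched as a small class. This is a hypothetical illustration, not the project's implementation: the 300-second recovery default, the one-token-per-line file format with # comments, and all names are assumptions, and the role-based ("user" vs "guest") validation is omitted because it requires calling the Z.AI API.

```python
import time
from typing import Callable, Dict, Iterable, List, Optional


def parse_token_file(text: str) -> List[str]:
    """One token per line; blank lines and '#' comments are skipped (assumed format)."""
    tokens = []
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            tokens.append(line)
    return tokens


class TokenPool:
    """Round-robin token pool with failover and timed recovery (hypothetical sketch)."""

    def __init__(self, tokens: Iterable[str], recovery_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._recovery = recovery_seconds
        self._clock = clock
        self._tokens: List[str] = []
        self._failed_at: Dict[str, float] = {}
        self._next = 0
        self.update(tokens)

    def update(self, tokens: Iterable[str]) -> None:
        """Replace the pool at runtime, dropping duplicates while keeping order."""
        self._tokens = list(dict.fromkeys(t.strip() for t in tokens if t.strip()))
        # Forget failure records for tokens that are no longer in the pool.
        self._failed_at = {t: ts for t, ts in self._failed_at.items() if t in self._tokens}
        self._next = 0

    def _available(self, token: str) -> bool:
        failed = self._failed_at.get(token)
        return failed is None or self._clock() - failed >= self._recovery

    def acquire(self) -> Optional[str]:
        """Return the next usable token, skipping ones still cooling down."""
        for _ in range(len(self._tokens)):
            token = self._tokens[self._next]
            self._next = (self._next + 1) % len(self._tokens)
            if self._available(token):
                self._failed_at.pop(token, None)  # cool-down elapsed: retry it
                return token
        return None  # every token is cooling down; a caller might fall back to anonymous mode

    def mark_failed(self, token: str) -> None:
        """Record a failure so the token is skipped until the recovery timeout passes."""
        if token in self._tokens:
            self._failed_at[token] = self._clock()
```

Injecting the clock keeps the recovery logic testable without sleeping; a real service would also need locking if several request handlers share one pool.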

The token pool management endpoints offer only basic functionality so far and are not yet complete:

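# Check the token pool status
curl http://localhost:8080/v1/token-pool/status

# Run a manual health check
curl -X POST http://localhost:8080/v1/token-pool/health-check

# Update the token pool dynamically
curl -X POST http://localhost:8080/v1/token-pool/update \
  -H "Content-Type: application/json" \
  -d '["new_token1", "new_token2"]'

- Intelligent customer service: integrate with existing support platforms to provide 24/7 automated Q&A
- Content generation: automatically produce articles, summaries, translations, and more
- Coding assistant: code completion, explanations, and optimization suggestions
- External API integration: connect to weather, search, database, and other external services
- Automated workflows: build complex multi-step automation tasks
- Decision support: analysis and decisions based on real-time data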

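Q: How do I get an AUTH_TOKEN?

A: AUTH_TOKEN is an API key you define yourself in the environment variables; the client and the server must use the same value.

Q: What should I do if startup reports "service already running"?

A: This message comes from the single-instance check, which prevents duplicate launches. To resolve it:

- Check whether an instance is already running: ps aux | grep z-ai2api-server
- Stop the existing instance before starting a new one
- If you are sure no instance is running, delete the PID file: rm z-ai2api-server.pid
- Set a custom service name with the SERVICE_NAME environment variable to avoid conflicts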
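The single-instance check relies on the process name and a PID file such as z-ai2api-server.pid. A minimal sketch of the PID-file half, assuming a POSIX system, could look like the following; the function names are hypothetical. On POSIX, signal 0 only probes whether a process exists, whereas on Windows os.kill does not act as a harmless probe, so this code is not portable. Because PIDs can be reused, a PID check alone can report false positives, which is one reason to also compare the process name.

```python
import os
from pathlib import Path


def is_instance_running(pid_file: Path) -> bool:
    """Return True if pid_file names a live process (POSIX only, hypothetical helper)."""
    try:
        pid = int(pid_file.read_text().strip())
    except (FileNotFoundError, ValueError):
        return False  # no PID file, or unparsable contents: treat as not running
    if pid <= 0:
        return False  # 0 and negative values address process groups, not a single process
    try:
        os.kill(pid, 0)  # signal 0 checks that the process exists without signalling it
    except ProcessLookupError:
        return False  # stale PID file left behind by a crashed instance
    except PermissionError:
        return True   # the process exists but belongs to another user
    return True


def write_pid_file(pid_file: Path) -> None:
    """Record the current process ID so later launches can detect this instance."""
    pid_file.write_text(str(os.getpid()))
```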

Q: How do I use this service with Claude Code?

A: Place the zai.js CCR plugin in the ./.claude-code-router/plugins directory, point ./.claude-code-router/config.json at this service, and authenticate with your AUTH_TOKEN. The plugin path in the example below is a Windows path; adjust it to your own home directory.

Example configuration:

{
  "LOG": false,
  "LOG_LEVEL": "debug",
  "CLAUDE_PATH": "",
  "HOST": "127.0.0.1",
  "PORT": 3456,
  "APIKEY": "",
  "API_TIMEOUT_MS": "600000",
  "PROXY_URL": "",
  "transformers": [
    {
      "name": "zai",
      "path": "C:\\Users\\Administrator\\.claude-code-router\\plugins\\zai.js",
      "options": {}
    }
  ],
  "Providers": [
    {
      "name": "GLM",
      "api_base_url": "http://127.0.0.1:8080/v1/chat/completions",
      "api_key": "sk-your-api-key",
      "models": ["GLM-4.5", "GLM-4.5-Air"],
      "transformers": {
        "use": ["zai"]
      }
    }
  ],
  "StatusLine": {
    "enabled": false,
    "currentStyle": "default",
    "default": {
      "modules": []
    },
    "powerline": {
      "modules": []
    }
  },
  "Router": {
    "default": "GLM,GLM-4.5",
    "background": "GLM,GLM-4.5",
    "think": "GLM,GLM-4.5",
    "longContext": "GLM,GLM-4.5",
    "longContextThreshold": 60000,
    "webSearch": "GLM,GLM-4.5",
    "image": "GLM,GLM-4.5"
  },
  "CUSTOM_ROUTER_PATH": ""
}

Q: What is anonymous mode?

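A: Anonymous mode uses temporary tokens so that conversation history is not shared, which protects your privacy.

Q: How do I customize the configuration?

A: Use environment variables; a .env file is recommended.

Q: How do I configure LongCat authentication?

A: There are two ways to configure LongCat authentication:

- Single token: set the LONGCAT_PASSPORT_TOKEN environment variable
- Multiple tokens: create a token file and set the LONGCAT_TOKENS_FILE environment variable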

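To use the full multimodal feature set, you need an official Z.ai API token:

- Open the Z.ai chat interface and log in to your account
- Press F12 to open the developer tools
- Switch to "Application" -> "Local Storage" -> "Cookie" and find the entry named token in the list
- Copy the token value and set it as an environment variable; alternatively, use an API key created under your official personal account

❗ Important: tokens obtained this way may expire. Multimodal models require an official, non-anonymous Z.ai API token; anonymous tokens do not support media processing.

You need a LongCat API token to use this service normally (the official site allows only one anonymous conversation):

- Open the LongCat website and log in with your Meituan account
- Press F12 to open the developer tools
- Switch to "Application" -> "Local Storage" -> "Cookie" and find the entry named passport_token_key in the list
- Copy the passport_token_key value and set it as an environment variable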

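| Component | Technology | Version | Notes |
|---|---|---|---|
| Web framework | FastAPI | 0.116.1 | High-performance async web framework with automatic API documentation |
| ASGI server | Granian | 2.5.2 | Rust-based high-performance ASGI server with hot reload |
| HTTP client | HTTPX / Requests | 0.27.0 / 2.32.5 | Async/sync HTTP libraries for upstream API calls |
| Data validation | Pydantic | 2.11.7 | Type-safe data validation and serialization |
| Configuration | Pydantic Settings | 2.10.1 | Pydantic-based configuration management |
| Logging | Loguru | 0.7.3 | High-performance structured logging library |
| User agent | Fake UserAgent | 2.2.0 | Dynamic user-agent generation |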

┌──────────────┐     ┌─────────────────────────────────────┐     ┌─────────────────┐
│    OpenAI    │     │           FastAPI Server            │     │    Z.AI API     │
│    Client    │────▶│                                     │────▶│ ┌─────────────┐ │
└──────────────┘     │ ┌─────────────────────────────────┐ │     │ │0727-360B-API│ │
┌──────────────┐     │ │         Provider Router         │ │     │ └─────────────┘ │
│ Claude Code  │     │ │ ┌─────────┬─────────┬─────────┐ │ │     │ ┌─────────────┐ │
│    Router    │────▶│ │ │Z.AI     │K2Think  │LongCat  │ │ │     │ │0727-106B-API│ │
└──────────────┘     │ │ │Provider │Provider │Provider │ │ │     │ └─────────────┘ │
                     │ │ └─────────┴─────────┴─────────┘ │ │     └─────────────────┘
                     │ └─────────────────────────────────┘ │     ┌─────────────────┐
                     │ ┌─────────────────────────────────┐ │────▶│   K2Think API   │
                     │ │ /v1/chat/completions            │ │     └─────────────────┘
                     │ │ /v1/models                      │ │     ┌─────────────────┐
                     │ │ Enhanced Tools                  │ │────▶│   LongCat API   │
                     │ └─────────────────────────────────┘ │     └─────────────────┘
                     └─────────────────────────────────────┘
                            OpenAI Compatible API

If you like this project, please give it a star ⭐

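We welcome contributions of all kinds! Please make sure your code follows PEP 8 and update the relevant documentation.

This project is licensed under the MIT License; see the LICENSE file for details.

- This project is not affiliated with Z.AI, K2Think, LongCat, or any other AI provider
- Make sure you comply with each provider's terms of service before use
- Do not use it for commercial purposes or in ways that violate the terms of use
- This project is for learning and research purposes only
- Users assume all risks of use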

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for z.ai2api_python

Similar Open Source Tools

z.ai2api_python

Z.AI2API Python is a lightweight OpenAI API proxy service that integrates seamlessly with existing applications. It supports the full functionality of GLM-4.5 series models and features high-performance streaming responses, enhanced tool invocation, support for thinking mode, integration with search models, Docker deployment, session isolation for privacy protection, flexible configuration via environment variables, and intelligent upstream model routing.

Streamer-Sales

Streamer-Sales is a large model for live streamers that can explain products based on their characteristics and inspire users to make purchases. It is designed to enhance sales efficiency and user experience, whether for online live sales or offline store promotions. The model can deeply understand product features and create tailored explanations in vivid and precise language, sparking user's desire to purchase. It aims to revolutionize the shopping experience by providing detailed and unique product descriptions to engage users effectively.

app-builder

AppBuilder SDK is a one-stop development tool for AI native applications, providing basic cloud resources, AI capability engine, Qianfan large model, and related capability components to improve the development efficiency of AI native applications.

Feishu-MCP

Feishu-MCP is a server that provides access, editing, and structured processing capabilities for Feishu documents for Cursor, Windsurf, Cline, and other AI-driven coding tools, based on the Model Context Protocol server. This project enables AI coding tools to directly access and understand the structured content of Feishu documents, significantly improving the intelligence and efficiency of document processing. It covers the real usage process of Feishu documents, allowing efficient utilization of document resources, including folder directory retrieval, content retrieval and understanding, smart creation and editing, efficient search and retrieval, and more. It enhances the intelligent access, editing, and searching of Feishu documents in daily usage, improving content processing efficiency and experience.

GalTransl

GalTransl is an automated translation tool for Galgames that combines minor innovations in several basic functions with deep utilization of GPT prompt engineering. It is used to create embedded translation patches. The core of GalTransl is a set of automated translation scripts that solve most known issues when using ChatGPT for Galgame translation and improve overall translation quality. It also integrates with other projects to streamline the patch creation process, reducing the learning curve to some extent. Interested users can more easily build machine-translated patches of a certain quality through this project and may try to efficiently build higher-quality localization patches based on this framework.

gin-vue-admin

Gin-vue-admin is a full-stack development platform based on Vue and Gin, integrating features like JWT authentication, dynamic routing, dynamic menus, Casbin authorization, form generator, code generator, etc. It provides various example files to help users focus more on business development. The project offers detailed documentation, video tutorials for setup and deployment, and a community for support and contributions. Users need a certain level of knowledge in Golang and Vue to work with this project. It is recommended to follow the Apache2.0 license if using the project for commercial purposes.

AI-Guide-and-Demos-zh_CN

This is a Chinese AI/LLM introductory project that aims to help students overcome the initial difficulties of accessing foreign large models' APIs. The project uses the OpenAI SDK to provide a more compatible learning experience. It covers topics such as AI video summarization, LLM fine-tuning, and AI image generation. The project also offers a CodePlayground for easy setup and one-line script execution to experience the charm of AI. It includes guides on API usage, LLM configuration, building AI applications with Gradio, customizing prompts for better model performance, understanding LoRA, and more.

AIClient-2-API

AIClient-2-API is a versatile and lightweight API proxy designed for developers, providing ample free API request quotas and comprehensive support for various mainstream large models like Gemini, Qwen Code, Claude, etc. It converts multiple backend APIs into standard OpenAI format interfaces through a Node.js HTTP server. The project adopts a modern modular architecture, supports strategy and adapter patterns, comes with complete test coverage and health check mechanisms, and is ready to use after 'npm install'. By easily switching model service providers in the configuration file, any OpenAI-compatible client or application can seamlessly access different large model capabilities through the same API address, eliminating the hassle of maintaining multiple sets of configurations for different services and dealing with incompatible interfaces.

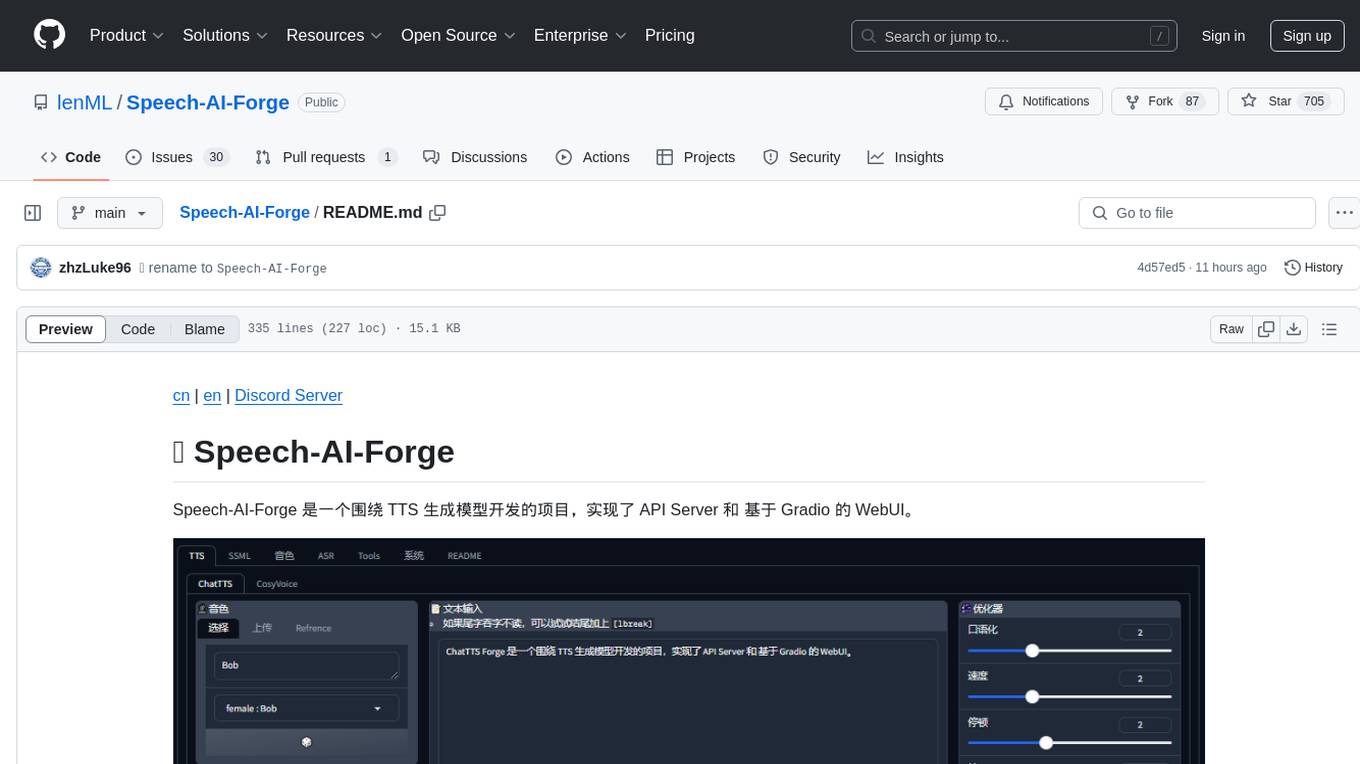

Speech-AI-Forge

Speech-AI-Forge is a project developed around TTS generation models, implementing an API Server and a WebUI based on Gradio. The project offers various ways to experience and deploy Speech-AI-Forge, including online experience on HuggingFace Spaces, one-click launch on Colab, container deployment with Docker, and local deployment. The WebUI features include TTS model functionality, speaker switch for changing voices, style control, long text support with automatic text segmentation, refiner for ChatTTS native text refinement, various tools for voice control and enhancement, support for multiple TTS models, SSML synthesis control, podcast creation tools, voice creation, voice testing, ASR tools, and post-processing tools. The API Server can be launched separately for higher API throughput. The project roadmap includes support for various TTS models, ASR models, voice clone models, and enhancer models. Model downloads can be manually initiated using provided scripts. The project aims to provide inference services and may include training-related functionalities in the future.

chatgpt-mirai-qq-bot

Kirara AI is a chatbot that supports mainstream language models and chat platforms. It features various functionalities such as image sending, keyword-triggered replies, multi-account support, content moderation, personality settings, and support for platforms like QQ, Telegram, Discord, and WeChat. It also offers HTTP server capabilities, plugin support, conditional triggers, admin commands, drawing models, voice replies, multi-turn conversations, cross-platform message sending, and custom workflows. The tool can be accessed via HTTP API for integration with other platforms.

kirara-ai

Kirara AI is a chatbot that supports mainstream large language models and chat platforms. It provides features such as image sending, keyword-triggered replies, multi-account support, personality settings, and support for various chat platforms like QQ, Telegram, Discord, and WeChat. The tool also supports HTTP server for Web API, popular large models like OpenAI and DeepSeek, plugin mechanism, conditional triggers, admin commands, drawing models, voice replies, multi-turn conversations, cross-platform message sending, custom workflows, web management interface, and built-in Frpc intranet penetration.

Awesome-ChatTTS

Awesome-ChatTTS is an official recommended guide for ChatTTS beginners, compiling common questions and related resources. It provides a comprehensive overview of the project, including official introduction, quick experience options, popular branches, parameter explanations, voice seed details, installation guides, FAQs, and error troubleshooting. The repository also includes video tutorials, discussion community links, and project trends analysis. Users can explore various branches for different functionalities and enhancements related to ChatTTS.

TelegramForwarder

Telegram Forwarder is a message forwarding tool that allows you to forward messages from specified chats to other chats without the need for a bot to enter the corresponding channels/groups to listen. It can be used for information stream integration filtering, message reminders, content archiving, and more. The tool supports multiple sources forwarding, keyword filtering in whitelist and blacklist modes, regular expression matching, message content modification, AI processing using major vendors' AI interfaces, media file filtering, and synchronization with a universal forum blocking plugin to achieve three-end blocking.

DeepAI

DeepAI is a proxy server that enhances the interaction experience of large language models (LLMs) by integrating the 'thinking chain' process. It acts as an intermediary layer, receiving standard OpenAI API compatible requests, using independent 'thinking services' to generate reasoning processes, and then forwarding the enhanced requests to the LLM backend of your choice. This ensures that responses are not only generated by the LLM but also based on pre-inference analysis, resulting in more insightful and coherent answers. DeepAI supports seamless integration with applications designed for the OpenAI API, providing endpoints for '/v1/chat/completions' and '/v1/models', making it easy to integrate into existing applications. It offers features such as reasoning chain enhancement, flexible backend support, API key routing, weighted random selection, proxy support, comprehensive logging, and graceful shutdown.

AI-CloudOps

AI+CloudOps is a cloud-native operations management platform designed for enterprises. It aims to integrate artificial intelligence technology with cloud-native practices to significantly improve the efficiency and level of operations work. The platform offers features such as AIOps for monitoring data analysis and alerts, multi-dimensional permission management, visual CMDB for resource management, efficient ticketing system, deep integration with Prometheus for real-time monitoring, and unified Kubernetes management for cluster optimization.

ChuanhuChatGPT

Chuanhu Chat is a user-friendly web graphical interface that provides various additional features for ChatGPT and other language models. It supports GPT-4, file-based question answering, local deployment of language models, online search, agent assistant, and fine-tuning. The tool offers a range of functionalities including auto-solving questions, online searching with network support, knowledge base for quick reading, local deployment of language models, GPT 3.5 fine-tuning, and custom model integration. It also features system prompts for effective role-playing, basic conversation capabilities with options to regenerate or delete dialogues, conversation history management with auto-saving and search functionalities, and a visually appealing user experience with themes, dark mode, LaTeX rendering, and PWA application support.

For similar tasks

holoinsight

HoloInsight is a cloud-native observability platform that provides low-cost and high-performance monitoring services for cloud-native applications. It offers deep insights through real-time log analysis and AI integration. The platform is designed to help users gain a comprehensive understanding of their applications' performance and behavior in the cloud environment. HoloInsight is easy to deploy using Docker and Kubernetes, making it a versatile tool for monitoring and optimizing cloud-native applications. With a focus on scalability and efficiency, HoloInsight is suitable for organizations looking to enhance their observability and monitoring capabilities in the cloud.

metaso-free-api

Metaso AI Free service supports high-speed streaming output, secret tower AI super network search (full network or academic as well as concise, in-depth, research three modes), zero-configuration deployment, multi-token support. Fully compatible with ChatGPT interface. It also has seven other free APIs available for use. The tool provides various deployment options such as Docker, Docker-compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token live checks. Note: Reverse API is unstable, it is recommended to use the official Metaso AI website to avoid the risk of banning. This project is for research and learning purposes only, not for commercial use.

tribe

Tribe AI is a low code tool designed to rapidly build and coordinate multi-agent teams. It leverages the langgraph framework to customize and coordinate teams of agents, allowing tasks to be split among agents with different strengths for faster and better problem-solving. The tool supports persistent conversations, observability, tool calling, human-in-the-loop functionality, easy deployment with Docker, and multi-tenancy for managing multiple users and teams.

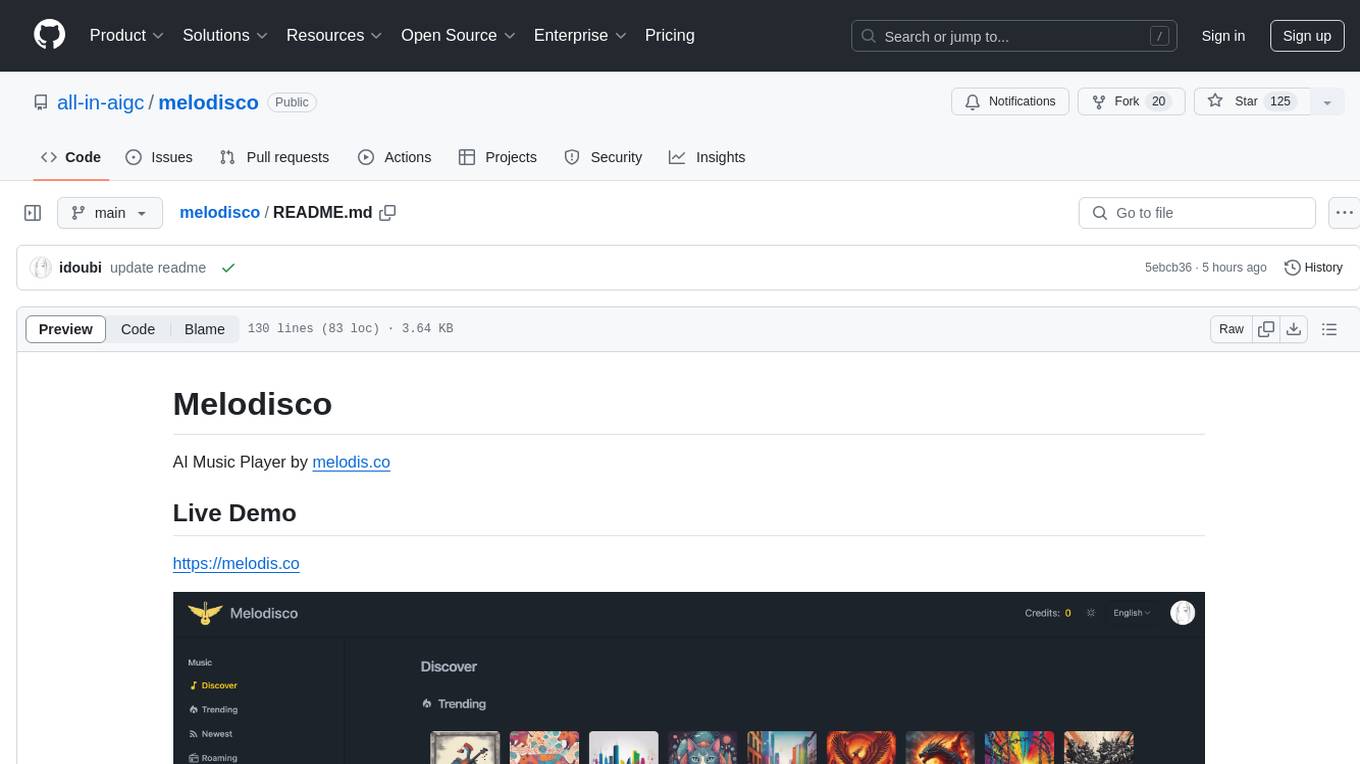

melodisco

Melodisco is an AI music player that allows users to listen to music and manage playlists. It provides a user-friendly interface for music playback and organization. Users can deploy Melodisco with Vercel or Docker for easy setup. Local development instructions are provided for setting up the project environment. The project credits various tools and libraries used in its development, such as Next.js, Tailwind CSS, and Stripe. Melodisco is a versatile tool for music enthusiasts looking for an AI-powered music player with features like authentication, payment integration, and multi-language support.

KB-Builder

KB Builder is an open-source knowledge base generation system based on the LLM large language model. It utilizes the RAG (Retrieval-Augmented Generation) data generation enhancement method to provide users with the ability to enhance knowledge generation and quickly build knowledge bases based on RAG. It aims to be the central hub for knowledge construction in enterprises, offering platform-based intelligent dialogue services and document knowledge base management functionality. Users can upload docx, pdf, txt, and md format documents and generate high-quality knowledge base question-answer pairs by invoking large models through the 'Parse Document' feature.

PDFMathTranslate

PDFMathTranslate is a tool designed for translating scientific papers and conducting bilingual comparisons. It preserves formulas, charts, table of contents, and annotations. The tool supports multiple languages and diverse translation services. It provides a command-line tool, interactive user interface, and Docker deployment. Users can try the application through online demos. The tool offers various installation methods including command-line, portable, graphic user interface, and Docker. Advanced options allow users to customize translation settings. Additionally, the tool supports secondary development through APIs for Python and HTTP. Future plans include parsing layout with DocLayNet based models, fixing page rotation and format issues, supporting non-PDF/A files, and integrating plugins for Zotero and Obsidian.

grps_trtllm

The grps-trtllm repository is a C++ implementation of a high-performance OpenAI LLM service, combining GRPS and TensorRT-LLM. It supports functionalities like Chat, Ai-agent, and Multi-modal. The repository offers advantages over triton-trtllm, including a complete LLM service implemented in pure C++, integrated tokenizer supporting huggingface and sentencepiece, custom HTTP functionality for OpenAI interface, support for different LLM prompt styles and result parsing styles, integration with tensorrt backend and opencv library for multi-modal LLM, and stable performance improvement compared to triton-trtllm.

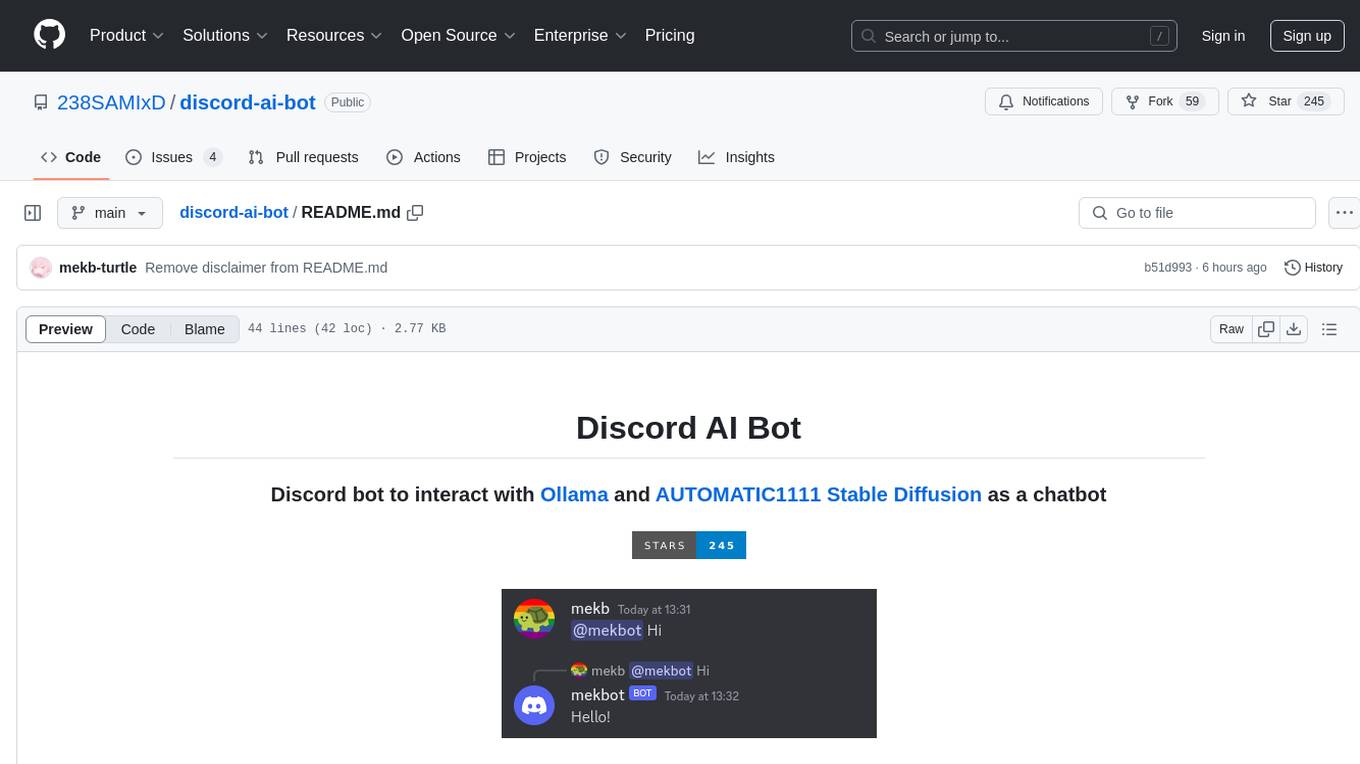

discord-ai-bot

Discord AI Bot is a chatbot tool designed to interact with Ollama and AUTOMATIC1111 Stable Diffusion on Discord. The bot allows users to set up and configure a Discord bot to communicate with the mentioned AI models. Users can follow step-by-step instructions to install Node.js, Ollama, and the required dependencies, create a Discord bot, and interact with the bot by mentioning it in messages. Additionally, the tool provides set-up instructions for Docker users to easily deploy the bot using Docker containers. Overall, Discord AI Bot simplifies the process of integrating AI chatbots into Discord servers for interactive communication.

For similar jobs

Awesome-LLM-RAG-Application

Awesome-LLM-RAG-Application is a repository that provides resources and information about applications based on Large Language Models (LLM) with Retrieval-Augmented Generation (RAG) pattern. It includes a survey paper, GitHub repo, and guides on advanced RAG techniques. The repository covers various aspects of RAG, including academic papers, evaluation benchmarks, downstream tasks, tools, and technologies. It also explores different frameworks, preprocessing tools, routing mechanisms, evaluation frameworks, embeddings, security guardrails, prompting tools, SQL enhancements, LLM deployment, observability tools, and more. The repository aims to offer comprehensive knowledge on RAG for readers interested in exploring and implementing LLM-based systems and products.

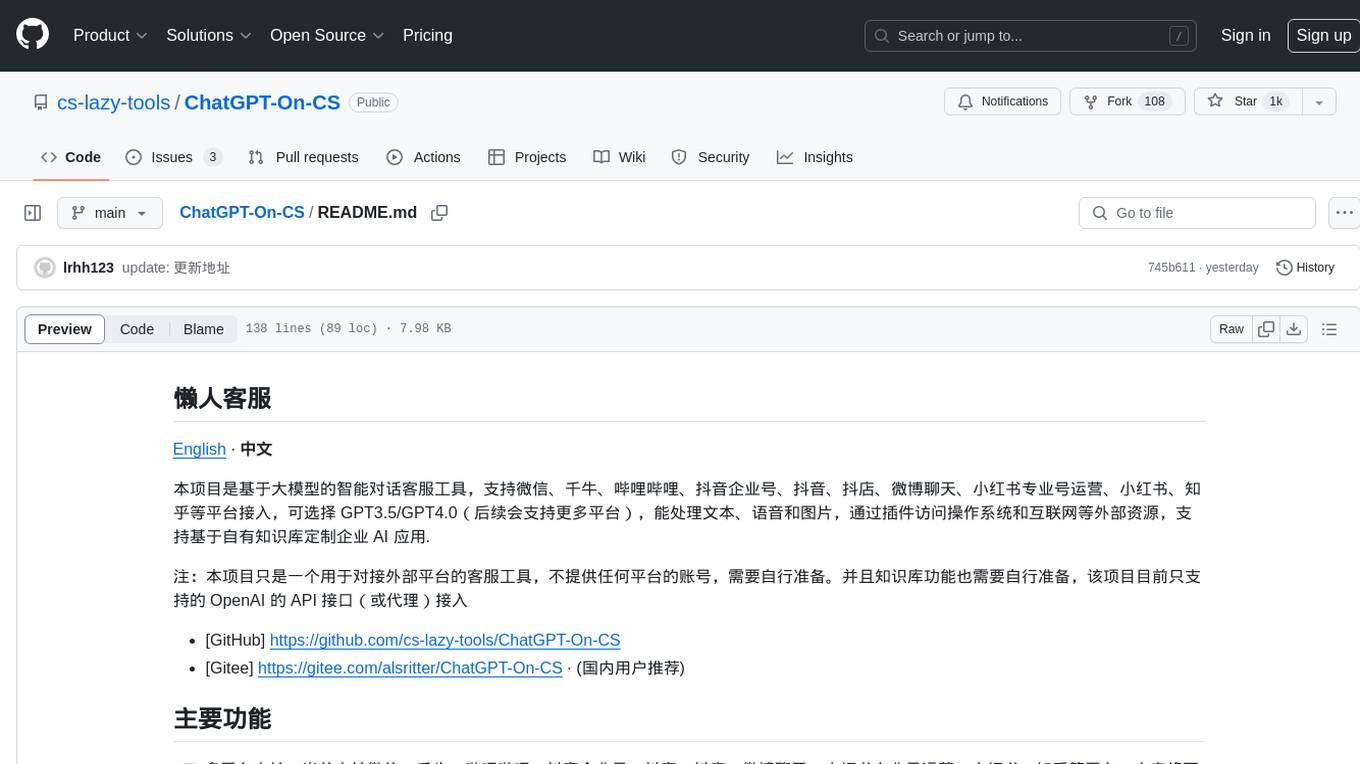

ChatGPT-On-CS

ChatGPT-On-CS is an intelligent chatbot tool based on large models, supporting various platforms like WeChat, Taobao, Bilibili, Douyin, Weibo, and more. It can handle text, voice, and image inputs, access external resources through plugins, and customize enterprise AI applications based on proprietary knowledge bases. Users can set custom replies, utilize ChatGPT interface for intelligent responses, send images and binary files, and create personalized chatbots using knowledge base files. The tool also features platform-specific plugin systems for accessing external resources and supports enterprise AI applications customization.

call-gpt

Call GPT is a voice application that utilizes Deepgram for Speech to Text, elevenlabs for Text to Speech, and OpenAI for GPT prompt completion. It allows users to chat with ChatGPT on the phone, providing better transcription, understanding, and speaking capabilities than traditional IVR systems. The app returns responses with low latency, allows user interruptions, maintains chat history, and enables GPT to call external tools. It coordinates data flow between Deepgram, OpenAI, ElevenLabs, and Twilio Media Streams, enhancing voice interactions.

awesome-LLM-resourses

A comprehensive repository of resources for Chinese large language models (LLMs), including data processing tools, fine-tuning frameworks, inference libraries, evaluation platforms, RAG engines, agent frameworks, books, courses, tutorials, and tips. The repository covers a wide range of tools and resources for working with LLMs, from data labeling and processing to model fine-tuning, inference, evaluation, and application development. It also includes resources for learning about LLMs through books, courses, and tutorials, as well as insights and strategies from building with LLMs.

tappas

Hailo TAPPAS is a set of full application examples that implement pipeline elements and pre-trained AI tasks. It demonstrates Hailo's system integration scenarios on predefined systems, aiming to accelerate time to market, simplify integration with Hailo's runtime SW stack, and provide a starting point for customers to fine-tune their applications. The tool supports both Hailo-15 and Hailo-8, offering various example applications optimized for different common hosts. TAPPAS includes pipelines for single network, two network, and multi-stream processing, as well as high-resolution processing via tiling. It also provides example use case pipelines like License Plate Recognition and Multi-Person Multi-Camera Tracking. The tool is regularly updated with new features, bug fixes, and platform support.

cloudflare-rag

This repository provides a fullstack example of building a Retrieval Augmented Generation (RAG) app with Cloudflare. It utilizes Cloudflare Workers, Pages, D1, KV, R2, AI Gateway, and Workers AI. The app features streaming interactions to the UI, hybrid RAG with Full-Text Search and Vector Search, switchable providers using AI Gateway, per-IP rate limiting with Cloudflare's KV, OCR within Cloudflare Worker, and Smart Placement for workload optimization. The development setup requires Node, pnpm, and wrangler CLI, along with setting up necessary primitives and API keys. Deployment involves setting up secrets and deploying the app to Cloudflare Pages. The project implements a Hybrid Search RAG approach combining Full Text Search against D1 and Hybrid Search with embeddings against Vectorize to enhance context for the LLM.

pixeltable

Pixeltable is a Python library designed for ML Engineers and Data Scientists to focus on exploration, modeling, and app development without the need to handle data plumbing. It provides a declarative interface for working with text, images, embeddings, and video, enabling users to store, transform, index, and iterate on data within a single table interface. Pixeltable is persistent, acting as a database unlike in-memory Python libraries such as Pandas. It offers features like data storage and versioning, combined data and model lineage, indexing, orchestration of multimodal workloads, incremental updates, and automatic production-ready code generation. The tool emphasizes transparency, reproducibility, cost-saving through incremental data changes, and seamless integration with existing Python code and libraries.

wave-apps

Wave Apps is a directory of sample applications built on H2O Wave, allowing users to build AI apps faster. The apps cover various use cases such as explainable hotel ratings, human-in-the-loop credit risk assessment, mitigating churn risk, online shopping recommendations, and sales forecasting EDA. Users can download, modify, and integrate these sample apps into their own projects to learn about app development and AI model deployment.