new-api

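An AI model aggregation, management, and distribution system. It converts multiple large models to a unified calling format, supports the OpenAI, Claude, and Gemini formats, and can be used by individuals or inside an enterprise to manage and distribute channels. The project is a secondary development based on One API. 🍥 The next-generation LLM gateway and AI asset management system supports multiple languages.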

Stars: 10878

New API is a next-generation large model gateway and AI asset management system. Its features include a new UI, multi-language support, online recharge, usage-quota lookup by key, compatibility with the original One API database, per-request model billing, weighted random channel selection, a data dashboard, token grouping and model restrictions, additional authorization login methods, Rerank models, the OpenAI Realtime API, the Claude Messages format, reasoning effort settings through model-name suffixes, thinking-to-content conversion, per-user model rate limiting, request format conversion, and cache billing. It also supports gpts, Midjourney-Proxy, the Suno API, custom channels, and Dify.

README:


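[!NOTE]

This is an open-source project, developed further on the basis of One API.

[!IMPORTANT]

- This project is for personal learning only. Stability is not guaranteed and no technical support is provided.

- Users must comply with OpenAI's terms of use and with applicable laws and regulations, and must not use the project for illegal purposes.

- In accordance with the Interim Measures for the Management of Generative Artificial Intelligence Services (《生成式人工智能服务管理暂行办法》), do not provide any unregistered generative AI service to the public in China.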


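For detailed documentation, visit the official Wiki: https://docs.newapi.pro/

New API provides a rich set of features; see the feature description for details:

- 🎨 Brand-new UI

- 🌍 Multi-language support

- 💰 Online recharge (EPay, 易支付)

- 🔍 Usage-quota lookup by key (together with neko-api-key-tool)

- 🔄 Compatible with the original One API database

- 💵 Per-request model billing

- ⚖️ Weighted random channel selection

- 📈 Data dashboard (console)

- 🔒 Token grouping and model restrictions

- 🤖 More authorization login methods (LinuxDO, Telegram, OIDC)

- 🔄 Rerank models (Cohere and Jina); see the API documentation

- ⚡ OpenAI Realtime API (including Azure channels); see the API documentation

- ⚡ Claude Messages format; see the API documentation

- Chat interface reachable through the /chat2link route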

- 🧠 Reasoning effort can be set through a model-name suffix:

  - OpenAI o-series models

    - The suffix -high sets high reasoning effort (for example, o3-mini-high)

    - The suffix -medium sets medium reasoning effort (for example, o3-mini-medium)

    - The suffix -low sets low reasoning effort (for example, o3-mini-low)

  - Claude thinking models

    - The suffix -thinking enables thinking mode (for example, claude-3-7-sonnet-20250219-thinking)

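- 🔄 Thinking-to-content conversion

- 🔄 Per-user model rate limiting

- 🔄 Request format conversion, supporting the following three conversions:

  - OpenAI Chat Completions => Claude Messages

  - Claude Messages => OpenAI Chat Completions (can be used to let Claude Code call third-party models)

  - OpenAI Chat Completions => Gemini Chat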

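- 💰 Cache billing: when enabled, cache hits are billed at a configured ratio:

  - Set the prompt cache ratio option under System Settings > Operation Settings

  - Set the prompt cache ratio on the channel, in the range 0-1; for example, 0.5 means cache hits are billed at 50%

  - Supported channels:

    - [x] OpenAI

    - [x] Azure

    - [x] DeepSeek

    - [x] Claude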
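The prompt cache ratio from the feature list can be illustrated with a little arithmetic. The sketch below is not the project's billing code; it assumes that cached tokens are a subset of the prompt tokens, that uncached tokens are billed in full, and that only cached tokens are scaled by the ratio. The function name `billable_prompt_tokens` is hypothetical.

```python
def billable_prompt_tokens(prompt_tokens: int, cached_tokens: int, cache_ratio: float) -> float:
    """Return the number of prompt tokens to bill after applying the cache ratio.

    Assumption (not taken from the project source): cached tokens are part of
    prompt_tokens and are billed at cache_ratio times the normal rate.
    """
    if not 0.0 <= cache_ratio <= 1.0:
        raise ValueError("cache_ratio must be in the range 0-1")
    if not 0 <= cached_tokens <= prompt_tokens:
        raise ValueError("cached_tokens must be between 0 and prompt_tokens")
    uncached_tokens = prompt_tokens - cached_tokens
    # Uncached tokens count in full; cached tokens count at the configured ratio.
    return uncached_tokens + cached_tokens * cache_ratio


if __name__ == "__main__":
    # 1000 prompt tokens, 400 of them served from cache, ratio 0.5.
    print(billable_prompt_tokens(1000, 400, 0.5))
```

With a ratio of 0.5, the 400 cached tokens count as 200, so the request is billed as 800 prompt tokens instead of 1000.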

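This version supports a variety of models; see API documentation - Relay Interfaces for details:

- Third-party model gpts (gpt-4-gizmo-*)

- Third-party channel Midjourney-Proxy(Plus) interface; see the API documentation

- Third-party channel Suno API interface; see the API documentation

- Custom channels, which accept a full call URL

- Rerank models (Cohere and Jina); see the API documentation

- Claude Messages format; see the API documentation

- Dify, currently chatflow only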
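Because the reasoning-effort suffixes live in the model name, a client can select them through the `model` field alone. The sketch below shows one way to split such a suffix and to assemble an OpenAI-style chat request. It is illustrative only: the helper names are hypothetical, and the `/v1/chat/completions` path and `Bearer` authorization header are assumptions based on the OpenAI-compatible format, not details stated in this README.

```python
import json
import urllib.request
from typing import Optional, Tuple

# Effort suffixes listed in this README for OpenAI o-series models.
EFFORT_SUFFIXES = ("-high", "-medium", "-low")


def split_reasoning_suffix(model: str) -> Tuple[str, Optional[str]]:
    """Split 'o3-mini-high' into ('o3-mini', 'high'); return (model, None) if no suffix matches."""
    for suffix in EFFORT_SUFFIXES:
        if model.endswith(suffix):
            return model[: -len(suffix)], suffix[1:]
    return model, None


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request in the OpenAI format."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",  # assumed OpenAI-compatible path
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed token format
        },
        method="POST",
    )


if __name__ == "__main__":
    print(split_reasoning_suffix("o3-mini-high"))
    request = build_chat_request("http://localhost:3000", "sk-placeholder", "o3-mini-high", "Hello")
    print(request.full_url)
```

The request is only constructed here; passing it to `urllib.request.urlopen` would send it to a running instance, for example one started on port 3000.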

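For detailed configuration, see Installation Guide - Environment Variables:

- GENERATE_DEFAULT_TOKEN: whether to generate an initial token for newly registered users, default false

- STREAMING_TIMEOUT: streaming response timeout, default 300 seconds

- DIFY_DEBUG: whether the Dify channel outputs workflow and node information, default true

- FORCE_STREAM_OPTION: whether to override the client's stream_options parameter, default true

- GET_MEDIA_TOKEN: whether to count image tokens, default true

- GET_MEDIA_TOKEN_NOT_STREAM: whether to count image tokens for non-streaming requests, default true

- UPDATE_TASK: whether to update asynchronous tasks (Midjourney, Suno), default true

- COHERE_SAFETY_SETTING: Cohere model safety setting, one of NONE, CONTEXTUAL, STRICT, default NONE

- GEMINI_VISION_MAX_IMAGE_NUM: maximum number of images for Gemini models, default 16

- MAX_FILE_DOWNLOAD_MB: maximum file download size in MB, default 20

- CRYPTO_SECRET: encryption key used to encrypt database content

- AZURE_DEFAULT_API_VERSION: default API version for Azure channels, default 2025-04-01-preview

- NOTIFICATION_LIMIT_DURATION_MINUTE: length of the notification limit window, default 10 minutes

- NOTIFY_LIMIT_COUNT: maximum number of user notifications within that window, default 2

- ERROR_LOG_ENABLED=true: whether to record and display error logs, default false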
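To make the defaults above concrete, here is a minimal sketch of reading a few of these variables with their documented fallbacks. It is not how New API itself loads configuration; `load_settings` is a hypothetical helper, and the `"true"`/`"false"` spelling of booleans is an assumption.

```python
import os
from typing import Any, Dict, Mapping

# Defaults copied from the list above; only a few variables are shown.
DEFAULTS: Dict[str, str] = {
    "GENERATE_DEFAULT_TOKEN": "false",
    "STREAMING_TIMEOUT": "300",
    "GEMINI_VISION_MAX_IMAGE_NUM": "16",
    "MAX_FILE_DOWNLOAD_MB": "20",
    "COHERE_SAFETY_SETTING": "NONE",
}

ALLOWED_COHERE_SAFETY = ("NONE", "CONTEXTUAL", "STRICT")


def load_settings(environ: Mapping[str, str] = os.environ) -> Dict[str, Any]:
    """Read the variables from `environ`, falling back to the documented defaults."""
    raw = {name: environ.get(name, default) for name, default in DEFAULTS.items()}
    if raw["COHERE_SAFETY_SETTING"] not in ALLOWED_COHERE_SAFETY:
        raise ValueError(f"COHERE_SAFETY_SETTING must be one of {ALLOWED_COHERE_SAFETY}")
    return {
        # Assumption: booleans are spelled "true"/"false", case-insensitively.
        "GENERATE_DEFAULT_TOKEN": raw["GENERATE_DEFAULT_TOKEN"].lower() == "true",
        "STREAMING_TIMEOUT": int(raw["STREAMING_TIMEOUT"]),  # seconds
        "GEMINI_VISION_MAX_IMAGE_NUM": int(raw["GEMINI_VISION_MAX_IMAGE_NUM"]),
        "MAX_FILE_DOWNLOAD_MB": int(raw["MAX_FILE_DOWNLOAD_MB"]),
        "COHERE_SAFETY_SETTING": raw["COHERE_SAFETY_SETTING"],
    }


if __name__ == "__main__":
    print(load_settings({"STREAMING_TIMEOUT": "60"}))
```

Passing a plain dictionary instead of `os.environ` makes it easy to check which values a given deployment would end up with.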

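For a detailed deployment guide, see Installation Guide - Deployment Methods:

[!TIP] Latest Docker image: calciumion/new-api:latest

- The SESSION_SECRET environment variable must be set; otherwise login state will be inconsistent across machines in a multi-machine deployment

- If Redis is shared, CRYPTO_SECRET must be set; otherwise Redis content cannot be read in a multi-machine deployment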
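The README does not say what form these secrets should take. One reasonable approach, shown here as an assumption rather than a project requirement, is to generate a random hex string once and reuse the same value on every machine. The helper name `new_secret` and the 32-byte length are illustrative choices.

```python
import secrets


def new_secret(num_bytes: int = 32) -> str:
    """Return a random hex string; 32 bytes yields 64 hex characters."""
    return secrets.token_hex(num_bytes)


if __name__ == "__main__":
    # Generate each value once, then set the same values on every node.
    print(f"SESSION_SECRET={new_secret()}")
    print(f"CRYPTO_SECRET={new_secret()}")
```

The printed lines can be copied into an environment file or passed to `docker run` with `-e`, in the same way as `TZ` in the deployment commands.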

- Local database (default): SQLite (Docker deployments must mount the /data directory)

- Remote database: MySQL >= 5.7.8, PgSQL >= 9.6

Install BaoTa Panel (version 9.2.0 or later), then find New-API in its app store and install it. See the illustrated tutorial.

# Download the project

git clone https://github.com/Calcium-Ion/new-api.git

cd new-api

# Edit docker-compose.yml as needed

# Start

docker-compose up -d

# Using SQLite

docker run --name new-api -d --restart always -p 3000:3000 -e TZ=Asia/Shanghai -v /home/ubuntu/data/new-api:/data calciumion/new-api:latest

# Using MySQL

docker run --name new-api -d --restart always -p 3000:3000 -e SQL_DSN="root:123456@tcp(localhost:3306)/oneapi" -e TZ=Asia/Shanghai -v /home/ubuntu/data/new-api:/data calciumion/new-api:latest

Channel retry is implemented; the retry count can be set under Settings -> Operation Settings -> General Settings. Enabling caching is recommended.

- REDIS_CONN_STRING: use Redis as the cache

- MEMORY_CACHE_ENABLED: enable the in-memory cache (no need to set this manually if Redis is configured)

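For detailed interface documentation, see the API documentation.

- One API: the original project

- Midjourney-Proxy: Midjourney interface support

- chatnio: next-generation one-stop AI solution for business and consumer users

- neko-api-key-tool: usage-quota lookup by key

Other projects based on New API:

- new-api-horizon: high-performance optimized version of New API

If you run into problems, see Help and Support.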


Similar Open Source Tools


new-api

New API is an open-source project based on One API with additional features and improvements. It offers a new UI interface, supports Midjourney-Proxy(Plus) interface, online recharge functionality, model-based charging, channel weight randomization, data dashboard, token-controlled models, Telegram authorization login, Suno API support, Rerank model integration, and various third-party models. Users can customize models, retry channels, and configure caching settings. The deployment can be done using Docker with SQLite or MySQL databases. The project provides documentation for Midjourney and Suno interfaces, and it is suitable for AI enthusiasts and developers looking to enhance AI capabilities.

HiveChat

HiveChat is an AI chat application designed for small and medium teams. It supports various models such as DeepSeek, OpenAI, Claude, and Gemini. A single administrator can configure the models once for the entire team to use. It supports features like email or Feishu login, LaTeX and Markdown rendering, DeepSeek mind map display, image understanding, AI agents, cloud data storage, and integration with multiple large model service providers. Users can engage in conversations by logging in, while administrators can configure AI service providers, manage users, and control account registration. The technology stack includes Next.js, Tailwindcss, Auth.js, PostgreSQL, Drizzle ORM, and Ant Design.

AMchat

AMchat is a large language model that integrates advanced math concepts, exercises, and solutions. The model is based on the InternLM2-Math-7B model and is specifically designed to answer advanced math problems. It provides a comprehensive dataset that combines Math and advanced math exercises and solutions. Users can download the model from ModelScope or OpenXLab, deploy it locally or using Docker, and even retrain it using XTuner for fine-tuning. The tool also supports LMDeploy for quantization, OpenCompass for evaluation, and various other features for model deployment and evaluation. The project contributors have provided detailed documentation and guides for users to utilize the tool effectively.

ChatPilot

ChatPilot is a chat agent tool that enables AgentChat conversations, supports Google search, URL conversation (RAG), and code interpreter functionality, replicates Kimi Chat (file, drag and drop; URL, send out), and supports OpenAI/Azure API. It is based on LangChain and implements ReAct and OpenAI Function Call for agent Q&A dialogue. The tool supports various automatic tools such as online search using Google Search API, URL parsing tool, Python code interpreter, and enhanced RAG file Q&A with query rewriting support. It also allows front-end and back-end service separation using Svelte and FastAPI, respectively. Additionally, it supports voice input/output, image generation, user management, permission control, and chat record import/export.

llm-jp-eval

LLM-jp-eval is a tool designed to automatically evaluate Japanese large language models across multiple datasets. It provides functionalities such as converting existing Japanese evaluation data to text generation task evaluation datasets, executing evaluations of large language models across multiple datasets, and generating instruction data (jaster) in the format of evaluation data prompts. Users can manage the evaluation settings through a config file and use Hydra to load them. The tool supports saving evaluation results and logs using wandb. Users can add new evaluation datasets by following specific steps and guidelines provided in the tool's documentation. It is important to note that using jaster for instruction tuning can lead to artificially high evaluation scores, so caution is advised when interpreting the results.

fit-framework

FIT Framework is a Java enterprise AI development framework that provides a multi-language function engine (FIT), a flow orchestration engine (WaterFlow), and a Java ecosystem alternative solution (FEL). It runs in native/Spring dual mode, supports plug-and-play and intelligent deployment, seamlessly unifying large models and business systems. FIT Core offers language-agnostic computation base with plugin hot-swapping and intelligent deployment. WaterFlow Engine breaks the dimensional barrier of BPM and reactive programming, enabling graphical orchestration and declarative API-driven logic composition. FEL revolutionizes LangChain for the Java ecosystem, encapsulating large models, knowledge bases, and toolchains to integrate AI capabilities into Java technology stack seamlessly. The framework emphasizes engineering practices with intelligent conventions to reduce boilerplate code and offers flexibility for deep customization in complex scenarios.

scabench

ScaBench is a comprehensive framework designed for evaluating security analysis tools and AI agents on real-world smart contract vulnerabilities. It provides curated datasets from recent audits and official tooling for consistent evaluation. The tool includes features such as curated datasets from Code4rena, Cantina, and Sherlock audits, a baseline runner for security analysis, a scoring tool for evaluating findings, a report generator for HTML reports with visualizations, and pipeline automation for complete workflow execution. Users can access curated datasets, generate new datasets, download project source code, run security analysis using LLMs, and evaluate tool findings against benchmarks using LLM matching. The tool enforces strict matching policies to ensure accurate evaluation results.

cool-admin-midway

Cool-admin (midway version) is a cool open-source backend permission management system that supports modular, plugin-based, rapid CRUD development. It facilitates the quick construction and iteration of backend management systems, deployable in various ways such as serverless, docker, and traditional servers. It features AI coding for generating APIs and frontend pages, flow orchestration for drag-and-drop functionality, modular and plugin-based design for clear and maintainable code. The tech stack includes Node.js, Midway.js, Koa.js, TypeScript for backend, and Vue.js, Element-Plus, JSX, Pinia, Vue Router for frontend. It offers friendly technology choices for both frontend and backend developers, with TypeScript syntax similar to Java and PHP for backend developers. The tool is suitable for those looking for a modern, efficient, and fast development experience.

yomitoku

YomiToku is a Japanese-focused AI document image analysis engine that provides full-text OCR and layout analysis capabilities for images. It recognizes, extracts, and converts text information and figures in images. It includes 4 AI models trained on Japanese datasets for tasks such as detecting text positions, recognizing text strings, analyzing layouts, and recognizing table structures. The models are specialized for Japanese document images, supporting recognition of over 7000 Japanese characters and analyzing layout structures specific to Japanese documents. It offers features like layout analysis, table structure analysis, and reading order estimation to extract information from document images without disrupting their semantic structure. YomiToku supports various output formats such as HTML, markdown, JSON, and CSV, and can also extract figures, tables, and images from documents. It operates efficiently in GPU environments, enabling fast and effective analysis of document transcriptions without requiring high-end GPUs.

CareGPT

CareGPT is a medical large language model (LLM) that explores medical data, training, and deployment related research work. It integrates resources, open-source models, rich data, and efficient deployment methods. It supports various medical tasks, including patient diagnosis, medical dialogue, and medical knowledge integration. The model has been fine-tuned on diverse medical datasets to enhance its performance in the healthcare domain.

airdrop-tools

Airdrop-tools is a repository containing tools for all Telegram bots. Users can join the Telegram group for support and access various bot apps like Moonbix, Blum, Major, Memefi, and more. The setup requires Node.js and Python, with instructions on creating data directories and installing extensions. Users can run different tools like Blum, Major, Moonbix, Yescoin, Matchain, Fintopio, Agent301, IAMDOG, Banana, Cats, Wonton, and Xkucoin by following specific commands. The repository also provides contact information and options for supporting the creator.

evalplus

EvalPlus is a rigorous evaluation framework for LLM4Code, providing HumanEval+ and MBPP+ tests to evaluate large language models on code generation tasks. It offers precise evaluation and ranking, coding rigorousness analysis, and pre-generated code samples. Users can use EvalPlus to generate code solutions, post-process code, and evaluate code quality. It also includes utilities for code generation and test input generation using various backends.

open-lx01

Open-LX01 is a project aimed at turning the Xiao Ai Mini smart speaker into a fully self-controlled device. The project involves steps such as gaining control, flashing custom firmware, and achieving autonomous control. It includes analysis of main services, reverse engineering methods, cross-compilation environment setup, customization of programs on the speaker, and setting up a web server. The project also covers topics like using custom ASR and TTS, developing a wake-up program, and creating a UI for various configurations. Additionally, it explores topics like gdb-server setup, open-mico-aivs-lab, and open-mipns-sai integration using Porcupine or Kaldi.

readme-ai

README-AI is a developer tool that auto-generates README.md files using a combination of data extraction and generative AI. It streamlines documentation creation and maintenance, enhancing developer productivity. This project aims to enable all skill levels, across all domains, to better understand, use, and contribute to open-source software. It offers flexible README generation, supports multiple large language models (LLMs), provides customizable output options, works with various programming languages and project types, and includes an offline mode for generating boilerplate README files without external API calls.

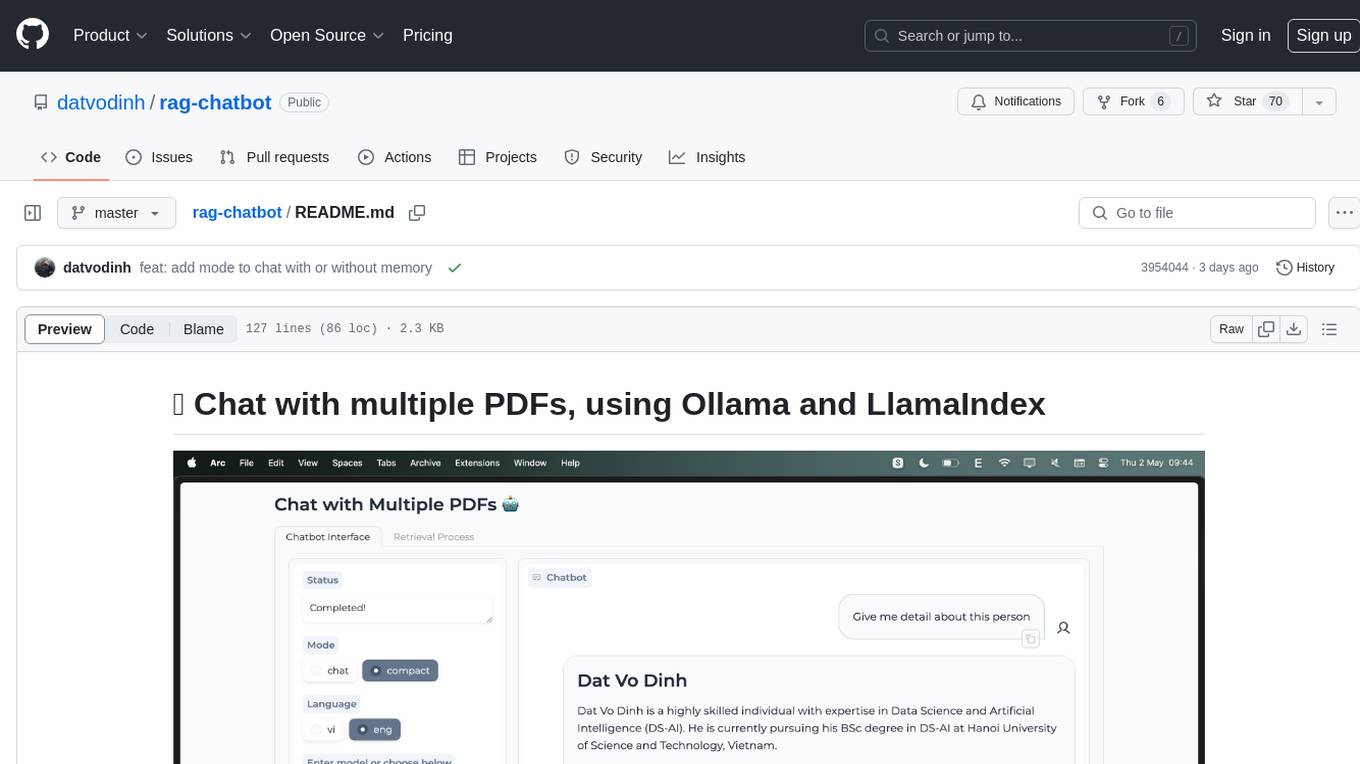

rag-chatbot

rag-chatbot is a tool that allows users to chat with multiple PDFs using Ollama and LlamaIndex. It provides an easy setup for running on local machines or Kaggle notebooks. Users can leverage models from Huggingface and Ollama, process multiple PDF inputs, and chat in multiple languages. The tool offers a simple UI with Gradio, supporting chat with history and QA modes. Setup instructions are provided for both Kaggle and local environments, including installation steps for Docker, Ollama, Ngrok, and the rag_chatbot package. Users can run the tool locally and access it via a web interface. Future enhancements include adding evaluation, better embedding models, knowledge graph support, improved document processing, MLX model integration, and Corrective RAG.

For similar tasks


unitycatalog

Unity Catalog is an open and interoperable catalog for data and AI, supporting multi-format tables, unstructured data, and AI assets. It offers plugin support for extensibility and interoperates with Delta Sharing protocol. The catalog is fully open with OpenAPI spec and OSS implementation, providing unified governance for data and AI with asset-level access control enforced through REST APIs.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.