mlstm_kernels

Tiled Flash Linear Attention library for fast and efficient mLSTM Kernels.

Stars: 52

This repository provides fast and efficient mLSTM training and inference Triton kernels built on Tiled Flash Linear Attention (TFLA). It includes implementations in JAX, PyTorch, and Triton, with chunkwise, parallel, and recurrent kernels for mLSTM. The repository also contains a benchmark library for runtime benchmarks and full mLSTM Huggingface models.

README:

Paper: https://arxiv.org/abs/2503.14376

Authors: Maximilian Beck, Korbinian Pöppel, Phillip Lippe, Sepp Hochreiter

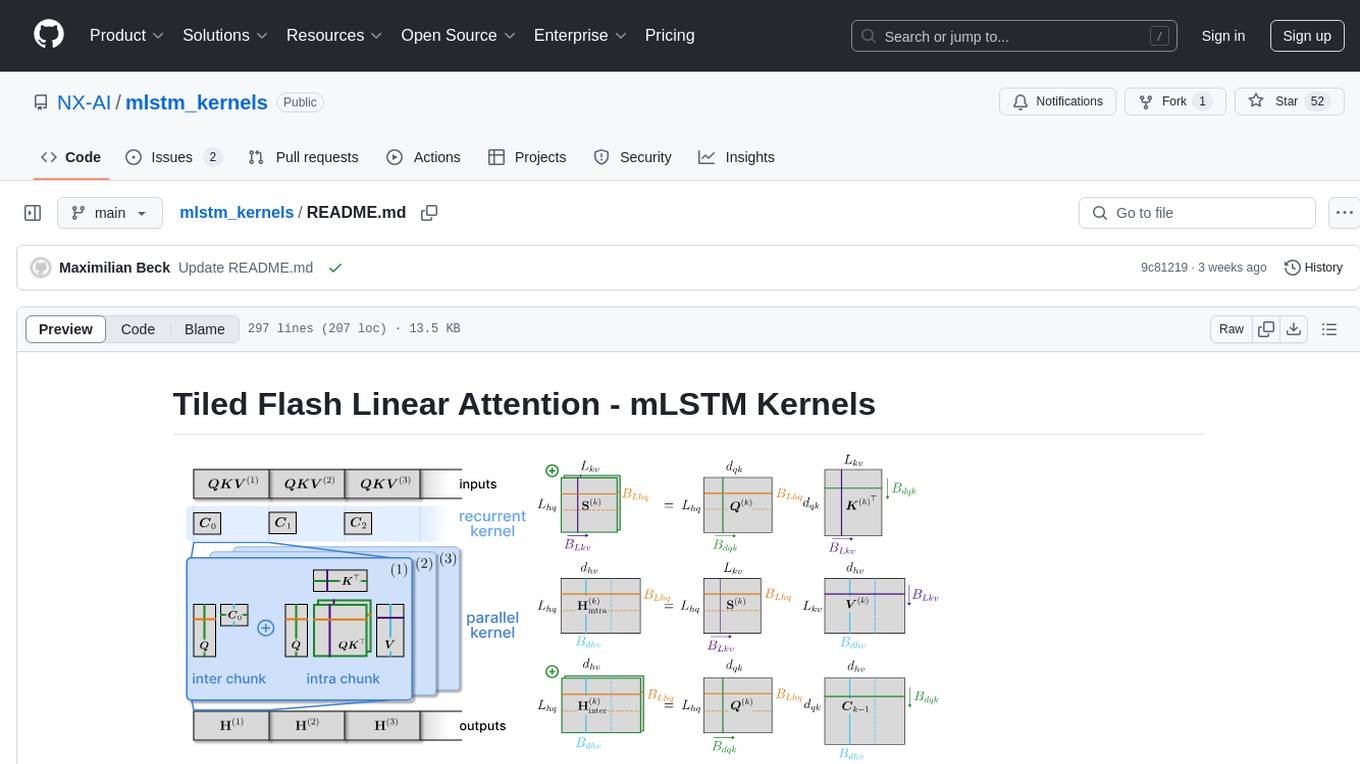

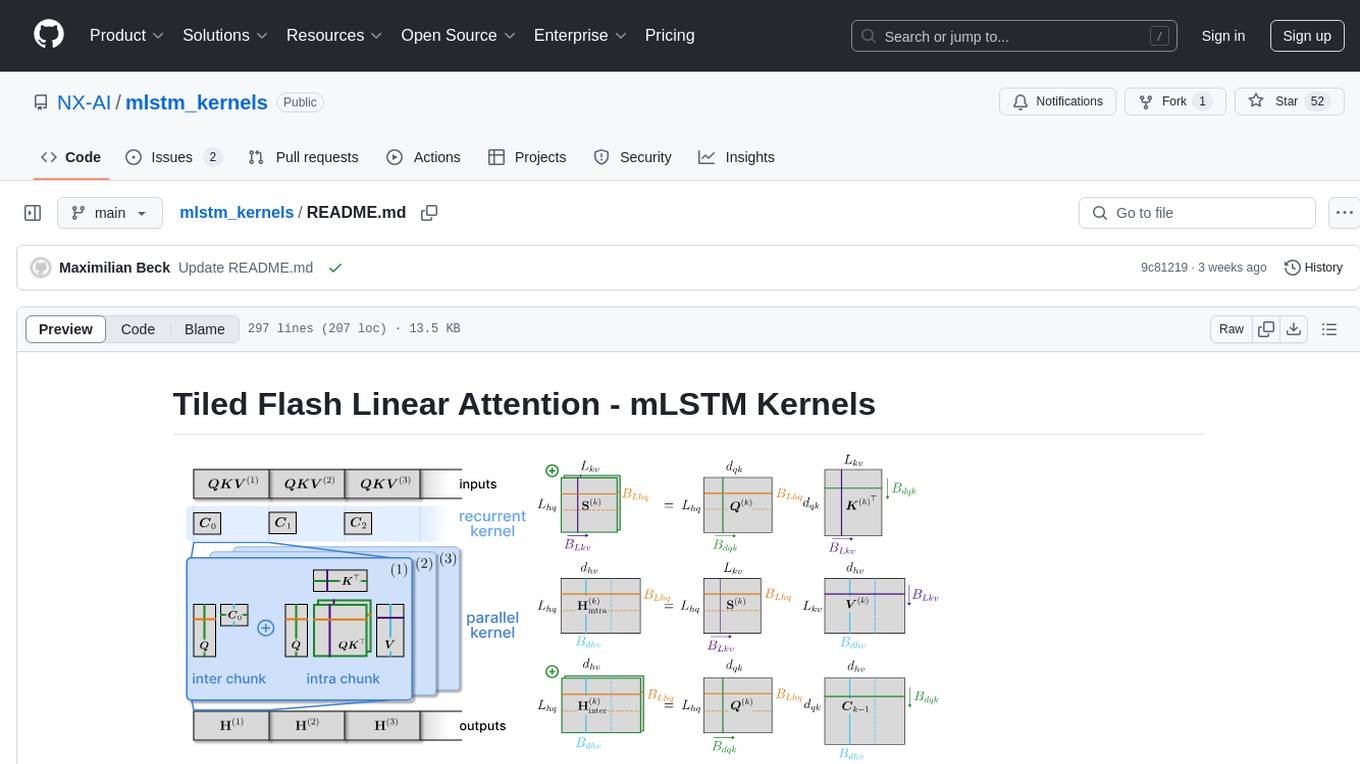

This library provides fast and efficient mLSTM training and inference Triton kernels. The chunkwise-parallel mLSTM Kernels are built on Tiled Flash Linear Attention (TFLA).

This repository also contains an easy-to-extend library for any kind of runtime benchmark, which we use to benchmark our mLSTM kernels, as well as full mLSTM Huggingface models.

At its core, the mLSTM kernel library contains several implementations of the mLSTM in JAX and PyTorch, as well as kernels in Triton, which form three top-level modules within the mlstm_kernels library:

- jax: Contains JAX native implementations of the mLSTM, as well as JAX Triton integrations.
- torch: Contains PyTorch native implementations of the mLSTM, as well as the Triton integrations for PyTorch. It also contains the configurable PyTorch backend module for simple integration of the mLSTM kernels into your models (see below for further details).
- triton: Contains the Triton kernels for the mLSTM, as well as kernel launch parameter heuristics.

The utils module contains code for unit tests, additional analysis (such as the transfer behavior analysis from the TFLA paper), and the benchmark library, which is discussed in detail below.

Each of the three top-level modules contains three different types of implementations and kernels for the mLSTM:

- chunkwise: Chunkwise kernels that process chunks of the sequence in parallel. These include the TFLA kernels.
- parallel: Parallel kernels that process a sequence in parallel (like Attention). Overall, the runtime of these kernels scales quadratically with sequence length.
- recurrent: Recurrent step kernels for text generation during inference.
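The relationship between the three kernel types can be illustrated with a deliberately simplified sketch. It implements plain causal linear attention in pure Python and omits the mLSTM input and forget gates, the normalizer, and all numerical stabilization, so it is not the mLSTM itself and not the library's code. All function names below are hypothetical. The point is only that the parallel (quadratic), recurrent (step-by-step state update), and chunkwise (state between chunks, quadratic within a chunk) formulations compute the same outputs.

```python
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def parallel_form(q, k, v):
    """Quadratic form: each query is scored against every key up to its own position."""
    out = []
    for t in range(len(q)):
        h = [0.0] * len(v[0])
        for s in range(t + 1):  # causal mask: only positions s <= t contribute
            w = dot(q[t], k[s])
            h = [hj + w * vj for hj, vj in zip(h, v[s])]
        out.append(h)
    return out


def new_state(d_qk, d_v):
    return [[0.0] * d_v for _ in range(d_qk)]


def update_state(C, k_s, v_s):
    """Add the outer product k_s v_s^T to the matrix state C in place."""
    for a, ka in enumerate(k_s):
        for b, vb in enumerate(v_s):
            C[a][b] += ka * vb


def read_state(C, q_t):
    """Return q_t^T C, the contribution of everything stored in the state."""
    return [sum(q_t[a] * C[a][b] for a in range(len(q_t))) for b in range(len(C[0]))]


def recurrent_form(q, k, v):
    """Step form: one state update and one state read per time step."""
    C = new_state(len(k[0]), len(v[0]))
    out = []
    for t in range(len(q)):
        update_state(C, k[t], v[t])
        out.append(read_state(C, q[t]))
    return out


def chunkwise_form(q, k, v, chunk_size):
    """Chunk form: recurrent state across chunks, quadratic form inside each chunk."""
    C = new_state(len(k[0]), len(v[0]))
    out = []
    for start in range(0, len(q), chunk_size):
        end = min(start + chunk_size, len(q))
        intra = parallel_form(q[start:end], k[start:end], v[start:end])
        for offset, t in enumerate(range(start, end)):
            inter = read_state(C, q[t])  # contribution of all previous chunks
            out.append([a + b for a, b in zip(inter, intra[offset])])
        for t in range(start, end):  # fold the finished chunk into the state
            update_state(C, k[t], v[t])
    return out


def max_abs_diff(x, y):
    return max(abs(a - b) for row_x, row_y in zip(x, y) for a, b in zip(row_x, row_y))


random.seed(0)
S, D_QK, D_V = 8, 3, 2  # tiny sizes chosen only for illustration
q = [[random.gauss(0, 1) for _ in range(D_QK)] for _ in range(S)]
k = [[random.gauss(0, 1) for _ in range(D_QK)] for _ in range(S)]
v = [[random.gauss(0, 1) for _ in range(D_V)] for _ in range(S)]

h_parallel = parallel_form(q, k, v)
h_recurrent = recurrent_form(q, k, v)
h_chunkwise = chunkwise_form(q, k, v, chunk_size=4)
print(max_abs_diff(h_parallel, h_recurrent), max_abs_diff(h_parallel, h_chunkwise))
```

In this sketch the chunk size trades off the amount of quadratic work inside a chunk against the number of state updates carried between chunks.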

Runtime comparison of mLSTM chunkwise kernels against other baselines on an NVIDIA H100 GPU with a constant number of tokens. This means that as we increase the sequence length on the x-axis, we proportionally decrease the batch size to keep the overall number of tokens constant. This is the same setup as used, for example, in FlashAttention 3.

Left: Forward pass. Right: Forward and backward pass.
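The constant-token setup described in the caption can be sketched as follows. The token budget and the sequence lengths are assumptions chosen for illustration, not the values used in the paper, and constant_token_configs is a hypothetical helper.

```python
# Assumed token budget, chosen only for illustration.
TOTAL_TOKENS = 65_536


def constant_token_configs(total_tokens, seq_lens):
    """Return (batch_size, seq_len) pairs whose product equals total_tokens."""
    configs = []
    for seq_len in seq_lens:
        if total_tokens % seq_len != 0:
            raise ValueError(f"{seq_len} does not divide {total_tokens}")
        configs.append((total_tokens // seq_len, seq_len))
    return configs


# Doubling the sequence length halves the batch size.
configs = constant_token_configs(TOTAL_TOKENS, [512, 1024, 2048, 4096, 8192])
for batch_size, seq_len in configs:
    print(f"batch_size={batch_size:4d}  seq_len={seq_len:5d}  tokens={batch_size * seq_len}")
```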

We benchmark the two mLSTM versions, mLSTM with exponential input gate (mLSTMexp) and mLSTM with sigmoid input gate (mLSTMsig):

- mLSTMexp (limit chunk): mLSTMexp kernel with limited chunk size (chunk_size=64).
- mLSTMexp (TFLA XL chunk): mLSTMexp TFLA kernel with unlimited chunk size (in this benchmark chunk_size=128).
- mLSTMsig (TFLA XL chunk): mLSTMsig TFLA kernel with unlimited chunk size (in this benchmark chunk_size=128).

In the following, limit_chunk means chunkwise kernels that are limited in chunk_size, and xl_chunk means TFLA kernels.

For more details we refer to the TFLA paper.

You can find the conda environment file in the envs/ folder. We recommend using the latest file, i.e. environment_pt251cu124.yaml.

Then you can install the mLSTM kernels via pip:

    pip install mlstm_kernels

or by cloning the repository.

In this library we provide PyTorch, JAX and Triton implementations of the mLSTM. For the Triton kernels, we provide wrappers in PyTorch and JAX.

There are three options to use our implementations and kernels.

Option 1, the backend module, is the recommended option if you want to use our mLSTM kernels in your own (language) model.

The backend module is implemented in mlstm_kernels/torch/backend_module.py and provides a configurable wrapper around all our mLSTM implementations and kernels.

Note: This is also how these kernels are implemented in our official implementation for the xLSTM 7B model (see xLSTM 7B model.py)

It allows switching between training and inference mode and automatically selects the respective kernels.

For example, the following code snippet configures the mLSTMBackend to use our TFLA mLSTMexp kernel:

    import torch

    from mlstm_kernels.torch.backend_module import mLSTMBackend, mLSTMBackendConfig

    # we use the mLSTMexp TFLA kernel
    # we also configure to use the triton step kernel for inference
    mlstm_backend_config = mLSTMBackendConfig(
        chunkwise_kernel="chunkwise--triton_xl_chunk",
        sequence_kernel="native_sequence__triton",
        step_kernel="triton",
        chunk_size=256,
        return_last_states=False,
    )
    mlstm_backend = mLSTMBackend(mlstm_backend_config)

    # run the backend
    DEVICE = torch.device("cuda")
    DTYPE = torch.bfloat16
    B = 2  # batch size
    S = 512  # sequence length
    DHQK = 128  # query/key head dimension
    DHHV = 256  # value head dimension
    NH = 4  # number of heads

    # create input tensors
    torch.manual_seed(1)
    matQ = torch.randn((B, NH, S, DHQK), dtype=DTYPE, device=DEVICE)
    matK = torch.randn((B, NH, S, DHQK), dtype=DTYPE, device=DEVICE)
    matV = torch.randn((B, NH, S, DHHV), dtype=DTYPE, device=DEVICE)
    vecI = torch.randn((B, NH, S), dtype=DTYPE, device=DEVICE)
    vecF = 3.0 + torch.randn((B, NH, S), dtype=DTYPE, device=DEVICE)

    matH = mlstm_backend(q=matQ, k=matK, v=matV, i=vecI, f=vecF)

Quickstart: Have a look at the demo notebook demo/integrate_mlstm_via_backend_module_option1.ipynb.

If you want to use a specific kernel directly, you can import it from the respective module (option 2). The following code snippet imports the TFLA mLSTMexp kernel and runs a forward pass.

    import torch

    # directly import mLSTMexp TFLA kernel
    from mlstm_kernels.torch.chunkwise.triton_xl_chunk import mlstm_chunkwise__xl_chunk

    # run the kernel
    DEVICE = torch.device("cuda")
    DTYPE = torch.bfloat16
    B = 2
    S = 512
    DHQK = 128
    DHHV = 256
    NH = 4

    torch.manual_seed(1)
    matQ = torch.randn((B, NH, S, DHQK), dtype=DTYPE, device=DEVICE)
    matK = torch.randn((B, NH, S, DHQK), dtype=DTYPE, device=DEVICE)
    matV = torch.randn((B, NH, S, DHHV), dtype=DTYPE, device=DEVICE)
    vecI = torch.randn((B, NH, S), dtype=DTYPE, device=DEVICE)
    vecF = 3.0 + torch.randn((B, NH, S), dtype=DTYPE, device=DEVICE)

    matH1 = mlstm_chunkwise__xl_chunk(
        q=matQ, k=matK, v=matV, i=vecI, f=vecF, return_last_states=False, chunk_size=256
    )

You can also get a specific kernel function via its kernel specifier (option 3).

First, display all available kernels via get_available_mlstm_kernels().

This displays all kernels that can be used for training and that have a similar function signature, such that they can be used interchangeably.

    # display all available mlstm chunkwise and parallel kernels
    from mlstm_kernels.torch import get_available_mlstm_kernels

    get_available_mlstm_kernels()

Output:

    ['chunkwise--native_autograd',
     'chunkwise--native_custbw',
     'chunkwise--triton_limit_chunk',
     'chunkwise--triton_xl_chunk',
     'chunkwise--triton_xl_chunk_siging',
     'parallel--native_autograd',
     'parallel--native_custbw',
     'parallel--native_stablef_autograd',
     'parallel--native_stablef_custbw',
     'parallel--triton_limit_headdim',
     'parallel--native_siging_autograd',
     'parallel--native_siging_custbw']
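The specifiers in the list above appear to follow a kernel type, a double dash, then a kernel name. As a small sketch under that assumption, the list can be grouped by kernel type in plain Python. The helper split_kernel_specifier is hypothetical and not part of mlstm_kernels.

```python
from collections import defaultdict

# Kernel specifiers copied from the list above.
AVAILABLE_KERNELS = [
    "chunkwise--native_autograd",
    "chunkwise--native_custbw",
    "chunkwise--triton_limit_chunk",
    "chunkwise--triton_xl_chunk",
    "chunkwise--triton_xl_chunk_siging",
    "parallel--native_autograd",
    "parallel--native_custbw",
    "parallel--native_stablef_autograd",
    "parallel--native_stablef_custbw",
    "parallel--triton_limit_headdim",
    "parallel--native_siging_autograd",
    "parallel--native_siging_custbw",
]


def split_kernel_specifier(specifier):
    """Split '<type>--<name>' into its two parts (hypothetical helper)."""
    kernel_type, separator, kernel_name = specifier.partition("--")
    if not separator or not kernel_type or not kernel_name:
        raise ValueError(f"unexpected kernel specifier: {specifier!r}")
    return kernel_type, kernel_name


kernels_by_type = defaultdict(list)
for specifier in AVAILABLE_KERNELS:
    kernel_type, kernel_name = split_kernel_specifier(specifier)
    kernels_by_type[kernel_type].append(kernel_name)

for kernel_type, names in kernels_by_type.items():
    print(f"{kernel_type}: {', '.join(names)}")
```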

Then select a kernel via get_mlstm_kernel():

    # select the kernel
    from mlstm_kernels.torch import get_mlstm_kernel

    mlstm_chunkwise_xl_chunk = get_mlstm_kernel("chunkwise--triton_xl_chunk")

    matH2 = mlstm_chunkwise_xl_chunk(
        q=matQ, k=matK, v=matV, i=vecI, f=vecF, return_last_states=False, chunk_size=256
    )

    torch.allclose(matH1, matH2, atol=1e-3, rtol=1e-3)  # True

Quickstart for options 2 and 3: Have a look at the demo notebook demo/integrate_mlstm_via_direct_import_option2and3.ipynb.
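For readers unfamiliar with the tolerance arguments: torch.allclose accepts two tensors when every element satisfies |input - other| <= atol + rtol * |other|. The following pure-Python sketch applies the same criterion to flat lists. The function allclose_lists and the sample values are illustrative only.

```python
def allclose_lists(actual, expected, atol=1e-3, rtol=1e-3):
    """Check |a - e| <= atol + rtol * |e| for every pair of elements."""
    if len(actual) != len(expected):
        return False
    return all(abs(a - e) <= atol + rtol * abs(e) for a, e in zip(actual, expected))


reference = [0.5, -2.0, 100.0]
within_tolerance = [0.5005, -2.002, 100.05]
outside_tolerance = [0.5, -2.0, 100.2]

print(allclose_lists(within_tolerance, reference))
print(allclose_lists(outside_tolerance, reference))
```

The relative term matters for large values: with rtol=1e-3, an element near 100 may deviate by about 0.1, while an element near 0 is bounded by atol alone.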

The JAX module mlstm_kernels.jax mirrors the PyTorch module mlstm_kernels.torch and can be used in the same way as the PyTorch kernels with option 2.

The module mlstm_kernels.utils.benchmark contains a configurable benchmark library for benchmarking the runtime and GPU memory usage of kernels or models.

We use this library for all our benchmarks in the TFLA paper and the xLSTM 7B paper.

Step 1: To begin please have a look at mlstm_kernels/utils/benchmark/benchmarks/interface.py

At the core of the benchmark library, there is the BenchmarkInterface dataclass, which is the abstract base class that every new benchmark should inherit from.

The BenchmarkInterface dataclass holds generic benchmark parameters, defines the setup_benchmark function that must be overridden for every specific benchmark, and defines benchmark_fn, the function that is benchmarked.

To run the benchmark the BenchmarkInterface has the method run_benchmark.

The BenchmarkCreator defines the benchmark collection, i.e. the collection of benchmarks that can be run and configured together via a single config.

To create a new benchmark collection with several benchmarks, one has to implement a new BenchmarkCreator.

This is a function that takes as input a KernelSpec dataclass (containing the specification for the benchmark class) and a parameter dict with overrides. It then creates and returns the specified benchmark.
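The pattern described above can be sketched with standalone toy classes. ToyKernelSpec, ToyBenchmark, ToySumBenchmark and toy_benchmark_creator are hypothetical stand-ins written for this illustration. They are not the library's BenchmarkInterface, KernelSpec or BenchmarkCreator, whose actual fields and signatures are defined in the files named in the steps and may differ. The sketch also assumes that a spec's additional_params take precedence over the shared parameter dict.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ToyKernelSpec:
    """Toy stand-in for a kernel specification."""
    kernel_name: str
    additional_params: dict = field(default_factory=dict)


@dataclass
class ToyBenchmark:
    """Toy base class: generic parameters plus the setup/run hooks."""
    warmup: int = 2
    rep: int = 5
    benchmark_fn: Optional[Callable[[], object]] = None

    def setup_benchmark(self) -> None:
        raise NotImplementedError("each concrete benchmark overrides this")

    def run_benchmark(self) -> float:
        """Return the mean wall-clock runtime of benchmark_fn in milliseconds."""
        self.setup_benchmark()
        for _ in range(self.warmup):
            self.benchmark_fn()
        # Note: timing GPU kernels would also need device synchronization here.
        start = time.perf_counter()
        for _ in range(self.rep):
            self.benchmark_fn()
        return (time.perf_counter() - start) / self.rep * 1e3


@dataclass
class ToySumBenchmark(ToyBenchmark):
    size: int = 10_000

    def setup_benchmark(self) -> None:
        data = list(range(self.size))
        self.benchmark_fn = lambda: sum(data)


def toy_benchmark_creator(spec: ToyKernelSpec, param_dict: dict) -> ToyBenchmark:
    """Map a spec to a benchmark class and apply the parameter overrides."""
    registry = {"toy_sum": ToySumBenchmark}
    # Assumption: per-spec additional_params win over the shared param_dict.
    params = {**param_dict, **spec.additional_params}
    return registry[spec.kernel_name](**params)


bench = toy_benchmark_creator(ToyKernelSpec("toy_sum", {"size": 1_000}), {"rep": 3})
runtime_ms = bench.run_benchmark()
print(f"{type(bench).__name__}: {runtime_ms:.4f} ms")
```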

Step 2: Next have a look at mlstm_kernels/utils/benchmark/param_handling.py in order to understand how the benchmarks are configured through a unified config.

We use the dataclass KernelSpec to provide a unified interface to our kernel benchmarks. The kernel_name must be a unique specifier within a benchmark collection. The additional_params field holds parameters that are overridden in the respective BenchmarkInterface class.

One level above is the BenchmarkConfig dataclass. This config class makes it possible to configure sweeps over multiple KernelSpec dataclasses.

Step 3: Finally, have a look at mlstm_kernels/utils/benchmark/run_benchmark.py and a corresponding benchmark script, e.g. scripts/run_training_kernel_benchmarks.py.

The "benchmark loops" are implemented in run_benchmark.py. These take as input a BenchmarkConfig and a BenchmarkCreator and run every benchmark member specified in the kernel specs with every parameter combination.

The run_and_record_benchmarks() function executes these loops and records the results to disk via .csv files and plots.
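As a rough illustration of such a benchmark loop, the sketch below expands a parameter sweep, times every stand-in workload for every combination, and writes the rows as CSV. It assumes the parameter combinations form a Cartesian grid, which may not match how the library defines them. The workload names, the sweep values and all helper functions are hypothetical, and the CSV is written to an in-memory buffer instead of a file.

```python
import csv
import io
import itertools
import time

# Stand-in workloads keyed by hypothetical names (not mlstm_kernels kernels).
WORKLOADS = {
    "toy_sum": lambda n: sum(range(n)),
    "toy_sorted": lambda n: sorted(range(n, 0, -1)),
}

# Hypothetical sweep: every combination of these values is benchmarked.
SWEEP = {"sequence_length": [256, 512], "batch_size": [1, 2]}


def expand_grid(sweep):
    """Return one parameter dict per element of the Cartesian product."""
    keys = list(sweep)
    return [dict(zip(keys, combo)) for combo in itertools.product(*sweep.values())]


def time_ms(fn, arg, rep=3):
    """Mean wall-clock runtime of fn(arg) in milliseconds."""
    start = time.perf_counter()
    for _ in range(rep):
        fn(arg)
    return (time.perf_counter() - start) / rep * 1e3


rows = []
for params in expand_grid(SWEEP):
    num_tokens = params["sequence_length"] * params["batch_size"]
    for kernel_name, workload in WORKLOADS.items():
        runtime = time_ms(workload, num_tokens)
        rows.append({**params, "kernel_name": kernel_name, "runtime_ms": runtime})

buffer = io.StringIO()  # in-memory stand-in for a .csv file on disk
writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
writer.writeheader()
writer.writerows(rows)
print(buffer.getvalue())
```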

Finally, in our case we create scripts that collect several configured benchmarks, which we can then run via different arguments, see e.g. scripts/run_training_kernel_benchmarks.py.

You should now be able to understand the structure of our benchmark suites, i.e. collections of benchmarks that are run together.

In this repository we create several benchmark suites, for example the kernel benchmarks for the TFLA paper or the model benchmarks for the xLSTM 7B paper.

These are implemented in mlstm_kernels/utils/benchmark/benchmarks/training_kernel_benchmarks.py and mlstm_kernels/utils/benchmark/benchmarks/huggingface_model_benchmark.py, respectively.

Quickstart: For a quick start please have a look at the demo notebook: demo/kernel_speed_benchmark.ipynb.

The following command runs the mLSTM kernels from the figure above. Note that you need a GPU with a large amount of memory in order to fit the long sequences and large embedding dimension of 4096 for a 7B model.

    PYTHONPATH=. python scripts/run_training_kernel_benchmarks.py --consttoken_benchmark mlstm_triton --folder_suffix "mlstm_bench" --num_heads 16 --half_qkdim 1

It will create a new subfolder in outputs_kernel_benchmarks/ that contains the results.

The unit tests cross-check the different kernel implementations for numerical deviations across different dtypes. You can run all of them with the following commands:

    pytest -s tests/torch
    # make sure you are in a JAX GPU environment
    pytest -s tests/jax

The -s disables the log capturing so you see the results directly on the command line.

Each test will log the outputs to a new folder with the timestamp as name in the test_outputs/ directory.

Note: The JAX tests were only tested on NVIDIA H100 GPUs.

Please cite our papers if you use this codebase, or otherwise find our work valuable:

    @article{beck:25tfla,
      title = {{Tiled Flash Linear Attention}: More Efficient Linear RNN and xLSTM Kernels},
      author = {Maximilian Beck and Korbinian Pöppel and Phillip Lippe and Sepp Hochreiter},
      year = {2025},
      volume = {2503.14376},
      journal = {arXiv},
      primaryclass = {cs.LG},
      url = {https://arxiv.org/abs/2503.14376}
    }

    @article{beck:25xlstm7b,
      title = {{xLSTM 7B}: A Recurrent LLM for Fast and Efficient Inference},
      author = {Maximilian Beck and Korbinian Pöppel and Phillip Lippe and Richard Kurle and Patrick M. Blies and Günter Klambauer and Sebastian Böck and Sepp Hochreiter},
      year = {2025},
      volume = {2503.13427},
      journal = {arXiv},
      primaryclass = {cs.LG},
      url = {https://arxiv.org/abs/2503.13427}
    }

    @inproceedings{beck:24xlstm,
      title = {xLSTM: Extended Long Short-Term Memory},
      author = {Maximilian Beck and Korbinian Pöppel and Markus Spanring and Andreas Auer and Oleksandra Prudnikova and Michael Kopp and Günter Klambauer and Johannes Brandstetter and Sepp Hochreiter},
      booktitle = {Thirty-eighth Conference on Neural Information Processing Systems},
      year = {2024},
      url = {https://arxiv.org/abs/2405.04517}
    }
