roo-code-memory-bank

🧠 Roo Code Memory Bank - Now with new debug mode! (Thanks @TheRealAlexV) Solve the AI context challenge: maintain deep project understanding across sessions with our structured VS Code memory system. Never repeat project details again! ✨

Stars: 200

Roo Code Memory Bank is a tool designed for AI-assisted development to maintain project context across sessions. It provides a structured memory system integrated with VS Code, ensuring deep understanding of the project for the AI assistant. The tool includes key components such as Memory Bank for persistent storage, Mode Rules for behavior configuration, VS Code Integration for seamless development experience, and Real-time Updates for continuous context synchronization. Users can configure custom instructions, initialize the Memory Bank, and organize files within the project root directory. The Memory Bank structure includes files for tracking session state, technical decisions, project overview, progress tracking, and optional project brief and system patterns documentation. Features include persistent context, smart workflows for specialized tasks, knowledge management with structured documentation, and cross-referenced project knowledge. Pro tips include handling multiple projects, utilizing Debug mode for troubleshooting, and managing session updates for synchronization. The tool aims to enhance AI-assisted development by providing a comprehensive solution for maintaining project context and facilitating efficient workflows.

README:

Roo Code Memory Bank solves a critical challenge in AI-assisted development: maintaining context across sessions. By providing a structured memory system integrated with VS Code, it lets your AI assistant keep a deep understanding of your project from one session to the next.

graph LR
    A[Memory Bank] --> B[Core Files]
    A --> C[Mode Rules]
    A --> D[VS Code UI]
    B --> E[Project Context]
    B --> F[Decisions]
    B --> G[Progress]
    C --> H[Architect]
    C --> I[Code]
    C --> J[Ask]
    C --> K1[Debug]
    K[Real-time Updates] --> B
    K --> L[Continuous Sync]
    L --> M[Auto-save]
    L --> N[Event Tracking]

- 🧠 Memory Bank: Persistent storage for project knowledge

- 📋 Mode Rules: YAML-based behavior configuration

- 🔧 VS Code Integration: Seamless development experience

- ⚡ Real-time Updates: Continuous context synchronization

Download and copy these files to your project's root directory:

| Mode | Rule File | Purpose |
|---|---|---|
| Code | .clinerules-code | Implementation and coding tasks |
| Architect | .clinerules-architect | System design and architecture |
| Ask | .clinerules-ask | Information and assistance |
| Debug | .clinerules-debug | Troubleshooting and problem-solving |
| Modes | .roomodes | Additional modes |

⚠️ Important: Leave the "Custom Instructions" text boxes empty in VS Code settings (Roo Code Prompts section)

- Switch to Architect or Code mode in Roo Code chat

- Send a message (e.g., "hello")

- Roo will automatically:

  - 🔍 Scan for the memory-bank/ directory

  - 📁 Create it if missing (with your approval)

  - 📝 Initialize core files

  - 🚦 Provide next steps
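The initialization steps above can be sketched in a few lines of Python. This is only an illustration of the scan, approve, and initialize flow, not Roo's actual implementation: the function name `init_memory_bank`, the `approve` callback, and the placeholder file contents are all assumptions. Only the directory name and the four core file names come from this README.

```python
from __future__ import annotations

from pathlib import Path

# Core file names as listed in this README; the placeholder contents are assumed.
CORE_FILES = ("activeContext.md", "productContext.md", "progress.md", "decisionLog.md")


def init_memory_bank(project_root: Path, approve=lambda path: True) -> list[Path]:
    """Create memory-bank/ and any missing core files; return the files created."""
    bank = project_root / "memory-bank"
    if not bank.is_dir():
        # Mirrors the "with your approval" step: do nothing unless approved.
        if not approve(bank):
            return []
        bank.mkdir(parents=True)
    created = []
    for name in CORE_FILES:
        path = bank / name
        if not path.exists():
            # Hypothetical placeholder: a single heading line per file.
            path.write_text(f"# {name}\n", encoding="utf-8")
            created.append(path)
    return created
```

Calling the function a second time on the same project returns an empty list, since existing files are left untouched.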

💡 Pro Tip: Project Brief

Create a projectBrief.md in your project root before initialization to give Roo immediate project context.

project-root/
├── .clinerules-architect
├── .clinerules-code
├── .clinerules-ask
├── .clinerules-debug
├── .roomodes
├── memory-bank/
│   ├── activeContext.md
│   ├── productContext.md
│   ├── progress.md
│   └── decisionLog.md
└── projectBrief.md
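To confirm that a project matches the layout above, a small check like the following may help. It is a sketch under stated assumptions: the helper name `missing_entries` is hypothetical, and the script is not part of Roo Code Memory Bank. The file names are the ones shown in the tree; the optional projectBrief.md is deliberately not checked.

```python
from __future__ import annotations

from pathlib import Path

# File names taken from the project tree in this README.
RULE_FILES = (
    ".clinerules-architect",
    ".clinerules-code",
    ".clinerules-ask",
    ".clinerules-debug",
    ".roomodes",
)
CORE_FILES = ("activeContext.md", "productContext.md", "progress.md", "decisionLog.md")


def missing_entries(project_root: Path) -> list[str]:
    """Return the expected files (relative paths) that are absent from project_root."""
    expected = list(RULE_FILES) + [f"memory-bank/{name}" for name in CORE_FILES]
    return [rel for rel in expected if not (project_root / rel).is_file()]


if __name__ == "__main__":
    for rel in missing_entries(Path.cwd()):
        print(f"missing: {rel}")
```

Run from the project root, the script prints one line per missing file and nothing when the layout is complete.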

graph TD
    MB[memory-bank/] --> AC[activeContext.md]
    MB --> DL[decisionLog.md]
    MB --> PC[productContext.md]
    MB --> PR[progress.md]
    MB --> PB[projectBrief.md]
    MB --> SP[systemPatterns.md]
    subgraph Core Files
        AC[Current Session State]
        DL[Technical Decisions]
        PC[Project Overview]
        PR[Progress Tracking]
    end
    subgraph Optional
        PB[Project Brief]
        SP[System Patterns]
    end

📖 View File Descriptions

| File | Purpose |
|---|---|
| activeContext.md | Tracks current goals, decisions, and session state |
| decisionLog.md | Records architectural choices and their rationale |
| productContext.md | Maintains high-level project context and knowledge |
| progress.md | Documents completed work and upcoming tasks |
| projectBrief.md | Contains initial project requirements (optional) |
| systemPatterns.md | Documents recurring patterns and standards |
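Because these are plain Markdown files, they can also be edited by hand or by a script. As an illustration, the sketch below appends a dated entry to decisionLog.md. The entry layout (a dated heading followed by a "Rationale:" line) and the function name `log_decision` are assumptions made for this example; the README does not specify a format for the file.

```python
from datetime import date
from pathlib import Path


def log_decision(bank: Path, title: str, rationale: str, on: date) -> str:
    """Append one decision entry to decisionLog.md and return the text written."""
    # Hypothetical entry format: dated heading plus a rationale line.
    entry = f"\n## {on.isoformat()} - {title}\n\nRationale: {rationale}\n"
    bank.mkdir(parents=True, exist_ok=True)
    with (bank / "decisionLog.md").open("a", encoding="utf-8") as fh:
        fh.write(entry)
    return entry
```

For example, `log_decision(Path("memory-bank"), "Use SQLite", "Single-user tool, no server needed", date.today())` adds one entry without touching earlier ones.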

- Remembers project details across sessions

- Maintains consistent understanding of your codebase

- Tracks decisions and their rationale

graph LR
    A[Architect Mode] -->|Real-time Design Updates| B[Memory Bank]
    C[Code Mode] -->|Real-time Implementation| B
    D[Ask Mode] -->|Real-time Insights| B
    F[Debug Mode] -->|Real-time Analysis| B
    B -->|Instant Context| A
    B -->|Instant Context| C
    B -->|Instant Context| D
    B -->|Instant Context| F
    E[Event Monitor] -->|Continuous Sync| B

- Mode-based operation for specialized tasks

- Automatic context switching

- Project-specific customization via rules

- Structured documentation with clear purposes

- Technical decision tracking with rationale

- Automated progress monitoring

- Cross-referenced project knowledge

graph TD
    A[Workspace] --> B[Project 1]
    A --> C[Project 2]
    B --> D[memory-bank/]
    C --> E[memory-bank/]
    D --> F[Automatic Detection]
    E --> F

Roo automatically handles multiple Memory Banks in your workspace!
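To see which Memory Banks exist in a multi-project workspace, a directory walk is enough. The sketch below is an assumption about how such detection could work, not a description of Roo's own logic; `find_memory_banks` is a hypothetical helper name.

```python
from __future__ import annotations

from pathlib import Path


def find_memory_banks(workspace: Path) -> list[Path]:
    """Return every directory named memory-bank under workspace, sorted by path."""
    # rglob walks the whole tree, so large folders such as dependency
    # caches are searched too; this sketch does not filter them out.
    return sorted(p for p in workspace.rglob("memory-bank") if p.is_dir())
```

Each returned path's parent is the project that owns that Memory Bank.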

Roo Code Memory Bank includes a powerful Debug mode for systematic troubleshooting and problem-solving. This mode operates with surgical precision, focusing on root cause analysis and evidence-based verification.

- 🔍 Non-destructive Investigation: Read-only access ensures system integrity during analysis

- 📊 Diagnostic Tools: Access to logging, tracing, and system analysis tools

- 🔬 Systematic Analysis: Methodical problem investigation and isolation

- 🎯 Root Cause Identification: Traces error propagation through all system layers

- ✅ Evidence-based Verification: Validates findings through multiple checkpoints

Debug mode actively monitors and updates Memory Bank files based on:

- 🐛 Bug discoveries and error patterns

- 💾 Memory leaks and resource issues

- 🔄 Race conditions and deadlocks

- 📈 Performance bottlenecks

- 📝 Log analysis and trace outputs

graph TD
    A[Debug Mode] --> B[Analysis]
    B --> C[Findings]
    C --> D[Memory Bank Updates]
    D --> E[activeContext.md]
    D --> F[progress.md]
    D --> G[decisionLog.md]
    E --> H[Current Issues]
    F --> I[Debug Progress]
    G --> J[Solution Decisions]

Switch to Debug mode when you need to:

- Investigate system behavior

- Analyze failure patterns

- Isolate root causes

- Verify fixes

- Document debugging insights

- ⚡ Real-time Updates: Memory Bank automatically stays synchronized with your work

- 💾 Manual Updates: Use "UMB" or "update memory bank" as a fallback when:

  - Ending a session unexpectedly

  - Halting mid-task

  - Recovering from connection issues

  - Forcing a full synchronization

Apache 2.0 © 2025 GreatScottyMac


Alternative AI tools for roo-code-memory-bank

Similar Open Source Tools


agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

hia

HIA (Health Insights Agent) is an AI agent designed to analyze blood reports and provide personalized health insights. It features an intelligent agent-based architecture with multi-model cascade system, in-context learning, PDF upload and text extraction, secure user authentication, session history tracking, and a modern UI. The tech stack includes Streamlit for frontend, Groq for AI integration, Supabase for database, PDFPlumber for PDF processing, and Supabase Auth for authentication. The project structure includes components for authentication, UI, configuration, services, agents, and utilities. Contributions are welcome, and the project is licensed under MIT.

llm4s

LLM4S provides a simple, robust, and scalable framework for building Large Language Model (LLM) applications in Scala. It aims to leverage Scala's type safety, functional programming, JVM ecosystem, concurrency, and performance advantages to create reliable and maintainable AI-powered applications. The framework supports multi-provider integration, execution environments, error handling, Model Context Protocol (MCP) support, agent frameworks, multimodal generation, and Retrieval-Augmented Generation (RAG) workflows. It also offers observability features like detailed trace logging, monitoring, and analytics for debugging and performance insights.

open-health

OpenHealth is an AI health assistant that helps users manage their health data by leveraging AI and personal health information. It allows users to consolidate health data, parse it smartly, and engage in contextual conversations with GPT-powered AI. The tool supports various data sources like blood test results, health checkup data, personal physical information, family history, and symptoms. OpenHealth aims to empower users to take control of their health by combining data and intelligence for actionable health management.

HuatuoGPT-o1

HuatuoGPT-o1 is a medical language model designed for advanced medical reasoning. It can identify mistakes, explore alternative strategies, and refine answers. The model leverages verifiable medical problems and a specialized medical verifier to guide complex reasoning trajectories and enhance reasoning through reinforcement learning. The repository provides access to models, data, and code for HuatuoGPT-o1, allowing users to deploy the model for medical reasoning tasks.

WeKnora

WeKnora is a document understanding and semantic retrieval framework based on large language models (LLM), designed specifically for scenarios with complex structures and heterogeneous content. The framework adopts a modular architecture, integrating multimodal preprocessing, semantic vector indexing, intelligent recall, and large model generation reasoning to build an efficient and controllable document question-answering process. The core retrieval process is based on the RAG (Retrieval-Augmented Generation) mechanism, combining context-relevant segments with language models to achieve higher-quality semantic answers. It supports various document formats, intelligent inference, flexible extension, efficient retrieval, ease of use, and security and control. Suitable for enterprise knowledge management, scientific literature analysis, product technical support, legal compliance review, and medical knowledge assistance.

Starmoon

Starmoon is an affordable, compact AI-enabled device that can understand and respond to your emotions with empathy. It offers supportive conversations and personalized learning assistance. The device is cost-effective, voice-enabled, open-source, compact, and aims to reduce screen time. Users can assemble the device themselves using off-the-shelf components and deploy it locally for data privacy. Starmoon integrates various APIs for AI language models, speech-to-text, text-to-speech, and emotion intelligence. The hardware setup involves components like ESP32S3, microphone, amplifier, speaker, LED light, and button, along with software setup instructions for developers. The project also includes a web app, backend API, and background task dashboard for monitoring and management.

eko

Eko is a lightweight and flexible command-line tool for managing environment variables in your projects. It allows you to easily set, get, and delete environment variables for different environments, making it simple to manage configurations across development, staging, and production environments. With Eko, you can streamline your workflow and ensure consistency in your application settings without the need for complex setup or configuration files.

nexa-sdk

Nexa SDK is a comprehensive toolkit supporting ONNX and GGML models for text generation, image generation, vision-language models (VLM), and text-to-speech (TTS) capabilities. It offers an OpenAI-compatible API server with JSON schema mode and streaming support, along with a user-friendly Streamlit UI. Users can run Nexa SDK on any device with a Python environment, and GPU acceleration is supported. The toolkit provides model support, a conversion engine, and an inference engine for various tasks.

Automodel

Automodel is a Python library for automating the process of building and evaluating machine learning models. It provides a set of tools and utilities to streamline the model development workflow, from data preprocessing to model selection and evaluation. With Automodel, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to find the best model for their dataset. The library is designed to be user-friendly and customizable, allowing users to define their own pipelines and workflows. Automodel is suitable for data scientists, machine learning engineers, and anyone looking to quickly build and test machine learning models without the need for manual intervention.

nuitrack-sdk

Nuitrack™ is an ultimate 3D body tracking solution developed by 3DiVi Inc. It enables body motion analytics applications for virtually any widespread depth sensors and hardware platforms, supporting a wide range of applications from real-time gesture recognition on embedded platforms to large-scale multisensor analytical systems. Nuitrack provides highly-sophisticated 3D skeletal tracking, basic facial analysis, hand tracking, and gesture recognition APIs for UI control. It offers two skeletal tracking engines: classical for embedded hardware and AI for complex poses, providing a human-centric spatial understanding tool for natural and intelligent user engagement.

shards

Shards is a high-performance, multi-platform, type-safe programming language designed for visual development. It is a dataflow visual programming language that enables building full-fledged apps and games without traditional coding. Shards features automatic type checking, optimized shard implementations for high performance, and an intuitive visual workflow for beginners. The language allows seamless round-trip engineering between code and visual models, empowering users to create multi-platform apps easily. Shards also powers an upcoming AI-powered game creation system, enabling real-time collaboration and game development in a low to no-code environment.

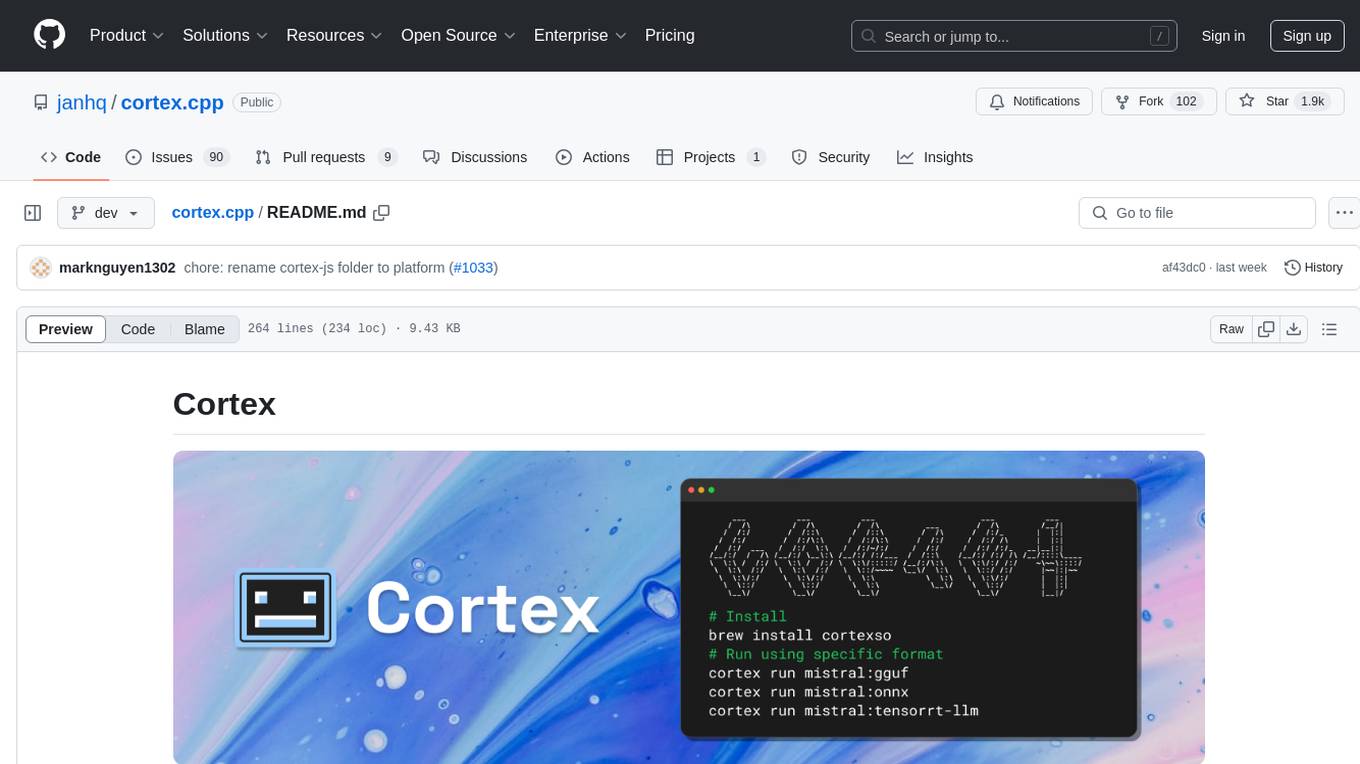

cortex.cpp

Cortex is a C++ AI engine with a Docker-like command-line interface and client libraries. It supports running AI models using ONNX, TensorRT-LLM, and llama.cpp engines. Cortex can function as a standalone server or be integrated as a library. The tool provides support for various engines and models, allowing users to easily deploy and interact with AI models. It offers a range of CLI commands for managing models, embeddings, and engines, as well as a REST API for interacting with models. Cortex is designed to simplify the deployment and usage of AI models in C++ applications.

Q-Bench

Q-Bench is a benchmark for general-purpose foundation models on low-level vision, focusing on multi-modality LLMs performance. It includes three realms for low-level vision: perception, description, and assessment. The benchmark datasets LLVisionQA and LLDescribe are collected for perception and description tasks, with open submission-based evaluation. An abstract evaluation code is provided for assessment using public datasets. The tool can be used with the datasets API for single images and image pairs, allowing for automatic download and usage. Various tasks and evaluations are available for testing MLLMs on low-level vision tasks.

camel

CAMEL is an open-source library designed for the study of autonomous and communicative agents. We believe that studying these agents on a large scale offers valuable insights into their behaviors, capabilities, and potential risks. To facilitate research in this field, we implement and support various types of agents, tasks, prompts, models, and simulated environments.


For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.