AI Safety Initiative

Building a Responsible AI Future

The AI Safety Initiative is a coalition of experts that develops and delivers AI guidance and tools to help organizations deploy safe, responsible, and compliant AI solutions. Drawing on vendor-neutral research, training programs, and industry experts worldwide, the initiative publishes AI best practices and tools. It offers certifications, training, and resources to help organizations navigate AI governance, compliance, and security. Its focus areas are AI technology, risk, governance, compliance, controls, and organizational responsibilities.

Alternative AI tools for AI Safety Initiative

Similar sites

Lumenova AI

Lumenova AI is a platform for making AI ethical, transparent, and compliant. It covers AI governance, evaluation and assessment of AI models, proactive risk management, and simplified compliance management. Lumenova AI aims to help enterprises adopt responsible AI practices and comply with regulations.

Enzai

Enzai is an AI governance platform that helps businesses comply with AI regulations and standards. It offers solutions for model risk management, generative AI, and EU AI Act compliance, with features including assessments, policies, an AI registry, and a governance overview. Enzai describes the platform as easy to set up and efficient to use, with support from AI experts, and positions itself as a one-stop shop for AI governance with solutions tailored to different use cases and industries.

Credo AI

Credo AI provides AI governance, risk management, and compliance solutions. Its platform helps organizations track, prioritize, and control AI projects so that AI remains profitable, compliant, and safe. The platform is used by a range of organizations, including Fortune 500 companies, government agencies, and non-profit organizations.

Fairo

Fairo is a platform that facilitates Responsible AI Governance, offering tools for reducing AI hallucinations, managing AI agents and assets, evaluating AI systems, and ensuring compliance with various regulations. It provides a comprehensive solution for organizations to align their AI systems ethically and strategically, automate governance processes, and mitigate risks. Fairo aims to make responsible AI transformation accessible to organizations of all sizes, enabling them to build technology that is profitable, ethical, and transformative.

Credo AI

Credo AI provides AI governance, risk management, and compliance software. Its platform helps organizations adopt AI safely and responsibly while complying with regulations and standards. With Credo AI, organizations can track and prioritize AI projects, assess AI vendor models for risk and compliance, create artifacts for audits, and more.

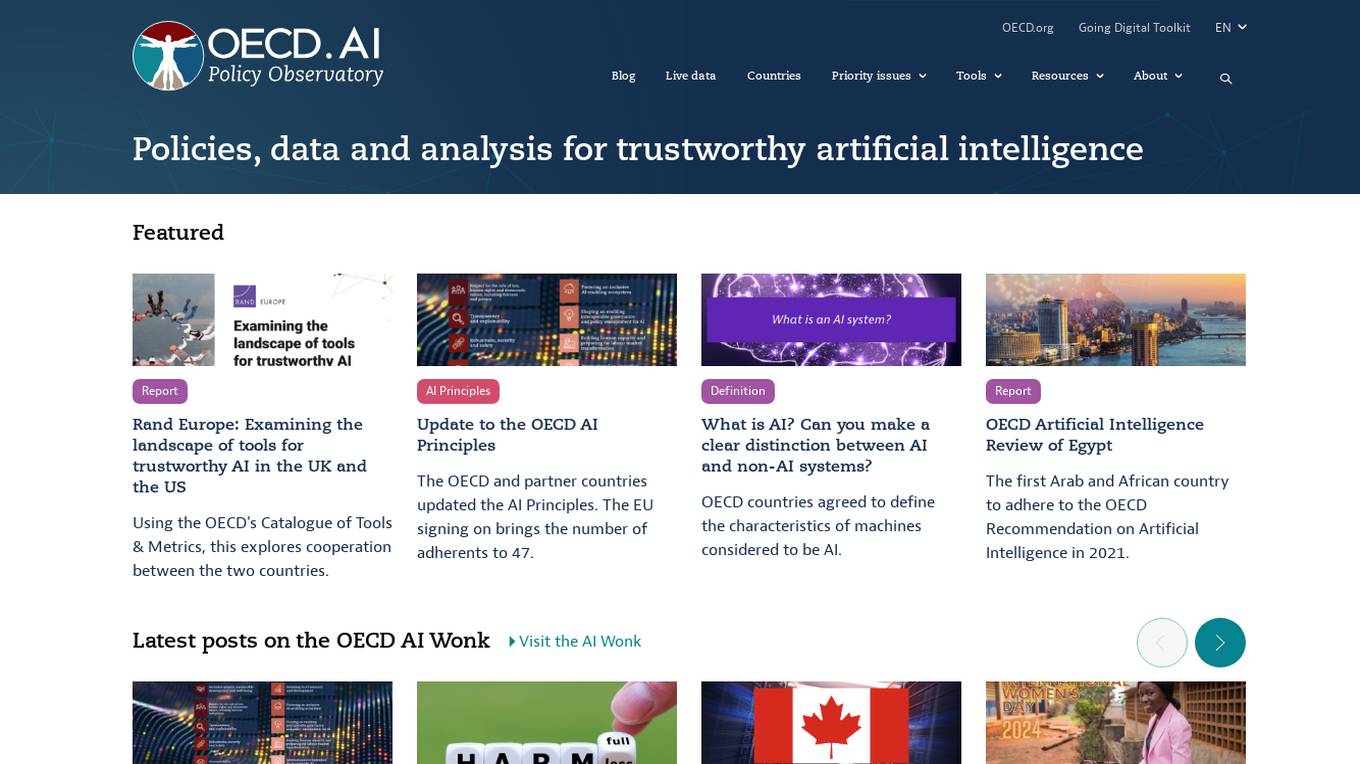

OECD.AI

The OECD Artificial Intelligence Policy Observatory, also known as OECD.AI, is a platform that focuses on AI policy issues, risks, and accountability. It provides resources, tools, and metrics to build and deploy trustworthy AI systems. The platform aims to promote innovative and trustworthy AI through collaboration with countries, stakeholders, experts, and partners. Users can access information on AI incidents, AI principles, policy areas, publications, and videos related to AI. OECD.AI emphasizes the importance of data privacy, generative AI management, AI computing capacities, and AI's potential futures.

Responsible AI Institute

The Responsible AI Institute is a global non-profit organization dedicated to equipping organizations and AI professionals with tools and knowledge to create, procure, and deploy AI systems that are safe and trustworthy. They offer independent assessments, conformity assessments, and certification programs to ensure that AI systems align with internal policies, regulations, laws, and best practices for responsible technology use. The institute also provides resources, news, and a community platform for members to collaborate and stay informed about responsible AI practices and regulations.

AIGA AI Governance Framework

The AIGA AI Governance Framework is a practice-oriented framework for implementing responsible AI. It gives organizations a systematic approach to AI governance that covers the entire process of AI system development and operations. The framework supports compliance with the European Union's AI regulation (the EU AI Act) and serves as a practical guide for organizations aiming for more responsible AI practices. It is designed to support the development and deployment of transparent, accountable, fair, and non-maleficent AI systems.

Robust Intelligence

Robust Intelligence is an end-to-end security solution for AI applications. It automates the evaluation of AI models, data, and files for security and safety vulnerabilities and provides guardrails for AI applications in production against integrity, privacy, abuse, and availability violations. Robust Intelligence helps enterprises remove AI security blockers, save time and resources, meet AI safety and security standards, align AI security across stakeholders, and protect against evolving threats.

AltrumAI

AltrumAI is a platform that lets enterprises control and monitor their AI systems in real time. It helps them deploy and scale AI safely and compliantly while addressing compliance, security, and operational risks. It gives teams intuitive policy configuration and enforcement for integrating generative AI securely across the enterprise. Through guardrails and real-time monitoring, AltrumAI aims to provide broad AI risk coverage and mitigate risks such as bias, data leaks, and unreliable AI outputs.

BABL AI

BABL AI is an auditing firm that audits and certifies AI systems, consults on responsible AI best practices, and offers online education on related topics. Its certified independent auditors assess whether AI systems comply with global regulations, and its online courses are aimed at aspiring AI auditors. Its research division, The Algorithmic Bias Lab, develops methodologies and best practices for algorithmic auditing and responsible AI. BABL AI emphasizes expertise, integrity, affordability, and efficiency in its AI auditing work.

Monitaur

Monitaur is AI governance software that gives organizations a single platform for managing the entire lifecycle of their AI systems. It brings data, governance, risk, and compliance teams together to mitigate AI risk, realize AI's full potential, and turn governance intentions into action. Monitaur's SaaS products offer user-friendly workflows that document each stage of the AI lifecycle, providing a single source of truth for an organization's AI systems.

Adversa AI

Adversa AI is a platform that provides Secure AI Awareness, Assessment, and Assurance solutions for various industries to mitigate AI risks. The platform focuses on LLM Security, Privacy, Jailbreaks, Red Teaming, Chatbot Security, and AI Face Recognition Security. Adversa AI helps enable AI transformation by protecting it from cyber threats, privacy issues, and safety incidents. The platform offers comprehensive research, advisory services, and expertise in the field of AI security.

Aporia

Aporia is an AI control platform that provides real-time guardrails and security for AI applications. It offers features such as hallucination mitigation, prompt injection prevention, data leakage prevention, and more. Aporia helps businesses control and mitigate risks associated with AI, ensuring the safe and responsible use of AI technology.

K2 AI

K2 AI is an AI consulting company that offers a range of services from ideation to impact, focusing on AI strategy, implementation, operation, and research. They support and invest in emerging start-ups and push knowledge boundaries in AI. The company helps executives assess organizational strengths, prioritize AI use cases, develop sustainable AI strategies, and continuously monitor and improve AI solutions. K2 AI also provides executive briefings, model development, and deployment services to catalyze AI initiatives. The company aims to deliver business value through rapid, user-centric, and data-driven AI development.

For similar tasks

Visor.ai

Visor.ai is an agentic AI platform for enterprises. Its AI agents learn from a business, take actions across systems, and can reach an automation rate of up to 85%. The platform aims to transform operations and improve customer interactions through intelligent AI experiences. Its features include real-time visibility, actionable insights, and analytics, as well as safe-AI controls such as guardrails and oversight, all powered by its Nexa technology.

For similar jobs

INSAIT

INSAIT is an Institute for Computer Science, Artificial Intelligence, and Technology located in Sofia, Bulgaria. The institute focuses on cutting-edge research areas such as Computer Vision, Robotics, Quantum Computing, Machine Learning, and Regulatory AI Compliance. INSAIT is known for its collaboration with top universities and organizations, as well as its commitment to fostering a diverse and inclusive environment for students and researchers.
