Generative-AI-Drug-Discovery

Drug Discovery Generative Artificial Intelligence Software Innovation

Stars: 61

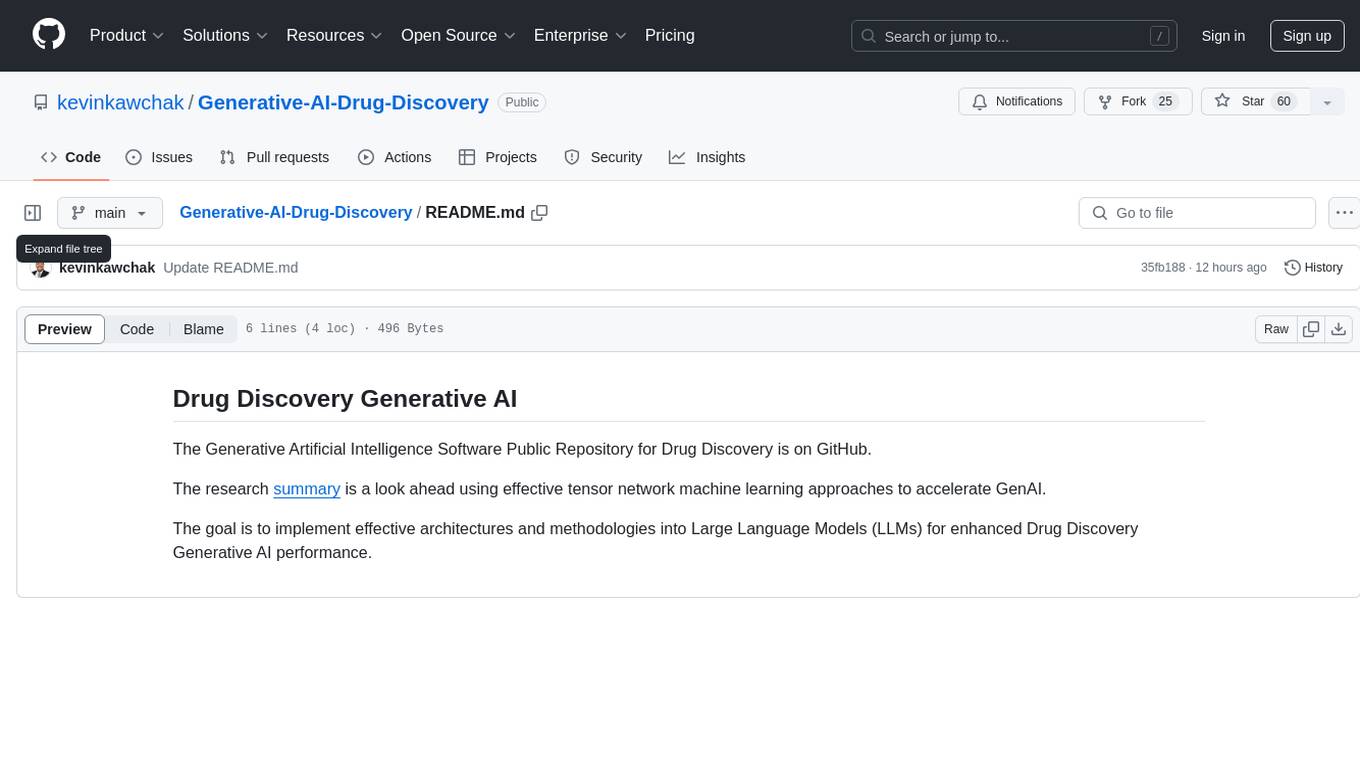

Generative-AI-Drug-Discovery is a public GitHub repository focused on using tensor network machine learning approaches to accelerate generative AI (GenAI) for drug discovery. It aims to integrate effective architectures and methodologies into Large Language Models (LLMs) to improve the performance of generative AI for drug discovery.

README:

The Generative Artificial Intelligence Software Repository for Drug Discovery is on GitHub.

The goal is to integrate effective architectures and methodologies into Large Language Models (LLMs) to improve generative AI performance in drug discovery.

Alternative AI tools for Generative-AI-Drug-Discovery

Similar Open Source Tools

New-AI-Drug-Discovery

New AI Drug Discovery is a repository focused on applications of Large Language Models (LLMs) in drug discovery. It provides resources, tools, and examples for applying LLMs in the pharmaceutical industry. The repository aims to show how AI-driven approaches can accelerate the drug discovery process, improve target identification, and optimize molecular design. By exploring the intersection of artificial intelligence and drug development, it offers insights into recent advances in computational biology and cheminformatics.

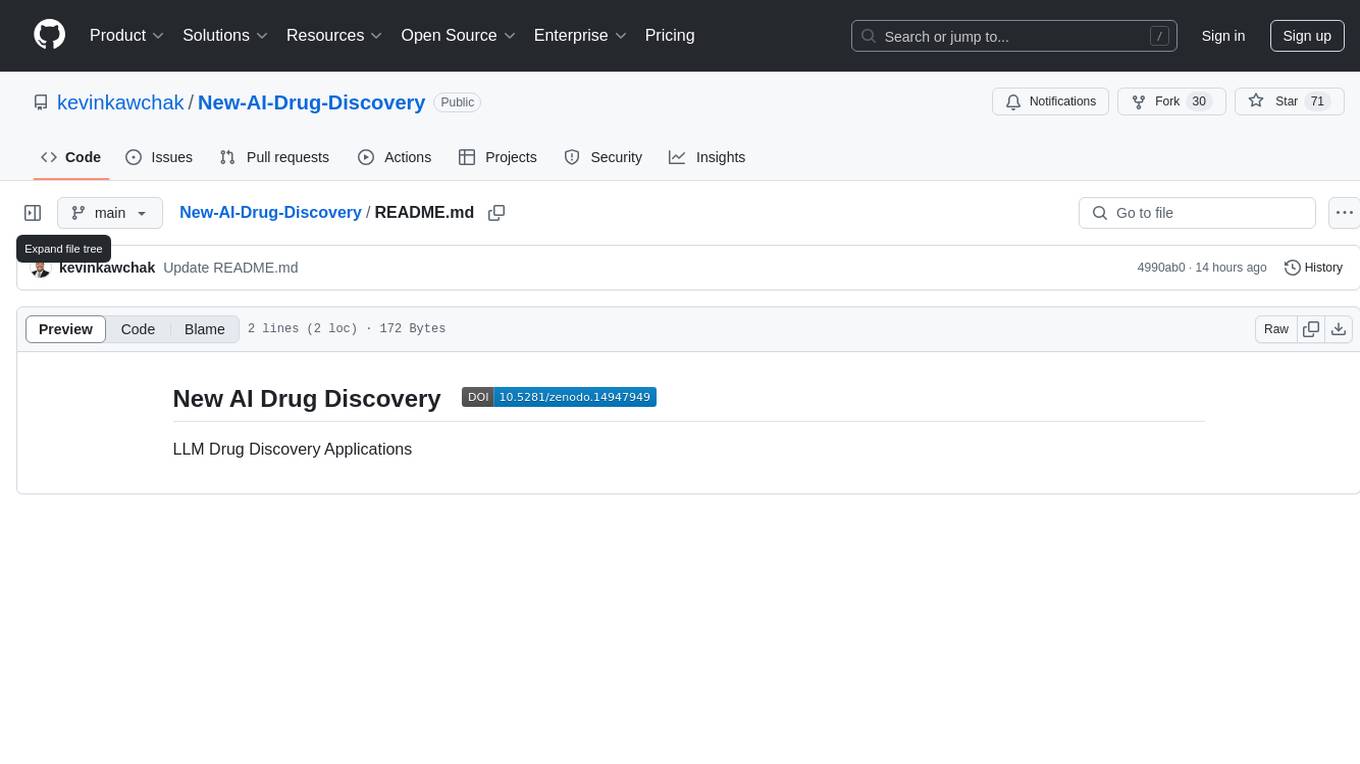

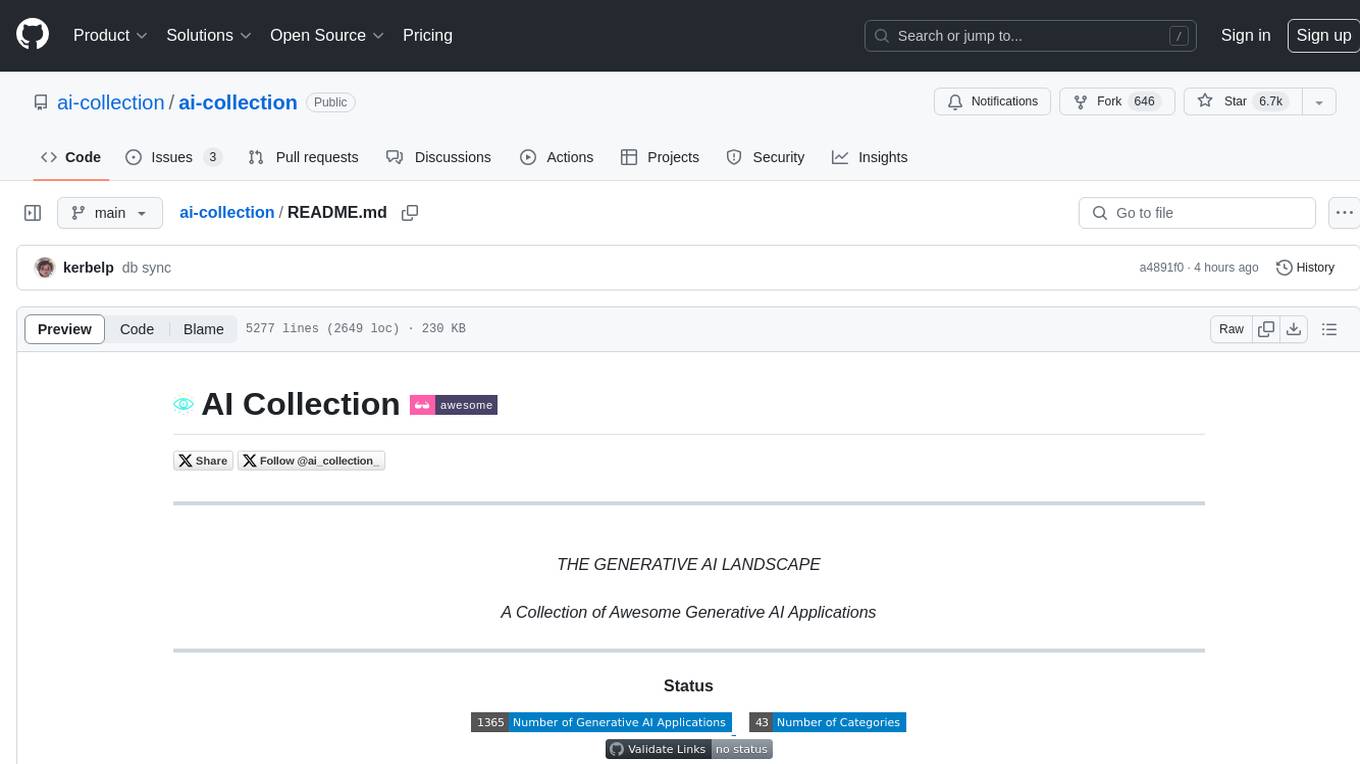

ai-collection

The ai-collection repository is a collection of various artificial intelligence projects and tools aimed at helping developers and researchers in the field of AI. It includes implementations of popular AI algorithms, datasets for training machine learning models, and resources for learning AI concepts. The repository serves as a valuable resource for anyone interested in exploring the applications of artificial intelligence in different domains.

OpenManus-RL

OpenManus-RL is an open-source initiative focused on enhancing reasoning and decision-making capabilities of large language models (LLMs) through advanced reinforcement learning (RL)-based agent tuning. The project explores novel algorithmic structures, diverse reasoning paradigms, sophisticated reward strategies, and extensive benchmark environments. It aims to push the boundaries of agent reasoning and tool integration by integrating insights from leading RL tuning frameworks and continuously updating progress in a dynamic, live-streaming fashion.

AReaL

AReaL (Ant Reasoning RL) is an open-source reinforcement learning system developed at the RL Lab, Ant Research. It is designed for training Large Reasoning Models (LRMs) in a fully open and inclusive manner. AReaL provides reproducible experiments for 1.5B and 7B LRMs, showcasing its scalability and performance across diverse computational budgets. The system follows an iterative training process to enhance model performance, with a focus on mathematical reasoning tasks. AReaL is equipped to adapt to different computational resource settings, enabling users to easily configure and launch training trials. Future plans include support for advanced models, optimizations for distributed training, and exploring research topics to enhance LRMs' reasoning capabilities.

llm_rl

llm_rl is a repository that combines large language model (LLM) and reinforcement learning (RL) techniques. It likely focuses on using language models in reinforcement learning tasks, such as natural language understanding and generation. The repository may contain implementations of algorithms that use both LLMs and RL to improve performance on various tasks. Developers interested in the intersection of language models and reinforcement learning may find it useful for research and experimentation.

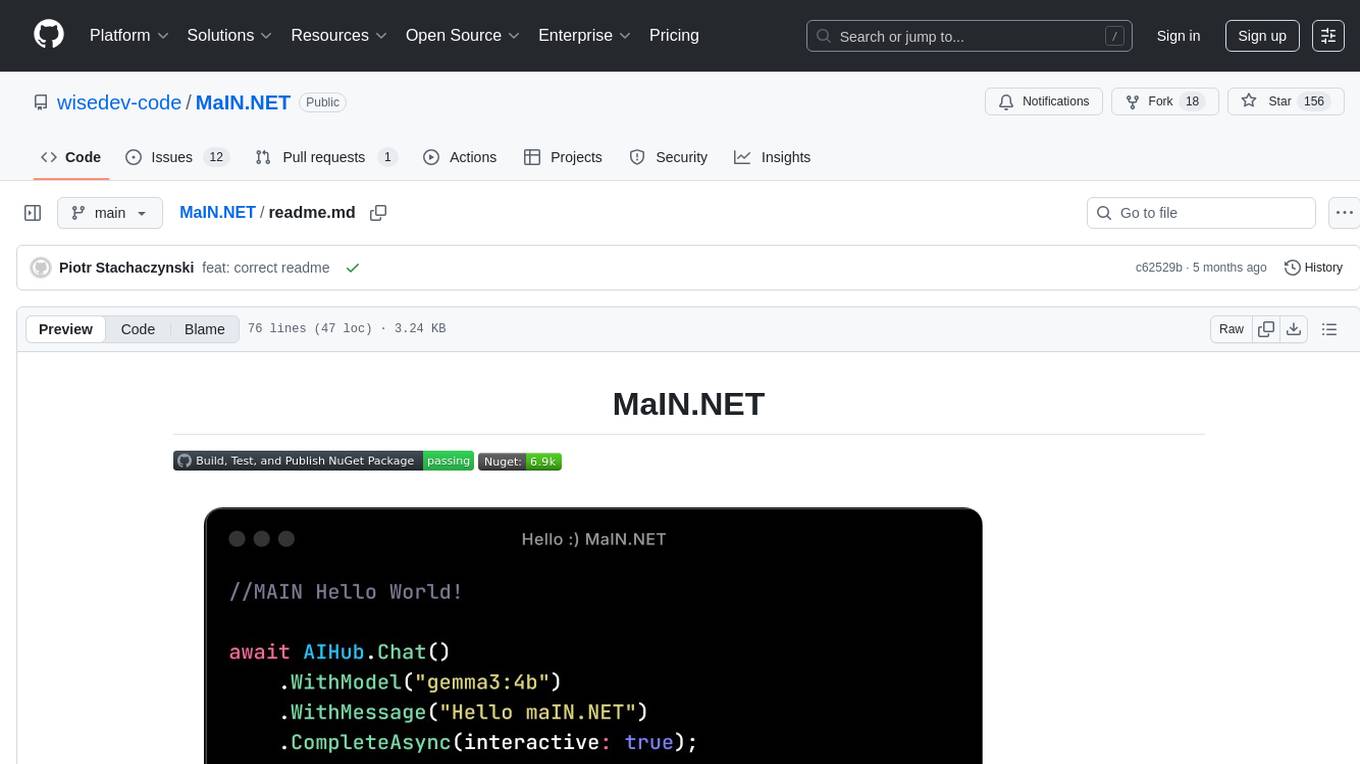

MaIN.NET

MaIN.NET (Modular Artificial Intelligence Network) is a versatile .NET package designed to streamline the integration of large language models (LLMs) into advanced AI workflows. It offers a flexible and robust foundation for developing chatbots, automating processes, and exploring innovative AI techniques. The package connects diverse AI methods into one unified ecosystem, empowering developers with a low-code philosophy to create powerful AI applications with ease.

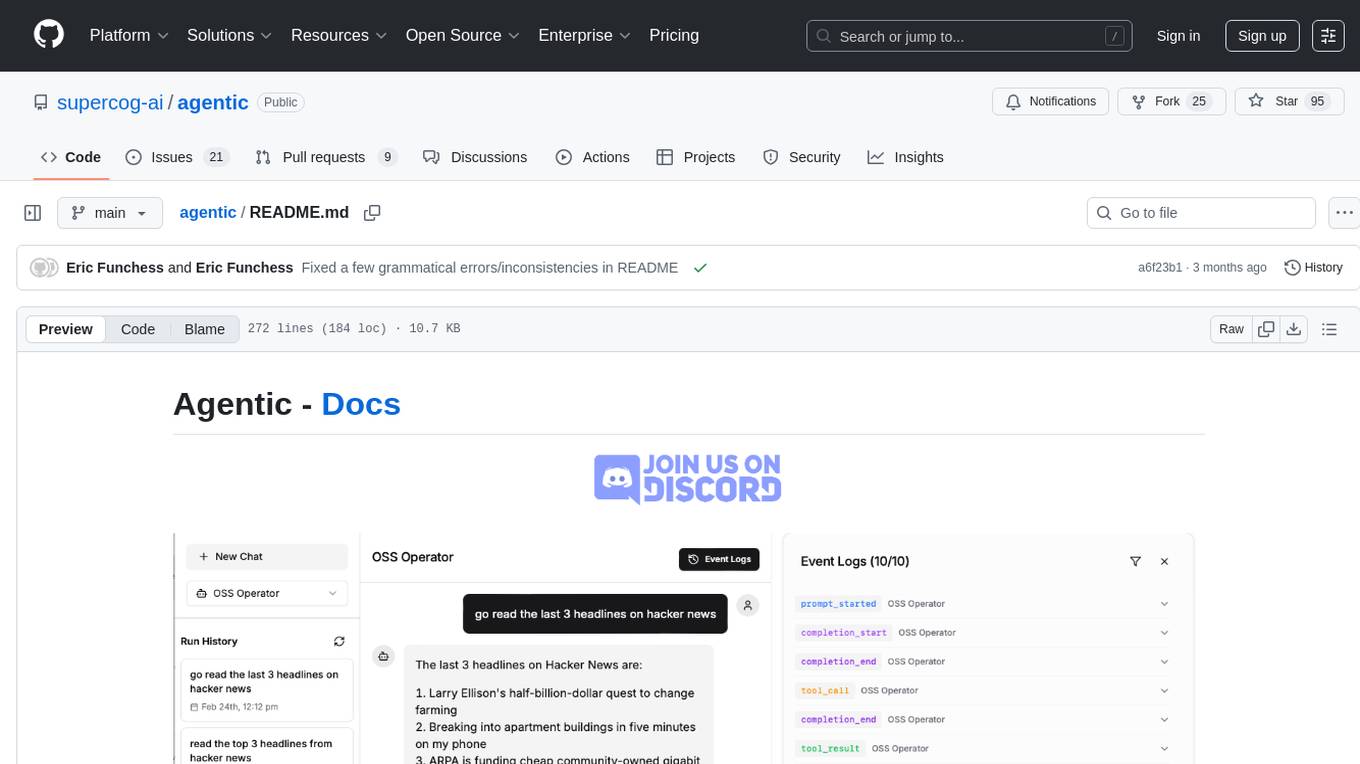

agentic

Agentic is a lightweight and flexible Python library for building multi-agent systems. It provides a simple and intuitive API for creating and managing agents, defining their behaviors, and simulating interactions in a multi-agent environment. With Agentic, users can easily design and implement complex agent-based models to study emergent behaviors, social dynamics, and decentralized decision-making processes. The library supports various agent architectures, communication protocols, and simulation scenarios, making it suitable for a wide range of research and educational applications in the fields of artificial intelligence, machine learning, social sciences, and robotics.

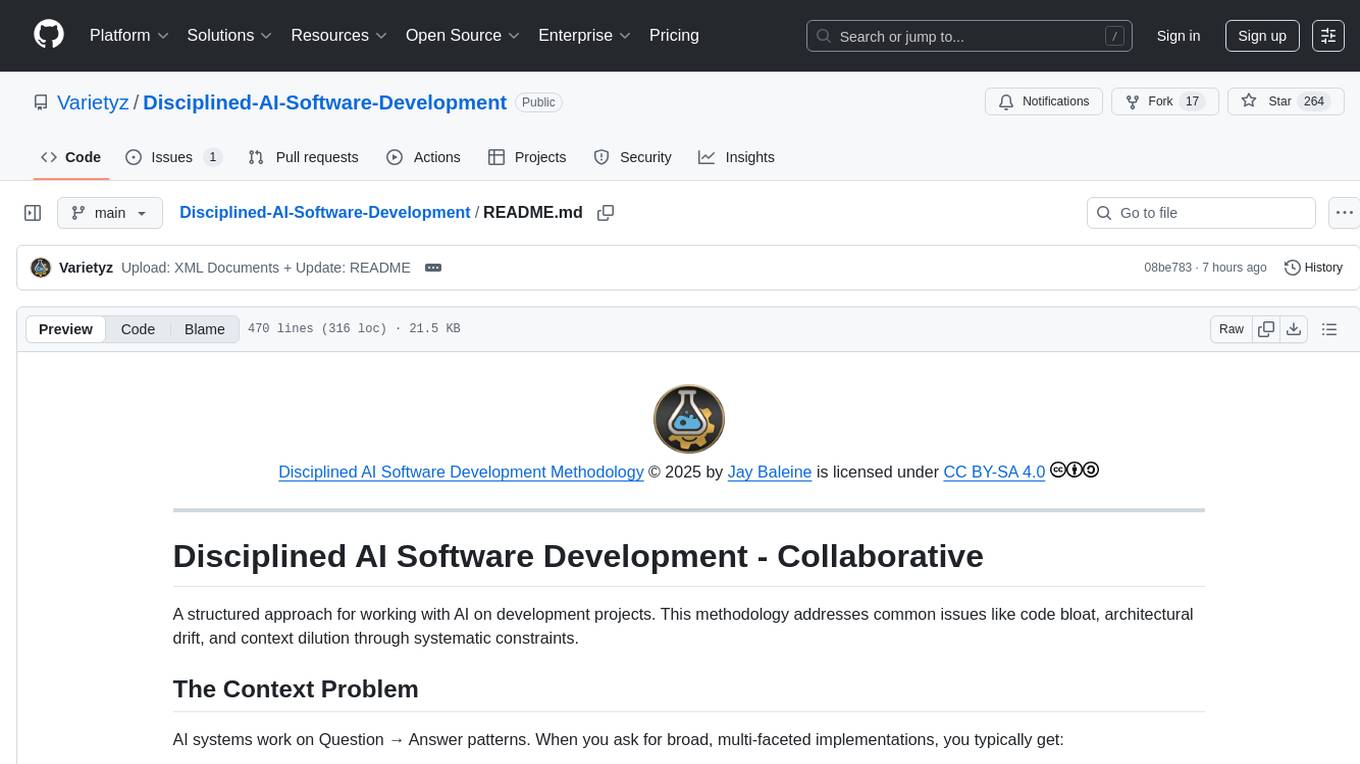

Disciplined-AI-Software-Development

Disciplined AI Software Development is a comprehensive repository that provides guidelines and best practices for developing AI software in a disciplined manner. It covers topics such as project organization, code structure, documentation, testing, and deployment strategies to ensure the reliability, scalability, and maintainability of AI applications. The repository aims to help developers and teams navigate the complexities of AI development by offering practical advice and examples to follow.

ai-workshop-code

The ai-workshop-code repository contains code examples and tutorials for various artificial intelligence concepts and algorithms. It serves as a practical resource for individuals looking to learn and implement AI techniques in their projects. The repository covers a wide range of topics, including machine learning, deep learning, natural language processing, computer vision, and reinforcement learning. By exploring the code and following the tutorials, users can gain hands-on experience with AI technologies and enhance their understanding of how these algorithms work in practice.

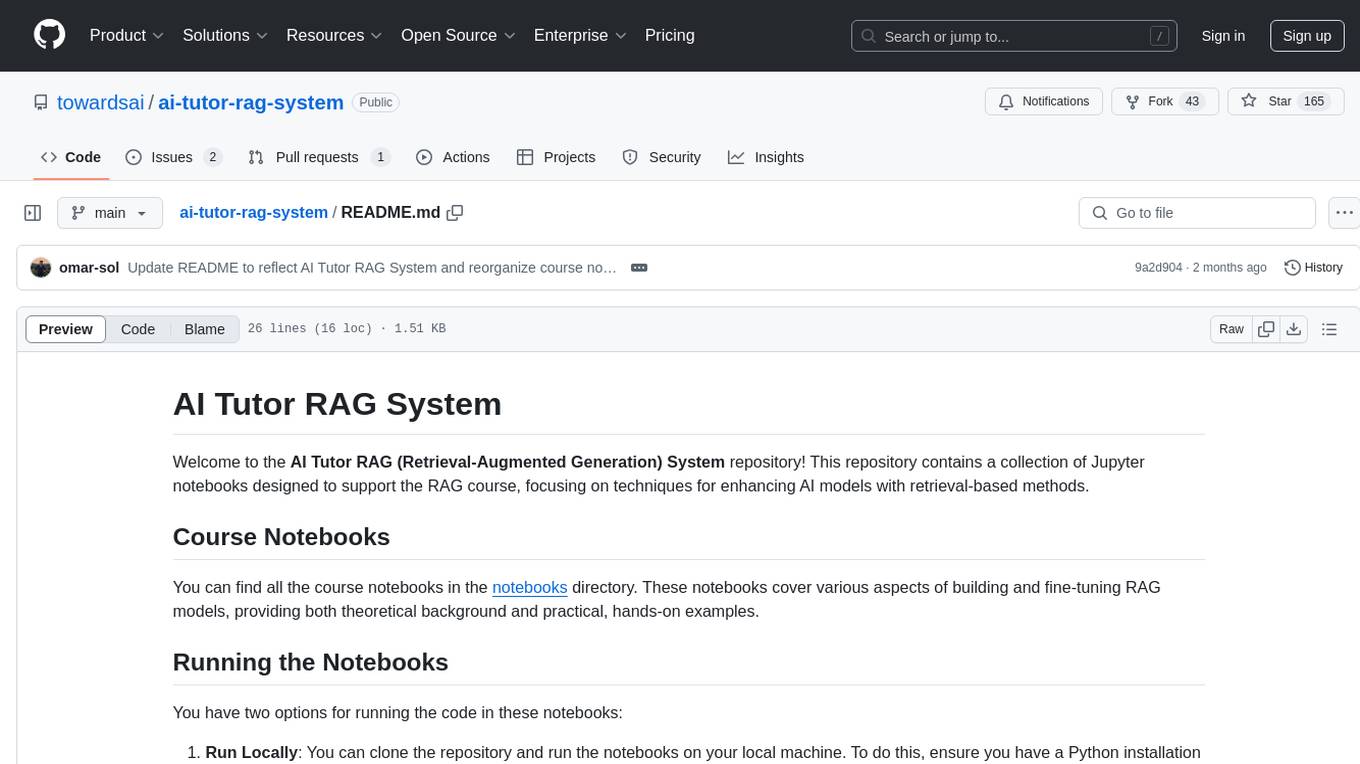

ai-tutor-rag-system

The AI Tutor RAG System repository contains Jupyter notebooks supporting the RAG course, focusing on enhancing AI models with retrieval-based methods. It covers foundational and advanced concepts in retrieval-augmented generation, including data retrieval techniques, model integration with retrieval systems, and practical applications of RAG in real-world scenarios.

sciml.ai

SciML.ai is an open source software organization dedicated to unifying packages for scientific machine learning. It focuses on developing modular scientific simulation support software, including differential equation solvers, inverse problems methodologies, and automated model discovery. The organization aims to provide a diverse set of tools with a common interface, creating a modular, easily-extendable, and highly performant ecosystem for scientific simulations. The website serves as a platform to showcase SciML organization's packages and share news within the ecosystem. Pull requests are encouraged for contributions.

multimodal_cognitive_ai

The multimodal cognitive AI repository focuses on research work related to multimodal cognitive artificial intelligence. It explores the integration of multiple modes of data such as text, images, and audio to enhance AI systems' cognitive capabilities. The repository likely contains code, datasets, and research papers related to multimodal AI applications, including natural language processing, computer vision, and audio processing. Researchers and developers interested in advancing AI systems' understanding of multimodal data can find valuable resources and insights in this repository.

Awesome-Efficient-MoE

Awesome Efficient MoE is a GitHub repository that provides an implementation of Mixture of Experts (MoE) models for efficient deep learning. The repository includes code for training and using MoE models, which are neural network architectures that combine multiple expert networks to improve performance on complex tasks. MoE models are particularly useful for handling diverse data distributions and capturing complex patterns in data. The implementation in this repository is designed to be efficient and scalable, making it suitable for training large-scale MoE models on modern hardware. The code is well-documented and easy to use, making it accessible for researchers and practitioners interested in leveraging MoE models for their deep learning projects.
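The idea of combining multiple expert networks, as described above, can be illustrated with a minimal sketch. This is not code from the Awesome-Efficient-MoE repository: the names `moe_forward`, `make_linear_expert`, and `gate_weights` are hypothetical, the experts are toy scalar-linear functions, and top-k softmax gating is assumed as one common routing choice.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_linear_expert(weight, bias):
    """Toy expert (hypothetical): applies weight * x + bias element-wise."""
    def expert(x):
        return [weight * xi + bias for xi in x]
    return expert


def moe_forward(x, gate_weights, experts, top_k=2):
    """Route input x to the top_k experts and mix their outputs.

    gate_weights holds one row per expert; the gate logit for an expert
    is the dot product of its row with x.
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Pick the indices of the top_k highest-scoring experts.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Renormalize the gate scores over the selected experts only.
    probs = softmax([logits[i] for i in top])
    output = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)
        output = [o + p * yi for o, yi in zip(output, y)]
    return output, dict(zip(top, probs))


if __name__ == "__main__":
    experts = [
        make_linear_expert(1.0, 0.0),
        make_linear_expert(2.0, 0.0),
        make_linear_expert(-1.0, 0.0),
    ]
    gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
    out, weights = moe_forward([1.0, 1.0], gate_weights, experts, top_k=2)
    print(out, weights)
```

Only the selected experts are evaluated for a given input, which is where the efficiency of sparse MoE layers comes from; real implementations use learned gates and neural-network experts rather than the fixed toy values here.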

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

GenerativeAIExamples

NVIDIA Generative AI Examples are state-of-the-art examples that are easy to deploy, test, and extend. All examples run on the high performance NVIDIA CUDA-X software stack and NVIDIA GPUs. These examples showcase the capabilities of NVIDIA's Generative AI platform, which includes tools, frameworks, and models for building and deploying generative AI applications.

For similar tasks

bionemo-framework

NVIDIA BioNeMo Framework is a collection of programming tools, libraries, and models for computational drug discovery. It accelerates building and adapting biomolecular AI models by providing domain-specific, optimized models and tooling for GPU-based computational resources. The framework offers comprehensive documentation and support for both community and enterprise users.

New-AI-Drug-Discovery

New AI Drug Discovery is a repository focused on applications of Large Language Models (LLMs) in drug discovery. It provides resources, tools, and examples for applying LLMs in the pharmaceutical industry. The repository aims to show how AI-driven approaches can accelerate the drug discovery process, improve target identification, and optimize molecular design. By exploring the intersection of artificial intelligence and drug development, it offers insights into recent advances in computational biology and cheminformatics.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

* Self-contained, with no need for a DBMS or cloud service.
* OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
* Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.