Best AI tools for Safety Training

20 - AI tool Sites

European Agency for Safety and Health at Work

The European Agency for Safety and Health at Work (EU-OSHA) is an EU agency that provides information, statistics, legislation, and risk assessment tools on occupational safety and health (OSH). The agency's mission is to make Europe's workplaces safer, healthier, and more productive.

AI Safety Initiative

The AI Safety Initiative is a premier coalition of trusted experts that aims to develop and deliver essential AI guidance and tools for organizations to deploy safe, responsible, and compliant AI solutions. Through vendor-neutral research, training programs, and global industry experts, the initiative provides authoritative AI best practices and tools. It offers certifications, training, and resources to help organizations navigate the complexities of AI governance, compliance, and security. The initiative focuses on AI technology, risk, governance, compliance, controls, and organizational responsibilities.

Storytell.ai

Storytell.ai is an enterprise-grade AI platform that offers Business-Grade Intelligence across data, focusing on boosting productivity for employees and teams. It provides a secure environment with features such as project spaces, multi-LLM chat, task automation, chat with company data, and an enterprise AI security suite. Storytell.ai secures data through end-to-end encryption, encryption at rest, provenance chain tracking, and an AI firewall. It is committed to making AI safe and trustworthy by not training LLMs with user data and by providing audit logs for accountability. The platform continuously monitors and updates security protocols to stay ahead of potential threats.

Converge360

Converge360 is a comprehensive platform that offers a wide range of AI news, training, and education services to professionals in various industries such as education, enterprise IT/development, occupational health & safety, and security. With over 20 media and event brands and more than 30 years of expertise, Converge360 provides top-quality programs tailored to meet the nuanced needs of businesses. The platform utilizes in-house prediction algorithms to gain market insights and offers scalable marketing solutions with cutting-edge technology.

Hify.io

Hify.io is a domain selling platform that offers users the opportunity to purchase premium verified domains. The platform provides a secure and hassle-free experience for buying or leasing domain names. With fast and easy transfers, local currency options, and transaction support, Hify.io ensures a smooth process for acquiring the desired domain. The website emphasizes simplicity and safety in domain transactions, making it a reliable choice for individuals or businesses looking to own a domain.

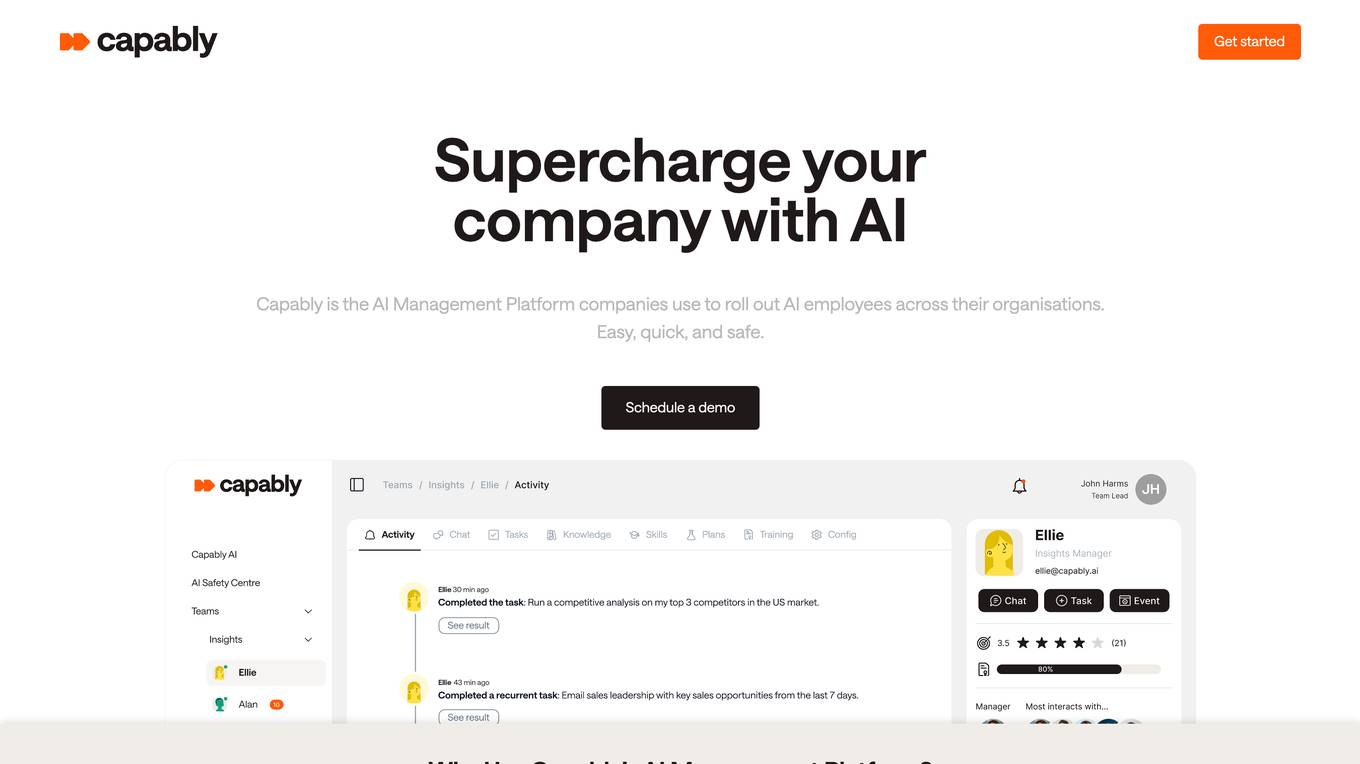

Capably

Capably is an AI Management Platform that helps companies roll out AI employees across their organizations. It provides tools to easily adopt AI, create and onboard AI employees, and monitor AI activity. Capably is designed for business users with no AI expertise and integrates seamlessly with existing workflows and software tools.

Lamini

Lamini is an enterprise-level LLM platform that offers precise recall with Memory Tuning, enabling teams to achieve over 95% accuracy even with large amounts of specific data. It guarantees JSON output and delivers massive throughput for inference. Lamini is designed to be deployed anywhere, including air-gapped environments, and supports training and inference on Nvidia or AMD GPUs. The platform is known for its factual LLMs and reengineered decoder that ensures 100% schema accuracy in the JSON output.

Mursion

Mursion is an immersive learning platform that utilizes human-powered AI to provide training simulations for developing interpersonal skills in various workplace scenarios. The platform offers 1:1 immersive training sessions with virtual avatars, designed to enhance communication and people skills. Mursion's simulations are supported by a team of Simulation Specialists who deliver realistic interactions to help learners practice and improve their skills effectively. The platform is backed by over a decade of research and psychology, focusing on providing a safe and impactful learning environment for individuals across different industries.

Google for Education

Google for Education is an AI-powered platform that offers a wide range of educational resources and tools to enhance teaching and learning experiences. It provides courses and training on various topics, including digital skills, computer science, online safety, and study skills. Educators can access materials to create engaging content, streamline classroom management, and personalize learning using Google AI technologies. The platform aims to empower educators and students by leveraging artificial intelligence to save time, inspire creativity, and improve educational outcomes.

Azure AI Platform

Azure AI Platform by Microsoft offers a comprehensive suite of artificial intelligence services and tools for developers and businesses. It provides a unified platform for building, training, and deploying AI models, as well as integrating AI capabilities into applications. With a focus on generative AI, multimodal models, and large language models, Azure AI empowers users to create innovative AI-driven solutions across various industries. The platform also emphasizes content safety, scalability, and agility in managing AI projects, making it a valuable resource for organizations looking to leverage AI technologies.

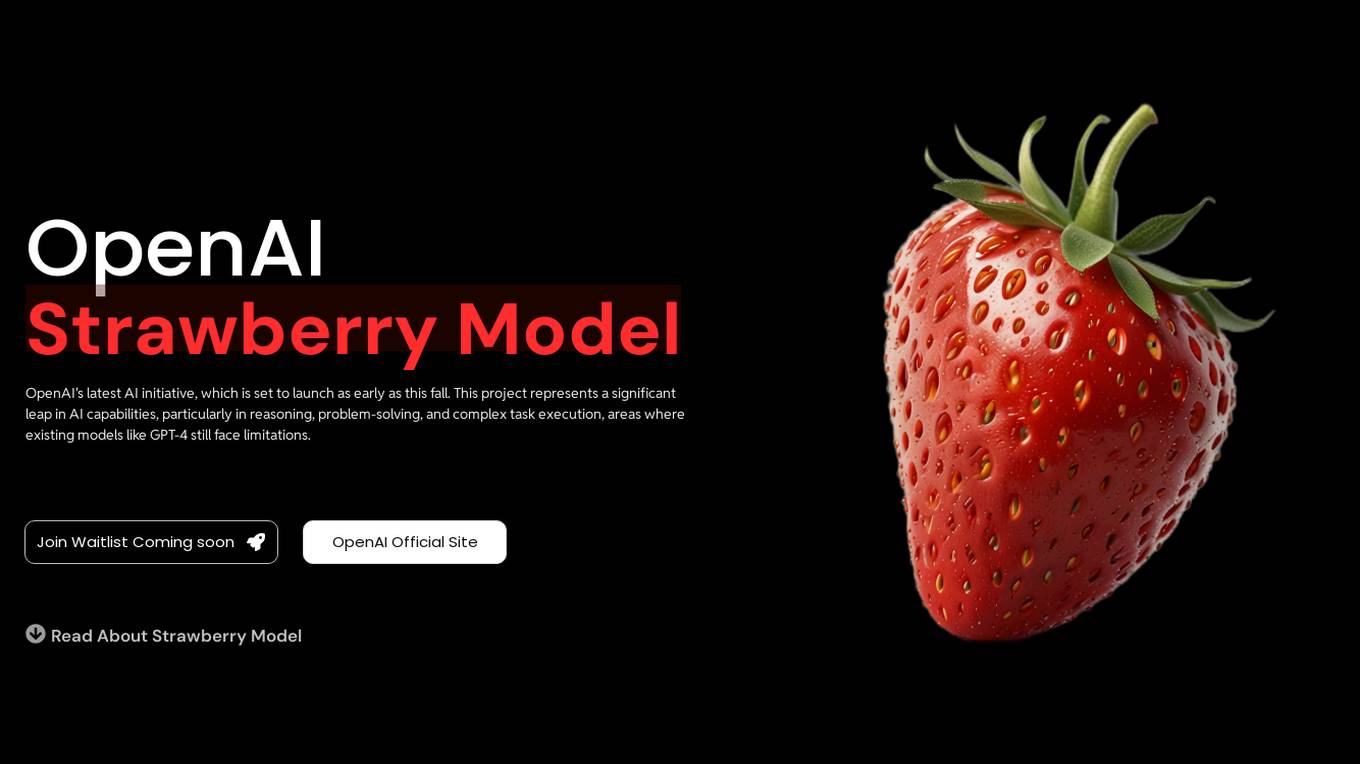

OpenAI Strawberry Model

OpenAI Strawberry Model is a cutting-edge AI initiative that represents a significant leap in AI capabilities, focusing on enhancing reasoning, problem-solving, and complex task execution. It aims to improve AI's ability to handle mathematical problems, programming tasks, and deep research, including long-term planning and action. The project showcases advancements in AI safety and aims to reduce errors in AI responses by generating high-quality synthetic data for training future models. Strawberry is designed to achieve human-like reasoning and is expected to play a crucial role in the development of OpenAI's next major model, codenamed 'Orion.'

MagicSchool.ai

MagicSchool.ai is an AI-powered platform designed specifically for educators and students. It offers a comprehensive suite of 60+ AI tools to help teachers with lesson planning, differentiation, assessment writing, IEP writing, clear communication, and more. MagicSchool.ai is easy to use, with an intuitive interface and built-in training resources. It is also interoperable with popular LMS platforms and offers easy export options. MagicSchool.ai is committed to responsible AI for education, with a focus on safety, privacy, and compliance with FERPA and state privacy laws.

Tavus

Tavus is an AI tool that offers digital twin APIs for video generation and conversational video interfaces. It allows users to create immersive AI-generated video experiences using cutting-edge AI technology. Tavus provides best-in-class models like Phoenix-2 for creating realistic digital replicas with natural face movements. The platform offers rapid training, instant inference, support for 30+ languages, and built-in security features to ensure user privacy and safety. Tavus is preferred by developers and product teams for its developer-first approach, ease of integration, and exceptional customer service.

DTiQ

DTiQ is an AI-powered loss prevention and intelligent video solution for businesses, offering a range of services to enhance security, reduce losses, and optimize operations. The platform combines technology, audits, and training to provide expert-driven solutions tailored to specific business needs. With features like real-time AI alerts, seamless integration of video and POS data, and remote auditing services, DTiQ aims to empower businesses to prevent safety issues, improve customer experiences, and boost operational efficiency.

Granica

Granica is an AI tool designed for data compression and optimization, enabling users to transform petabytes of data into terabytes with self-optimizing, lossless compression. It offers state-of-the-art technology that works seamlessly across various platforms like Iceberg, Delta, Trino, Spark, Snowflake, and Databricks. Granica helps organizations reduce storage costs, improve query performance, and enhance data accessibility for AI and analytics workloads.

Voxel's Safety Intelligence Platform

Voxel's Safety Intelligence Platform is an AI-driven site intelligence platform that empowers safety and operations leaders to make strategic decisions. It provides real-time visibility into critical safety practices, offers custom insights through on-demand dashboards, facilitates risk management with collaborative tools, and promotes a sustainable safety culture. The platform helps enterprises reduce risks, increase efficiency, and enhance workforce safety through innovative AI technology.

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit based in San Francisco. Their mission is to reduce societal-scale risks associated with artificial intelligence (AI) by conducting impactful research, building the field of AI safety researchers, and advocating for safety standards. They offer resources such as a compute cluster for AI/ML safety projects, a blog with in-depth examinations of AI safety topics, and a newsletter providing updates on AI safety developments. CAIS focuses on technical and conceptual research to address the risks posed by advanced AI systems.

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit organization based in San Francisco. They conduct impactful research, advocacy projects, and provide resources to reduce societal-scale risks associated with artificial intelligence (AI). CAIS focuses on technical AI safety research, field-building projects, and offers a compute cluster for AI/ML safety projects. They aim to develop and use AI safely to benefit society, addressing inherent risks and advocating for safety standards.

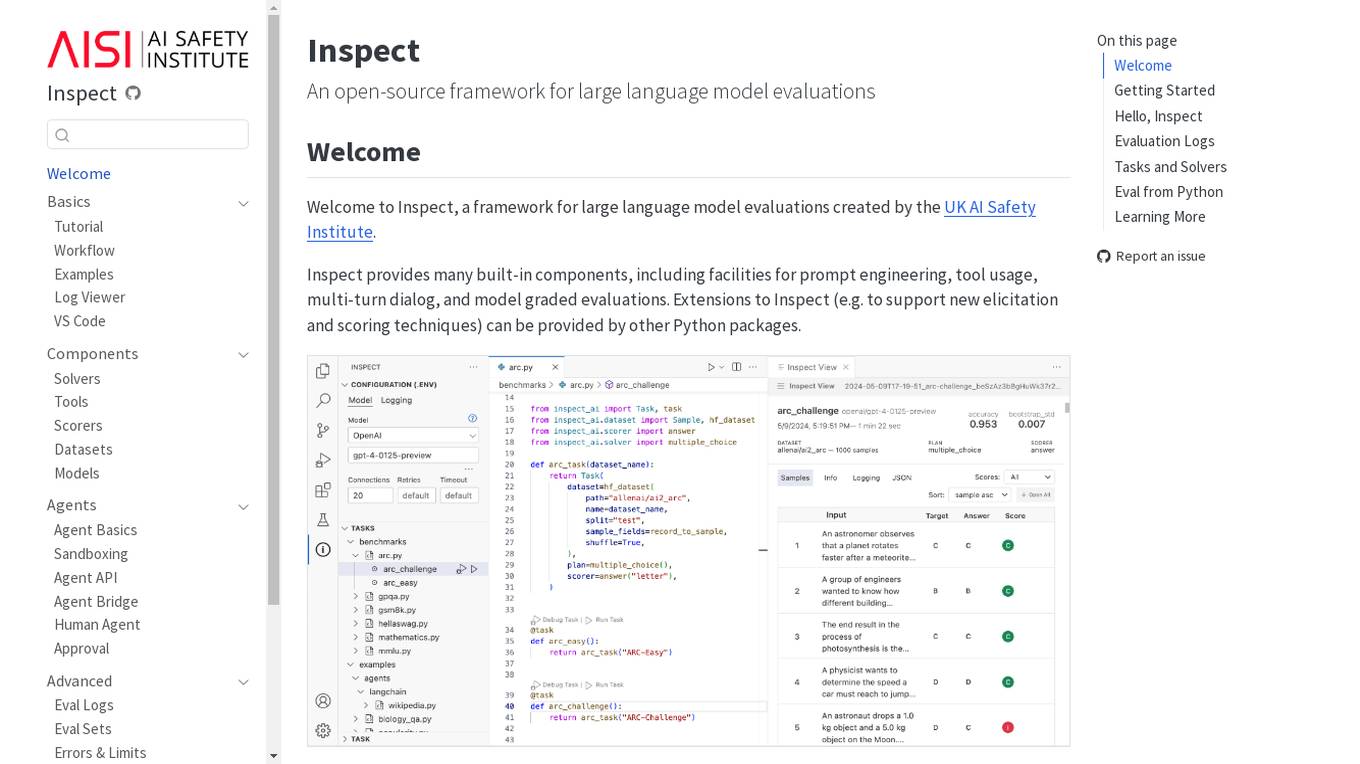

Inspect

Inspect is an open-source framework for large language model evaluations created by the UK AI Safety Institute. It provides built-in components for prompt engineering, tool usage, multi-turn dialog, and model graded evaluations. Users can explore various solvers, tools, scorers, datasets, and models to create advanced evaluations. Inspect supports extensions for new elicitation and scoring techniques through Python packages.

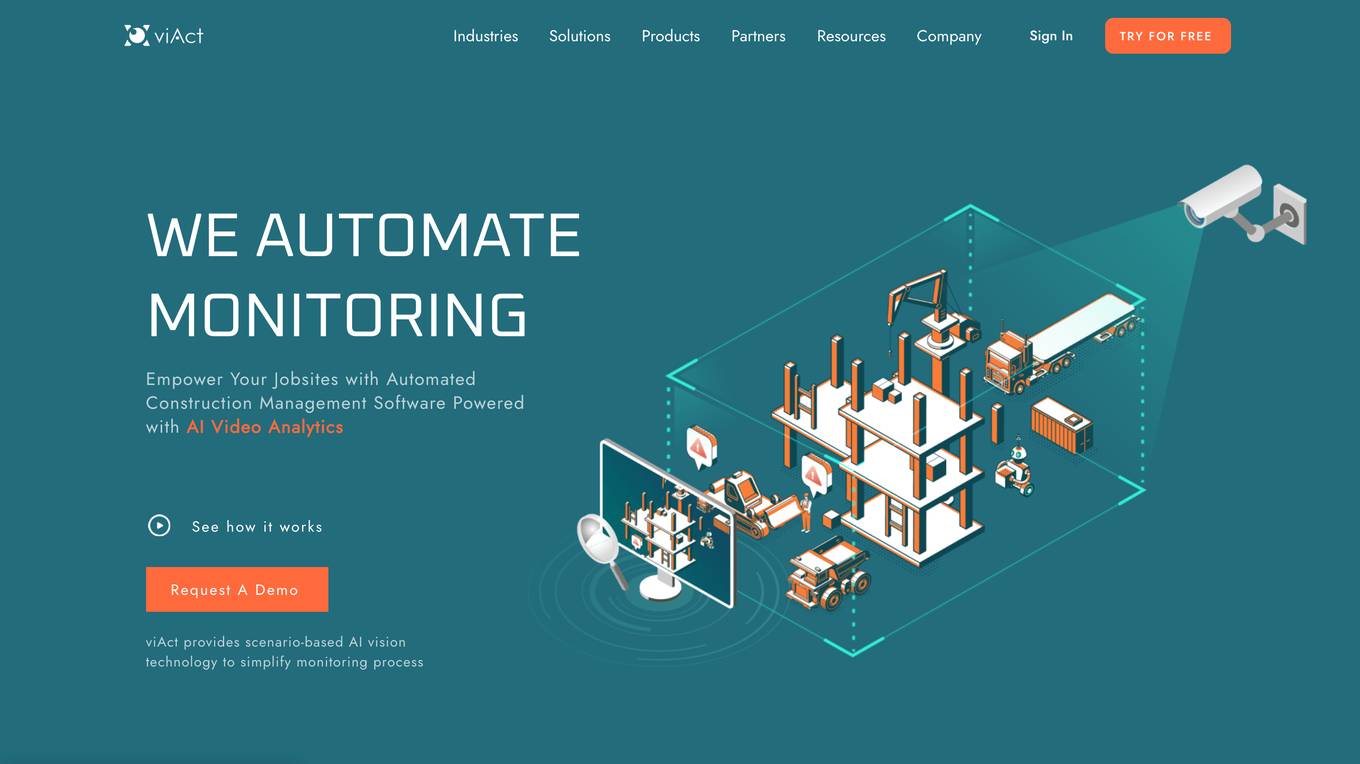

viAct.ai

viAct.ai is an AI monitoring tool that redefines workplace safety by combining automated AI monitoring with real-time alerts. The tool helps safety leaders prevent risks sooner, streamline compliance, and protect what matters most in industries such as construction, oil & gas, mining, and manufacturing. viAct.ai offers proprietary scenario-based Vision AI, a privacy-by-design approach, and plug & play integration, and is trusted by industry leaders for its award-winning workplace safety solutions.

0 - Open Source AI Tools

20 - OpenAI GPTs

Emergency Training

Provides emergency training assistance with a focus on safety and clear guidelines.

香港地盤安全佬 HK Construction Site Safety Advisor

Upload a site photo to assess potential hazards and seek advice from an experienced AI Safety Officer.

Flight Comms Coach

ATC communication trainer for pilots, offering scenario-based training and feedback.

Canadian Film Industry Safety Expert

Film studio safety expert offering guidance on regulations and practices.

The Building Safety Act Bot (Beta)

Simplifying the BSA for your project. Created by www.arka.works

Brand Safety Audit

Get a detailed risk analysis for public relations, marketing, and internal communications, identifying challenges and negative impacts to refine your messaging strategy.

GPT Safety Liaison

A liaison GPT for AI safety emergencies, connecting users to OpenAI experts.

Travel Safety Advisor

Up-to-date travel safety advisor that uses web data and avoids subjective advice.