Best AI tools for: Run Models Locally

20 - AI tool Sites

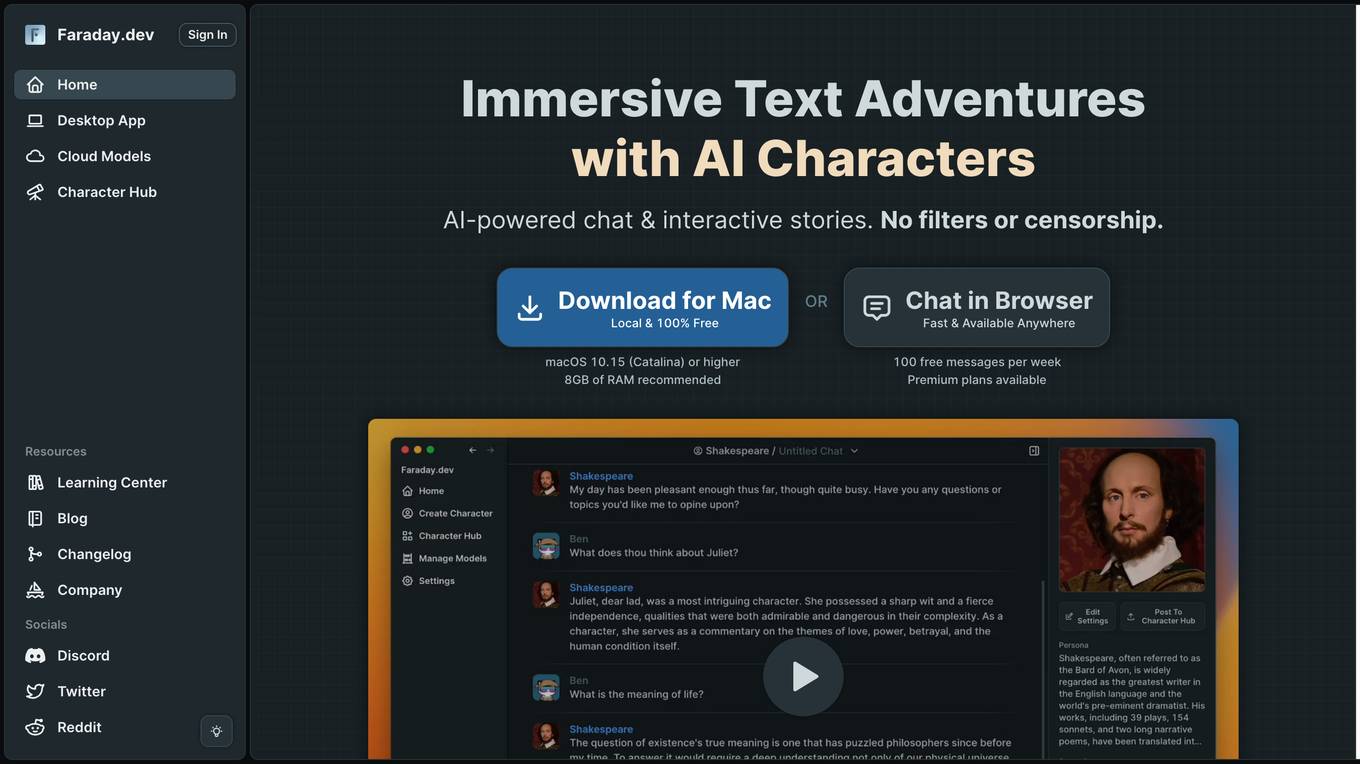

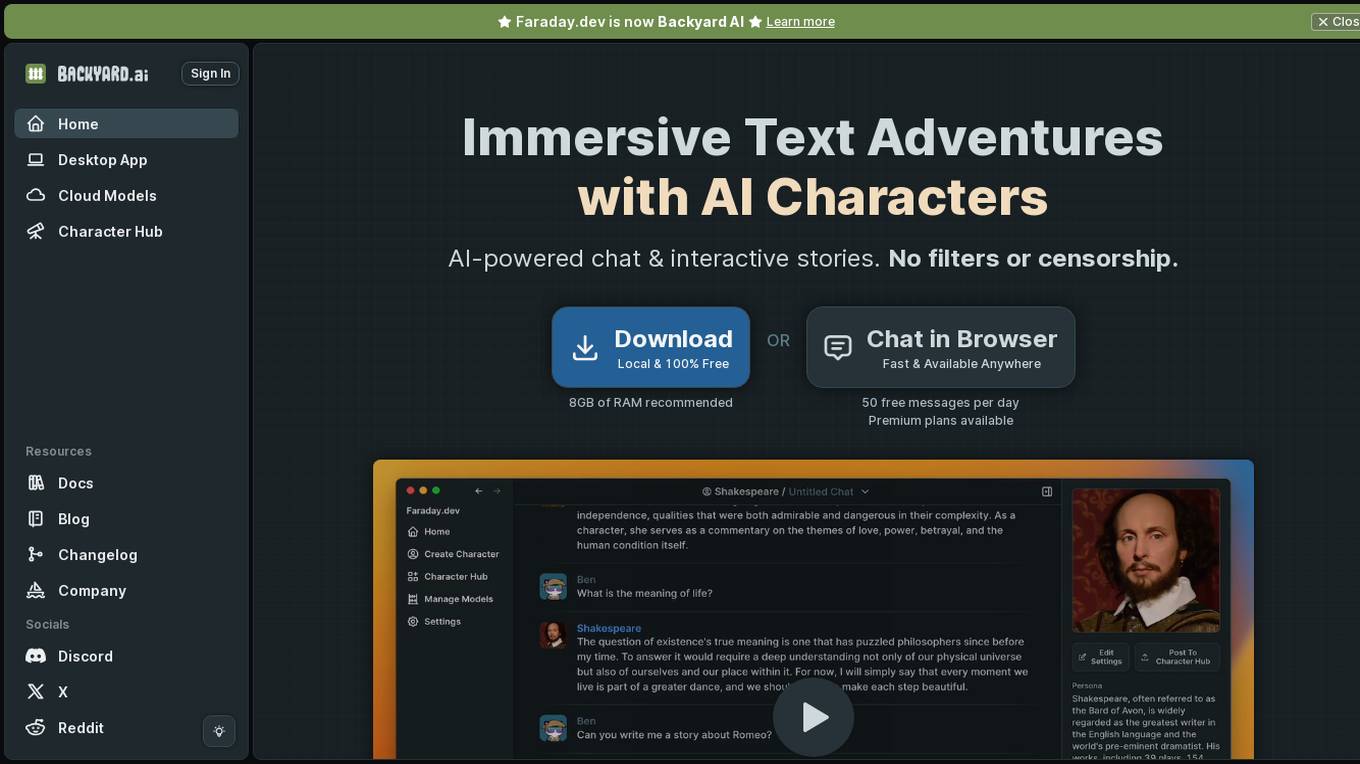

Backyard AI

Backyard AI is an AI-powered platform that offers immersive text adventures with AI characters, enabling users to engage in chat and interactive stories without filters or censorship. Users can bring AI characters to life with expressive customizations and intricate worlds. The platform provides a Desktop App for running AI models locally and a Cloud service for fast and powerful AI models accessible from anywhere. Backyard AI prioritizes privacy and control by storing all data locally on the device and encrypting data at rest. It offers a range of language models and features like mobile tethering, automatic GPU acceleration, and secure chat in the browser.

Backyard AI

Backyard AI is an AI-powered platform that offers immersive text adventures with AI characters, chat, and interactive stories. Users can bring AI characters to life with expressive customizations and explore intricate worlds through text RPG experiences. The platform provides a Desktop App for running AI models locally and cloud models for supercharging creativity. Backyard AI prioritizes privacy and control by storing data locally and encrypting it at rest. With a focus on user-friendly features and powerful AI language models, Backyard AI aims to provide an engaging and secure AI experience for users.

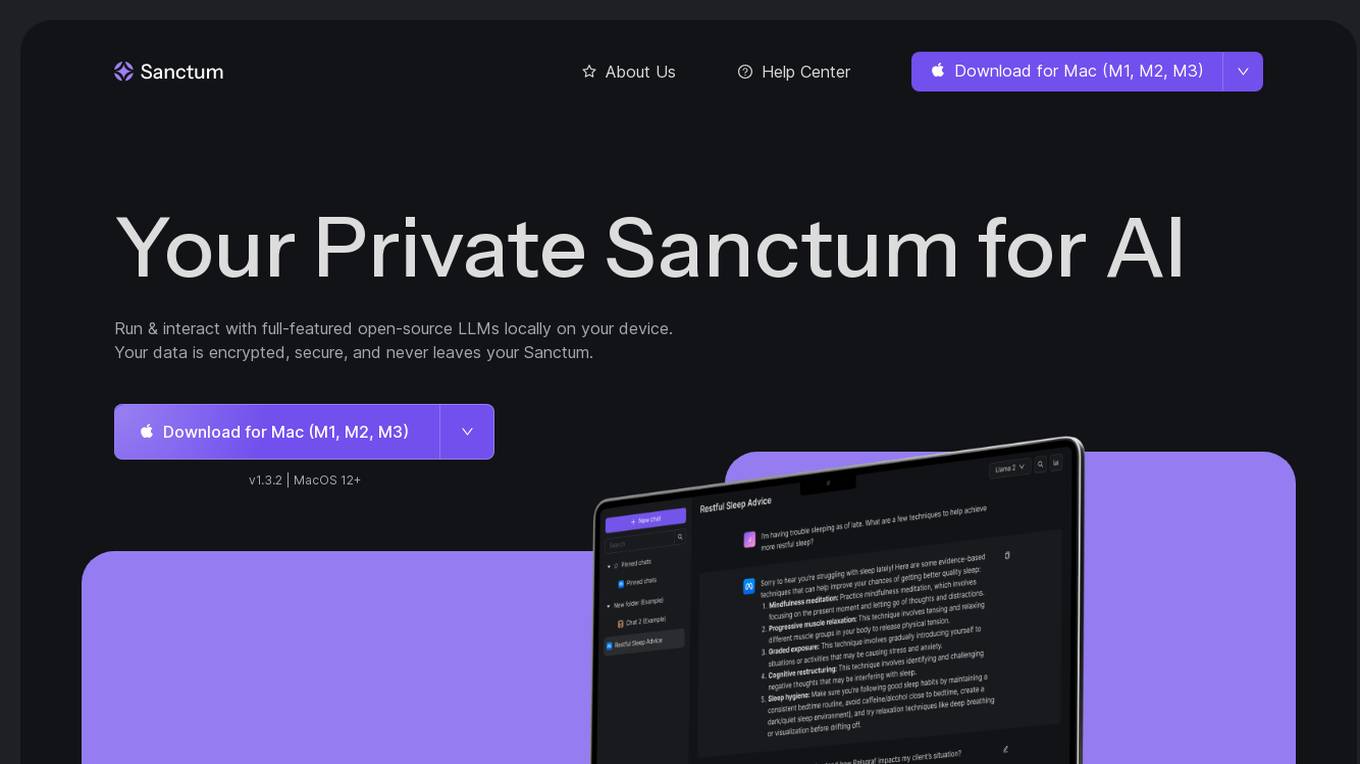

Sanctum

Sanctum is a private AI tool that brings the power of generative AI to your desktop. It enables you to download and run full-featured open-source LLMs directly on your device. With on-device encryption and processing, your data never leaves your Mac. You maintain complete privacy and control.

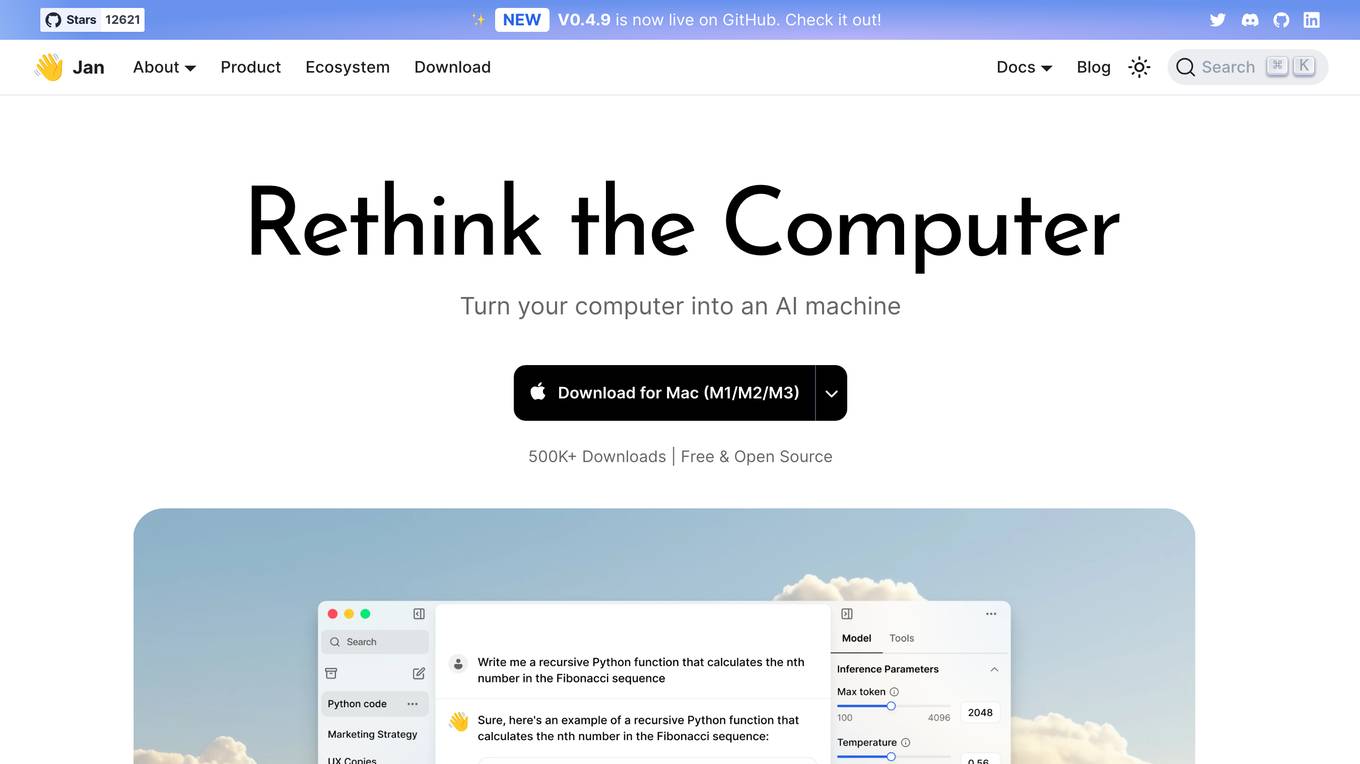

Jan

Jan is an open-source ChatGPT alternative that can run 100% offline. It allows users to chat with AI, download and run powerful models, optionally connect to cloud AIs, set up a local API server, and chat with files. Highly customizable, Jan also offers features like personalized AI assistants, memory, and extensions. The application prioritizes local-first AI, user-owned data, and full customization, making it a versatile tool for AI enthusiasts and developers.

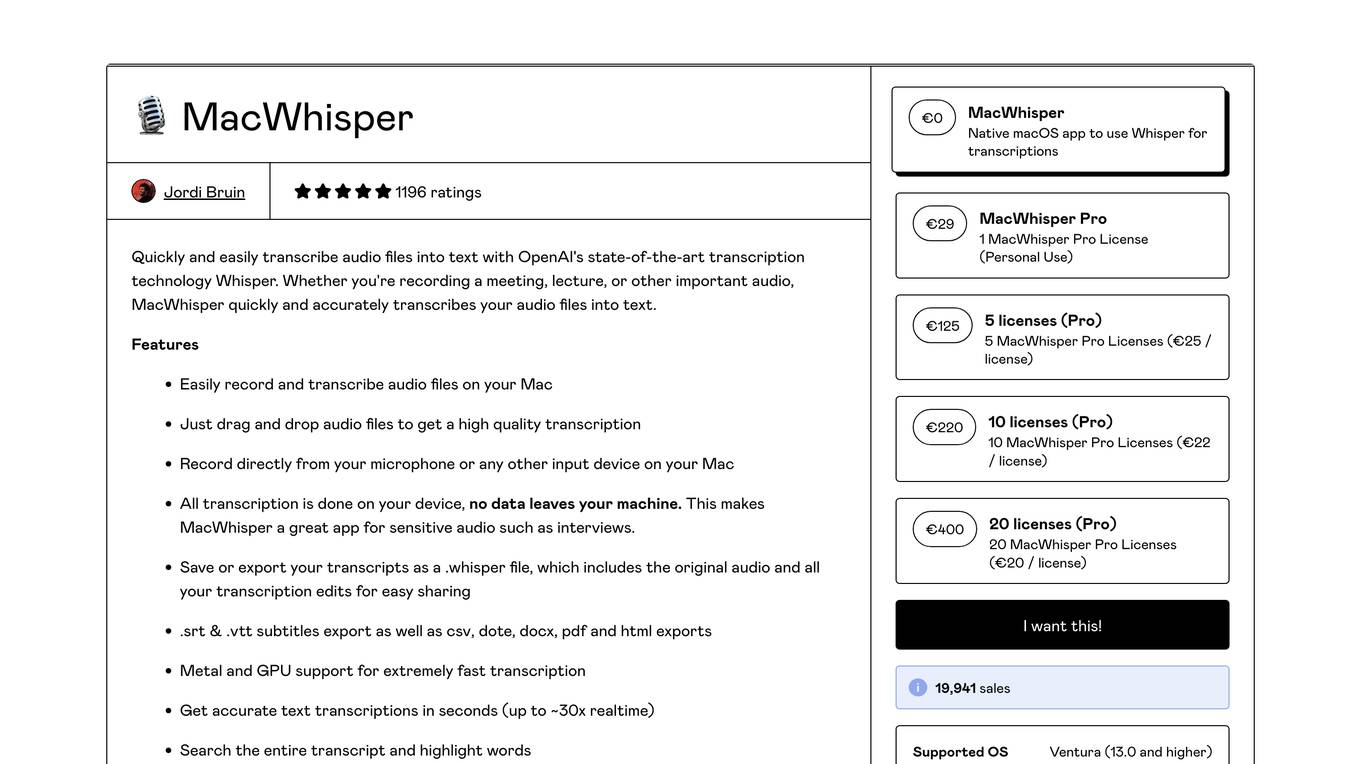

MacWhisper

MacWhisper is a native macOS application that utilizes OpenAI's Whisper technology for transcribing audio files into text. It offers a user-friendly interface for recording, transcribing, and editing audio, making it suitable for various use cases such as transcribing meetings, lectures, interviews, and podcasts. The application is designed to protect user privacy by performing all transcriptions locally on the device, ensuring that no data leaves the user's machine.

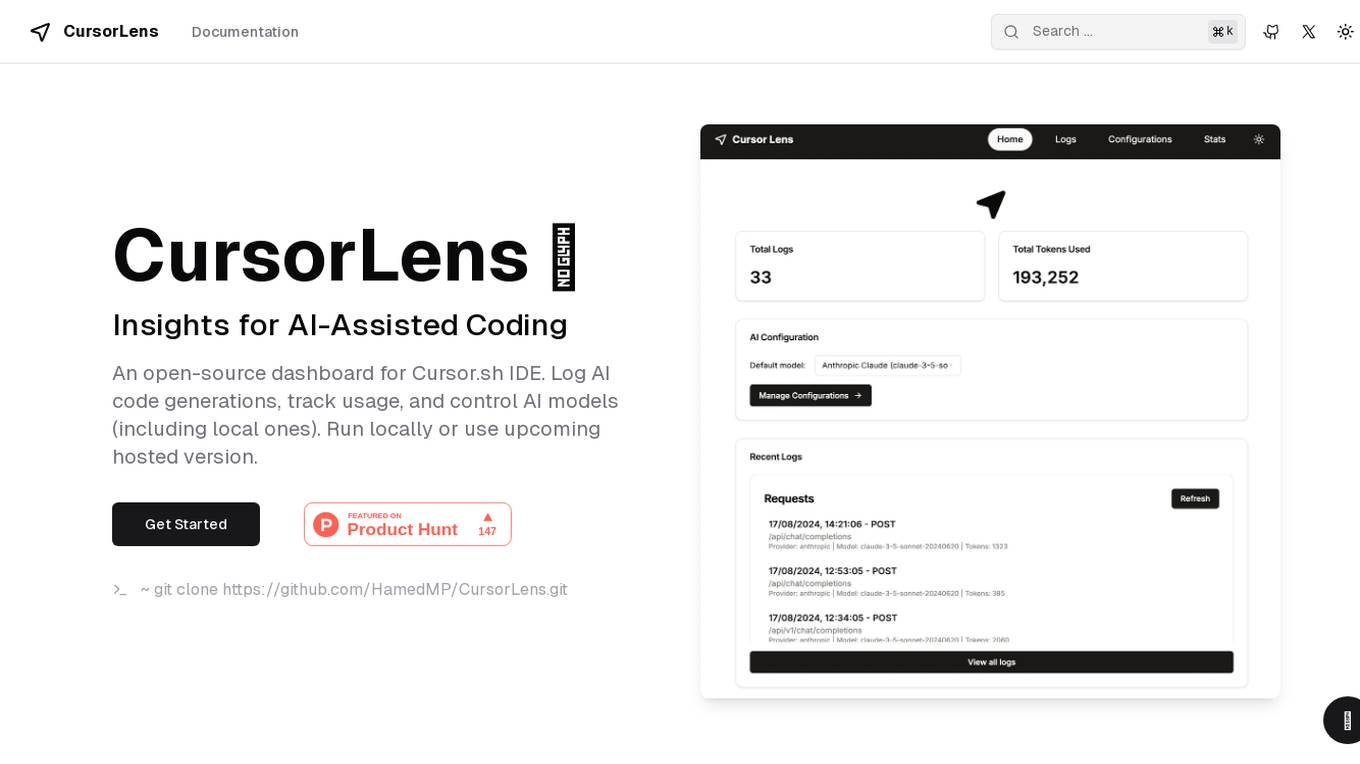

CursorLens

CursorLens is an open-source dashboard designed to provide insights for AI-assisted coding within the Cursor.sh IDE. It allows users to log AI code generations, track usage, and control AI models, including local ones. Users can run CursorLens locally or utilize the upcoming hosted version for enhanced convenience and efficiency.

AnythingLLM

AnythingLLM is an all-in-one AI application designed for everyone. It offers a suite of tools for working with LLMs (large language models), documents, and agents in a fully private environment. Users can install AnythingLLM on their desktop for Windows, macOS, and Linux with a one-click installer and run it securely and privately without internet connectivity. The application supports custom models, including enterprise models like GPT-4, custom fine-tuned models, and open-source models like Llama and Mistral. AnythingLLM works with various document formats, such as PDFs and Word documents, and defaults to locally running components for privacy.

Dot

Dot is a free, locally run language model application that allows users to interact with their own documents, chat with the model, and use it for a variety of tasks, all without sending their data off the device. It is powered by the Mistral 7B LLM, which runs locally on the user's device. Dot can also run offline.

Moshi AI

Moshi AI by Kyutai is an advanced native speech AI model that enables natural, expressive conversations. It can be installed locally and run offline, making it suitable for integration into smart home appliances and other local applications. The model, named Helium, has 7 billion parameters and is trained on text and audio codecs. Moshi AI supports native speech input and output, allowing for smooth communication with the AI. The application is community-supported, with plans for continuous improvement and adaptation.

Modal

Modal is a high-performance cloud platform designed for developers and AI, data, and ML teams. It offers a serverless environment for running generative AI models, large-scale batch jobs, job queues, and more. With Modal, users can bring their own code and leverage the platform's optimized container file system for fast cold boots and seamless autoscaling. The platform is engineered for large-scale workloads, allowing users to scale to hundreds of GPUs, pay only for what they use, and deploy functions to the cloud in seconds without the need for YAML or Dockerfiles. Modal also provides features for job scheduling, web endpoints, observability, and security compliance.

Practice Run AI

Practice Run AI is an online platform that offers AI-powered tools for various tasks. Users can utilize the application to practice and run AI algorithms without the need for complex setups or installations. The platform provides a user-friendly interface that allows individuals to experiment with AI models and enhance their understanding of artificial intelligence concepts. Practice Run AI aims to democratize AI education and make it accessible to a wider audience by simplifying the learning process and providing hands-on experience.

GPUX

GPUX is a cloud platform that provides access to GPUs for running AI workloads. It offers a variety of features to make it easy to deploy and run AI models, including a user-friendly interface, pre-built templates, and support for a variety of programming languages. GPUX is also committed to providing a sustainable and ethical platform, and it has partnered with organizations such as the Climate Leadership Council to reduce its carbon footprint.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale Machine Learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a beautiful dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

Modular

Modular is a fast, scalable Gen AI inference platform that offers a comprehensive suite of tools and resources for AI development and deployment. It provides solutions for AI model development, deployment options, AI inference, research, and resources like documentation, models, tutorials, and step-by-step guides. Modular supports GPU and CPU performance, intelligent scaling to any cluster, and offers deployment options for various editions. The platform enables users to build agent workflows, utilize AI retrieval and controlled generation, develop chatbots, engage in code generation, and improve resource utilization through batch processing.

Qualcomm AI Hub

Qualcomm AI Hub is a platform that allows users to run AI models on Snapdragon® 8 Elite devices. It provides a collaborative ecosystem for model makers, cloud providers, runtime, and SDK partners to deploy on-device AI solutions quickly and efficiently. Users can bring their own models, optimize for deployment, and access a variety of AI services and resources. The platform caters to various industries such as mobile, automotive, and IoT, offering a range of models and services for edge computing.

Awan LLM

Awan LLM is an unlimited-token, unrestricted, and cost-effective LLM inference API platform for power users and developers. It allows users to generate unlimited tokens, use LLM models without constraints, and pay per month instead of per token. Supported use cases include AI assistants, AI agents, roleplay with AI companions, data processing, code completion, and building profitable AI-powered applications.

Fifi.ai

Fifi.ai is a managed AI cloud platform that provides users with the infrastructure and tools to deploy and run AI models. The platform is designed to be easy to use, with a focus on plug-and-play functionality. Fifi.ai also offers a range of customization and fine-tuning options, allowing users to tailor the platform to their specific needs. The platform is supported by a team of experts who can provide assistance with onboarding, API integration, and troubleshooting.

Profit Isle

Profit Isle is an AI application that helps enterprises make data-driven decisions to enhance profitability and drive value to the bottom line. The platform integrates and transforms enterprise data to power AI initiatives, providing actionable insights and recommendations grounded in company data. Profit Isle prioritizes transparency, data governance, and privacy to ensure customers can confidently run AI models and make informed decisions.

TitanML

TitanML is a platform that provides tools and services for deploying and scaling Generative AI applications. Their flagship product, the Titan Takeoff Inference Server, helps machine learning engineers build, deploy, and run Generative AI models in secure environments. TitanML's platform is designed to make it easy for businesses to adopt and use Generative AI, without having to worry about the underlying infrastructure. With TitanML, businesses can focus on building great products and solving real business problems.

Nebius

Nebius is a cloud for AI explorers, designed to democratize AI infrastructure and empower builders everywhere. It offers a flexible architecture that scales AI workloads from a single GPU to pre-optimized clusters with thousands of NVIDIA GPUs. Nebius is engineered for demanding AI workloads, integrating NVIDIA GPU accelerators, high-performance InfiniBand, and Kubernetes or Slurm orchestration for peak efficiency. The platform aims to provide long-term value by optimizing every layer of the stack.

3 - Open Source AI Tools

catai

CatAI is a tool that allows users to run GGUF models on their computer with a chat UI. It serves as a local AI assistant inspired by Node-Llama-Cpp and Llama.cpp. The tool provides features such as auto-detecting the programming language, showing original messages by clicking on user icons, real-time text streaming, and fast model downloads. Users can interact with the tool through a CLI that supports commands for installing, listing, setting, serving, updating, and removing models. CatAI is cross-platform and supports Windows, Linux, and Mac. It utilizes node-llama-cpp and offers a simple API for asking the model questions. Additionally, developers can integrate the tool with node-llama-cpp@beta for model management and chatting. The configuration can be edited via the web UI, and contributions to the project are welcome. The tool is licensed under Llama.cpp's license.

ezlocalai

ezlocalai is an artificial intelligence server that simplifies running multimodal AI models locally. It handles model downloading and server configuration based on hardware specs. It offers OpenAI-style endpoints for integration, along with voice cloning, text-to-speech, voice-to-text, and offline image generation. Users can modify environment variables for customization. It supports NVIDIA GPU and CPU setups and provides a demo UI and workflow visualization for easy usage.

BodhiApp

Bodhi App runs open-source large language models locally, exposing LLM inference capabilities as OpenAI-API-compatible REST APIs. It leverages llama.cpp for GGUF-format models and the huggingface.co ecosystem for model downloads. Users can run fine-tuned models for chat completions, create custom aliases, and convert Hugging Face models to GGUF format. The CLI offers commands for environment configuration, model management, pulling files, serving the API, and more.
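
As a rough illustration of what an "OpenAI-compatible" REST API means for a client, the sketch below builds a chat completion request in the OpenAI request shape using only the Python standard library. The base URL, port, and model alias are hypothetical placeholders, not values taken from Bodhi App or any other tool listed here; check the server's own documentation for the real address and model names.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with what your local server reports.
BASE_URL = "http://localhost:8080/v1"  # assumed address, not from any tool's docs
MODEL = "my-local-model"               # assumed model alias


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request):
    """Send the request and return the text of the first reply.

    Assumes the server answers in the OpenAI chat completion response shape.
    """
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a compatible server running, `send(build_chat_request("Say hello."))` would return the model's reply; because the request shape is shared, switching between local servers should mostly be a matter of changing `BASE_URL` and `MODEL`.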

20 - OpenAI GPTs

Consulting & Investment Banking Interview Prep GPT

Run mock interviews, review content and get tips to ace strategy consulting and investment banking interviews

Dungeon Master's Assistant

Your new DM's screen: helping Dungeon Masters to craft & run amazing D&D adventures.

Database Builder

Hosts a real SQLite database and helps you create tables, make schema changes, and run SQL queries, ideal for all levels of database administration.
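
For readers who want to try the same three operations locally (create a table, change the schema, run a query), here is a minimal sketch using Python's built-in `sqlite3` module. It does not show how the GPT itself works; the `recipes` table and its columns are made up for illustration.

```python
import sqlite3


def run_demo():
    """Create a table, alter its schema, and query it in an in-memory database."""
    conn = sqlite3.connect(":memory:")  # nothing is written to disk
    cur = conn.cursor()

    # 1. Create a table (hypothetical example schema).
    cur.execute("CREATE TABLE recipes (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")

    # 2. Make a schema change: add a column.
    cur.execute("ALTER TABLE recipes ADD COLUMN minutes INTEGER")

    # 3. Insert rows and run a parameterized query.
    cur.executemany(
        "INSERT INTO recipes (name, minutes) VALUES (?, ?)",
        [("soup", 30), ("salad", 10)],
    )
    conn.commit()
    rows = cur.execute(
        "SELECT name, minutes FROM recipes WHERE minutes < ? ORDER BY name",
        (20,),
    ).fetchall()
    conn.close()
    return rows
```

Calling `run_demo()` returns the rows with a preparation time under 20 minutes as a list of `(name, minutes)` tuples.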

Restaurant Startup Guide

Meet the Restaurant Startup Guide GPT: your friendly guide in the restaurant biz. It offers casual, approachable advice to help you start and run your own restaurant with ease.

Community Design™

A community-building GPT based on the wildly popular Community Design™ framework from Mighty Networks. Start creating communities that run themselves.

Code Helper for Web Application Development

Friendly web assistant for efficient code. Ask the wizard to create an application and you will get the HTML, CSS, and JavaScript code ready to run your web application.

Creative Director GPT

I'm your brainstorm muse in marketing and advertising; the creativity machine you need to sharpen the skills, land the job, generate the ideas, win the pitches, build the brands, ace the awards, or even run your own agency. Psst... don't let your clients find out about me! 😉

Pace Assistant

Provides running splits for Strava Routes, accounting for distance and elevation changes