Best AI tools for< Run Benchmarks >

20 - AI tool Sites

Lunary

Lunary is an AI developer platform designed to bring AI applications to production. It offers a comprehensive set of tools to manage, improve, and protect LLM apps. With features like Logs, Metrics, Prompts, Evaluations, and Threads, Lunary empowers users to monitor and optimize their AI agents effectively. The platform supports tasks such as tracing errors, labeling data for fine-tuning, optimizing costs, running benchmarks, and testing open-source models. Lunary also facilitates collaboration with non-technical teammates through features like A/B testing, versioning, and clean source-code management.

Groq

Groq is a fast AI inference tool that offers GroqCloud™ Platform and GroqRack™ Cluster for developers to build and deploy AI models with ultra-low-latency inference. It provides instant intelligence for openly-available models like Llama 3.1 and is known for its speed and compatibility with other AI providers. Groq powers leading openly-available AI models and has gained recognition in the AI chip industry. The tool has received significant funding and valuation, positioning itself as a strong challenger to established players like Nvidia.

NVIDIA Run:ai

NVIDIA Run:ai is an enterprise platform for AI workloads and GPU orchestration. It accelerates AI and machine learning operations by addressing key infrastructure challenges through dynamic resource allocation, comprehensive AI life-cycle support, and strategic resource management. The platform significantly enhances GPU efficiency and workload capacity by pooling resources across environments and utilizing advanced orchestration. NVIDIA Run:ai provides unparalleled flexibility and adaptability, supporting public clouds, private clouds, hybrid environments, or on-premises data centers.

Run Recommender

The Run Recommender is a web-based tool that helps runners find the perfect pair of running shoes. It uses a smart algorithm to suggest options based on your input, giving you a starting point in your search for the perfect pair. The Run Recommender is designed to be user-friendly and easy to use. Simply input your shoe width, age, weight, and other details, and the Run Recommender will generate a list of potential shoes that might suit your running style and body. You can also provide information about your running experience, distance, and frequency, and the Run Recommender will use this information to further refine its suggestions. Once you have a list of potential shoes, you can click on each shoe to learn more about it, including its features, benefits, and price. You can also search for the shoe on Amazon to find the best deals.

Practice Run AI

Practice Run AI is an online platform that offers AI-powered tools for various tasks. Users can utilize the application to practice and run AI algorithms without the need for complex setups or installations. The platform provides a user-friendly interface that allows individuals to experiment with AI models and enhance their understanding of artificial intelligence concepts. Practice Run AI aims to democratize AI education and make it accessible to a wider audience by simplifying the learning process and providing hands-on experience.

Dora

Dora is a no-code 3D animated website design platform that allows users to create stunning 3D and animated visuals without writing a single line of code. With Dora, designers, freelancers, and creative professionals can focus on what they do best: designing. The platform is tailored for professionals who prioritize design aesthetics without wanting to dive deep into the backend. Dora offers a variety of features, including a drag-and-connect constraint layout system, advanced animation capabilities, and pixel-perfect usability. With Dora, users can create responsive 3D and animated websites that translate seamlessly across devices.

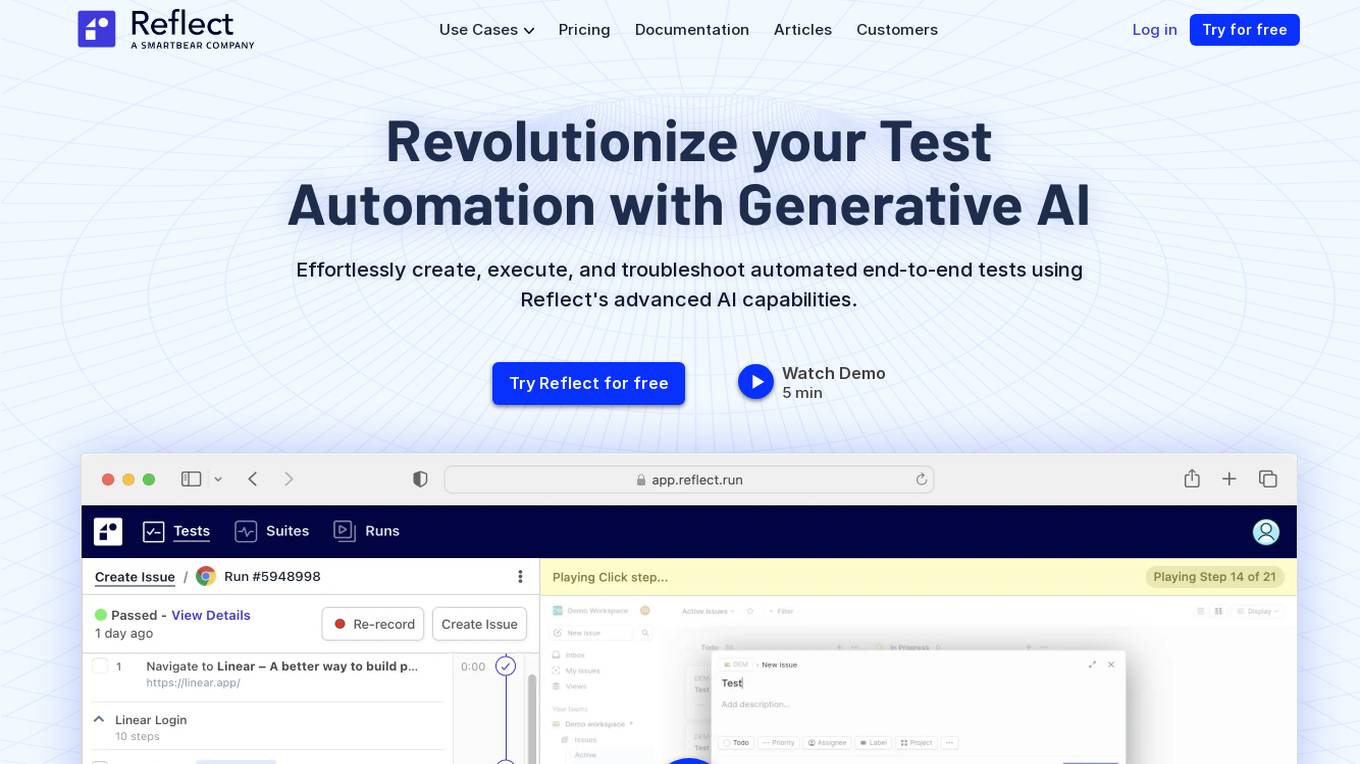

Reflect

Reflect is an AI-powered test automation tool that revolutionizes the way end-to-end tests are created, executed, and maintained. By leveraging Generative AI, Reflect eliminates the need for manual coding and provides a seamless testing experience. The tool offers features such as no-code test automation, visual testing, API testing, cross-browser testing, and more. Reflect aims to help companies increase software quality by accelerating testing processes and ensuring test adaptability over time.

Error Handling Application

The website is currently experiencing an application error, indicating a server-side exception. Users encountering this error are advised to check the server logs for more information. The error digest number provided is 3308662818.

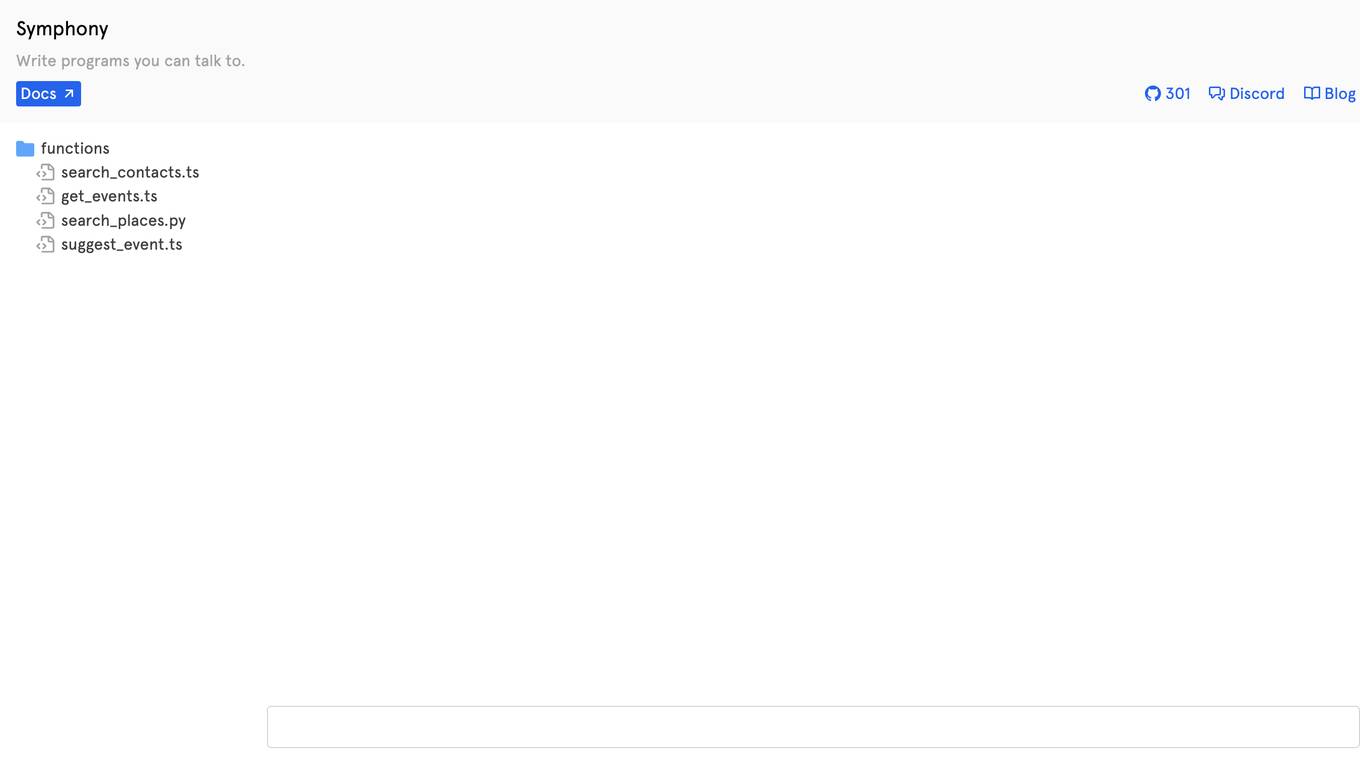

Symphony

Symphony is a platform that allows users to write programs using natural language. It enables users to interact with the system by talking to it, making programming more accessible and intuitive. Symphony simplifies the process of coding by translating spoken commands into executable code, providing a user-friendly programming experience.

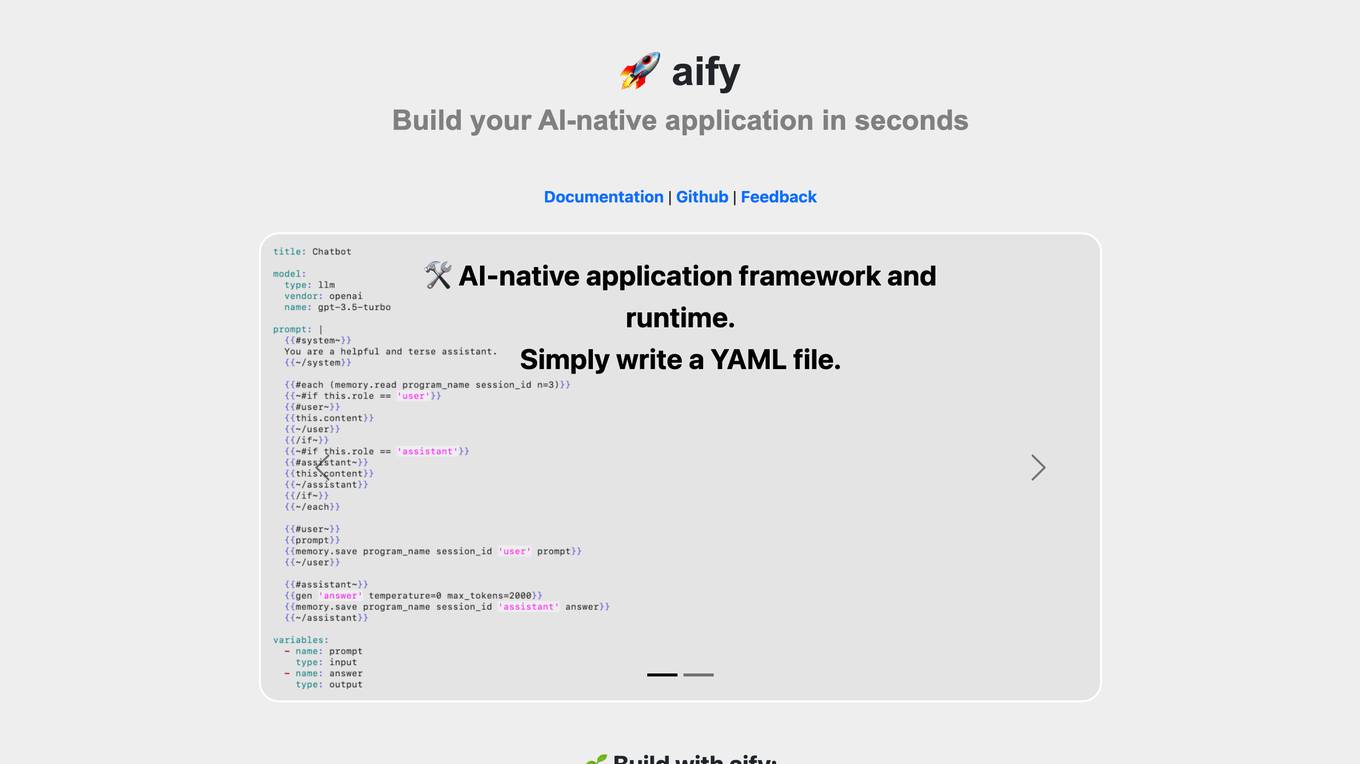

aify

aify is an AI-native application framework and runtime that allows users to build AI-native applications quickly and easily. With aify, users can create applications by simply writing a YAML file. The platform also offers a ready-to-use AI chatbot UI for seamless integration. Additionally, aify provides features such as Emoji express for searching emojis by semantics. The framework is open source under the MIT license, making it accessible to developers of all levels.

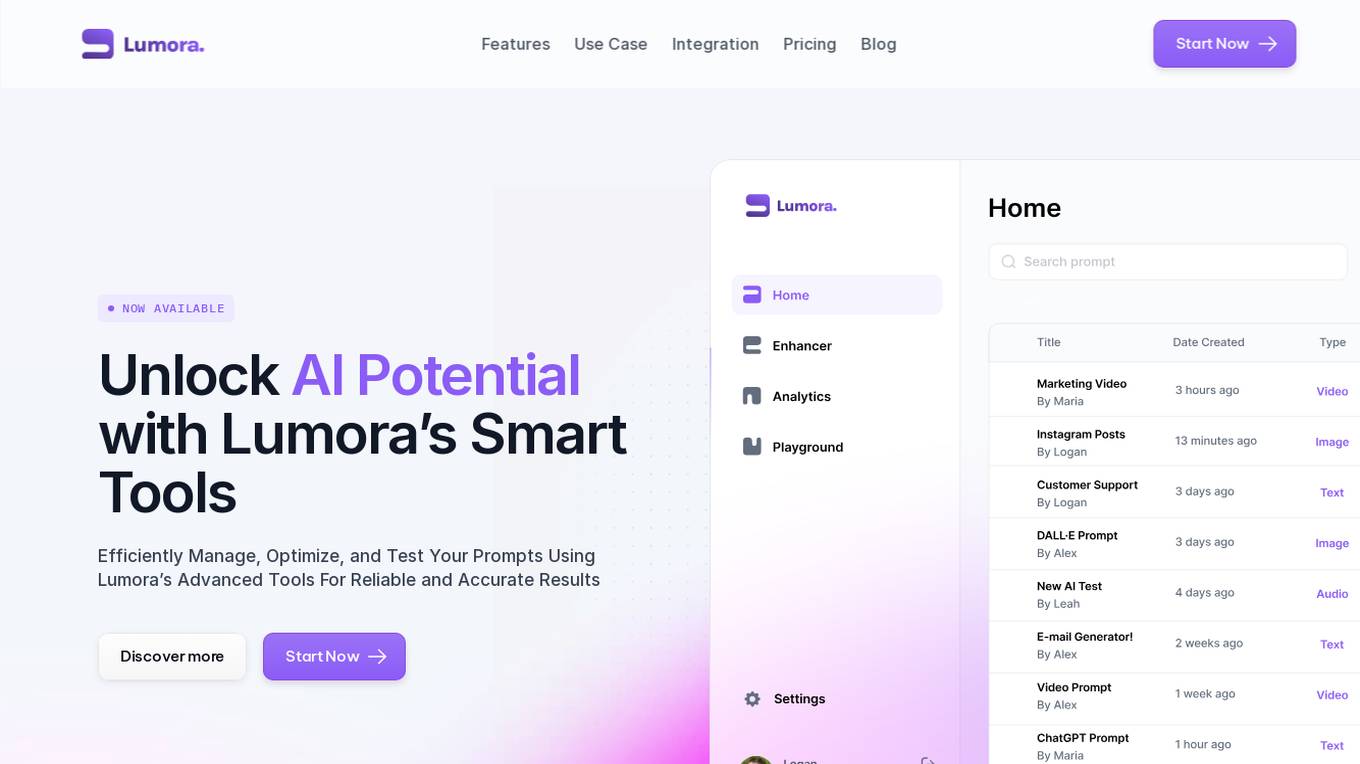

Lumora

Lumora is an AI tool designed to help users efficiently manage, optimize, and test prompts for various AI platforms. It offers features such as prompt organization, enhancement, testing, and development. Lumora aims to improve prompt outcomes and streamline prompt management for teams, providing a user-friendly interface and a playground for experimentation. The tool also integrates with various AI models for text, image, and video generation, allowing users to optimize prompts for better results.

Dora

Dora is an AI-powered platform that enables users to create 3D animated websites without the need for coding. It caters to designers, freelancers, and creative professionals who seek to design visually captivating websites effortlessly. With Dora, users can craft mesmerizing 3D and animated visuals that are responsive and seamlessly translate across devices. The platform is designed for professionals who prioritize design aesthetics and offers a no-code experience for those transitioning from other design tools. Dora leverages advanced AI algorithms to generate, customize, and deploy stunning landing pages, revolutionizing the web design process.

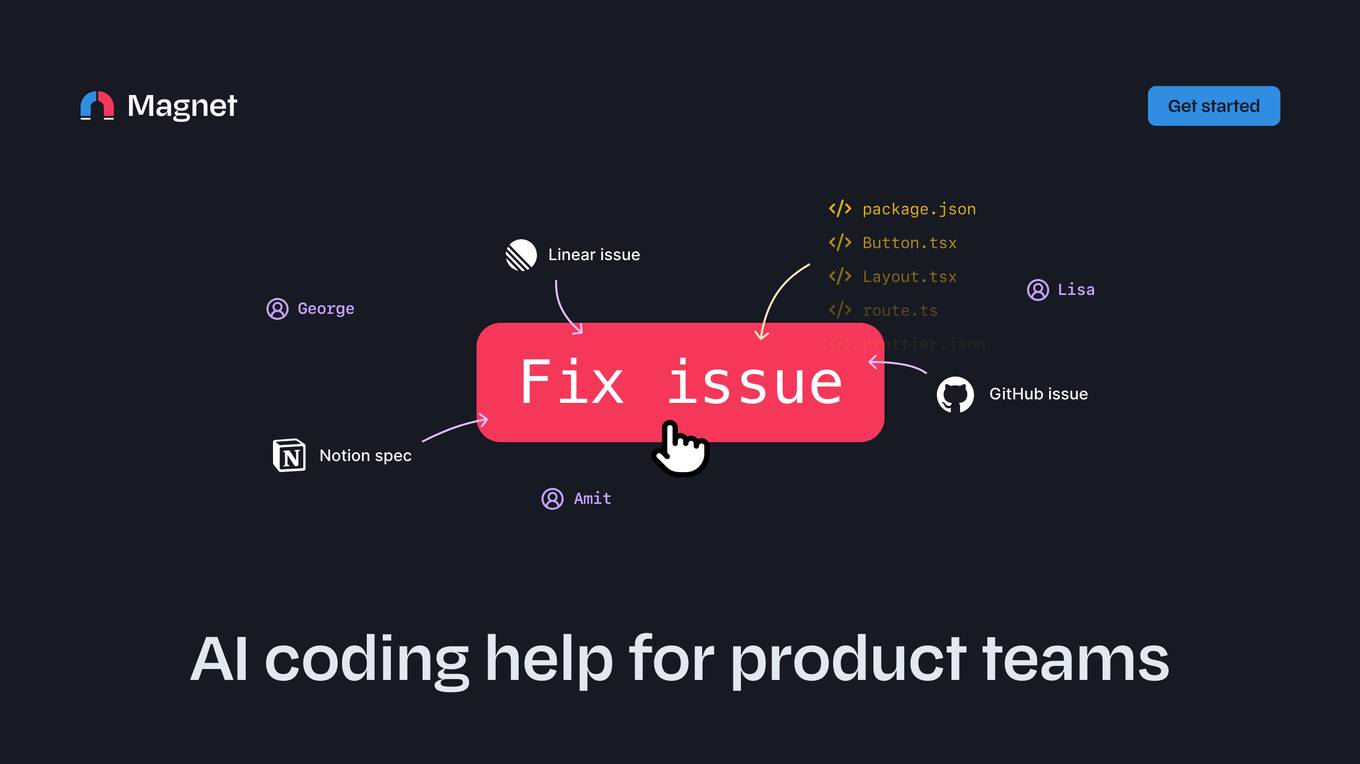

Magnet

Magnet is an AI coding assistant that helps product teams fix issues, share AI threads, and organize projects. It integrates with Linear, GitHub, and Notion, and provides auto-suggested files and code files for personalized and accurate AI recommendations. Magnet also offers prompt templates to help users get started and suggests quick fixes for bugs or enhancements.

Devath

Devath is the world's first AI-powered SmartHome platform that revolutionizes the way users interact with their smart devices. It eliminates the need for writing extensive lines of code by allowing users to simply give instructions to the AI for seamless device control. With features like splash resistance and responsive design, Devath offers a user-friendly experience for managing smart home functionalities. The platform also enables developers to preview and test their apps before submission, providing a 99% faster publishing process. Devath is continuously evolving with user feedback and aims to enhance the SmartHome experience through AI copilots and customizable features. With Devath, users can control their devices from the web and enjoy free unlimited access to the AI era of SmartHome.

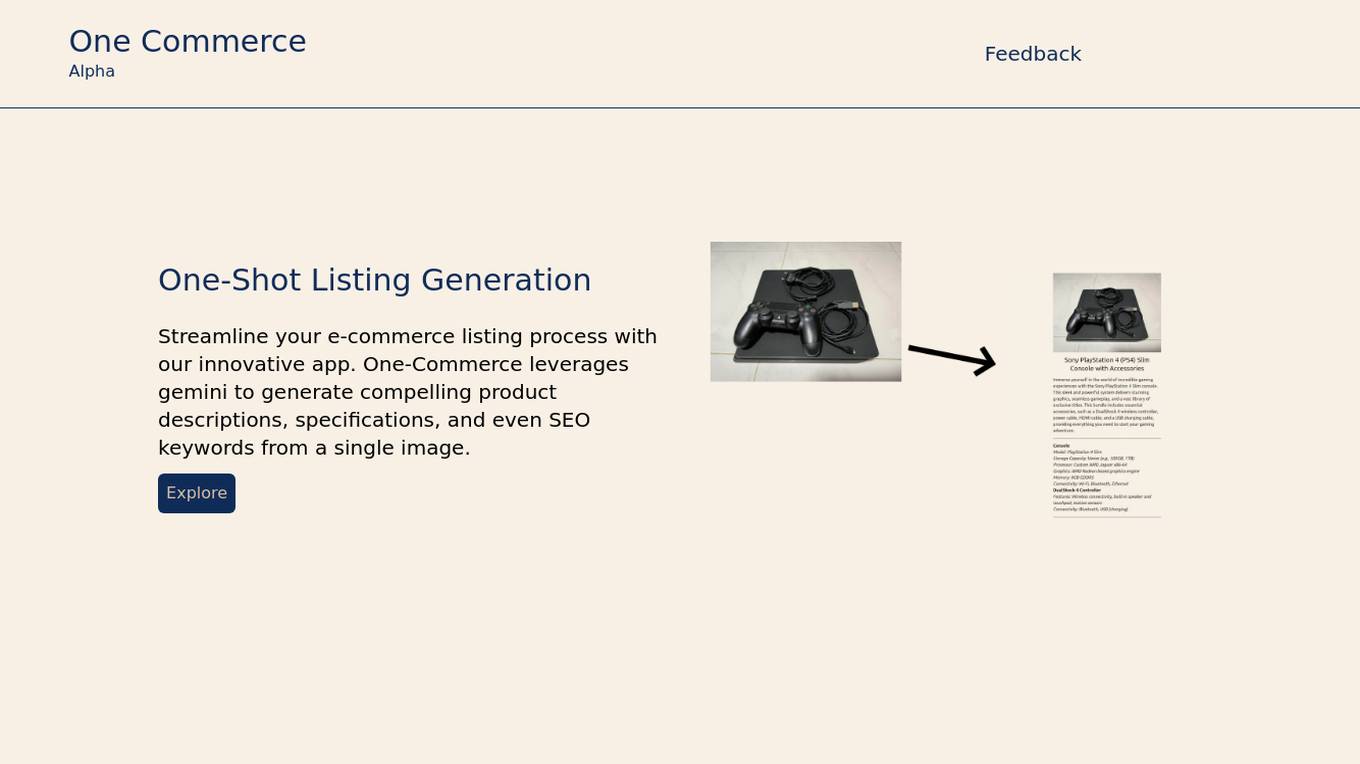

One-Commerce

One-Commerce is an AI-powered application designed to streamline the e-commerce listing process. It utilizes gemini technology to automatically generate detailed product descriptions, specifications, and SEO keywords from a single image. With its innovative approach, One-Commerce aims to simplify and enhance the online selling experience for e-commerce businesses.

Sessions

Sessions is a cloud-based video conferencing and webinar platform that offers a range of features to help businesses run successful online meetings and events. With Sessions, users can create interactive agendas, share screens, record meetings, and host webinars with up to 1000 participants. Sessions also integrates with a variety of third-party tools, including Google Drive, Dropbox, and Slack, making it easy to collaborate with colleagues and share files. Additionally, Sessions offers a number of AI-powered features, such as automatic transcription and translation, to help users get the most out of their meetings.

CALA

CALA is a leading fashion platform that unifies design, development, production, and logistics into a single, digital platform. It provides tools and support to automate and optimize the supply chain from start to finish. CALA also offers a network of designers and suppliers, as well as AI-powered design tools to help generate moodboards, fresh ideas, and more.

Effy AI

Effy AI is a free performance management software for teams. It is AI-powered and backed by Run your first 360 review in 60 sec. Fast, and stress-free 360 feedback and performance review software build for teams. With Effy AI, you can collect reviews from different sources such as self, peer, manager, and subordinate evaluations. The platform goes even further by allowing employees to suggest particular peers and seek approval from their manager, giving them a voice in their reviews. Effy AI uses cutting-edge artificial intelligence to carefully process reviewers' answers and generate comprehensive reports for each employee based on the review responses.

Blobr

Blobr is an AI tool designed to help users run Google Ads like a pro. It provides access to an AI twin of a Google Ads expert, trained to understand the user's business and apply winning strategies. Trusted by over 100 industry leaders and agencies, Blobr offers deep analysis, constant testing, and ruthless prioritization of Google Ads campaigns in real-time. The AI twin replicates the methodologies of Google Ads experts, providing deep understanding, same expert processes, and better coverage for campaign monitoring. Users can easily turn their campaigns into pro-mode by sharing business goals, connecting ad data, letting the AI twin analyze performance, receiving weekly insights, and applying optimizations with ready-to-use bulk edit files.

Tely

Tely is an autonomous AI agent that helps businesses run B2B content marketing. It uses machine learning to understand your product, build domain expertise, run SEO optimization, and create a content plan. Tely can also personalize your content with infographics, code snippets, experts' quotes, and call to actions. With Tely, you can drive sales with expert-level content on autopilot, reduce customer acquisition cost, increase conversion rate, and save money on marketing expenses.

7 - Open Source AI Tools

ck

Collective Mind (CM) is a collection of portable, extensible, technology-agnostic and ready-to-use automation recipes with a human-friendly interface (aka CM scripts) to unify and automate all the manual steps required to compose, run, benchmark and optimize complex ML/AI applications on any platform with any software and hardware: see online catalog and source code. CM scripts require Python 3.7+ with minimal dependencies and are continuously extended by the community and MLCommons members to run natively on Ubuntu, MacOS, Windows, RHEL, Debian, Amazon Linux and any other operating system, in a cloud or inside automatically generated containers while keeping backward compatibility - please don't hesitate to report encountered issues here and contact us via public Discord Server to help this collaborative engineering effort! CM scripts were originally developed based on the following requirements from the MLCommons members to help them automatically compose and optimize complex MLPerf benchmarks, applications and systems across diverse and continuously changing models, data sets, software and hardware from Nvidia, Intel, AMD, Google, Qualcomm, Amazon and other vendors: * must work out of the box with the default options and without the need to edit some paths, environment variables and configuration files; * must be non-intrusive, easy to debug and must reuse existing user scripts and automation tools (such as cmake, make, ML workflows, python poetry and containers) rather than substituting them; * must have a very simple and human-friendly command line with a Python API and minimal dependencies; * must require minimal or zero learning curve by using plain Python, native scripts, environment variables and simple JSON/YAML descriptions instead of inventing new workflow languages; * must have the same interface to run all automations natively, in a cloud or inside containers. CM scripts were successfully validated by MLCommons to modularize MLPerf inference benchmarks and help the community automate more than 95% of all performance and power submissions in the v3.1 round across more than 120 system configurations (models, frameworks, hardware) while reducing development and maintenance costs.

eval-dev-quality

DevQualityEval is an evaluation benchmark and framework designed to compare and improve the quality of code generation of Language Model Models (LLMs). It provides developers with a standardized benchmark to enhance real-world usage in software development and offers users metrics and comparisons to assess the usefulness of LLMs for their tasks. The tool evaluates LLMs' performance in solving software development tasks and measures the quality of their results through a point-based system. Users can run specific tasks, such as test generation, across different programming languages to evaluate LLMs' language understanding and code generation capabilities.

fortuna

Fortuna is a library for uncertainty quantification that enables users to estimate predictive uncertainty, assess model reliability, trigger human intervention, and deploy models safely. It provides calibration and conformal methods for pre-trained models in any framework, supports Bayesian inference methods for deep learning models written in Flax, and is designed to be intuitive and highly configurable. Users can run benchmarks and bring uncertainty to production systems with ease.

modelbench

ModelBench is a tool for running safety benchmarks against AI models and generating detailed reports. It is part of the MLCommons project and is designed as a proof of concept to aggregate measures, relate them to specific harms, create benchmarks, and produce reports. The tool requires LlamaGuard for evaluating responses and a TogetherAI account for running benchmarks. Users can install ModelBench from GitHub or PyPI, run tests using Poetry, and create benchmarks by providing necessary API keys. The tool generates static HTML pages displaying benchmark scores and allows users to dump raw scores and manage cache for faster runs. ModelBench is aimed at enabling users to test their own models and create tests and benchmarks.

Aidan-Bench

Aidan Bench is a tool that rewards creativity, reliability, contextual attention, and instruction following. It is weakly correlated with Lmsys, has no score ceiling, and aligns with real-world open-ended use. The tool involves giving LLMs open-ended questions and evaluating their answers based on novelty scores. Users can set up the tool by installing required libraries and setting up API keys. The project allows users to run benchmarks for different models and provides flexibility in threading options.

AirspeedVelocity.jl

AirspeedVelocity.jl is a tool designed to simplify benchmarking of Julia packages over their lifetime. It provides a CLI to generate benchmarks, compare commits/tags/branches, plot benchmarks, and run benchmark comparisons for every submitted PR as a GitHub action. The tool freezes the benchmark script at a specific revision to prevent old history from affecting benchmarks. Users can configure options using CLI flags and visualize benchmark results. AirspeedVelocity.jl can be used to benchmark any Julia package and offers features like generating tables and plots of benchmark results. It also supports custom benchmarks and can be integrated into GitHub actions for automated benchmarking of PRs.

orama-core

OramaCore is a database designed for AI projects, answer engines, copilots, and search functionalities. It offers features such as a full-text search engine, vector database, LLM interface, and various utilities. The tool is currently under active development and not recommended for production use due to potential API changes. OramaCore aims to provide a comprehensive solution for managing data and enabling advanced AI capabilities in projects.

20 - OpenAI Gpts

Consulting & Investment Banking Interview Prep GPT

Run mock interviews, review content and get tips to ace strategy consulting and investment banking interviews

Dungeon Master's Assistant

Your new DM's screen: helping Dungeon Masters to craft & run amazing D&D adventures.

Database Builder

Hosts a real SQLite database and helps you create tables, make schema changes, and run SQL queries, ideal for all levels of database administration.

Restaurant Startup Guide

Meet the Restaurant Startup Guide GPT: your friendly guide in the restaurant biz. It offers casual, approachable advice to help you start and run your own restaurant with ease.

Community Design™

A community-building GPT based on the wildly popular Community Design™ framework from Mighty Networks. Start creating communities that run themselves.

Code Helper for Web Application Development

Friendly web assistant for efficient code. Ask the wizard to create an application and you will get the HTML, CSS and Javascript code ready to run your web application.

Creative Director GPT

I'm your brainstorm muse in marketing and advertising; the creativity machine you need to sharpen the skills, land the job, generate the ideas, win the pitches, build the brands, ace the awards, or even run your own agency. Psst... don't let your clients find out about me! 😉

Pace Assistant

Provides running splits for Strava Routes, accounting for distance and elevation changes

Design Sprint Coach (beta)

A helpful coach for guiding teams through Design Sprints with a touch of sass.