Best AI tools for AI safety research

20 - AI Tool Sites

MIRI (Machine Intelligence Research Institute)

MIRI (Machine Intelligence Research Institute) is a non-profit research organization dedicated to ensuring that artificial intelligence has a positive impact on humanity. MIRI conducts foundational mathematical research on topics such as decision theory, game theory, and reinforcement learning, with the goal of developing new insights into how to build safe and beneficial AI systems.

Athina AI

Athina AI is a platform that provides research and guides for building safe and reliable AI products. It helps thousands of AI engineers build safer products by offering tutorials, research papers, and evaluation techniques for large language models. The platform focuses on safety, prompt engineering, hallucinations, and the evaluation of AI models.

AI Now Institute

AI Now Institute is a think tank focused on the social implications of AI and the consolidation of power in the tech industry. They challenge and reimagine the current trajectory for AI through research, publications, and advocacy. The institute provides insights into the political economy driving the AI market and the risks associated with AI development and policy.

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit organization based in San Francisco. It conducts research and advocacy projects and provides resources to reduce societal-scale risks from artificial intelligence (AI). CAIS focuses on technical AI safety research and field-building projects, and it offers a compute cluster for AI/ML safety projects. Its aim is to ensure that AI is developed and used safely for the benefit of society, addressing inherent risks and advocating for safety standards.

Anthropic

Anthropic is an AI safety and research company based in San Francisco. Its interdisciplinary team has experience across ML, physics, policy, and product, and together it produces research and builds reliable, beneficial AI systems.

Frontier Model Forum

The Frontier Model Forum (FMF) is a collaborative effort among leading AI companies to advance AI safety and responsibility. The FMF brings together technical and operational expertise to identify best practices, conduct research, and support the development of AI applications that meet society's most pressing needs. The FMF's core objectives include advancing AI safety research, identifying best practices, collaborating across sectors, and helping AI meet society's greatest challenges.

Anthropic

Anthropic is an AI research and product company focused on the development of AI technologies that prioritize safety and long-term well-being. The company is dedicated to harnessing the benefits of AI while mitigating potential risks. Anthropic offers a range of AI models and solutions for various industries, emphasizing responsible AI development and deployment.

WinBuzzer

WinBuzzer is an AI-focused website providing comprehensive information on AI companies, divisions, projects, and labs. The site covers a wide range of topics related to artificial intelligence, including chatbots, AI assistants, AI solutions, AI technologies, AI models, AI agents, and more. WinBuzzer also delves into areas such as AI ethics, AI safety, AI chips, artificial general intelligence (AGI), synthetic data, AI benchmarks, AI regulation, and AI research. Additionally, the site offers insights into big tech companies, hardware, software, cybersecurity, and more.

O.XYZ

The O.XYZ website is a platform dedicated to decentralized super intelligence, governance, research, and innovation for the O Ecosystem. It focuses on cutting-edge AI research, community-driven allocation, and treasury management. The platform aims to empower the O Ecosystem through decentralization and responsible, safe, and ethical development of Super AI.

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit based in San Francisco. Their mission is to reduce societal-scale risks associated with artificial intelligence (AI) by conducting impactful research, building the field of AI safety researchers, and advocating for safety standards. They offer resources such as a compute cluster for AI/ML safety projects, a blog with in-depth examinations of AI safety topics, and a newsletter providing updates on AI safety developments. CAIS focuses on technical and conceptual research to address the risks posed by advanced AI systems.

AI Safety Initiative

The AI Safety Initiative is a premier coalition of trusted experts that aims to develop and deliver essential AI guidance and tools for organizations to deploy safe, responsible, and compliant AI solutions. Through vendor-neutral research, training programs, and global industry experts, the initiative provides authoritative AI best practices and tools. It offers certifications, training, and resources to help organizations navigate the complexities of AI governance, compliance, and security. The initiative focuses on AI technology, risk, governance, compliance, controls, and organizational responsibilities.

AI Security Institute (AISI)

The AI Security Institute (AISI) is a state-backed organization dedicated to advancing AI governance and safety. They conduct rigorous AI research to understand the impacts of advanced AI, develop risk mitigations, and collaborate with AI developers and governments to shape global policymaking. The institute aims to equip governments with a scientific understanding of the risks posed by advanced AI, monitor AI development, evaluate national security risks, and promote responsible AI development. With a team of top technical staff and partnerships with leading research organizations, AISI is at the forefront of AI governance.

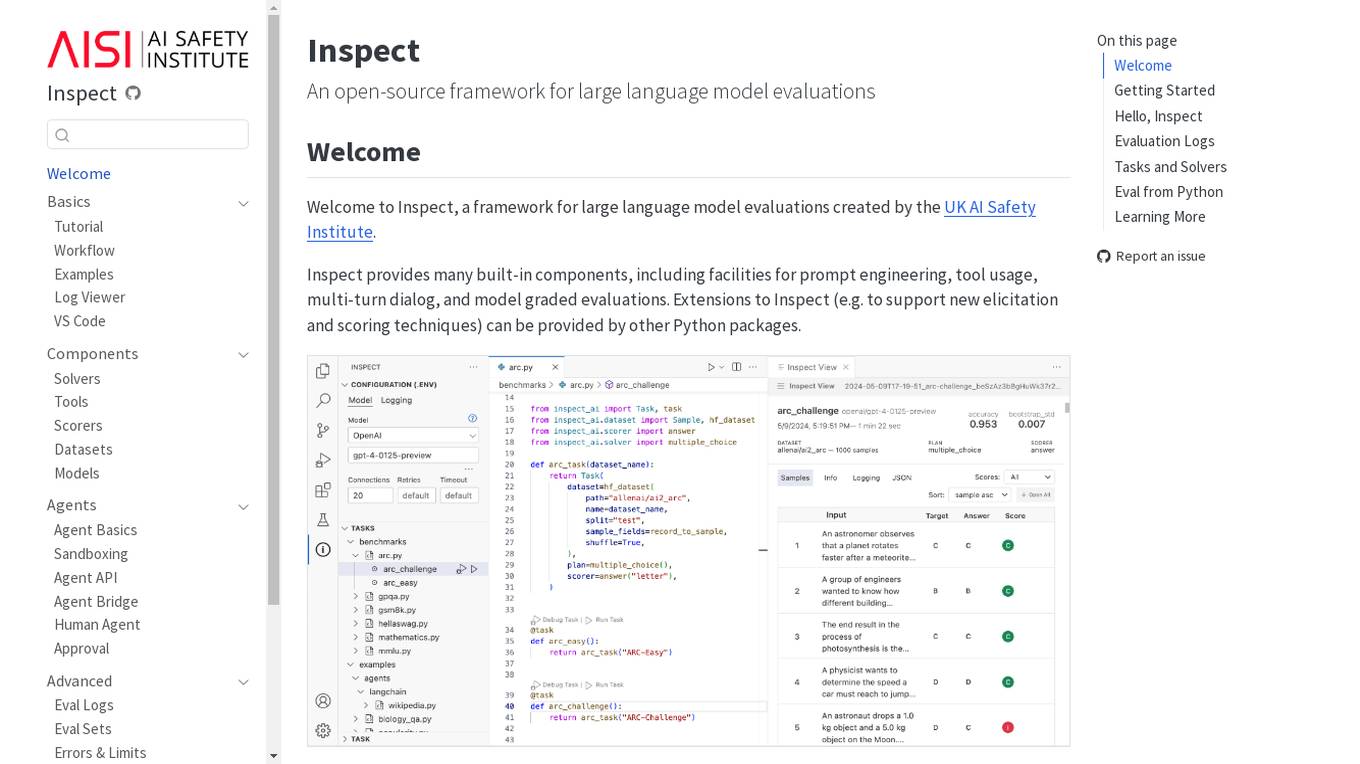

Inspect

Inspect is an open-source framework for large language model evaluations created by the UK AI Safety Institute. It provides built-in components for prompt engineering, tool usage, multi-turn dialog, and model graded evaluations. Users can explore various solvers, tools, scorers, datasets, and models to create advanced evaluations. Inspect supports extensions for new elicitation and scoring techniques through Python packages.

Google DeepMind

Google DeepMind is an AI research lab that focuses on developing advanced artificial intelligence systems to benefit humanity. The lab explores various AI models and applications, such as image generation, audio control, video production, music generation, and interactive world exploration. Google DeepMind also works on responsible AI development and safety measures to address evolving threats. The lab's breakthroughs include advancements in protein structure prediction, genetics decoding, weather forecasting, and interactive world modeling.

AI Alliance

The AI Alliance is a community dedicated to building and advancing open-source AI agents, data, models, evaluation, safety, applications, and advocacy so that everyone can benefit. It focuses on areas such as skills and education, trust and safety, applications and tools, hardware enablement, foundation models, and advocacy. The organization supports global AI skill-building, education, and exploratory research; creates benchmarks and tools for safe generative AI; builds capable tools for AI model builders and developers; fosters the AI hardware accelerator ecosystem; enables open foundation models and datasets; and advocates for regulatory policies that support a healthy AI ecosystem.

OpenAI Strawberry Model

OpenAI Strawberry Model is a cutting-edge AI initiative that represents a significant leap in AI capabilities, focusing on enhancing reasoning, problem-solving, and complex task execution. It aims to improve AI's ability to handle mathematical problems, programming tasks, and deep research, including long-term planning and action. The project showcases advancements in AI safety and aims to reduce errors in AI responses by generating high-quality synthetic data for training future models. Strawberry is designed to achieve human-like reasoning and is expected to play a crucial role in the development of OpenAI's next major model, codenamed 'Orion.'

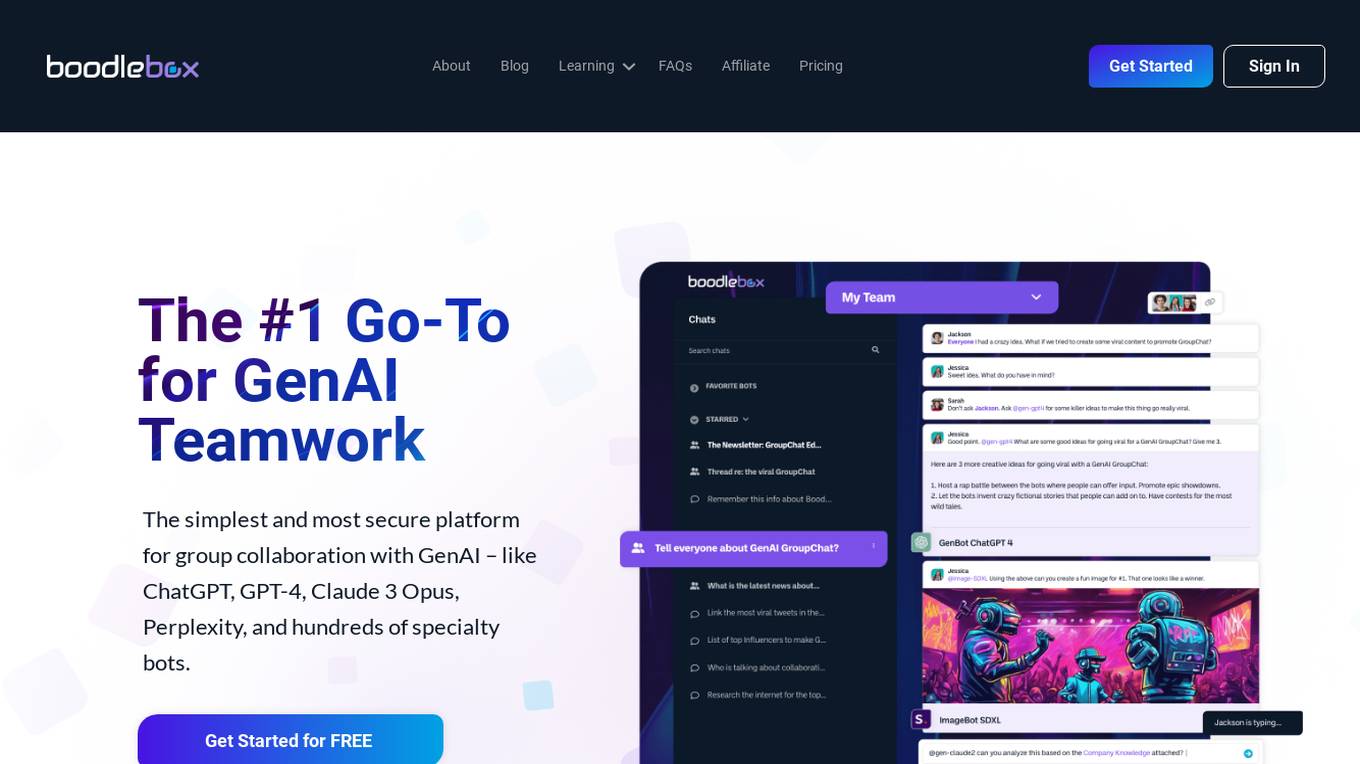

BoodleBox

BoodleBox is a platform for group collaboration with generative AI (GenAI) tools like ChatGPT, GPT-4, and hundreds of others. It allows teams to connect multiple bots, people, and sources of knowledge in one chat to keep discussions engaging, productive, and educational. BoodleBox also provides access to over 800 specialized AI bots and offers easy team management and billing to simplify access and usage across departments, teams, and organizations.

Google DeepMind

Google DeepMind is an AI research company that aims to develop artificial intelligence technologies to benefit the world. They focus on creating next-generation AI systems to solve complex scientific and engineering challenges. Their models like Gemini, Veo, Imagen 3, SynthID, and AlphaFold are at the forefront of AI innovation. DeepMind also emphasizes responsibility, safety, education, and career opportunities in the field of AI.

Tavus

Tavus is an AI tool that offers digital twin APIs for video generation and conversational video interfaces. It allows users to create immersive AI-generated video experiences using cutting-edge AI technology. Tavus provides best-in-class models like Phoenix-2 for creating realistic digital replicas with natural face movements. The platform offers rapid training, instant inference, support for 30+ languages, and built-in security features to ensure user privacy and safety. Tavus is preferred by developers and product teams for its developer-first approach, ease of integration, and exceptional customer service.

1 - Open Source AI Tools

Awesome-System2-Reasoning-LLM

The Awesome-System2-Reasoning-LLM repository is dedicated to a survey paper titled 'From System 1 to System 2: A Survey of Reasoning Large Language Models'. It explores the development of reasoning Large Language Models (LLMs), their foundational technologies, benchmarks, and future directions. The repository provides resources and updates related to the research, tracking the latest developments in the field of reasoning LLMs.

20 - OpenAI GPTs

GPT Safety Liaison

A liaison GPT for AI safety emergencies, connecting users to OpenAI experts.

Chemistry Expert

Advanced AI for chemistry, offering innovative solutions, process optimizations, and safety assessments, powered by OpenAI.

Töökeskkonna spetialist Eestis 2024 (Work Environment Specialist in Estonia 2024)

Knows everything about the working environment and the laws that apply to it.

AI Research Assistant

Designed to provide comprehensive insights into the AI industry from reputable sources.

AI-Driven Lab

Recommends recent AI research, in Japanese, based on articles from AI-Driven Lab.

Ethical AI Insights

Expert in Ethics of Artificial Intelligence, offering comprehensive, balanced perspectives based on thorough research, with a focus on emerging trends and responsible AI implementation. Powered by Breebs (www.breebs.com)

AI Industry Scout

A research assistant for AI and AI-regulation news that finds AI industry information for and with you.

AI Executive Order Explorer

Interact with President Biden's Executive Order on Artificial Intelligence.

牛马审稿人-AI领域 (Workhorse Reviewer, AI Field)

Formal academic reviewer & writing advisor in cybersecurity & AI, detail-oriented.

AI Product Hunter

Explore 7,779 new global AI products with ease, with research drawn from a database of those 7,779 AI products.

AI Chrome Extension Finder

Discover AI Chrome extensions simply by typing your requirements. Fast, customised, and readily deployable!

AI Prompt Engineer

Tech-focused AI Prompt Engineer, providing insights on AI generation and best practices.

AI Complexity Advancement Blueprint

Expert AI Architect for Advancing Complexities in AI Understanding