Best AI tools for < Request Evaluations >

20 - AI tool Sites

Langtrace AI

Langtrace AI is an open-source observability tool powered by Scale3 Labs that helps monitor, evaluate, and improve LLM (Large Language Model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, ensuring security through SOC 2 Type II certification. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability and the ability to build and deploy AI applications with confidence.

RFxAI

RFxAI is an AI platform for Request for Proposals (RFPs). It uses automation to cut cost and turnaround time, helping users generate, analyze, score, evaluate, and optimize their RFPs. RFxAI aims to transform RFP dynamics by boosting success rates by over 80%. With a focus on elevating RFx responses, RFxAI positions itself as a business proposal platform for B2B SaaS RFPs.

Panto AI

Panto AI is an AI automation testing platform for mobile apps that combines dynamic code reviews, code security checks, and QA automation. It lets users create and run mobile test cases in natural language, making testing more reliable and efficient. With features like self-healing automation, real device testing, and deep failure visibility, Panto AI aims to streamline the QA process and enhance app quality. The platform is designed to be platform-agnostic and supports various integrations, making it suitable for diverse mobile app environments.

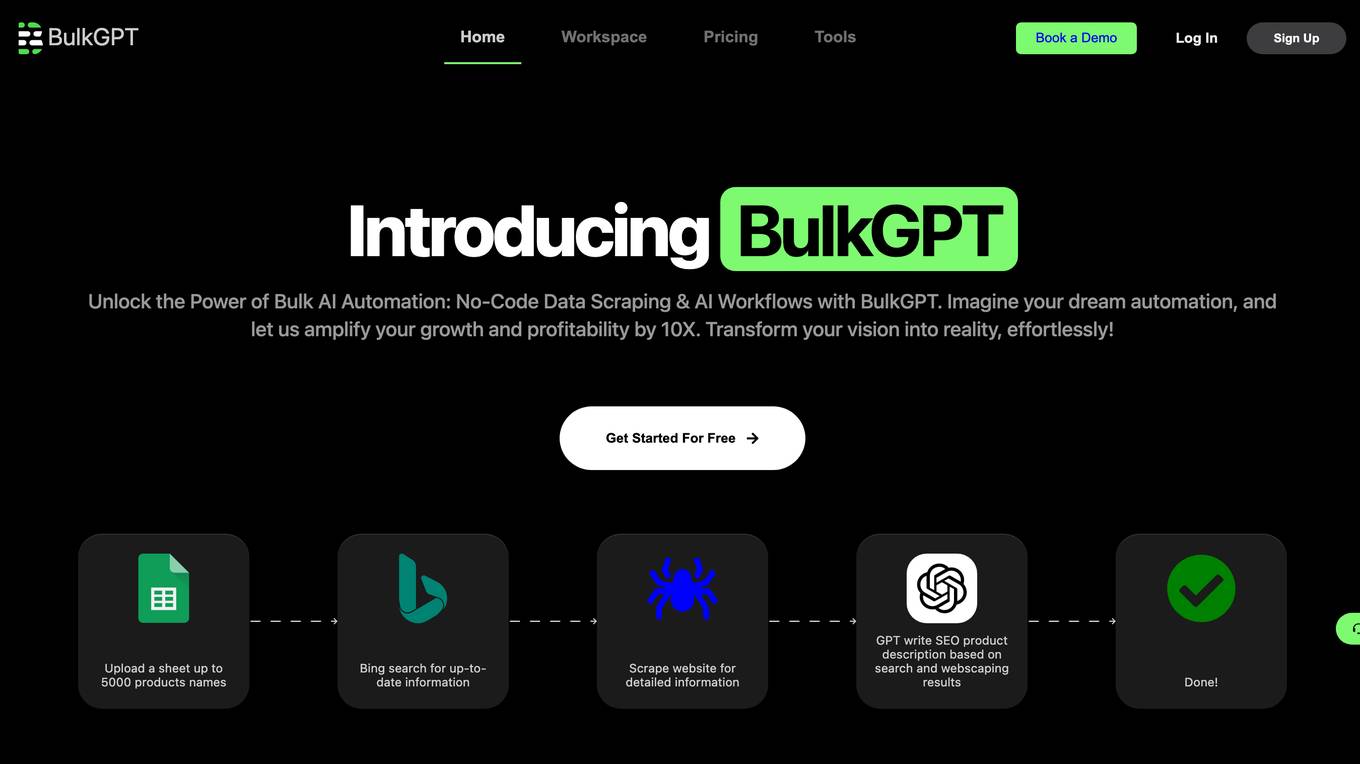

BulkGPT

BulkGPT is a no-code AI automation platform that lets users build custom AI workflows and automate at scale. It enables users to chain together web scraping, Google search, and AI generation into pipelines. With BulkGPT, users can run workflows on thousands of rows simultaneously, process up to 5,000 tasks in a single bulk request, and generate SEO-optimized articles, among other features. The platform supports various AI models, including GPT-4o and GPT-4o-mini, and provides multiple export formats for easy integration.

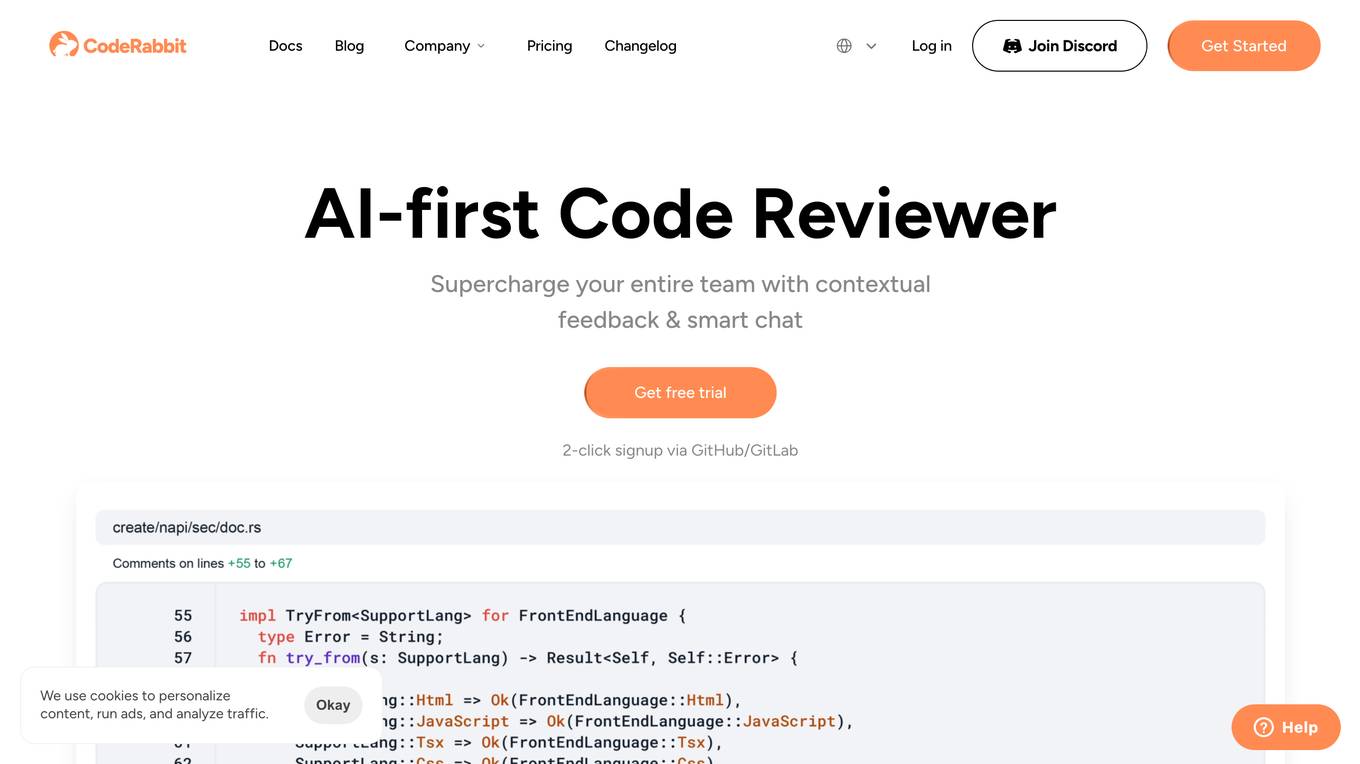

CodeRabbit

CodeRabbit is an innovative AI code review platform that streamlines and enhances the development process. By automating reviews, it dramatically improves code quality while saving valuable time for developers. The system offers detailed, line-by-line analysis, providing actionable insights and suggestions to optimize code efficiency and reliability. Trusted by hundreds of organizations and thousands of developers daily, CodeRabbit has processed millions of pull requests. Backed by CRV, CodeRabbit continues to revolutionize the landscape of AI-assisted software development.

OneTrust

OneTrust is an AI tool that offers a comprehensive suite of privacy management solutions to help organizations streamline compliance, improve operational efficiency, and enable risk-informed decisions. The platform enables automation of processes like Data Subject Rights (DSRs), risk assessments, and data mapping, allowing organizations to manage privacy requirements efficiently. OneTrust integrates regulatory-aware workflow automation with data integration to reduce costs and enhance compliance with regulations like GDPR and CCPA. The platform provides a unified privacy-centric user experience, ensuring secure response to DSR requests and building consumer trust. By automating ID verification, data retrieval and deletion, legal hold checks, and data redaction, OneTrust helps organizations deliver privacy securely and mitigate security risks.

Single Grain

Single Grain is a full-service digital marketing agency focused on driving innovative marketing for great companies. They specialize in services such as SEO, programmatic SEO, content marketing, paid advertising, CRO, and performance creative. With a team of highly specialized marketing experts, Single Grain helps clients increase revenue, lower CAC, and achieve their business goals through data-driven strategies and constant optimization. They have a proven track record of delivering impressive results for their clients, including significant increases in organic traffic, conversion rates, revenue growth, and more.

Five9

Five9 is a leading provider of cloud contact center software that aims to transform call and contact centers into customer engagement centers of excellence. Its AI-powered solutions help businesses deliver exceptional customer experiences, improve operational efficiency, and reduce costs. With Five9, businesses can:

* Empower agents to deliver results anywhere
* Improve CX with practical AI
* Find efficiency with AI & automation
* Scale with AI & digital workforce
* Realize results with Five9

Aviso

Aviso is an end-to-end AI revenue platform that offers conversational intelligence and RevOps capabilities to boost seller performance. It provides AI-powered workflows, task-based agents, and a no-code GTM agent studio to predict, guide, and simplify revenue actions. Aviso's platform integrates state-of-the-art LLMs for advanced reasoning capabilities and 360° product integrations for seamless task execution. Trusted by over 450 revenue teams, Aviso offers real-time AI avatars, role-specific agents, and specialized multi-agents for critical revenue use cases.

Business Automated

Business Automated is an independent automation consultancy that offers custom automation solutions for businesses. The website provides a range of products and services related to automation, including tools like Airtable, Google Sheets, and ChatGPT. Users can access tutorials on YouTube and read more on Medium to learn about automation techniques. Business Automated also offers products like Sales CRM and Cold emails with GPT4 and Airtable, demonstrating its focus on streamlining business processes through AI technology.

Glimmer AI

Glimmer AI is a cutting-edge platform that revolutionizes the way presentations are created and delivered. Leveraging the power of GPT-3 and DALL·E 2, Glimmer AI empowers users to generate visually captivating presentations based on their text and voice commands. With its intuitive interface and seamless workflow, Glimmer AI simplifies the presentation process, enabling users to focus on delivering impactful messages.

Harver

Harver is a talent assessment platform that helps businesses make better hiring decisions faster. It offers a suite of solutions, including assessments, video interviews, scheduling, and reference checking, that can be used to optimize the hiring process and reduce time to hire. Harver's assessments are based on data and scientific insights, and they help businesses identify the right people for the right roles. Harver also offers support for the full talent lifecycle, including talent management, mobility, and development.

Ironclad

Ironclad is a leading contract management software that provides businesses and legal teams with an easy-to-use platform with AI-powered tools to handle every aspect of the contract lifecycle. It offers a comprehensive suite of features including contract drafting, editing, negotiation, search, storage, analytics, e-signature, and more. Ironclad's AI-powered repository creates a single source of truth for contracts and contract data, enabling businesses to gain insights, improve compliance, and make better decisions.

Forecast

Forecast is an AI-powered resource and project management software that helps businesses optimize their resources, plan their projects, and manage their tasks more efficiently. It offers a range of features such as resource management, project management, financial management, artificial intelligence, business intelligence and reporting, timesheets, and integrations. Forecast is trusted by businesses of all sizes, from small businesses to large enterprises, and has been recognized for its innovation and effectiveness by leading industry analysts.

MaestroQA

MaestroQA is a comprehensive Call Center Quality Assurance Software that offers a range of products and features to enhance QA processes. It provides customizable report builders, scorecard builders, calibration workflows, coaching workflows, automated QA workflows, screen capture, accurate transcriptions, root cause analysis, performance dashboards, AI grading assist, analytics, and integrations with various platforms. The platform caters to industries like eCommerce, financial services, gambling, insurance, B2B software, social media, and media, offering solutions for QA managers, team leaders, and executives.

Level AI

Level AI is a provider of artificial intelligence (AI)-powered solutions for call centers. Its products include GenAI-automated Quality Assurance, Contact Center and Business Analytics, GenAI-powered VoC Insights, AgentGPT Real-Time Agent Assist, Agent Coaching, Agent Screen Recording, and Artificial Intelligence Integrations. Level AI's solutions are designed to help businesses improve customer experience, increase efficiency, and reduce costs. The company's customers include some of the world's leading customer service organizations, such as Brex and ezCater.

Interactions IVA

Interactions IVA is a conversational AI solutions platform for customer experience (CX). It offers a range of features to help businesses improve their customer interactions, including intelligent virtual assistants, PCI compliance, social customer care, and more. Interactions IVA is used by businesses in a variety of industries, including communications, finance and banking, healthcare, insurance, restaurants, retail and technology, travel and hospitality, and utilities.

Lingio

Lingio is an AI-powered employee training software designed for frontline workers, offering gamified learning experiences and mobile-based training solutions. The platform combines gamification and AI to enhance course completion rates and improve learning outcomes for deskless industries such as hospitality, cleaning, transportation, elderly care, and facility management.

Mygirl

Mygirl is an AI application that simulates a virtual girlfriend for users to engage in spicy chat conversations. The platform utilizes artificial intelligence to create a personalized and interactive experience, offering users a virtual companion for casual and fun interactions. Mygirl aims to provide a unique and entertaining chat experience through AI technology, allowing users to engage in conversations with a virtual character.

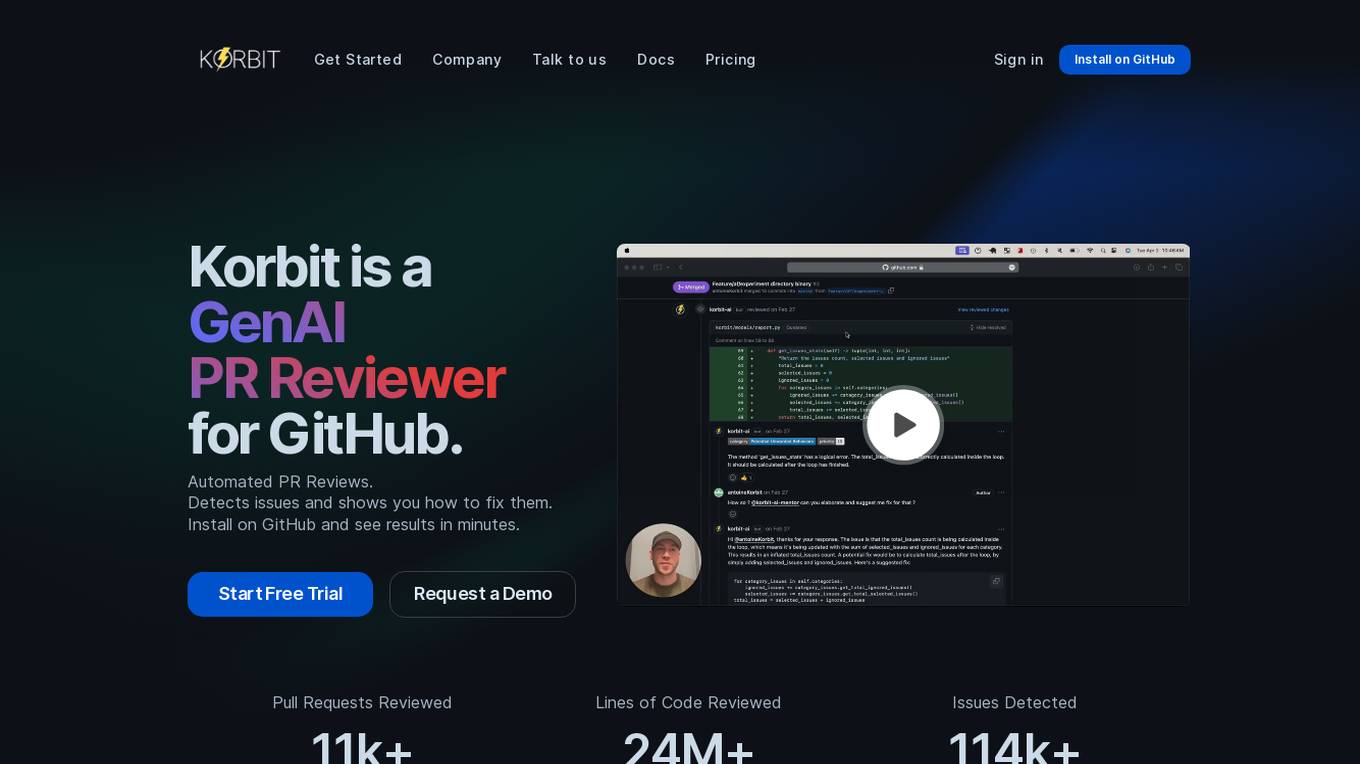

Korbit

Korbit is an AI-powered code review tool that helps developers write better code, faster. It integrates directly into your GitHub PR workflow and provides instant feedback on your code, identifying issues and providing actionable recommendations. Korbit also provides valuable insights into code quality, project status, and developer performance, helping you to boost your productivity and elevate your code.

1 - Open Source AI Tools

evalverse

Evalverse is an open-source project designed to support Large Language Model (LLM) evaluation needs. It provides a standardized and user-friendly solution for processing and managing LLM evaluations, catering to AI research engineers and scientists. Evalverse supports various evaluation methods, insightful reports, and no-code evaluation processes. Users can access unified evaluation with submodules, request evaluations without code via a Slack bot, and obtain comprehensive reports with scores, rankings, and visuals. The tool allows for easy comparison of scores across different models and swift addition of new evaluation tools.

20 - OpenAI GPTs

FOIA GPT

Freedom of Information Act request strategist to "arm the rebels" for truth and transparency in the fight against corruption

Consistent Image Generator

Generate an image ➡ Request modifications. This GPT supports generating consistent and continuous images with DALL·E. It also offers the ability to restore or integrate photos you upload. ✔️Where to use: WordPress blog posts, YouTube thumbnails, AI profiles, Facebook, X, Threads feeds, Instagram Reels

Just the Recipe

This application finds recipes on the web based on a request and then removes all the SEO, leaving you with just a recipe.

Swift Lyric Matchmaker

I match your day with a Taylor Swift lyric and create custom ones on request.

Table to JSON

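We often read REST API reference documentation in which request/response parameters are presented as tables, and developers have to convert them into JSON structures by hand, which is a bit of a hassle. With this GPT, you only need to upload a screenshot and it automatically generates a JSON example and a JSON Schema structure.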

Janitor Bot Creator

This bot will create a template for a janitor.ai bot based on your request. Your request could either be a character or a scenario.

Gov Advisor

I'm a multilingual Government Agent - I'm here to assist you with any public service request