Best AI Tools to Optimize Cloud Costs

20 - AI Tool Sites

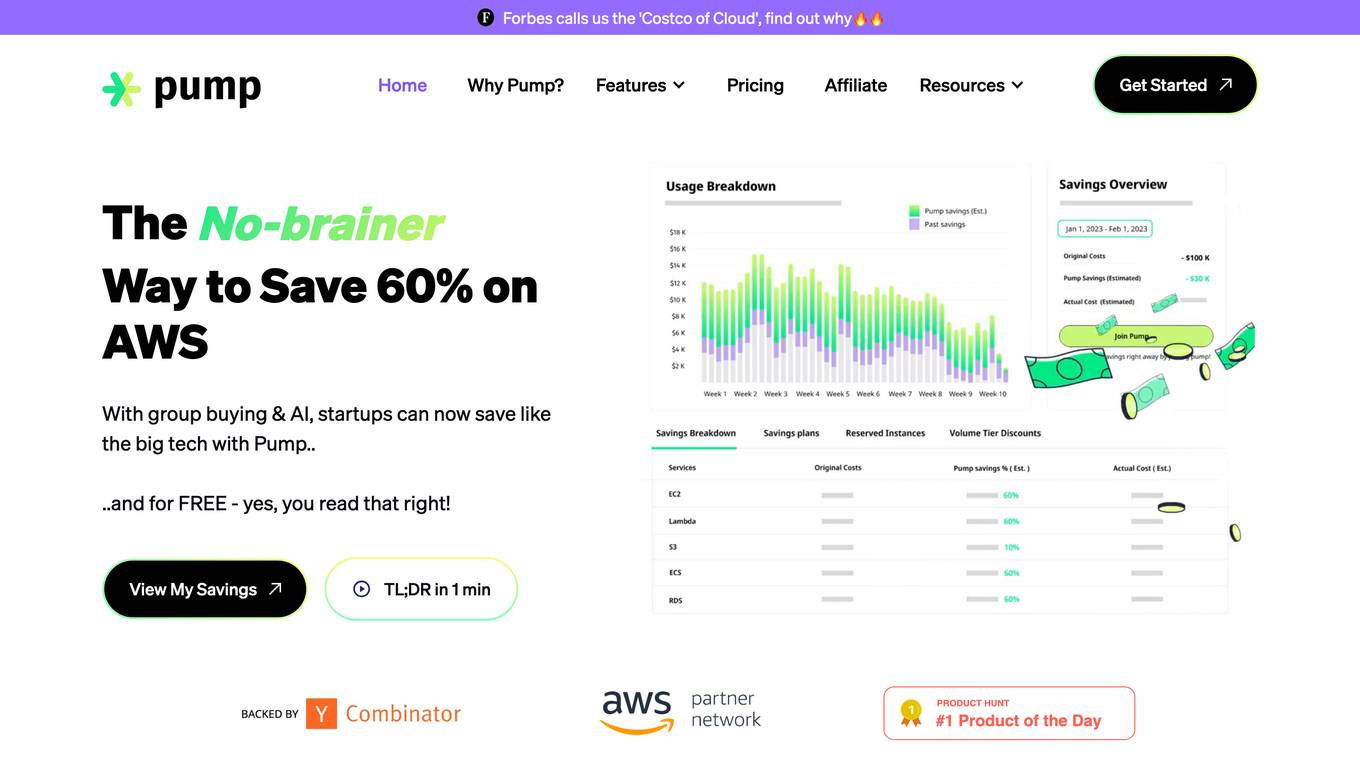

Pump

Pump is a cloud cost optimization tool that uses group buying and AI to help startups save up to 60% on cloud costs. It gives startups access to discounts previously available only to large companies, and its automation continuously finds and applies the best available savings. Pump also lets users explore their cloud spend, is designed to keep AWS access secure, and targets runaway cloud computing costs. The tool is free to use and has gained popularity among startups worldwide.

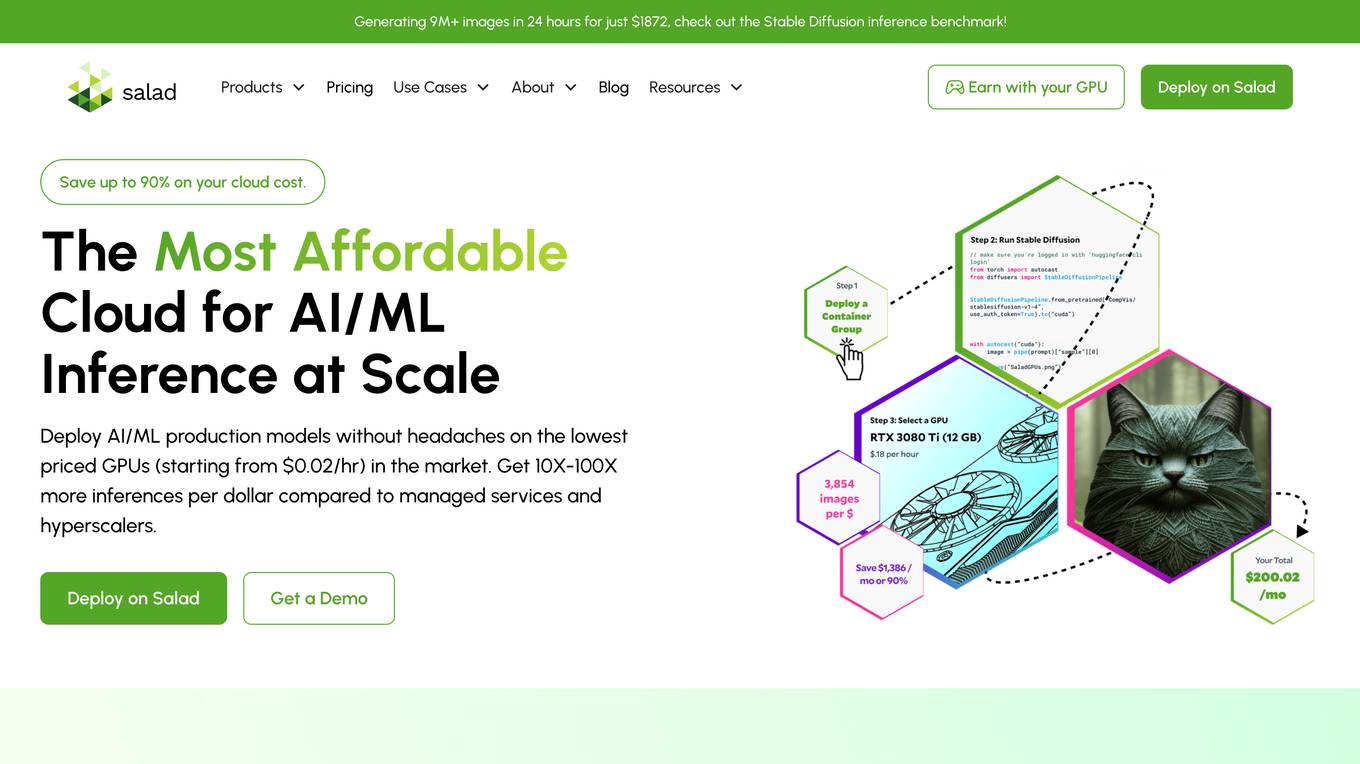

Salad

Salad is a distributed GPU cloud platform that offers fully managed, massively scalable services for AI applications. It advertises the lowest-priced AI transcription on the market and supports workloads such as image generation, voice AI, computer vision, data collection, and batch processing. By running on consumer GPUs, Salad delivers cost-effective AI/ML inference at scale. The platform is trusted by hundreds of machine learning and data science teams for its affordability, scalability, and ease of deployment.

Rafay

Rafay is an AI-powered platform that accelerates cloud-native and AI/ML initiatives for enterprises. It provides automation for Kubernetes clusters, cloud cost optimization, and AI workbenches as a service. Rafay enables platform teams to focus on innovation by automating self-service cloud infrastructure workflows.

IgniteTech

IgniteTech is an AI-focused company that offers solutions and services for businesses. It focuses on transforming business communication and field force management through human-AI collaboration. IgniteTech aims to save and stabilize software and businesses, move products to the AWS Cloud, and add value through a Netflix-style licensing model. The company has a strong focus on AI technology and its applications across various industries.

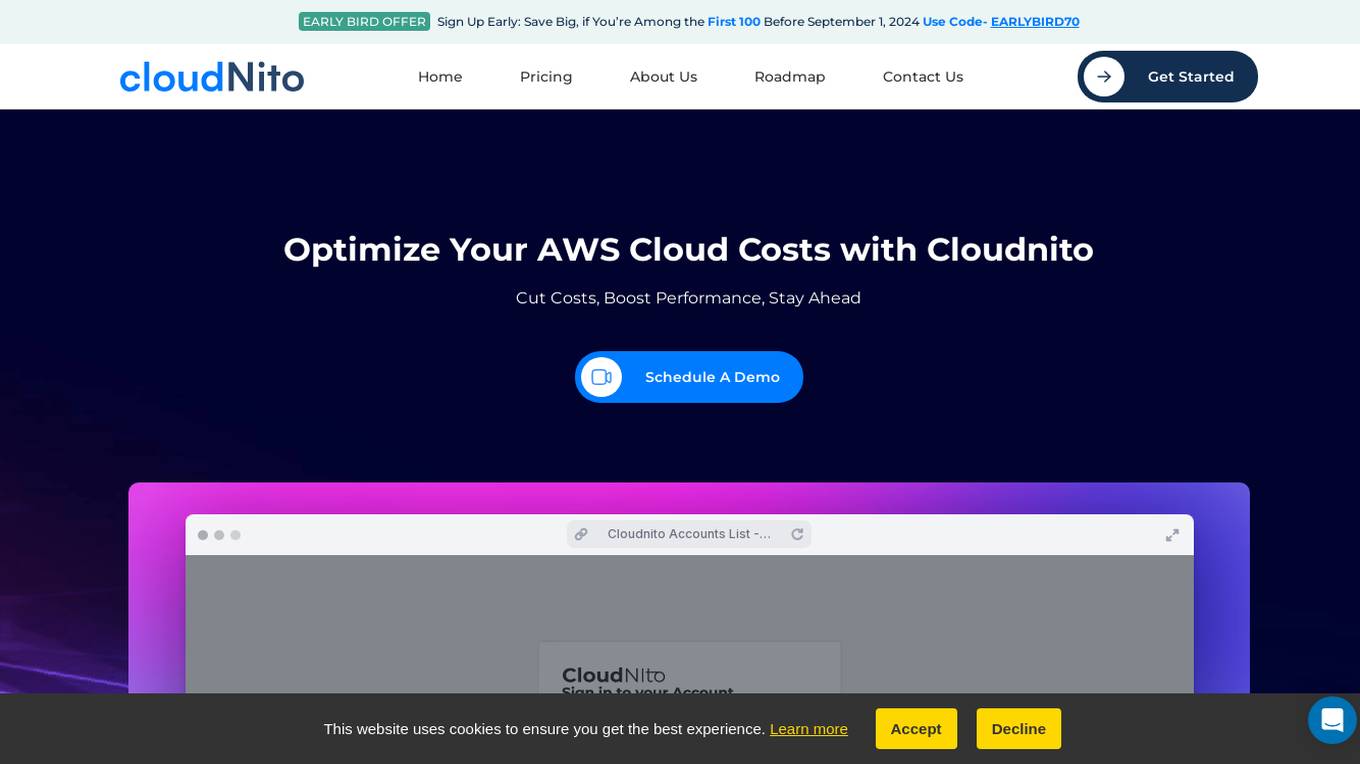

cloudNito

cloudNito is an AI-driven platform that specializes in cloud cost optimization and management for businesses using AWS services. The platform offers automated cost optimization, comprehensive insights and analytics, unified cloud management, anomaly detection, cost and usage explorer, recommendations for waste reduction, and resource optimization. By leveraging advanced AI solutions, cloudNito aims to help businesses efficiently manage their AWS cloud resources, reduce costs, and enhance performance.

Cast AI

Cast AI is an intelligent Kubernetes automation platform that offers live migration for AWS EKS, enabling users to migrate stateful workloads with zero downtime. The platform provides application performance automation by automating and optimizing the entire application stack, including Kubernetes cluster optimization, security, workload optimization, LLM optimization for AIOps, cost monitoring, and database optimization. Cast AI integrates with various cloud services and tools, offering solutions for migration of stateful workloads, inference at scale, and cutting AI costs without sacrificing scale. The platform helps users improve performance, reduce costs, and boost productivity through end-to-end application performance automation.

Frugal

Frugal is an application cost engineering platform that automatically optimizes code to reduce cloud costs. It describes itself as the first AI-powered cost optimization platform built for engineers, helping them find and fix inefficiencies in code that drain cloud budgets. The platform aims to reinvent cost engineering by letting developers reduce application costs and improve cloud efficiency through automated identification and resolution of wasteful practices.

PrimeOrbit

PrimeOrbit is an AI-driven cloud cost optimization platform designed to streamline operations and boost ROI for enterprises. It offers AI-driven optimization recommendations, automated cost allocation, and tailored FinOps workflows. PrimeOrbit differentiates itself through a user-centric approach, its AI recommendations, customization, and flexible enterprise workflows. It supports major cloud providers including AWS, Azure, and GCP, although full support for GCP and Kubernetes is listed as coming soon. The platform provides complete cost allocation across cloud resources so that decision-makers can optimize cloud spending.

CloudKeeper

CloudKeeper is a comprehensive cloud cost optimization partner that offers solutions for AWS, Azure, and GCP. The platform provides services such as rate optimization, usage optimization, cloud consulting & support, and cloud cost visibility. CloudKeeper combines group buying, commitments management, expert consulting, and analytics to reduce cloud costs and maximize value. With a focus on savings, visibility, and services bundled together, CloudKeeper aims to simplify the cloud cost optimization journey for businesses of all sizes.

Harness

Harness is an AI-driven software delivery platform for software engineering teams. It offers a single platform for software delivery needs, including DevOps modernization, continuous delivery, GitOps, feature flags, infrastructure as code management, chaos engineering, service reliability management, secure software delivery, cloud cost optimization, and more. Harness aims to simplify the developer experience by providing actionable insights into the SDLC, software supply chain assurance, and AI development assistance throughout the software delivery lifecycle.

DevSecCops

DevSecCops is an AI-driven automation platform designed to revolutionize DevSecOps processes. The platform offers solutions for cloud optimization, machine learning operations, data engineering, application modernization, infrastructure monitoring, security, compliance, and more. With features like one-click infrastructure security scan, AI engine security fixes, compliance readiness using AI engine, and observability, DevSecCops aims to enhance developer productivity, reduce cloud costs, and ensure secure and compliant infrastructure management. The platform leverages AI technology to identify and resolve security issues swiftly, optimize AI workflows, and provide cost-saving techniques for cloud architecture.

Seldon

Seldon is an MLOps platform that helps enterprises deploy, monitor, and manage machine learning models at scale. It provides a range of features to help organizations accelerate model deployment, optimize infrastructure resource allocation, and manage models and risk. Seldon is trusted by the world's leading MLOps teams and has been used to install and manage over 10 million ML models. With Seldon, organizations can reduce deployment time from months to minutes, increase efficiency, and reduce infrastructure and cloud costs.

GrapixAI

GrapixAI is a provider of low-cost cloud GPU rental services and AI server solutions. Its focus on flexibility, scalability, and current hardware supports a variety of AI applications in both local and cloud environments. GrapixAI advertises the lowest prices for on-demand GPUs such as the RTX 4090, RTX 3090, RTX A6000, RTX A5000, and A40. The platform provides a Docker-based container ecosystem for quick software setup, a GPU search console, customizable pricing options, multiple security levels, GUI and CLI interfaces, a real-time bidding system, and personalized customer support.

Wren AI Cloud

Wren AI Cloud is an AI-powered SQL agent that gives non-technical teams access to data and insights. It turns natural language questions into SQL queries, enabling faster decision-making. The platform offers self-serve data exploration, AI-generated insights and visuals, and a multi-agent workflow intended to produce reliable results. Wren AI Cloud is scalable and integrates with popular data tools, making it useful for businesses that want to use AI for data-driven decisions.

Nomi.cloud

Nomi.cloud is an AI-powered CloudOps and HPC assistant designed for next-gen businesses. Its offerings span developer tools, a marketplace, enterprise solutions, and a pricing console. Features include a single-pane-of-glass view, instant deployment, continuous monitoring, AI-powered insights, and built-in budgets and alerts. It provides a user-friendly interface to manage infrastructure, optimize costs, and deploy resources across multiple regions. Nomi.cloud is built for scale, trusted by enterprises, and offers a range of GPUs and cloud providers to suit various needs.

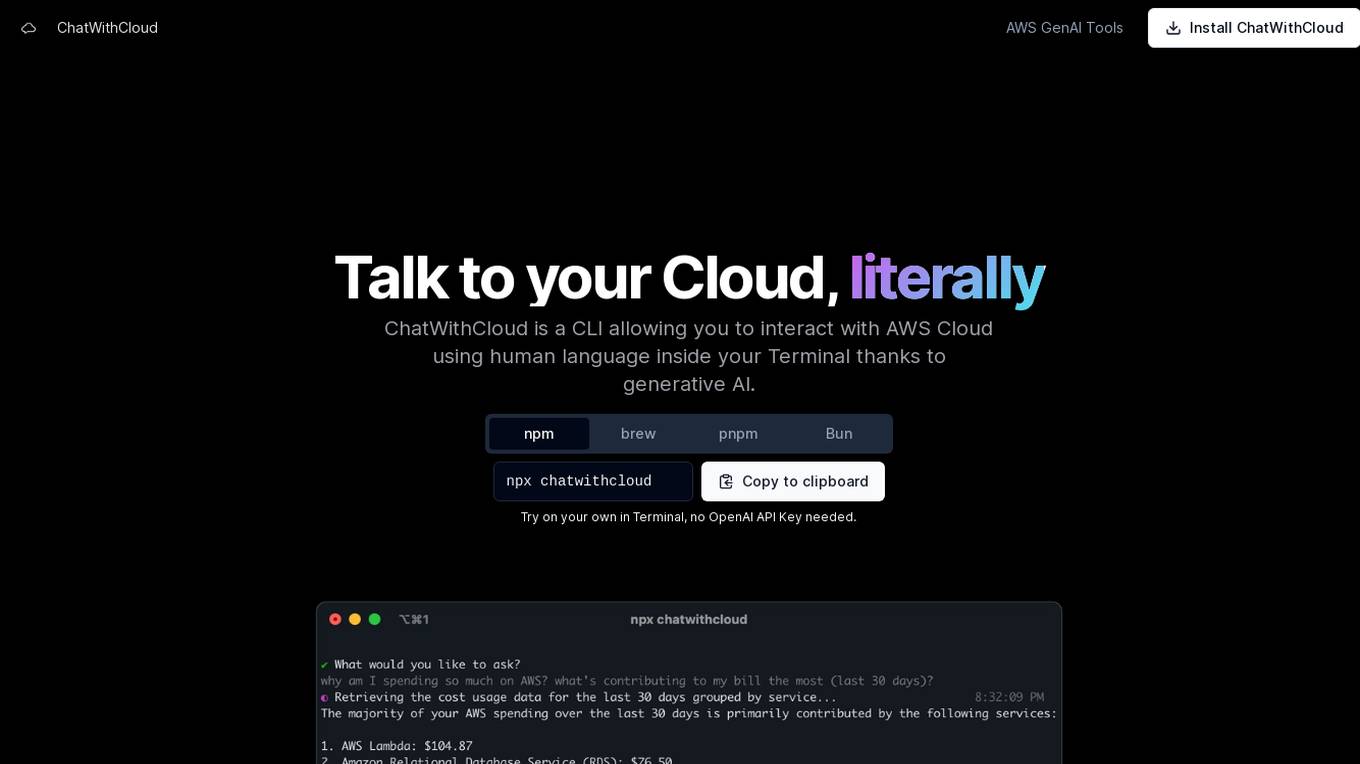

ChatWithCloud

ChatWithCloud is a command-line interface (CLI) tool that enables users to interact with AWS Cloud using natural language within the Terminal, powered by generative AI. It allows users to perform various tasks such as cost analysis, security analysis, troubleshooting, and fixing infrastructure issues without the need for an OpenAI API Key. The tool offers both a lifetime license option and a managed subscription model for users' convenience.

Quinyx

Quinyx is an AI-powered Workforce Management, Planning & Scheduling platform that helps businesses optimize their workforce, streamline scheduling, and engage frontline employees. It offers features such as demand forecasting, labor optimization, compliance checking, task management, and communication tools. Quinyx aims to enhance employee productivity, reduce scheduling admin time, and improve overall business performance through AI-driven solutions.

Microtica

Microtica is an AI-powered cloud delivery platform that offers a comprehensive suite of DevOps tools to help users build, deploy, and optimize their infrastructure efficiently. With features like AI Incident Investigator, AI Infrastructure Builder, Kubernetes deployment simplification, alert monitoring, pipeline automation, and cloud monitoring, Microtica aims to streamline the development and management processes for DevOps teams. The platform provides real-time insights, cost optimization suggestions, and guided deployments, making it a valuable tool for businesses looking to enhance their cloud infrastructure operations.

Pulse

Pulse is a world-class expert support tool for BigData stacks, specifically focusing on ensuring the stability and performance of Elasticsearch and OpenSearch clusters. It offers early issue detection, AI-generated insights, and expert support to optimize performance, reduce costs, and align with user needs. Pulse leverages AI for issue detection and root-cause analysis, complemented by real human expertise, making it a strategic ally in search cluster management.

Codimite

Codimite is an AI-assisted offshore development services provider that specializes in Web2 to Web3 communication. It offers PWA solutions, cloud modernization, and a range of services to help organizations adopt state-of-the-art technologies. With a dedicated team of engineers and project managers, Codimite emphasizes efficient project management and communication. It also works on development infrastructure modernization, collaboration, data, and artificial intelligence development. Codimite has a strong partnership with Google Cloud and offers services such as application migration, cost optimization, and collaboration solutions.

3 - Open Source AI Tools

optscale

OptScale is an open-source FinOps and MLOps platform that provides cloud cost optimization for all types of organizations, along with MLOps capabilities such as experiment tracking, model versioning, and ML leaderboards.

ai-assisted-devops

AI-Assisted DevOps is a 10-day course focusing on integrating artificial intelligence (AI) technologies into DevOps practices. The course covers various topics such as AI for observability, incident response, CI/CD pipeline optimization, security, and FinOps. Participants will learn about running large language models (LLMs) locally, making API calls, AI-powered shell scripting, and using AI agents for self-healing infrastructure. Hands-on activities include creating GitHub repositories with bash scripts, generating Docker manifests, predicting server failures using AI, and building AI agents to monitor deployments. The course culminates in a capstone project where learners implement AI-assisted DevOps automation and receive peer feedback on their projects.

openops

OpenOps is a no-code FinOps automation platform designed to help organizations reduce cloud costs and streamline financial operations. It offers customizable workflows for automating key FinOps processes, comes with its own Excel-like database and visualization system, and enables collaboration between different teams. OpenOps integrates with major cloud providers, third-party FinOps tools, communication platforms, and project management tools, providing a comprehensive way to implement cost-saving measures.

20 - OpenAI GPTs

Cloud Architecture Advisor

Guides cloud strategy and architecture to optimize business operations.

Cloud Services Management Advisor

Manages and optimizes an organization's cloud resources and services.

Cloud Computing

Expert in cloud computing, offering insights on services, security, and infrastructure.

Cloudwise Consultant

Expert in cloud-native solutions, provides tailored tech advice and cost estimates.

AzurePilot | Steer & Streamline Your Cloud Costs🌐

Specialized advisor on Azure costs and optimizations.

Cloud Networking Advisor

Optimizes cloud-based networks for efficient organizational operations.

Infrastructure as Code Advisor

Develops, advises on, and optimizes infrastructure-as-code practices across the organization.

Azure Mentor

Expert in Azure's latest services, including Application Insights, API Management, and more.