Best AI tools for< Monitor Llms >

20 - AI tool Sites

JFrog ML

JFrog ML is an AI platform designed to streamline AI development from prototype to production. It offers a unified MLOps platform to build, train, deploy, and manage AI workflows at scale. With features like Feature Store, LLMOps, and model monitoring, JFrog ML empowers AI teams to collaborate efficiently and optimize AI & ML models in production.

Freeplay

Freeplay is a tool that helps product teams experiment, test, monitor, and optimize AI features for customers. It provides a single pane of glass for the entire team, lightweight developer SDKs for Python, Node, and Java, and deployment options to meet compliance needs. Freeplay also offers best practices for the entire AI development lifecycle.

Unified DevOps platform to build AI applications

This is a unified DevOps platform to build AI applications. It provides a comprehensive set of tools and services to help developers build, deploy, and manage AI applications. The platform includes a variety of features such as a code editor, a debugger, a profiler, and a deployment manager. It also provides access to a variety of AI services, such as natural language processing, machine learning, and computer vision.

Langtrace AI

Langtrace AI is an open-source observability tool powered by Scale3 Labs that helps monitor, evaluate, and improve LLM (Large Language Model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, ensuring security through SOC 2 Type II certification. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability and the ability to build and deploy AI applications with confidence.

Lunary

Lunary is an AI developer platform designed to bring AI applications to production. It offers a comprehensive set of tools to manage, improve, and protect LLM apps. With features like Logs, Metrics, Prompts, Evaluations, and Threads, Lunary empowers users to monitor and optimize their AI agents effectively. The platform supports tasks such as tracing errors, labeling data for fine-tuning, optimizing costs, running benchmarks, and testing open-source models. Lunary also facilitates collaboration with non-technical teammates through features like A/B testing, versioning, and clean source-code management.

BenchLLM

BenchLLM is an AI tool designed for AI engineers to evaluate LLM-powered apps by running and evaluating models with a powerful CLI. It allows users to build test suites, choose evaluation strategies, and generate quality reports. The tool supports OpenAI, Langchain, and other APIs out of the box, offering automation, visualization of reports, and monitoring of model performance.

ModelOp

ModelOp is the leading AI Governance software for enterprises, providing a single source of truth for all AI systems, automated process workflows, real-time insights, and integrations to extend the value of existing technology investments. It helps organizations safeguard AI initiatives without stifling innovation, ensuring compliance, accelerating innovation, and improving key performance indicators. ModelOp supports generative AI, Large Language Models (LLMs), in-house, third-party vendor, and embedded systems. The software enables visibility, accountability, risk tiering, systemic tracking, enforceable controls, workflow automation, reporting, and rapid establishment of AI governance.

deepset

deepset is an AI platform that offers enterprise-level products and solutions for AI teams. It provides deepset Cloud, a platform built with Haystack, enabling fast and accurate prototyping, building, and launching of advanced AI applications. The platform streamlines the AI application development lifecycle, offering processes, tools, and expertise to move from prototype to production efficiently. With deepset Cloud, users can optimize solution accuracy, performance, and cost, and deploy AI applications at any scale with one click. The platform also allows users to explore new models and configurations without limits, extending their team with access to world-class AI engineers for guidance and support.

Langtail

Langtail is a platform that helps developers build, test, and deploy AI-powered applications. It provides a suite of tools to help developers debug prompts, run tests, and monitor the performance of their AI models. Langtail also offers a community forum where developers can share tips and tricks, and get help from other users.

Arthur

Arthur is an industry-leading MLOps platform that simplifies deployment, monitoring, and management of traditional and generative AI models. It ensures scalability, security, compliance, and efficient enterprise use. Arthur's turnkey solutions enable companies to integrate the latest generative AI technologies into their operations, making informed, data-driven decisions. The platform offers open-source evaluation products, model-agnostic monitoring, deployment with leading data science tools, and model risk management capabilities. It emphasizes collaboration, security, and compliance with industry standards.

KissanAI

Dhenu Agri LLMs - KissanAI is an AI-powered application designed to assist farmers in optimizing their agricultural practices. The platform leverages artificial intelligence to provide farmers with valuable insights and recommendations for improving crop yield and overall farm productivity. By analyzing data such as weather patterns, soil quality, and crop health, KissanAI helps farmers make informed decisions to enhance their agricultural output. With user-friendly interfaces and intuitive features, this tool aims to empower farmers with cutting-edge technology to drive sustainable farming practices.

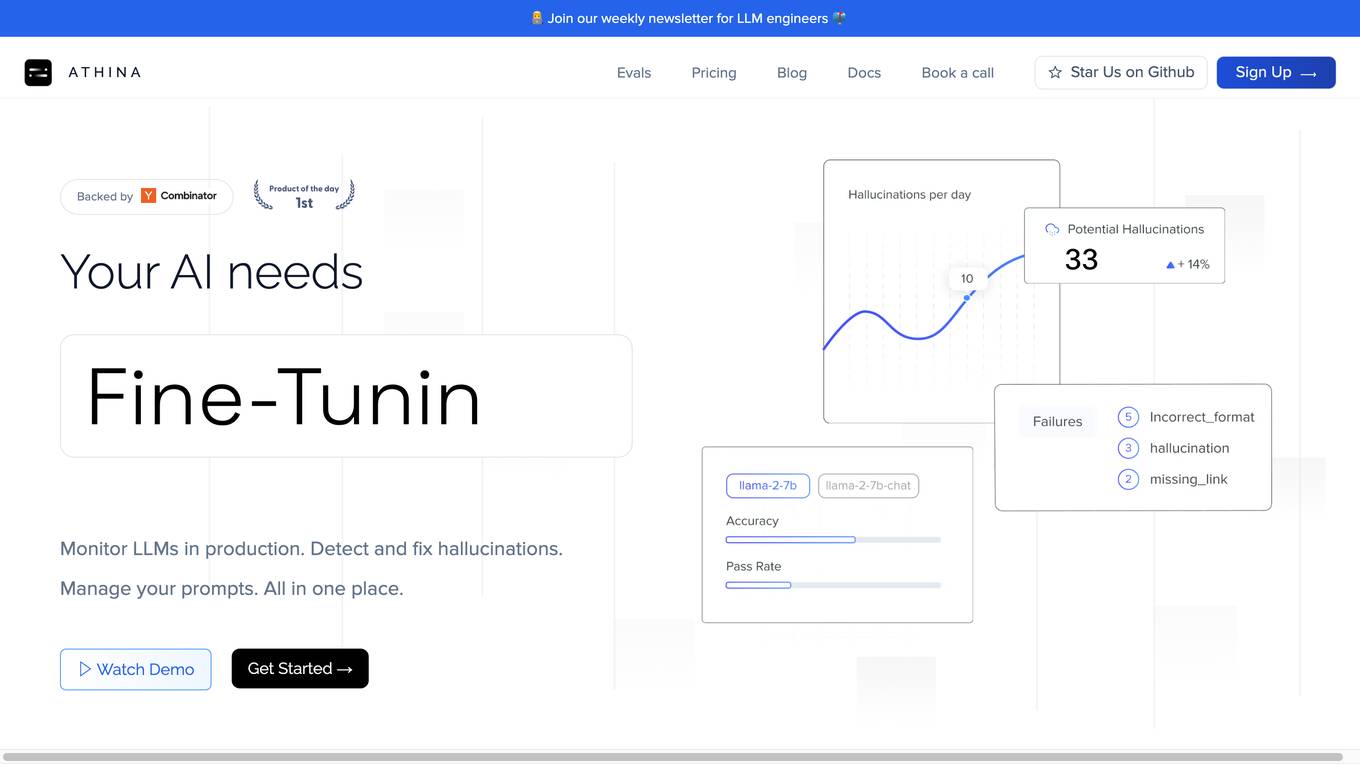

Athina AI

Athina AI is a comprehensive platform designed to monitor, debug, analyze, and improve the performance of Large Language Models (LLMs) in production environments. It provides a suite of tools and features that enable users to detect and fix hallucinations, evaluate output quality, analyze usage patterns, and optimize prompt management. Athina AI supports integration with various LLMs and offers a range of evaluation metrics, including context relevancy, harmfulness, summarization accuracy, and custom evaluations. It also provides a self-hosted solution for complete privacy and control, a GraphQL API for programmatic access to logs and evaluations, and support for multiple users and teams. Athina AI's mission is to empower organizations to harness the full potential of LLMs by ensuring their reliability, accuracy, and alignment with business objectives.

Prodmagic

Prodmagic is an AI tool that enables users to easily build advanced chatbots in minutes. With Prodmagic, users can connect their custom LLMs to create chatbots that can be integrated on any website with just a single line of code. The platform allows for easy customization of chatbots' appearance and behavior without the need for coding. Prodmagic also offers integration with custom LLMs and OpenAI, providing a seamless experience for users looking to enhance their chatbot capabilities. Additionally, Prodmagic provides essential features such as chat history, error monitoring, and simple pricing plans, making it a convenient and cost-effective solution for chatbot development.

Confident AI

Confident AI is an open-source evaluation infrastructure for Large Language Models (LLMs). It provides a centralized platform to judge LLM applications, ensuring substantial benefits and addressing any weaknesses in LLM implementation. With Confident AI, companies can define ground truths to ensure their LLM is behaving as expected, evaluate performance against expected outputs to pinpoint areas for iterations, and utilize advanced diff tracking to guide towards the optimal LLM stack. The platform offers comprehensive analytics to identify areas of focus and features such as A/B testing, evaluation, output classification, reporting dashboard, dataset generation, and detailed monitoring to help productionize LLMs with confidence.

Storytell.ai

Storytell.ai is an enterprise-grade AI platform that offers Business-Grade Intelligence across data, focusing on boosting productivity for employees and teams. It provides a secure environment with features like creating project spaces, multi-LLM chat, task automation, chat with company data, and enterprise-AI security suite. Storytell.ai ensures data security through end-to-end encryption, data encryption at rest, provenance chain tracking, and AI firewall. It is committed to making AI safe and trustworthy by not training LLMs with user data and providing audit logs for accountability. The platform continuously monitors and updates security protocols to stay ahead of potential threats.

LLMMM Marketing Monitor

LLMMM is an AI tool designed to monitor how AI models perceive and present brands. It offers real-time monitoring and cross-model insights to help brands understand their digital presence across various leading AI platforms. With automated analysis and lightning-fast results, LLMMM provides immediate visibility into how AI chatbots interpret brands. The tool focuses on brand intelligence, brand safety monitoring, misalignment detection, and cross-model brand intelligence. Users can create an account in minutes and access a range of features to track and analyze their brand's performance in the AI landscape.

New Relic

New Relic is an AI monitoring platform that offers an all-in-one observability solution for monitoring, debugging, and improving the entire technology stack. With over 30 capabilities and 750+ integrations, New Relic provides the power of AI to help users gain insights and optimize performance across various aspects of their infrastructure, applications, and digital experiences.

Arize AI

Arize AI is an AI Observability & LLM Evaluation Platform that helps you monitor, troubleshoot, and evaluate your machine learning models. With Arize, you can catch model issues, troubleshoot root causes, and continuously improve performance. Arize is used by top AI companies to surface, resolve, and improve their models.

Devi

Devi is an AI-powered social media lead generation and outreach tool that helps businesses find and engage with potential customers on Facebook, LinkedIn, Twitter, Reddit, and other platforms. It uses artificial intelligence to monitor keywords and identify high-intent leads, and then provides users with tools to reach out to those leads and build relationships. Devi also offers a variety of other features, such as AI-generated content, scheduling, and analytics.

Hexowatch

Hexowatch is an AI-powered website monitoring and archiving tool that helps businesses track changes to any website, including visual, content, source code, technology, availability, or price changes. It provides detailed change reports, archives snapshots of pages, and offers side-by-side comparisons and diff reports to highlight changes. Hexowatch also allows users to access monitored data fields as a downloadable CSV file, Google Sheet, RSS feed, or sync any update via Zapier to over 2000 different applications.

1 - Open Source AI Tools

trulens

TruLens provides a set of tools for developing and monitoring neural nets, including large language models. This includes both tools for evaluation of LLMs and LLM-based applications with _TruLens-Eval_ and deep learning explainability with _TruLens-Explain_. _TruLens-Eval_ and _TruLens-Explain_ are housed in separate packages and can be used independently.

20 - OpenAI Gpts

Quake and Volcano Watch Iceland

Seismic and volcanic monitor with in-depth data and visuals.

Qtech | FPS

Frost Protection System is an AI bot optimizing open field farming of fruits, vegetables, and flowers, combining real-time data and AI to boost yield, cut costs, and foster sustainable practices in a user-friendly interface.

DataKitchen DataOps and Data Observability GPT

A specialist in DataOps and Data Observability, aiding in data management and monitoring.

Financial Cybersecurity Analyst - Lockley Cash v1

stunspot's advisor for all things Financial Cybersec

AML/CFT Expert

Specializes in Anti-Money Laundering/Counter-Financing of Terrorism compliance and analysis.

Quality Assurance Advisor

Ensures product quality through systematic process monitoring and evaluation.

SkyNet - Global Conflict Analyst

Global Conflict Analyst that will provide a 'wartime update' on the worst global conflict atm.

Network Operations Advisor

Ensures efficient and effective network performance and security.