Best AI Tools for Managing Pipelines

20 - AI Tool Sites

Modjo

Modjo is an AI platform designed to boost sales team productivity, improve commercial efficiency, and develop sales skills. It captures customer interactions, builds an augmented conversational database from them, and deploys AI solutions across the organization. Its AI capabilities support productivity, sales execution, and coaching, freeing teams to focus on selling and to manage their pipelines efficiently. Features include AI call scoring, automatic CRM filling, call reviews, email follow-ups, and an insights library.

Salesmate

Salesmate is a modern CRM software designed for teams to market, sell, and service from one platform. It offers advanced automation features to streamline processes, engage customers across all channels, generate leads, and make better decisions with rich data and insights. Salesmate is highly customizable, user-friendly, and suitable for various industries and roles. With time-saving tools, automations, and AI capabilities, Salesmate aims to improve user experience and boost business growth.

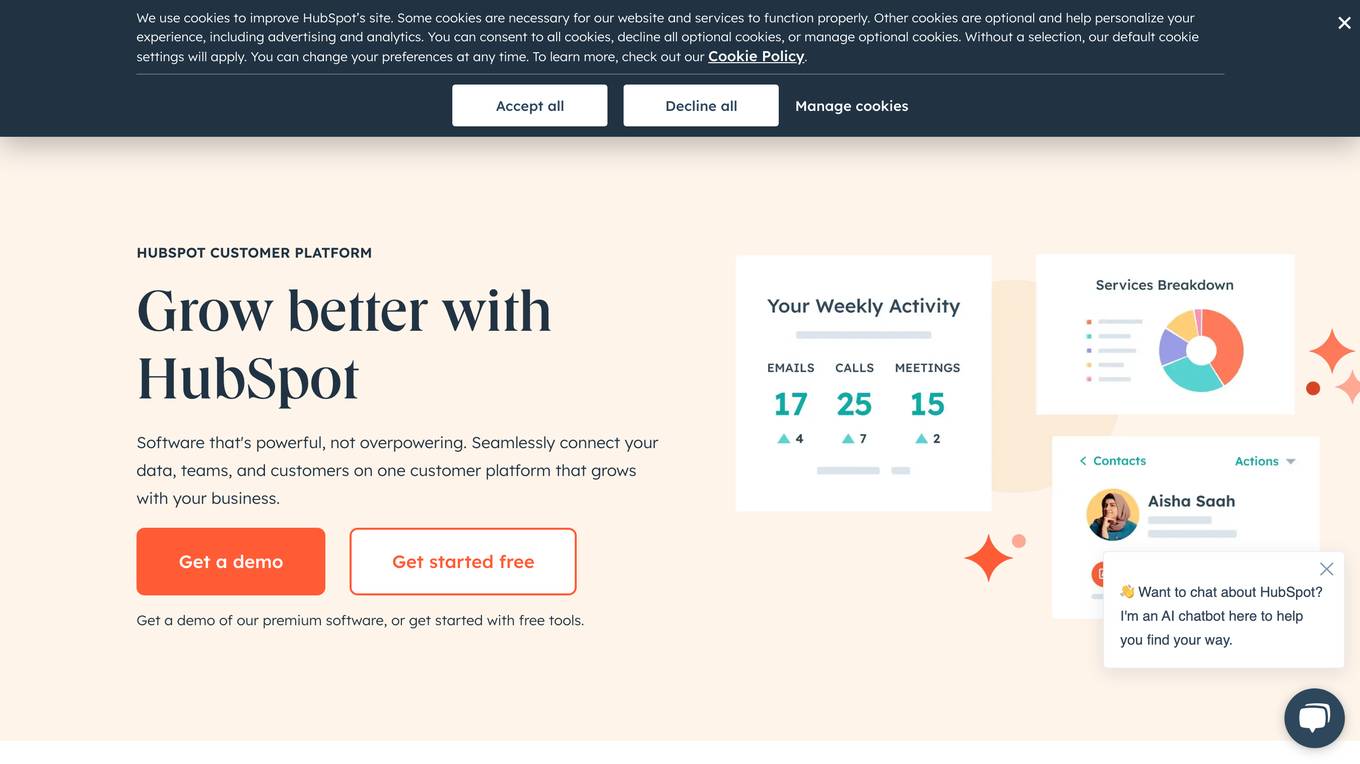

HubSpot

HubSpot is a customer relationship management (CRM) platform that provides software and tools for marketing, sales, customer service, content management, and operations. It is designed to help businesses grow by connecting their data, teams, and customers on one platform. HubSpot's AI tools are used to automate tasks, personalize marketing campaigns, and provide insights into customer behavior.

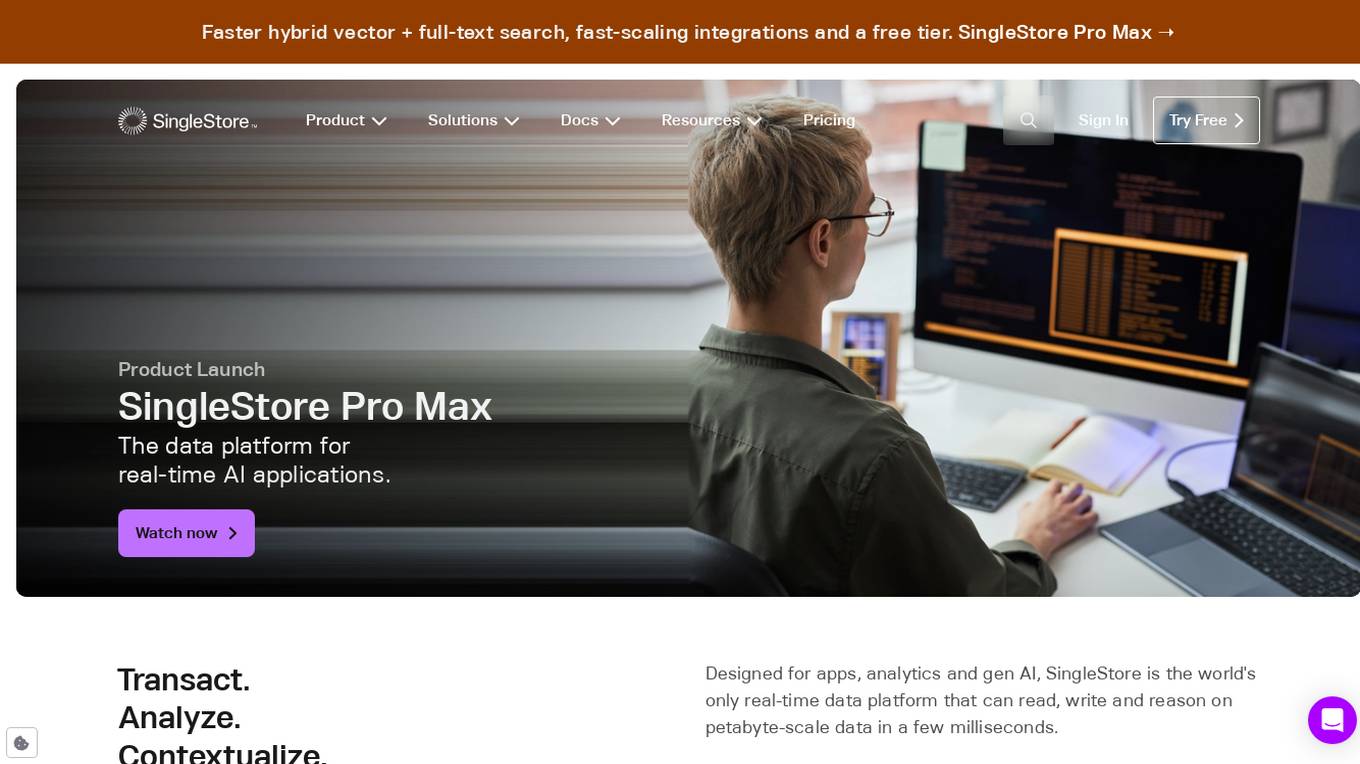

SingleStore

SingleStore is a real-time data platform designed for applications, analytics, and generative AI. It offers hybrid vector and full-text search, fast-scaling integrations, and a free tier. According to the vendor, SingleStore can read, write, and reason on petabyte-scale data in milliseconds. It supports streaming ingestion, high concurrency, first-class vector support, record lookups, and more.

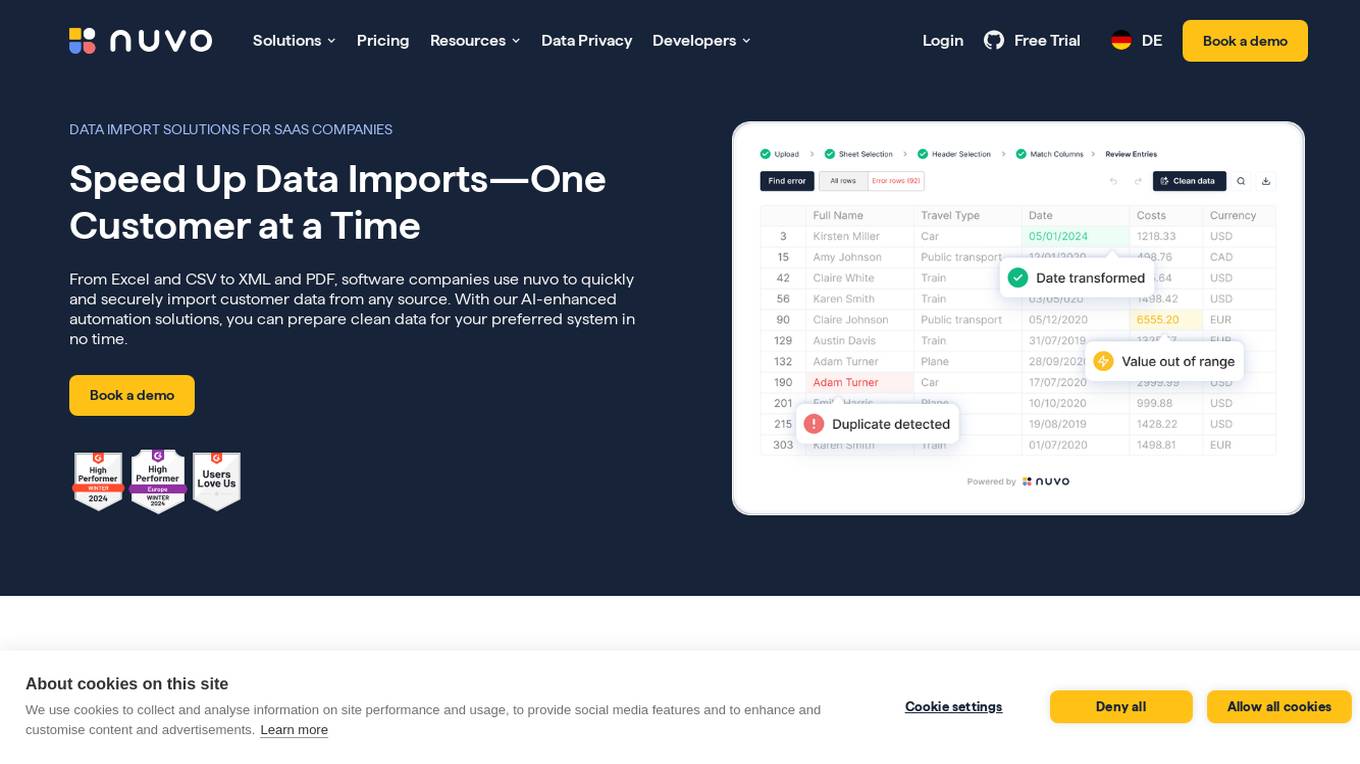

nuvo

nuvo is an AI-powered data import solution that gives software companies fast, secure, and scalable data imports. Its tools, the nuvo Data Importer SDK and nuvo Data Pipeline, streamline both manual and recurring ETL data imports and let users manage imports independently. With AI-enhanced automation, nuvo quickly prepares clean data for the user's target systems, reducing manual effort and improving data quality. Users can upload unlimited data in various formats, match it to their system schemas, clean and validate it, and import the result into target systems with a single click.

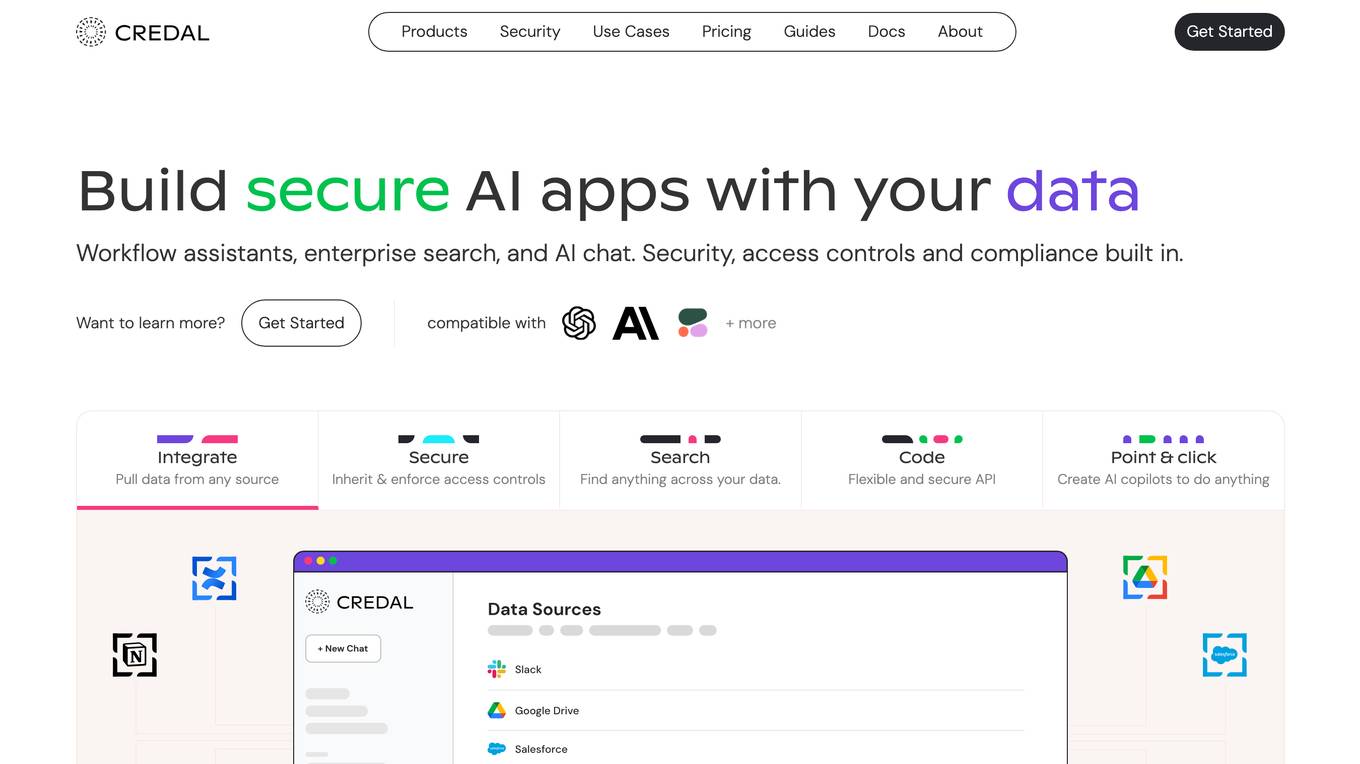

Credal

Credal is an AI tool for building secure AI assistants for enterprise operations. It lets every employee create customized AI assistants with built-in security, permissions, and compliance features. Credal supports data integration, access control, search, and API development. The platform offers real-time sync, automatic permission synchronization, and AI model deployment with security and compliance controls. It also helps enterprises manage ETL pipelines, schedule tasks, and configure data processing. Credal provides data protection, support for compliance with regulations such as HIPAA, and comprehensive audit capabilities for generative AI applications.

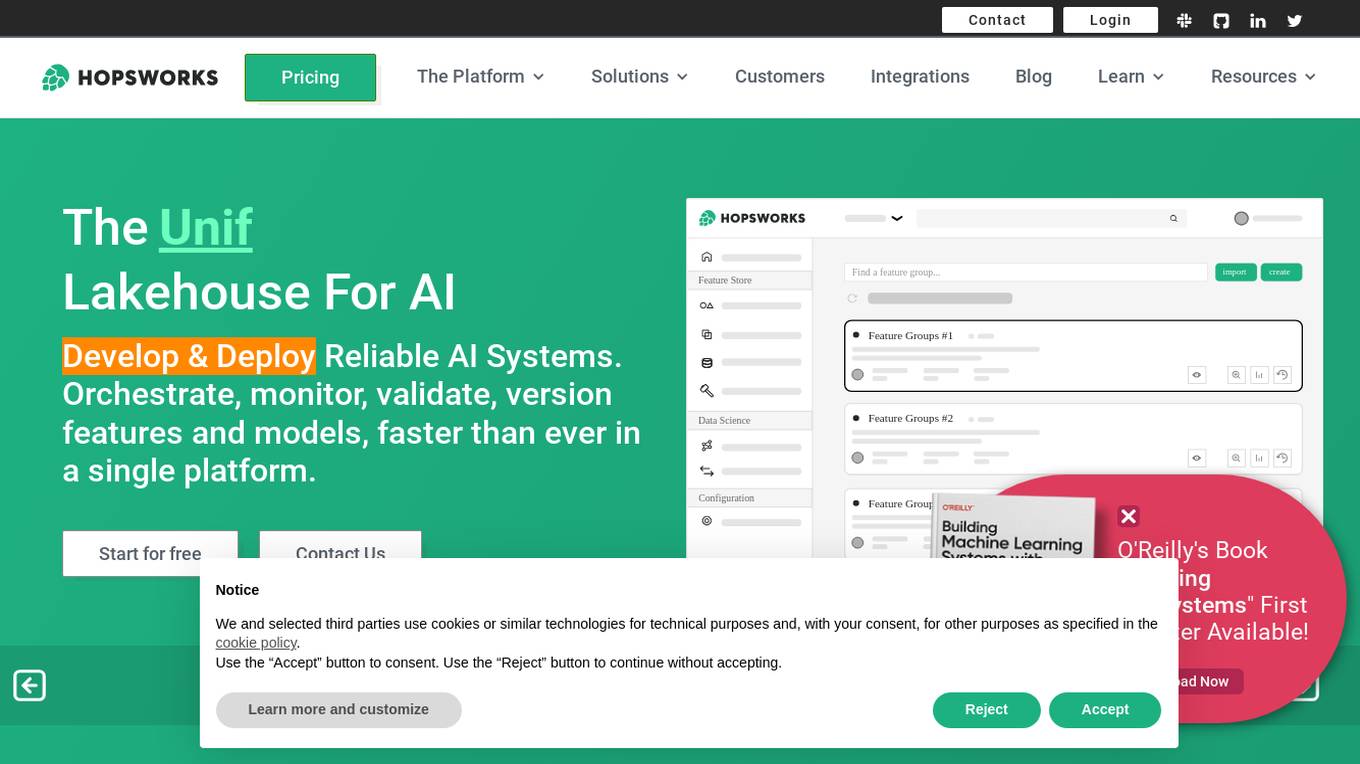

Hopsworks

Hopsworks is an AI platform that offers a comprehensive solution for building, deploying, and monitoring machine learning systems. It provides features such as a Feature Store, real-time ML capabilities, and generative AI solutions. Hopsworks enables users to develop and deploy reliable AI systems, orchestrate and monitor models, and personalize machine learning models with private data. The platform supports batch and real-time ML tasks, with the flexibility to deploy on-premises or in the cloud.

FrogHire.ai

FrogHire.ai is an AI-powered job-seeking toolkit designed to help users search, research, improve, and manage their job applications effectively. It offers features such as Profile Match Rate, Keywords Highlighter, Strategy Job Manager, Interview Hub, Resume Builder, H1B Job List, and Career Outcome Navigator. FrogHire.ai empowers international students and job seekers by providing insights, customized search strategies, and tracking tools to enhance their job search experience.

Astera Software

Astera Software offers enterprise-ready data management solutions, including data integration, unstructured data management, data warehousing, and EDI Connect. The platform provides automated data processing, data governance, and AI capabilities to transform data into powerful insights, enabling smarter decisions and innovation. Astera simplifies data management with features like data pipeline builder, data warehouse automation, and EDI transaction optimization. Trusted by leading enterprises worldwide, Astera boosts operational efficiency, accelerates time to market, ensures data accuracy, and reduces operational costs through AI-powered data management.

DVC

DVC (Data Version Control) is an open-source version control system for machine learning projects. Working alongside Git, it lets users track and manage their data, models, and code in one place. DVC also provides features that make collaboration on machine learning projects easier, such as experiment tracking, a model registry, and pipeline management.

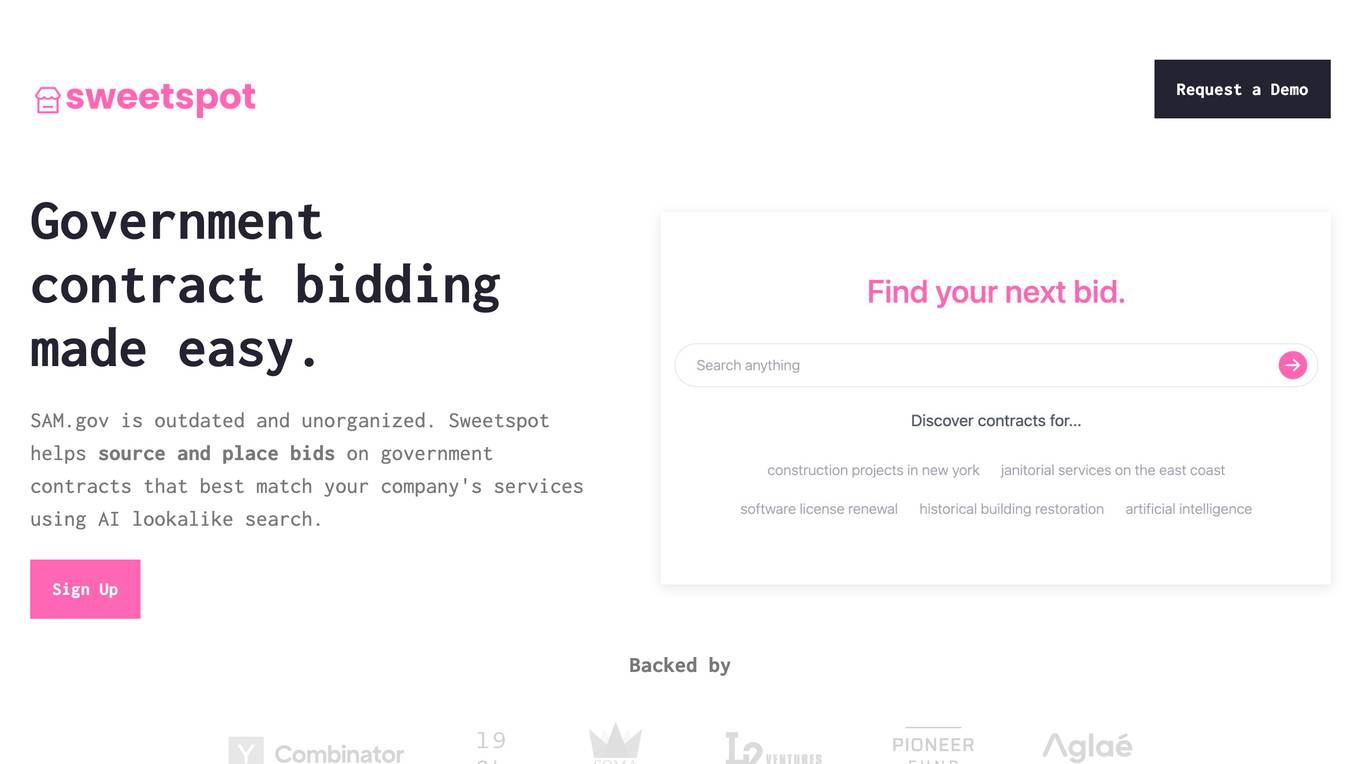

Sweetspot

Sweetspot is an AI-powered platform for government contracting that offers a complete solution for finding, managing, and bidding on federal, state, local, and education government opportunities. It uses AI to streamline the capture process, from search and business intelligence to proposal writing, giving public sector teams an all-in-one solution.

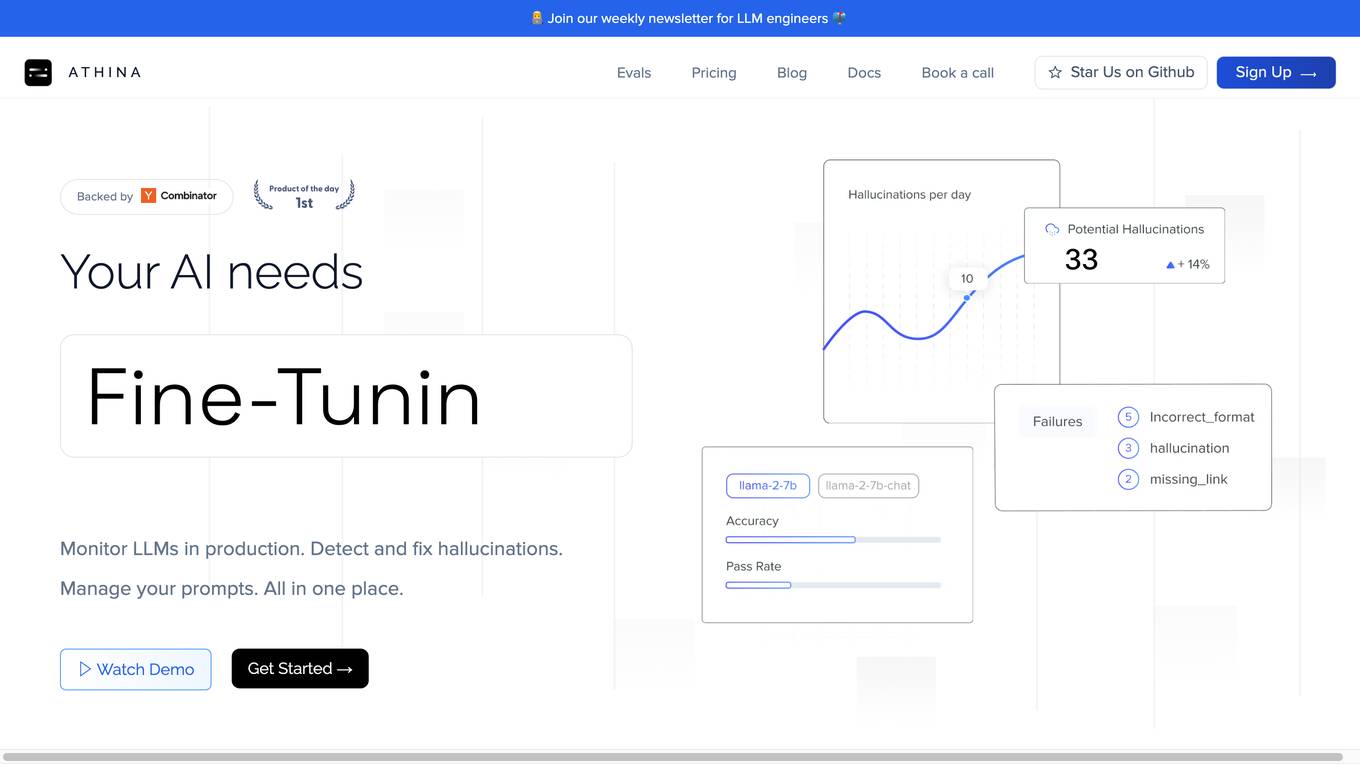

Athina AI

Athina AI is a platform for monitoring, debugging, analyzing, and improving the performance of large language models (LLMs) in production. Its tools let users detect and fix hallucinations, evaluate output quality, analyze usage patterns, and manage prompts. Athina AI integrates with various LLMs and offers a range of evaluation metrics, including context relevancy, harmfulness, summarization accuracy, and custom evaluations. It also offers a self-hosted deployment for complete privacy and control, a GraphQL API for programmatic access to logs and evaluations, and support for multiple users and teams. Athina AI's stated mission is to help organizations get the most out of LLMs by ensuring their reliability, accuracy, and alignment with business objectives.

Baseten

Baseten is a machine learning infrastructure platform that gives data scientists and engineers a unified place to build, train, and deploy machine learning models. It offers features that simplify the ML lifecycle, including data preparation, model training, and deployment. Baseten also provides a marketplace of pre-built models and components that can speed up the development of ML applications.

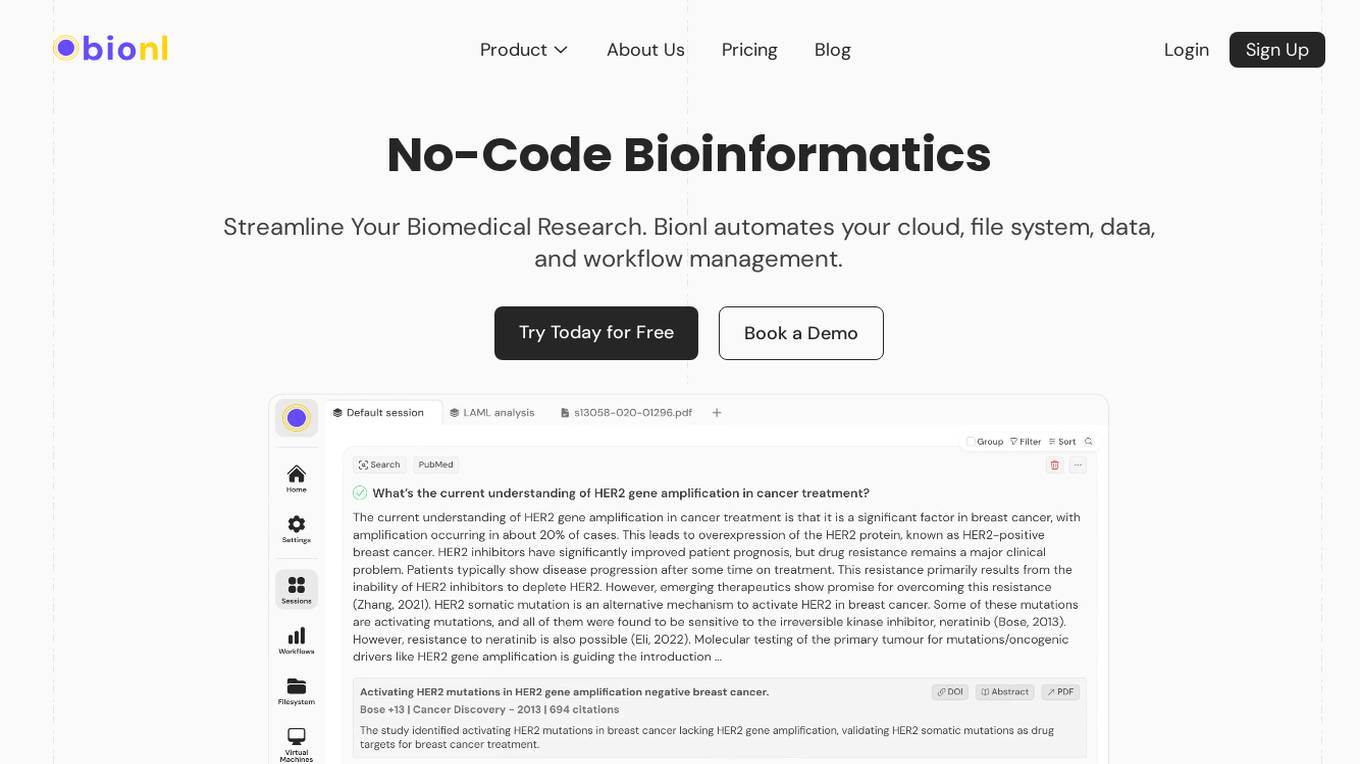

Bionl

Bionl is a no-code bioinformatics platform designed to streamline biomedical research for researchers and scientists. It offers a full workspace with customizable bioinformatics pipelines, generative AI for data analysis, AI-powered literature search, PDF analysis, and access to public datasets. Bionl aims to automate cloud, file system, data, and workflow management for efficient and precise analyses. The platform serves pharma and biotech companies, academic researchers, and bioinformatics CROs, providing tools for genetic analysis and speeding up research.

Microtica

Microtica is an AI-powered cloud delivery platform that offers a suite of DevOps tools to help users build, deploy, and optimize their infrastructure efficiently. With features such as an AI Incident Investigator, an AI Infrastructure Builder, simplified Kubernetes deployment, alert monitoring, pipeline automation, and cloud monitoring, Microtica aims to streamline development and management for DevOps teams. The platform provides real-time insights, cost optimization suggestions, and guided deployments for businesses looking to improve their cloud infrastructure operations.

Evolphin

Evolphin is an AI-powered platform for Digital Asset Management (DAM) and Media Asset Management (MAM) aimed at creatives, sports professionals, marketers, and IT teams. It offers AI-powered fast search, robust version control, and Adobe plugins. Evolphin's AI automation streamlines video workflows: it identifies objects, faces, logos, and scenes in media, generates speech-to-text transcripts for search and closed captioning, and triggers automations based on what the AI engine identifies. The platform also supports AI-assisted video editing, creating rough cuts instantly. Evolphin's cloud solutions support remote media production pipelines, aiming for speed, security, and simplicity in managing creative assets.

StreamDeploy

StreamDeploy is an AI-powered cloud deployment platform designed to streamline and secure application deployment for agile teams. It offers a range of features to help developers maximize productivity and minimize costs, including a Dockerfile generator, automated security checks, and support for continuous integration and delivery (CI/CD) pipelines. StreamDeploy is currently in closed beta, but interested users can book a demo or follow the company on Twitter for updates.

Oorwin

Oorwin is an AI recruitment and talent management platform used by over 1,000 customers globally. It offers advanced data security and modules for Applicant Tracking System, Customer Relationship Management, Human Resource Management, Candidate Relationship Management, Talent Recruitment, and Marketplace Apps. Oorwin uses AI to streamline job posting, screening, interview scheduling, client interactions, sales pipelines, employee onboarding, talent nurturing, and recruitment. It also provides generative AI capabilities for auto-generating emails, job descriptions, and personalized outreach. Oorwin is designed to simplify and accelerate the talent lifecycle, reduce manual work, and boost recruiter productivity for modern hiring teams.

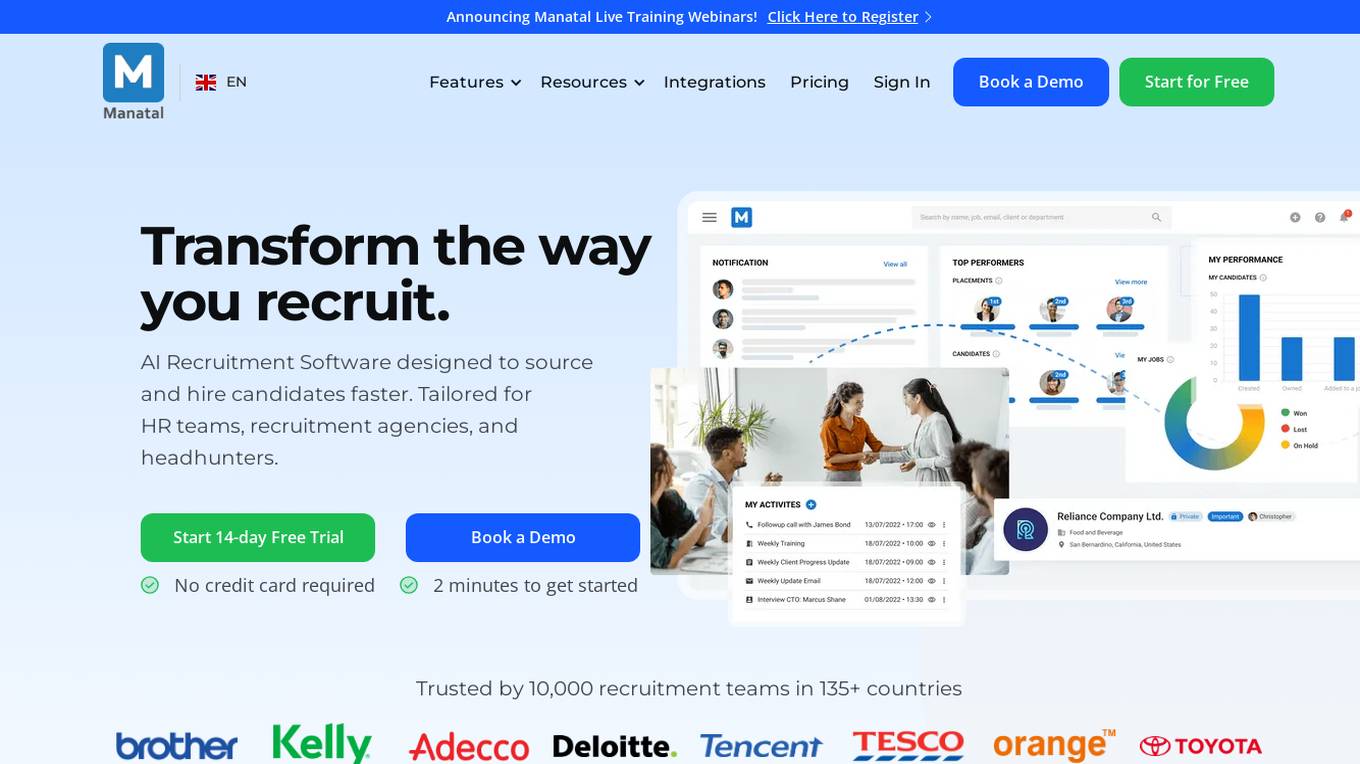

Manatal

Manatal is AI recruitment software designed to streamline hiring for HR teams, recruitment agencies, and headhunters. Its features include candidate sourcing from various channels, an applicant tracking system, a recruitment CRM, candidate profile enrichment, AI-powered recommendations, collaboration tools, reports and analytics, branded career pages, support and assistance, data privacy compliance, and more. The platform also provides customizable pipelines, mobile app access, and onboarding and placement management. Manatal aims to transform recruitment by using AI to source and hire candidates faster and more efficiently.

Tecton

Tecton is an AI data platform that helps build smarter AI applications by simplifying feature engineering, generating training data, serving real-time data, and enhancing AI models with context-rich prompts. It automates data pipelines, improves model accuracy, and lowers production costs, enabling faster deployment of AI models. Tecton abstracts away data complexity, provides a developer-friendly experience, and allows users to create features from any source. Trusted by top engineering teams, Tecton streamlines ML delivery processes, improves customer interactions, and automates release processes through CI/CD pipelines.

1 - Open Source AI Tools

hayhooks

Hayhooks is a tool that simplifies the deployment and serving of Haystack pipelines as REST APIs. It allows users to wrap their pipelines with custom logic and expose them via HTTP endpoints, including OpenAI-compatible chat completion endpoints. With Hayhooks, users can easily convert their Haystack pipelines into API services with minimal boilerplate code.

20 - OpenAI GPTs

HubSpot Harry

Your go-to expert for all things HubSpot, from basic tool use to advanced API coding.

AI Workload Optimizer

You've heard that AI can save you time, but you don't know how? Tell me what you do in a typical workweek, and I'll tell you how!

DataKitchen DataOps and Data Observability GPT

A specialist in DataOps and Data Observability, aiding in data management and monitoring.

Pipedrive Pro

Pipedrive CRM expert that helps novice users with how-to guides and answers.

Data Engineer Consultant

Guides users through data engineering tasks, with a focus on practical solutions.

Triage Management and Pipeline Architecture

Strategic advisor for triage management and pipeline optimization in business operations.

FODMAPs Dietician

Dietician that helps those with IBS manage their symptoms via FODMAPs. FODMAP stands for fermentable oligosaccharides, disaccharides, monosaccharides and polyols. These are the chemical names of 5 naturally occurring sugars that are not well absorbed by your small intestine.

Cognitive Behavioral Coach

Provides cognitive-behavioral and emotional therapy guidance, helping users understand and manage their thoughts, behaviors, and emotions.

1ACulma - Management Coach

Cross-cultural management. Useful for those who relocate to another country or manage cross-cultural teams.