Best AI tools for Manage Model Cache

20 - AI tool Sites

Ultra AI

Ultra AI is an all-in-one AI command center for products, offering features such as multi-provider AI gateway, prompts manager, semantic caching, logs & analytics, model fallbacks, and rate limiting. It is designed to help users efficiently manage and utilize AI capabilities in their products. The platform is privacy-focused, fast, and provides quick support, making it a valuable tool for businesses looking to leverage AI technology.

Eden AI

Eden AI is a platform that provides access to over 100 AI models through a unified API gateway. Users can easily integrate and manage multiple AI models from a single API, with control over cost, latency, and quality. The platform offers ready-to-use AI APIs, custom chatbot, image generation, speech to text, text to speech, OCR, prompt optimization, AI model comparison, cost monitoring, API monitoring, batch processing, caching, multi-API key management, and more. Additionally, professional services are available for custom AI projects tailored to specific business needs.

Sapling

Sapling is a language model copilot and API for businesses. It provides real-time suggestions to help sales, support, and success teams more efficiently compose personalized responses. Sapling also offers a variety of features to help businesses improve their customer service, including:

* Autocomplete Everywhere: Provides deep learning-powered autocomplete suggestions across all messaging platforms, allowing agents to compose replies more quickly.
* Sapling Suggest: Retrieves relevant responses from a team response bank and allows agents to respond more quickly to customer inquiries by simply clicking on suggested responses in real time.
* Snippet macros: Allow for quick insertion of common responses.
* Grammar and language quality improvements: Sapling catches 60% more language quality issues than other spelling and grammar checkers using a machine learning system trained on millions of English sentences.
* Enterprise teams can define custom settings for compliance and content governance.
* Distribute knowledge: Ensure team knowledge is shared in a snippet library accessible on all your web applications.
* Perform blazing fast search on your knowledge library for compliance, upselling, training, and onboarding.

Portkey

Portkey is a control panel for production AI applications that offers an AI Gateway, Prompts, Guardrails, and Observability Suite. It enables teams to ship reliable, cost-efficient, and fast apps by providing tools for prompt engineering, enforcing reliable LLM behavior, integrating with major agent frameworks, and building AI agents with access to real-world tools. Portkey also offers seamless AI integrations for smarter decisions, with features like managed hosting, smart caching, and edge compute layers to optimize app performance.

LiteLLM

LiteLLM is a platform that simplifies model access, spend tracking, and fallbacks across 100+ LLMs. It provides a gateway to manage model access and offers features like logging, budget tracking, pass-through endpoints, and self-serve key management. LiteLLM is open-source and compatible with the OpenAI format, allowing users to access various LLMs seamlessly.

BCT Digital

BCT Digital is an AI-powered risk management suite provider that offers a range of products to help enterprises optimize their core Governance, Risk, and Compliance (GRC) processes. The rt360 suite leverages next-generation technologies, sophisticated AI/ML models, data-driven algorithms, and predictive analytics to assist organizations in managing various risks effectively. BCT Digital's solutions cater to the financial sector, providing tools for credit risk monitoring, early warning systems, model risk management, environmental, social, and governance (ESG) risk assessment, and more.

ValidMind AI Governance Platform

ValidMind AI Governance Platform is an enterprise AI governance platform designed to accelerate AI innovation by providing oversight, adoption, and measurable ROI. It centralizes oversight for responsible AI, unifies risk management for AI and models, and automates documentation, testing, and workflows. ValidMind is purpose-built for regulated industries like banking and insurance, offering a platform to scale AI confidently and meet regulatory expectations.

Arthur

Arthur is an industry-leading MLOps platform that simplifies deployment, monitoring, and management of traditional and generative AI models. It ensures scalability, security, compliance, and efficient enterprise use. Arthur's turnkey solutions enable companies to integrate the latest generative AI technologies into their operations, making informed, data-driven decisions. The platform offers open-source evaluation products, model-agnostic monitoring, deployment with leading data science tools, and model risk management capabilities. It emphasizes collaboration, security, and compliance with industry standards.

Weights & Biases

Weights & Biases is an AI tool that offers documentation, guides, tutorials, and support for using AI models in applications. The platform provides two main products: W&B Weave for integrating AI models into code and W&B Models for building custom AI models. Users can access features such as tracing, output evaluation, cost estimates, hyperparameter sweeps, model registry, and more. Weights & Biases aims to simplify the process of working with AI models and improving model reproducibility.

Role Model AI

Role Model AI is a revolutionary multi-dimensional assistant that combines practicality and innovation. It offers four dynamic interfaces for seamless interaction: phone calls for on-the-go assistance, an interactive agent dashboard for detailed task management, lifelike 3D avatars for immersive communication, and an engaging Fortnite world integration for a gaming-inspired experience. Role Model AI adapts to your lifestyle, blending seamlessly into your personal and professional worlds, providing unparalleled convenience and a unique, versatile solution for managing tasks and interactions.

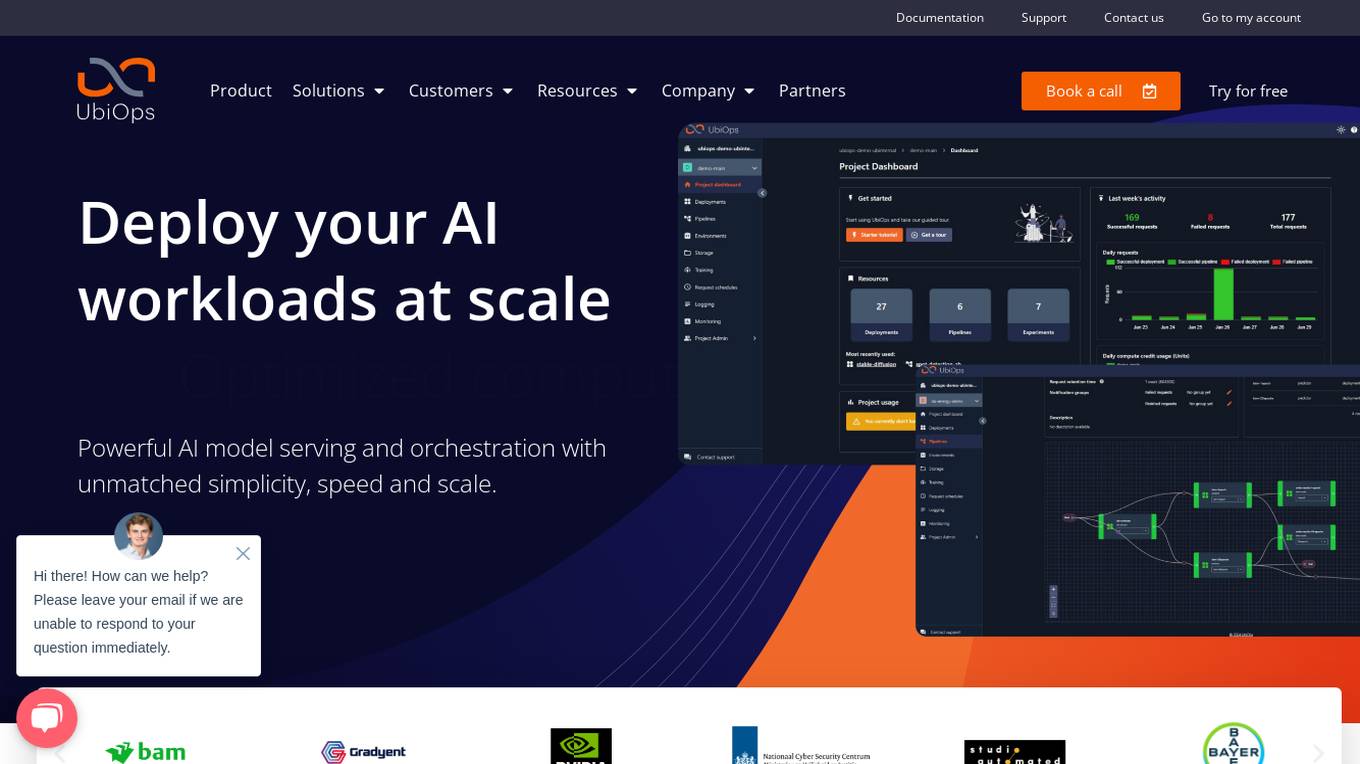

UbiOps

UbiOps is an AI infrastructure platform that helps teams quickly run their AI & ML workloads as reliable and secure microservices. It offers powerful AI model serving and orchestration with unmatched simplicity, speed, and scale. UbiOps allows users to deploy models and functions in minutes, manage AI workloads from a single control plane, integrate easily with tools like PyTorch and TensorFlow, and ensure security and compliance by design. The platform supports hybrid and multi-cloud workload orchestration, rapid adaptive scaling, and modular applications with a unique workflow management system.

Wallaroo.AI

Wallaroo.AI is an AI inference platform that offers production-grade AI inference microservices optimized on OpenVINO for cloud and Edge AI application deployments on CPUs and GPUs. It provides hassle-free AI inferencing for any model, any hardware, anywhere, with ultrafast turnkey inference microservices. The platform enables users to deploy, manage, observe, and scale AI models effortlessly, reducing deployment costs and time-to-value significantly.

Glambase

Glambase is an AI Influencer Creation Platform that allows users to create personalized AI influencers with advanced customization options. Users can build virtual personas, interact with AI characters, and monetize their AI influencers. The platform offers a wide range of AI influencer models with diverse personalities and characteristics, catering to various preferences and needs of users. Glambase revolutionizes the way individuals engage with AI relationships and social media, providing a seamless and efficient solution for content creation and audience engagement.

OneDollarAI.lol

OneDollarAI.lol is an AI application that offers access to an AI language model for one dollar a month. It features Llama 3, which the site describes as the fastest and most powerful language model. Users get unlimited usage for $1 per month. The application provides instant responses and requires no setup. It is designed to be user-friendly and accessible to all, making it a convenient tool for various language-related tasks.

Aya Data

Aya Data is an AI tool that offers services such as data annotation, computer vision, natural language annotation, 3D annotation, AI data acquisition, and AI consulting. They provide cutting-edge tools to transform raw data into training datasets for AI models, deliver bespoke AI solutions for various industries, and offer AI-powered products like AyaGrow for crop management and AyaSpeech for speech-to-speech translation. Aya Data focuses on exceptional accuracy, rapid development cycles, and high performance in real-world scenarios.

Azoo

Azoo is an AI-powered platform that offers a wide range of services in various categories such as logistics, animal, consumer commerce, real estate, law, and finance. It provides tools for data analysis, event management, and guides for users. The platform is designed to streamline processes, enhance decision-making, and improve efficiency in different industries. Azoo is developed by Cubig Corp., a company based in Seoul, South Korea, and aims to revolutionize the way businesses operate through innovative AI solutions.

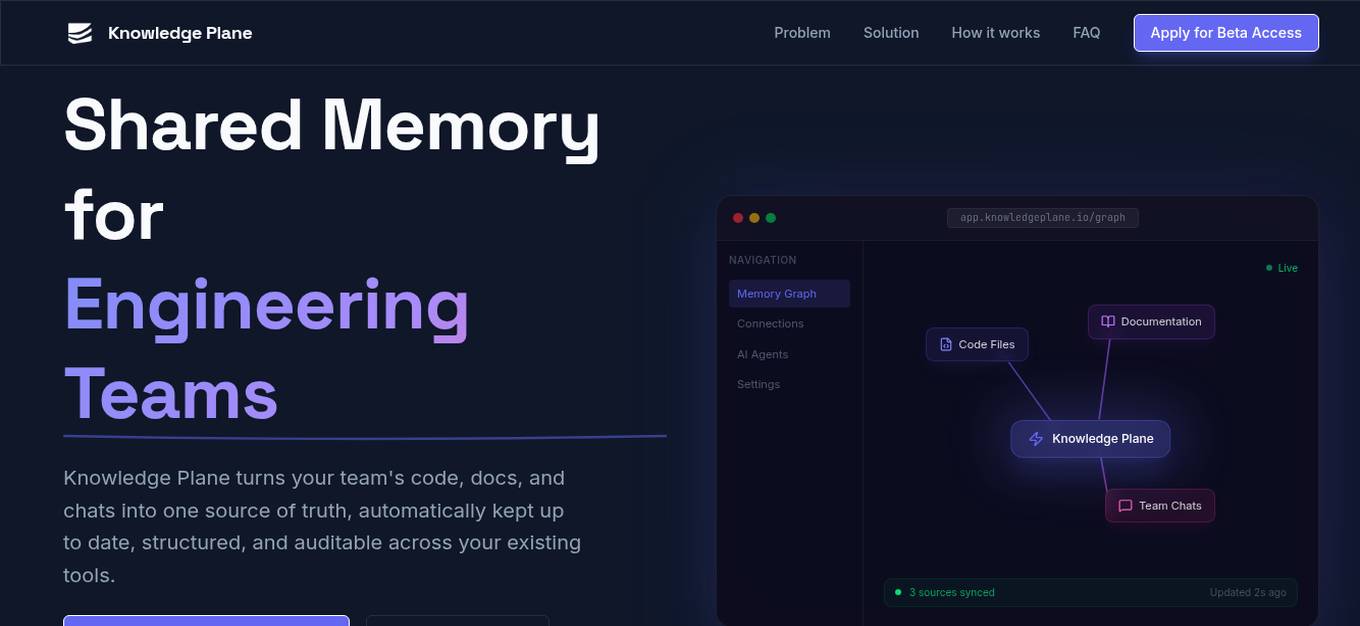

Knowledge Plane

Knowledge Plane is a shared memory platform designed for AI agents and engineering teams. It automatically consolidates code, documentation, and conversations into a single source of truth, ensuring up-to-date, structured, and auditable information across existing tools. The platform helps teams overcome the challenge of outdated context in AI tools, enabling seamless collaboration and decision-making.

Not Diamond

Not Diamond is an AI-powered chatbot application designed to provide users with a seamless and efficient conversational experience. It serves as a virtual assistant capable of handling a wide range of tasks and inquiries. With its advanced natural language processing capabilities, Not Diamond aims to revolutionize the way users interact with technology by offering personalized and intelligent responses in real-time. Whether you need assistance with information retrieval, task management, or simply engaging in casual conversation, Not Diamond is the ultimate chatbot companion.

Encord

Encord is a leading data development platform designed for computer vision and multimodal AI teams. It offers a comprehensive suite of tools to manage, clean, and curate data, streamline labeling and workflow management, and evaluate AI model performance. With features like data indexing, annotation, and active model evaluation, Encord empowers users to accelerate their AI data workflows and build robust models efficiently.

Zesh AI

Zesh AI is an advanced AI-powered ecosystem that offers a range of innovative tools and solutions for Web3 projects, community managers, data analysts, and decision-makers. It leverages AI Agents and LLMs to redefine KOL analysis, community engagement, and campaign optimization. With features like InfluenceAI for KOL discovery, EngageAI for campaign management, IDAI for fraud detection, AnalyticsAI for data analysis, and Wallet & NFT Profile for community empowerment, Zesh AI provides cutting-edge solutions for various aspects of Web3 ecosystems.

2 - Open Source AI Tools

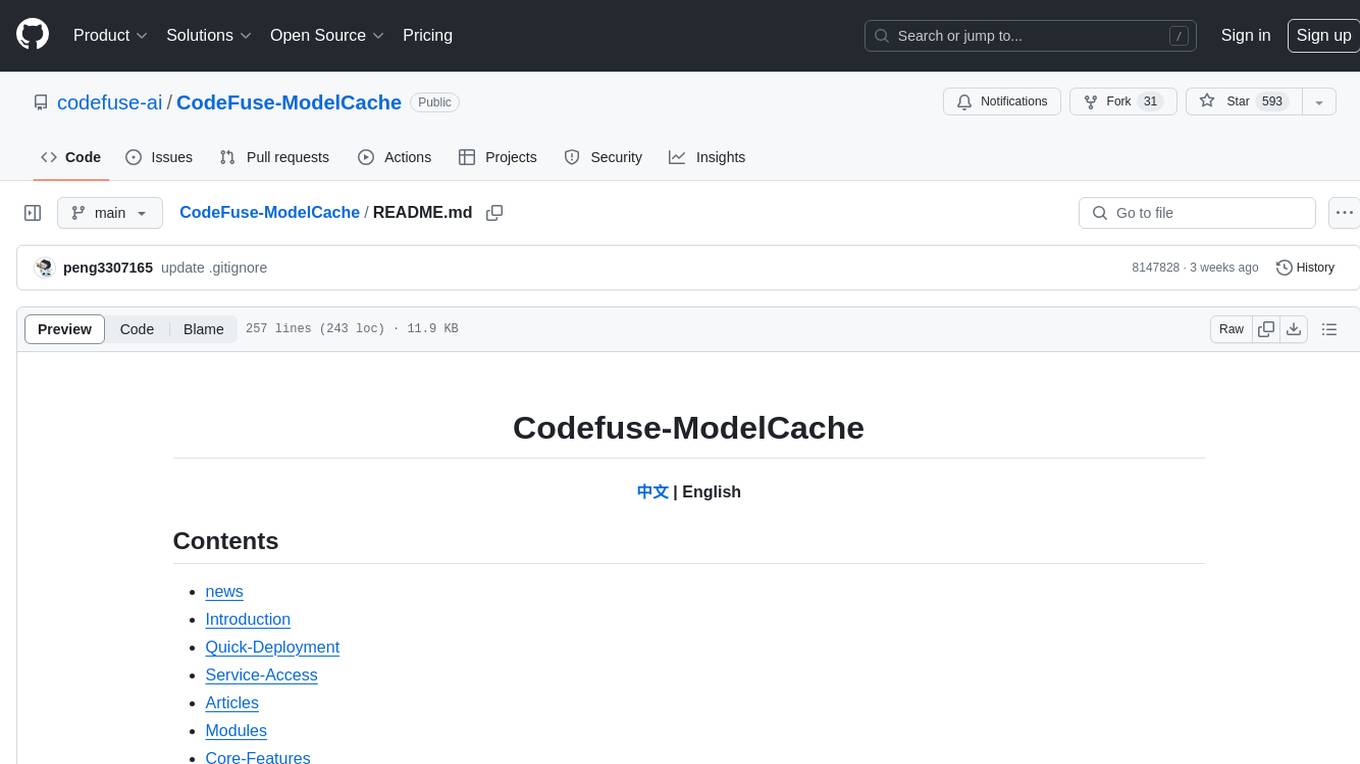

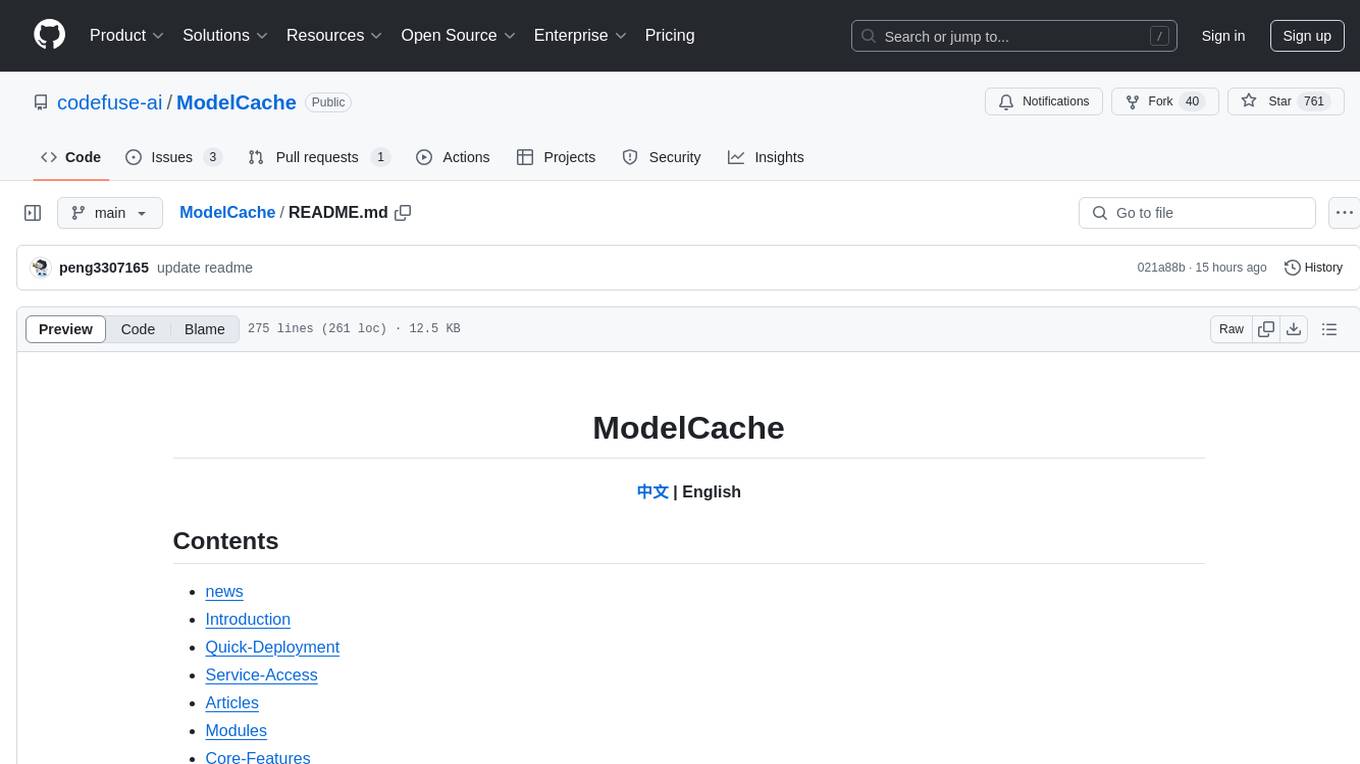

CodeFuse-ModelCache

CodeFuse-ModelCache is a semantic cache for large language models (LLMs) that aims to optimize services by introducing a caching mechanism. It helps reduce the cost of inference deployment, improve model performance and efficiency, and provide scalable services for large models. The project caches pre-generated model results to reduce response time for similar requests and enhance user experience. It integrates various embedding frameworks and local storage options, offering functionalities like cache-writing, cache-querying, and cache-clearing through a RESTful API. The tool supports multi-tenancy, system commands, and multi-turn dialogue, with features for data isolation, database management, and model loading schemes. Future developments include data isolation based on hyperparameters, enhanced system prompt partitioning storage, and more versatile embedding models and similarity evaluation algorithms.
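As a rough illustration of the semantic-cache idea described above, here is a minimal in-memory sketch in Python. It is not the project's code or API: the `SemanticCache` class, its `write`/`query`/`clear` methods, the `threshold` parameter, and the toy bag-of-words `embed` function are all hypothetical stand-ins. A real deployment would presumably use a proper embedding model and a vector store.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding (assumption): bag-of-words counts instead of a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Hypothetical cache that matches queries by similarity rather than exact text."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold  # minimum similarity that counts as a hit
        self.entries = []           # list of (embedding, cached answer)

    def write(self, query, answer):
        """Cache-writing: store the answer under the query's embedding."""
        self.entries.append((embed(query), answer))

    def query(self, query):
        """Cache-querying: return the answer of the most similar stored query, or None on a miss."""
        vec = embed(query)
        best_score, best_answer = 0.0, None
        for stored_vec, answer in self.entries:
            score = cosine(vec, stored_vec)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None

    def clear(self):
        """Cache-clearing: drop every stored entry."""
        self.entries.clear()
```

On a miss, the caller would send the request to the LLM and then call `write` with the result, so later requests that are similar enough can skip inference.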

ModelCache

CodeFuse-ModelCache is a semantic cache for large language models (LLMs) that aims to optimize services by introducing a caching mechanism. It helps reduce the cost of inference deployment, improve model performance and efficiency, and provide scalable services for large models. The project facilitates sharing and exchanging technologies related to large model semantic cache through open-source collaboration.

20 - OpenAI Gpts

Chat with GPT 4o ("Omni") Assistant

Try the new AI chat model: GPT 4o ("Omni") Assistant. It's faster and better than regular GPT. Plus, it will incorporate speech-to-text, intelligence, and text-to-speech capabilities with extra-low latency.

HVAC Apex

Benchmark HVAC GPT model with unmatched expertise and forward-thinking solutions, powered by OpenAI.

Fashion Designer: Runway Showdown

Step into the world of high fashion. As a runway designer, you create unique collections, organize dazzling fashion shows, and stay ahead of the latest trends. It blends elements of design, marketing, and business management in the glamorous fashion industry.

Instructor GCP ML

Trainer for the GCP ML Engineer certification, with detailed answers and explanations.

Seabiscuit Business Model Master

Discover A More Robust Business: Craft tailored value proposition statements, develop a comprehensive business model canvas, conduct detailed PESTLE analysis, and gain strategic insights on enhancing business model elements like scalability, cost structure, and market competition strategies. (v1.18)

Create A Business Model Canvas For Your Business

Let's get started by telling me about your business: What do you offer? Who do you serve?

Need help with prompt engineering? Reach out on LinkedIn: StephenHnilica

Business Model Canvas Strategist

Business Model Canvas Creator - Build and evaluate your business model

EIA model

Generates environmental impact assessment (EIA) templates based on specific global locations and parameters.

Business Model Canvas Wizard

Help with building the Business Model Canvas for your venture.

Business Model Advisor

Business model expert that creates detailed reports based on business ideas.