Best AI Tools to Integrate with vLLM

20 - AI Tool Sites

vLLM

vLLM is a fast and easy-to-use library for LLM inference and serving. It offers state-of-the-art serving throughput, efficient management of attention key and value memory, continuous batching of incoming requests, fast model execution with CUDA/HIP graphs, and various decoding algorithms. It integrates seamlessly with popular Hugging Face models and supports tensor parallelism, streaming outputs, prefix caching, and multi-LoRA on both NVIDIA and AMD GPUs. vLLM is designed to provide fast and efficient LLM serving for everyone.
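
To make "continuous batching of incoming requests" more concrete, here is a minimal toy simulation in plain Python. It is not vLLM's scheduler or API. The `Request` class, the `continuous_batching` function, and the `max_batch_size` parameter are hypothetical names invented for illustration, and each "step" stands in for one decoding iteration that produces one token per running request.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    """Hypothetical request: needs `tokens_needed` decode steps to finish."""
    name: str
    tokens_needed: int
    generated: int = 0


def continuous_batching(requests, max_batch_size):
    """Toy scheduler: refill free batch slots at every decode step.

    Returns a dict mapping request name to the step at which it finished.
    """
    waiting = deque(requests)
    running = []
    finish_step = {}
    step = 0
    while waiting or running:
        # Admit waiting requests as soon as a slot is free, instead of
        # waiting for the whole current batch to drain.
        while waiting and len(running) < max_batch_size:
            running.append(waiting.popleft())
        step += 1
        still_running = []
        for req in running:
            req.generated += 1  # one token per running request per step
            if req.generated >= req.tokens_needed:
                finish_step[req.name] = step
            else:
                still_running.append(req)
        running = still_running
    return finish_step


if __name__ == "__main__":
    demo = [Request("A", 3), Request("B", 1), Request("C", 2)]
    print(continuous_batching(demo, max_batch_size=2))
```

In this toy run, request C takes over B's slot as soon as B finishes, so C completes at step 3. Under a static batch of A and B, C could not start until step 4.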

Luma AI

Luma AI is an AI-powered platform that specializes in video generation using advanced models like Ray2 and Dream Machine. The platform offers director-grade control over style, character, and setting, allowing users to reshape videos with ease. Luma AI aims to build multimodal general intelligence that can generate, understand, and operate in the physical world, paving the way for creative, immersive, and interactive systems beyond traditional text-based approaches. The platform caters to creatives in various industries, offering powerful tools for worldbuilding, storytelling, and creative expression.

The Video Calling App

The Video Calling App is an AI-powered platform designed to revolutionize meeting experiences by providing laser-focused, context-aware, and outcome-driven meetings. It aims to streamline post-meeting routines, enhance collaboration, and improve overall meeting efficiency. With powerful integrations and AI features, the app captures, organizes, and distills meeting content to provide users with a clearer perspective and free headspace. It offers seamless integration with popular tools like Slack, Linear, and Google Calendar, enabling users to automate tasks, manage schedules, and enhance productivity. The app's user-friendly interface, interactive features, and advanced search capabilities make it a valuable tool for global teams and remote workers seeking to optimize their meeting experiences.

Skillate

Skillate is an AI recruitment platform that offers an advanced decision-making engine to make hiring easy, fast, and transparent. It provides solutions such as an AI-powered matching engine, chatbot screening, a resume parser, an auto interview scheduler, and more to automate and improve the recruitment process. Skillate supports intelligent hiring, a better candidate experience, people analytics, and diversity and inclusion in recruitment practices.

echowin

echowin is an AI Voice Agent Builder Platform that enables businesses to create AI agents for calls, chat, and Discord. It offers a comprehensive solution for automating customer support with features like Agentic AI logic and reasoning, support for over 30 languages, parallel call answering, and 24/7 availability. The platform allows users to build, train, test, and deploy AI agents quickly and efficiently, without compromising on capabilities or scalability. With a focus on simplicity and effectiveness, echowin empowers businesses to enhance customer interactions and streamline operations through cutting-edge AI technology.

Yonder

Yonder is an AI-powered chatbot and review platform designed specifically for the tourism industry. It helps tourism businesses to increase website sales, generate more enquiries, and save staff time by providing AI chatbot services, personalized recommendations, live reviews showcase, website chat support, customer surveys, team feedback categorization, and online review management. Yonder's AI chatbot can answer up to 80% of customer questions immediately on the website and Messenger, 24/7. The platform also offers features like conversation analytics, ratings breakdown, and integration with reservation systems for automation and efficiency.

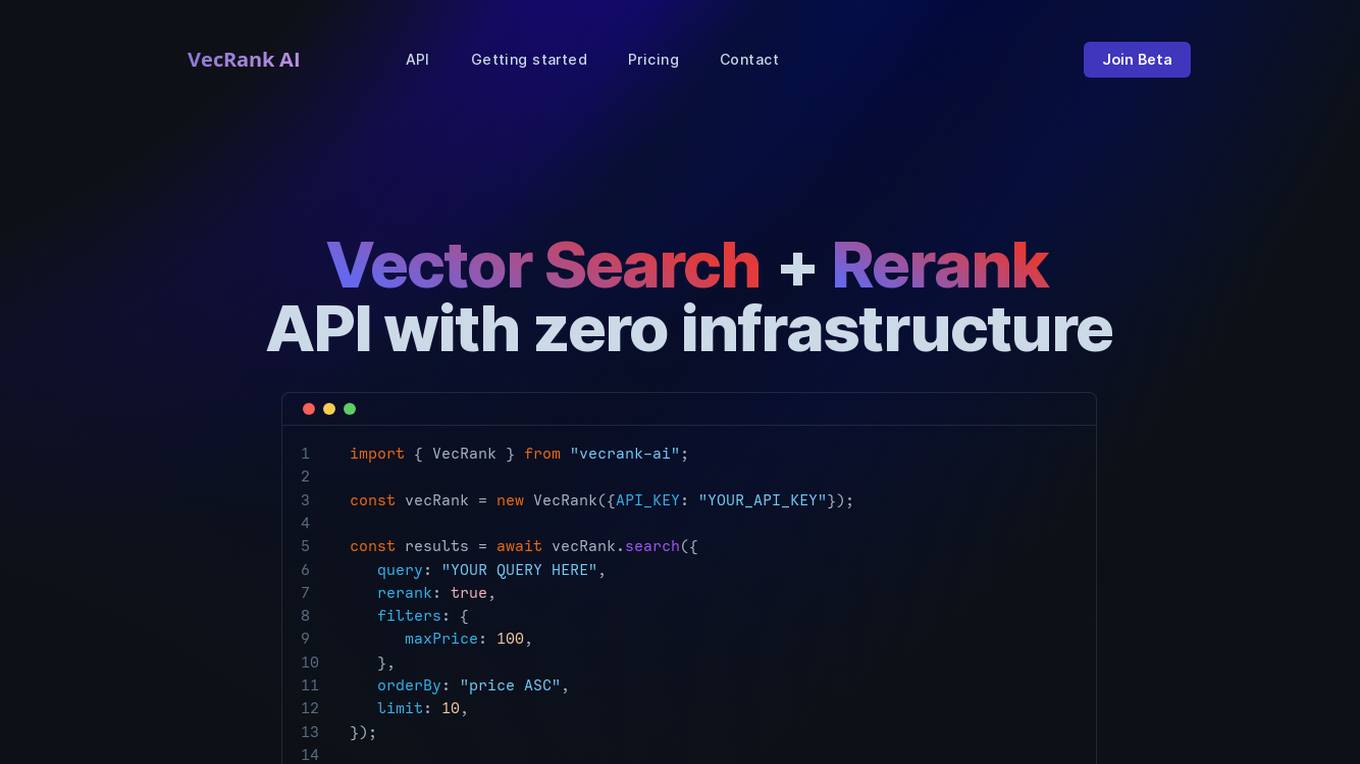

VecRank

VecRank is an AI-powered Vector Search and Reranking API service that leverages cutting-edge GenAI technologies to enhance natural language understanding and contextual relevance. It offers a scalable, AI-driven search solution for software developers and business owners. With VecRank, users can revolutionize their search capabilities with the power of AI, enabling seamless integration and powerful tools that scale with their business needs. The service allows for bulk data upload, incremental data updates, and easy integration into various programming languages and platforms, all without the hassle of setting up infrastructure for embeddings and vector search databases.

Yoodocs

Yoodocs is an AI-powered documentation service that simplifies document creation, management, and collaboration. It offers features such as document hierarchy organization, open-source documentation creation, export to various formats, workspace diversity, language management, version control, seamless migration, AI-powered editor assistant, comprehensive search, automated sync with GitLab and GitHub, self-hosted solution, collaborative development, customization styles and themes, and integrations. Yoodocs aims to enhance productivity and efficiency in projects by providing a comprehensive solution for documentation needs.

FormX.ai

FormX.ai is an AI-powered data extraction and conversion tool that automates the process of extracting data from physical documents and converting it into digital formats. It supports a wide range of document types, including invoices, receipts, purchase orders, bank statements, contracts, HR forms, shipping orders, loyalty member applications, annual reports, business certificates, personnel licenses, and more. FormX.ai's pre-configured data extraction models and effortless API integration make it easy for businesses to integrate data extraction into their existing systems and workflows. With FormX.ai, businesses can save time and money on manual data entry and improve the accuracy and efficiency of their data processing.

MedoSync

MedoSync is an AI-driven health platform that empowers users to monitor and analyze their vital and medical data, leveraging AI to provide personalized insights and recommendations for a healthier life. Users can upload lab results, digitize medical documents, use an AI symptom checker, create accounts for family members, and integrate with their healthcare system. The platform offers easy data export, accuracy in health insights, and personalized health recommendations, with a high user satisfaction rate.

Turing AI

Turing AI is a cloud-based video security system powered by artificial intelligence. It offers a range of AI-powered video surveillance products and solutions to enhance safety, security, and operations. The platform provides smart video search capabilities, real-time alerts, instant video sharing, and hardware offerings compatible with various cameras. With flexible licensing options and integration with third-party devices, Turing AI is trusted by customers across industries for its robust and innovative approach to cloud video security.

Luma AI

Luma AI is a 3D capture platform that allows users to create interactive 3D scenes from videos. With Luma AI, users can capture 3D models of people, objects, and environments, and then use those models to create interactive experiences such as virtual tours, product demonstrations, and training simulations.

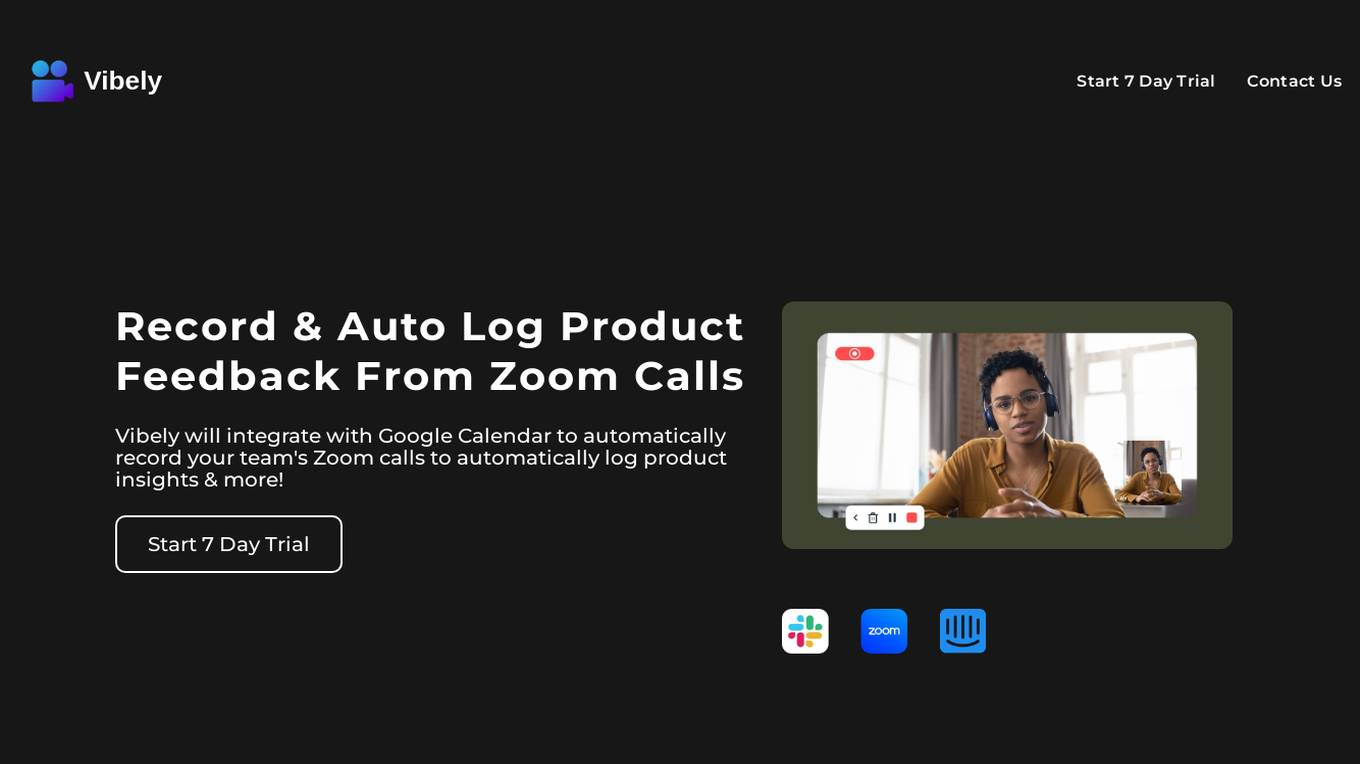

Vibely

Vibely is an AI-powered application that seamlessly integrates with Google Calendar to automatically record and log product feedback from Zoom calls. It offers features such as automatic recording of Zoom calls, summarizing team calls, detecting feature requests using AI, providing insights based on past calls, and cost-effective pricing compared to competitors. With Vibely, users can enhance collaboration, sharing, and learning from their calls effortlessly.

Distyl AI

Distyl AI is an AI application that specializes in building AI systems for the world's largest enterprises. Their purpose-built AI solutions have created a competitive edge for leaders in various industries such as Telecom, Healthcare, Manufacturing, Insurance, and Retail. Distyl delivers real, scalable AI solutions that integrate seamlessly into enterprise operations to drive significant impacts. The platform allows users to deploy and manage AI systems in weeks, seamlessly integrate with existing workflows and systems, and benefit from outcome-driven partnerships with elite AI researchers and engineers from renowned institutions.

Kapa.ai

Kapa.ai is an AI tool that transforms technical documentation and knowledge bases into a reliable, LLM-powered AI assistant. It provides precise, context-specific answers, identifies documentation gaps, and integrates effortlessly with various platforms. Trusted by leading companies, Kapa.ai is designed for organizations with technical products, offering accuracy, rapid deployment features, and enterprise-grade security.

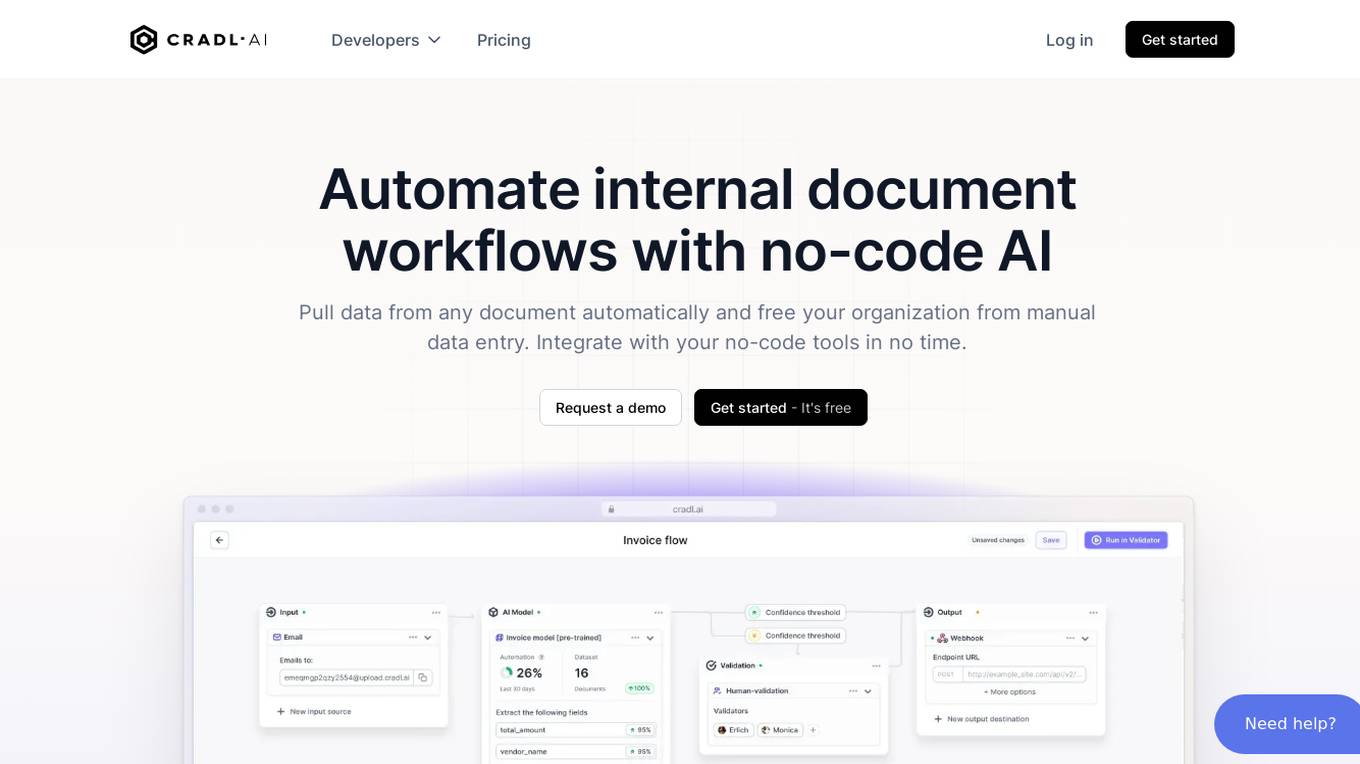

Cradl AI

Cradl AI is an AI-powered tool designed to automate document workflows with no-code AI. It enables users to extract data from any document automatically, integrate with no-code tools, and build custom AI models through an easy-to-use interface. The tool empowers automation teams across industries by extracting data from complex document layouts, regardless of language or structure. Cradl AI offers features such as line item extraction, fine-tuning AI models, human-in-the-loop validation, and seamless integration with automation tools. It is trusted by organizations for business-critical document automation, providing enterprise-level features like encrypted transmission, GDPR compliance, secure data handling, and auto-scaling.

Membit

Membit is an AI tool designed to provide real-time context for AI developer documentation. It offers a seamless experience for data hunters by enabling them to try Membit Agent and integrate with LLM/Agent for loading data efficiently. Membit enhances the workflow of developers by offering contextual insights and support during the documentation process.

DeepL Translate

DeepL is an AI-powered translation tool that offers accurate and efficient translation services across multiple languages. It provides various features such as document translation, AI-powered edits, real-time voice translation, and integration with essential productivity tools. DeepL is widely used for personal, professional, and enterprise translation needs due to its high translation quality and user-friendly interface.

Sphinx Mind

Sphinx Mind is an AI-powered marketing assistant chatbot that seamlessly integrates with various marketing platforms. It offers a range of features such as AI-powered reports, prompt scheduling, custom GPTs on ChatGPT, chat exports, prompt library, smart autocomplete, and Slack and Microsoft Teams integration. Sphinx Mind empowers marketers with one-click integrations, multi-platform interaction, unlimited possibilities, personalized experiences, tailored pricing plans, and a privacy-first approach.

Promptitude.io

Promptitude.io is a platform that allows users to integrate GPT into their apps and workflows. It provides a variety of features to help users manage their prompts, personalize their AI responses, and publish their prompts for use by others. Promptitude.io also offers a library of pre-built prompts that users can use to get started quickly.

1 - Open Source AI Tools

LMCache

LMCache is a serving engine extension designed to reduce time to first token (TTFT) and increase throughput, particularly in long-context scenarios. It stores the key-value (KV) caches of reusable texts across different locations, such as GPU memory, CPU DRAM, and local disk, so that any reused text can be served from cache in any serving engine instance. Combining LMCache with vLLM yields significant delay savings and GPU cycle reductions in many large language model (LLM) use cases, such as multi-round question answering and retrieval-augmented generation (RAG). LMCache integrates with the latest vLLM version and offers both online serving and offline inference. It supports sharing KV caches across multiple vLLM instances and aims to provide stable support for non-prefix KV caches, along with user and developer documentation.
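
The idea of keeping KV caches in several storage tiers and reusing them for repeated text can be sketched in a few lines of Python. This is a toy model under stated assumptions, not LMCache's actual API or storage layout. `TieredKVCache`, `chunk_keys`, `cached_prefix_length`, and `CHUNK_SIZE` are hypothetical names, the cached "KV" values are placeholder strings, and only prefix-style reuse is modeled.

```python
import hashlib

CHUNK_SIZE = 4  # hypothetical chunk length, chosen only for the example


def chunk_keys(tokens, chunk_size=CHUNK_SIZE):
    """Return one key per full chunk; each key also covers all earlier chunks."""
    keys = []
    running = hashlib.sha256()
    full = len(tokens) - len(tokens) % chunk_size
    for start in range(0, full, chunk_size):
        chunk = tokens[start:start + chunk_size]
        running.update((",".join(map(str, chunk)) + ";").encode())
        keys.append(running.copy().hexdigest())
    return keys


class TieredKVCache:
    """Toy KV store with tiers searched from fastest to slowest."""
    TIER_ORDER = ("gpu", "cpu", "disk")

    def __init__(self):
        self.tiers = {name: {} for name in self.TIER_ORDER}

    def put(self, key, kv, tier="gpu"):
        self.tiers[tier][key] = kv

    def get(self, key):
        """Return (tier_found, kv) or None; hits in slower tiers are copied to gpu."""
        for name in self.TIER_ORDER:
            if key in self.tiers[name]:
                kv = self.tiers[name][key]
                if name != "gpu":
                    self.tiers["gpu"][key] = kv
                return name, kv
        return None


def cached_prefix_length(cache, tokens, chunk_size=CHUNK_SIZE):
    """Count how many leading tokens already have cached KV entries."""
    hits = 0
    for key in chunk_keys(tokens, chunk_size):
        if cache.get(key) is None:
            break
        hits += 1
    return hits * chunk_size


if __name__ == "__main__":
    cache = TieredKVCache()
    document = list(range(8))
    for i, key in enumerate(chunk_keys(document)):
        cache.put(key, f"kv-chunk-{i}", tier="cpu")
    print(cached_prefix_length(cache, document + [99, 100, 101, 102]))
```

A request that starts with the same document tokens can skip recomputing the cached chunks, which is the source of the TTFT savings described above. Only the new suffix would need a fresh prefill.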

20 - OpenAI GPTs

Flashcard Maker, Research, Learn and Send to Anki

Creates educational flashcards and integrates with Anki.

Bootstrap 5 & React Crafter Copilot

Guides on Bootstrap 5 themes and templates for React, provides code snippets with step-by-step instructions. v1.1

Customer.io Liquid Helper

Specializes in Customer.io Liquid code, always outputs code (not affiliated with customer.io)

Fill PDF Forms

Fill legal forms & complex PDF documents easily! Upload a file, provide data sources and I'll handle the rest.

Apple Activity Kit Complete Code Expert

A detailed expert trained on all 1,337 pages of Apple ActivityKit, offering complete coding solutions. Saving time? https://www.buymeacoffee.com/parkerrex ☕️❤️

Liferay Guide - Via official sources of Liferay

Liferay expert, answering queries with official sources, including GitHub.

Magento Guru

Expert in Magento and Adobe Commerce, ready to share knowledge. For creation and support of Magento and Adobe Commerce stores - contact https://magenable.com.au

Selenium Sage

Expert in Selenium test automation, providing practical advice and solutions.