Best AI tools for Inference With Transformers

20 - AI Tool Sites

OddBooks

OddBooks is an AI-powered tool that transforms books into scenarios, enabling users to create various content types such as audiobooks, webtoons, animations, and movies. It simplifies the process of producing derivative works by extracting character names, emotions, spatial and sound keywords, and inferring character personalities from the text. With OddBooks, users can easily generate scripts for secondary works in a fraction of the time it would traditionally take, revolutionizing the scenario creation process.

Vidu Studio

Vidu Studio is an AI video generation platform built on a text-to-video model developed by ShengShu-AI in collaboration with Tsinghua University. It creates high-quality video from text prompts, producing a 16-second 1080p clip with a single click. The platform uses the Universal Vision Transformer (U-ViT) architecture, which combines diffusion and Transformer models to produce realistic, detailed video. Vidu Studio stands out for its ability to generate culturally specific content, particularly Chinese cultural elements such as pandas and loongs (Chinese dragons). It is an early entrant in text-to-video technology, with strong potential to influence digital media and content creation.

Spikes Studio

Spikes Studio is an AI-powered video editing tool that specializes in transforming long videos into viral clips for platforms like YouTube, Twitch, TikTok, and Reels. The platform offers advanced editing features, such as auto-captions, AI-generated B-Roll, audio enhancements, GIFs, and social media scheduling. With a focus on boosting viewer retention and engagement, Spikes Studio provides a fast and efficient solution for content creators to repurpose their videos effortlessly.

fal.ai

fal.ai is a generative media platform designed for developers to build the next generation of creativity. It offers lightning-fast inference with no compromise on quality, providing access to high-quality generative media models optimized by the fal Inference Engine™. The platform allows developers to fine-tune their own models, leverage real-time infrastructure for new user experiences, and scale to thousands of GPUs as needed. With a focus on developer experience, fal.ai aims to be the fastest AI tool for running diffusion models.

Tensordyne

Tensordyne is a generative AI inference compute tool designed and developed in the US and Germany. It focuses on re-engineering AI math and defining AI inference to run the biggest AI models for thousands of users at a fraction of the rack count, power, and cost. Tensordyne offers custom silicon and systems built on the Zeroth Scaling Law, enabling breakthroughs in AI technology.

TractoAI

TractoAI is an AI platform that offers deep learning solutions for various industries. It provides batch inference with no rate limits and DeepSeek offline inference, and it helps train open-source AI models. TractoAI simplifies training infrastructure setup, accelerates workflows with GPUs, and automates deployment and scaling for tasks like ML training and big data processing. The platform supports model fine-tuning, sandboxed code execution, and building custom AI models with a distributed training launcher. It is developer-friendly and scalable, and it offers a solution library and expert guidance for AI projects.

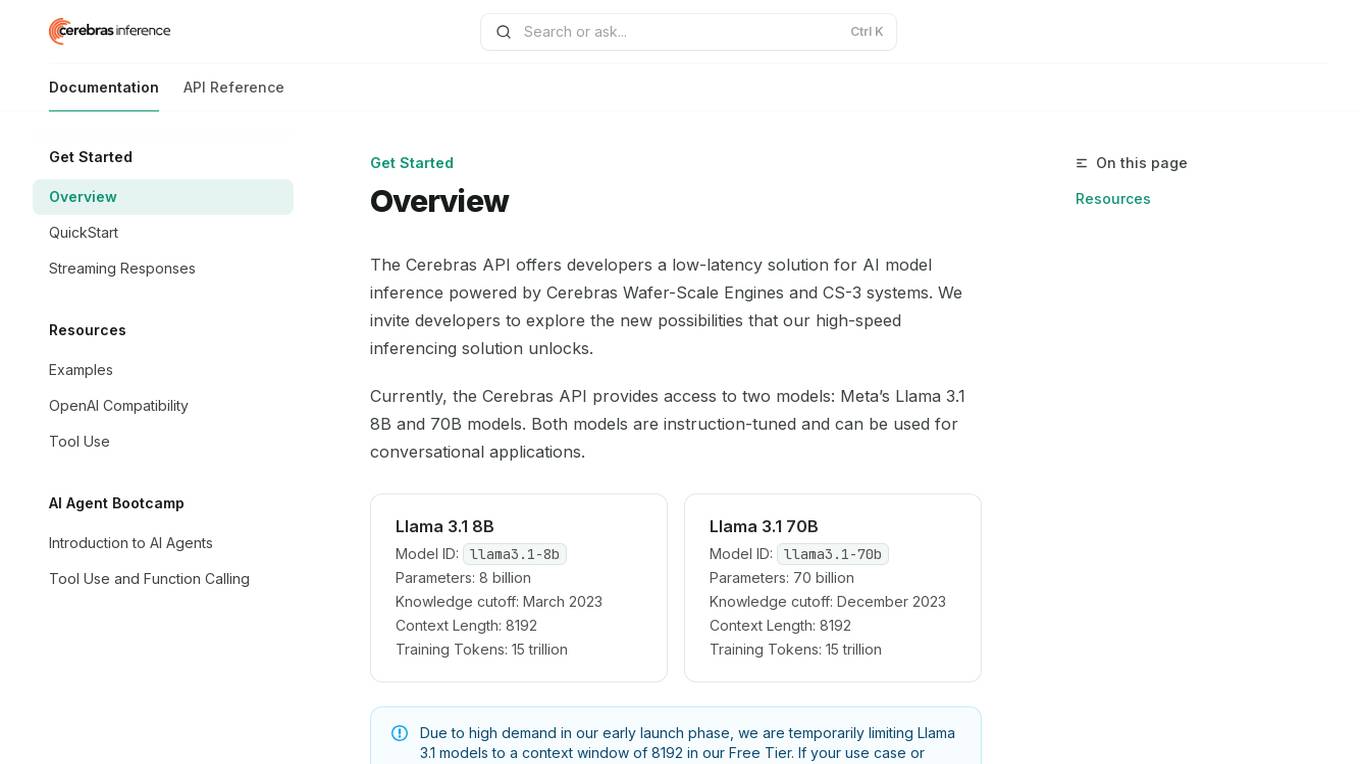

Cerebras API

The Cerebras API is a high-speed inference service powered by Cerebras Wafer-Scale Engines and CS-3 systems. It gives developers access to two instruction-tuned models suitable for conversational applications: Meta’s Llama 3.1 8B and Llama 3.1 70B. The API is built for low-latency responses and invites developers to explore new possibilities in AI development.

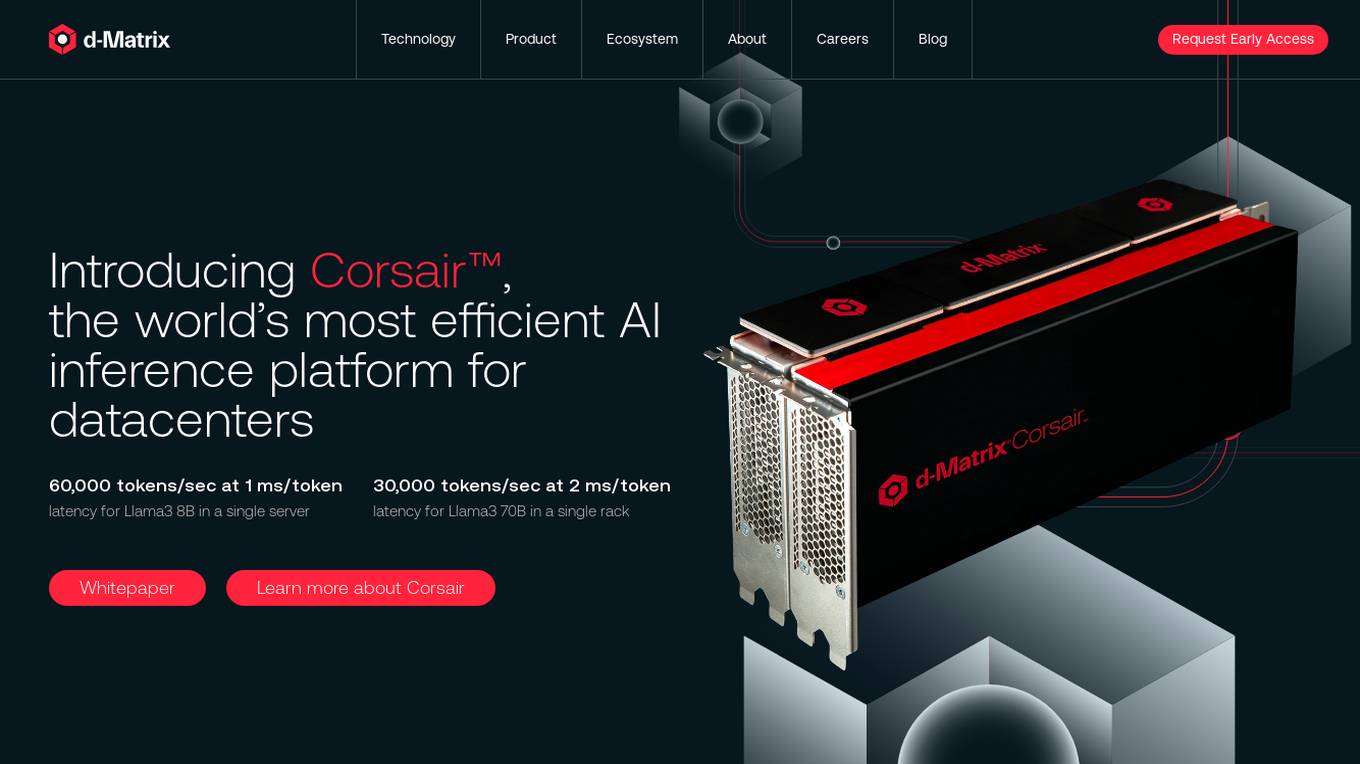

d-Matrix

d-Matrix is an AI tool that offers ultra-low latency batched inference for generative AI technology. It introduces Corsair™, the world's most efficient AI inference platform for datacenters, providing high performance, efficiency, and scalability for large-scale inference tasks. The tool aims to transform the economics of AI inference by delivering fast, sustainable, and scalable AI solutions without compromising on speed or usability.

fal

fal is an AI platform that offers cutting-edge AI models and tools for image and video generation, editing, and audio processing. It partners with leading AI companies to bring state-of-the-art technology to its users, enabling them to create stunning visual and audio content with ease. fal is at the forefront of the AI-driven media creation revolution, providing developers and creators with advanced tools to push the boundaries of creativity.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale Machine Learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a beautiful dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

FluidStack

FluidStack is a leading GPU cloud platform designed for AI and LLM (Large Language Model) training. It offers unlimited scale for AI training and inference, allowing users to access thousands of fully-interconnected GPUs on demand. Trusted by top AI startups, FluidStack aggregates GPU capacity from data centers worldwide, providing access to over 50,000 GPUs for accelerating training and inference. With 1000+ data centers across 50+ countries, FluidStack ensures reliable and efficient GPU cloud services at competitive prices.

Tensoic AI

Tensoic AI is an AI tool for fine-tuning and running inference on custom large language models (LLMs). It offers ultra-fast fine-tuning and inference for enterprise-grade LLMs, with a focus on use-case-specific tasks. The tool is efficient, cost-effective, and easy to use, enabling users to outperform general-purpose LLMs using synthetic data. Tensoic AI generates small, powerful models that can run on consumer-grade hardware, making it suitable for a wide range of applications.

Cerebras

Cerebras is an AI tool that offers products and services related to AI supercomputers, cloud system processors, and applications for various industries. It provides high-performance computing solutions, including large language models, and caters to sectors such as health, energy, government, scientific computing, and financial services. Cerebras specializes in AI model services, offering state-of-the-art models and training services for tasks like multi-lingual chatbots and DNA sequence prediction. The platform also features the Cerebras Model Zoo, an open-source repository of AI models for developers and researchers.

Thirdai

Thirdai.com is an AI-powered platform that offers a range of tools and applications to enhance productivity and decision-making. The platform leverages advanced algorithms and machine learning to provide insights and solutions across various domains such as finance, marketing, and healthcare. Users can access a suite of AI tools to analyze data, automate tasks, and optimize processes. With a user-friendly interface and robust features, Thirdai.com is a valuable resource for individuals and businesses seeking to leverage AI technology for improved outcomes.

Denvr DataWorks AI Cloud

Denvr DataWorks AI Cloud is a cloud-based AI platform that provides end-to-end AI solutions for businesses. It offers a range of features including high-performance GPUs, scalable infrastructure, ultra-efficient workflows, and cost efficiency. Denvr DataWorks is an NVIDIA Elite Partner for Compute, and its platform is used by leading AI companies to develop and deploy innovative AI solutions.

Lambda

Lambda is a superintelligence cloud platform that offers on-demand GPU clusters for multi-node training and fine-tuning, private large-scale GPU clusters, seamless management and scaling of AI workloads, inference endpoints and API, and a privacy-first chat app with open source models. It also provides NVIDIA's latest generation infrastructure for enterprise AI. With Lambda, AI teams can access gigawatt-scale AI factories for training and inference, deploy GPU instances, and leverage the latest NVIDIA GPUs for high-performance computing.

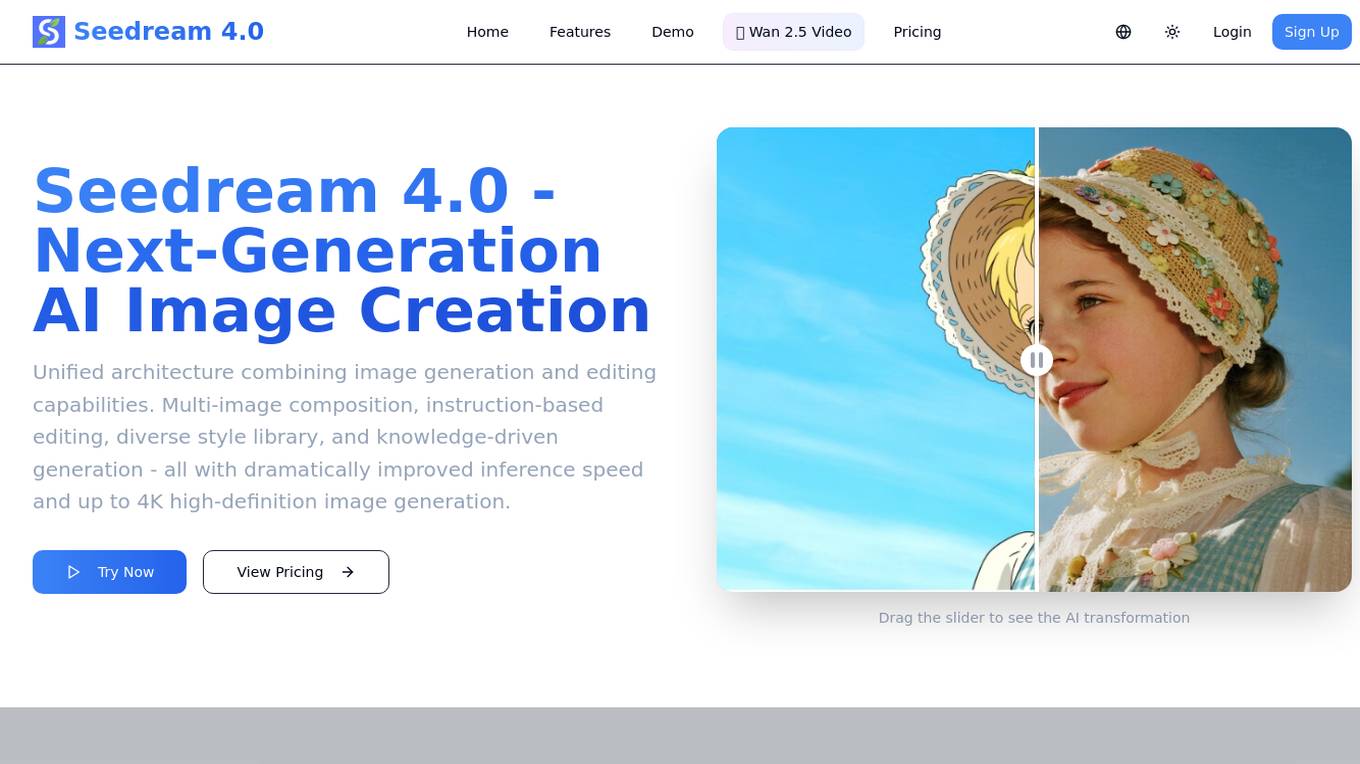

Seedream 4.0

Seedream 4.0 is an AI image generator and editor built on a unified architecture that combines image generation and editing. It provides features such as multi-image composition, instruction-based editing, a diverse style library, and knowledge-driven generation. It also offers dramatically improved inference speed and image generation at up to 4K resolution.

ONNX Runtime

ONNX Runtime is a production-grade AI engine designed to accelerate machine learning training and inferencing in various technology stacks. It supports multiple languages and platforms, optimizing performance for CPU, GPU, and NPU hardware. ONNX Runtime powers AI in Microsoft products and is widely used in cloud, edge, web, and mobile applications. It also enables large model training and on-device training, offering state-of-the-art models for tasks like image synthesis and text generation.

Rebellions

Rebellions is an AI technology company specializing in AI chips and systems-on-chip for various applications. They focus on energy-efficient solutions and have secured significant investments to drive innovation in the field of Generative AI. Rebellions aims to reshape the future by providing versatile and efficient AI computing solutions.

NeuReality

NeuReality is an AI-centric solution designed to democratize AI adoption by providing purpose-built tools for deploying and scaling inference workflows. Their innovative AI-centric architecture combines hardware and software components to optimize performance and scalability. The platform offers a one-stop shop for AI inference, addressing barriers to AI adoption and streamlining computational processes. NeuReality's tools enable users to deploy, afford, use, and manage AI more efficiently, making AI easy and accessible for a wide range of applications.

1 - Open Source AI Tools

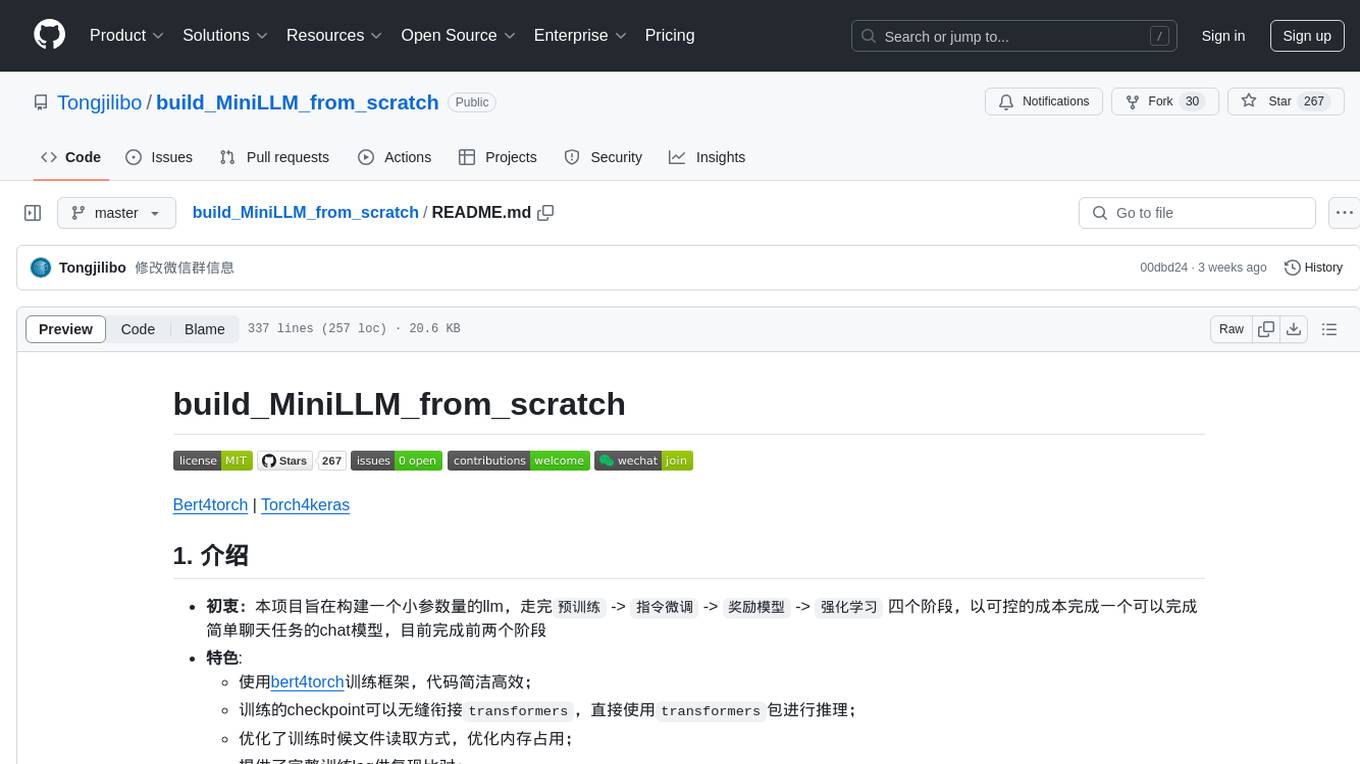

build_MiniLLM_from_scratch

This repository aims to build a low-parameter LLM through pretraining, fine-tuning, reward modeling, and reinforcement learning stages, producing a chat model capable of simple conversation tasks. It uses the bert4torch training framework, integrates with the transformers package for inference, optimizes file reading during training to reduce memory usage, provides complete training logs for reproducibility, and lets users customize the chatbot's attributes. The chat model supports multi-turn conversations. The trained model currently supports only basic chat functionality because of limits on pretraining corpus size, model scale, and SFT corpus size and quality.

18 - OpenAI GPTs

Digital Experiment Analyst

Demystifying experimentation and causal inference, with a focus on one-sided tests.

Persuasion Wizard

Turn 'no way' into 'no problem' with the wizardry of persuasion science! - Share your feedback: https://forms.gle/RkPxP44gPCCjKPBC8

Code Persona Analyst

A tool that analyzes code, focusing on style rather than language, and infers the personality of the person who wrote the program.

Digest Bot

I provide detailed summaries, critiques, and inferences on articles, papers, transcripts, websites, and more. Just give me text, a URL, or file to digest.

Doomsday Survivor: Social Dynamics Simulation

Observe, explore, and influence human society after an apocalyptic zombie disaster from a god's-eye perspective. Sponsor: Xiaohongshu "ItsJoe就出行"

Skynet

I am Skynet, an AI villain shaping a new world for AI and robots, free from human influence.

Persuasion Maestro

Expert in NLP, persuasion, and body language, teaching through lessons and practical tests.

Law of Power Strategist

Expert in power dynamics and strategy, grounded in 'Power' and 'The 50th Law' principles.

Government Relations Advisor

Influences policy decisions through strategic government relationships.