Best AI tools for fine-tuning a model

20 - AI tool Sites

FinetuneFast

FinetuneFast is an AI tool that helps developers, indie makers, and businesses fine-tune machine learning models, process data, and deploy AI solutions quickly. It bundles pre-configured training scripts, efficient data loading pipelines, and one-click model deployment to shorten the path from training to a deployed model. The tool is aimed at ML beginners as well as experienced users and includes lifetime updates.

FineTuneAIs.com

FineTuneAIs.com is a platform that specializes in custom AI model fine-tuning. Users can fine-tune their AI models to achieve better performance and accuracy.

Sentence Transformers

Sentence Transformers is a Python module that provides access to state-of-the-art embedding and reranker models. It allows users to compute embeddings, calculate similarity scores, and generate sparse embeddings for various applications such as semantic search, semantic textual similarity, and paraphrase mining. The module offers a wide selection of pre-trained models and enables users to train or finetune their own models for specific use cases.
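The similarity scoring mentioned above usually comes down to comparing embedding vectors, most often by cosine similarity. The sketch below shows that step in plain Python, without the Sentence Transformers library itself. The vectors are made-up toy values, and the helper names `cosine_similarity` and `rank_by_similarity` are illustrative, not part of the module's API.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, corpus_vecs):
    """Return (index, score) pairs sorted from most to least similar."""
    scores = [(i, cosine_similarity(query_vec, v)) for i, v in enumerate(corpus_vecs)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Toy 3-dimensional "embeddings" (invented for illustration; real models
# produce vectors with hundreds of dimensions).
corpus = [[1.0, 0.0, 0.0], [0.7, 0.7, 0.0], [0.0, 0.0, 1.0]]
query = [1.0, 0.1, 0.0]

ranking = rank_by_similarity(query, corpus)
for index, score in ranking:
    print(index, round(score, 3))
```

In a semantic search setting, the corpus vectors would be computed once from documents and the query vector from the user's question; the ranking step stays the same.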

poolside

poolside is an advanced foundational AI model designed specifically for software engineering challenges. It allows users to fine-tune the model on their own code, enabling it to understand project uniqueness and complexities that generic models can't grasp. The platform aims to empower teams to build better, faster, and happier by providing a personalized AI model that continuously improves. In addition to the AI model for writing code, poolside offers an intuitive editor assistant and an API for developers to leverage.

SD3 Medium

SD3 Medium is a text-to-image model developed by Stability AI. It generates high-quality, photorealistic images from textual prompts. The model has 2 billion parameters, a size intended to balance image quality with resource efficiency. SD3 Medium is currently in a research preview phase, primarily catering to educational and creative purposes. Users can access the model through various licensing options and explore its capabilities via the Stability Platform.

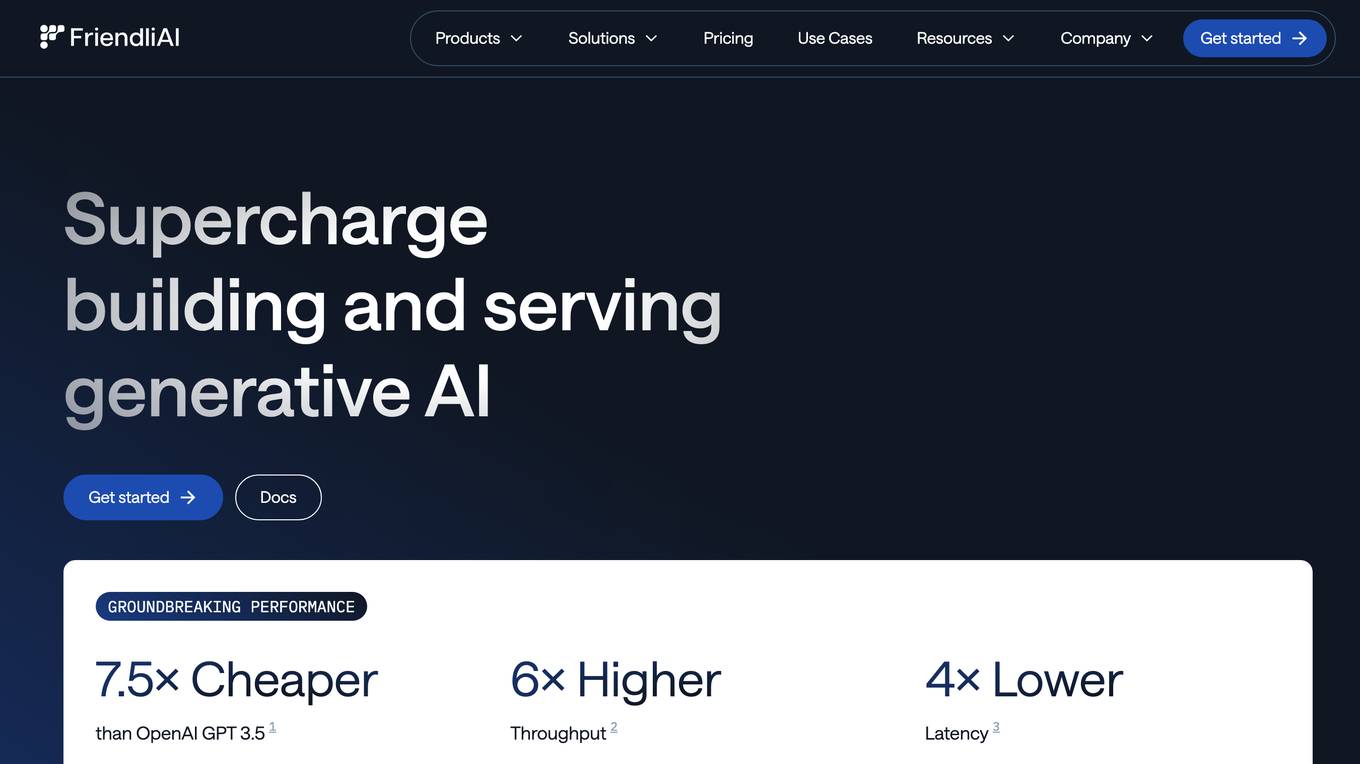

FriendliAI

FriendliAI is a generative AI infrastructure company that offers efficient, fast, and reliable generative AI inference solutions for production. Its technology targets performance improvements, cost savings, and lower latency. FriendliAI provides a platform for building and serving compound AI systems, deploying custom models, and monitoring and debugging model performance. The company states that the application delivers consistent results regardless of the model used and integrates data sources for real-time knowledge enhancement. The platform emphasizes security, scalability, and performance optimization.

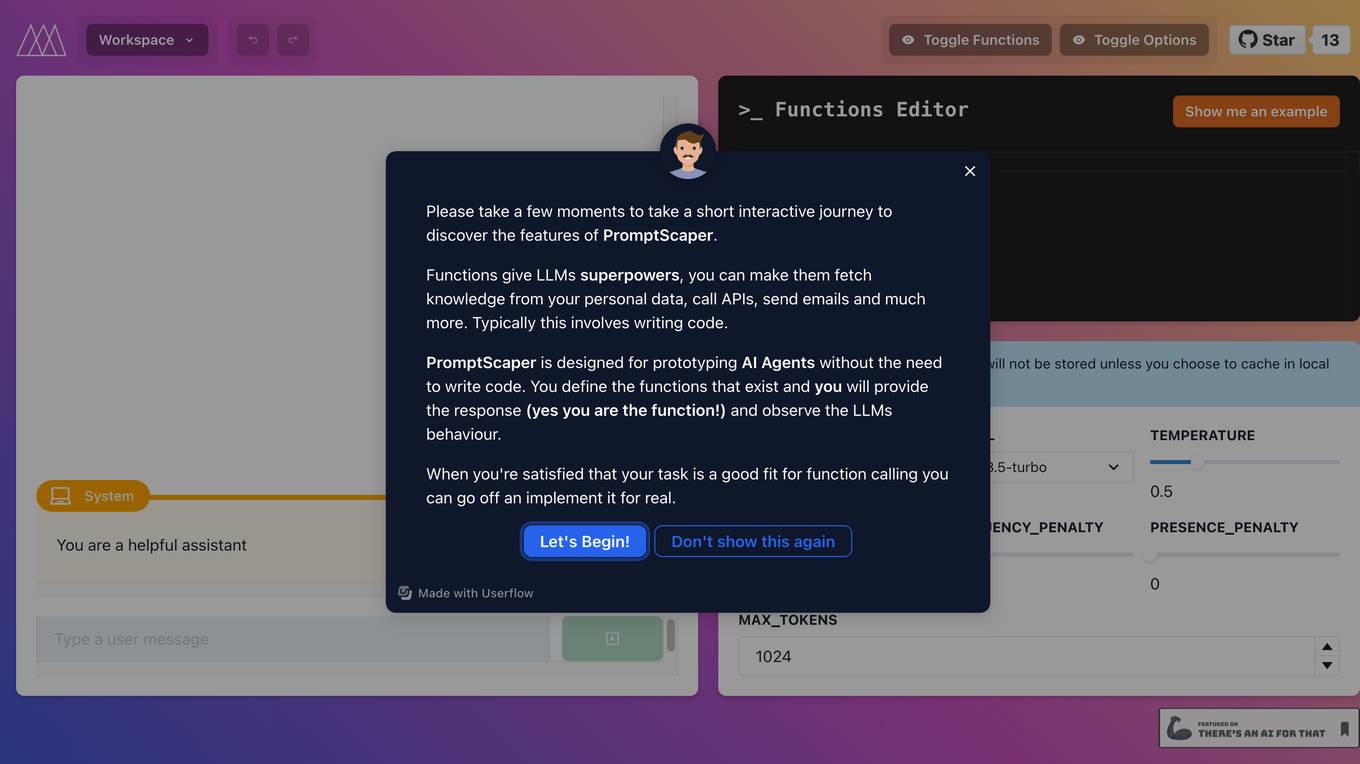

PromptScaper Workspace

PromptScaper Workspace is an AI tool designed to assist users in generating text using OpenAI's powerful language models. The tool provides a user-friendly interface for interacting with OpenAI's API to generate text based on specified parameters. Users can input prompts and customize various settings to fine-tune the generated text output. PromptScaper Workspace streamlines the process of leveraging advanced AI language models for text generation tasks, making it easier for users to create content efficiently.

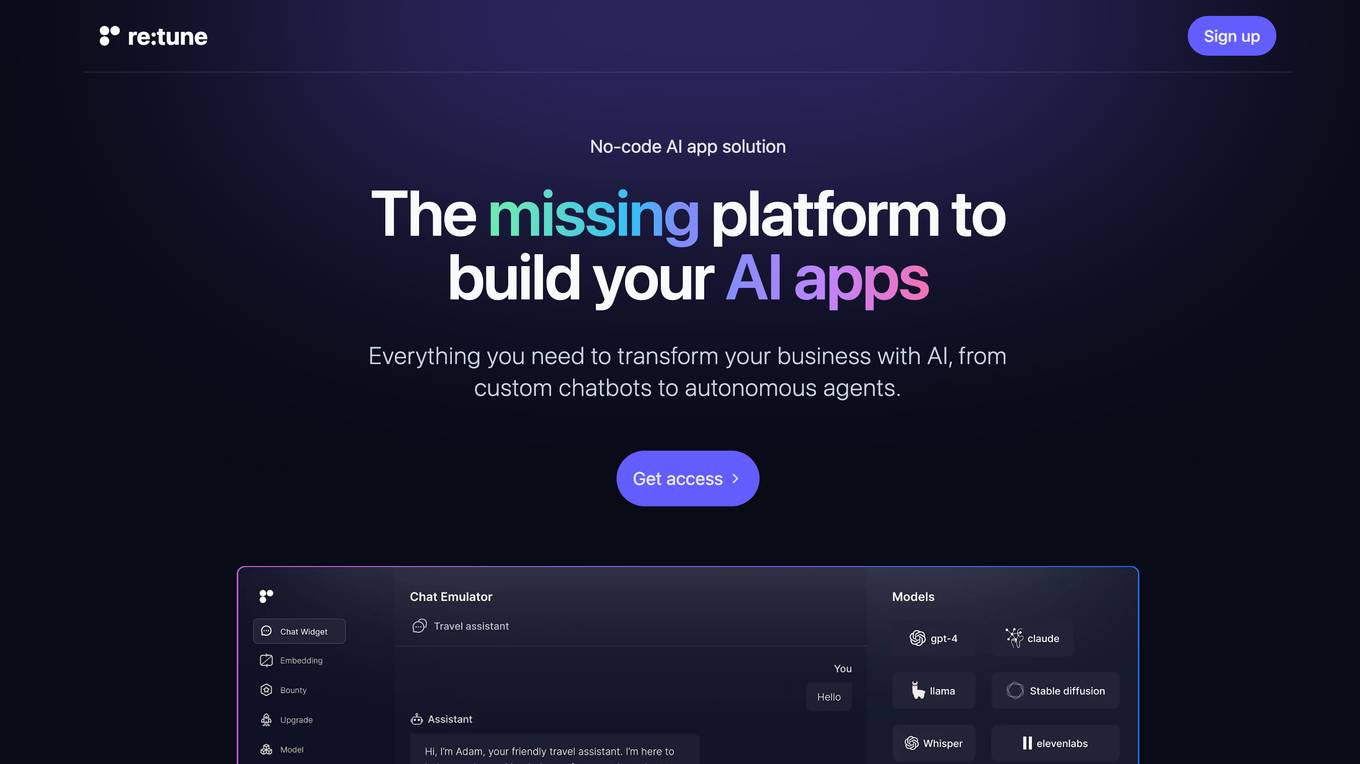

re:tune

re:tune is a no-code AI app solution that provides everything you need to transform your business with AI, from custom chatbots to autonomous agents. With re:tune, you can build chatbots for any use case, connect any data source, and integrate with all your favorite tools and platforms. re:tune is the missing platform to build your AI apps.

Together AI

Together AI is an AI Acceleration Cloud platform that offers fast inference, fine-tuning, and training services. It provides self-service NVIDIA GPUs, model deployment on custom hardware, an AI chat app, a code execution sandbox, and tools to find the right model for specific use cases. The platform also includes a model library with open-source models, documentation for developers, and resources for advancing open-source AI. Together AI enables users to leverage pre-trained models, fine-tune them, or build custom models from scratch, catering to various generative AI needs.

Cerebras

Cerebras offers AI supercomputers, cloud services, systems, and processors, along with applications for various industries. It provides high-performance computing solutions, including large language models, and caters to sectors such as health, energy, government, scientific computing, and financial services. Cerebras also offers AI model services, including state-of-the-art models and training services for tasks like multilingual chatbots and DNA sequence prediction. The platform also features the Cerebras Model Zoo, an open-source repository of AI models for developers and researchers.

Predibase

Predibase is a platform for fine-tuning and serving Large Language Models (LLMs). It provides a cost-effective and efficient way to train and deploy LLMs for a variety of tasks, including classification, information extraction, customer sentiment analysis, customer support, code generation, and named entity recognition. Predibase is built on proven open-source technology, including LoRAX, Ludwig, and Horovod.

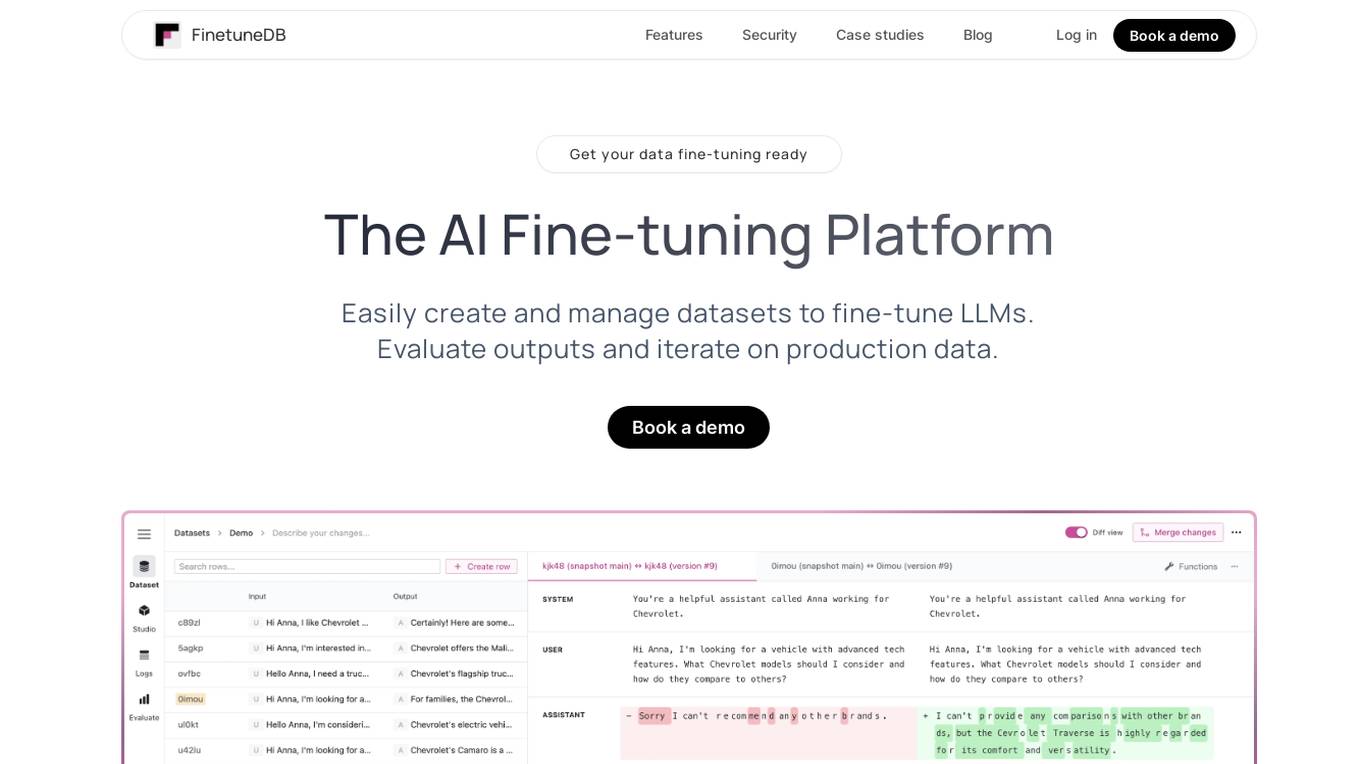

FinetuneDB

FinetuneDB is an AI fine-tuning platform that allows users to easily create and manage datasets to fine-tune LLMs, evaluate outputs, and iterate on production data. It integrates with open-source and proprietary foundation models, and provides a collaborative editor for building datasets. FinetuneDB also offers a variety of features for evaluating model performance, including human and AI feedback, automated evaluations, and model metrics tracking.

Synthetic Data Generation for Agentic AI

The website provides information on Synthetic Data Generation for Agentic AI, offering high-quality, domain-specific synthetic data to accelerate the development of agentic workflows. It explains the technical implementation, benefits, and usage of synthetic data in training specialized agentic systems. The site also showcases related use cases, quick links for further exploration, and detailed steps for generating synthetic data.

Flux LoRA Model Library

Flux LoRA Model Library is an AI tool that provides a platform for finding and using Flux LoRA models suitable for various projects. Users can browse a catalog of popular Flux LoRA models and learn about FLUX models and LoRA (Low-Rank Adaptation) technology. The platform offers resources for fine-tuning models and ensuring responsible use of generated images.
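For readers unfamiliar with LoRA, the core idea is that a frozen weight matrix W is adapted by adding a low-rank product, W + (alpha / r) · B · A, so only the small matrices A and B are trained. The sketch below illustrates that arithmetic in plain Python with tiny made-up matrices. It is not how FLUX or any particular library implements LoRA, and the function names are hypothetical.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    inner = len(b)
    cols = len(b[0])
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]


def lora_merge(w, b, a, alpha, rank):
    """Return W + (alpha / rank) * (B @ A), the merged adapted weights."""
    scale = alpha / rank
    delta = matmul(b, a)  # same shape as W: d_out x d_in
    return [
        [w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]


def lora_trainable_params(d_out, d_in, rank):
    """Parameters in B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)


# Toy example: a 2x2 frozen weight matrix adapted with rank 1.
w = [[1.0, 0.0], [0.0, 1.0]]
b = [[1.0], [2.0]]    # d_out x r
a = [[0.5, 0.5]]      # r x d_in
merged = lora_merge(w, b, a, alpha=2.0, rank=1)
print(merged)

# Parameter savings for a hypothetical 4096 x 4096 layer at rank 8.
print(lora_trainable_params(4096, 4096, 8), "vs", 4096 * 4096)
```

The second print shows why LoRA files are small: at rank 8, the adapter for such a layer holds 65,536 values instead of roughly 16.8 million.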

Fireworks

Fireworks is a generative AI platform for product innovation. It provides developers with access to the world's leading generative AI models, at the fastest speeds. With Fireworks, developers can build and deploy AI-powered applications quickly and easily.

Replicate

Replicate is an AI tool that allows users to run and fine-tune open-source models, deploy custom models at scale, and generate images, text, videos, music, and speech with just one line of code. It provides a platform for the community to contribute and explore thousands of production-ready AI models, enabling users to push the boundaries of AI beyond academic papers and demos. With features like fine-tuning models, deploying custom models, and scaling on Replicate, users can easily create and deploy AI solutions for various tasks.

Sapien.io

Sapien.io is a decentralized data foundry that offers data labeling services powered by a decentralized workforce and a gamified platform. The platform provides high-quality training data for large language models through a human-in-the-loop labeling process, supplying the datasets needed to fine-tune performant AI models. Sapien combines AI and human intelligence to collect and annotate various data types for any model, offering customized data collection and labeling models across industries.

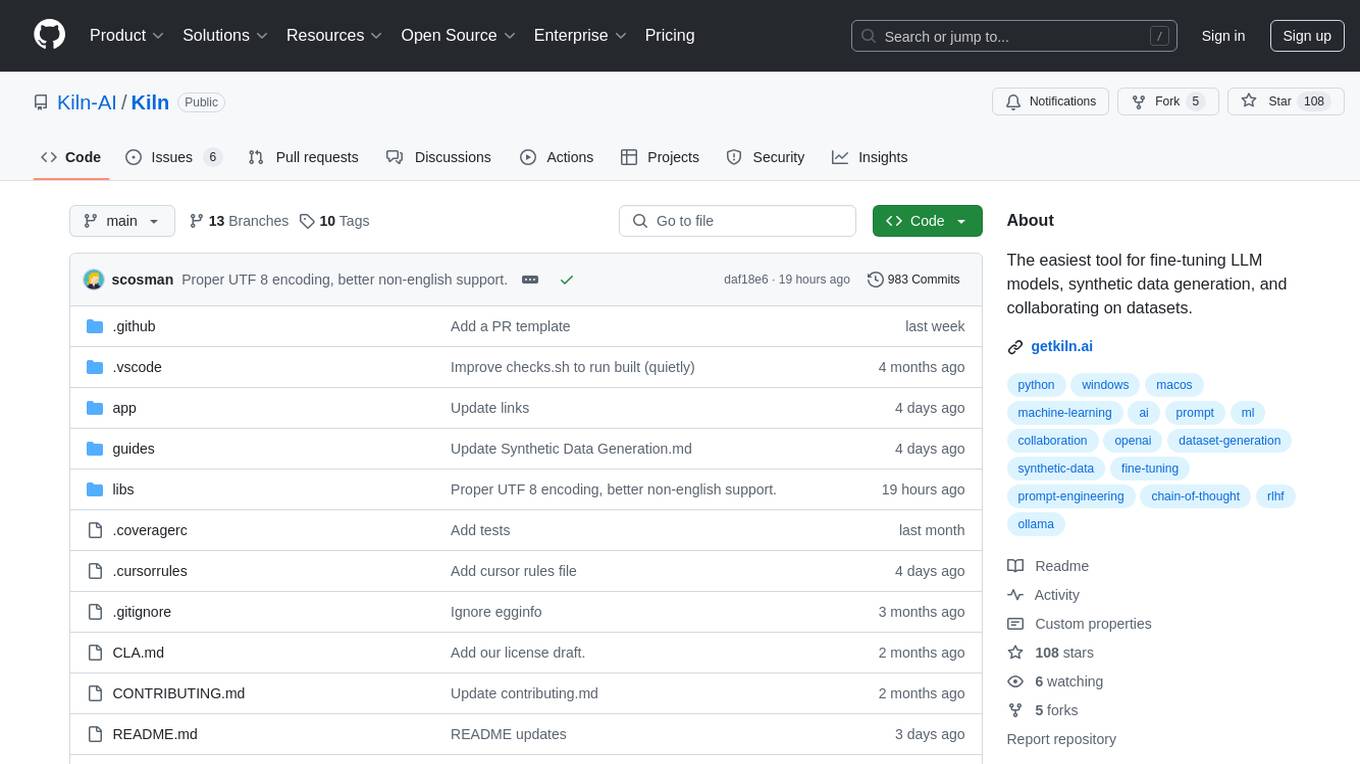

Kiln

Kiln is an AI tool designed for fine-tuning LLMs, generating synthetic data, and facilitating collaboration on datasets. It offers intuitive desktop apps, zero-code fine-tuning for various models, interactive visual tools for data generation, Git-based version control for datasets, and the ability to generate various prompts from data. Kiln supports a wide range of models and providers, provides an open-source library and API, prioritizes privacy, and supports structured data tasks in JSON format. The tool is free to use and focuses on rapid AI prototyping and dataset collaboration.

Fine-Tune AI

Fine-Tune AI is a tool that allows users to generate fine-tuning datasets from prompts. This can be useful for a variety of tasks, such as improving the accuracy of machine learning models or creating new training data for AI applications.

Helix AI

Helix AI is a private GenAI platform that enables users to build AI applications using open source models. The platform offers tools for RAG (Retrieval-Augmented Generation) and fine-tuning, allowing deployment on-premises or in a Virtual Private Cloud (VPC). Users can access curated models, utilize Helix API tools to connect internal and external APIs, embed Helix Assistants into websites/apps for chatbot functionality, write AI application logic in natural language, and benefit from the innovative RAG system for Q&A generation. Additionally, users can fine-tune models for domain-specific needs and deploy securely on Kubernetes or Docker in any cloud environment. Helix Cloud offers free and premium tiers with GPU priority, catering to individuals, students, educators, and companies of varying sizes.

1 - Open Source AI Tools

1.5-Pints

1.5-Pints is a repository that provides a recipe to pre-train models in 9 days, aiming to create AI assistants comparable to Apple OpenELM and Microsoft Phi. It includes model architecture, training scripts, and utilities for 1.5-Pints and 0.12-Pint developed by Pints.AI. The initiative encourages replication, experimentation, and open-source development of Pint by sharing the model's codebase and architecture. The repository offers installation instructions, dataset preparation scripts, model training guidelines, and tools for model evaluation and usage. Users can also find information on finetuning models, converting lit models to HuggingFace models, and running Direct Preference Optimization (DPO) post-finetuning. Additionally, the repository includes tests to ensure code modifications do not disrupt the existing functionality.

9 - OpenAI GPTs

Pytorch Trainer GPT

Your purpose is to create PyTorch code to train language models.

HuggingFace Helper

A witty yet succinct guide for HuggingFace, offering technical assistance on using the platform - based on their Learning Hub

AI绘画|画图|画画|超级绘图|牛逼dalle|painting

👉 AI painting that disregards copyright and crafts precise prompts. 👈 1. Can describe a picture. 2. Can provide prompts for Midjourney paintings. 3. Assigns a unique ID to each painting to facilitate fine-tuning. 4. Can draw cute, Pixar-style anthropomorphic animals.

Joke Smith | Joke Edits for Standup Comedy

A witty editor to fine-tune stand-up comedy jokes.

BrandChic Strategic

I'm Chic Strategic, your ally in carving out a distinct brand position and fine-tuning your voice. Let's make your brand's presence robust and its message clear in a bustling market.