Best AI tools for Extract Text From PDF

20 - AI tool Sites

FileDrop

FileDrop is a file or document manager that allows you to drag and drop files into a document with automatic linking and save them to Google Drive. It also offers features like OCR, translation, and AI integration. With FileDrop, you can easily insert, save, and link files in Google Sheets cells, Docs, and Slides.

Scanner Go

Scanner Go is a free PDF tool that offers easy-to-use features for high-quality scanning and conversion of various documents into PDF format. With powerful OCR technology, it allows users to extract text from PDFs and images, making it convenient to edit and share documents. The tool also provides options for managing, editing, printing, and sharing documents, enhancing productivity. Additionally, Scanner Go offers a range of popular tools for converting, optimizing, and securing PDF files, catering to diverse user needs.

Swiftask

Swiftask is an all-in-one AI Assistant designed to enhance individual and team productivity and creativity. It integrates a range of AI technologies, chatbots, and productivity tools into a cohesive chat interface. Swiftask offers features such as generating text, language translation, creative content writing, answering questions, extracting text from images and PDFs, table and form extraction, audio transcription, speech-to-text conversion, AI-based image generation, and project management capabilities. Users can benefit from Swiftask's comprehensive AI solutions to work smarter and achieve more.

Picture to Text Converter

Picture to Text Converter is an online tool that uses Optical Character Recognition (OCR) technology to extract text from images. It can process various image formats like JPG, PNG, GIF, scanned documents (PDFs), and even photos taken with your phone's camera. The extracted text can be copied to the clipboard or downloaded as a TXT file. Picture to Text Converter is free to use and does not require any registration or installation. It is a convenient and efficient way to convert images into editable text.

ToolLab

ToolLab is a professional online AI tool that specializes in removing watermarks from PDF files and images. It offers instant and high-quality removal of watermarks while maintaining document integrity. The tool is user-friendly, secure, and does not require any installation or registration. With its AI-powered technology, ToolLab ensures efficient and effective watermark removal, making it a reliable choice for individuals and businesses seeking professional results.

GetSearchablePDF

GetSearchablePDF is an online tool that converts scanned or image-based PDF documents into searchable PDFs. Its OCR (Optical Character Recognition) technology extracts text from images, making the resulting PDFs easy to search, edit, and share. The process is straightforward: users connect their Dropbox or OneDrive account, drop their PDF files into the designated folder, and the tool automatically converts them into searchable PDFs.

GrabText

GrabText is an online OCR tool that allows users to convert handwritten or printed text from photos, graphics, or documents into editable text. It uses ChatGPT to automatically correct spelling, grammar, and other writing errors. The tool also supports math equations and offers flexible output options such as TXT, LaTeX, DOC, and PDF.

ImageTextify

ImageTextify is a free, AI-powered OCR tool that enables users to extract text from images, PDFs, and handwritten notes with high accuracy and efficiency. The tool offers a wide range of features, including multi-format support, batch processing, and a mobile-friendly interface. ImageTextify is designed to cater to both personal and professional needs, providing a seamless solution for converting images to text. With a focus on privacy, speed, and support for multiple languages and formats, ImageTextify stands out as a reliable and user-friendly OCR tool.

Parseur

Parseur is an AI data extraction software that uses artificial intelligence to extract structured data from various types of documents such as PDFs, emails, and scanned documents. It offers features like template-based data extraction, OCR software for character recognition, and dynamic OCR for extracting fields that move or change size. Parseur is trusted by businesses in finance, tech, logistics, healthcare, real estate, e-commerce, marketing, and human resources industries to automate data extraction processes, saving time and reducing manual errors.

Docubase.ai

Docubase.ai is a powerful document analysis tool that uses advanced natural language processing and machine learning to extract information and provide relevant answers to your queries. It can automatically extract text content from uploaded documents, generate relevant questions, and extract answers from the document content. Docubase.ai supports a wide range of document formats, including PDF, Word, Excel, PowerPoint, and text documents. It also allows users to ask their own questions and provides options to export answers in different formats for easy sharing and documentation.

Honeybear.ai

Honeybear.ai is an AI tool designed to simplify document reading tasks. It utilizes advanced algorithms to extract and analyze text from various documents, making it easier for users to access and comprehend information. With Honeybear.ai, users can streamline their document processing workflows and enhance productivity.

Chat PDF AI Online

Chat PDF AI Online is an advanced AI tool that revolutionizes the way users interact with PDF documents. It offers cutting-edge AI features to enhance the PDF experience, providing seamless solutions for reading, summarizing, analyzing, and translating PDF files. With features like longer context support, powerful tabular data analysis, and advanced LLM support, Chat PDF AI Online ensures smarter and faster document processing. Users can securely upload and process large PDF files, benefiting from high accuracy and efficiency in document handling.

ChatPDF

ChatPDF is an AI-powered tool that allows users to interact with PDF documents in a conversational manner. It uses natural language processing (NLP) to understand user queries and provide relevant information or perform actions on the PDF. With ChatPDF, users can ask questions about the content of the PDF, search for specific information, extract data, translate text, and more, all through a simple chat-like interface.

EaseUS ChatPDF

EaseUS ChatPDF is an AI-powered PDF summarizer tool that allows users to understand, summarize, and chat with PDF files efficiently. It simplifies PDF reading by providing automatic summarization, analysis, and chat features. The tool is designed to help users save time and effort in extracting key insights from PDF documents. With integrated AI models and OCR capabilities, EaseUS ChatPDF offers a secure and reliable platform for interacting with PDF content.

Shortify

Shortify is a tool that helps you summarize text from various sources, including articles, YouTube videos, PDFs, and more. It integrates with your existing apps, allowing you to easily summarize content by tapping the Share button and selecting Shortify. The summarized text is presented in a concise and easy-to-read format, saving you time and effort. Shortify also offers additional features such as ultra-short summaries, sharing options, and usage statistics.

basebox

basebox is an AI application designed to provide secure and efficient AI solutions for businesses across various industries. It offers a range of features such as secure text editing, data extraction from PDFs and Excel documents, academic text summarization, multilingual translation, and blog post creation. With a focus on data privacy and security, basebox ensures end-to-end encryption, GDPR compliance, and hosting in Europe. The application is user-friendly, requiring no technical expertise for setup, and offers transparent pricing based on actual usage.

Solvr

Solvr is an AI-powered Chrome extension that allows users to solve questions effortlessly without leaving the webpage. It offers two powerful modes for capturing and getting instant answers, as well as the ability to extract and solve content from PDFs. With sleek and structured results, Solvr provides visually appealing and organized information at a glance, making problem-solving swift and simple.

PrivacyDoc

PrivacyDoc is an AI-powered portal that allows users to analyze and query PDFs and ebooks effortlessly. By leveraging advanced NLP technology, PrivacyDoc enables users to uncover insights and conduct thorough document analysis. The platform offers features such as easy file upload, query functionality, enhanced security measures, and free access to powerful PDF analysis tools. With PrivacyDoc, users can log in with their Google account, submit queries for prompt AI-driven responses, and keep their data private through secure file handling.

AI Bank Statement Converter

The AI Bank Statement Converter is an industry-leading tool designed for accountants and bookkeepers to extract data from financial documents using artificial intelligence technology. It offers features such as automated data extraction, integration with accounting software, enhanced security, streamlined workflow, and multi-format conversion capabilities. The tool revolutionizes financial document processing by providing high-precision data extraction, tailored for accounting businesses, and ensuring data security through bank-level encryption. It also offers Intelligent Document Processing (IDP) using AI and machine learning techniques to process structured, semi-structured, and unstructured documents.

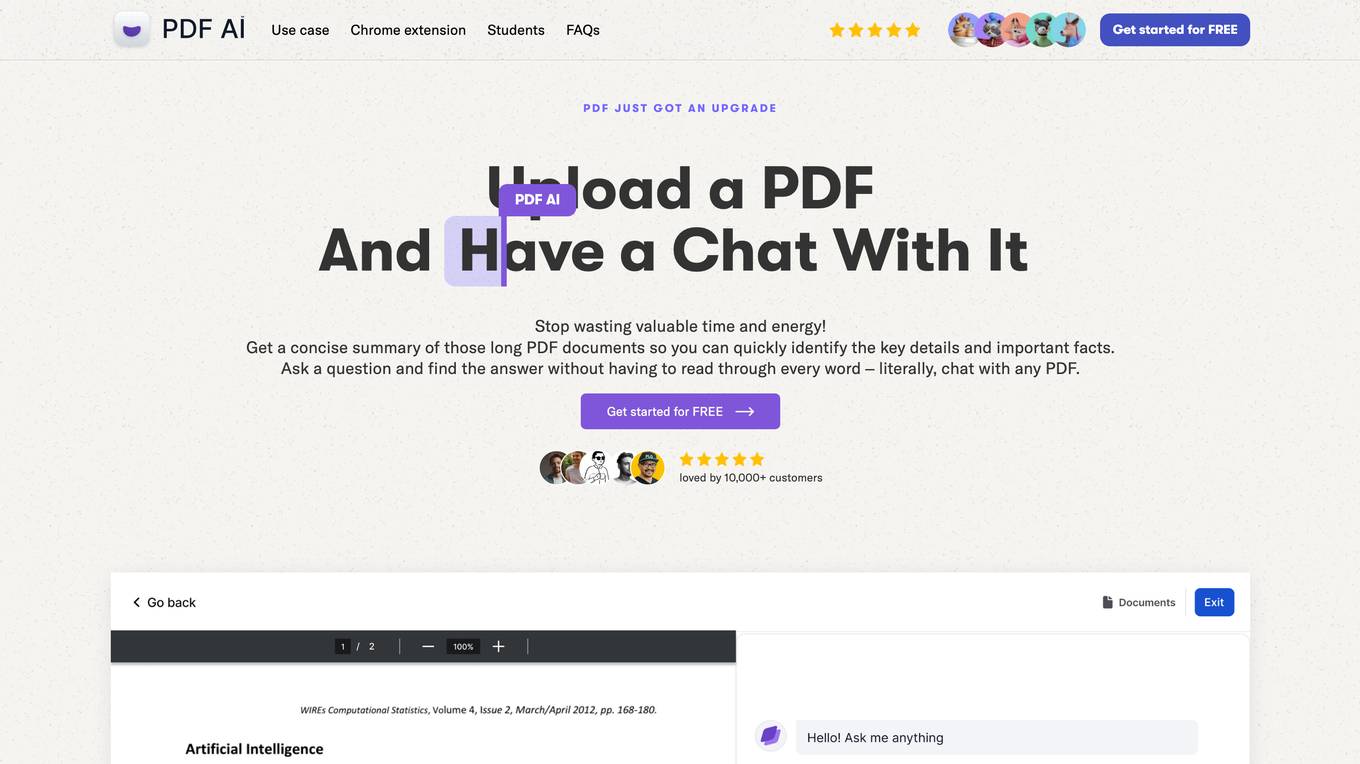

PDF AI

The website offers an AI-powered PDF reader that allows users to chat with any PDF document. Users can upload a PDF, ask questions, get answers, extract precise sections of text, summarize, annotate, highlight, classify, analyze, translate, and more. The AI tool helps in quickly identifying key details, finding answers without reading through every word, and citing sources. It is ideal for professionals in various fields like legal, finance, research, academia, healthcare, and public sector, as well as students. The tool aims to save time, increase productivity, and simplify document management and analysis.

5 - Open Source AI Tools

extractor

Extractor is an AI-powered data extraction library for Laravel that leverages OpenAI's capabilities to effortlessly extract structured data from various sources, including images, PDFs, and emails. It features a convenient wrapper around OpenAI Chat and Completion endpoints, supports multiple input formats, includes a flexible Field Extractor for arbitrary data extraction, and integrates with Textract for OCR functionality. Extractor utilizes JSON Mode from the latest GPT-3.5 and GPT-4 models, providing accurate and efficient data extraction.

terraform-genai-doc-summarization

This solution showcases how to summarize a large corpus of documents using Generative AI. It provides an end-to-end demonstration of document summarization: starting from raw documents, detecting the text in them, and summarizing them on demand using Vertex AI LLM APIs, Cloud Vision Optical Character Recognition (OCR), and BigQuery.

chatwise-releases

ChatWise is an offline tool that supports various AI models such as OpenAI, Anthropic, Google AI, Groq, and Ollama. It is multi-modal, allowing text-to-speech powered by OpenAI and ElevenLabs. The tool supports text files, PDFs, audio, and images across different models. ChatWise is currently available for macOS (Apple Silicon & Intel) with Windows support coming soon.

any-parser

AnyParser provides an API to accurately extract unstructured data (e.g., PDFs, images, charts) into a structured format. Users can set up their API key, run synchronous and asynchronous extractions, and perform batch extraction. The tool is useful for extracting text, numbers, and symbols from various sources like PDFs and images. It offers flexibility in processing data and provides immediate results for synchronous extraction while allowing users to fetch results later for asynchronous and batch extraction. AnyParser is designed to simplify data extraction tasks and enhance data processing efficiency.

npcsh

`npcsh` is a Python-based command-line tool designed to integrate Large Language Models (LLMs) and Agents into one's daily workflow by making them available and easily configurable through the command line shell. It leverages the power of LLMs to understand natural language commands and questions, execute tasks, answer queries, and provide relevant information from local files and the web. Users can also build their own tools and call them like macros from the shell. `npcsh` allows users to take advantage of agents (i.e., NPCs) through a managed system, tailoring NPCs to specific tasks and workflows. The tool is extensible with Python, providing useful functions for interacting with LLMs, including explicit coverage for popular providers like Ollama, Anthropic, OpenAI, Gemini, DeepSeek, and OpenAI-like providers. Users can set up a Flask server to expose their NPC team for use as a backend service, run SQL models defined in their project, execute assembly lines, and verify the integrity of their NPC team's interrelations. Users can execute bash commands directly, use favorite command-line tools like Vim, Emacs, ipython, sqlite3, and git, pipe the output of these commands to LLMs, or pass LLM results to bash commands.

20 - OpenAI GPTs

PDF Ninja

I extract data and tables from PDFs to CSV, focusing on data privacy and precision.

QCM

This GPT receives images containing CodinGame multiple-choice (QCM) or problem-solving questions on the following topics: Java, Hibernate, Angular, Spring Boot, SQL. It must extract the text from the image and answer the multiple-choice question as quickly as possible.

Spreadsheet Composer

Magically turning text from emails, lists and website content into spreadsheet tables

kz image 2 typescript 2 image

Generates a structured description in TypeScript format from an image, generates an image from that description, and performs OCR.

Digest Bot

I provide detailed summaries, critiques, and inferences on articles, papers, transcripts, websites, and more. Just give me text, a URL, or file to digest.

ExtractWisdom

Takes in any text and extracts the wisdom from it like you spent 3 hours taking handwritten notes.

Ringkesan

Summarizes and extracts key points from texts, articles, videos, documents, and much more.