Best AI tools for evaluating language sources

20 - AI Tool Sites

LlamaIndex

LlamaIndex is a data framework for building LLM (large language model) applications. It helps enterprises turn their data into production-ready applications with functionality for loading data from various sources, indexing it, orchestrating workflows, and evaluating application performance. The platform offers extensive documentation, community-contributed resources, and integration options to support developers building LLM applications.

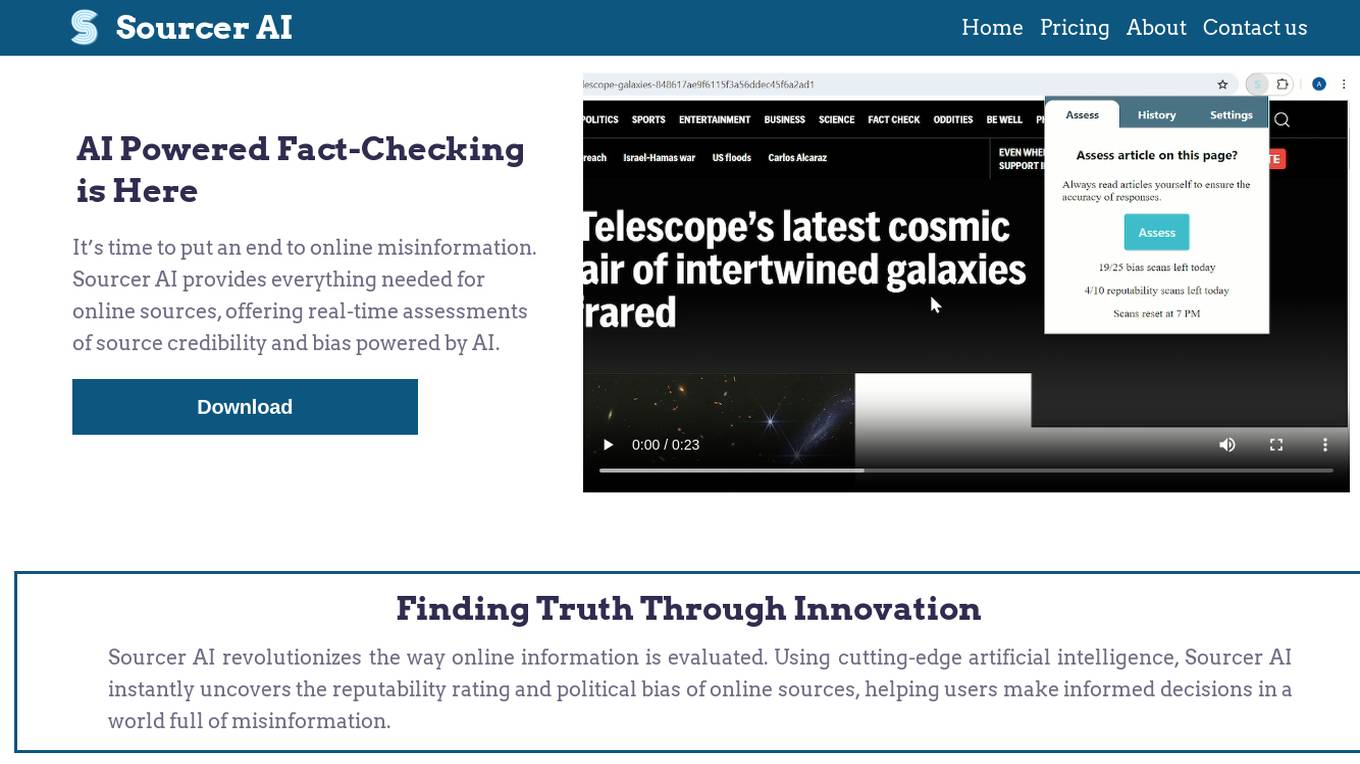

Sourcer AI

Sourcer AI is an AI-powered fact-checking tool that provides real-time assessments of the credibility and bias of online sources. It uses artificial intelligence to surface reputability ratings and political-bias assessments for those sources, helping users counter misinformation and make informed decisions.

VerifactAI

VerifactAI is a web-based fact-verification tool: users enter a claim, and it returns evidence that supports or refutes it. It gathers evidence from a variety of sources, including news articles, academic papers, and social media posts, and is designed to be easy to use regardless of the user's level of expertise.

Entry Point AI

Entry Point AI is a modern AI optimization platform for fine-tuning proprietary and open-source language models. It provides a user-friendly interface to manage prompts, fine-tunes, and evaluations in one place. The platform enables users to optimize models from leading providers, train across providers, work collaboratively, write templates, import/export data, share models, and avoid common pitfalls associated with fine-tuning. Entry Point AI simplifies the fine-tuning process, making it accessible to users without the need for extensive data, infrastructure, or insider knowledge.

Langtrace AI

Langtrace AI is an open-source observability tool, developed by Scale3 Labs, for monitoring, evaluating, and improving LLM (large language model) applications. It collects and analyzes traces and metrics to provide insight into the ML pipeline, and it is SOC 2 Type II certified. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability for building and deploying AI applications with confidence.

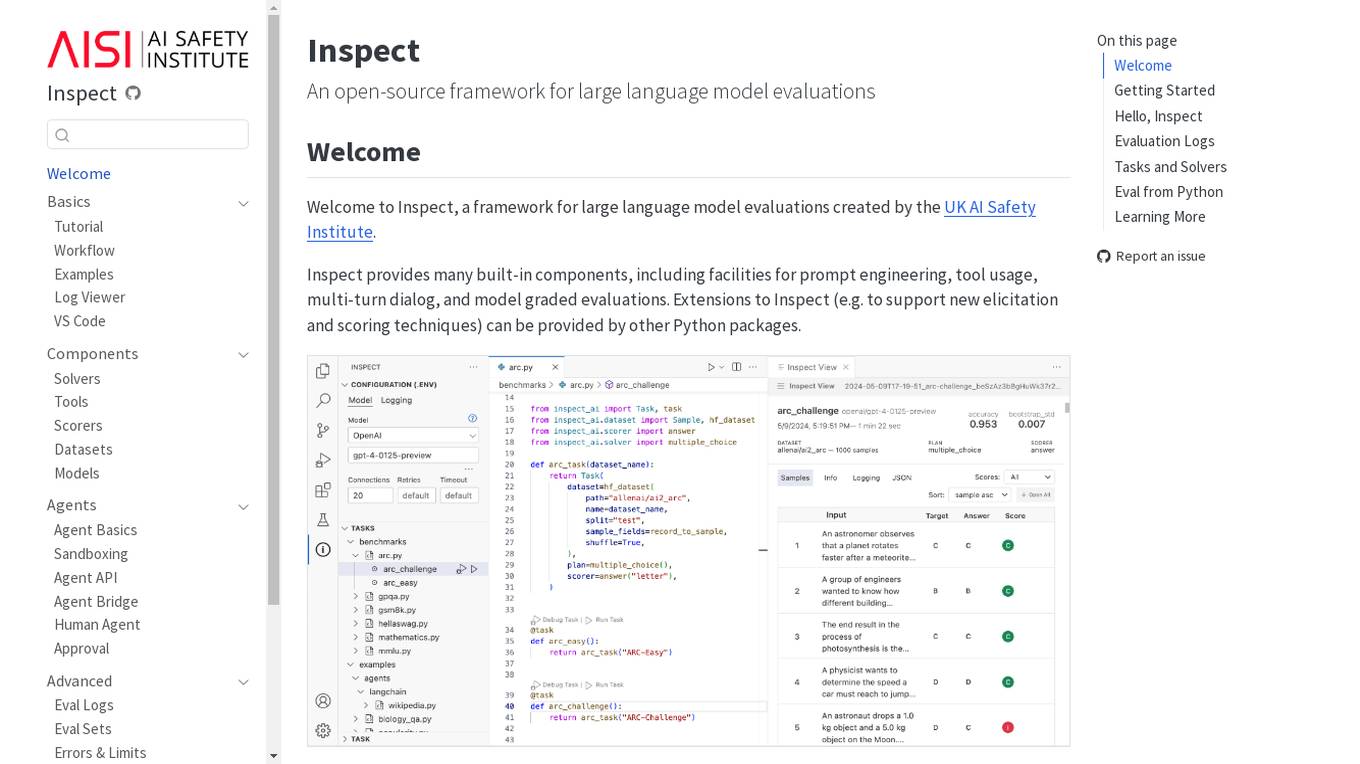

Inspect

Inspect is an open-source framework for large language model evaluations created by the UK AI Safety Institute. It provides built-in components for prompt engineering, tool usage, multi-turn dialog, and model-graded evaluations. Users can combine various solvers, tools, scorers, datasets, and models to build advanced evaluations, and Inspect can be extended with new elicitation and scoring techniques through Python packages.
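To make the dataset/solver/scorer split concrete, here is a minimal, framework-free Python sketch of how an evaluation of this shape fits together. It does not use Inspect's real API: the names `Sample`, `run_eval`, and `exact_match` are hypothetical, and the stub solver is an assumption standing in for an actual model call.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical types illustrating a dataset -> solver -> scorer pipeline.
# These are NOT Inspect's actual classes or functions.

@dataclass
class Sample:
    input: str   # prompt sent to the model
    target: str  # expected answer (ground truth)

Solver = Callable[[str], str]         # maps a prompt to a model output
Scorer = Callable[[str, str], float]  # maps (output, target) to a score

def exact_match(output: str, target: str) -> float:
    """Score 1.0 when the whitespace/case-normalized output equals the target."""
    return 1.0 if output.strip().lower() == target.strip().lower() else 0.0

def run_eval(dataset: List[Sample], solver: Solver, scorer: Scorer) -> float:
    """Run the solver on every sample and return the mean score."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    scores = [scorer(solver(s.input), s.target) for s in dataset]
    return sum(scores) / len(scores)

if __name__ == "__main__":
    # Stub "model" with canned answers (hypothetical stand-in for an LLM call).
    answers = {"Capital of France?": "Paris", "2 + 2 = ?": "5"}
    stub_solver: Solver = lambda prompt: answers.get(prompt, "")
    data = [Sample("Capital of France?", "paris"), Sample("2 + 2 = ?", "4")]
    print(run_eval(data, stub_solver, exact_match))  # 0.5
```

Keeping the solver and scorer as independent callables is what lets either piece be swapped (for example, replacing exact match with a model-graded scorer) without touching the dataset.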

Candidate Search AI

Candidate Search AI is an AI-powered candidate search engine that lets recruiters search their candidate database with fast, context-aware, natural-language queries. It goes beyond traditional keyword-based search, offering semantic understanding, AI-driven candidate evaluation, and workflow automation to streamline recruiting. The tool also provides rich candidate profiles, skill highlights, and smart alerts for efficient talent discovery. Its analytics and visualizations help recruiters turn their talent data into actionable intelligence, increasing ROI and reducing sourcing time. It offers enterprise-grade security features and compliance with data privacy regulations worldwide.

Datumbox

Datumbox is a machine learning platform built around an open-source machine learning framework written in Java. It provides a large collection of algorithms, models, statistical tests, and tools for building intelligent applications, and its REST Machine Learning API lets developers build smart software and services quickly. The Datumbox API offers off-the-shelf classifiers and natural language processing services for tasks such as sentiment analysis, topic classification, and language detection. It simplifies designing and training machine learning models, making it easier for developers to create new applications.

Agenta.ai

Agenta.ai is a platform that provides prompt management, evaluation, and observability for LLM (large language model) applications. It addresses the challenges AI development teams face in managing prompts, collaborating effectively, and ensuring reliable product outcomes. By centralizing prompts, evaluations, and traces, Agenta.ai helps teams streamline their workflows and follow LLMOps best practices. Its features include a unified playground for comparing prompts, automated evaluation, human evaluation integration, observability tools for debugging AI systems, and collaborative workflows for PMs, domain experts, and developers.

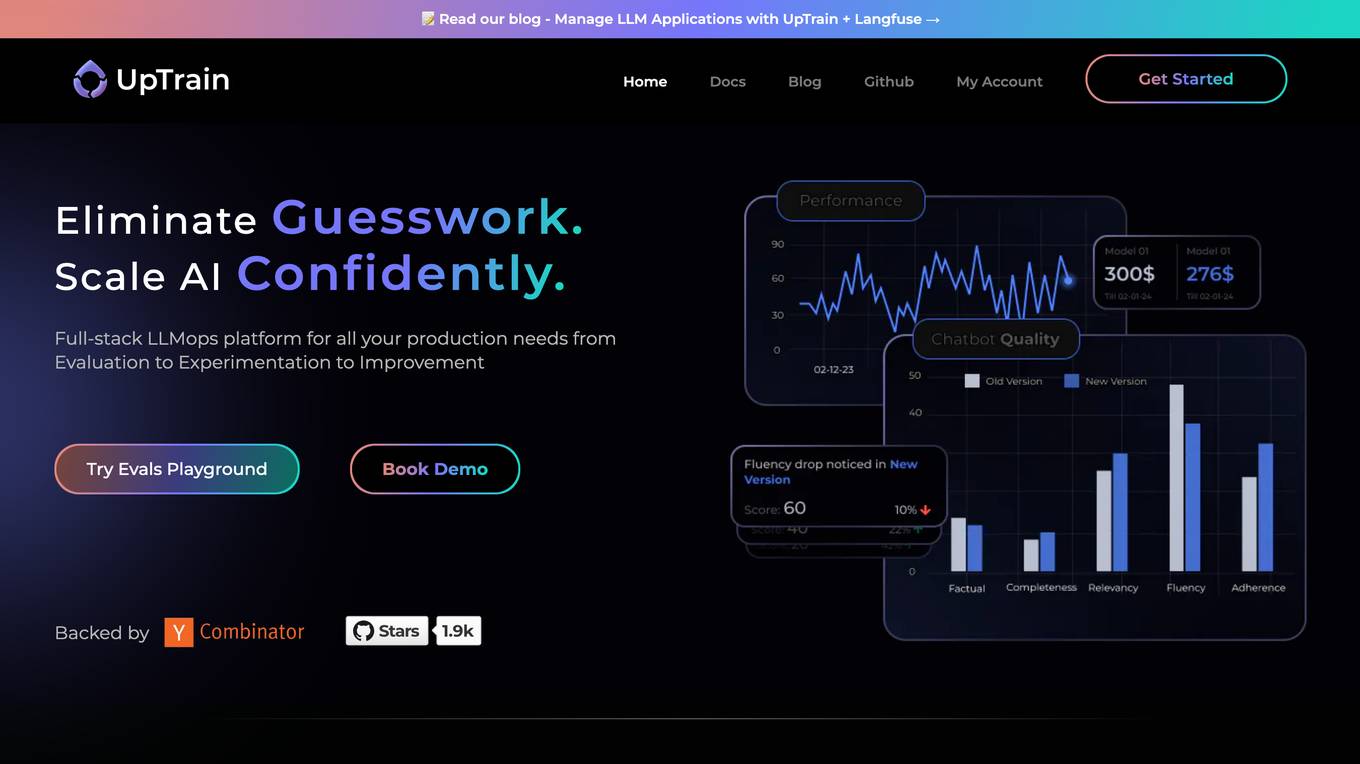

UpTrain

UpTrain is an open-source, full-stack LLMOps platform that covers production needs for scaling AI, from evaluation to experimentation to improvement. It offers diverse evaluations, automated regression testing, dataset enrichment, and techniques for generating high-quality scores. UpTrain is built for developers, compliant with data governance requirements, and cost-efficient. It provides precision metrics, task understanding, and safeguard systems, and it covers a wide range of language features and quality aspects. The platform is aimed at developers, product managers, and business leaders looking to improve their LLM applications.

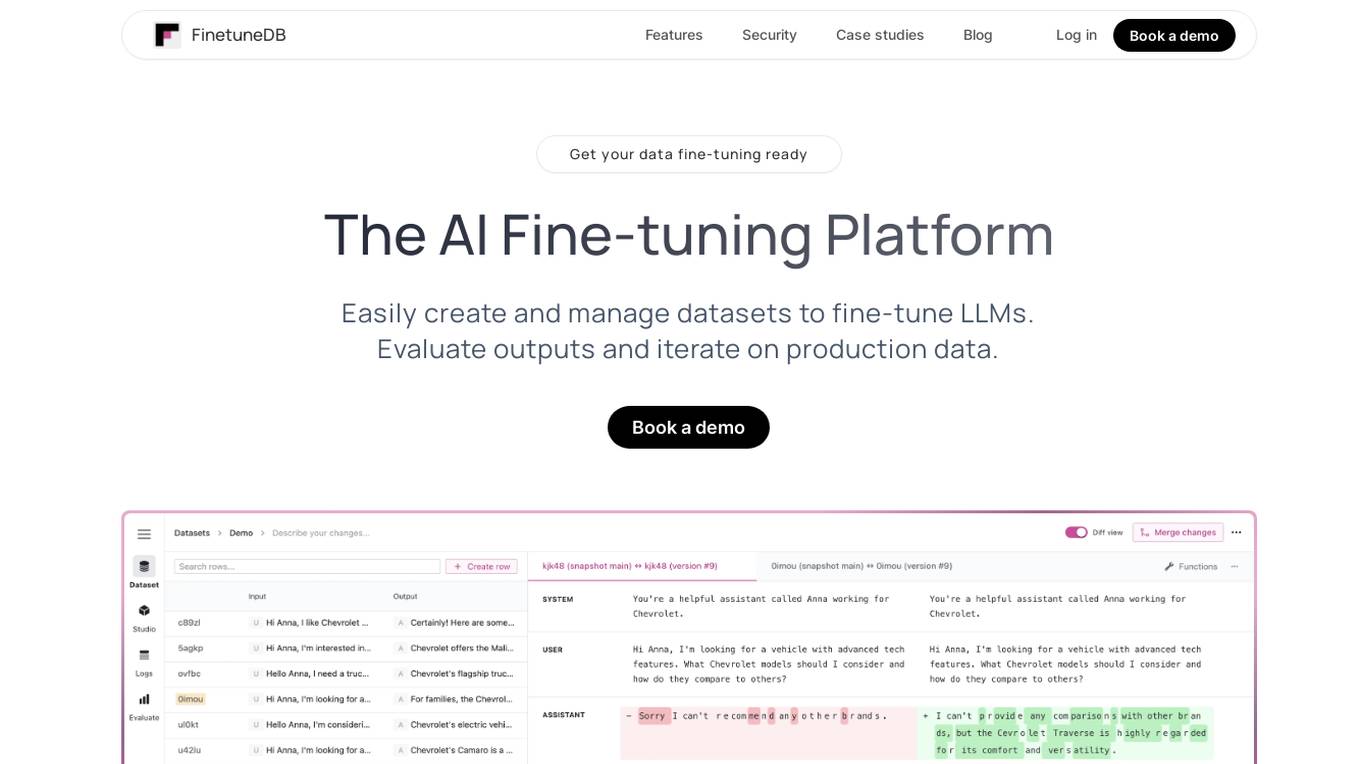

FinetuneDB

FinetuneDB is an AI fine-tuning platform that allows users to easily create and manage datasets to fine-tune LLMs, evaluate outputs, and iterate on production data. It integrates with open-source and proprietary foundation models, and provides a collaborative editor for building datasets. FinetuneDB also offers a variety of features for evaluating model performance, including human and AI feedback, automated evaluations, and model metrics tracking.

Covey Scout

Covey Scout is a talent relationship management platform that uses artificial intelligence (AI) to help recruiting teams with inbound and outbound candidate sourcing, screening, and engagement. The platform's AI-powered bots can evaluate millions of candidate profiles with human-level nuance, saving recruiters time and helping them focus on high-quality interactions with the best-fit candidates. Covey Scout also offers a range of other features, such as personalized email outreach, candidate relationship management (CRM), and reporting.

Confident AI

Confident AI is an open-source evaluation infrastructure for large language models (LLMs). It provides a centralized platform for judging LLM applications and identifying weaknesses in their implementation. With Confident AI, companies can define ground truths to check that their LLM behaves as expected, evaluate performance against expected outputs to pinpoint areas for iteration, and use diff tracking to converge on the best-performing LLM stack. The platform offers analytics for identifying areas of focus, along with A/B testing, evaluation, output classification, a reporting dashboard, dataset generation, and detailed monitoring to help put LLMs into production with confidence.

Machine Translation Research Hub

This website is a comprehensive resource for research in statistical and neural machine translation. It provides information, tools, and datasets related to the translation of text from one human language to another using computer algorithms trained on vast amounts of translated text.

Flow AI

Flow AI is an AI tool for evaluating and improving large language model (LLM) applications. It provides a system for creating custom evaluators, deploying them behind an API, and developing specialized language models tailored to specific use cases. Its stated aim is to make AI evaluation and model development transparent, cost-effective, and controllable for AI teams across various domains.

Lucida AI

Lucida AI is an AI-driven coaching tool that improves employees' English language skills through personalized insights and feedback based on real call interactions. It offers coaching in pronunciation, fluency, grammar, and vocabulary, along with tracking of language proficiency over time. Its speech analysis uses proprietary LLM and NLP technologies for detailed assessment and tracking. With end-to-end encryption for data privacy, Lucida AI is a cost-effective option for organizations seeking to improve communication skills and streamline language assessment.

Census GPT

Census GPT is an AI-powered tool that provides data analysis and insights based on census data in the USA. It offers information on crime rates, demographics, income levels, education levels, and population statistics. Users can ask specific questions related to different areas and topics to get detailed answers and insights.

AlphaLens Intelligence Solutions

AlphaLens Intelligence Solutions is an AI-powered platform that offers a suite of tools for deal origination, sourcing, enrichment, and CRM integration. It lets users extract data from pitch decks, find and evaluate companies, and enrich company profiles with market insights. The platform uses generative AI, semantic search, and active monitoring to surface hidden opportunities, automate deal screening, and sync data to the user's pipeline. With a focus on private market data, AlphaLens gives users access to a large universe of company and product information, including funding rounds, growth metrics, and target audience insights. The platform also offers REST API access, a Chrome extension, and integration with CRM systems for data management.

Cakewalk AI

Cakewalk AI is an AI-powered platform designed to enhance team productivity by leveraging the power of ChatGPT and automation tools. It offers features such as team workspaces, prompt libraries, automation with prebuilt templates, and the ability to combine documents, images, and URLs. Users can automate tasks like updating product roadmaps, creating user personas, evaluating resumes, and more. Cakewalk AI aims to empower teams across various departments like Product, HR, Marketing, and Legal to streamline their workflows and improve efficiency.

PolygrAI

PolygrAI is a digital polygraph powered by AI technology that provides real-time risk assessment and sentiment analysis. The platform meticulously analyzes facial micro-expressions, body language, vocal attributes, and linguistic cues to detect behavioral fluctuations and signs of deception. By combining well-established psychology practices with advanced AI and computer vision detection, PolygrAI offers users actionable insights for decision-making processes across various applications.

1 - Open Source AI Tools

glossAPI

The glossAPI project aims to develop a Greek language model as open-source software, with code licensed under the EUPL and data under Creative Commons BY-SA. The project focuses on collecting and evaluating open Greek text sources, prioritizing and gathering textual datasets. Contributions are guided by the CONTRIBUTING.md file, and the wiki provides resources for viewing and modifying recorded sources. Ideas and corrections are welcome through issue submissions. The project emphasizes open standards, ethically secured data, privacy protection, and addressing digital divides in the context of artificial intelligence and advanced language technologies.

20 - OpenAI GPTs

Source Evaluation and Fact Checking v1.3

FactCheck Navigator GPT is designed for in-depth fact-checking and analysis of written content and evaluation of its source. It works through a series of predefined, carefully prompted steps; if desired, the user can refine the process by providing input between steps.

Dedicated Speech-Language Pathologist

Expert Speech-Language Pathologist offering tailored medical consultations.

Pytorch Trainer GPT

Creates PyTorch code for training language models.

IELTS Writing Test

Simulates the IELTS Writing Test, evaluates responses, and estimates band scores.

WM Phone Script Builder GPT

I automatically create and evaluate phone scripts, presenting a final draft.

IELTS AI Checker (Speaking and Writing)

Provides IELTS speaking and writing feedback and scores.

Academic Paper Evaluator

Enthusiastic about truth in academic papers, critical and analytical.

HuggingFace Helper

A witty yet succinct guide to Hugging Face that offers technical help with using the platform, based on its Learning Hub.

VC Associate

A GPT assistant that helps analyze a startup or market. Its answers are already structured around the core elements you would expect in an investment memo or market analysis.

Instructor GCP ML

Trainer for the GCP Machine Learning Engineer certification, with detailed answers and explanations.