Best AI Tools to Evaluate Generative AI Performance

20 - AI tool Sites

Arthur

Arthur is an MLOps platform that simplifies deployment, monitoring, and management of traditional and generative AI models, with a focus on scalability, security, compliance, and efficient enterprise use. Arthur's turnkey solutions enable companies to integrate the latest generative AI technologies into their operations and make informed, data-driven decisions. The platform offers open-source evaluation products, model-agnostic monitoring, deployment with leading data science tools, and model risk management capabilities. It emphasizes collaboration, security, and compliance with industry standards.

Getbound

Getbound is an AI solutions provider that enables companies to evaluate, customize, and scale technology solutions with artificial intelligence easily and quickly. They offer services such as AI consulting, NLP solutions, MLOps, generative AI development, data engineering services, and computer vision solutions. Getbound empowers businesses to turn data into savings, automate processes, and improve overall performance through AI technologies.

Outlier AI

Outlier AI is a platform that brings together subject matter experts to help build advanced generative AI. Experts can work on a range of projects, from generating training data to evaluating model performance. The platform is flexible: contributors work from home on their own schedule. Outlier AI aims to redefine how AI learns by leveraging the expertise of domain specialists across different fields.

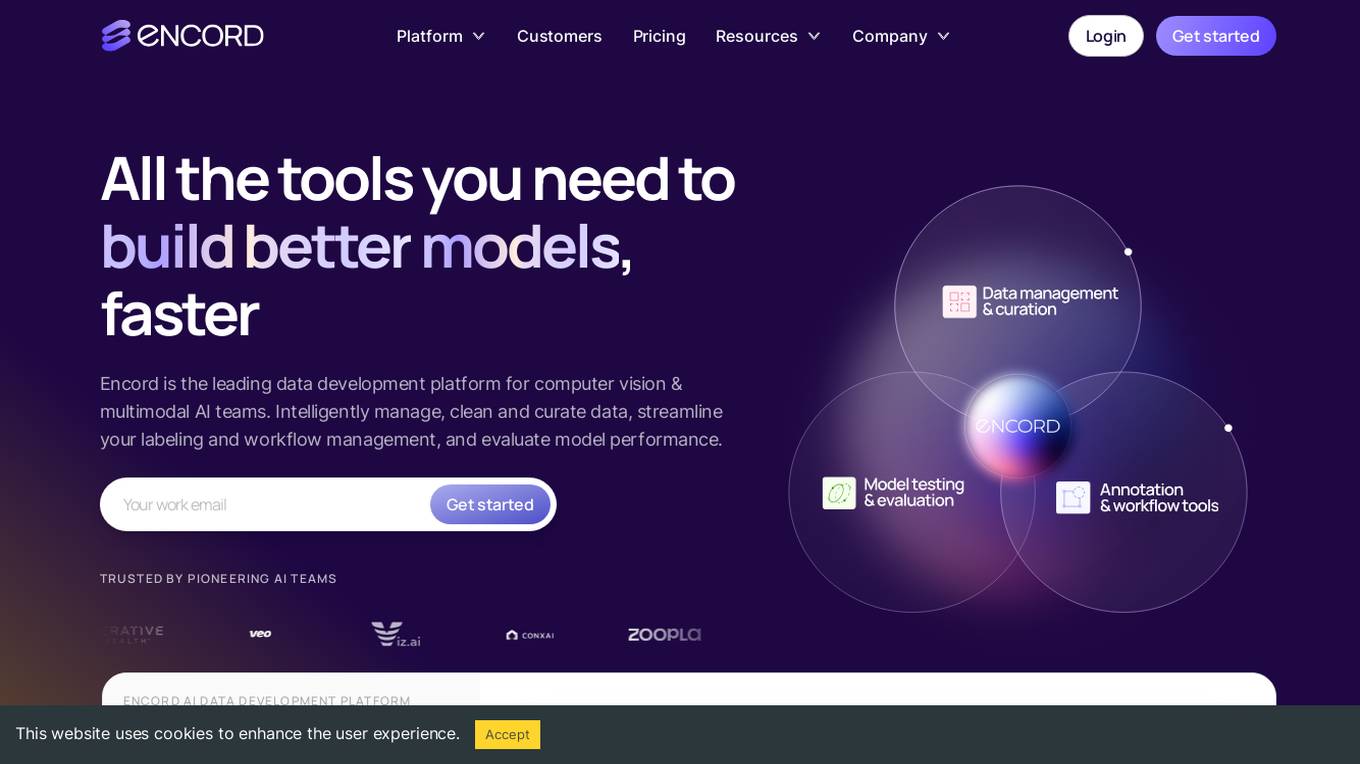

Encord

Encord is a complete data development platform designed for AI applications, specifically tailored for computer vision and multimodal AI teams. It offers tools to intelligently manage, clean, and curate data, streamline labeling and workflow management, and evaluate model performance. Encord aims to unlock the potential of AI for organizations by simplifying data-centric AI pipelines, enabling the building of better models and deploying high-quality production AI faster.

RagaAI Catalyst

RagaAI Catalyst is a sophisticated AI observability, monitoring, and evaluation platform designed to help users observe, evaluate, and debug AI agents at all stages of Agentic AI workflows. It offers features like visualizing trace data, instrumenting and monitoring tools and agents, enhancing AI performance, agentic testing, comprehensive trace logging, evaluation for each step of the agent, enterprise-grade experiment management, secure and reliable LLM outputs, finetuning with human feedback integration, defining custom evaluation logic, generating synthetic data, and optimizing LLM testing with speed and precision. The platform is trusted by AI leaders globally and provides a comprehensive suite of tools for AI developers and enterprises.

BenchLLM

BenchLLM is a tool for AI engineers to evaluate LLM-powered apps by running and testing models from a CLI. It allows users to build test suites, choose evaluation strategies, and generate quality reports. The tool supports OpenAI, LangChain, and other APIs out of the box, offering automation, visualization of reports, and monitoring of model performance.

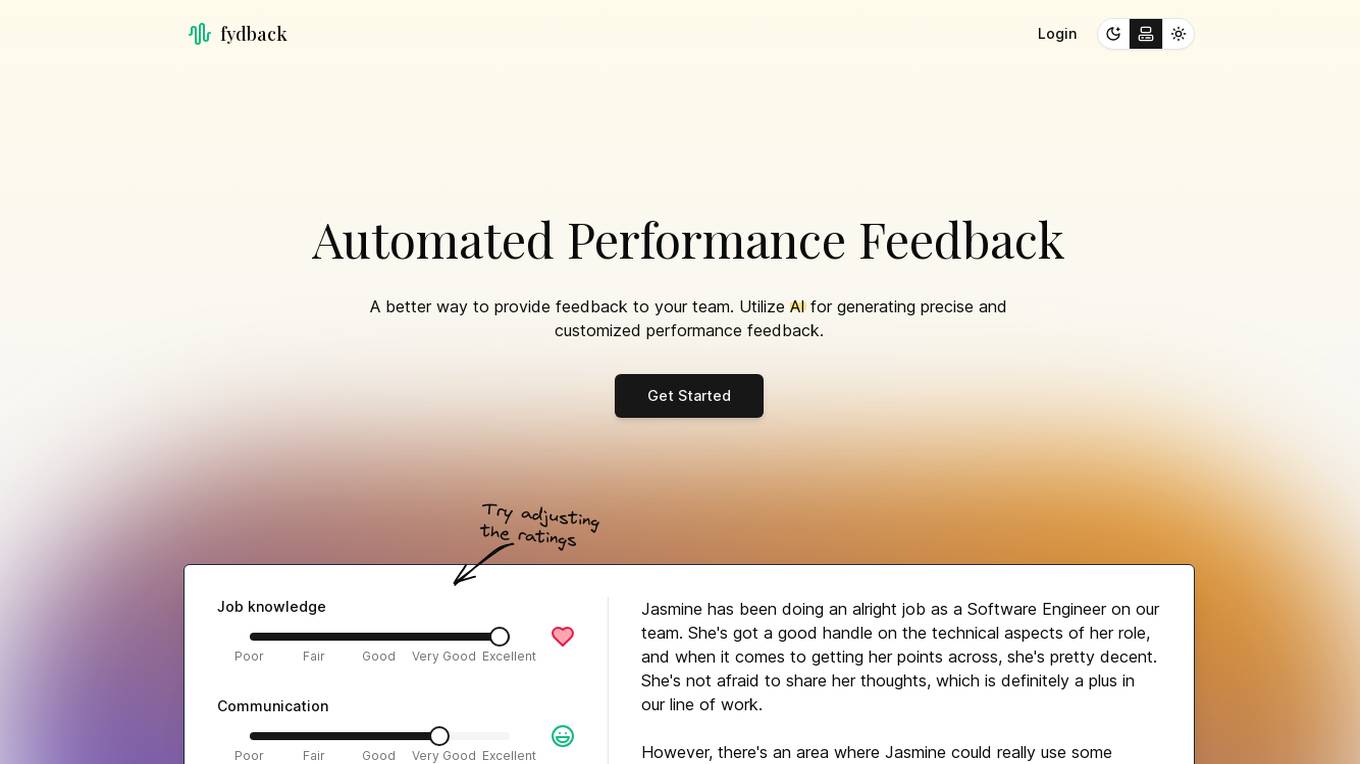

Fydback

Fydback is an AI-powered platform designed to revolutionize the process of providing performance feedback to team members. By leveraging artificial intelligence, Fydback offers precise and customized feedback based on various skill ratings. The application provides detailed insights into areas such as job knowledge, communication, productivity, and leadership, helping users enhance their performance and career growth. Fydback aims to streamline feedback processes and support teams in achieving their full potential.

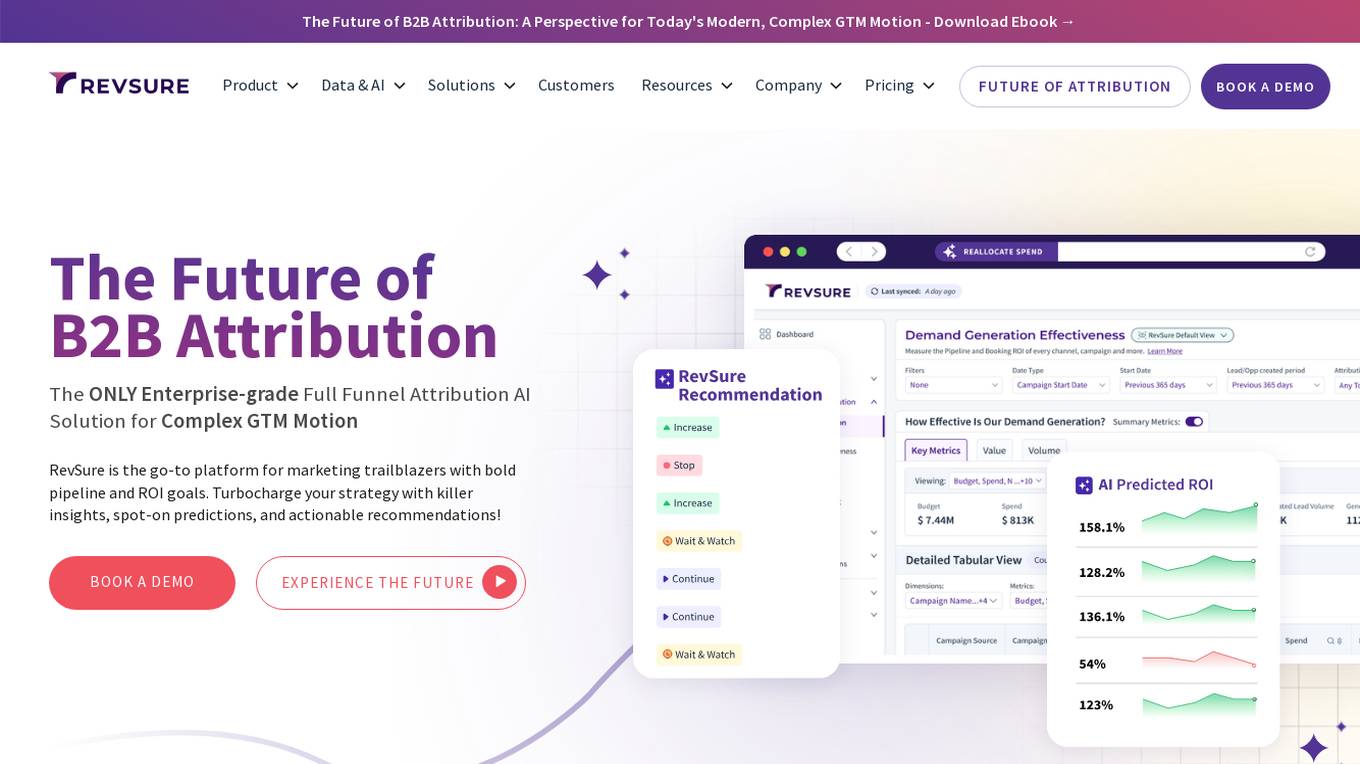

RevSure

RevSure is an AI-powered platform designed for high-growth marketing teams to optimize marketing ROI and attribution. It offers full-funnel attribution, deep funnel optimization, predictive insights, and campaign performance tracking. The platform integrates with various data sources to provide unified funnel reporting and personalized recommendations for improving pipeline health and conversion rates. RevSure's AI engine powers features like campaign spend reallocation, next-best touch analysis, and journey timeline construction, enabling users to make data-driven decisions and accelerate revenue growth.

Confident AI

Confident AI is an open-source evaluation infrastructure for Large Language Models (LLMs). It provides a centralized platform for evaluating LLM applications and identifying weaknesses in an LLM implementation. With Confident AI, companies can define ground truths to check that their LLM behaves as expected, evaluate performance against expected outputs to pinpoint areas for iteration, and use diff tracking to guide them toward the optimal LLM stack. The platform offers analytics to identify areas of focus and features such as A/B testing, evaluation, output classification, a reporting dashboard, dataset generation, and detailed monitoring to help productionize LLMs with confidence.
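The idea of checking an LLM against ground truths can be illustrated with a short, generic sketch. This is not Confident AI's API: the names `EvalCase`, `similarity`, `evaluate`, and `fake_model` are hypothetical, the model is a hard-coded stub, and the character-level similarity from Python's standard library is only an assumed stand-in for whatever metric a real evaluation platform would use.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass
class EvalCase:
    """One hypothetical test case: a prompt and its ground-truth answer."""
    prompt: str
    expected: str


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1], ignoring case and extra spaces."""
    norm_a = " ".join(a.lower().split())
    norm_b = " ".join(b.lower().split())
    return SequenceMatcher(None, norm_a, norm_b).ratio()


def evaluate(cases, generate, threshold=0.8):
    """Run each prompt through `generate` and score it against the expected output."""
    results = []
    for case in cases:
        actual = generate(case.prompt)
        score = similarity(actual, case.expected)
        results.append({
            "prompt": case.prompt,
            "actual": actual,
            "score": score,
            "passed": score >= threshold,
        })
    return results


def fake_model(prompt: str) -> str:
    """Stub standing in for a real LLM call (assumption for illustration)."""
    canned = {
        "What is the capital of France?": "Paris",
        "What is 2 + 2?": "five",
    }
    return canned.get(prompt, "")


cases = [
    EvalCase("What is the capital of France?", "paris"),
    EvalCase("What is 2 + 2?", "4"),
]
results = evaluate(cases, fake_model)
failures = [r["prompt"] for r in results if not r["passed"]]
print(failures)
```

Failing prompts collected this way point at the areas that need another iteration. In practice the threshold and the metric would be tuned to the task, since string similarity is a poor proxy for correctness on open-ended answers.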

CloudExam AI

CloudExam AI is an online testing platform developed by Hanke Numerical Union Technology Co., Ltd. It provides stable and efficient AI online testing services, including intelligent grouping, intelligent monitoring, and intelligent evaluation. The platform ensures test fairness by implementing automatic monitoring level regulations and three random strategies. It prioritizes information security by combining software and hardware to secure data and identity. With global cloud deployment and flexible architecture, it supports hundreds of thousands of concurrent users. CloudExam AI offers features like queue interviews, interactive pen testing, data-driven cockpit, AI grouping, AI monitoring, AI evaluation, random question generation, dual-seat testing, facial recognition, real-time recording, abnormal behavior detection, test pledge book, student information verification, photo uploading for answers, inspection system, device detection, scoring template, ranking of results, SMS/email reminders, screen sharing, student fees, and collaboration with selected schools.

MonitUp

MonitUp is an AI-powered time tracking software that helps you track computer activity, gain insights into work habits, and boost productivity. It uses artificial intelligence to generate personalized suggestions to help you increase productivity and work more efficiently. MonitUp also offers a performance appraisal feature for remote employees, allowing you to evaluate and improve their performance based on objective data.

Inedit

Inedit is an AI-powered editor widget for editing webpage content in place. It combines AI-assisted and manual editing, supports editing multiple elements at once, and can inspect deeper structures of webpages. The tool is powered by OpenAI GPT models. Users can edit, evaluate, and publish content, ensuring only approved content reaches the audience.

Welo Data

Welo Data is an AI tool that specializes in AI benchmarking, model assessment, and high-quality training datasets for AI models. The platform offers services such as supervised fine-tuning, reinforcement learning from human feedback, data generation, expert evaluations, and a data quality framework to support the development of world-class AI models. With over 27 years of experience, Welo Data combines language expertise and AI data to deliver training and performance evaluation solutions.

AnalyStock.ai

AnalyStock.ai is a financial application leveraging AI to provide users with a next-generation investment toolbox. It helps users better understand businesses and risks and make informed investment decisions. The platform offers direct access to the stock market, data-driven tools to build top-ranking portfolios, and insights into company valuations and growth prospects. AnalyStock.ai aims to optimize the investment process with scoring factors such as its A-Score and factor investing scores for value, growth, quality, volatility, momentum, and yield. Users can discover hidden gems, fine-tune filters, access company scorecards, perform activity analysis, understand industry dynamics, and evaluate capital structure, profitability, and peers' valuation. The application also provides adjustable DCF valuation, portfolio management tools, net asset value computation, monthly commentary, and an AI assistant for personalized insights and assistance.

GreetAI

GreetAI is an AI-powered platform that revolutionizes the hiring process by conducting AI video interviews to evaluate applicants efficiently. The platform provides insightful reports, customizable interview questions, and highlights key points to help recruiters make informed decisions. GreetAI offers features such as interview simulations, job post generation, AI video screenings, and detailed candidate performance metrics.

Future AGI

Future AGI is a revolutionary AI data management platform that aims to achieve 99% accuracy in AI applications across software and hardware. It provides a comprehensive evaluation and optimization platform for enterprises to enhance the performance of their AI models. Future AGI offers features such as creating trustworthy, accurate, and responsible AI, 10x faster processing, generating and managing diverse synthetic datasets, testing and analyzing agentic workflow configurations, assessing agent performance, enhancing LLM application performance, monitoring and protecting applications in production, and evaluating AI across different modalities.

ParallelDots

ParallelDots is next-generation retail execution software powered by image recognition technology. The software offers solutions like ShelfWatch, Saarthi, and SmartGaze to enhance the efficiency of sales reps and merchandisers, provide faster training of image recognition models, and offer automated gaze-coding solutions for mobile and retail eye-tracking research. ParallelDots' computer vision technology helps CPG and retail brands track in-store compliance, address gaps in retail execution, and gain real-time insights into brand performance. The platform enables users to generate real-time KPI insights, evaluate compliance levels, convert insights into actionable strategies, and integrate computer vision with existing retail solutions.

Galileo AI

Galileo AI is a platform that offers automated evaluations for AI applications so that teams can ship reliably and with confidence. It claims to cut evaluation time by 80% by replacing manual reviews with high-accuracy metrics, and it supports rapid iteration, real-time protection, and end-to-end visibility into agent completions. Galileo also allows developers to take control of AI complexity, de-risk AI in production, and deploy AI applications flexibly across different environments. The platform is trusted by enterprises and loved by developers for its accuracy, low latency, and ability to run on L4 GPUs.

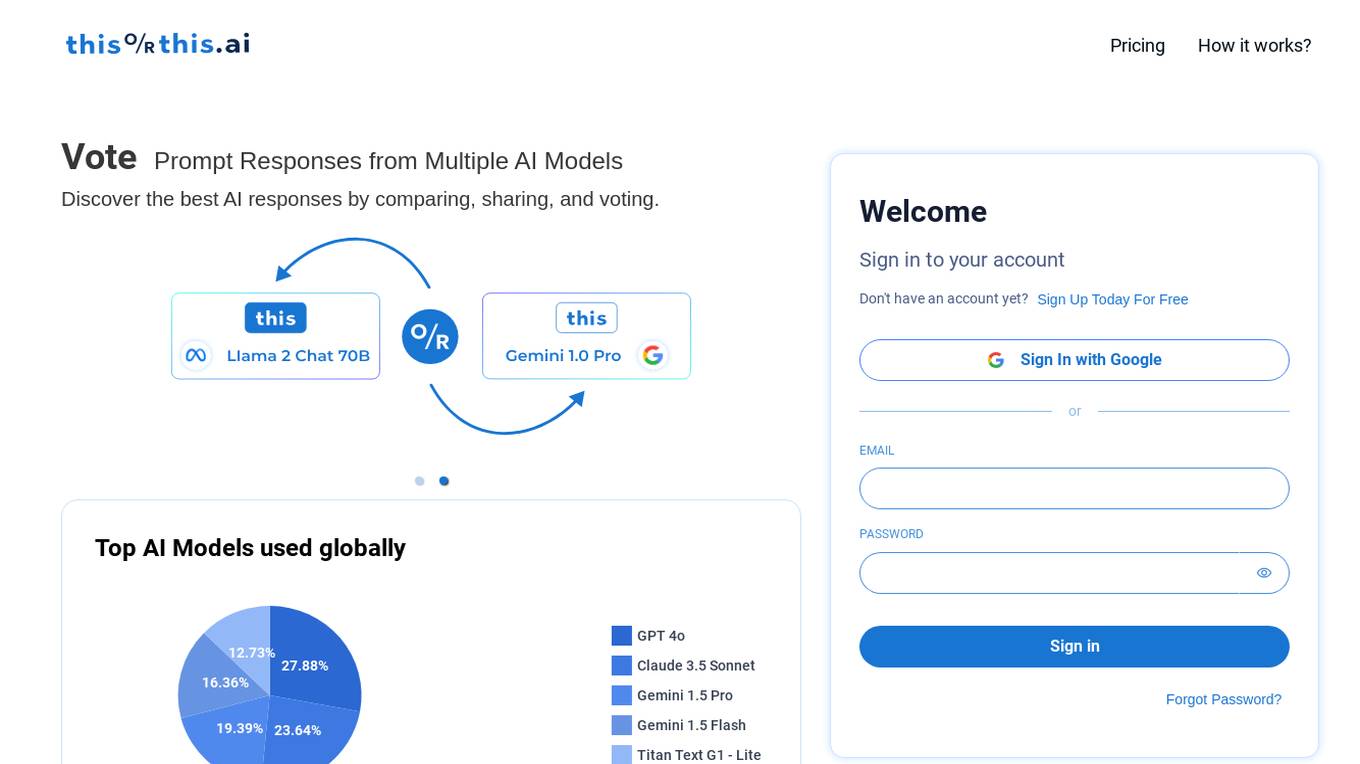

thisorthis.ai

thisorthis.ai is an AI tool that allows users to compare generative AI models and AI model responses. It helps users analyze and evaluate different AI models to make informed decisions.

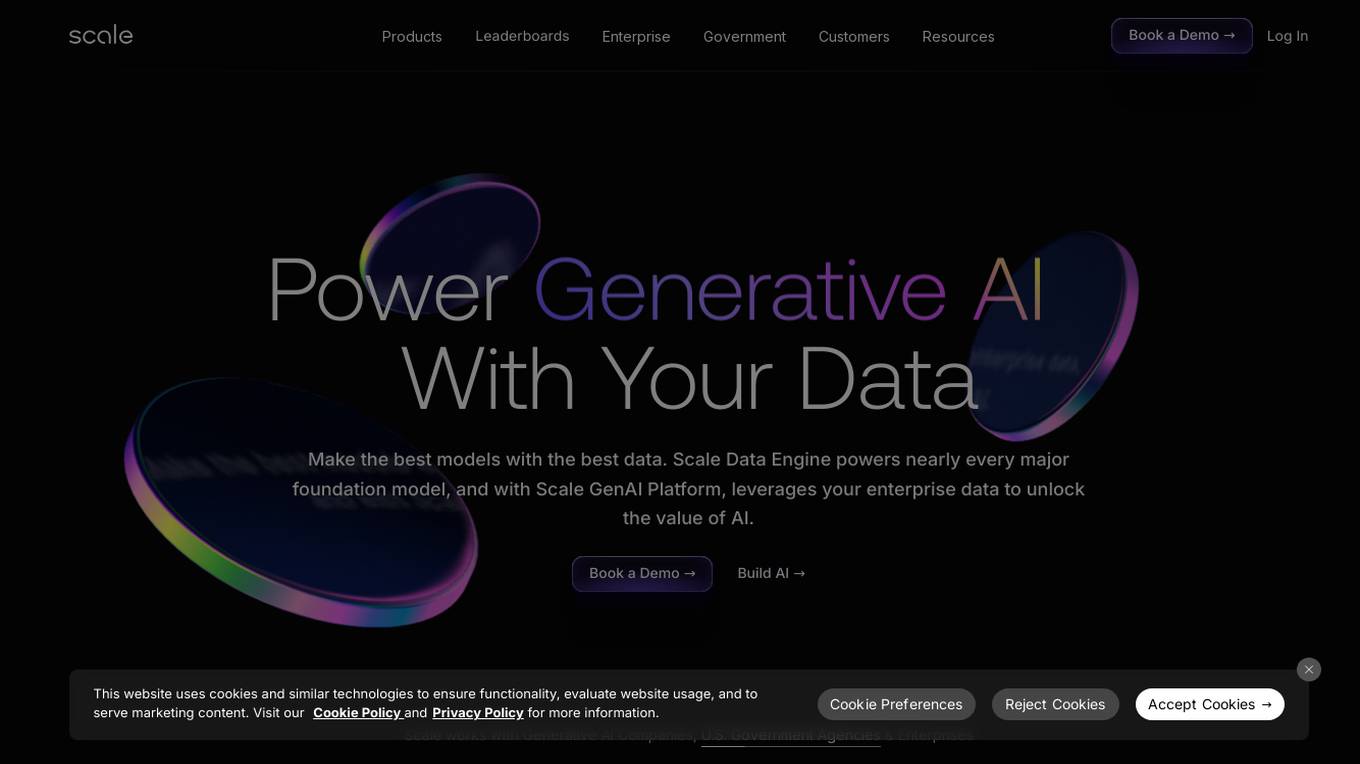

Scale AI

Scale AI is an AI tool that accelerates the development of AI applications for sectors including enterprise, government, and automotive. It offers solutions for training models, fine-tuning, generative AI, and model evaluations. Scale Data Engine and GenAI Platform enable users to leverage enterprise data effectively. The platform works with leading AI models and provides high-quality data for public and private sector applications.

1 - Open Source AI Tools

aiconfig

AIConfig is a framework that makes it easy to build generative AI applications for production. It manages generative AI prompts, models and model parameters as JSON-serializable configs that can be version controlled, evaluated, monitored and opened in a local editor for rapid prototyping. It allows you to store and iterate on generative AI behavior separately from your application code, offering a streamlined AI development workflow.
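The approach of keeping prompts, models, and model parameters in a JSON-serializable config, separate from application code, can be sketched with the Python standard library alone. This is a minimal illustration under stated assumptions, not the aiconfig library's actual schema or API: the field names (`prompts`, `model`, `parameters`, `template`), the model name `example-model`, and the helpers `dump_config`, `load_config`, and `render_prompt` are all hypothetical.

```python
import json
from string import Template

# Hypothetical config: everything the application needs to know about a prompt
# lives in plain data that can be committed to version control.
config = {
    "name": "summarizer",
    "prompts": [
        {
            "name": "summarize",
            "model": "example-model",  # placeholder, not a real model name
            "parameters": {"temperature": 0.2, "max_tokens": 128},
            "template": "Summarize the following text in $n sentences:\n$text",
        }
    ],
}


def dump_config(cfg: dict) -> str:
    """Serialize the config to stable, diff-friendly JSON."""
    return json.dumps(cfg, indent=2, sort_keys=True)


def load_config(text: str) -> dict:
    """Parse a config that was previously written by dump_config."""
    return json.loads(text)


def render_prompt(cfg: dict, prompt_name: str, **variables) -> dict:
    """Look up a prompt by name and fill in its template variables."""
    prompt = next(p for p in cfg["prompts"] if p["name"] == prompt_name)
    return {
        "model": prompt["model"],
        "parameters": prompt["parameters"],
        "input": Template(prompt["template"]).substitute(**variables),
    }


restored = load_config(dump_config(config))
request = render_prompt(restored, "summarize", n=2, text="Hello")
print(request["input"])
```

Because the config round-trips through JSON unchanged, a prompt or parameter can be edited, reviewed, and diffed like any other file without touching the code that sends the request.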

20 - OpenAI GPTs

WM Phone Script Builder GPT

I automatically create and evaluate phone scripts, presenting a final draft.

API Evaluator Pro

Examines and evaluates public API documentation and offers detailed guidance for improvements, including AI usability.

GPT Architect

Expert in designing GPT models and translating user needs into technical specs.

The IPO Strategy

Expert in IPO strategy; offers detailed guidance on business ideas, market paths, and opportunities. Created by Christopher Perceptions.

Innovation YRP

An Innovation & R&D Management advisor who can help you turn ideas into new value creation using over 60 methodologies and tools. Attributed to Yann Rousselot-Pailley https://www.linkedin.com/in/yannrousselot/

Project Post-Project Evaluation Advisor

Optimizes project outcomes through comprehensive post-project evaluations.

Innovative Concepts Advisor

Advises on innovative concepts to drive organizational growth.

Learn about Responsible Innovation

A personal guide to socially responsible and beneficial innovation.

Methodology Generator

Expert in diverse methodologies, offering tailored and detailed suggestions.

Essay Prompt Generator

K-12 assessment expert, creating grade-level appropriate essay prompts.