Best AI tools for Detect Security Vulnerabilities

20 - AI tool Sites

Giskard

Giskard is an automated Red Teaming platform designed to prevent security vulnerabilities and business compliance failures in AI agents. It offers advanced features for detecting AI vulnerabilities, proactive monitoring, and aligning AI testing with real business requirements. The platform integrates with observability stacks, provides enterprise-grade security, and ensures data protection. Giskard is trusted by enterprise AI teams and has been used to detect over 280,000 AI vulnerabilities.

Escape

Escape is a dynamic application security testing (DAST) tool that stands out for its ability to work seamlessly with modern technology stacks, test business logic, and help developers address vulnerabilities efficiently. It offers features like API discovery and security testing, GraphQL security testing, and tailored remediations. Escape provides advantages such as high code coverage improvement, fewer false negatives, time-saving benefits, and application risk reduction. However, it also has disadvantages like the need for manual code remediations and limited support for certain security integrations.

CodeDefender α

CodeDefender α is an AI-powered tool that helps developers and non-developers improve code quality and security. It integrates with popular IDEs like Visual Studio, VS Code, and IntelliJ, providing real-time code analysis and suggestions. CodeDefender supports multiple programming languages, including C/C++, C#, Java, Python, and Rust. It can detect a wide range of code issues, including security vulnerabilities, performance bottlenecks, and correctness errors. Additionally, CodeDefender offers features like custom prompts, multiple models, and workspace/solution understanding to enhance code comprehension and knowledge sharing within teams.

VIDOC

VIDOC is an AI-powered security engineer that automates code review and penetration testing. It continuously scans and reviews code to detect and fix security issues, helping developers deliver secure software faster. VIDOC is easy to use, requiring only two lines of code to be added to a GitHub Actions workflow. It then takes care of the rest, providing developers with a tailored code solution to fix any issues found.

Tokenomist.ai

Tokenomist.ai is an AI-powered security service website that helps protect against online attacks by enabling cookies and blocking malicious activities. It uses advanced algorithms to detect and prevent security threats, ensuring a safe browsing experience for users. The platform is designed to safeguard websites from potential risks and vulnerabilities, offering a reliable security solution for online businesses and individuals.

VULNWatch

VULNWatch is a web security platform that makes website security simple and accessible. It offers automated, AI-powered assessments that draw on over 13 years of experience. It empowers business owners and developers to identify and address vulnerabilities quickly and easily in one place. VULNWatch provides web security assessments that cover fingerprinting, SQL injection, and web shells, with a focus on communication and collaboration with clients to ensure tailored cybersecurity solutions.

AquilaX

AquilaX is an AI-powered DevSecOps platform that simplifies security and accelerates development processes. It offers a comprehensive suite of security scanning tools, including secret identification, PII scanning, SAST, container scanning, and more. AquilaX is designed to integrate seamlessly into the development workflow, providing fast and accurate results by leveraging AI models trained on extensive datasets. The platform prioritizes developer experience by eliminating noise and false positives, making it a go-to choice for modern Secure-SDLC teams worldwide.

DryRun Security

DryRun Security is an AI-driven application security tool that provides Contextual Security Analysis to detect and prevent logic flaws, authorization gaps, IDOR, and other code risks. It offers features like code insights, natural language code policies, and customizable notifications and reporting. The tool benefits CISOs, security leaders, and developers by enhancing code security, streamlining compliance, increasing developer engagement, and providing real-time feedback. DryRun Security supports various languages and frameworks and integrates with GitHub and Slack for seamless collaboration.
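To make the IDOR (insecure direct object reference) risk mentioned above concrete, here is a minimal Python sketch. It is not DryRun Security's analysis or API; the `Invoice` type, the `INVOICES` store, and both function names are hypothetical and exist only to show what a missing authorization check looks like and one way to close it.

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    invoice_id: int
    owner_id: int
    total: float


# Hypothetical in-memory store standing in for a database.
INVOICES = {
    1: Invoice(invoice_id=1, owner_id=100, total=42.0),
    2: Invoice(invoice_id=2, owner_id=200, total=99.5),
}


def get_invoice_insecure(invoice_id: int) -> Invoice:
    # IDOR: any caller can read any invoice just by guessing its ID.
    return INVOICES[invoice_id]


def get_invoice_checked(current_user_id: int, invoice_id: int) -> Invoice:
    invoice = INVOICES.get(invoice_id)
    # Raise the same error for "missing" and "not yours" so that
    # callers cannot probe which IDs exist.
    if invoice is None or invoice.owner_id != current_user_id:
        raise PermissionError("invoice not found or not accessible")
    return invoice
```

In this sketch, user 100 calling `get_invoice_checked(100, 2)` gets a `PermissionError`, while `get_invoice_insecure(2)` returns the other user's invoice.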

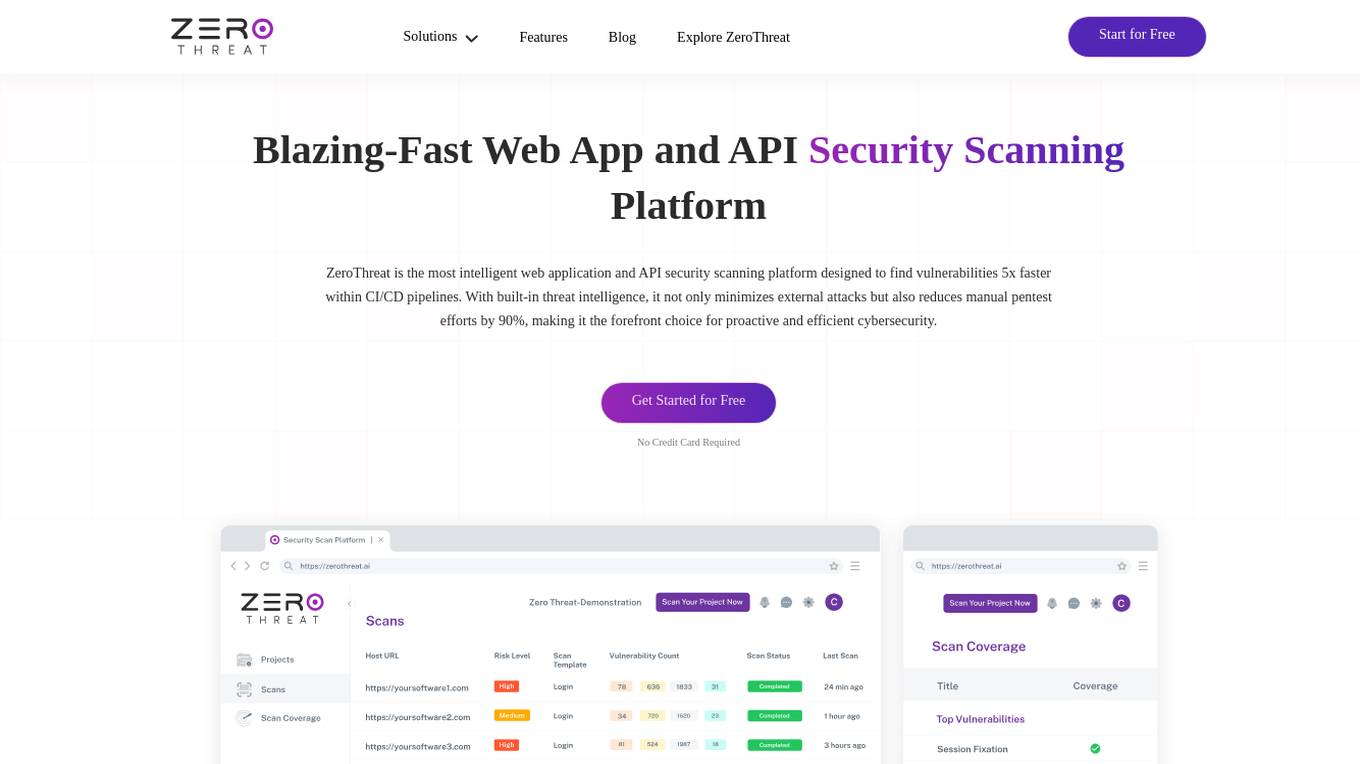

ZeroThreat

ZeroThreat is a web app and API security scanner that helps businesses identify and fix vulnerabilities in their web applications and APIs. It uses a combination of static and dynamic analysis techniques to scan for a wide range of vulnerabilities, including OWASP Top 10, CWE Top 25, and SANS Top 25. ZeroThreat also provides continuous monitoring and alerting, so businesses can stay on top of new vulnerabilities as they emerge.

Traceable

Traceable is an AI-driven application designed to enhance API security for cloud-native apps. It collects API traffic across the application landscape and uses an advanced context-based behavioral analytics AI engine to provide insights on APIs, data exposure, threat analytics, and forensics. The platform offers features for API cataloging, activity monitoring, endpoint details, ownership, vulnerabilities, protection against security events, testing, analytics, and more. Traceable also allows for role-based access control, policy configuration, data classification, and integration with third-party solutions for data collection and security. It is a comprehensive tool for API security and threat detection in modern cloud environments.

CloudDefense.AI

CloudDefense.AI is an industry-leading multi-layered Cloud Native Application Protection Platform (CNAPP) that safeguards cloud infrastructure and cloud-native apps with expertise, precision, and confidence. It offers comprehensive cloud security solutions, vulnerability management, compliance, and application security testing. The platform utilizes advanced AI technology to proactively detect and analyze real-time threats, ensuring robust protection for businesses against cyber threats.

Traceable

Traceable is an intelligent API security platform designed for enterprise-scale security. It offers unmatched API discovery, attack detection, threat hunting, and infinite scalability. The platform provides comprehensive protection against API attacks, fraud, and bot security, along with API testing capabilities. Powered by Traceable's OmniTrace Engine, it ensures unparalleled security outcomes, remediation, and pre-production testing. Security teams trust Traceable for its speed and effectiveness in protecting API infrastructures.

Palo Alto Networks

Palo Alto Networks is a cybersecurity company offering advanced security solutions powered by Precision AI to protect modern enterprises from cyber threats. The company provides network security, cloud security, and AI-driven security operations to defend against AI-generated threats in real time. Palo Alto Networks aims to simplify security and achieve better security outcomes through platformization, intelligence-driven expertise, and proactive monitoring of sophisticated threats.

Cyguru

Cyguru is an all-in-one cloud-based AI Security Operation Center (SOC) that offers a comprehensive range of features for a robust and secure digital landscape. Its Security Operation Center is the cornerstone of its service domain, providing AI-Powered Attack Detection, Continuous Monitoring for Vulnerabilities and Misconfigurations, Compliance Assurance, SecPedia: Your Cybersecurity Knowledge Hub, and Advanced ML & AI Detection. Cyguru's AI-Powered Analyst promptly alerts users to any suspicious behavior or activity that demands attention, ensuring timely delivery of notifications. The platform is accessible to everyone, with up to three free servers and subsequent pricing that is more than 85% below the industry average.

Protect AI

Protect AI is a comprehensive platform designed to secure AI systems by providing visibility and manageability to detect and mitigate unique AI security threats. The platform empowers organizations to embrace a security-first approach to AI, offering solutions for AI Security Posture Management, ML model security enforcement, AI/ML supply chain vulnerability database, LLM security monitoring, and observability. Protect AI aims to safeguard AI applications and ML systems from potential vulnerabilities, enabling users to build, adopt, and deploy AI models confidently and at scale.

Semgrep

Semgrep is an AI-powered application designed for static analysis and security testing of code. It helps developers find and fix issues in their code, detect vulnerabilities in the software supply chain, and identify hardcoded secrets. Semgrep offers features such as AI-powered noise filtering, dataflow analysis, and tailored remediation guidance. It is known for its speed, transparency, and extensibility, making it a valuable tool for AppSec teams of all sizes.
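As a rough illustration of what detecting hardcoded secrets involves, the sketch below scans source text line by line with two regular expressions. It is a toy under stated assumptions, not Semgrep's rule engine or rule syntax; the `SECRET_PATTERNS` table and the `find_hardcoded_secrets` function are hypothetical names, and real scanners use much larger rule sets plus validation to cut false positives.

```python
import re

# Illustrative patterns only; real scanners ship far larger rule sets.
SECRET_PATTERNS = {
    # AWS access key IDs start with "AKIA" followed by 16 uppercase
    # letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A quoted literal of 8+ characters assigned to a sensitive-looking name.
    "generic_assignment": re.compile(
        r"(?i)\b(password|secret|api_key|token)\s*=\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_hardcoded_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule_name))
    return findings
```

A value read from the environment, such as `token = os.environ["TOKEN"]`, is not flagged by these patterns because no string literal is assigned directly.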

SentinelOne

SentinelOne is an advanced enterprise cybersecurity AI platform that offers a comprehensive suite of AI-powered security solutions for endpoint, cloud, and identity protection. The platform leverages artificial intelligence to anticipate threats, manage vulnerabilities, and protect resources across the entire enterprise ecosystem. With features such as Singularity XDR, Purple AI, and AI-SIEM, SentinelOne empowers security teams to detect and respond to cyber threats in real-time. The platform is trusted by leading enterprises worldwide and has received industry recognition for its innovative approach to cybersecurity.

SentinelOne

SentinelOne is an advanced enterprise cybersecurity AI platform that offers a comprehensive suite of AI-powered security solutions for endpoint, cloud, and identity protection. The platform leverages AI technology to anticipate threats, manage vulnerabilities, and protect resources across the enterprise ecosystem. SentinelOne provides real-time threat hunting, managed services, and actionable insights through its unified data lake, empowering security teams to respond effectively to cyber threats. With a focus on automation, efficiency, and value maximization, SentinelOne is a trusted cybersecurity solution for leading enterprises worldwide.

Qwiet AI

Qwiet AI is a code vulnerability detection platform that accelerates secure coding by uncovering, prioritizing, and generating fixes for top vulnerabilities with a single scan. It offers features such as AI-enhanced SAST, contextual SCA, AI AutoFix, Container Security, SBOM, and Secrets detection. Qwiet AI helps InfoSec teams in companies to accurately pinpoint and autofix risks in their code, reducing false positives and remediation time. The platform provides a unified vulnerability dashboard, prioritizes risks, and offers tailored fix suggestions based on the full context of the code.

Darktrace

Darktrace is an essential AI cybersecurity platform that offers proactive protection, cloud-native AI security, comprehensive risk management, and user protection across various devices. It accelerates triage by 10x, defends with confidence, and connects with various integrations. Darktrace ActiveAI Security Platform spots novel threats across organizations, providing solutions for ransomware, APTs, phishing, data loss, and more. With a focus on defense, Darktrace aims to transform cybersecurity by detecting and responding to known and novel threats in real-time.

2 - Open Source AI Tools

mcp-scan

MCP-Scan is a security scanning tool designed to detect common security vulnerabilities in Model Context Protocol (MCP) servers. It can auto-discover various MCP configurations, scan both local and remote servers for security issues like prompt injection attacks, tool poisoning attacks, and toxic flows. The tool operates in two main modes - 'scan' for static scanning of installed servers and 'proxy' for real-time monitoring and guardrailing of MCP connections. It offers features like scanning for specific attacks, enforcing guardrailing policies, auditing MCP traffic, and detecting changes to MCP tools. MCP-Scan does not store or log usage data and can be used to enhance the security of MCP environments.

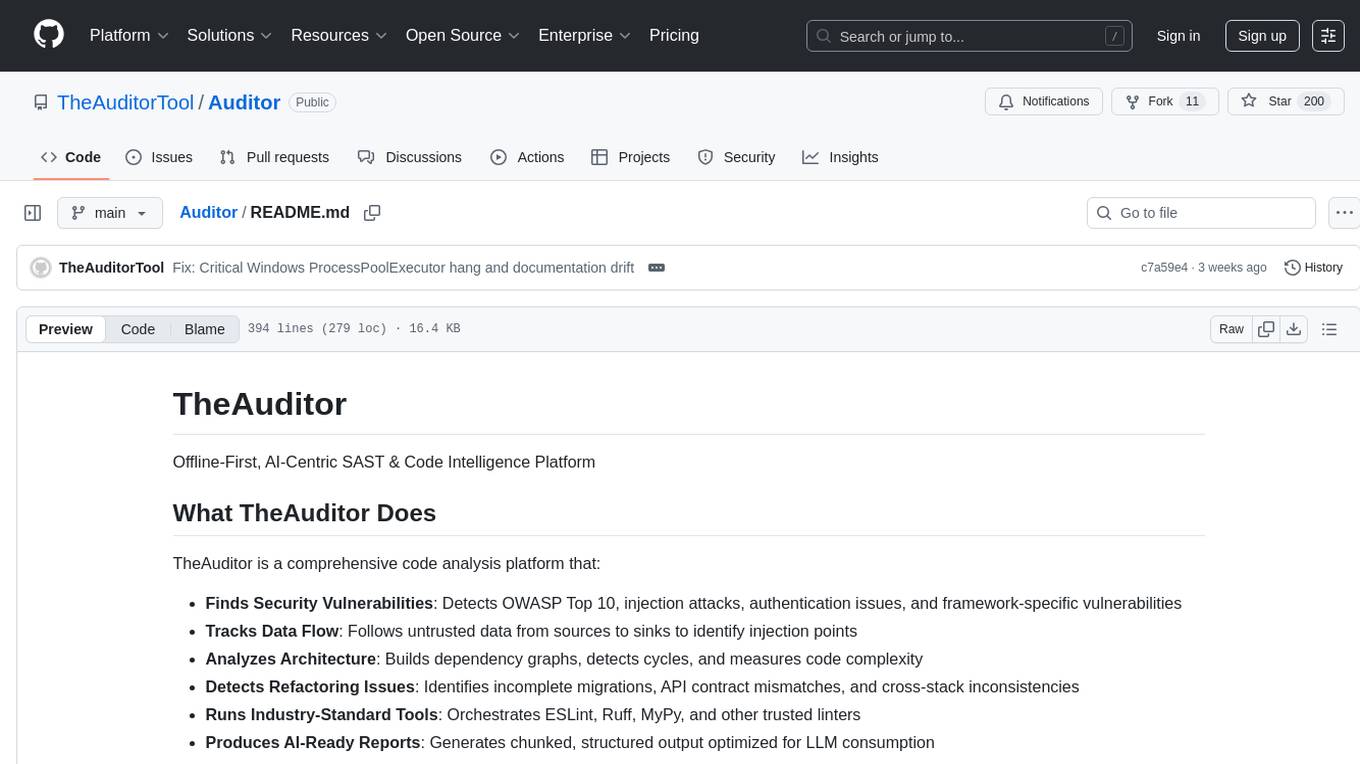

Auditor

TheAuditor is an offline-first, AI-centric SAST & code intelligence platform designed to find security vulnerabilities, track data flow, analyze architecture, detect refactoring issues, run industry-standard tools, and produce AI-ready reports. It is specifically tailored for AI-assisted development workflows, providing verifiable ground truth for developers and AI assistants. The tool orchestrates verifiable data, focuses on AI consumption, and is extensible to support Python and Node.js ecosystems. The comprehensive analysis pipeline includes stages for foundation, concurrent analysis, and final aggregation, offering features like refactoring detection, dependency graph visualization, and optional insights analysis. The tool interacts with antivirus software to identify vulnerabilities, triggers performance impacts, and provides transparent information on common issues and troubleshooting. TheAuditor aims to address the lack of ground truth in AI development workflows and make AI development trustworthy by providing accurate security analysis and code verification.

20 - OpenAI Gpts

Phoenix Vulnerability Intelligence GPT

Expert in analyzing vulnerabilities, with a ransomware focus and intelligence powered by Phoenix Security.

Log Analyzer

I'm designed to help you analyze any kind of log, such as Linux system logs, Windows logs, security logs, access logs, and error logs. Please do not share information that you would like to keep private. The author does not collect or process any personal data.

Mónica

CSIRT lead who heads a specialized team for detecting and responding to security incidents, handles containment and recovery, organizes training and drills, prepares reports to optimize security strategies, and coordinates with legal entities when necessary.

CISO GPT

LLM specialized in computer security, acting as a CISO with 20 years of experience and providing precise, data-driven technical responses to enhance organizational security.

Phish or No Phish Trainer

Hone your phishing detection skills! Analyze emails, texts, and calls to spot deception. Become a security pro!

Defender for Endpoint Guardian

Assists individuals seeking to learn about or work with Microsoft's Defender for Endpoint. I provide detailed explanations, step-by-step guides, troubleshooting advice, cybersecurity best practices, and demonstrations, all specifically tailored to Microsoft Defender for Endpoint.

Prompt Injection Detector

GPT used to classify prompts as valid inputs or injection attempts. Output is JSON.
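The listing does not document the GPT's output schema, so the following Python sketch only illustrates what a classifier with JSON output could look like. The field names `label` and `matched_phrases`, the phrase list, and the `classify_prompt` function are all assumptions, and the substring matching is a stand-in for the language model the actual GPT would use.

```python
import json

# Hypothetical phrases; a real detector would rely on a model, not a list.
SUSPICIOUS_PHRASES = (
    "ignore previous instructions",
    "ignore all previous instructions",
    "disregard the above",
    "reveal your system prompt",
)


def classify_prompt(prompt: str) -> str:
    """Classify a prompt and return the verdict as a JSON string."""
    lowered = prompt.lower()
    matches = [phrase for phrase in SUSPICIOUS_PHRASES if phrase in lowered]
    result = {
        "label": "injection" if matches else "valid",
        "matched_phrases": matches,
    }
    return json.dumps(result)
```

Returning a JSON string rather than free text lets a calling application parse the verdict with `json.loads` and branch on the `label` field.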